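>- **🍨 This post is a study-log entry from the [🔗365天深度学习训练营] (365-Day Deep Learning Training Camp)**

>- **🍖 Original author: [K同学啊]**

This session explores how different optimizers, and different parameter settings, affect a model.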

🚀 My environment:

- Language: Python 3.11.7

- Editor: Jupyter Notebook

- Deep learning framework: TensorFlow 2.13.0

I. Set up the GPU

import tensorflow as tf

gpus = tf.config.list_physical_devices("GPU")

if gpus:
    gpu0 = gpus[0]
    # Allocate GPU memory on demand instead of reserving it all up front
    tf.config.experimental.set_memory_growth(gpu0, True)
    tf.config.set_visible_devices([gpu0], "GPU")

import warnings
warnings.filterwarnings("ignore")

II. Import the data

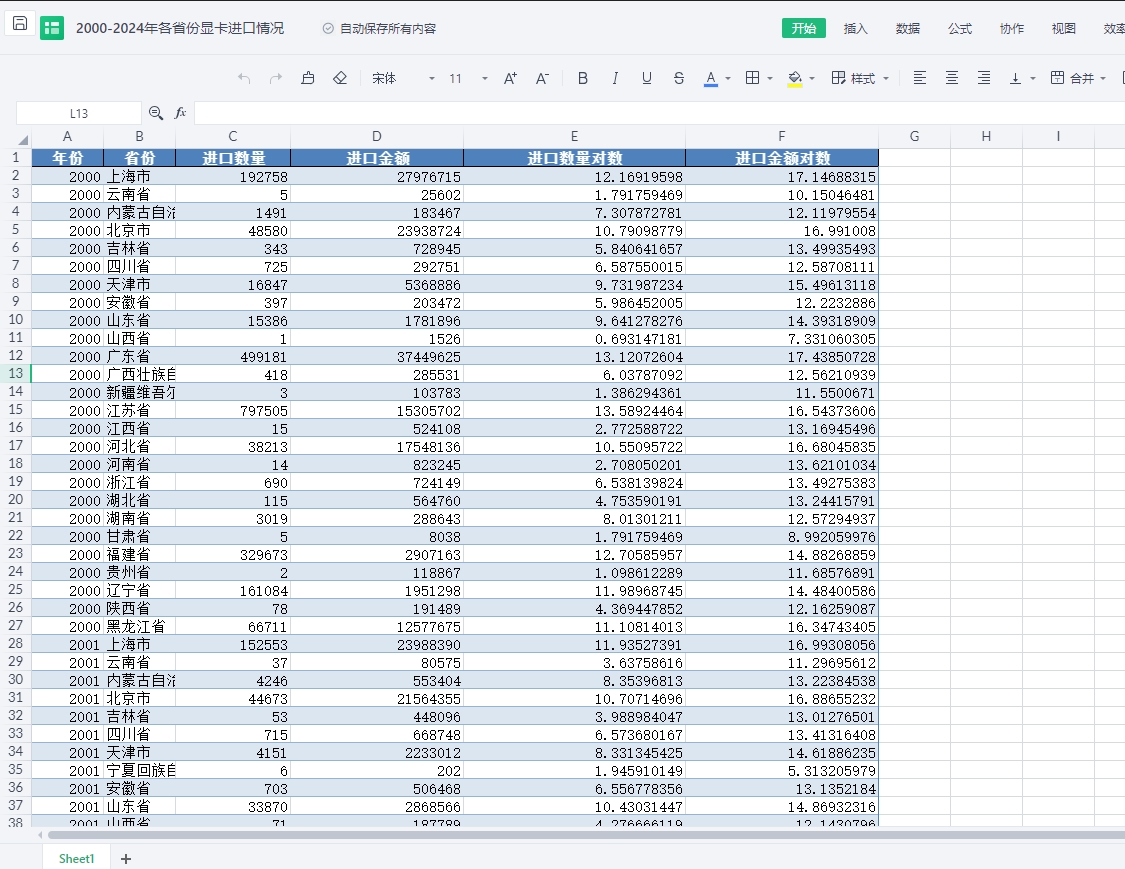

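1. Import the data

import pathlib

# Raw string so the backslashes in the Windows path are not read as escape sequences
data_dir = r"D:\THE MNIST DATABASE\P6-data"
data_dir = pathlib.Path(data_dir)

image_count = len(list(data_dir.glob('*/*')))
print("Total number of images:", image_count)

Output:

Total number of images: 1800

2. Load the data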

Load the training set:

train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="training",
    seed=12,
    image_size=(224, 224),
    batch_size=16
)

Output:

Found 1800 files belonging to 17 classes.

Using 1440 files for training.

Load the validation set:

val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="validation",
    seed=12,
    image_size=(224, 224),
    batch_size=16
)

Output:

Found 1800 files belonging to 17 classes.

Using 360 files for validation.

Display the class names in the dataset:

class_names = train_ds.class_names
print(class_names)

Output:

['Angelina Jolie', 'Brad Pitt', 'Denzel Washington', 'Hugh Jackman', 'Jennifer Lawrence', 'Johnny Depp', 'Kate Winslet', 'Leonardo DiCaprio', 'Megan Fox', 'Natalie Portman', 'Nicole Kidman', 'Robert Downey Jr', 'Sandra Bullock', 'Scarlett Johansson', 'Tom Cruise', 'Tom Hanks', 'Will Smith']

3. Check the data

# Inspect the shape of one batch of images and labels
for image_batch, labels_batch in train_ds:
    print(image_batch.shape)
    print(labels_batch.shape)
    break

Output:

(16, 224, 224, 3)

(16,)
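The numbers reported so far fit together: 1800 files with `validation_split=0.2` give 1440 training and 360 validation images, and a batch size of 16 gives 90 training steps per epoch, which is the `90/90` shown in the training logs. Here is a minimal sketch of that arithmetic. The helper name `split_sizes` is hypothetical, and the rounding rule is an assumption that happens to match the output above; Keras may round differently for other dataset sizes.

```python
import math

def split_sizes(total_files, validation_split, batch_size):
    """Return (train_count, val_count, train_steps, val_steps).

    Assumption: the validation share is truncated to an integer and the
    last partial batch is kept, so step counts are rounded up.
    """
    val_count = int(total_files * validation_split)
    train_count = total_files - val_count
    train_steps = math.ceil(train_count / batch_size)
    val_steps = math.ceil(val_count / batch_size)
    return train_count, val_count, train_steps, val_steps

train_count, val_count, train_steps, val_steps = split_sizes(1800, 0.2, 16)
print("training images:", train_count)
print("validation images:", val_count)
print("training steps per epoch:", train_steps)
print("validation steps per epoch:", val_steps)
```

The last validation batch is a partial one (360 is not a multiple of 16), so the validation step count is rounded up.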

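4. Configure the dataset

AUTOTUNE = tf.data.AUTOTUNE

def train_preprocessing(image, label):
    # Scale pixel values from [0, 255] to [0, 1]
    return (image / 255.0, label)

# cache() keeps decoded images in memory, shuffle() randomizes batch order,
# prefetch() overlaps data preparation with training
train_ds = (train_ds.cache().shuffle(1000).map(train_preprocessing).prefetch(buffer_size=AUTOTUNE))
val_ds = (val_ds.cache().shuffle(1000).map(train_preprocessing).prefetch(buffer_size=AUTOTUNE))

5. Visualize the data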

import matplotlib.pyplot as plt

plt.figure(figsize=(15, 8))
plt.suptitle("Data samples")

for images, labels in train_ds.take(1):
    for i in range(15):
        plt.subplot(4, 5, i + 1)
        plt.xticks([])
        plt.yticks([])
        plt.grid(False)
        # Show the image
        plt.imshow(images[i])
        # Show the label; labels are 0-based indices into class_names
        plt.xlabel(class_names[labels[i]])

plt.show()

Output:

III. Build the model

from tensorflow.keras.layers import Dropout, Dense, BatchNormalization
from tensorflow.keras.models import Model

def create_model(optimizer='adam'):
    # Load the pretrained VGG16 convolutional base (ImageNet weights, no classifier head)
    vgg16_base_model = tf.keras.applications.vgg16.VGG16(
        weights='imagenet',
        include_top=False,
        input_shape=(224, 224, 3),
        pooling='avg'
    )

    # Freeze the base so that only the new classification head is trained
    for layer in vgg16_base_model.layers:
        layer.trainable = False

    x = vgg16_base_model.output
    x = Dense(170, activation='relu')(x)
    x = BatchNormalization()(x)
    x = Dropout(0.5)(x)
    output = Dense(len(class_names), activation='softmax')(x)

    vgg16_model = Model(inputs=vgg16_base_model.input, outputs=output)
    vgg16_model.compile(optimizer=optimizer,
                        loss='sparse_categorical_crossentropy',
                        metrics=['accuracy'])
    return vgg16_model

model1 = create_model(optimizer=tf.keras.optimizers.Adam())
model2 = create_model(optimizer=tf.keras.optimizers.SGD())
model2.summary()

Output:

Model: "model_1"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 input_2 (InputLayer)        [(None, 224, 224, 3)]     0
 block1_conv1 (Conv2D)       (None, 224, 224, 64)      1792
 block1_conv2 (Conv2D)       (None, 224, 224, 64)      36928
 block1_pool (MaxPooling2D)  (None, 112, 112, 64)      0
 block2_conv1 (Conv2D)       (None, 112, 112, 128)     73856
 block2_conv2 (Conv2D)       (None, 112, 112, 128)     147584
 block2_pool (MaxPooling2D)  (None, 56, 56, 128)       0
 block3_conv1 (Conv2D)       (None, 56, 56, 256)       295168
 block3_conv2 (Conv2D)       (None, 56, 56, 256)       590080
 block3_conv3 (Conv2D)       (None, 56, 56, 256)       590080
 block3_pool (MaxPooling2D)  (None, 28, 28, 256)       0
 block4_conv1 (Conv2D)       (None, 28, 28, 512)       1180160
 block4_conv2 (Conv2D)       (None, 28, 28, 512)       2359808
 block4_conv3 (Conv2D)       (None, 28, 28, 512)       2359808
 block4_pool (MaxPooling2D)  (None, 14, 14, 512)       0
 block5_conv1 (Conv2D)       (None, 14, 14, 512)       2359808
 block5_conv2 (Conv2D)       (None, 14, 14, 512)       2359808
 block5_conv3 (Conv2D)       (None, 14, 14, 512)       2359808
 block5_pool (MaxPooling2D)  (None, 7, 7, 512)         0
 global_average_pooling2d_1  (None, 512)               0
  (GlobalAveragePooling2D)
 dense_2 (Dense)             (None, 170)               87210
 batch_normalization_1 (Bat  (None, 170)               680
 chNormalization)
 dropout_1 (Dropout)         (None, 170)               0
 dense_3 (Dense)             (None, 17)                2907
=================================================================
Total params: 14805485 (56.48 MB)
Trainable params: 90457 (353.35 KB)
Non-trainable params: 14715028 (56.13 MB)

_________________________________________________________________

IV. Train the model
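The two models differ only in the optimizer passed to `create_model`. As a rough illustration of why their training curves can look so different, here is a minimal pure-Python sketch of the textbook SGD and Adam update rules on a toy one-dimensional loss. The toy loss, the function names, and the starting point are assumptions made for illustration. The default hyperparameters mirror the documented Keras defaults (SGD learning rate 0.01; Adam learning rate 0.001, beta_1 0.9, beta_2 0.999, epsilon 1e-7), and the Keras implementation may differ in internal detail.

```python
import math

def sgd_step(w, grad, lr=0.01):
    """Plain SGD: move against the gradient, scaled by the learning rate."""
    return w - lr * grad

def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """One textbook Adam update; t is the 1-based step counter."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction for the zero init
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v

def toy_grad(w):
    """Gradient of the toy loss f(w) = (w - 3)^2 (an illustrative assumption)."""
    return 2.0 * (w - 3.0)

w_sgd = 0.0
w_adam, m, v = 0.0, 0.0, 0.0
for t in range(1, 101):
    w_sgd = sgd_step(w_sgd, toy_grad(w_sgd))
    w_adam, m, v = adam_step(w_adam, toy_grad(w_adam), m, v, t)

print(f"SGD  after 100 steps: w = {w_sgd:.4f}")
print(f"Adam after 100 steps: w = {w_adam:.4f}")
```

SGD's step is proportional to the raw gradient, while Adam's first step has a magnitude close to the learning rate whatever the gradient's scale, because the bias-corrected ratio is ±1 at t = 1. The toy result says nothing about which optimizer suits the face dataset; that is what the experiment below measures.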

no_epochs = 50

# model1 is trained with Adam, model2 with SGD
history_model1 = model1.fit(train_ds, epochs=no_epochs, verbose=1, validation_data=val_ds)
history_model2 = model2.fit(train_ds, epochs=no_epochs, verbose=1, validation_data=val_ds)

Output:

Epoch 1/50

90/90 [==============================] - 131s 1s/step - loss: 2.8359 - accuracy: 0.1500 - val_loss: 2.6786 - val_accuracy: 0.1083

Epoch 2/50

90/90 [==============================] - 127s 1s/step - loss: 2.1186 - accuracy: 0.3243 - val_loss: 2.3780 - val_accuracy: 0.3361

Epoch 3/50

90/90 [==============================] - 127s 1s/step - loss: 1.7924 - accuracy: 0.4229 - val_loss: 2.1311 - val_accuracy: 0.4000

Epoch 4/50

90/90 [==============================] - 128s 1s/step - loss: 1.6097 - accuracy: 0.4750 - val_loss: 1.9252 - val_accuracy: 0.4028

……

Epoch 46/50

90/90 [==============================] - 129s 1s/step - loss: 0.1764 - accuracy: 0.9465 - val_loss: 2.7244 - val_accuracy: 0.5528

Epoch 47/50

90/90 [==============================] - 131s 1s/step - loss: 0.1833 - accuracy: 0.9410 - val_loss: 2.3910 - val_accuracy: 0.5278

Epoch 48/50

90/90 [==============================] - 131s 1s/step - loss: 0.2151 - accuracy: 0.9340 - val_loss: 2.8985 - val_accuracy: 0.4389

Epoch 49/50

90/90 [==============================] - 130s 1s/step - loss: 0.1725 - accuracy: 0.9458 - val_loss: 2.3219 - val_accuracy: 0.5306

Epoch 50/50

90/90 [==============================] - 130s 1s/step - loss: 0.1764 - accuracy: 0.9375 - val_loss: 2.9708 - val_accuracy: 0.4972

Epoch 1/50

90/90 [==============================] - 130s 1s/step - loss: 3.0062 - accuracy: 0.1125 - val_loss: 2.7298 - val_accuracy: 0.1778

Epoch 2/50

90/90 [==============================] - 129s 1s/step - loss: 2.4726 - accuracy: 0.2271 - val_loss: 2.5667 - val_accuracy: 0.2250

Epoch 3/50

90/90 [==============================] - 129s 1s/step - loss: 2.2530 - accuracy: 0.2917 - val_loss: 2.3789 - val_accuracy: 0.2972

Epoch 4/50

90/90 [==============================] - 129s 1s/step - loss: 2.0593 - accuracy: 0.3458 - val_loss: 2.1837 - val_accuracy: 0.3194

…………

Epoch 47/50

90/90 [==============================] - 146s 2s/step - loss: 0.6468 - accuracy: 0.8021 - val_loss: 1.5983 - val_accuracy: 0.5194

Epoch 48/50

90/90 [==============================] - 146s 2s/step - loss: 0.6093 - accuracy: 0.8111 - val_loss: 1.6223 - val_accuracy: 0.4972

Epoch 49/50

90/90 [==============================] - 146s 2s/step - loss: 0.6051 - accuracy: 0.7979 - val_loss: 1.6518 - val_accuracy: 0.5139

Epoch 50/50

90/90 [==============================] - 150s 2s/step - loss: 0.6074 - accuracy: 0.8007 - val_loss: 1.6507 - val_accuracy: 0.5167

V. Evaluate the model
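Before plotting, the last few epochs of the two training logs can be compared numerically. The sketch below computes the gap between training and validation accuracy at the final epoch. The helper name `summarize_tail` is hypothetical, and the lists hold only epochs 47 to 50 copied from the logs above, not the full 50-epoch history.

```python
def summarize_tail(history):
    """Summarize the last recorded epoch of a Keras-style history dict."""
    final_train = history["accuracy"][-1]
    final_val = history["val_accuracy"][-1]
    return {
        "final_train_acc": final_train,
        "final_val_acc": final_val,
        # A large positive gap suggests the model is overfitting
        "gap": round(final_train - final_val, 4),
    }

# Epochs 47-50 for model1 (Adam), copied from the training log
adam_tail = {
    "accuracy": [0.9410, 0.9340, 0.9458, 0.9375],
    "val_accuracy": [0.5278, 0.4389, 0.5306, 0.4972],
}
# Epochs 47-50 for model2 (SGD), copied from the training log
sgd_tail = {
    "accuracy": [0.8021, 0.8111, 0.7979, 0.8007],
    "val_accuracy": [0.5194, 0.4972, 0.5139, 0.5167],
}

for name, tail in (("Adam", adam_tail), ("SGD", sgd_tail)):
    print(name, summarize_tail(tail))
```

With the real objects, `history_model1.history` and `history_model2.history` can be passed in directly, since they are dictionaries of per-epoch lists with the same keys.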

1. Accuracy and loss curves

from matplotlib.ticker import MultipleLocator

plt.rcParams['savefig.dpi']=300 # resolution (DPI) of saved figures

plt.rcParams['figure.dpi']=300 # resolution (DPI) of displayed figures

acc1=history_model1.history['accuracy']

acc2=history_model2.history['accuracy']

val_acc1=history_model1.history['val_accuracy']

val_acc2=history_model2.history['val_accuracy']

loss1=history_model1.history['loss']

loss2=history_model2.history['loss']

val_loss1=history_model1.history['val_loss']

val_loss2=history_model2.history['val_loss']

epochs_range=range(len(acc1))

plt.figure(figsize=(16,4))

plt.subplot(1,2,1)

plt.plot(epochs_range,acc1,label="Training Accuracy-Adam")

plt.plot(epochs_range,acc2,label="Training Accuracy-SGD")

plt.plot(epochs_range,val_acc1,label="Validation Accuracy-Adam")

plt.plot(epochs_range,val_acc2,label="Validation Accuracy-SGD")

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

# Set the tick spacing: one major tick per epoch on the x-axis

ax=plt.gca()

ax.xaxis.set_major_locator(MultipleLocator(1))

plt.subplot(1,2,2)

plt.plot(epochs_range,loss1,label="Training Loss-Adam")

plt.plot(epochs_range,loss2,label="Training Loss-SGD")

plt.plot(epochs_range,val_loss1,label="Validation Loss-Adam")

plt.plot(epochs_range,val_loss2,label="Validation Loss-SGD")

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

# Set the tick spacing: one major tick per epoch on the x-axis

ax=plt.gca()

ax.xaxis.set_major_locator(MultipleLocator(1))

plt.show()

Output:

2. Model evaluation

def test_accuracy_report(model):
    # evaluate() returns [loss, accuracy] for the metrics set in compile()
    score = model.evaluate(val_ds, verbose=0)
    print('Loss function: %s,accuracy:' % score[0], score[1])

test_accuracy_report(model2)

Output:

Loss function: 1.6506924629211426,accuracy: 0.5166666507720947

VI. Reflections

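This exercise taught me how to run a side-by-side experiment with different optimizers and use the results to pick the one that gives better accuracy. In this run, Adam reached about 94% training accuracy but only about 50% validation accuracy, with validation loss climbing to 2.97, while SGD finished at about 80% training accuracy and 51.7% validation accuracy with a validation loss of 1.65. Both models overfit, and SGD generalized slightly better.

The same approach extends to other settings: vary the model's parameters to build several variants, then compare their results to find the best configuration.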
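To make that idea concrete, here is a minimal sketch of how such a comparison could be organized. The helper names `build_experiment_grid` and `pick_best` and the candidate learning rates are hypothetical. The two measured entries use the final-epoch validation accuracy from the logs above, and their learning rates are assumed to be the Keras defaults, since both optimizers were created without arguments.

```python
from itertools import product

def build_experiment_grid(optimizer_names, learning_rates):
    """Return one config dict per (optimizer, learning rate) combination."""
    return [
        {"optimizer": name, "learning_rate": lr}
        for name, lr in product(optimizer_names, learning_rates)
    ]

def pick_best(results, metric="val_accuracy"):
    """Return the result dict with the highest value of the chosen metric."""
    return max(results, key=lambda r: r[metric])

# Results already measured in this post (final-epoch validation accuracy)
measured = [
    {"optimizer": "Adam", "learning_rate": 0.001, "val_accuracy": 0.4972},
    {"optimizer": "SGD", "learning_rate": 0.01, "val_accuracy": 0.5167},
]
print("Best so far:", pick_best(measured))

# Hypothetical next round: each config would be turned into an optimizer,
# e.g. tf.keras.optimizers.Adam(learning_rate=...), and passed to create_model
grid = build_experiment_grid(["Adam", "SGD"], [1e-2, 1e-3, 1e-4])
for config in grid:
    print(config)
```

Keeping every other setting fixed (data split seed, epochs, model head) is what makes the comparison between configurations meaningful.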
