Planning-oriented Autonomous Driving

面向规划的自动驾驶

https://github.com/OpenDriveLab/UniAD

Abstract

Modern autonomous driving system is characterized as modular tasks in sequential order, i.e., perception, prediction, and planning. In order to perform a wide diversity of tasks and achieve advanced-level intelligence, contemporary approaches either deploy standalone models for individual tasks, or design a multi-task paradigm with separate heads. However, they might suffer from accumulative errors or deficient task coordination. Instead, we argue that a favorable framework should be devised and optimized in pursuit of the ultimate goal, i.e., planning of the self-driving car. Oriented at this, we revisit the key components within perception and prediction, and prioritize the tasks such that all these tasks contribute to planning. We introduce Unified Autonomous Driving (UniAD), a comprehensive framework up-to-date that incorporates full-stack driving tasks in one network. It is exquisitely devised to leverage advantages of each module, and provide complementary feature abstractions for agent interaction from a global perspective. Tasks are communicated with unified query interfaces to facilitate each other toward planning. We instantiate UniAD on the challenging nuScenes benchmark. With extensive ablations, the effectiveness of using such a philosophy is proven by substantially outperforming previous state-of-the-arts in all aspects. Code and models are public.

现代自动驾驶系统以顺序执行的模块化任务为特点,即感知、预测和规划。为了执行多样化的任务并达到高级智能水平,当代方法要么为每个单独任务部署独立模型,要么设计具有不同分支的多任务模型。然而,这些方法可能会遇到累积误差或任务协调不足的问题。相反,我们认为应该设计并优化一个有利的框架,以实现最终目标,即自动驾驶汽车的规划。为此,我们重新审视了感知和预测中的关键组件,并优先考虑任务,以确保所有这些任务都能促进规划。我们提出了统一自动驾驶(UniAD),这是一个集成了全栈驾驶任务的全面框架。它精心设计,旨在利用每个模块的优势,并从全局视角为代理交互提供互补的特征抽象。任务通过统一的查询接口进行通信,以相互促进规划。我们在具有挑战性的nuScenes基准测试上实现了UniAD。通过广泛的消融研究,证明了采用这种理念的有效性,它在所有方面都显著超越了以前的最先进水平。代码和模型是公开的。

1. Introduction

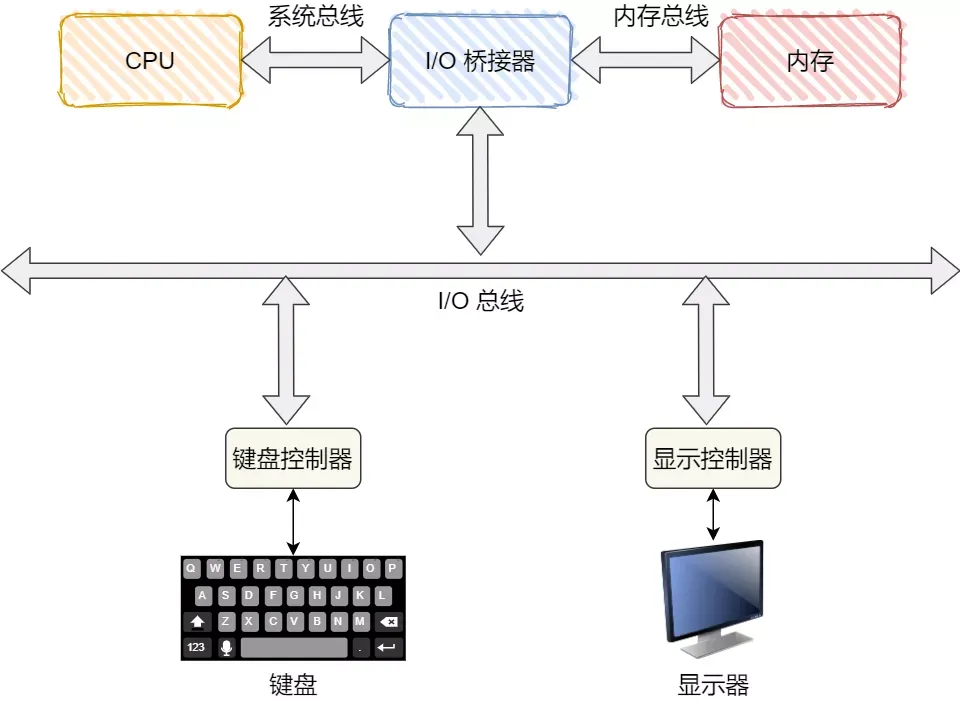

With the successful development of deep learning, autonomous driving algorithms are assembled with a series of tasks1, including detection, tracking, mapping in perception; and motion and occupancy forecast in prediction. As depicted in Fig. 1(a), most industry solutions deploy stan-dalone models for each task independently [68, 71], as long as the resource bandwidth of the onboard chip allows. Although such a design simplifies the R&D difficulty across teams, it bares the risk of information loss across modules, error accumulation and feature misalignment due to the isolation of optimization targets [57, 66, 82].

随着深度学习技术的快速发展,自动驾驶算法由多个任务组成,包括感知任务中的物体检测、目标跟踪和地图构建;以及预测任务中的动作预测和占用预测。如图1(a)所示,大多数行业解决方案会为每个任务独立部署单独的模型,只要车载芯片的资源带宽足够。尽管这种设计方法简化了不同团队之间的研发难度,但它也带来了信息在不同模块间丢失、误差累积以及由于优化目标隔离导致的特征不对齐的风险。

图 1. 展示了自动驾驶框架的多种设计对比。

(a) 大多数行业解决方案为不同任务独立部署了各自的模型。

(b) 多任务学习框架通过共享一个基础网络结构,但为不同任务分配了不同的任务头部。

© 端到端的设计将感知和预测模块整合在一起。之前的尝试要么是在 (c.1) 中直接对规划进行优化,要么是在 (c.2) 中构建包含部分组件的系统。而我们则在 (c.3) 中提出,一个理想的系统不仅应该是面向规划的,还应该适当地组织前期任务,以便于促进规划的进行。

A more elegant design is to incorporate a wide span of tasks into a multi-task learning (MTL) paradigm, by plugging several task-specific heads into a shared feature extractor as shown in Fig. 1(b). This is a popular practice in many domains, including general vision [79,92,108], autonomous driving2 [15, 60, 101, 105], such as Transfuser [20], BEV-erse [105], and industrialized products, e.g., Mobileye [68], Tesla [87], Nvidia [71], etc. In MTL, the co-training strategy across tasks could leverage feature abstraction; it could effortlessly extend to additional tasks, and save computation cost for onboard chips. However, such a scheme may cause undesirable “negative transfer” [23, 64].

一种更优雅的设计方案是将广泛的任务整合到多任务学习(MTL)框架中,通过将多个特定于任务的头部接入一个共享的特征提取器,如图1(b)所示。这种做法在包括通用视觉[79,92,108]、自动驾驶[15,60,101,105]等多个领域中非常流行,例如Transfuser[20]、BEV-erse[105]以及一些产业化产品,如Mobileye[68]、Tesla[87]、Nvidia[71]等。在MTL中,不同任务之间的共同训练策略可以利用特征抽象的优势;它能够轻松扩展到更多的任务,同时为车载芯片节省计算成本。然而,这种方案可能会引发不希望的“负迁移”现象[23,64]。

By contrast, the emergence of end-to-end autonomous driving [11, 15, 19, 38, 97] unites all nodes from perception, prediction and planning as a whole. The choice and priority of preceding tasks should be determined in favor of planning. The system should be planning-oriented, exquisitely designed with certain components involved, such that there are few accumulative error as in the standalone option or negative transfer as in the MTL scheme. Table 1 describes the task taxonomy of different framework designs.

与之相对,端到端自动驾驶[11, 15, 19, 38, 97]将从感知、预测到规划的所有环节整合为一个整体。选择和优先级的任务应当以规划为重。系统应该以规划为导向,精心设计,包含特定组件,以便像独立模型那样减少累积误差,或者像多任务学习(MTL)方案那样减少负迁移现象。表1 描述了不同框架设计中的任务分类。

表 1. 任务对比和分类。"设计"一栏是按照图 1 进行分类的。"Det."代表三维物体检测,"Map"指的是在线地图制作,而**"Occ."表示占用预测图**。†:这些研究工作虽然不是直接为了规划而提出的,但它们仍然体现了联合感知和预测的共同精神。UniAD执行五个基本的驾驶任务,以帮助实现规划。

Following the end-to-end paradigm, one “tabula-rasa” practice is to directly predict the planned trajectory, without any explicit supervision of perception and prediction as shown in Fig. 1(c.1). Pioneering works [14,16,21,22,78,95, 97, 106] verified this vanilla design in the closed-loop simulation [26]. While such a direction deserves further exploration, it is inadequate in safety guarantee and interpretability, especially for highly dynamic urban scenarios. In this paper, we lean toward another perspective and ask the following question: Toward a reliable and planning-oriented autonomous driving system, how to design the pipeline in favor of planning? which preceding tasks are requisite?

遵循端到端的设计理念,一种“白纸”做法是直接预测计划好的轨迹,而无需对感知和预测进行显式监督,如图1(c.1)所示。一些开创性的研究[14,16,21,22,78,95,97,106]在闭环仿真[26]中验证了这种基本设计的有效性。虽然这种方向值得进一步探索,但它在安全保障和可解释性方面存在不足,特别是在高度动态的城市环境中。在本文中,我们从另一个角度出发,提出了以下问题:为了构建一个可靠且以规划为导向的自动驾驶系统,应该如何设计流程以支持规划?哪些前期任务是必不可少的?

An intuitive resolution would be to perceive surrounding objects, predict future behaviors and plan a safe maneuver explicitly, as illustrated in Fig. 1(c.2). Contemporary approaches [11, 30, 38, 57, 82] provide good insights and achieve impressive performance. However, we argue that the devil lies in the details; previous works more or less fail to consider certain components (see block (c.2) in Table 1), being reminiscent of the planning-oriented spirit. We elaborate on the detailed definition and terminology, the necessity of these modules in the Supplementary.

直观的解决办法是感知周围的物体,预测它们未来的行为,并明确地规划一个安全的行驶路径,如图1(c.2)所展示的那样。现代的方法[11, 30, 38, 57, 82]提供了深刻的见解,并取得了令人瞩目的成果。然而,我们认为问题往往隐藏在细节之中;先前的研究或多或少没有充分考虑到某些组件(参见表1中的(c.2)部分),这与面向规划的精神有所呼应。我们在补充材料中详细解释了这些模块的具体定义和术语,以及它们的重要性。

To this end, we introduce UniAD, a Unified Autonomous Driving algorithm framework to leverage five essential tasks toward a safe and robust system as depicted in Fig. 1(c.3) and Table 1(c.3). UniAD is designed in a planning-oriented spirit. We argue that this is not a simple stack of tasks with mere engineering effort. A key component is the querybased design to connect all nodes. Compared to the classic bounding box representation, queries benefit from a larger receptive field to soften the compounding error from upstream predictions. Moreover, queries are flexible to model and encode a variety of interactions, e.g., relations among multiple agents. To the best of our knowledge, UniAD is the first work to comprehensively investigate the joint cooperation of such a variety of tasks including perception, prediction and planning in the field of autonomous driving.

为了实现这一目标,我们提出了UniAD,一个统一的自动驾驶算法框架,它利用五个基本任务来实现一个安全且稳健的系统,如图1(c.3)和表1(c.3)所展示。UniAD以面向规划的理念设计。我们认为,这不仅仅是简单地将任务堆叠起来,而是需要真正的工程努力。一个关键的组成部分是基于查询的设计,用以连接所有节点。与经典的边界框表示法相比,查询由于具有更大的感知范围,从而能够减轻上游预测带来的累积误差。此外,查询在建模和编码各种交互方面具有灵活性,例如,多个代理之间的关系。据我们所知,UniAD是第一个全面研究在自动驾驶领域内,包括感知、预测和规划在内的多样化任务的联合协作的工作。

The contributions are summarized as follows. (a) we embrace a new outlook of autonomous driving framework following a planning-oriented philosophy, and demonstrate the necessity of effective task coordination, rather than standalone design or simple multi-task learning. (b) we present UniAD, a comprehensive end-to-end system that leverages a wide span of tasks. The key component to hit the ground running is the query design as interfaces connecting all nodes. As such, UniAD enjoys flexible intermediate representations and exchanging multi-task knowledge toward planning. © we instantiate UniAD on the challenging benchmark for realistic scenarios. Through extensive ablations, we verify the superiority of our method over previous state-of-the-arts in all aspects.

贡献可以概括为以下几点:

(a) 我们采纳了一种新的自动驾驶框架视角,遵循以规划为导向的理念,并证明了有效任务协调的必要性,而不是单独设计或简单的多任务学习方法。

(b) 我们介绍了UniAD,这是一个全面的端到端系统,利用了广泛的任务。关键的启动组件是查询设计,它作为连接所有节点的接口。因此,UniAD具有灵活的中间表示形式,并能够为规划目的交流多任务知识。

© 我们在具有挑战性的现实场景基准测试中实现了UniAD。通过广泛的消融研究,我们验证了我们的方法在所有方面都优于以前的最先进水平。

We hope this work could shed some light on the targetdriven design for the autonomous driving system, providing a starting point for coordinating various driving tasks.

我们期望这项研究能够为自动驾驶系统的以目标驱动的设计思路带来一些启发,为整合不同的驾驶任务提供一个初步的出发点。

2. Methodology

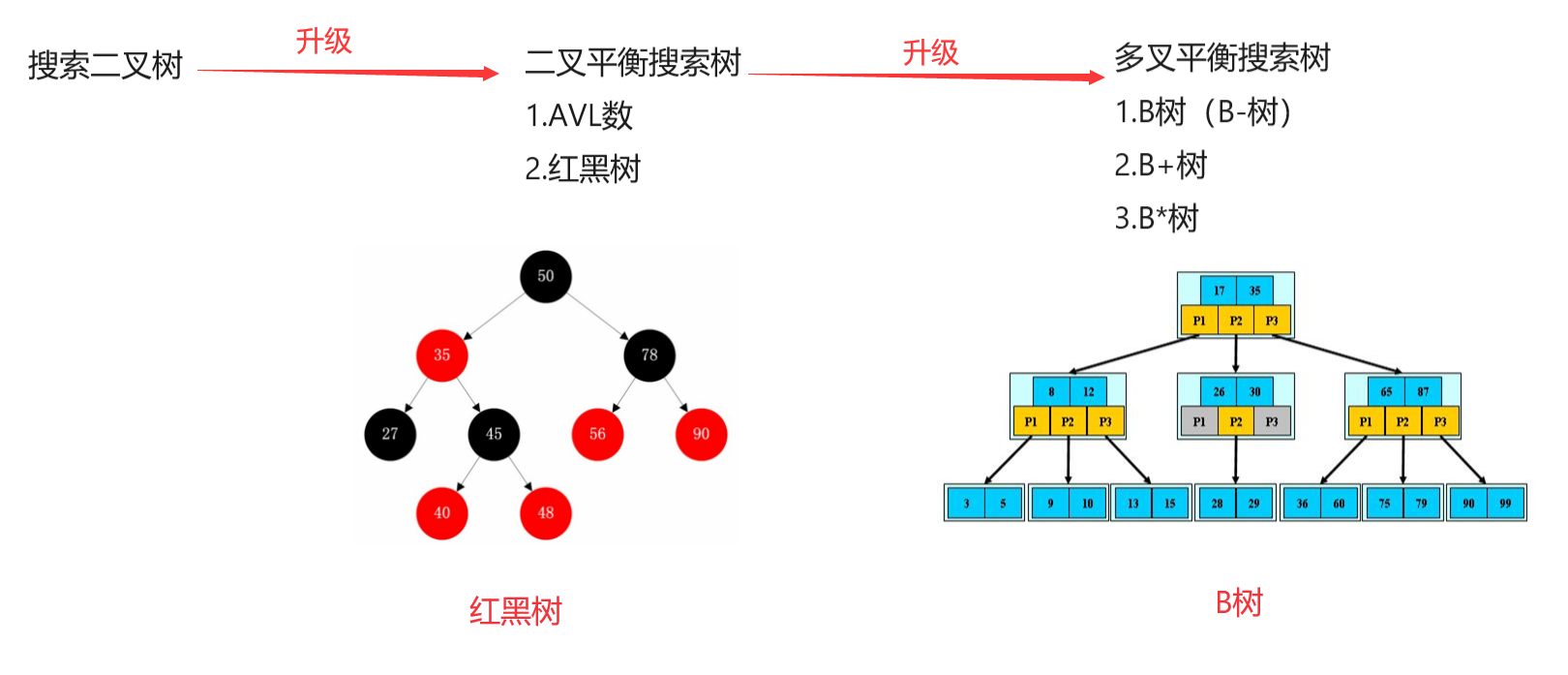

Overview. As illustrated in Fig. 2, UniAD comprises four transformer decoder-based perception and prediction modules and one planner in the end. Queries Q play the role of connecting the pipeline to model different interactions of entities in the driving scenario. Specifically, a sequence of multi-camera images is fed into the feature extractor, and the resulting perspective-view features are transformed into a unified bird’s-eye-view (BEV) feature B by an off-theshelf BEV encoder in BEVFormer [55]. Note that UniAD is not confined to a specific BEV encoder, and one can utilize other alternatives to extract richer BEV representations with long-term temporal fusion [31, 74] or multi-modality fusion [58,64]. In TrackFormer, the learnable embeddings that we refer to as track queries inquire about the agents’ information from B to detect and track agents. MapFormer takes map queries as semantic abstractions of road elements (e.g., lanes and dividers) and performs panoptic seg-mentation of the map. With the above queries representing agents and maps, MotionFormer captures interactions among agents and maps and forecasts per-agent future trajectories. Since the action of each agent can significantly impact others in the scene, this module makes joint predictions for all agents considered. Meanwhile, we devise an ego-vehicle query to explicitly model the ego-vehicle and enable it to interact with other agents in such a scenecentric paradigm. OccFormer employs the BEV feature B as queries, equipped with agent-wise knowledge as keys and values, and predicts multi-step future occupancy with agent identity preserved. Finally, Planner utilizes the expressive ego-vehicle query from MotionFormer to predict the planning result, and keep itself away from occupied regions predicted by OccFormer to avoid collisions.

概述。如图2 所示,UniAD由四个基于transformer解码器的感知和预测模块和一个规划器组成。查询Q作为连接管道的角色,用于模拟驾驶场景中实体的不同交互。具体来说,一系列多摄像头图像被输入到特征提取器中,然后由现成的BEV编码器在BEFormer[55]中将生成的透视图视图特征转换为统一的鸟瞰视图(BEV)特征B。请注意,UniAD不局限于特定的BEV编码器,人们可以使用其他替代方案来提取具有长期时间融合[31, 74]或多模态融合[58,64]的更丰富的BEV表示。在TrackFormer中,我们所说的可学习的嵌入,即轨迹查询,从B中查询代理的信息以检测和跟踪代理。MapFormer采用地图查询作为道路元素(例如,车道和分隔线)的语义抽象,并执行地图的全视图分割。有了上述代表代理和地图的查询,MotionFormer捕捉代理和地图之间的交互,并预测每个代理的未来轨迹。由于每个代理的行为可能显著影响场景中的其他代理,这个模块会考虑所有代理的联合预测。同时,我们设计了一个自车查询来显式地建模自车,并使其能够在这个以场景为中心的范例中与其他代理交互。OccFormer使用BEV特征B作为查询,配备有代理知识作为键和值,并预测具有代理身份保留的多步未来占用。最后,规划器使用来自MotionFormer的表达性强车查询来预测规划结果,并避免与OccFormer预测的占用区域发生碰撞。

图 2. 展示了统一自动驾驶(UniAD)的流程。这个流程是根据面向规划的设计理念精心构建的。UniAD 不仅仅是一系列任务的简单叠加,而是深入研究了感知和预测中每个模块的作用,充分利用了从前面的节点到驾驶场景中的最终规划的联合优化优势。所有的感知和预测模块都采用了基于 transformer 解码器的结构设计,使用任务查询作为连接各个节点的接口。在流程的末端是一个基于注意力机制的简单规划器,它负责预测自我车辆的未来路径点,同时考虑了从前面的节点提取出的知识。图中展示的占用地图仅用于视觉辅助理解。

2.1. Perception: Tracking and Mapping

TrackFormer. It jointly performs detection and multiobject tracking (MOT) without non-differentiable postprocessing. Inspired by [100, 104], we take a similar query design. Besides the conventional detection queries utilized in object detection [8, 109], additional track queries are introduced to track agents across frames. Specifically, at each time step, initialized detection queries are responsible for detecting newborn agents that are perceived for the first time, while track queries keep modeling those agents detected in previous frames. Both detection queries and track queries capture the agent abstractions by attending to BEV feature B. As the scene continuously evolves, track queries at the current frame interact with previously recorded ones in a self-attention module to aggregate temporal information, until the corresponding agents disappear completely (untracked in a certain time period). Similar to [8], TrackFormer contains N layers and the final output state QA provides knowledge of Na valid agents for downstream prediction tasks. Besides queries encoding other agents surrounding the ego-vehicle, we introduce one particular ego-vehicle query in the query set to explicitly model the self-driving vehicle itself, which is further used in planning.

TrackFormer 是 UniAD 框架中的一个核心模块,它同时执行检测和多目标跟踪(MOT),无需进行不可微分的后处理步骤。TrackFormer 的设计受到了 [100, 104] 的启发,采用了类似的查询设计理念。除了在目标检测中常用的检测查询之外,还引入了额外的跟踪查询来实现跨帧的代理跟踪。具体来说,在每个时间点,初始化的检测查询负责识别首次被感知到的新代理,而跟踪查询则继续对之前帧中已检测到的代理进行建模。无论是检测查询还是跟踪查询,都通过关注 BEV 特征 B 来捕获代理的特征表示。随着场景的持续变化,当前帧中的跟踪查询会与之前记录的查询在自注意力模块中相互作用,以聚合时间信息,直到相关代理完全消失(在一定时间段内未被跟踪)。

与 [8] 类似,TrackFormer 包含 N 层,最终输出状态 QA 为下游预测任务提供了 Na 个有效代理的知识。除了编码周围其他代理的查询外,TrackFormer 还在查询集中特别引入了一个自我车辆查询,用于显式地建模自动驾驶车辆本身,这在后续的规划任务中将被进一步使用。

MapFormer. We design it based on a 2D panoptic segmentation method Panoptic SegFormer [56]. We sparsely represent road elements as map queries to help downstream motion forecasting, with location and structure knowledge encoded. For driving scenarios, we set lanes, dividers and crossings as things, and the drivable area as stuff [50]. MapFormer also has N stacked layers whose output results of each layer are all supervised, while only the updated queries QM in the last layer are forwarded to MotionFormer for agent-map interaction.

MapFormer 是基于 2D 全景分割方法 Panoptic SegFormer [56] 设计的组件。它将道路元素以稀疏的地图查询形式表示,以辅助后续的运动预测任务,同时将位置和结构信息进行编码。在驾驶场景中,我们将车道、分隔线和交叉口定义为“事物”(things),而将可行驶区域定义为“物质”(stuff)[50]。

MapFormer 也采用了 N 层堆叠的设计,每一层的输出都会受到监督。但是,只有最后一层中更新后的查询 QM 会被传递到 MotionFormer,用于处理代理与地图之间的交互。

2.2. Prediction: Motion Forecasting

Recent studies have proven the effectiveness of transformer structure on the motion task [43,44,63,69,70,84,99], inspired by which we propose MotionFormer in the end-toend setting. With highly abstract queries for dynamic agents QA and static map QM from TrackFormer and MapFormer respectively, MotionFormer predicts all agents’ multimodal future movements, i.e., top-k possible trajectories, in a scene-centric manner. This paradigm produces multi-agent trajectories in the frame with a single forward pass, which greatly saves the computational cost of aligning the whole scene to each agent’s coordinate [49]. Meanwhile, we pass the ego-vehicle query from TrackFormer through MotionFormer to engage ego-vehicle to interact with other agents, considering the future dynamics. Formally, the output motion is formulated as {xˆi,k ∈ RT×2|i = 1, . . . , Na; k = 1, . . . , K} , where i indexes the agent, k indexes the modality of trajectories and T is the length of prediction horizon.

近期的研究已经证实了 transformer 结构在运动预测任务上的有效性 [43,44,63,69,70,84,99],正是基于这些研究成果,我们在端到端的框架中引入了 MotionFormer。MotionFormer 使用 TrackFormer 和 MapFormer 提供的动态代理的高度抽象查询 QA 和静态地图的查询 QM,以场景为中心的方式预测所有代理的多模态未来运动,也就是 top-k 个可能的轨迹。这种模式可以在单次前向传播中生成框架内的多代理轨迹,这大大减少了将整个场景与每个代理坐标系对齐所需的计算成本 [49]。同时,我们将 TrackFormer 中的自我车辆查询传递给 MotionFormer,使自我车辆能够与其他代理进行交互,并考虑未来的动态变化。具体来说,输出的运动被表达为

{

x

^

i

,

k

∈

R

T

×

2

∣

i

=

1

,

.

.

.

,

N

a

;

k

=

1

,

.

.

.

,

K

}

\{{x̂_{i,k} ∈ \mathbb{R}^{T×2} | i = 1, ..., N_a; k = 1, ..., K}\}

{x^i,k∈RT×2∣i=1,...,Na;k=1,...,K},其中 i 表示代理的索引,k 表示轨迹模态的索引,T 代表预测时间范围的长度。

MotionFormer. It is composed of N layers, and each layer captures three types of interactions: agent-agent,agent-map and agent-goal point. For each motion query Qi,k (defined later, and we omit subscripts i, k in the following context for simplicity), its interactions between other agents QA or map elements QM could be formulated as:

MotionFormer 由 N 层构成,每一层都负责捕捉三种类型的交互:代理之间的交互、代理与地图的交互,以及代理与目标点的交互。对于每一个运动查询

Q

i

,

k

Q_{i,k}

Qi,k(将在之后定义,为了简化表述,在接下来的上下文中我们将省略下标 i, k),它与其他代理 QA 或地图元素 QM 之间的交互。这种设计使得 MotionFormer 能够在每一层中细致地处理并整合来自不同代理和环境元素的复杂交互,从而生成准确的运动预测。代理间的交互帮助模型理解不同交通参与者的相互影响,代理与地图的交互使模型能够考虑道路结构和环境特征,而代理与目标点的交互则使模型能够预测代理朝目标方向的运动趋势。

where MHCA, MHSA denote multi-head cross-attention and multi-head self-attention [91] respectively. As it is also important to focus on the intended position, i.e., goal point, to refine the predicted trajectory, we devise an agent-goal point attention via deformable attention [109] as follows:

这里,MHCA 和 MHSA 分别代表多头交叉注意力和多头自注意力[91]。由于关注预期位置,也就是目标点,对于细化预测的轨迹同样至关重要,我们通过变形注意力机制[109]设计了一种代理到目标点的注意力机制, 这种机制使得模型能够不仅考虑当前的交互,还考虑代理预期达到的目标点,从而提升轨迹预测的准确性和目标导向性。变形注意力机制可以动态调整其感知范围,以便更好地适应目标点周围环境的变化。

where xˆl−1 T is the endpoint of the predicted trajectory of previous layer. DeformAttn(q,r,x), a deformable attention module, takes in the query q, reference point r and spatial feature x. It performs sparse attention on the spatial feature around the reference point. Through this, the predicted trajectory is further refined as aware of the endpoint surroundings. All three interactions are modeled in parallel, where the generated Qa, Qm and Qg are concatenated and passed to a multi-layer perceptron (MLP), resulting query context Qctx. Then, Qctx is sent to the successive layer for refinement or decoded as prediction results at the last layer.

在这里,

x

^

T

l

−

1

\hat{x}^{l-1}_T

x^Tl−1 表示前一层预测出的轨迹终点。

D

e

f

o

r

m

A

t

t

n

(

q

,

r

,

x

)

DeformAttn(q, r, x)

DeformAttn(q,r,x) 是一个变形注意力模块,它接收查询 ( q )、参考点 ( r ) 和空间特征 ( x )。该模块在参考点周围的空间特征上执行稀疏注意力操作。这样,预测的轨迹就能够根据终点周围的环境进一步进行精细化调整。代理-代理、代理-地图和代理-目标点这三种交互是并行建模的,生成的 ( Q_a )、( Q_m ) 和 ( Q_g ) 被串联起来,并通过一个多层感知器(MLP),生成查询上下文

Q

c

t

x

Q_{ctx}

Qctx。随后,

Q

c

t

x

Q_{ctx}

Qctx 被传递到下一层以进行进一步的细化,或者在最后一层被解码为预测结果。

Motion queries. The input queries for each layer of MotionFormer, termed motion queries, comprise two components: the query context Qctx produced by the preceding layer as described before, and the query position Qpos. Specifically, Qpos integrates the positional knowledge in four-folds as in Eq. (3): (1) the position of scene-level anchors Is; (2) the position of agent-level anchors Ia; (3) current location of the agent i and (4) the predicted goal point.

运动查询。在 MotionFormer 中,每一层的输入查询,称为运动查询,由两部分组成:一部分是由前一层生成的查询上下文

Q

c

t

x

Q_{ctx}

Qctx,另一部分是查询位置

Q

p

o

s

Q_{pos}

Qpos。具体来说,

Q

p

o

s

Q_{pos}

Qpos 融合了四方面的位置信息,如公式(3)所示:(1) 场景级锚点的位置

I

s

I^s

Is;(2) 代理级锚点的位置

I

a

I^a

Ia;(3) 代理 i 的当前位置;(4) 预测的目标点。

这种设计使得模型能够在每一层都考虑到代理的当前状态和目标点,从而更精确地模拟和预测代理的运动。通过结合场景级和代理级锚点的位置信息,模型能够更深入地理解交通环境和代理之间的相互关系。

Here the sinusoidal position encoding PE(·) followed by an MLP is utilized to encode the positional points and xˆ0 T is set as Is at the first layer (subscripts i, k are also omitted). The scene-level anchor represents prior movement statistics in a global view, while the agent-level anchor captures the possible intention in the local coordinate. They are both clustered by k-means algorithm on the endpoints of groundtruth trajectories, to narrow down the uncertainty of prediction. Contrary to the prior knowledge, the start point provides customized positional embedding for each agent, and the predicted endpoint serves as a dynamic anchor optimized layer-by-layer in a coarse-to-fine fashion.

这里采用了正弦位置编码

P

E

(

⋅

)

PE(\cdot)

PE(⋅),随后通过一个多层感知器(MLP)对位置点进行编码,第一层中将

x

^

0

T

\hat{x}_0^T

x^0T 设定为场景级锚点

I

s

I^s

Is(同样,下标 i, k 在此被省略)。场景级锚点反映了全局视角下的先验运动统计信息,而代理级锚点则捕捉了局部坐标系中可能的意图。这两个锚点都是基于真实轨迹端点通过 k-means 聚类算法来确定的,目的是减少预测的不确定性。与这些先验知识相对,起始点为每个代理提供了定制化的位置嵌入,而预测的终点则充当了一个动态锚点,它在每一层中以从粗糙到精细的方式进行优化。

Non-linear Optimization. Different from conventional motion forecasting works which have direct access to ground truth perceptual results, i.e., agents’ location and corresponding tracks, we consider the prediction uncertainty from the prior module in our end-to-end paradigm. Brutally regressing the ground-truth waypoints from an imperfect detection position or heading angle may lead to unrealistic trajectory predictions with large curvature and acceleration. To tackle this, we adopt a non-linear smoother [7] to adjust the target trajectories and make them physically feasible given an imprecise starting point predicted by the upstream module. The process is:

非线性优化。与那些能够直接获取真实感知结果——即代理的位置和相应轨迹——的传统运动预测方法不同,在端到端的框架中,我们考虑了来自前一个模块的预测不确定性。如果从不准确的检测位置或航向角直接回归真实航点,可能会导致预测出具有大曲率和加速度的不切实际的轨迹。为了解决这个问题,我们采用了非线性平滑器[7]来调整目标轨迹,使其在给定上游模块预测的不精确起点的情况下物理上可行。这个过程包括:

- 初始化:使用前一个模块预测的起点和目标点来初始化轨迹。

- 迭代调整:通过非线性平滑器对轨迹进行迭代调整,以最小化预测轨迹与物理可行性之间的差异。

- 平滑约束:应用平滑约束以避免轨迹出现不切实际的曲率变化。

- 终止条件:当轨迹满足一定的物理合理性标准或达到预定的迭代次数时,优化过程结束。

where x˜ and x˜∗ denote the ground-truth and smoothed trajectory, x is generated by multiple-shooting [3], and the cost function is as follows:

在这里,

x

~

\tilde{x}

x~ 表示真实轨迹(ground-truth trajectory),而

x

~

∗

\tilde{x}^*

x~∗ 表示经过平滑处理后的轨迹(smoothed trajectory)。

x

x

x 是通过多重射击法(multiple-shooting)[3]生成的,成本函数(cost function)如下所示:

多重射击法是一种用于解决边界值问题的数值优化技术,它将问题分解为多个子问题,每个子问题都有自己的初始条件和目标条件,然后通过迭代求解这些子问题来逼近整个问题的解。

where λ xy and λgoal are hyperparameters, the kinematic function set Φ has five terms including jerk, curvature, curvature rate, acceleration and lateral acceleration. The cost function regularizes the target trajectory to obey kinematic constraints. This target trajectory optimization is only conducted in training and does not affect inference.

这里的

λ

x

y

\lambda_{xy}

λxy 和

λ

goal

\lambda_{\text{goal}}

λgoal 是超参数,而动力学函数集合

Φ

\Phi

Φ 包括五个术语:急动度、曲率、曲率变化率、加速度和侧向加速度。成本函数用于使目标轨迹符合动力学约束。这种目标轨迹的优化仅在训练阶段进行,并不会影响模型的推理过程。

2.3. Prediction: Occupancy Prediction

Occupancy grid map is a discretized BEV representation where each cell holds a belief indicating whether it is occupied, and the occupancy prediction task is to discover how the grid map changes in the future. Previous approaches utilize RNN structure for temporally expanding future predictions from observed BEV features [35,38,105]. However, they rely on highly hand-crafted clustering postprocessing to generate per-agent occupancy maps, as they are mostly agent-agnostic by compressing BEV features as a whole into RNN hidden states. Due to the deficient usage of agent-wise knowledge, it is challenging for them to predict the behaviors of all agents globally, which is essential to understand how the scene evolves. To address this, we present OccFormer to incorporate both scene-level and agent-level semantics in two aspects: (1) a dense scene feature acquires agent-level features via an exquisitely designed attention module when unrolling to future horizons; (2) we produce instance-wise occupancy easily by a matrix multiplication between agent-level features and dense scene features without heavy post-processing.

占用网格地图是一种将鸟瞰视图(BEV)离散化的表现形式,地图上的每个单元格都有一个表示其被占用与否的信念值。占用预测任务旨在探索这个网格地图在未来的变化情况。之前的方法利用递归神经网络(RNN)结构,基于观察到的 BEV 特征来扩展对未来的预测[35,38,105]。但这些方法大多需要依赖手工定制的聚类后处理步骤来生成每个代理的占用地图,因为它们通常会将 BEV 特征作为一个整体压缩进 RNN 的隐状态,而不考虑个别代理的特性。由于缺乏对个别代理知识的利用,这些方法在预测所有代理的全局行为时面临挑战,而这对于理解场景的演变至关重要。为了解决这个问题,我们提出了 OccFormer,它在两个层面上整合了场景级和代理级语义信息:(1) 在展开到未来时间视野的过程中,密集的场景特征通过一个精心设计的关注模块来获取代理级特征;(2) 我们通过代理级特征与密集场景特征之间的矩阵乘法,无需复杂的后处理步骤,就能轻松生成针对每个实例的占用地图。

OccFormer is composed of To sequential blocks where To indicates the prediction horizon. Note that To is typically smaller than T in the motion task, due to the high computation cost of densely represented occupancy. Each block takes as input the rich agent features Gt and the state (dense feature) Ft−1 from the previous layer, and generates Ft for timestep t considering both instance- and scene-level information. To get agent feature Gt with dynamics and spatial priors, we max-pool motion queries from MotionFormer in the modality dimension denoted as QX ∈ RNa×D, with D as the feature dimension. Then we fuse it with the upstream track query QA and current position embedding PA via a temporal-specific MLP:

OccFormer由预测时间范围

T

o

T_o

To 个序列块组成,其中

T

o

T_o

To 代表预测的时间范围。请注意,由于密集表示的占用预测的高计算成本,

T

o

T_o

To 通常小于运动预测任务中的

T

T

T。每个块接收丰富的代理特征

G

t

G_t

Gt 和前一层的状态(密集特征)

∗

∗

F

t

−

1

**F_{t-1}

∗∗Ft−1 作为输入,并生成 考虑实例级和场景级信息的时间步

t

t

t 的 特征

F

t

F_t

Ft。为了 获得具有动态和空间先验的代理特征

G

t

G_t

Gt,我们将来自MotionFormer的运动查询在模态维度上进行最大池化**,得到

Q

X

∈

R

N

a

×

D

Q_X \in \mathbb{R}^{N_a \times D}

QX∈RNa×D,其中

D

D

D 是特征维度。然后,我们通过一个特定于时间的多层感知器(MLP)将其与上游的轨迹查询

Q

A

Q_A

QA 和当前位置嵌入

P

A

P_A

PA 进行融合:

这个过程包括以下几个步骤:

- 运动查询的最大池化:从MotionFormer获取的运动查询通过最大池化来捕获代理的动态特征。

- 特征融合:将最大池化后的运动查询特征与轨迹查询和位置嵌入相结合。

- 时间特定的MLP:使用一个针对时间敏感的MLP来进一步处理和整合特征,以捕获时间依赖性。

这种设计使得OccFormer能够在生成未来占用网格地图时,同时考虑代理的动态行为和场景的空间结构。

where [·] indicates concatenation. For the scene-level knowledge, the BEV feature B is downscaled to 1/4 resolution for training efficiency to serve as the first block input F 0. To further conserve training memory, each block follows a downsample-upsample manner with an attention module in between to conduct pixel-agent interaction at 1/8 downscaled feature, denoted as Fds t .

在这里,“[·]”表示的是串联操作。对于场景级知识,BEV特征

B

B

B 被降低到1/4的分辨率以提高训练效率,并作为第一个序列块的输入

F

0

F^0

F0。为了进一步节省训练时的内存,每个序列块都采用了先下采样再上采样的方式,在中间通过一个注意力模块来进行1/8分辨率下的特征的像素-代理交互,这种特征表示为

F

t

ds

F^{\text{ds}}_t

Ftds。

这个过程包括以下几个步骤:

- 下采样:将BEV特征 B B B 的分辨率降低,以减少计算量和内存使用。

- 注意力模块:在1/8分辨率的特征上应用注意力机制,以实现像素与代理之间的交互。

- 上采样:将经过注意力模块处理的特征重新上采样到适合后续处理的分辨率。

这种设计使得OccFormer在保持计算效率的同时,能够通过注意力机制有效地结合场景级和代理级的信息。

Pixel-agent interaction is designed to unify the sceneand agent-level understanding when predicting future occupancy. We take the dense feature Fds t as queries, instancelevel features as keys and values to update the dense feature over time. Detailedly, Fds t is passed through a self-attention layer to model responses between distant grids, then a crossattention layer models interactions between agent features Gt and per-grid features. Moreover, to align the pixel-agent correspondence, we constrain the cross-attention by an attention mask, which restricts each pixel to only look at the agent occupying it at timestep t, inspired by [17]. The update process of the dense feature is formulated as:

像素-代理交互旨在在预测未来占用情况时,整合场景级和代理级的理解。我们将密集特征

F

ds

t

F_{\text{ds}}^t

Fdst 当作查询,实例级特征作为键和值,以便随时间更新密集特征。具体来说,

F

ds

t

F_{\text{ds}}^t

Fdst 首先通过自注意力层来模拟远距离网格之间的相互作用,然后通过交叉注意力层来模拟代理特征

G

t

G^t

Gt 与每个网格特征之间的交互。为了确保像素-代理对应关系的对齐,我们使用注意力掩码来限制交叉注意力,使得每个像素只关注在时间步

t

t

t 占据它的代理,这一方法的灵感来源于 [17]。密集特征的更新过程可以表示为:

kimi

The attention mask Ot m is semantically similar to occupancy, and is generated by multiplying an additional agentlevel feature and the dense feature F t ds, where we name the agent-level feature here as mask feature Mt = MLP(Gt). After the interaction process in Eq. (7), Dds t is upsampled to 1/4 size of B. We further add Dt ds with block input F t−1 as a residual connection, and the resulting feature F t is passed to the next block.

注意力掩码

O

m

t

\mathbf{O^t_m}

Omt 在语义上与占用率相似,它是通过将一个额外的代理级特征与密集特征

F

d

s

t

\mathbf{F}^t_{ds}

Fdst 相乘来生成的,我们在这里将代理级特征命名为掩码特征

M

t

=

MLP

(

G

t

)

\mathbf{M}^t = \text{MLP}(\mathbf{G^t})

Mt=MLP(Gt)。在等式 (7) 中的交互过程之后,

D

d

s

t

\mathbf{D}^{t}_{ds}

Ddst 被上采样到

B

B

B 的 1/4 大小。我们进一步将

D

d

s

t

\mathbf{D}^t_{ds}

Ddst 与块输入

F

t

−

1

\mathbf{F}^{t-1}

Ft−1 相加作为残差连接,并将结果特征

F

t

\mathbf{F^t}

Ft 传递到下一个块。

Instance-level occupancy. It represents the occupancy with each agent’s identity preserved. It could be simply drawn via matrix multiplication, as in recent query-based segmentation works [18, 52]. Formally, in order to get an occupancy prediction of original size H × W of BEV feature B, the scene-level features F t are upsampled to F t dec ∈ RC×H×W by a convolutional decoder, where C is the channel dimension. For the agent-level feature, we further update the coarse mask feature Mt to the occupancy feature Ut ∈ RNa×C by another MLP. We empirically find that generating Ut from mask feature Mt instead of original agent feature Gt leads to superior performance. The final instance-level occupancy of timestep t is:

实例级占用。它保留了每个代理的身份来表示占用。它可以通过矩阵乘法简单地绘制,就像最近基于查询的分割工作[18, 52]中那样。正式地说,为了获得原始大小

H

×

W

H \times W

H×W 的 BEV 特征

B

B

B 的占用预测,场景级特征

F

t

\mathbf{F^t}

Ft 通过一个卷积解码器上采样到

F

t

d

e

c

∈

R

C

×

H

×

W

\mathbf{F^t}_{dec} \in \mathbb{R}^{C \times H \times W}

Ftdec∈RC×H×W,其中

C

C

C 是通道维度。对于代理级特征,我们进一步通过另一个多层感知器(MLP)将粗糙的掩码特征

M

t

\mathbf{M^t}

Mt 更新为占用特征

U

t

∈

R

N

a

×

C

\mathbf{U^t} \in \mathbb{R}^{N_a \times C}

Ut∈RNa×C。我们通过实验发现,从掩码特征

M

t

\mathbf{M^t}

Mt 而不是原始代理特征

G

t

\mathbf{G^t}

Gt 生成

U

t

\mathbf{U^t}

Ut 可以带来更优越的性能。时间步

t

t

t 的最终实例级占用是:

2.4. Planning

Planning without high-definition (HD) maps or predefined routes usually requires a high-level command to indicate the direction to go [11, 38]. Following this, we convert the raw navigation signals (i.e., turn left, turn right and keep forward) into three learnable embeddings, named command embeddings. As the ego-vehicle query from MotionFormer already expresses its multimodal intentions, we equip it with command embeddings to form a “plan query”. We attend plan query to BEV features B to make it aware of surroundings, and then decode it to future waypoints τˆ.

在没有高清晰度(HD)地图或预定义路线的规划中,通常需要一个高级命令来指示前进的方向[11, 38]。随后,我们将原始导航信号(即左转、右转和直行)转换为三个可学习的嵌入,称为命令嵌入。由于MotionFormer中的自车查询已经表达了其多模态意图,我们为其配备命令嵌入,形成一个“规划查询”。我们使规划查询关注到BEV(鸟瞰视图)特征B,以使其意识到周围环境,然后将其解码为未来的航点

τ

^

\hat{\tau}

τ^。

To further avoid collisions, we optimize τˆ based on Newton’s method in inference only by the following:

为了避免进一步的碰撞,我们仅在推理过程中使用牛顿方法来优化

τ

^

\hat{\tau}

τ^。

牛顿方法是一种寻找函数零点的数学技术,通常用于求解方程或优化问题。在自动驾驶或其他需要精确路径规划的应用中,这种方法可以用来微调航点,以确保路径的平滑性和安全性。然而,由于文本中断,具体的优化步骤或公式没有提供。

where τˆ is the original planning prediction, τ∗ denotes the optimized planning, which is selected from multipleshooting [3] trajectories τ as to minimize cost function f(·). Oˆ is a classical binary occupancy map merged from the instance-wise occupancy prediction from OccFormer. The cost function f(·) is calculated by:

在这里,

τ

^

\hat{\tau}

τ^ 是原始的规划预测,

τ

∗

\tau^*

τ∗ 表示优化后的规划,它是从多射击(multi-shoot)轨迹

τ

\tau

τ 中选择出来的,目的是最小化代价函数

f

(

⋅

)

f(\cdot)

f(⋅)。

O

^

\hat{O}

O^ 是从OccFormer的实例级占用预测中合并而成的经典二进制占用图。代价函数

f

(

⋅

)

f(\cdot)

f(⋅) 的计算方式是:

通常,在自动驾驶和机器人导航领域,代价函数会综合考虑多种因素,如路径的平滑性、安全性、到达目的地的效率等。代价函数的设计对于优化算法的性能至关重要,因为它直接影响到最终选择的路径。牛顿方法在这里可能被用来迭代地调整路径,以找到最小化代价函数的最优解。

Here λcoord, λobs, and σ are hyperparameters, and t indexes a timestep of future horizons. The l2 cost pulls the trajectory toward the original predicted one, while the collision term D pushes it away from occupied grids, considering surrounding positions confined to S = {(x, y)|∥(x, y)−τt∥2 < d, Oˆx,y t = 1}.

2.5. Learning

UniAD is trained in two stages. We first jointly train perception parts, i.e., the tracking and mapping modules, for a few epochs (6 in our experiments), and then train the model end-to-end for 20 epochs with all perception, prediction and planning modules. The two-stage training is found more stable empirically. We refer the audience to the Supplementary for details of each loss.

UniAD(Unified Autonomous Driving)的训练分为两个阶段。首先,我们联合训练感知部分,即跟踪和映射模块,进行几个周期的训练(在我们的实验中是6个周期),然后以端到端的方式训练模型20个周期,包括所有感知、预测和规划模块。通过实证研究发现,这种两阶段训练方法在实践中更为稳定。我们引导读者参考补充材料以获取每个损失函数的详细信息。

这种分阶段的训练策略通常用于深度学习模型,特别是当模型包含多个子模块时。通过先分别训练每个子模块,可以确保它们在集成到整个系统中之前已经得到了充分的优化****。一旦子模块被训练好,就可以通过端到端的训练进一步优化整个模型,使得所有模块协同工作,以达到更好的整体性能。两阶段训练有助于解决训练过程中可能出现的不稳定性和收敛问题。

Shared matching. Since UniAD involves instance-wise modeling, pairing predictions to the ground truth set is required in perception and prediction tasks. Similar to DETR [8, 56], the bipartite matching algorithm is adopted in the tracking and online mapping stage. As for tracking, candidates from detection queries are paired with newborn ground truth objects, and predictions from track queries inherit the assignment from previous frames. The matching results in the tracking module are reused in motion and occupancy nodes to consistently model agents from historical tracks to future motions in the end-to-end framework.

共享匹配。由于UniAD涉及实例级建模,在感知和预测任务中需要将预测与真实数据集进行配对。类似于DETR[8, 56],我们在跟踪和在线映射阶段采用了二分图匹配算法。对于跟踪来说,来自检测查询的候选对象与新生成的真实数据对象进行配对,而来自轨迹查询的预测则继承了前一帧的分配结果。在跟踪模块中的匹配结果被重新用于运动和占用节点,以便在端到端框架中一致地对从历史轨迹到未来运动的代理进行建模。

解释一下上述内容:

- 实例级建模:指的是模型能够识别并跟踪每个单独的实例(如车辆、行人等)。

- 二分图匹配:一种算法,用于在两个集合之间找到最优的配对方式,常用于计算机视觉中的对象跟踪问题。

- DETR:Detection Transformer,一种用于目标检测的模型,它使用Transformer架构进行端到端的对象检测。

- 跟踪:在连续帧中识别和关联同一对象的过程。

- 在线映射:实时更新地图信息,以反映环境的当前状态。

- 检测查询:模型生成的用于检测场景中对象的查询。

- 轨迹查询:模型生成的用于预测对象未来位置的查询。

- 继承分配:在连续帧中,轨迹查询的预测结果会继承前一帧的匹配结果,以保持一致性。

- 运动和占用节点:模型中的不同部分,分别负责预测对象的运动和占用空间的状态。

通过共享匹配,UniAD能够确保在感知和预测任务中,对象的识别和跟踪是连贯的,从而提高整个系统的准确性和鲁棒性。

3. Experiments

We conduct experiments on the challenging nuScenes dataset [6]. In this section, we validate the effectiveness of our design in three aspects: joint results revealing the advantage of task coordination and its effect on planning, modular results of each task compared with previous methods, and ablations on the design space for specific modules. Due to space limit, the full suite of protocols, some ablations and visualizations are provided in the Supplementary.

我们对具有挑战性的nuScenes数据集[6]进行了实验。在这一部分,我们从三个方面验证了我们设计的效率:联合结果揭示了任务协调的优势及其对规划的影响,每个任务的模块化结果与先前方法的比较,以及针对特定模块的设计空间的消融分析。由于空间限制,全套协议、一些消融分析和可视化结果提供在补充材料中。

3.1. Joint Results

We conduct extensive ablations as shown in Table 2 to prove the effectiveness and necessity of preceding tasks in the end-to-end pipeline. Each row of this table shows the model performance when incorporating task modules listed in the second Modules column. The first row (ID-0) serves as a vanilla multi-task baseline with separate task heads for comparison. The best result of each metric is marked in bold, and the runner-up result is underlined in each column.

我们在表2 中进行了广泛的消融分析,以证明端到端流程中先前任务的有效性和必要性。这张表的每一行显示了在结合第二列“模块”中列出的任务模块时模型的性能。第一行(ID-0)作为一个标准的多任务基线,具有独立的任务头,用于比较。每个度量指标的最佳结果用粗体标记,每个列的亚军结果则在下方划线。

表2. 对每个任务有效性的详细消融分析。我们可以得出结论,两个感知子任务极大地帮助了运动预测,并且预测性能也从统一两个预测模块中受益。有了所有先前表示,我们的目标规划显著提升,以确保安全性。UniAD在预测和规划任务上大幅度超越了简单的多任务学习(MTL)方案,并且它还具有在感知性能上没有显著下降的优势。为了简洁,只显示了主要指标。"avg.L2"和"avg.Col"是在整个规划范围内的平均值。*:ID-0是每个任务都有独立头的MTL方案。

Roadmap toward safe planning. As prediction is closer to planning compared to perception, we first investigate the two types of prediction tasks in our framework, i.e., motion forecasting and occupancy prediction. In Exp.10- 12, only when the two tasks are introduced simultaneously (Exp.12), both metrics of the planning L2 and collision rate achieve the best results, compared to naive endto-end planning without any intermediate tasks (Exp.10, Fig. 1(c.1)). Thus we conclude that both these two prediction tasks are required for a safe planning objective. Taking a step back, in Exp.7-9, we show the cooperative effect of two types of prediction. The performance of both tasks get improved when they are closely integrated (Exp.9, -3.5% minADE, -5.8% minFDE, -1.3 MR(%), +2.4 IoUf.(%), +2.4 VPQ-f.(%)), which demonstrates the necessity to include both agent and scene representations. Meanwhile, in order to realize a superior motion forecasting performance, we explore how perception modules could contribute in Exp.4-6. Notably, incorporating both tracking and mapping nodes brings remarkable improvement to forecasting results (-9.7% minADE, -12.9% minFDE, -2.3 MR(%)). We also present Exp.1-3, which indicate training perception sub-tasks together leads to comparable results to a single task. Additionally, compared with naive multi-task learning (Exp.0, Fig. 1(b)), Exp.12 outperforms it by a significant margin in all essential metrics (-15.2% minADE, - 17.0% minFDE, -3.2 MR(%)), +4.9 IoU-f.(%)., +5.9 VPQf.(%), -0.15m avg.L2, -0.51 avg.Col.(%)), showing the superiority of our planning-oriented design.

安全规划的路线图。由于预测与规划相比,比感知更接近,我们首先研究了我们框架中的两种预测任务,即运动预测和占用预测。在实验10至12中,只有当两项任务同时引入(实验12)时,规划L2和碰撞率的两个指标才达到最佳结果,与没有任何中间任务的简单端到端规划相比(实验10,图1(c.1))。因此,我们得出结论,为了实现安全规划目标,这两个预测任务都是必需的。退一步讲,在实验7至9中,我们展示了两种预测类型的协同效应。当它们紧密集成时(实验9,最小ADE下降3.5%,最小FDE下降5.8%,MR(%)下降1.3,IoUf.(%)提高2.4,VPQ-f.(%)提高2.4),两项任务的性能都得到了提升,这证明了包括代理和场景表示的必要性。同时,为了实现优越的运动预测性能,我们在实验4至6中探索了感知模块如何做出贡献。值得注意的是,同时整合跟踪和映射节点为预测结果带来了显著的改进(最小ADE下降9.7%,最小FDE下降12.9%,MR(%)下降2.3)。我们还展示了实验1至3,它们表明同时训练感知子任务可以与单一任务取得相当的结果。此外,与简单的多任务学习相比(实验0,图1(b)),实验12在所有关键指标上都有显著的提高(最小ADE下降15.2%,最小FDE下降17.0%,MR(%)下降3.2,IoU-f.(%)提高4.9,VPQf.(%)提高5.9,avg.L2下降0.15m,avg.Col.(%)下降0.51),显示了我们面向规划的设计的优越性。

3.2. Modular Results

Following the sequential order of perception-predictionplanning, we report the performance of each task module in comparison to prior state-of-the-arts on the nuScenes validation set. Note that UniAD jointly performs all these tasks with a single trained network. The main metric for each task is marked with gray background in tables.

按照感知-预测-规划的顺序,我们报告了每个任务模块在nuScenes验证集上与之前最先进技术的比较性能。请注意,UniAD使用单个训练网络联合执行所有这些任务。每个任务的主要指标在表格中用灰色背景标记。

Perception results. As for multi-object tracking in Table 3, UniAD yields a significant improvement of +6.5 and +14.2 AMOTA(%) compared to MUTR3D [104] and ViP3D [30] respectively. Moreover, UniAD achieves the lowest ID switch score, showing its temporal consistency for each tracklet. For online mapping in Table 4, UniAD performs well on segmenting lanes (+7.4 IoU(%) compared to BEVFormer), which is crucial for downstream agentroad interaction in the motion module. As our tracking module follows an end-to-end paradigm, it is still inferior to tracking-by-detection methods with complex associations such as Immortal Tracker [93], and our mapping results trail previous perception-oriented methods on specific classes. We argue that UniAD is to benefit final planning with perceived information rather than optimizing perception with full model capacity.

感知结果。关于表3 中的多目标跟踪,UniAD与MUTR3D [104]和ViP3D [30]相比,分别显著提高了+6.5%和+14.2%的AMOTA(平均重叠度)。此外,UniAD实现了最低的ID切换得分,显示了其对每个轨迹片段的时间一致性。对于表4 中的在线映射,UniAD在车道分割上表现良好(与BEVFormer相比提高了+7.4%的IoU),这对于运动模块中的下游代理-道路交互至关重要。由于我们的跟踪模块遵循端到端的范式,它仍然不如具有复杂关联的基于检测的跟踪方法,如Immortal Tracker [93],并且我们的映射结果在特定类别上落后于之前的以感知为导向的方法。我们认为,UniAD的目的是通过感知信息来优化最终的规划,而不是用整个模型的容量来优化感知。

表3. 多目标跟踪。UniAD在所有指标上都超越了之前的端到端MOT技术(仅限图像输入)。†:使用后关联的基于检测的跟踪方法,为了公平比较,重新实现了BEVFormer。

表4. 在线映射。UniAD在全面的道路交通语义方面,与最先进的以感知为导向的方法相比,取得了有竞争力的性能。我们报告了分割IoU(%)。†:使用BEVFormer重新实现。

Prediction results. Motion forecasting results are shown in Table 5, where UniAD remarkably outperforms previous vision-based end-to-end methods. It reduces prediction errors by 38.3% and 65.4% on minADE compared to PnPNet-vision [57] and ViP3D [30] respectively. In terms of occupancy prediction reported in Table 6, UniAD gets notable advances in nearby areas, yielding +4.0 and +2.0 on IoU-near(%) compared to FIERY [35] and BEVerse [105] with heavy augmentations, respectively.

预测结果。运动预测结果在表5中展示,UniAD显著超越了之前的基于视觉的端到端方法。与PnPNet-vision [57]和ViP3D [30]相比,它在最小平均位移误差(minADE)上分别减少了38.3%和65.4%的预测误差。在表6中报告的占用预测方面,UniAD在近区域取得了显著进展,与FIERY [35]和BEVerse [105](分别进行了大量增强处理)相比,在近区域IoU(IoU-near(%))上分别提高了+4.0和+2.0。

表5. 运动预测。UniAD在性能上显著超越了以往的基于视觉的端到端方法。我们还报告了两种将车辆建模为恒定位置或速度的设置作为比较。†:使用BEVFormer重新实现。

表6. 占用预测。UniAD在附近区域取得了显著改进,这对于规划更为关键。“n.” 和 “f.” 分别表示近(30×30米)和远(50×50米)的评估范围。†:使用大量增强训练。

Planning results. Benefiting from rich spatial-temporal information in both the ego-vehicle query and occupancy, UniAD reduces planning L2 error and collision rate by 51.2% and 56.3% compared to ST-P3 [38], in terms of the average value for the planning horizon. Moreover, it notably outperforms several LiDAR-based counterparts, which is often deemed challenging for perception tasks.

规划结果。得益于在自车查询和占用信息中丰富的时空信息,UniAD在规划L2误差和碰撞率方面,与ST-P3 [38]相比分别降低了51.2%和56.3%,这是基于规划范围的平均值。此外,它显著超越了几个基于激光雷达的对手,这通常被认为对感知任务来说是一个挑战。

表7. 规划。UniAD在所有时间间隔中实现了最低的L2误差和碰撞率,甚至在大多数情况下超越了基于激光雷达的方法(†),验证了我们系统的安全性。

3.3. Qualitative Results

Fig. 3 visualizes the results of all tasks for one complex scene. The ego vehicle drives with notice to the potential movement of a front vehicle and lane. In the Supplementary, we show more visualizations of challenging scenarios and one promising case for the planning-oriented design, that inaccurate results occur in prior modules while the later tasks could still recover, e.g., the planned trajectory remains reasonable though objects have a large heading angle deviation or fail to be detected in tracking results. Besides, we analyze that failure cases of UniAD are mainly under some long-tail scenarios such as large trucks and trailers, shown in the Supplementary as well.

图3 展示了一个复杂场景下所有任务的结果。自车在注意到前车和车道的潜在移动时行驶。在补充材料中,我们展示了更多具有挑战性场景的可视化,以及面向规划的设计的一个有希望的案例,即尽管在前面的模块中出现了不准确的结果,但后续任务仍然可以恢复,例如,即使物体在跟踪结果中存在大的航向角偏差或未能被检测到,规划的轨迹仍然保持合理。此外,我们分析了UniAD的失败案例主要出现在一些长尾场景下,例如大型卡车和拖车,这些也在补充材料中展示。

图3. 可视化结果。我们在环视图和鸟瞰图(BEV)中展示了所有任务的结果。运动和占用模块的预测是一致的,在这种情况下,自车正在让路给前面的黑色汽车。每个代理都用一种独特的颜色表示。在图像视图和BEV上,分别只选择了运动预测的前1名和前3名轨迹用于可视化。

3.4. Ablation Study

Effect of designs in MotionFormer. Table 8 shows that all of our proposed components described in Sec. 2.2 contribute to final performance regarding minADE, minFDE, Miss Rate and minFDE-mAP metrics. Notably, the rotated scene-level anchor shows a significant performance boost (- 15.8% minADE, -11.2% minFDE, +1.9 minFDE-mAP(%)),indicating that it is essential to do motion forecasting in the scene-centric manner. The agent-goal point interaction enhances the motion query with the planning-oriented visual feature, and surrounding agents can further benefit from considering the ego vehicle’s intention. Moreover, the non-linear optimization strategy improves the performance (-5.0% minADE, -8.4% minFDE, -1.0 MR(%), +0.7 minFDE-mAP(%)) by taking perceptual uncertainty into account in the end-to-end scenario.

MotionFormer中设计的影响。表8 显示,我们在第2.2节中描述的所有提出的组件都对最终性能有贡献,这些性能指标包括最小平均位移误差(minADE)、最小最终位移误差(minFDE)、遗漏率(Miss Rate)和最小FDE-mAP指标。值得注意的是,旋转的场景级锚点显示出显著的性能提升(最小平均位移误差下降15.8%,最小最终位移误差下降11.2%,最小FDE-mAP提高1.9%),这表明以场景为中心的方式进行运动预测是至关重要的。代理-目标点交互通过规划导向的视觉特征增强了运动查询,周围的代理也可以通过考虑自车意图进一步受益。此外,非线性优化策略通过考虑端到端场景中的感知不确定性,提高了性能(最小平均位移误差下降5.0%,最小最终位移误差下降8.4%,遗漏率下降1.0%,最小FDE-mAP提高0.7%)。

表8. 运动预测模块中设计的消融分析。所有组件都对最终性能有所贡献。"Scenel. Anch."表示旋转的场景级锚点。"Goal Inter."指的是代理-目标点交互。"Ego Q"代表自车查询,"NLO."是非线性优化策略。*:一个同时考虑检测和预测准确性的指标,我们已在补充材料中提供了详细信息。

Effect of designs in OccFormer. As illustrated in Table 9, attending each pixel to all agents without locality constraints (Exp.2) results in slightly worse performance compared to an attention-free baseline (Exp.1). The occupancy guided attention mask resolves the problem and brings in gain, especially for nearby areas (Exp.3, +1.0 IoU-n.(%), +1.4 VPQ-n.(%)). Additionally, reusing the mask feature Mt instead of the agent feature to acquire the occupancy feature further enhances performance.

OccFormer中设计的影响。正如表9 所示,如果不加局部性约束地让每个像素都关注所有代理(实验2),与没有使用注意力机制的基线(实验1)相比,性能会略微下降。占用引导的注意力掩码解决了这个问题并带来了提升,尤其是在近区域(实验3,IoU-n.(%) 提高1.0,VPQ-n.(%) 提高1.4)。此外,重新使用掩码特征Mt而不是代理特征来获取占用特征,进一步增强了性能。

Effect of designs in Planner. We provide ablations on the proposed designs in planner in Table 10, i.e., attending BEV features, training with the collision loss and the optimization strategy with occupancy. Similar to previous research [37, 38], a lower collision rate is preferred for safety over naive trajectory mimicking (L2 metric), and is reduced with all parts applied in UniAD.

规划器中设计的影响。我们在表10 中提供了对规划器中提出设计的消融分析,即,关注鸟瞰图(BEV)特征、使用碰撞损失进行训练以及考虑占用的优化策略。与之前的研究[37, 38]类似,为了安全,更倾向于降低碰撞率而不是简单地模仿轨迹(L2指标),并且在UniAD中应用所有部分后,碰撞率有所降低。

表10. 规划模块设计的消融分析。结果证明了每个先前任务的必要性。“BEV Att.” 表示关注BEV(鸟瞰图)特征。“Col. Loss” 表示碰撞损失。“Occ. Optim.” 是使用占用优化策略。

4. Conclusion and Future Work

We discuss the system-level design for the autonomous driving algorithm framework. A planning-oriented pipeline is proposed toward the ultimate pursuit for planning, namely UniAD. We provide detailed analyses on the necessity of each module within perception and prediction. To unify tasks, a query-based design is proposed to connect all nodes in UniAD, benefiting from richer representations for agent interaction in the environment. Extensive experiments verify the proposed method in all aspects.

我们讨论了自动驾驶算法框架的系统级设计。为了最终追求规划,我们提出了一个面向规划的流程,即UniAD。我们详细分析了感知和预测中每个模块的必要性。为了统一任务,我们提出了一种基于查询的设计,以连接UniAD中的所有节点,从而利用环境中代理交互的更丰富表示。广泛的实验验证了所提出方法的各个方面。

Limitations and future work. Coordinating such a comprehensive system with multiple tasks is non-trivial and needs extensive computational power, especially trained with temporal history. How to devise and curate the system for a lightweight deployment deserves future exploration. Moreover, whether or not to incorporate more tasks such as depth estimation, behavior prediction, and how to embed them into the system, are worthy future directions as well.

局限性与未来工作。协调这样一个包含多个任务的全面系统并非易事,并且需要大量的计算能力,特别是当使用时间历史数据进行训练时。如何设计并策划该系统以实现轻量级部署,值得未来的探索。此外,是否纳入更多任务,例如深度估计、行为预测,以及如何将它们嵌入到系统中,也是值得未来研究的方向。

Acknowledgements. This work is partially supported by National Key R&D Program of China (2022ZD0160100), and in part by Shanghai Committee of Science and Technology (21DZ1100100), and NSFC (62206172).

A. Task Definition

Detection and tracking. Detection and tracking are two crucial perception tasks for autonomous driving, and we focus on representing them in the 3D space to facilitate downstream usage. 3D Detection is responsible for locating surrounding objects (coordinates, length, width, height, etc.) at each time stamp; tracking aims at finding the correspondences between different objects across time stamps and associating them temporally (i.e., assigning a consistent track ID for each agent). In the paper, we use multi-object tracking in some cases to denote the detection and tracking process. The final output is a series of associated 3D boxes in each frame, and their corresponding features QA are forwarded to the motion module. Additionally, note that we have one special query named ego-vehicle query for downstream tasks, which would not be included in the predictionground truth matching process and it regresses the location of ego-vehicle accordingly.

检测与跟踪。检测和跟踪是自动驾驶中两个关键的感知任务,我们专注于在3D空间中表示它们,以便于后续使用。3D检测负责在每个时间戳定位周围物体(坐标、长度、宽度、高度等);跟踪旨在发现不同时间戳之间不同物体的对应关系并将它们在时间上关联起来(即,为每个代理分配一致的轨迹ID)。在论文中,我们在某些情况下使用多目标跟踪来表示检测和跟踪过程。最终输出是每个帧中一系列关联的3D框,以及它们对应的特征QA,这些特征被传递到运动模块。此外,请注意,我们有一个特殊的查询称为自车查询,用于下游任务,它将不会被包括在预测真实匹配过程中,而是相应地回归自车的位置。

Online mapping. Map intuitively embodies the geometric and semantic information of the environment, and online mapping is to segment meaningful road elements with onboard sensor data (multi-view images in our case) as a substitute for offline annotated high-definition (HD) maps. In UniAD, we model the online map into four categories: lanes, drivable area, dividers and pedestrian crossings, and we segment them in bird’s-eye-view (BEV). Similar to QA, the map queries QM would be further utilized in the motion forecasting module to model the agent-map interaction.

在线映射。地图直观地体现了环境的几何和语义信息,在线映射是使用车载传感器数据(在我们的情况下是多视图图像)来分割有意义的道路元素,作为离线标注的高清晰度(HD)地图的替代。在UniAD中,我们将在线地图建模为四类:车道、可行驶区域、分隔线和人行横道,我们以鸟瞰图(BEV)的方式进行分割。与QA类似,地图查询QM将被进一步用于运动预测模块,以模拟代理与地图的交互。

Motion forecasting. Bridging perception and planning, prediction plays an important role in the whole autonomous driving system to ensure final safety. Typically, motion forecasting is an independently developed module that predicts agents’ future trajectories with detected bounding boxes and HD maps. And the bounding boxes are ground truth annotations in most current motion datasets [27], which is not realistic in onboard scenarios. While in this paper, the motion forecasting module takes previously encoded sparse queries (i.e., QA and QM ) and dense BEV features B as inputs, and forecasts K plausible trajectories in future T timesteps for each agent. Besides, to be compatible with our end-to-end and scene-centric scenarios, we predict trajectories as offset according to each agent’s current position. The agent features before the last decoding MLPs, which have encoded both the historical and future information will be sent to the occupancy module for scene-level future understanding. For the ego-vehicle query, it predicts future ego-motion as well (actually providing a coarse planning estimation), and the feature is employed by the planner to generate the ultimate goal.

运动预测。作为感知和规划之间的桥梁,预测在确保最终安全方面在整个自动驾驶系统中发挥着重要作用。通常,运动预测是一个独立开发的模块,它使用检测到的边界框和高清地图来预测代理的未来轨迹。而且,在大多数当前的运动数据集中,边界框是地面真实注释[27],这在车载场景中并不现实。而在本文中,运动预测模块采用之前编码的稀疏查询(即QA和QM)和密集的鸟瞰图(BEV)特征B作为输入,并为每个代理预测未来T时间步的K条合理轨迹。此外,为了与我们的端到端和以场景为中心的场景兼容,我们根据每个代理的当前位置预测轨迹作为偏移。在最后的解码MLPs之前,代理特征已经编码了历史和未来信息,这些特征将被发送到占用模块,用于场景级别的未来理解。对于自车查询,它也预测未来的自车运动(实际上提供了一个粗略的规划估计),并且该特征被规划器用来生成最终目标。

Occupancy prediction. Occupancy grid map is a discretized BEV representation where each cell holds a belief indicating whether it is occupied, and the occupancy prediction task is designed to discover how the grid map changes in the future for To timesteps with multiple agent dynamics. Complementary to motion forecasting which is conditioned on sparse agents, occupancy prediction is densely represented in the whole-scene level. To investigate how the scene evolves with sparse agent knowledge, our proposed occupancy module takes as inputs both the observed BEV feature B and agent features Gt. After the multi-step agentscene interaction (detailedly described in Appendix E), the instance-level probability map OˆAt ∈ RNa×H×W is generated via matrix multiplication between occupancy feature and dense scene feature. To form whole-scene occupancy with agent identity preserved Oˆt ∈RH×W which is used for occupancy evaluation and downstream planning, we simply merge the instance-level probability at each timestep using pixel-wise argmax as in [8].

占用预测。占用网格地图是一种离散化的鸟瞰图(BEV)表示,其中每个单元格包含一个可信值,指示它是否被占用,占用预测任务旨在发现在未来T时间步中,随着多个代理动态,网格地图如何变化。与以稀疏代理为条件的运动预测相补充,占用预测在全场景级别上密集表示。为了探究在稀疏代理知识下场景如何演变,我们提出的占用模块接收观察到的BEV特征B和代理特征

G

t

G^t

Gt 作为输入。在多步代理-场景交互之后(详细描述见附录E),通过占用特征和密集场景特征的矩阵乘法生成实例级概率图

O

^

A

t

∈

R

N

a

×

H

×

W

\hat{O}_A^t \in \mathbb{R}^{N_a \times H \times W}

O^At∈RNa×H×W。为了形成保留代理身份的全场景占用

O

^

t

∈

R

H

×

W

\hat{O}^t \in \mathbb{R}^{H \times W}

O^t∈RH×W,它用于占用评估和下游规划,我们简单地使用像素级argmax合并每个时间步的实例级概率,如文献[8]中所述。

Planning. As an ultimate goal, the planning module takes all upstream results into consideration. Traditional planning methods in the industry often are rule-based, formulated by “if-else” state machines conditioned on various scenarios which are described with prior detection and prediction results. In our learning-based model, we take the upstream ego-vehicle query, and the dense BEV feature B as input, and predict one trajectory τˆ for total Tp timesteps. Then, the trajectory τˆ is optimized with the upstream predicted future occupancy Oˆ to avoid collision and ensure final safety.

规划。作为最终目标,规划模块考虑了所有上游结果。工业界中的传统规划方法通常是基于规则的,由“if-else”状态机根据各种场景制定,这些场景是用预先的检测和预测结果描述的。在我们的基于学习的模型中,我们采用上游的自车查询和密集的BEV特征B作为输入,并预测一个总时间步

T

p

T_p

Tp 的轨迹

τ

^

\hat{\tau}

τ^ 。然后,轨迹

τ

^

\hat{\tau}

τ^ 会根据上游预测的未来占用

O

^

\hat{O}

O^ 进行优化,以避免碰撞并确保最终安全。

B. The Necessity of Each Task

In terms of perception, tracking in the loop as does in PnPNet [57] and ViP3D [30] is proven to complement spatial-temporal features and provide history tracks for occluded agents, refraining from catastrophic decisions for downstream planning. With the aid of HD maps [30,57,82, 101] and motion forecasting, planning becomes more accurate toward higher-level intelligence. However, such information is expensive to construct and prone to be outdated, raising the demand for online mapping without HD maps. As for prediction, motion forecasting [10, 29, 41, 42, 107] generates long-term future behaviors and preserves agent identity in form of sparse waypoint outputs. Nonetheless, there exists the challenge to integrate non-differentiable box representation into subsequent planning module [30, 57]. Some recent literature investigates another type of prediction task named occupancy [88] prediction to assist endto-end planning, in form of cost maps. However, the lack of agent identity and dynamics in occupancy makes it impractical to model social interactions for safe planning. The large computational consumption of modeling multi-step dense features also leads to a much shorter temporal horizon compared to motion forecasting. Therefore, to benefit from the two complementary types of prediction tasks for safe planning, we incorporate both agent-centric motion and whole-scene occupancy in UniAD.

在感知方面,像PnPNet [57]和ViP3D [30]中那样在循环中进行跟踪已被证明可以补充空间-时间特征,并为被遮挡的代理提供历史轨迹,避免下游规划中做出灾难性的决策。借助高清地图[30,57,82,101]和运动预测,规划变得更加准确,朝着更高层次的智能发展。然而,这样的信息构建成本高昂,且容易过时,这增加了无需高清地图的在线映射的需求。至于预测,运动预测[10,29,41,42,107]生成长期的未来行为,并以稀疏航点输出的形式保留代理身份。尽管如此,将不可微分的框表示集成到后续规划模块中[30,57]存在挑战。一些最近的文献研究了另一种名为占用[88]预测的预测任务,以成本图的形式协助端到端规划。然而,占用中缺乏代理身份和动态使其在安全规划中模拟社会互动变得不切实际。建模多步密集特征的大量计算消耗也导致了比运动预测短得多的时间范围。因此,为了从两种互补的预测任务中受益,以实现安全规划,我们在UniAD中结合了以代理为中心的运动和全场景占用。

C. Related Work

C.1. Joint perception and prediction

Joint learning of perception and prediction is proposed to avoid the cascading error in traditional modularindependence pipelines. Similar to the motion forecasting task alone, it usually has two types of output representations: agent-level bounding boxes and scene-level occupancy grid maps. Pioneering work FaF [66] predicts boxes in the future and aggregates past information to produce tracklets. IntentNet [10] extends it to reason about intentions and [25, 28] further predict future states in a refinement fashion. Some exploit detection first and utilize agent features in the second prediction stage [9, 53, 75]. Noticing that history information is ignored, PnPNet [57] enriches it by estimating tracking association scores to avert the non-differentiable optimization process as adopted by the tracking-by-detection paradigm [54, 64, 85, 98]. Yet, all these methods rely on non-maximum suppression (NMS) in detection which still leads to information loss. ViP3D [30] which is closely related to our work, employs agent queries in [104] to forecast, taking HD map as another input. We follow the philosophy of [30, 104] in agent track queries, but also develop non-linear optimization on target trajectories to alleviate the potential inaccurate perception problem. Moreover, we introduce an ego-vehicle query for better capturing the ego behaviors in the dynamic environment, and incorporate online mapping to prevent the localization risk or high construction cost with HD map.

提出了联合学习感知和预测的方法,以避免传统模块独立流水线中的级联错误。与单独的运动预测任务类似,它通常有两种输出表示:代理级别的边界框和场景级别的占用网格地图。开创性的工作FaF [66]预测未来的框,并将过去的信息聚合以产生轨迹片段。IntentNet [10]将其扩展为推理意图,[25, 28]进一步以细化的方式预测未来状态。一些方法首先利用检测,然后在第二预测阶段使用代理特征[9, 53, 75]。注意到历史信息被忽略,PnPNet [57]通过估计跟踪关联分数来丰富它,以避免采用基于检测的跟踪范式[54, 64, 85, 98]中的不可微分优化过程。然而,所有这些方法都依赖于检测中的非极大值抑制(NMS),这仍然会导致信息丢失。与我们的工作密切相关的ViP3D [30],在[104]中使用代理查询进行预测,将高清地图作为另一个输入。我们遵循[30, 104]中代理轨迹查询的理念,但也开发了目标轨迹上的非线性优化,以减轻潜在的不准确感知问题。此外,我们引入了自车查询,以更好地捕捉动态环境中的自车行为,并结合在线映射,以防止高清地图的定位风险或高昂的构建成本。

The alternative representation, namely the occupancy grid map, discretizes the BEV map into grid cells which holds a belief indicating if it is occupied. Wu et al. [96] estimate a dense motion field, while it could not capture multimodal behaviors. Fishing Net [33] also predicts deterministic future BEV semantic segmentation with multiple sensors. To address this, P3 [82] proposes non-parametric distribution of future semantic occupancy and FIERY [35] devises the first paradigm for multi-view cameras. A few methods improve the performance of FIERY with more sophisticated uncertainty modeling [1, 38, 105]. Notably, this representation could easily extend to motion planning for collision avoidance [11,38,82], while it loses the agent identity characteristic and takes a heavy burden to computation which may constrain the prediction horizon. In contrast, we leverage agent-level information for occupancy prediction and ensure accurate and safe planning by unifying these two modes.

另一种表示方法,即占用网格地图,将鸟瞰图(BEV)地图离散化为网格单元,这些单元包含一个信念值,指示它是否被占用。Wu等人[96]估计了一个密集的运动场,但它不能捕捉多模态行为。Fishing Net[33]也使用多种传感器预测确定性的未来BEV语义分割。为了解决这个问题,P3[82]提出了未来语义占用的非参数分布,而FIERY[35]为多视图相机设计了第一个范式。一些方法通过更复杂的不确定性建模提高了FIERY的性能[1,38,105]。值得注意的是,这种表示可以很容易地扩展到运动规划以避免碰撞[11,38,82],同时它失去了代理身份特征,并且承担了沉重的计算负担,这可能会限制预测范围。相比之下,我们利用代理级别的信息进行占用预测,并通过统一这两种模式确保准确和安全的规划。

C.2. Joint prediction and planning

PRECOG [81] proposes a recurrent model that conditions forecasting on the goal position of the ego vehicle, while PiP [86] generates agents’ motion considering complete presumed planning trajectories. However, producing a rough future trajectory is still challenging in the real world, toward which [62] presents a deep structured model to derive both prediction and planning from the same set of learnable costs. [39,40] couple the prediction model with classic optimization methods. Meanwhile, some motion forecasting methods implicitly include the planning task by producing their future trajectories simultaneously [12,45,70]. Similarly, we encode possible behaviors of the ego vehicle in the scene-centric motion forecasting module, but the interpretable occupancy map is utilized to further optimize the plan to stay safe.

PRECOG [81] 提出了一个循环模型,该模型以自车的目标位置为条件进行预测,而PiP [86] 生成代理的运动时考虑了完整的假设规划轨迹。然而,在现实世界中,产生一个粗略的未来轨迹仍然是一个挑战,为此 [62] 提出了一个深度结构模型,从同一组可学习的成本中派生出预测和规划。[39,40] 将预测模型与经典优化方法结合起来。与此同时,一些运动预测方法通过同时产生它们的未来轨迹隐含地包含了规划任务 [12,45,70]。同样,我们在以场景为中心的运动预测模块中编码了自车的可能行为,但是为了保持安全,我们利用可解释的占用地图进一步优化计划。

C.3. End-to-end motion planning

End-to-end motion planning has been an active research domain since Pomerleau [77] uses a single neural network that directly predicts control signals. Subsequent studies make great advances especially in closed-loop simulation with deeper networks [4], multi-modal inputs [2, 21, 78], multi-task learning [20, 97], reinforcement learning [13, 14, 46, 59, 89] and distillation from certain privilege knowledge [16, 103, 106]. However, for such methods of directly generating control outputs from sensor data, the transfer from the synthetic environment to realistic application remains a problem considering their robustness and safety assurance [22, 38]. Thus researchers aim at explicitly designing the intermediate representations of the network to prompt safety, where predicting how the scene evolves attracts broad interest. Some works [19,34,83] jointly decode planning and BEV semantic predictions to enhance interpretability, while PLOP [5] adopts a polynomial formulation to provide smooth planning results for both ego vehicle and neighbors. Cui et al. [24] introduce a contingency planner with diverse sets of future predictions and LAV [15] trains the planner with all vehicles’ trajectories to provide richer training data. NMP [101] and its variant [94] estimate a cost volume to select the plan with minimal cost besides deterministic future perception. Though they risk producing inconsistent results between two modules, the cost map design is intuitive to recover the final plan in complex scenarios. Inspired by [101], most recent works [11,37,38,82,102] propose models that construct costs with both learned occupancy prediction and hand-crafted penalties. However, their performances heavily rely on the tailored cost based on human experience and the distribution from where trajectories are sampled [47]. Contrary to these approaches, we leverage the ego-motion information without sophisticated cost design and present the first attempt that incorporates the tracking module along with two genres of prediction representations simultaneously in an end-to-end model.

端到端的运动规划自从Pomerleau [77]使用一个直接预测控制信号的单一神经网络以来,一直是一个活跃的研究领域。随后的研究在闭环仿真中取得了巨大进展,尤其是通过更深的网络[4]、多模态输入[2, 21, 78]、多任务学习[20, 97]、强化学习[13, 14, 46, 59, 89]以及从特定特权知识中提取[16, 103, 106]。然而,这些直接从传感器数据生成控制输出的方法,在从合成环境转移到现实应用时,它们的鲁棒性和安全保证仍然是一个问题[22, 38]。因此,研究人员旨在明确设计网络的中间表示以促进安全,其中预测场景如何演变引起了广泛的兴趣。一些工作[19,34,83]联合解码规划和BEV语义预测以增强可解释性,而PLOP [5]采用多项式公式为自车和邻近车辆提供平滑的规划结果。Cui等人[24]引入了一个具有多样化未来预测集的应急规划器,而LAV [15]用所有车辆的轨迹训练规划器以提供更丰富的训练数据。NMP [101]及其变体[94]估计一个成本体积,以选择除了确定性未来感知之外成本最小的计划。尽管它们有产生两个模块之间不一致结果的风险,但成本图设计直观地恢复复杂场景中的最终计划。受[101]的启发,最近的大多数工作[11,37,38,82,102]提出了构建成本的模型,这些成本既包括学习到的占用预测,也包括手工制作的惩罚。然而,它们的表现严重依赖于基于人类经验和轨迹采样分布的定制成本[47]。与这些方法相反,我们利用自运动信息,无需复杂的成本设计,并首次尝试在端到端模型中同时结合跟踪模块和两种类型的预测表示。

D. Notations

We provide a lookup table of notations and their shapes mentioned in this paper in Table 11 for reference.

我们在本文的表11中提供了一个符号及其形状的查找表供参考。

E. Implementation Details

E.1. Detection and Tracking

We inherit most of the detection designs from BEVFormer [55] which takes a BEV encoder to transform image features into BEV feature B and adopts a Deformable DETR head [109] to perform detection on B. To further conduct end-to-end tracking without heavy post association, we introduce another group of queries named track queries as in MOTR [100] which continuously tracks previously observed instances according to its assigned track ID. We introduce the tracking process in detail below.

我们从BEVFormer [55]继承了大部分检测设计,它采用BEV编码器将图像特征转换为BEV特征B,并采用可变形DETR头部[109]在B上执行检测。为了在没有繁重的后处理关联的情况下进一步进行端到端跟踪,我们引入了另一组名为跟踪查询的查询,如MOTR [100]中那样,根据其分配的跟踪ID连续跟踪先前观察到的实例。我们将在下面详细介绍跟踪过程。

Training stage: At the beginning (i.e., first frame) of each training sequence, all queries are considered detection queries and predict all newborn objects, which is actually the same as BEVFormer. Detection queries are matched to the ground truth by the Hungarian algorithm [8]. They will be stored and updated via the query interaction module (QIM) for the next timestamp serving as track queries following MOTR [100]. In the next timestamp, track queries will be directly matched with a part of ground-truth objects according to the corresponding track ID, and detection queries will be matched with the remaining ground-truth objects (newborn objects). To stabilize training, we adopt the 3D IoU metric to filter the matched queries. Only those predictions having the 3D IoU with ground-truth boxes larger than a certain threshold (0.5 in practice) will be stored and updated.

训练阶段:在每个训练序列的开始(即第一帧),所有查询都被视为检测查询,并预测所有新出现的对象,这实际上与BEVFormer相同。通过匈牙利算法[8]将检测查询与真实标注匹配。它们将被存储并通过查询交互模块(QIM)更新,以便在下一个时间戳作为跟踪查询使用,遵循MOTR[100]的方式。在下一个时间戳,跟踪查询将根据相应的跟踪ID直接与部分真实标注对象匹配,而检测查询将与剩余的真实标注对象(新出现的对象)匹配。为了稳定训练,我们采用3D IoU度量来过滤匹配的查询。只有那些与真实标注框的3D IoU大于某个阈值(实践中为0.5)的预测才会被存储和更新。

Inference stage: Different from the training stage, each frame of a sequence is sent to the network sequentially, meaning that track queries could exist for a longer horizon than the training time. Another difference emerging in the inference stage is about query updating, that we use classification scores to filter the queries (0.4 for detection queries and 0.35 for track queries in practice) instead of the 3D IoU metric since the ground truth is not available. Besides, to avoid the interruption of tracklets caused by short-time occlusion, we use a lifecycle mechanism for the tracklets in the inference stage. Specifically, for each track query, it will be considered to disappear completely and be removed only when its corresponding classification score is smaller than 0.35 for a continuous period (2s in practice).

推理阶段:与训练阶段不同,序列中的每一帧都依次发送到网络,这意味着跟踪查询可能存在的时间范围比训练时间长。推理阶段出现的另一个区别是关于查询更新,我们使用分类分数来过滤查询(实践中检测查询为0.4,跟踪查询为0.35),而不是使用3D IoU度量,因为真实标注不可用。此外,为了避免由于短时间遮挡导致的轨迹中断,我们在推理阶段使用生命周期机制来处理轨迹。具体来说,对于每个跟踪查询,只有当其对应的分类分数在连续一段时间内(实践中为2秒)小于0.35时,才会被视为完全消失并被移除。

E.2. Online Mapping

Following [56], we decompose the map query set into thing queries and stuff queries. The thing queries model instance-wise map elements (i.e., lanes, boundaries, and pedestrian crossings) and are matched with ground truth via bipartite matching, while the stuff query is only in charge of semantic elements (i.e., drivable area) and is processed with a class-fixed assignment. We set the total number of thing queries to 300 and only 1 stuff query for the drivable area. Also, we stack 6 location decoder layers and 4 mask decoder layers (we follow the structure of those layers as in [56]). We empirically choose thing queries after the location decoder as our map queries QM for downstream tasks.

遵循[56],我们将地图查询集分解为事物查询和物质查询。事物查询模拟实例化的地图元素(即,车道、边界和人行横道),并通过二分图匹配与地面真实情况进行匹配,而物质查询只负责语义元素(即,可行驶区域),并用固定类别的分配进行处理。我们将事物查询的总数设置为300,并且只有1个物质查询用于可行驶区域。此外,我们堆叠了6个位置解码器层和4个掩码解码器层(我们遵循这些层的结构,如[56]中所述)。我们根据经验选择位置解码器之后的事物查询作为我们的地图查询QM,用于下游任务。

E.3. Motion Forecasting

To better illustrate the details, we provide a diagram as shown in Fig. 4. Our MotionFormer takes ITa, ITs , xˆ0, xˆl−1 T ∈ RK×2 to embed query position, and takes Ql ctx −1 as query context. Specifically, the anchors are clustered among training data of all agents by the k-means algorithm, and we set K = 6 which is compatible with our output modalities. To embed the scene-level prior, the anchor ITa is rotated and translated into the global coordinate frame according to each agent’s current location and heading angle, which is denoted as Is T , as shown in Eq. (12),

为了更好地说明细节,我们提供了图4 所示的图表。我们的MotionFormer采用

I

T

a

,

I

T

s

I_T^a, I_T^s

ITa,ITs,

x

^

0

,

x

^

T

l

−

1

∈

R

K

×

2

\hat{x}_0, \hat{x}^{l-1}_T ∈ R^{K×2}

x^0,x^Tl−1∈RK×2 来嵌入查询位置,并采用

Q

c

t

x

l

−

1

Q^{l-1}_{ctx}

Qctxl−1 作为查询上下文。具体来说,锚点通过所有代理的训练数据使用 k-means 算法进行聚类,我们将 K 设置为 6,这与我们的输出模态兼容。为了嵌入场景级先验,锚点

I

T

a

I_T^a

ITa 根据每个代理的当前位置和航向角旋转和平移到全局坐标系中,这在Eq. (12)中表示为

I

T

s

I^s_T

ITs。

图4. MotionFormer。它由N个堆叠的代理-代理、代理-地图和代理-目标交互变换器组成。代理-代理和代理-地图交互模块是使用标准变换器解码器层构建的。代理-目标交互模块是基于可变形交叉注意力模块[109]构建的。

I

T

s

I_T^s

ITs:场景级锚点的终点,

I

T

a

I_T^a

ITa:聚类代理级锚点的终点,

x

^

0

\hat{x}_0

x^0:代理的当前位置,

x

^

T

l

−

1

\hat{x}_T^{l-1}

x^Tl−1:从上一层预测的目标点,

Q

c

t

x

l

−

1

Q^{l-1}_{ctx}

Qctxl−1:来自上一层的查询上下文。

where i is the index of the agent, and it is omitted later for brevity. To facilitate the coarse-to-fine paradigm, we also adopt the goal point predicted from the previous layer xˆl T−1. In the meantime, the agent’s current position is broadcast across the modality, denoted as xˆ0. Then, MLPs and sinusoidal positional embeddings are applied for each of the prior positional knowledge and we summarize them as the query position Qpos ∈ RK×D, which is of the same shape as the query context Qctx. Qpos and Qctx together build up our motion query. We set D to 256 throughout MotionFormer.

其中 i 是代理的索引,在后文中为了简洁省略了。为了便于实现从粗糙到精细的范式,我们还采用了从上一层预测出的目标点

x

^

T

l

−

1

\hat{x}^{l-1}_T

x^Tl−1。与此同时,代理的当前位置在模态间广播,表示为

x

^

0

\hat{x}_0

x^0。然后,对于每个先验位置知识,应用多层感知器(MLPs)和正弦位置嵌入,并将它们总结为查询位置

Q

pos

∈

R

K

×

D

Q_{\text{pos}} \in \mathbb{R}^{K \times D}

Qpos∈RK×D,它与查询上下文

Q

ctx

Q_{\text{ctx}}

Qctx 的形状相同。

Q

p

o

s

Q_{pos}

Qpos 和

Q

c

t

x

Q_{ctx}

Qctx 共同构建了我们的运动查询。在整个MotionFormer中,我们将D设置为256。

As shown in Fig. 4, our MotionFormer consists of three major transformer blocks, i.e., agent-agent, agent-map and agent-goal interaction modules. The agent-agent, agentmap interaction modules are built with standard transformer decoder layers, which are composed of a multi-head selfattention (MHSA) layer and a multi-head cross-attention (MHCA) layer, a feed-forward network (FFN) and several residual and normalization layers in between [8]. Apart from the agent queries QA and map queries QM , we also add the positional embeddings to those queries with sinusoidal positional embedding followed by MLP layers. The agent-goal interaction module is built upon deformable cross-attention module [109], where the goal point from the previously predicted trajectory (Rixˆl i,T −1 + Ti) is adopted as the reference point, as shown in Fig. 5. Specifically, we set the number of sampled points to 4 per trajectory, and 6 trajectories per agent as we mention above. The output features of each interaction module are concatenated and projected with MLP layers to dimension D = 256. Then, we use Gaussian Mixture Model to build each agent’s trajectories, where xˆl ∈ RK×T×5. We set the prediction time horizon T to 12 (6 seconds) in UniAD. Note that we only take the first two of the last dimension (i.e., x and y) as final output trajectories. Besides, the scores of each modality are also predicted (score(ˆ xl) ∈ RK). We stack the overall modules for N times, and N is set to 3 in practice.

如图4 所示,我们的MotionFormer由三个主要的变换器块组成,即代理-代理、代理-地图和代理-目标交互模块。代理-代理、代理-地图交互模块由标准变换器解码器层构建,这些层由多头自注意力(MHSA)层和多头交叉注意力(MHCA)层、前馈网络(FFN)以及中间的几个残差和归一化层组成[8]。除了代理查询QA和地图查询QM之外,我们还使用正弦位置嵌入和随后的MLP层向这些查询添加位置嵌入。代理-目标交互模块基于可变形交叉注意力模块[109]构建,其中采用之前预测的轨迹的终点(

R

i

x

^

i

,

T

l

−

1

+

T

i

)

R_i\hat x^{l-1}_{i,T} + T_i )

Rix^i,Tl−1+Ti)作为参考点,如图5 所示。具体来说,我们为每个轨迹设置了4个采样点,并为每个代理设置了6条轨迹,正如我们上面提到的。每个交互模块的输出特征被连接并通过MLP层投影到维度D = 256。然后,我们使用高斯混合模型构建每个代理的轨迹,其中

x

^

l

∈

R

K

×

T

×

5

\hat{x}_l \in \mathbb{R}^{K \times T \times 5}

x^l∈RK×T×5。我们在UniAD中将预测时间范围T设置为12(6秒)。请注意,我们只取最后维度的前两个(即,x和y)作为最终输出轨迹。此外,还预测了每个模态的分数(

s

c

o

r

e

(

x

^

l

)

∈

R

K

score(\hat{x}_l) ∈ R^K

score(x^l)∈RK)。我们将整体模块堆叠N次,而在实践中N被设置为3。

E.4. Occupancy Prediction

Given the BEV feature from upstream modules, we first downsample it by /4 with convolutional layers for efficient multi-step prediction, then pass it to our proposed OccFormer. OccFormer is composed of To sequential blocks shown in Fig. 6, where To = 5 is the temporal horizon (including current and future frames) and each block is responsible for generating occupancy of one specific frame.

给定上游模块提供的鸟瞰图(BEV)特征,我们首先使用卷积层将其下采样 1/4 以实现高效的多步预测,然后将其传递给我们提出的OccFormer。OccFormer由图6 所示的

T

o

T_o

To 个序列块组成,其中

T

o

=

5

T_o = 5

To=5是时间范围(包括当前和未来的帧),每个块负责生成一个特定帧的占据情况。

Different from prior works which are short of agent-level knowledge, our proposed method incorporates both dense scene features and sparse agent features when unrolling the future representations. The dense scene feature is from the output of the last block (or the observed feature for current frame) and it’s further downscaled (/8) by a convolution layer to reduce computation for pixel-agent interaction. The sparse agent feature is derived from the concatenation of track query QA, agent positions PA, and motion query QX, and it is then passed to a temporal-specific MLP for temporal sensitivity. We conduct pixel-level selfattention to model the long-term dependency required in some rapidly changing scenes, then perform scene-agent incorporation by attending each pixel of the scene to corresponding agents. To enhance the location alignment between agents and pixels, we restrict the cross-attention with an attention mask which is generated by a matrix multiplication between mask feature and downscaled scene feature, where the mask feature is produced by encoding agent feature with an MLP. We then upsample the attended dense feature to the same resolution as input Ft−1 (/4) and add it with Ft−1 as a residual connection for stability. The resulting feature Ft is both sent to the next block and a convolutional decoder for predicting occupancy at the original BEV resolution (/1). We reuse the mask feature and pass it to another MLP to form occupancy feature, and the instance-level occupancy is therefore generated by a matrix multiplication between occupancy feature and decoded dense feature Fdec t (/1). Note that the MLP layer for mask feature, the MLP layer for occupancy feature, and the convolutional decoder are shared across all To blocks while other components are independent in each block. Dimensions of all dense features and agent features are 256 in OccFormer.

与之前缺乏代理级知识的工作不同,我们提出的方法在展开未来表示时结合了密集的场景特征和稀疏的代理特征。密集的场景特征来自最后一个块的输出(或当前帧的观察特征),并通过卷积层进一步下采样(1/8)以减少像素-代理交互的计算量。稀疏的代理特征是从轨迹查询

Q

A

Q_A

QA 、代理位置

P

A

P_A

PA 和运动查询

Q

X

Q_X

QX 的组合中派生出来的,然后将其传递给特定时间的 MLP 以实现时间敏感性。我们进行像素级自注意力以模拟一些快速变化场景所需的长期依赖性,然后通过将场景中的每个像素与相应的代理进行比较来执行场景-代理整合。为了增强代理和像素之间的位置对齐,我们使用注意力掩码来限制交叉注意力,该掩码是通过掩码特征和下采样场景特征之间的矩阵乘法生成的,其中掩码特征是通过使用MLP编码代理特征产生的。然后,我们将被注意的密集特征上采样到与输入

F

t

−

1

F^{t−1}

Ft−1(1/4)相同的分辨率,并将其与

F

t

−

1

F^{t−1}

Ft−1 相加以作为残差连接以保持稳定性。结果特征

F

t

F^t

Ft 既被发送到下一个块,也被发送到卷积解码器以预测原始BEV分辨率(1/1)的占据情况。我们重用掩码特征并将其传递给另一个MLP以形成占据特征,**实例级占据因此是通过占据特征和解码的密集特征

F

d

e

c

t

F_{dec}^t

Fdect(1/1)之间的矩阵乘法生成的。请注意,掩码特征的MLP层、占据特征的MLP层和卷积解码器在所有

T

o

T_o

To 块中共享,而其他组件在每个块中是独立的。在OccFormer中,所有密集特征和代理特征的维度都是256。

E.5. Planning

As shown in Fig. 7, our planner takes the ego-vehicle query generated from the tracking and motion forecasting module, which is symbolized with the blue triangle and yellow rectangle respectively. These two queries, along with the command embedding, are encoded with MLP layers followed by a max-pooling layer across the modality dimension, where the most salient modal features are selected and aggregated. The BEV feature interaction module is built with standard transformer decoder layers, and it is stacked for N layers, where we set N = 3 here. Specifically, it cross-attends the dense BEV feature with the aggregated plan query. More qualitative results can be found in Appendix F.5 showing the effectiveness of this module. To embed location information, we fuse the planquery with learned position embedding and the BEV feature with sinusoidal positional embedding. We then regress the planning trajectory with MLP layers, which is denoted as τˆ ∈ RTp×2. Here we set T p = 6 (3 seconds). Finally,we devise the collision optimizer for obstacle avoidance, which takes the predicted occupancy Oˆ and trajectory τˆ as input as Eq. (10) in the main paper. We set d = 5, σ = 1.0, λcoord =1.0, λobs =5.0.

如图7 所示,我们的规划器采用由跟踪和运动预测模块生成的自我车辆查询,分别用蓝色三角形和黄色矩形表示。这两个查询以及命令嵌入,经过MLP层编码,然后在模态维度上通过最大池化层进行聚合,选择并聚合最显著的模态特征。鸟瞰图(BEV)特征交互模块由标准变换器解码器层构建,并堆叠了N层,这里我们设置N=3。具体来说,它交叉关注密集的BEV特征与聚合的计划查询。更多的定性结果可以在附录F.5中找到,展示了这个模块的有效性。为了嵌入位置信息,我们将计划查询与学习到的位置嵌入融合,并将BEV特征与正弦位置嵌入融合。然后我们用MLP层回归规划轨迹,表示为

τ

^

∈

R

T

p

×

2

\hat{\tau} \in \mathbb{R}^{T_p \times 2}

τ^∈RTp×2。这里我们设置

T

p

=

6

T_p = 6

Tp=6(3秒)。最后,我们设计了碰撞优化器用于避障,它以预测的占据

O

^

\hat{O}

O^ 和轨迹

τ

^

\hat{\tau}

τ^ 作为输入,如主论文中的等式(10)。我们设置

d

=

5

,

σ

=

1.0

,

λ

c

o

o

r

d

=

1.0

,

λ

o

b

s

=

5.0

d = 5,σ = 1.0,λ_{coord} =1.0,λ_{obs} =5.0

d=5,σ=1.0,λcoord=1.0,λobs=5.0。

图7. 规划器。

Q

A

e

g

o

Q^{ego}_A

QAego 和

Q

c

t

x

e

g

o

Q^{ego}_{ctx}

Qctxego 分别是来自跟踪和运动预测模块的自车查询。它们与高层级指令一起,通过多层感知器(MLP)层编码,然后通过模态维度上的最大池化层,选择并聚合最显著的模态特征。鸟瞰图(BEV)特征交互模块由标准的变换器解码器层构建,并堆叠了N层。

E.6. Training Details

Joint learning. UniAD is trained in two stages which we find more stable. In stage one, we pre-train perception tasks including tracking and online mapping to stabilize perception predictions. Specifically, we load corresponding pretrained BEVFormer [55] weights to UniAD for fast convergence including image backbone, FPN, BEV encoder and detection decoder except for object query embeddings (due to the additional ego-vehicle query). We stop the gradient back-propagation in the image backbone to reduce memory cost and train UniAD for 6 epochs with tracking and online mapping losses as follows:

联合学习。UniAD 通过两个阶段进行训练,我们发现这种方法更稳定。在第一阶段,我们预先训练包括跟踪和在线映射在内的感知任务,以稳定感知预测。具体来说,我们加载相应的预训练 BEVFormer [55] 权重到 UniAD 中,以实现快速收敛,包括图像主干、FPN(特征金字塔网络)、BEV(鸟瞰图)编码器和检测解码器,但不包括对象查询嵌入(因为增加了自车查询)。我们停止图像主干的梯度反向传播以减少内存成本,并使用以下跟踪和在线映射损失训练 UniAD 6 个周期:

In stage two, we keep the image backbone frozen as well, and additionally freeze BEV encoder, which is used for view transformation from image view to BEV, to further reduce memory consumption with more downstream modules. UniAD now is trained with all task losses including tracking, mapping, motion forecasting, occupancy prediction, and planning for 20 epochs (for various ablation studies in main paper, it’s trained for 8 epochs for efficiency):