Title: 三维点云深度网络 PointNeXt 源码阅读 (IV) —— PointNeXt-B

文章目录

- I. PointNeXt-B 与其他版本的区别

- II. PointNeXt-B 自动生成的网络

- III. PointNeXt-B 编码部分的结构

- IV. 显存溢出的规避

- 总结

关联博文

[1] 三维点云深度网络 PointNeXt 的安装配置与测试[2] 三维点云深度网络 PointNeXt 源码阅读 (I) —— 注册机制与参数解析

[3] 三维点云深度网络 PointNeXt 源码阅读 (II) —— 点云数据集构造与预处理

[4] 三维点云深度网络 PointNeXt 源码阅读 (III) —— 骨干网络模型

[5] 三维点云深度网络 PointNeXt 源码阅读 (IV) —— PointNeXt-B ⇐ \qquad \Leftarrow ⇐ 本篇

本篇博文只是前面博文的补充, 做个记录.

感谢原作者 Guocheng Qian 开源 PointNeXt 供我们学习 !

I. PointNeXt-B 与其他版本的区别

前面的博文都是针对 PointNeXt-S 版本网络, 该版本网络是 PointNeXt 系列网络模型中最简单的一种.

而 PointNeXt 中第二简单的 PointNeXt-B 相对于 PointNeXt-S 的最主要区别在于 “是否调用 InvResMLP 模块”, 及 “SetAbstraction 模块中是否使用残差”.

所谓 “InvResMLP” 是指 “inverted bottleneck design + Residual MLP”.

因为 InvResMLP 本身自带残差计算, 所以当编码器 encoder[i] 中附加了 InvResMLP 模块时, 该编码器中的 SetAbstraction 内部就不再需要残差结构了.

PointNeXt-B 中解码器 decoder[i]、head 等部分和 PointNeXt-S 中没有区别.

PointNeXt 中更复杂的网络模型, 如 PointNeXt-L 和 PointNeXt-XL, 比起 PointNeXt-B 只是在编码部分四个阶段中累加 InvResMLP 模块数量的区别.

II. PointNeXt-B 自动生成的网络

自动构建的 PointNeXt-B 网络模型如下.

BaseSeg(

(encoder): PointNextEncoder(

(encoder): Sequential(

(0): Sequential(

(0): SetAbstraction(

(convs): Sequential(

(0): Sequential(

(0): Conv1d(4, 32, kernel_size=(1,), stride=(1,))

)

)

)

)

(1): Sequential(

(0): SetAbstraction(

(convs): Sequential(

(0): Sequential(

(0): Conv2d(35, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(grouper): QueryAndGroup()

)

(1): InvResMLP(

(convs): LocalAggregation(

(convs): Sequential(

(0): Sequential(

(0): Conv2d(67, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(grouper): QueryAndGroup()

)

(pwconv): Sequential(

(0): Sequential(

(0): Conv1d(64, 256, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(1): Sequential(

(0): Conv1d(256, 64, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(act): ReLU(inplace=True)

)

)

(2): Sequential(

(0): SetAbstraction(

(convs): Sequential(

(0): Sequential(

(0): Conv2d(67, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(grouper): QueryAndGroup()

)

(1): InvResMLP(

(convs): LocalAggregation(

(convs): Sequential(

(0): Sequential(

(0): Conv2d(131, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(grouper): QueryAndGroup()

)

(pwconv): Sequential(

(0): Sequential(

(0): Conv1d(128, 512, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(1): Sequential(

(0): Conv1d(512, 128, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(act): ReLU(inplace=True)

)

(2): InvResMLP(

(convs): LocalAggregation(

(convs): Sequential(

(0): Sequential(

(0): Conv2d(131, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(grouper): QueryAndGroup()

)

(pwconv): Sequential(

(0): Sequential(

(0): Conv1d(128, 512, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(1): Sequential(

(0): Conv1d(512, 128, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(act): ReLU(inplace=True)

)

)

(3): Sequential(

(0): SetAbstraction(

(convs): Sequential(

(0): Sequential(

(0): Conv2d(131, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(grouper): QueryAndGroup()

)

(1): InvResMLP(

(convs): LocalAggregation(

(convs): Sequential(

(0): Sequential(

(0): Conv2d(259, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(grouper): QueryAndGroup()

)

(pwconv): Sequential(

(0): Sequential(

(0): Conv1d(256, 1024, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(1): Sequential(

(0): Conv1d(1024, 256, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(act): ReLU(inplace=True)

)

)

(4): Sequential(

(0): SetAbstraction(

(convs): Sequential(

(0): Sequential(

(0): Conv2d(259, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(grouper): QueryAndGroup()

)

(1): InvResMLP(

(convs): LocalAggregation(

(convs): Sequential(

(0): Sequential(

(0): Conv2d(515, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(grouper): QueryAndGroup()

)

(pwconv): Sequential(

(0): Sequential(

(0): Conv1d(512, 2048, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(1): Sequential(

(0): Conv1d(2048, 512, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(act): ReLU(inplace=True)

)

)

)

)

(decoder): PointNextDecoder(

(decoder): Sequential(

(0): Sequential(

(0): FeaturePropogation(

(convs): Sequential(

(0): Sequential(

(0): Conv1d(96, 32, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(1): Sequential(

(0): Conv1d(32, 32, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

)

)

(1): Sequential(

(0): FeaturePropogation(

(convs): Sequential(

(0): Sequential(

(0): Conv1d(192, 64, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(1): Sequential(

(0): Conv1d(64, 64, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

)

)

(2): Sequential(

(0): FeaturePropogation(

(convs): Sequential(

(0): Sequential(

(0): Conv1d(384, 128, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(1): Sequential(

(0): Conv1d(128, 128, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

)

)

(3): Sequential(

(0): FeaturePropogation(

(convs): Sequential(

(0): Sequential(

(0): Conv1d(768, 256, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(1): Sequential(

(0): Conv1d(256, 256, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

)

)

)

)

(head): SegHead(

(head): Sequential(

(0): Sequential(

(0): Conv1d(32, 32, kernel_size=(1,), stride=(1,), bias=False)

(1): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(1): Dropout(p=0.5, inplace=False)

(2): Sequential(

(0): Conv1d(32, 13, kernel_size=(1,), stride=(1,))

)

)

)

)

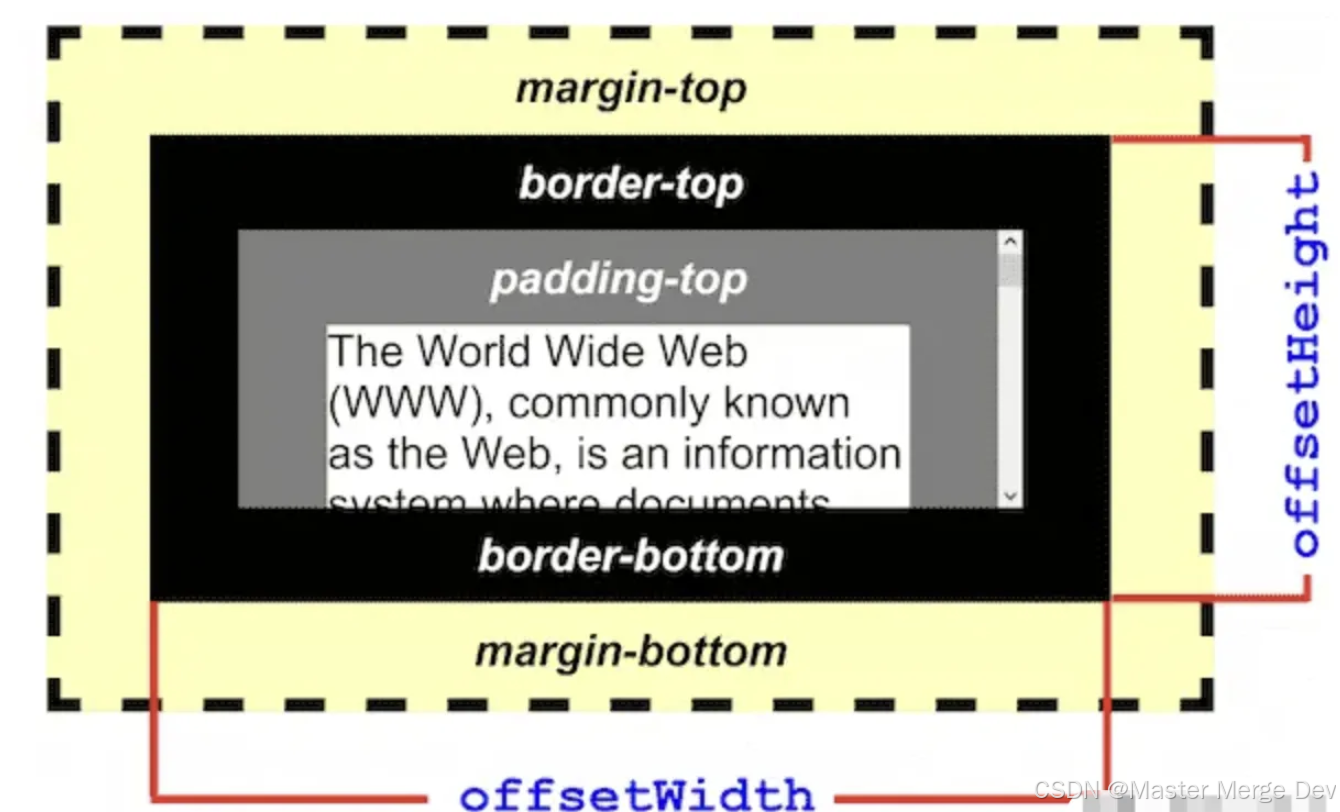

III. PointNeXt-B 编码部分的结构

直接看图比较清晰, 重点是对 InvResMLP 的理解.

IV. 显存溢出的规避

为了避免 “RuntimeError: CUDA out of memory” 显存溢出问题, 先可以将 cfgs/s3dis/default.yaml 中配置的 batch_size 改小一半为 16.

然后, 通过 vpdb 配置 vscode 调试环境

vpdb CUDA_VISIBLE_DEVICES=0,1 python examples/segmentation/main.py --cfg cfgs/s3dis/pointnext-b.yaml mode=train

就可以开启 debug 模式了.

总结

到此我们对 PointNeXt 模型的构建和结构有了基本理解.