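In an era of data-driven decision-making, data ethics and privacy protection have become critical concerns. Organizations must balance using data to innovate against protecting user privacy. This article covers the core principles of data ethics, the technical implementation of privacy protection, and how to balance compliance with innovation.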

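Contents

- 1. Core Principles of Data Ethics

- 1.1 Transparency

- 1.2 Consent

- 1.3 Data Minimization

- 2. Privacy Protection Techniques

- 2.1 Data Encryption

- 2.2 Data Anonymization

- 2.3 Differential Privacy

- 3. Balancing Compliance and Innovation

- 3.1 Privacy by Design

- 3.2 Data Governance

- 3.3 Continuous Assessment and Improvement

- Conclusion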

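1. Core Principles of Data Ethics

Data ethics concerns the moral considerations involved in collecting, using, and sharing data. The core principles include:

1.1 Transparency

Organizations should tell users clearly how their data will be used.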

class DataUsagePolicy:
    """Registry of the declared uses for each type of user data."""

    def __init__(self):
        # Maps a data type (e.g. "Email") to the set of its declared uses
        self.policies = {}

    def add_policy(self, data_type, usage):
        if data_type not in self.policies:
            self.policies[data_type] = set()
        self.policies[data_type].add(usage)

    def get_policy(self, data_type):
        return self.policies.get(data_type, set())

    def display_all_policies(self):
        for data_type, usages in self.policies.items():
            # Sets are unordered, so sort for a stable output order
            print(f"{data_type}: {', '.join(sorted(usages))}")

# Usage example
policy = DataUsagePolicy()
policy.add_policy("Email", "Marketing communications")
policy.add_policy("Email", "Account security")
policy.add_policy("Location", "Personalized recommendations")

print("Our data usage policies:")
policy.display_all_policies()

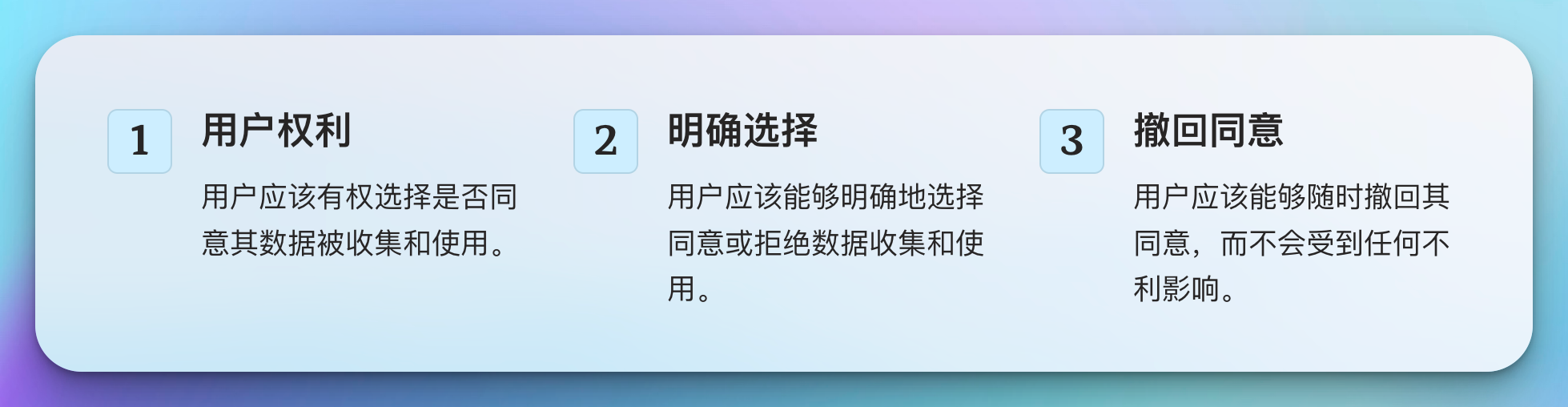

1.2 Consent

Users should be able to choose whether their data is collected and used, and to withdraw that choice later.

class UserConsent:
    """Tracks which data types each user has consented to."""

    def __init__(self):
        # Maps a user ID to the set of data types the user has consented to
        self.consents = {}

    def give_consent(self, user_id, data_type):
        if user_id not in self.consents:
            self.consents[user_id] = set()
        self.consents[user_id].add(data_type)

    def revoke_consent(self, user_id, data_type):
        if user_id in self.consents:
            # discard() does nothing if the consent was never given
            self.consents[user_id].discard(data_type)

    def check_consent(self, user_id, data_type):
        return data_type in self.consents.get(user_id, set())

# Usage example
consent_manager = UserConsent()
consent_manager.give_consent("user123", "email_marketing")
consent_manager.give_consent("user123", "location_tracking")

print("User123 consented to email marketing:",
      consent_manager.check_consent("user123", "email_marketing"))
print("User123 consented to data sharing:",
      consent_manager.check_consent("user123", "data_sharing"))

consent_manager.revoke_consent("user123", "location_tracking")
print("User123 consented to location tracking:",
      consent_manager.check_consent("user123", "location_tracking"))
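One way to make consent enforceable rather than advisory is to check it at the point where the data is processed. The sketch below is a minimal, self-contained illustration of that idea: `ConsentRegistry`, `requires_consent`, and `send_marketing_email` are hypothetical names introduced here, and the registry is an in-memory stand-in for wherever consent is actually stored.

```python
import functools


class ConsentRegistry:
    """Hypothetical in-memory store of (user_id, data_type) consents."""

    def __init__(self):
        self._granted = set()

    def give(self, user_id, data_type):
        self._granted.add((user_id, data_type))

    def revoke(self, user_id, data_type):
        self._granted.discard((user_id, data_type))

    def has(self, user_id, data_type):
        return (user_id, data_type) in self._granted


def requires_consent(registry, data_type):
    """Decorator: refuse to run the wrapped function without consent."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(user_id, *args, **kwargs):
            if not registry.has(user_id, data_type):
                raise PermissionError(
                    f"{user_id} has not consented to {data_type}"
                )
            return func(user_id, *args, **kwargs)
        return wrapper
    return decorator


registry = ConsentRegistry()


@requires_consent(registry, "email_marketing")
def send_marketing_email(user_id, subject):
    # Placeholder for the real processing step
    return f"Sent '{subject}' to {user_id}"


registry.give("user123", "email_marketing")
print(send_marketing_email("user123", "Spring offers"))

registry.revoke("user123", "email_marketing")
try:
    send_marketing_email("user123", "Summer offers")
except PermissionError as exc:
    print("Blocked:", exc)
```

Because the check lives in the decorator, a revoked consent takes effect on the next call without any change to the processing function itself.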

1.3 Data Minimization

Collect only the data that is necessary for the stated purpose, and avoid over-collection.

def collect_user_data(required_fields, optional_fields, user_input):
    collected_data = {}

    # Required fields must be present
    for field in required_fields:
        if field not in user_input:
            raise ValueError(f"Missing required field: {field}")
        collected_data[field] = user_input[field]

    # Optional fields are kept only if the user supplied them
    for field in optional_fields:
        if field in user_input:
            collected_data[field] = user_input[field]

    # Any other field in user_input is silently dropped
    return collected_data

# Usage example
required_fields = ["name", "email"]
optional_fields = ["age", "location"]

user_input = {
    "name": "John Doe",
    "email": "john@example.com",
    "age": 30,
    "favorite_color": "blue"  # not requested, so it is not collected
}

try:
    user_data = collect_user_data(required_fields, optional_fields, user_input)
    print("Collected user data:", user_data)
except ValueError as e:
    print("Error:", e)

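2. Privacy Protection Techniques

2.1 Data Encryption

Encryption is a foundational technique for protecting data: without the key, the ciphertext cannot be read. The example uses Fernet, a symmetric authenticated encryption scheme from the `cryptography` package.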

from cryptography.fernet import Fernet

def encrypt_data(data):
    # A new key is generated on every call; the caller must store it
    # securely, because anyone holding the key can decrypt the data
    key = Fernet.generate_key()
    f = Fernet(key)
    encrypted_data = f.encrypt(data.encode())
    return key, encrypted_data

def decrypt_data(key, encrypted_data):
    f = Fernet(key)
    decrypted_data = f.decrypt(encrypted_data).decode()
    return decrypted_data

# Usage example
sensitive_data = "This is sensitive information"

key, encrypted = encrypt_data(sensitive_data)
print("Encrypted data:", encrypted)

decrypted = decrypt_data(key, encrypted)
print("Decrypted data:", decrypted)
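The example above generates a fresh random key on every call, so the caller has to store each key somewhere safe. When a key must instead be derived from a passphrase, a key-derivation function can be used. The following is a sketch using only the standard library: `derive_fernet_key` is a hypothetical helper, and the iteration count is an illustrative assumption rather than a recommendation. Fernet keys are 32 bytes encoded as URL-safe base64, which is why the derived bytes are encoded that way.

```python
import base64
import hashlib
import os


def derive_fernet_key(passphrase, salt, iterations=600_000):
    """Derive a 32-byte key from a passphrase with PBKDF2-HMAC-SHA256.

    The result is URL-safe base64, the format Fernet expects for its key.
    The default iteration count is an assumption for illustration.
    """
    raw_key = hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode(), salt, iterations, dklen=32
    )
    return base64.urlsafe_b64encode(raw_key)


# The salt is random but not secret; store it alongside the ciphertext
salt = os.urandom(16)
key = derive_fernet_key("correct horse battery staple", salt)

# The same passphrase and salt always give the same key
print("Reproducible:", key == derive_fernet_key("correct horse battery staple", salt))
print("Key length (base64 characters):", len(key))
```

The returned value can be passed to `Fernet(key)` in place of the output of `Fernet.generate_key()`.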

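2.2 Data Anonymization

Anonymization techniques protect personally identifiable information by removing or transforming identifying values. Note that hashing or rescaling a column, as in the example, is strictly pseudonymization: records can still be linked to one another, and individuals may be re-identified by combining the remaining columns.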

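import hashlib

import pandas as pd

def anonymize_data(df, columns_to_anonymize, salt=""):
    # `salt` is an illustrative parameter: a secret value mixed into each
    # hash so that common values cannot simply be looked up
    df_anonymized = df.copy()
    for column in columns_to_anonymize:
        if pd.api.types.is_numeric_dtype(df[column]):
            # Min-max scale numeric columns to the range [0, 1]
            min_val = df[column].min()
            max_val = df[column].max()
            value_range = max_val - min_val
            if value_range == 0:
                # All values are identical: avoid dividing by zero
                df_anonymized[column] = 0.0
            else:
                df_anonymized[column] = (df[column] - min_val) / value_range
        else:
            # The built-in hash() is randomized per process for strings,
            # so SHA-256 is used to get a digest that is stable across runs
            df_anonymized[column] = df[column].apply(
                lambda x: hashlib.sha256((salt + str(x)).encode()).hexdigest()
            )
    return df_anonymized

# Usage example
data = pd.DataFrame({
    'name': ['Alice', 'Bob', 'Charlie'],
    'age': [25, 30, 35],
    'email': ['alice@example.com', 'bob@example.com', 'charlie@example.com']
})

# "example-salt" is a placeholder; a real salt must be kept secret
anonymized_data = anonymize_data(data, ['name', 'age', 'email'], salt="example-salt")

print("Original data:")
print(data)
print("\nAnonymized data:")
print(anonymized_data)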
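Whether a transformed table is actually hard to re-identify depends on the quasi-identifiers left in it. One common check is k-anonymity: every combination of quasi-identifier values should be shared by at least k records. The sketch below is illustrative only; `generalize_age`, `k_anonymity`, the 10-year bucket width, and the sample records are assumptions introduced here and are not part of the example above.

```python
from collections import Counter


def generalize_age(age, bucket_size=10):
    """Replace an exact age with a range such as '20-29'."""
    low = (age // bucket_size) * bucket_size
    return f"{low}-{low + bucket_size - 1}"


def k_anonymity(records, quasi_identifiers):
    """Return the size of the smallest group of records that share the
    same quasi-identifier values (the 'k' of the table)."""
    if not records:
        return 0
    groups = Counter(
        tuple(record[field] for field in quasi_identifiers)
        for record in records
    )
    return min(groups.values())


# Made-up sample records
records = [
    {"age": 25, "city": "Paris"},
    {"age": 27, "city": "Paris"},
    {"age": 31, "city": "Paris"},
    {"age": 38, "city": "Paris"},
]

print("k before generalization:", k_anonymity(records, ["age", "city"]))

generalized = [
    {**record, "age": generalize_age(record["age"])} for record in records
]
print("k after generalization:", k_anonymity(generalized, ["age", "city"]))
```

With exact ages every record is unique (k = 1); after bucketing into decades each record shares its values with one other record (k = 2), at the cost of less precise data.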

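2.3 Differential Privacy

Differential privacy protects individuals by adding calibrated random noise to results, so that the output changes very little whether or not any one person's data is included. The privacy parameter epsilon controls the trade-off: smaller values add more noise and give stronger privacy.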

import numpy as np

def add_laplace_noise(data, epsilon, sensitivity=1.0):
    # Laplace mechanism: the noise scale is sensitivity / epsilon.
    # sensitivity=1.0 is an assumption; it must match the quantity protected.
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    noise = np.random.laplace(0, sensitivity / epsilon, data.shape)
    return data + noise

# Usage example
original_data = np.array([10, 20, 30, 40, 50])
epsilon = 0.1  # Privacy parameter: smaller means more noise

noisy_data = add_laplace_noise(original_data, epsilon)

print("Original data:", original_data)
print("Noisy data:", noisy_data)
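In practice the noise is usually added to an aggregate, such as a count, rather than to each raw value. A counting query has sensitivity 1, because adding or removing one person changes the count by at most 1. The sketch below applies the Laplace mechanism to a count using only the standard library; `laplace_noise` and `dp_count` are hypothetical helper names, and the ages and threshold are made-up sample data.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(values, predicate, epsilon, rng=None):
    """Noisy count of the values that satisfy `predicate`.

    A count has sensitivity 1, so the Laplace scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1 / epsilon, rng)


ages = [23, 35, 41, 29, 52, 38, 61, 19]  # made-up sample data
noisy = dp_count(ages, lambda age: age >= 30, epsilon=0.5)
print("Noisy count of people aged 30+:", round(noisy, 2))
```

Each call returns a different value. Repeating the same query spends additional privacy budget, so a real system would also need to track the total epsilon used.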

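3. Balancing Compliance and Innovation

3.1 Privacy by Design

Build privacy protection into every stage of product design: collection, storage, use, and sharing.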

class PrivacyByDesign:
    """Privacy filters for each stage of the data lifecycle."""

    @staticmethod
    def data_collection(data_fields):
        # Collect only the fields marked as necessary
        return [field for field in data_fields if field.startswith('necessary_')]

    @staticmethod
    def data_storage(data):
        # Never persist temporary values such as session IDs
        return {k: v for k, v in data.items() if not k.startswith('temporary_')}

    @staticmethod
    def data_usage(data, purpose):
        # Expose only the fields that the stated purpose allows
        return {k: v for k, v in data.items() if k in purpose}

    @staticmethod
    def data_sharing(data, third_party_agreement):
        # Share only the fields covered by the third-party agreement
        return {k: v for k, v in data.items() if k in third_party_agreement}

# Usage example
data_fields = ['necessary_name', 'necessary_email', 'optional_age', 'temporary_session_id']
collected_data = PrivacyByDesign.data_collection(data_fields)
print("Collected data fields:", collected_data)

user_data = {
    'necessary_name': 'John Doe',
    'necessary_email': 'john@example.com',
    'temporary_session_id': '12345'
}

stored_data = PrivacyByDesign.data_storage(user_data)
print("Stored data:", stored_data)

marketing_purpose = ['necessary_email']
marketing_data = PrivacyByDesign.data_usage(stored_data, marketing_purpose)
print("Data used for marketing:", marketing_data)

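3.2 Data Governance

Establish a strong data governance framework: an inventory of what data is held, how sensitive it is, and how long it is retained, together with a log of who accessed it and why.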

import pandas as pd

class DataGovernance:
    LOG_COLUMNS = ['user', 'data_type', 'purpose', 'timestamp']

    def __init__(self):
        self.data_inventory = {}
        self.access_logs = []

    def register_data(self, data_type, sensitivity, retention_period):
        self.data_inventory[data_type] = {
            'sensitivity': sensitivity,
            'retention_period': retention_period
        }

    def access_data(self, user, data_type, purpose):
        # Only registered data types may be accessed; each access is logged
        if data_type in self.data_inventory:
            self.access_logs.append({
                'user': user,
                'data_type': data_type,
                'purpose': purpose,
                'timestamp': pd.Timestamp.now()
            })
            return True
        return False

    def audit_access(self, start_date, end_date):
        # With no logged accesses there is no 'timestamp' column to filter on
        if not self.access_logs:
            return pd.DataFrame(columns=self.LOG_COLUMNS)
        df = pd.DataFrame(self.access_logs, columns=self.LOG_COLUMNS)
        start, end = pd.Timestamp(start_date), pd.Timestamp(end_date)
        return df[(df['timestamp'] >= start) & (df['timestamp'] <= end)]

# Usage example
governance = DataGovernance()
governance.register_data('customer_email', 'high', '1 year')
governance.register_data('purchase_history', 'medium', '5 years')

governance.access_data('analyst1', 'customer_email', 'marketing campaign')
governance.access_data('analyst2', 'purchase_history', 'sales analysis')

# Accesses are stamped with the current time, so audit a window around now
now = pd.Timestamp.now()
audit_result = governance.audit_access(now - pd.Timedelta(days=1), now + pd.Timedelta(days=1))
print(audit_result)
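The inventory above records a retention period for each data type but does not act on it. A governance framework would normally also delete data once that period has passed. The sketch below shows one way to do this with the standard library; `RETENTION_DAYS`, `purge_expired`, the day counts, and the record layout are all assumptions made for illustration.

```python
from datetime import datetime, timedelta

# Hypothetical retention periods, in days, per data type
RETENTION_DAYS = {
    "customer_email": 365,
    "purchase_history": 5 * 365,
}


def purge_expired(records, now):
    """Return only the records still inside their retention period.

    Records of an unregistered data type are dropped, on the assumption
    that data without a declared retention period should not be kept.
    """
    kept = []
    for record in records:
        days = RETENTION_DAYS.get(record["data_type"])
        if days is None:
            continue
        if now - record["collected_at"] <= timedelta(days=days):
            kept.append(record)
    return kept


now = datetime(2024, 6, 1)
records = [
    {"data_type": "customer_email", "collected_at": datetime(2024, 1, 15)},
    {"data_type": "customer_email", "collected_at": datetime(2022, 3, 1)},
    {"data_type": "purchase_history", "collected_at": datetime(2021, 7, 1)},
]

for record in purge_expired(records, now):
    print(record["data_type"], record["collected_at"].date())
```

Passing `now` in as an argument, rather than reading the clock inside the function, keeps the retention check deterministic and easy to test.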

3.3 Continuous Assessment and Improvement

Assess privacy practices regularly and improve the ones that score low.

import random

class PrivacyAssessment:
    def __init__(self):
        # Maps a practice name to its score on a 0-10 scale
        self.privacy_scores = {}

    def assess_privacy_practice(self, practice, score):
        self.privacy_scores[practice] = score

    def get_overall_score(self):
        if not self.privacy_scores:
            raise ValueError("No practices have been assessed yet")
        return sum(self.privacy_scores.values()) / len(self.privacy_scores)

    def identify_improvements(self):
        # Practices scoring below 7 are flagged for improvement
        return [practice for practice, score in self.privacy_scores.items() if score < 7]

    def simulate_improvement(self):
        candidates = self.identify_improvements()
        if not candidates:
            raise ValueError("No practice needs improvement")
        practice_to_improve = random.choice(candidates)
        old_score = self.privacy_scores[practice_to_improve]
        # Raise the score by a random amount, capped at 10
        new_score = min(10, old_score + random.uniform(0.5, 2.0))
        self.privacy_scores[practice_to_improve] = new_score
        return practice_to_improve, old_score, new_score

# Usage example
assessment = PrivacyAssessment()
assessment.assess_privacy_practice('Data Collection', 8)
assessment.assess_privacy_practice('Data Storage', 7)
assessment.assess_privacy_practice('Data Sharing', 6)
assessment.assess_privacy_practice('User Consent', 9)

print("Overall Privacy Score:", assessment.get_overall_score())
print("Areas for Improvement:", assessment.identify_improvements())

improved_practice, old_score, new_score = assessment.simulate_improvement()
print(f"Improved {improved_practice} from {old_score:.2f} to {new_score:.2f}")
print("New Overall Privacy Score:", assessment.get_overall_score())

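Conclusion

In a data-driven world, balancing compliance requirements against the need to innovate is an ongoing challenge. Organizations need to:

- Treat data ethics and privacy protection as core values, not merely as legal obligations.

- Invest in privacy protection techniques and practices such as encryption, anonymization, and differential privacy.

- Adopt a "privacy by design" approach that builds privacy considerations into every stage of a product or service.

- Establish a strong data governance framework to ensure that data is used responsibly.

- Assess and improve privacy practices continuously, adapting to changes in technology and regulation.

By taking these steps, organizations can balance protecting user privacy with driving data-driven innovation. This helps with regulatory compliance, and it also builds user trust, which supports long-term business success.

Data ethics and privacy protection should be seen not as obstacles to innovation but as drivers of it. Using data responsibly makes it possible to build products and services that are both valuable and trusted by users.

Organizations that strike this balance between compliance and innovation are better placed to stand out from their competitors.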