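Table of Contents

- I. PaaS Platform Environment Configuration

- II. Model Download

- III. Environment Setup

  - 1. Standard pip installation

  - 2. Installing diffusers

- IV. Code

- V. Results Demo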

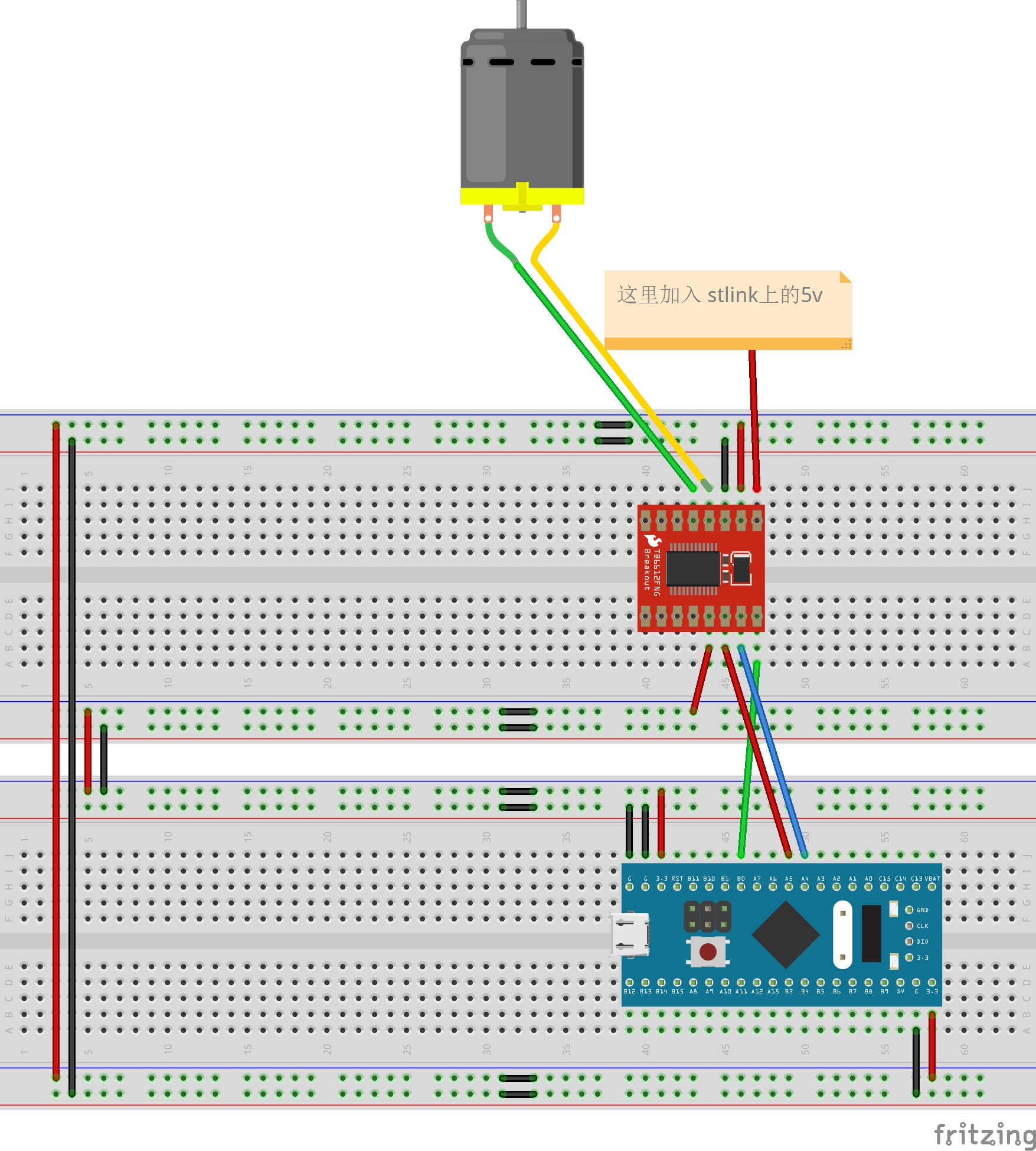

I. PaaS Platform Environment Configuration

Driver version: 5.10.29 or later

Accelerator card: MLU370 series

Number of cards: 1 to 8 (2 or more recommended)

Image: pytorch:v24.06-torch2.1.0-catch1.21.0-ubuntu22.04-py310
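The driver requirement above (5.10.29 or later) is easy to get wrong when comparing by eye, because "5.10.3" sorts after "5.10.29" as a string but is older as a version. Below is a minimal sketch of a numeric comparison. The helper names `parse_version` and `driver_meets_minimum` are hypothetical, and the sketch assumes you have already read the driver version string yourself (for example from the output of Cambricon's `cnmon` tool) and that it consists of dot-separated numeric fields only.

```python
MIN_DRIVER = "5.10.29"  # minimum driver version stated in this article


def parse_version(version: str) -> tuple:
    """Turn a dotted numeric version such as '5.10.29' into (5, 10, 29)."""
    return tuple(int(part) for part in version.strip().lstrip("vV").split("."))


def driver_meets_minimum(installed: str, minimum: str = MIN_DRIVER) -> bool:
    """Return True when `installed` is greater than or equal to `minimum`."""
    # Tuples compare field by field, so (5, 10, 3) < (5, 10, 29) as intended.
    return parse_version(installed) >= parse_version(minimum)


if __name__ == "__main__":
    # Hypothetical sample values; substitute the version reported on your machine.
    for candidate in ("5.10.29", "5.10.3", "6.0.1"):
        print(candidate, driver_meets_minimum(candidate))
```

Comparing integer tuples rather than raw strings is the design choice that matters here; everything else is plain bookkeeping.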

II. Model Download

As usual, we download the model from our old friend, the ModelScope community.

apt install git-lfs -y

git-lfs clone https://www.modelscope.cn/ZhipuAI/CogVideoX-2b.git
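A common failure after cloning is that the large weight files are still Git LFS pointer stubs (small text files) rather than the real weights. The sketch below is one way to check for that, under stated assumptions: the function names `find_lfs_pointers` and `check_clone` are hypothetical, the check relies on the standard first line of a Git LFS pointer file, and it assumes the repository root contains a `model_index.json`, as diffusers pipeline repositories normally do.

```python
from pathlib import Path

# First line of every Git LFS pointer file, per the Git LFS pointer format.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def find_lfs_pointers(repo_dir: str) -> list:
    """Return relative paths of files that are still LFS pointers, not real data."""
    root = Path(repo_dir)
    pointers = []
    for path in sorted(root.rglob("*")):
        # Skip directories and Git's own metadata folder.
        if not path.is_file() or ".git" in path.relative_to(root).parts:
            continue
        with path.open("rb") as handle:
            head = handle.read(len(LFS_POINTER_PREFIX))
        if head == LFS_POINTER_PREFIX:
            pointers.append(str(path.relative_to(root)))
    return pointers


def check_clone(repo_dir: str) -> bool:
    """True when model_index.json exists and no LFS pointer files remain."""
    has_index = (Path(repo_dir) / "model_index.json").is_file()
    return has_index and not find_lfs_pointers(repo_dir)


if __name__ == "__main__":
    # "CogVideoX-2b" is the directory created by the clone command above.
    print("clone looks complete:", check_clone("CogVideoX-2b"))
```

If `find_lfs_pointers` reports any files, running `git lfs pull` inside the clone should fetch the real contents.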

III. Environment Setup

1. Standard pip installation

pip install transformers==4.44.0 accelerate==0.31.0 opencv-python sentencepiece

# If your environment ships with apex preinstalled, uninstall it

pip uninstall apex
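After running the commands above, it can help to confirm that the pinned versions are the ones actually installed and that apex is gone. This is a minimal sketch using the standard library's `importlib.metadata`. The helper names `installed_version` and `find_mismatches` are hypothetical, and the sketch assumes apex was installed under the distribution name `apex`.

```python
from importlib import metadata

# Pins taken from the pip command in this article.
EXPECTED = {"transformers": "4.44.0", "accelerate": "0.31.0"}


def installed_version(package: str):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(expected: dict) -> dict:
    """Map each package whose installed version differs to (expected, found)."""
    mismatches = {}
    for package, wanted in expected.items():
        found = installed_version(package)
        if found != wanted:
            mismatches[package] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, found) in find_mismatches(EXPECTED).items():
        print(f"{name}: expected {wanted}, found {found}")
    # apex should be absent after `pip uninstall apex`.
    print("apex installed:", installed_version("apex") is not None)
```

An empty result from `find_mismatches(EXPECTED)` means both pins match; a `found` value of `None` means the package is not installed at all.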

2. Installing diffusers

Please contact the author by private message for this step.

IV. Code

import torch
import torch_mlu  # Cambricon MLU backend for PyTorch
from diffusers import CogVideoXPipeline
# model_transfer lets the CUDA-style calls below (such as device="cuda") run on the MLU
from torch_mlu.utils.model_transfer import transfer
from diffusers.utils import export_to_video

prompt = "A panda, dressed in a small, red jacket and a tiny hat, sits on a wooden stool in a serene bamboo forest. The panda's fluffy paws strum a miniature acoustic guitar, producing soft, melodic tunes. Nearby, a few other pandas gather, watching curiously and some clapping in rhythm. Sunlight filters through the tall bamboo, casting a gentle glow on the scene. The panda's face is expressive, showing concentration and joy as it plays. The background includes a small, flowing stream and vibrant green foliage, enhancing the peaceful and magical atmosphere of this unique musical performance."

# Load the locally cloned weights in half precision; change the path to where you cloned the model
pipe = CogVideoXPipeline.from_pretrained(
    "/workspace/volume/guojunceshi2/CogVideoX-2b",
    torch_dtype=torch.float16,
)
# Keep sub-models on the CPU until they are needed, to reduce device memory usage
pipe.enable_model_cpu_offload()

# Encode the prompt once; the negative embeddings returned second are discarded here
prompt_embeds, _ = pipe.encode_prompt(
    prompt=prompt,
    do_classifier_free_guidance=True,
    num_videos_per_prompt=1,
    max_sequence_length=226,
    device="cuda",
    dtype=torch.float16,
)

# Run the denoising loop and take the frames of the first (and only) video
video = pipe(
    num_inference_steps=50,
    guidance_scale=6,
    prompt_embeds=prompt_embeds,
).frames[0]

# Write the frames to an MP4 file at 8 frames per second
export_to_video(video, "output.mp4", fps=8)
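The `fps=8` argument determines how long the exported clip plays. Here is a small sketch of the arithmetic. The helper name `clip_duration_seconds` is hypothetical, and the frame count of 49 is an assumption (I believe it is the default number of frames for this pipeline in diffusers, but check `len(video)` in your own run).

```python
def clip_duration_seconds(num_frames: int, fps: int) -> float:
    """Playback length of a clip with `num_frames` frames shown at `fps` frames per second."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


if __name__ == "__main__":
    # 49 is an assumed frame count; replace it with len(video) from the script above.
    print(clip_duration_seconds(49, 8))
```

Lowering `fps` stretches the same frames over a longer playback time at the cost of choppier motion; it does not generate additional frames.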

V. Results Demo