1. Overview

1.1 Algorithm Overview

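Face swapping uses AI techniques to replace a face in a video with another person's face, either in real time or offline. Driven by advances in deep learning, the field has progressed considerably in recent years. Common face-swapping projects include: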

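- Deepfake: one of the best-known face-swapping approaches. It synthesizes face images with deep learning, usually with convolutional neural networks (CNNs), most often arranged as autoencoders and sometimes combined with generative adversarial networks (GANs). It captures facial features well, but results can show unnatural expressions or lighting that does not match the scene.

- Face2Face: a real-time facial capture and reenactment method that transfers the facial expressions of a source face (from an image or video) onto a target face in real time. It handles fine facial detail and suits video calls, online education, games, and similar applications.

- FaceFusion: an open-source AI face-swapping tool that places one person's face onto another person's body while keeping facial expressions and movements in sync.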

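AI face swapping has broad applications in entertainment, film production, education, and other fields. It also raises ethical and legal challenges, because it can be used to fabricate audio and video for fraud, defamation, and other illegal acts. It must therefore be used with care and in compliance with ethical and legal norms.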

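1.2 Implementation Pipeline

The face-swapping pipeline is shown below. It is a complete end-to-end project covering face detection, facial landmark extraction, face feature extraction and alignment, face swapping, and face image restoration.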

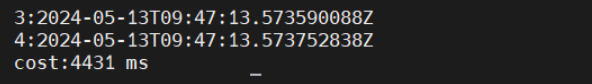

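1.3 Current Challenges and Development Environment

The models involved are computationally expensive in forward inference, so keeping the video sharp while reaching real-time speed requires GPU inference. To push inference speed further, C++ was chosen as the development language. The code was tested on both an RTX 3080 and an RTX 4090 with CUDA 11.7, cuDNN 8.5, and onnxruntime-gpu 1.12.1. On the RTX 4090, with video at 720*1080 resolution, one frame takes about 40 ms from inference through restoration. At 25 frames per second, which allows 40 ms per frame, this achieves real-time inference.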
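The real-time claim is simple arithmetic: at 25 frames per second each frame has a budget of 1000 / 25 = 40 ms. A small sketch of that check follows. It assumes frames are processed one after another with no pipelining between stages, and the helper names are illustrative.

```cpp
#include <cassert>

// Frames per second achievable when one frame (detection -> swap -> restoration)
// takes ms_per_frame milliseconds end to end.
double achievable_fps(double ms_per_frame) {
    assert(ms_per_frame > 0.0);
    return 1000.0 / ms_per_frame;
}

// A sequential pipeline keeps up with a stream if its per-frame latency
// fits inside the frame interval of the video.
bool keeps_up(double ms_per_frame, double video_fps) {
    assert(video_fps > 0.0);
    return ms_per_frame <= 1000.0 / video_fps;
}
```

For example, `keeps_up(40.0, 25.0)` is true, while a 50 ms pipeline would drop frames on a 25 fps stream.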

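2. Face Detection

2.1 Face Detection

Face detection locates faces in an image or video. Its goal is to determine whether an image contains faces and to mark each face's position with a bounding box. It is usually a preprocessing step for other face tasks such as face recognition, expression analysis, and facial feature extraction. Its output is one or more rectangular bounding boxes giving the face positions.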

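An ideal face detector should be:

- Fast: preferably real-time (at least 1 FPS).

- Accurate: it should detect only faces (no false positives) and detect every face (no false negatives).

- Robust: it should detect faces across different poses, rotations, lighting conditions, and so on.

- Resource-aware: it should use all available resources, for example a GPU when possible and color (RGB) input.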

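2.2 Facial Landmark Detection

In face-related computer vision, facial landmark detection is an important task. It underpins applications such as face rectification, expression analysis, pose estimation, face recognition, and face beautification.

Facial landmark datasets commonly use 5, 15, 68, 96, 98, 106, or 186 points. For example, the widely used 68-point detector in Dlib splits landmarks into inner-face points and contour points: 51 inner points cover the eyebrows, eyes, nose, and mouth, and 17 points trace the face contour. This face-swapping project needs only 5 landmarks: the two eye centers, the nose tip, and the two mouth corners.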
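If a detector only provides Dlib-style 68-point landmarks, the five points can be derived from them. The sketch below assumes the standard iBUG 68-point indexing (0-based: eyes 36-47, nose tip 30, mouth corners 48 and 54) and returns the points in the same left-to-right order as the 5-point alignment templates used in sections 3.2 and 4.2. `Pt`, `mean_of`, and `five_from_68` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <vector>

struct Pt { float x, y; };

// Mean of landmarks first..last (inclusive, 0-based iBUG-68 indices).
Pt mean_of(const std::vector<Pt>& p, int first, int last) {
    Pt m{0.f, 0.f};
    for (int i = first; i <= last; ++i) { m.x += p[i].x; m.y += p[i].y; }
    const float n = static_cast<float>(last - first + 1);
    return {m.x / n, m.y / n};
}

// Reduce 68 landmarks to: two eye centers, nose tip, two mouth corners.
std::array<Pt, 5> five_from_68(const std::vector<Pt>& p68) {
    assert(p68.size() == 68);
    std::array<Pt, 5> out{{
        mean_of(p68, 36, 41),  // eye on the image-left side (points 36-41)
        mean_of(p68, 42, 47),  // eye on the image-right side (points 42-47)
        p68[30],               // nose tip
        p68[48],               // mouth corner on the image-left side
        p68[54],               // mouth corner on the image-right side
    }};
    return out;
}
```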

2.3 Yolov5 Face

Project repository: https://github.com/deepcam-cn/yolov5-face

Main contributions

(1) A landmark regression head is added to the YOLOv5 network, trained with the Wing loss.

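(2) The Focus layer of YOLOv5 is replaced with a Stem block, which improves the network's generalization and reduces computational complexity without hurting accuracy.

(3) The SPP block is modified to use smaller kernels, which makes YOLOv5 better suited to face detection and improves detection accuracy.

(4) A P6 output block with stride 64 is added, which improves the detection of large faces.

(5) The authors found that some data augmentation methods used for general object detection, including up-down flipping and Mosaic, are unsuitable for face detection. Removing up-down flipping improves performance. Mosaic hurts performance when small images are used, but works well when small faces are ignored. Random cropping helps.

(6) Two ultra-lightweight models based on ShuffleNetV2 are designed. Their backbone differs substantially from the CSP network. These models are very small and achieve state-of-the-art performance on embedded and mobile devices.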
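Contribution (1) mentions the Wing loss. As published by Feng et al. (CVPR 2018), it is logarithmic for small errors and L1-like for large ones: wing(x) = w ln(1 + |x| / eps) when |x| < w, otherwise |x| - C, where C = w - w ln(1 + w / eps) joins the two pieces. A sketch for a single coordinate error follows; the values w = 10 and eps = 2 in the test are illustrative, not necessarily those used by YOLOv5-Face.

```cpp
#include <cassert>
#include <cmath>

// Wing loss for one landmark coordinate error x.
// Log-shaped for |x| < w (amplifies small and medium errors), linear beyond w.
double wing_loss(double x, double w, double eps) {
    assert(w > 0.0 && eps > 0.0);
    const double ax = std::fabs(x);
    // C makes the two pieces meet at |x| == w.
    const double C = w - w * std::log(1.0 + w / eps);
    return ax < w ? w * std::log(1.0 + ax / eps) : ax - C;
}
```

The total landmark loss is the sum of this term over all ten coordinates of the five points.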

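Network structure

A P6 output block with stride = 64 is added to improve the detection of large faces. (Most earlier face detectors focused on small faces; the authors also improve large-face detection to raise overall performance.) The P6 feature map is 10x10 for a 640x640 input.

A key module named CBS is defined, consisting of a Conv layer, a BN layer, and a SiLU [37] activation function. The CBS block is used in many other modules.

The head outputs the bounding box (bbox), confidence (conf), class (cls), and five landmarks. The landmarks are the authors' addition to YOLOv5, turning it into a face detector with landmark output. Without them, the last dimension would be 6 instead of 16 (4 box values + 1 confidence + 1 class, versus 4 + 1 + 10 landmark values + 1). Per anchor, the output sizes are 80x80x16 for P3, 40x40x16 for P4, 20x20x16 for P5, and 10x10x16 for the optional P6; the actual sizes are these multiplied by the number of anchors.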
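The per-level sizes above determine how many rows the exported model returns. Assuming three anchors per level (the YOLOv5 default) and a 640x640 input, P3 to P5 give (80x80 + 40x40 + 20x20) x 3 = 25200 rows, and adding P6 gives 25500. The sketch below computes this and names the 16 columns in the order that `generate_bboxes_kps` in section 2.5 reads them. `num_output_rows` and `RowField` are illustrative names.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Rows in the concatenated (1, n, 16) output: one row per anchor per grid cell.
std::int64_t num_output_rows(std::int64_t height, std::int64_t width,
                             const std::vector<std::int64_t>& strides,
                             std::int64_t anchors_per_cell) {
    std::int64_t n = 0;
    for (std::int64_t s : strides) {
        assert(s > 0 && height % s == 0 && width % s == 0);
        n += (height / s) * (width / s) * anchors_per_cell;
    }
    return n;
}

// Column layout of one 16-value row.
enum RowField : int {
    kCx = 0, kCy = 1, kW = 2, kH = 3,  // box center and size, in network-input pixels
    kObj = 4,                          // objectness confidence
    kLandmarks = 5,                    // 10 values: x0, y0, ..., x4, y4
    kCls = 15,                         // face class score
    kRowSize = 16
};
```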

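A Stem block [38] replaces the original Focus layer of YOLOv5; the authors list introducing the Stem into YOLOv5 for face detection as one of their innovations.

It improves the network's generalization and reduces computational complexity without degrading performance.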

Within the Stem, the 2nd and 4th CBS are 1x1 convolutions, and the 1st and 3rd CBS are 3x3 convolutions with stride 2. After the Stem, a 640x640 input becomes 160x160.
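The 640 to 160 reduction follows from the convolution output-size formula out = floor((in + 2p - k) / s) + 1. The sketch below tracks only the spatial size through the four CBS layers listed above and assumes a padding of k / 2 for each convolution; the function names are illustrative.

```cpp
#include <cassert>

// Output spatial size of a convolution: floor((in + 2*pad - kernel) / stride) + 1.
int conv_out(int in, int kernel, int stride, int pad) {
    assert(stride > 0 && in + 2 * pad >= kernel);
    return (in + 2 * pad - kernel) / stride + 1;
}

// Spatial size after the Stem: 3x3/s2, 1x1/s1, 3x3/s2, 1x1/s1 (padding k/2 assumed).
int stem_out(int in) {
    int s = conv_out(in, 3, 2, 1);  // CBS 1: halves the size
    s = conv_out(s, 1, 1, 0);       // CBS 2: size unchanged
    s = conv_out(s, 3, 2, 1);       // CBS 3: halves again
    s = conv_out(s, 1, 1, 0);       // CBS 4: size unchanged
    return s;
}
```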

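The CSP module (C3) is inspired by DenseNet [39]. Instead of adding the full input and output after several CNN layers, it splits the input into two halves. One half passes through a CBS block, a series of Bottleneck blocks, and then another Conv layer; the other half passes through a single Conv layer. The two are then concatenated and followed by another CBS block.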

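The SPP module is updated with smaller kernels to make YOLOv5 better suited to face detection and to improve accuracy: YOLOv5 uses kernels of 5x5, 9x9, and 13x13, while YOLO5Face uses 3x3, 5x5, and 7x7. The authors report this as one of the changes that improve face detection performance.

Bottleneck module.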

2.4 Project Deployment and Model Download

Pretrained models can be downloaded from the official Git repository and converted to ONNX with the officially published script. The model used here is the yolov5s face model. The official conversion script is as follows:

```python
"""Exports a YOLOv5 *.pt model to ONNX and TorchScript formats

Usage:
    $ export PYTHONPATH="$PWD" && python models/export.py --weights ./weights/yolov5s.pt --img 640 --batch 1
"""

import argparse
import sys
import time

sys.path.append('./')  # to run '$ python *.py' files in subdirectories

import torch
import torch.nn as nn

import models
from models.experimental import attempt_load
from utils.activations import Hardswish, SiLU
from utils.general import set_logging, check_img_size
import onnx

if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', type=str, default='./yolov5s.pt', help='weights path')  # from yolov5/models/
    parser.add_argument('--img_size', nargs='+', type=int, default=[640, 640], help='image size')  # height, width
    parser.add_argument('--batch_size', type=int, default=1, help='batch size')
    parser.add_argument('--dynamic', action='store_true', default=False, help='enable dynamic axis in onnx model')
    parser.add_argument('--onnx2pb', action='store_true', default=False, help='export onnx to pb')
    parser.add_argument('--onnx_infer', action='store_true', default=True, help='onnx infer test')
    #=======================TensorRT=================================
    parser.add_argument('--onnx2trt', action='store_true', default=False, help='export onnx to tensorrt')
    parser.add_argument('--fp16_trt', action='store_true', default=False, help='fp16 infer')
    #================================================================
    opt = parser.parse_args()
    opt.img_size *= 2 if len(opt.img_size) == 1 else 1  # expand
    print(opt)
    set_logging()
    t = time.time()

    # Load PyTorch model
    model = attempt_load(opt.weights, map_location=torch.device('cpu'))  # load FP32 model
    delattr(model.model[-1], 'anchor_grid')
    model.model[-1].anchor_grid = [torch.zeros(1)] * 3  # nl=3 number of detection layers
    model.model[-1].export_cat = True  # concatenate all levels into one (1, n, 16) output
    model.eval()
    labels = model.names

    # Checks
    gs = int(max(model.stride))  # grid size (max stride)
    opt.img_size = [check_img_size(x, gs) for x in opt.img_size]  # verify img_size are gs-multiples

    # Input
    img = torch.zeros(opt.batch_size, 3, *opt.img_size)  # image size(1,3,320,192) iDetection

    # Update model
    for k, m in model.named_modules():
        m._non_persistent_buffers_set = set()  # pytorch 1.6.0 compatibility
        if isinstance(m, models.common.Conv):  # assign export-friendly activations
            if isinstance(m.act, nn.Hardswish):
                m.act = Hardswish()
            elif isinstance(m.act, nn.SiLU):
                m.act = SiLU()
        # elif isinstance(m, models.yolo.Detect):
        #     m.forward = m.forward_export  # assign forward (optional)
        if isinstance(m, models.common.ShuffleV2Block):  # shufflenet block nn.SiLU
            for i in range(len(m.branch1)):
                if isinstance(m.branch1[i], nn.SiLU):
                    m.branch1[i] = SiLU()
            for i in range(len(m.branch2)):
                if isinstance(m.branch2[i], nn.SiLU):
                    m.branch2[i] = SiLU()
    y = model(img)  # dry run

    # ONNX export
    print('\nStarting ONNX export with onnx %s...' % onnx.__version__)
    f = opt.weights.replace('.pt', '.onnx')  # filename
    model.fuse()  # only for ONNX
    input_names = ['input']
    output_names = ['output']
    torch.onnx.export(model, img, f, verbose=False, opset_version=12,
                      input_names=input_names,
                      output_names=output_names,
                      dynamic_axes={'input': {0: 'batch'},
                                    'output': {0: 'batch'}
                                    } if opt.dynamic else None)

    # Checks
    onnx_model = onnx.load(f)  # load onnx model
    onnx.checker.check_model(onnx_model)  # check onnx model
    print('ONNX export success, saved as %s' % f)
    # Finish
    print('\nExport complete (%.2fs). Visualize with https://github.com/lutzroeder/netron.' % (time.time() - t))

    # onnx infer
    if opt.onnx_infer:
        import onnxruntime
        import numpy as np
        providers = ['CPUExecutionProvider']
        session = onnxruntime.InferenceSession(f, providers=providers)
        im = img.cpu().numpy().astype(np.float32)  # torch to numpy
        y_onnx = session.run([session.get_outputs()[0].name], {session.get_inputs()[0].name: im})[0]
        print("pred's shape is ", y_onnx.shape)
        print("max(|torch_pred - onnx_pred|) =", abs(y.cpu().numpy() - y_onnx).max())

    # TensorRT export
    if opt.onnx2trt:
        from torch2trt.trt_model import ONNX_to_TRT
        print('\nStarting TensorRT...')
        ONNX_to_TRT(onnx_model_path=f, trt_engine_path=f.replace('.onnx', '.trt'), fp16_mode=opt.fp16_trt)

    # PB export
    if opt.onnx2pb:
        print('download the newest onnx_tf by https://github.com/onnx/onnx-tensorflow/tree/master/onnx_tf')
        from onnx_tf.backend import prepare
        import tensorflow as tf

        outpb = f.replace('.onnx', '.pb')  # filename
        # strict=True maybe leads to KeyError: 'pyfunc_0', check: https://github.com/onnx/onnx-tensorflow/issues/167
        tf_rep = prepare(onnx_model, strict=False)  # prepare tf representation
        tf_rep.export_graph(outpb)  # export the model
        out_onnx = tf_rep.run(img)  # onnx output

        # check pb
        with tf.Graph().as_default():
            graph_def = tf.GraphDef()
            with open(outpb, "rb") as f:
                graph_def.ParseFromString(f.read())
                tf.import_graph_def(graph_def, name="")
            with tf.Session() as sess:
                init = tf.global_variables_initializer()
                input_x = sess.graph.get_tensor_by_name(input_names[0] + ':0')  # input
                outputs = []
                for i in output_names:
                    outputs.append(sess.graph.get_tensor_by_name(i + ':0'))
                out_pb = sess.run(outputs, feed_dict={input_x: img})

        print(f'out_pytorch {y}')
        print(f'out_onnx {out_onnx}')
        print(f'out_pb {out_pb}')
```

2.5 C++ Inference Code

Once the model has been converted to ONNX, it can be run with onnxruntime.
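Before the input tensor is built, `resize_unscale` in the code below letterboxes the frame: it scales by r = min(target_w / w, target_h / h), centers the resized image, and pads the rest. `generate_bboxes_kps` then maps detections back with x = (x_in - dw) / r. The same arithmetic without OpenCV is sketched here; `Letterbox`, `letterbox_params`, and `unletterbox` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>

struct Letterbox { float ratio; int dw, dh, new_w, new_h; };

// Scale to fit while keeping the aspect ratio, then center with padding.
Letterbox letterbox_params(int img_w, int img_h, int target_w, int target_h) {
    assert(img_w > 0 && img_h > 0);
    const float r = std::min(static_cast<float>(target_w) / img_w,
                             static_cast<float>(target_h) / img_h);
    const int new_w = static_cast<int>(img_w * r);  // floor
    const int new_h = static_cast<int>(img_h * r);  // floor
    return {r, (target_w - new_w) / 2, (target_h - new_h) / 2, new_w, new_h};
}

// Map a point from network-input coordinates back to the original frame.
void unletterbox(const Letterbox& lb, float x_in, float y_in, float& x_img, float& y_img) {
    x_img = (x_in - lb.dw) / lb.ratio;
    y_img = (y_in - lb.dh) / lb.ratio;
}
```

For a 1280x720 frame and a 640x640 input, r = 0.5, the image occupies 640x360, and 140 rows of padding are added above and below.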

```cpp
#include "YOLOV5Face.h"

YOLOV5Face::YOLOV5Face(std::string& onnx_path, unsigned int _num_threads)
{
    // Wide-string path for Windows (ORTCHAR_T == wchar_t); on Linux pass onnx_path.c_str().
    std::wstring widestr = std::wstring(onnx_path.begin(), onnx_path.end());
    // Configure the member session_options and reuse the member env (as read_model does);
    // a local Ort::Env would be destroyed while the session still depends on it.
    session_options.SetIntraOpNumThreads(static_cast<int>(_num_threads));
    OrtStatus* status = OrtSessionOptionsAppendExecutionProvider_CUDA(session_options, 0);
    if (status != nullptr) Ort::GetApi().ReleaseStatus(status);  // no CUDA: fall back to CPU
    // Options only take effect if they are set before the session is created.
    session_options.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL);
    ort_session = new Ort::Session(env, widestr.c_str(), session_options);
    Ort::AllocatorWithDefaultOptions allocator;
    // 1. input name & dims (GetInputName is the ORT 1.12 API; newer versions use GetInputNameAllocated)
    input_name = ort_session->GetInputName(0, allocator);
    input_node_names.resize(1);
    input_node_names[0] = input_name;
    Ort::TypeInfo type_info = ort_session->GetInputTypeInfo(0);
    auto tensor_info = type_info.GetTensorTypeAndShapeInfo();
    input_tensor_size = 1;
    input_node_dims = tensor_info.GetShape();
    for (unsigned int i = 0; i < input_node_dims.size(); ++i)
        input_tensor_size *= input_node_dims.at(i);
    input_values_handler.resize(input_tensor_size);
    // 2. output names & output dims
    num_outputs = ort_session->GetOutputCount();
    output_node_names.resize(num_outputs);
    for (unsigned int i = 0; i < num_outputs; ++i)
    {
        output_node_names[i] = ort_session->GetOutputName(i, allocator);
        Ort::TypeInfo output_type_info = ort_session->GetOutputTypeInfo(i);
        auto output_tensor_info = output_type_info.GetTensorTypeAndShapeInfo();
        auto output_dims = output_tensor_info.GetShape();
        output_node_dims.push_back(output_dims);
    }
}

YOLOV5Face::YOLOV5Face()
{
}

// Call before read_model(): options only apply to sessions created afterwards.
void YOLOV5Face::set_gpu(int gpu_index, int gpu_ram)
{
    std::vector<std::string> available_providers = Ort::GetAvailableProviders();
    auto cuda_available = std::find(available_providers.begin(),
                                    available_providers.end(), "CUDAExecutionProvider");
    if (gpu_index >= 0 && (cuda_available != available_providers.end()))
    {
        OrtCUDAProviderOptions cuda_options;
        cuda_options.device_id = gpu_index;
        cuda_options.arena_extend_strategy = 0;
        if (gpu_ram == -1)
        {
            cuda_options.gpu_mem_limit = ~0ULL;  // no limit
        }
        else
        {
            // gpu_ram is in GB; widen before multiplying to avoid int overflow
            cuda_options.gpu_mem_limit = static_cast<size_t>(gpu_ram) * 1024 * 1024 * 1024;
        }
        cuda_options.cudnn_conv_algo_search = OrtCudnnConvAlgoSearch::OrtCudnnConvAlgoSearchExhaustive;
        cuda_options.do_copy_in_default_stream = 1;
        session_options.AppendExecutionProvider_CUDA(cuda_options);
    }
}

void YOLOV5Face::set_num_thread(int num_thread)
{
    _num_thread = num_thread;
    session_options.SetInterOpNumThreads(num_thread);
    session_options.SetIntraOpNumThreads(num_thread);
    session_options.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL);
}

bool YOLOV5Face::read_model(const std::string model_path)
{
    try
    {
        std::wstring widestr = std::wstring(model_path.begin(), model_path.end());
        ort_session = new Ort::Session(env, widestr.c_str(), session_options);
        Ort::AllocatorWithDefaultOptions allocator;
        // 1. input name & dims
        input_name = ort_session->GetInputName(0, allocator);
        input_node_names.resize(1);
        input_node_names[0] = input_name;
        Ort::TypeInfo type_info = ort_session->GetInputTypeInfo(0);
        auto tensor_info = type_info.GetTensorTypeAndShapeInfo();
        input_tensor_size = 1;
        input_node_dims = tensor_info.GetShape();
        for (unsigned int i = 0; i < input_node_dims.size(); ++i)
            input_tensor_size *= input_node_dims.at(i);
        input_values_handler.resize(input_tensor_size);
        // 2. output names & output dims
        num_outputs = ort_session->GetOutputCount();
        output_node_names.resize(num_outputs);
        for (unsigned int i = 0; i < num_outputs; ++i)
        {
            output_node_names[i] = ort_session->GetOutputName(i, allocator);
            Ort::TypeInfo output_type_info = ort_session->GetOutputTypeInfo(i);
            auto output_tensor_info = output_type_info.GetTensorTypeAndShapeInfo();
            auto output_dims = output_tensor_info.GetShape();
            output_node_dims.push_back(output_dims);
        }
        return true;
    }
    catch (const std::exception&)
    {
        return false;
    }
}

// (x - mean) * scale on a float copy of the image.
cv::Mat normalize(const cv::Mat& mat, float mean, float scale)
{
    cv::Mat matf;
    if (mat.type() != CV_32FC3) mat.convertTo(matf, CV_32FC3);
    else matf = mat;  // reference
    return (matf - mean) * scale;
}

void normalize(const cv::Mat& inmat, cv::Mat& outmat, float mean, float scale)
{
    outmat = normalize(inmat, mean, scale);
}

void normalize_inplace(cv::Mat& mat_inplace, float mean, float scale)
{
    if (mat_inplace.type() != CV_32FC3) mat_inplace.convertTo(mat_inplace, CV_32FC3);
    normalize(mat_inplace, mat_inplace, mean, scale);
}

// Copies `mat` into `tensor_value_handler` in CHW or HWC layout and wraps it as an Ort::Value.
// Throws std::runtime_error on shape mismatch (dynamic exception specifications
// such as throw(std::runtime_error) are invalid since C++17, so none is declared).
Ort::Value create_tensor(const cv::Mat& mat,
                         const std::vector<int64_t>& tensor_dims,
                         const Ort::MemoryInfo& memory_info_handler,
                         std::vector<float>& tensor_value_handler,
                         unsigned int data_format)
{
    const unsigned int rows = mat.rows;
    const unsigned int cols = mat.cols;
    const unsigned int channels = mat.channels();
    cv::Mat mat_ref;
    if (mat.type() != CV_32FC(channels)) mat.convertTo(mat_ref, CV_32FC(channels));
    else mat_ref = mat;  // reference only. zero-time cost. support 1/2/3/... channels
    if (tensor_dims.size() != 4) throw std::runtime_error("dims mismatch.");
    if (tensor_dims.at(0) != 1) throw std::runtime_error("batch != 1");
    // CXHXW
    if (data_format == CHW)
    {
        const unsigned int target_height = tensor_dims.at(2);
        const unsigned int target_width = tensor_dims.at(3);
        const unsigned int target_channel = tensor_dims.at(1);
        const unsigned int target_tensor_size = target_channel * target_height * target_width;
        if (target_channel != channels) throw std::runtime_error("channel mismatch.");
        tensor_value_handler.resize(target_tensor_size);
        cv::Mat resize_mat_ref;
        if (target_height != rows || target_width != cols)
            cv::resize(mat_ref, resize_mat_ref, cv::Size(target_width, target_height));
        else resize_mat_ref = mat_ref;  // reference only. zero-time cost.
        std::vector<cv::Mat> mat_channels;
        cv::split(resize_mat_ref, mat_channels);
        // one contiguous plane per channel
        for (unsigned int i = 0; i < channels; ++i)
            std::memcpy(tensor_value_handler.data() + i * (target_height * target_width),
                        mat_channels.at(i).data, target_height * target_width * sizeof(float));
        return Ort::Value::CreateTensor<float>(memory_info_handler, tensor_value_handler.data(),
                                               target_tensor_size, tensor_dims.data(),
                                               tensor_dims.size());
    }
    // HXWXC
    const unsigned int target_height = tensor_dims.at(1);
    const unsigned int target_width = tensor_dims.at(2);
    const unsigned int target_channel = tensor_dims.at(3);
    const unsigned int target_tensor_size = target_channel * target_height * target_width;
    if (target_channel != channels) throw std::runtime_error("channel mismatch!");
    tensor_value_handler.resize(target_tensor_size);
    cv::Mat resize_mat_ref;
    if (target_height != rows || target_width != cols)
        cv::resize(mat_ref, resize_mat_ref, cv::Size(target_width, target_height));
    else resize_mat_ref = mat_ref;  // reference only. zero-time cost.
    std::memcpy(tensor_value_handler.data(), resize_mat_ref.data, target_tensor_size * sizeof(float));
    return Ort::Value::CreateTensor<float>(memory_info_handler, tensor_value_handler.data(),
                                           target_tensor_size, tensor_dims.data(),
                                           tensor_dims.size());
}

// BGR -> RGB, normalize, pack as a 1x3xHxW float tensor.
Ort::Value YOLOV5Face::transform(const cv::Mat& mat_rs)
{
    cv::Mat canvas;
    cv::cvtColor(mat_rs, canvas, cv::COLOR_BGR2RGB);
    normalize_inplace(canvas, mean_val, scale_val);  // float32
    return create_tensor(canvas, input_node_dims, memory_info_handler,
                         input_values_handler, CHW);
}

// Letterbox: resize keeping the aspect ratio, center on a black canvas, record ratio/padding.
void YOLOV5Face::resize_unscale(const cv::Mat& mat, cv::Mat& mat_rs,
                                int target_height, int target_width, ScaleParams& scale_params)
{
    if (mat.empty()) return;
    int img_height = static_cast<int>(mat.rows);
    int img_width = static_cast<int>(mat.cols);
    mat_rs = cv::Mat(target_height, target_width, CV_8UC3, cv::Scalar(0, 0, 0));
    // scale ratio (new / old) new_shape(h,w)
    float w_r = (float)target_width / (float)img_width;
    float h_r = (float)target_height / (float)img_height;
    float r = std::min(w_r, h_r);
    // compute padding
    int new_unpad_w = static_cast<int>((float)img_width * r);   // floor
    int new_unpad_h = static_cast<int>((float)img_height * r);  // floor
    int pad_w = target_width - new_unpad_w;   // >=0
    int pad_h = target_height - new_unpad_h;  // >=0
    int dw = pad_w / 2;
    int dh = pad_h / 2;
    // resize with unscaling
    cv::Mat new_unpad_mat;
    cv::resize(mat, new_unpad_mat, cv::Size(new_unpad_w, new_unpad_h));
    new_unpad_mat.copyTo(mat_rs(cv::Rect(dw, dh, new_unpad_w, new_unpad_h)));
    // record scale params.
    scale_params.ratio = r;
    scale_params.dw = dw;
    scale_params.dh = dh;
    scale_params.flag = true;
}

// Decode (1, n, 16) rows, filter by score, and map boxes/landmarks back to the original frame.
void YOLOV5Face::generate_bboxes_kps(const ScaleParams& scale_params,
                                     std::vector<lite::types::BoxfWithLandmarks>& bbox_kps_collection,
                                     std::vector<Ort::Value>& output_tensors, float score_threshold,
                                     float img_height, float img_width)
{
    Ort::Value& output = output_tensors.at(0);  // (1,n,16=4+1+10+1)
    auto output_dims = output_node_dims.at(0);  // (1,n,16)
    const unsigned int num_anchors = output_dims.at(1);  // n
    const float* output_ptr = output.GetTensorMutableData<float>();
    float r_ = scale_params.ratio;
    int dw_ = scale_params.dw;
    int dh_ = scale_params.dh;
    bbox_kps_collection.clear();
    unsigned int count = 0;
    for (unsigned int i = 0; i < num_anchors; ++i)
    {
        const float* row_ptr = output_ptr + i * 16;
        float obj_conf = row_ptr[4];
        if (obj_conf < score_threshold) continue;  // filter first.
        float cls_conf = row_ptr[15];
        if (cls_conf < score_threshold) continue;  // face score.
        // bounding box (center/size in letterboxed input coordinates)
        const float* offsets = row_ptr;
        float cx = offsets[0];
        float cy = offsets[1];
        float w = offsets[2];
        float h = offsets[3];
        lite::types::BoxfWithLandmarks box_kps;
        float x1 = ((cx - w / 2.f) - (float)dw_) / r_;
        float y1 = ((cy - h / 2.f) - (float)dh_) / r_;
        float x2 = ((cx + w / 2.f) - (float)dw_) / r_;
        float y2 = ((cy + h / 2.f) - (float)dh_) / r_;
        box_kps.box.x1 = std::max(0.f, x1);
        box_kps.box.y1 = std::max(0.f, y1);
        box_kps.box.x2 = std::min(img_width - 1.f, x2);
        box_kps.box.y2 = std::min(img_height - 1.f, y2);
        box_kps.box.score = cls_conf;
        box_kps.box.label = 1;
        box_kps.box.label_text = "face";
        box_kps.box.flag = true;
        // landmarks: 5 (x, y) pairs starting at column 5
        const float* kps_offsets = row_ptr + 5;
        for (unsigned int j = 0; j < 10; j += 2)
        {
            cv::Point2f kps;
            float kps_x = (kps_offsets[j] - (float)dw_) / r_;
            float kps_y = (kps_offsets[j + 1] - (float)dh_) / r_;
            kps.x = std::min(std::max(0.f, kps_x), img_width - 1.f);
            kps.y = std::min(std::max(0.f, kps_y), img_height - 1.f);
            box_kps.landmarks.points.push_back(kps);
        }
        box_kps.landmarks.flag = true;
        box_kps.flag = true;
        bbox_kps_collection.push_back(box_kps);
        count += 1;  // limit boxes for nms.
        if (count > max_nms)
            break;
    }
}

// Greedy hard NMS: keep the highest-scoring box, drop boxes overlapping it, repeat (at most topk).
void YOLOV5Face::nms_bboxes_kps(std::vector<lite::types::BoxfWithLandmarks>& input,
                                std::vector<lite::types::BoxfWithLandmarks>& output,
                                float iou_threshold, unsigned int topk)
{
    if (input.empty()) return;
    std::sort(input.begin(), input.end(),
              [](const lite::types::BoxfWithLandmarks& a, const lite::types::BoxfWithLandmarks& b)
              { return a.box.score > b.box.score; });
    const unsigned int box_num = input.size();
    std::vector<int> merged(box_num, 0);
    unsigned int count = 0;
    for (unsigned int i = 0; i < box_num; ++i)
    {
        if (merged[i]) continue;
        std::vector<lite::types::BoxfWithLandmarks> buf;
        buf.push_back(input[i]);
        merged[i] = 1;
        for (unsigned int j = i + 1; j < box_num; ++j)
        {
            if (merged[j]) continue;
            float iou = static_cast<float>(input[i].box.iou_of(input[j].box));
            if (iou > iou_threshold)
            {
                merged[j] = 1;
                buf.push_back(input[j]);
            }
        }
        output.push_back(buf[0]);
        // keep top k
        count += 1;
        if (count >= topk)
            break;
    }
}

void YOLOV5Face::detect(const cv::Mat& mat, std::vector<lite::types::BoxfWithLandmarks>& detected_boxes_kps,
                        float score_threshold, float iou_threshold, unsigned int topk)
{
    if (mat.empty()) return;
    auto img_height = static_cast<float>(mat.rows);
    auto img_width = static_cast<float>(mat.cols);
    const int target_height = (int)input_node_dims.at(2);
    const int target_width = (int)input_node_dims.at(3);
    // resize & unscale
    cv::Mat mat_rs;
    ScaleParams scale_params;
    this->resize_unscale(mat, mat_rs, target_height, target_width, scale_params);
    // 1. make input tensor
    Ort::Value input_tensor = this->transform(mat_rs);
    // 2. inference scores & boxes.
    auto output_tensors = ort_session->Run(
        Ort::RunOptions{ nullptr }, input_node_names.data(),
        &input_tensor, 1, output_node_names.data(), num_outputs);
    // 3. rescale & exclude.
    std::vector<lite::types::BoxfWithLandmarks> bbox_kps_collection;
    this->generate_bboxes_kps(scale_params, bbox_kps_collection, output_tensors,
                              score_threshold, img_height, img_width);
    // 4. hard nms with topk.
    this->nms_bboxes_kps(bbox_kps_collection, detected_boxes_kps, iou_threshold, topk);
}

// Returns at most one face: the only face, or (position == 1) the largest face with score >= 0.7.
void YOLOV5Face::detect(const cv::Mat& cv_src, std::vector<FaceInfo>& face_infos, int position,
                        float score_threshold, float iou_threshold, unsigned int topk)
{
    if (cv_src.empty()) return;
    auto img_height = static_cast<float>(cv_src.rows);
    auto img_width = static_cast<float>(cv_src.cols);
    const int target_height = (int)input_node_dims.at(2);
    const int target_width = (int)input_node_dims.at(3);
    // resize & unscale
    cv::Mat mat_rs;
    ScaleParams scale_params;
    this->resize_unscale(cv_src, mat_rs, target_height, target_width, scale_params);
    // 1. make input tensor
    Ort::Value input_tensor = this->transform(mat_rs);
    // 2. inference scores & boxes.
    auto output_tensors = ort_session->Run(
        Ort::RunOptions{ nullptr }, input_node_names.data(),
        &input_tensor, 1, output_node_names.data(), num_outputs);
    // 3. rescale & exclude.
    std::vector<lite::types::BoxfWithLandmarks> bbox_kps_collection;
    this->generate_bboxes_kps(scale_params, bbox_kps_collection, output_tensors,
                              score_threshold, img_height, img_width);
    std::vector<lite::types::BoxfWithLandmarks> detected_boxes_kps;
    // 4. hard nms with topk.
    this->nms_bboxes_kps(bbox_kps_collection, detected_boxes_kps, iou_threshold, topk);
    if (detected_boxes_kps.size() == 1)
    {
        FaceInfo info;
        info.bbox.xmin = detected_boxes_kps[0].box.rect().x;
        info.bbox.ymin = detected_boxes_kps[0].box.rect().y;
        info.bbox.xmax = detected_boxes_kps[0].box.rect().br().x;
        info.bbox.ymax = detected_boxes_kps[0].box.rect().br().y;
        info.points = detected_boxes_kps[0].landmarks.points;
        face_infos.push_back(info);
    }
    if (detected_boxes_kps.size() > 1 && position == 1)
    {
        // pick the largest confident face (index 0 if none reaches 0.7)
        int arec = 0;
        int index = 0;
        for (int i = 0; i < (int)detected_boxes_kps.size(); ++i)
        {
            if (detected_boxes_kps[i].box.score >= 0.7)
            {
                if (arec <= detected_boxes_kps[i].box.rect().area())
                {
                    arec = detected_boxes_kps[i].box.rect().area();
                    index = i;
                }
            }
        }
        FaceInfo info;
        info.bbox.xmin = detected_boxes_kps[index].box.rect().x;
        info.bbox.ymin = detected_boxes_kps[index].box.rect().y;
        info.bbox.xmax = detected_boxes_kps[index].box.rect().br().x;
        info.bbox.ymax = detected_boxes_kps[index].box.rect().br().y;
        info.points = detected_boxes_kps[index].landmarks.points;
        face_infos.push_back(info);
    }
}
```
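`nms_bboxes_kps` above performs greedy hard NMS with a top-k limit. The IoU helper it calls (`box.iou_of`) is not shown, so the stand-alone sketch below assumes plain intersection-over-union on continuous coordinates. `Box`, `iou`, and `hard_nms` are illustrative names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Box { float x1, y1, x2, y2, score; };

// Intersection over union of two axis-aligned boxes.
float iou(const Box& a, const Box& b) {
    const float iw = std::max(0.f, std::min(a.x2, b.x2) - std::max(a.x1, b.x1));
    const float ih = std::max(0.f, std::min(a.y2, b.y2) - std::max(a.y1, b.y1));
    const float inter = iw * ih;
    const float uni = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter;
    return uni > 0.f ? inter / uni : 0.f;
}

// Greedy hard NMS: keep the best remaining box, suppress boxes overlapping it, stop at topk.
std::vector<Box> hard_nms(std::vector<Box> boxes, float iou_threshold, unsigned topk) {
    assert(iou_threshold >= 0.f && iou_threshold <= 1.f);
    std::sort(boxes.begin(), boxes.end(),
              [](const Box& a, const Box& b) { return a.score > b.score; });
    std::vector<bool> suppressed(boxes.size(), false);
    std::vector<Box> kept;
    for (std::size_t i = 0; i < boxes.size() && kept.size() < topk; ++i) {
        if (suppressed[i]) continue;
        kept.push_back(boxes[i]);
        for (std::size_t j = i + 1; j < boxes.size(); ++j)
            if (!suppressed[j] && iou(boxes[i], boxes[j]) > iou_threshold) suppressed[j] = true;
    }
    return kept;
}
```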

The result:

3. Face Alignment

3.1 Obtaining Face Features

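ArcFace is a deep learning method for extracting face features (embeddings). It improves face recognition through its network design and, above all, its loss function, the additive angular margin loss, with the goal of more accurate and robust recognition, especially in large-scale tasks. The loss enlarges the angular margin between classes and reduces the angular variation within a class, so that features of the same identity become more compact and features of different identities become more separable. The resulting highly discriminative feature vectors can be used for face verification, face identification, and similar tasks.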

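Because of this training objective, the features ArcFace learns are highly discriminative and stay reliable under changes in lighting and expression and under partial occlusion. Further tuning of model parameters and refinements of the loss function improve accuracy and efficiency again, and ArcFace is widely used wherever face recognition is needed.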
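Two formulas make the description above concrete. During training, ArcFace replaces the target-class logit s * cos(theta) with s * cos(theta + m), where theta is the angle between the normalized feature and the normalized weight of the correct class; the other classes keep s * cos(theta_j). At inference, two embeddings are usually compared by cosine similarity. A sketch of both follows; s = 64 and m = 0.5 in the test are the values reported in the ArcFace paper and serve only as examples, and production training code also handles the case theta + m > pi, which this sketch does not.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Cosine similarity between two embeddings (e.g. 512-d vectors).
float cosine_similarity(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size() && !a.empty());
    double dot = 0.0, na = 0.0, nb = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        dot += double(a[i]) * b[i];
        na += double(a[i]) * a[i];
        nb += double(b[i]) * b[i];
    }
    assert(na > 0.0 && nb > 0.0);
    return static_cast<float>(dot / (std::sqrt(na) * std::sqrt(nb)));
}

// ArcFace target-class logit: s * cos(theta + m), with cos(theta) clamped to [-1, 1].
double arcface_target_logit(double cos_theta, double s, double m) {
    const double theta = std::acos(std::max(-1.0, std::min(1.0, cos_theta)));
    return s * std::cos(theta + m);
}
```

Adding m to the angle lowers the target logit, so the network must push same-identity features closer to their class center to reach the same loss.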

3.2 C++ Inference Code

The model can be downloaded from Facefusion-assets. It has already been converted to ONNX and can be used directly for C++ inference.
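`FaceEmbdding::preprocess` in the code below maps each 8-bit pixel to [-1, 1] with x / 127.5 - 1 and writes the channels as planar R, G, B. The same packing in plain C++ is sketched here, assuming an interleaved 8-bit BGR buffer; the function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Interleaved HWC BGR uint8 -> planar CHW RGB float, out = pixel * scale + shift.
std::vector<float> bgr_hwc_to_rgb_chw(const std::vector<std::uint8_t>& hwc, int h, int w,
                                      float scale, float shift) {
    assert(h > 0 && w > 0 && hwc.size() == static_cast<std::size_t>(h) * w * 3);
    const std::size_t plane = static_cast<std::size_t>(h) * w;
    std::vector<float> chw(3 * plane);
    for (std::size_t p = 0; p < plane; ++p)
        for (int c = 0; c < 3; ++c)  // output planes: 0 = R, 1 = G, 2 = B
            chw[c * plane + p] = hwc[p * 3 + (2 - c)] * scale + shift;  // BGR index is 2 - c
    return chw;
}
```

With scale = 1 / 127.5 and shift = -1 this reproduces the embedding model's normalization. The swap model in section 4.2 instead normalizes per channel, which corresponds to scale = 1 / (255 * std[c]) and shift = -mean[c] / std[c] for each channel.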

```cpp
#include "facerecognizer.h"
#include <cstring>  // memcpy

FaceEmbdding::FaceEmbdding()
{
}

// Call before read_model(): options only apply to sessions created afterwards.
void FaceEmbdding::set_gpu(int gpu_index, int gpu_ram)
{
    std::vector<std::string> available_providers = Ort::GetAvailableProviders();
    auto cuda_available = std::find(available_providers.begin(),
                                    available_providers.end(), "CUDAExecutionProvider");
    if (gpu_index >= 0 && (cuda_available != available_providers.end()))
    {
        OrtCUDAProviderOptions cuda_options;
        cuda_options.device_id = gpu_index;
        cuda_options.arena_extend_strategy = 0;
        if (gpu_ram == -1)
        {
            cuda_options.gpu_mem_limit = ~0ULL;  // no limit
        }
        else
        {
            // gpu_ram is in GB; widen before multiplying to avoid int overflow
            cuda_options.gpu_mem_limit = static_cast<size_t>(gpu_ram) * 1024 * 1024 * 1024;
        }
        cuda_options.cudnn_conv_algo_search = OrtCudnnConvAlgoSearch::OrtCudnnConvAlgoSearchExhaustive;
        cuda_options.do_copy_in_default_stream = 1;
        session_options.AppendExecutionProvider_CUDA(cuda_options);
    }
}

void FaceEmbdding::set_num_thread(int num_thread)
{
    _num_thread = num_thread;
    session_options.SetInterOpNumThreads(num_thread);
    session_options.SetIntraOpNumThreads(num_thread);
    session_options.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL);
}

bool FaceEmbdding::read_model(const std::string model_path)
{
    try
    {
        std::wstring widestr = std::wstring(model_path.begin(), model_path.end());
        ort_session = new Ort::Session(env, widestr.c_str(), session_options);
        // Linux: ort_session = new Ort::Session(env, model_path.c_str(), session_options);
        size_t numInputNodes = ort_session->GetInputCount();
        size_t numOutputNodes = ort_session->GetOutputCount();
        Ort::AllocatorWithDefaultOptions allocator;
        for (size_t i = 0; i < numInputNodes; i++)
        {
            input_names.push_back(ort_session->GetInputName(i, allocator));
            // ORT >= 1.13: GetInputNameAllocated(i, allocator) returns an AllocatedStringPtr;
            // keep that object alive (e.g. in a member vector) for as long as .get() is used.
            Ort::TypeInfo input_type_info = ort_session->GetInputTypeInfo(i);
            auto input_tensor_info = input_type_info.GetTensorTypeAndShapeInfo();
            auto input_dims = input_tensor_info.GetShape();
            input_node_dims.push_back(input_dims);
        }
        for (size_t i = 0; i < numOutputNodes; i++)
        {
            output_names.push_back(ort_session->GetOutputName(i, allocator));
            // ORT >= 1.13: use GetOutputNameAllocated(i, allocator) with the same lifetime caveat.
            Ort::TypeInfo output_type_info = ort_session->GetOutputTypeInfo(i);
            auto output_tensor_info = output_type_info.GetTensorTypeAndShapeInfo();
            auto output_dims = output_tensor_info.GetShape();
            output_node_dims.push_back(output_dims);
        }
        this->input_height = input_node_dims[0][2];
        this->input_width = input_node_dims[0][3];
        // ArcFace 112x112 reference positions: two eyes, nose tip, two mouth corners
        this->normed_template.emplace_back(cv::Point2f(38.29459984, 51.69630032));
        this->normed_template.emplace_back(cv::Point2f(73.53180016, 51.50140016));
        this->normed_template.emplace_back(cv::Point2f(56.0252, 71.73660032));
        this->normed_template.emplace_back(cv::Point2f(41.54929968, 92.36549952));
        this->normed_template.emplace_back(cv::Point2f(70.72989952, 92.20409968));
        return true;
    }
    catch (const std::exception&)
    {
        return false;
    }
}

// Align the face to the 112x112 template, scale pixels to [-1, 1], pack as planar RGB.
void FaceEmbdding::preprocess(const cv::Mat &cv_src, const std::vector<cv::Point2f> &face_landmark_5)
{
    cv::Mat crop_img;
    warp_face_by_face_landmark_5(cv_src, crop_img, face_landmark_5, this->normed_template, cv::Size(112, 112));
    /* Alternative:
    cv::Mat affine_matrix = cv::estimateAffinePartial2D(face_landmark_5, this->normed_template, cv::noArray(), cv::RANSAC, 100.0);
    cv::warpAffine(cv_src, crop_img, affine_matrix, cv::Size(112, 112), cv::INTER_AREA, cv::BORDER_REPLICATE); */
    std::vector<cv::Mat> bgrChannels(3);
    cv::split(crop_img, bgrChannels);
    for (int c = 0; c < 3; c++)
    {
        bgrChannels[c].convertTo(bgrChannels[c], CV_32FC1, 1 / 127.5, -1.0);  // x / 127.5 - 1
    }
    // assumes the model input is 112x112, the same size as crop_img
    const int image_area = this->input_height * this->input_width;
    this->input_image.resize(3 * image_area);
    size_t single_chn_size = image_area * sizeof(float);
    // BGR -> planar RGB
    memcpy(this->input_image.data(), (float *)bgrChannels[2].data, single_chn_size);
    memcpy(this->input_image.data() + image_area, (float *)bgrChannels[1].data, single_chn_size);
    memcpy(this->input_image.data() + image_area * 2, (float *)bgrChannels[0].data, single_chn_size);
}

// Returns the face embedding (length taken from the output shape, e.g. 512).
std::vector<float> FaceEmbdding::detect(const cv::Mat &cv_src, const std::vector<cv::Point2f> &face_landmark_5)
{
    this->preprocess(cv_src, face_landmark_5);
    std::vector<int64_t> input_img_shape = {1, 3, this->input_height, this->input_width};
    Ort::Value input_tensor_ = Ort::Value::CreateTensor<float>(memory_info_handler, this->input_image.data(),
                                                               this->input_image.size(), input_img_shape.data(),
                                                               input_img_shape.size());
    Ort::RunOptions runOptions;
    std::vector<Ort::Value> ort_outputs = this->ort_session->Run(runOptions, this->input_names.data(), &input_tensor_, 1,
                                                                 this->output_names.data(), output_names.size());
    float *pdata = ort_outputs[0].GetTensorMutableData<float>();
    const int len_feature = ort_outputs[0].GetTensorTypeAndShapeInfo().GetShape()[1];
    std::vector<float> embedding(len_feature);
    memcpy(embedding.data(), pdata, len_feature * sizeof(float));
    return embedding;
}
```
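`preprocess` relies on `warp_face_by_face_landmark_5`, whose source is not shown here; the commented-out alternative uses `cv::estimateAffinePartial2D`, which fits a 4-DOF similarity transform (rotation, uniform scale, translation) with RANSAC. As a sketch of the underlying idea only, the closed-form least-squares fit below maps the five detected landmarks onto a template without any outlier rejection. It is not necessarily what the helper does, and all names are illustrative.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

struct P2 { double x, y; };

// u = a*x - b*y + tx, v = b*x + a*y + ty  (rotation + uniform scale + translation)
struct Similarity { double a, b, tx, ty; };

// Least-squares similarity mapping src[i] -> dst[i] (no RANSAC).
template <std::size_t N>
Similarity fit_similarity(const std::array<P2, N>& src, const std::array<P2, N>& dst) {
    static_assert(N >= 2, "need at least two point pairs");
    P2 ms{0, 0}, md{0, 0};
    for (std::size_t i = 0; i < N; ++i) {
        ms.x += src[i].x; ms.y += src[i].y;
        md.x += dst[i].x; md.y += dst[i].y;
    }
    ms.x /= N; ms.y /= N; md.x /= N; md.y /= N;
    double num_a = 0, num_b = 0, den = 0;
    for (std::size_t i = 0; i < N; ++i) {  // work on centered coordinates
        const double x = src[i].x - ms.x, y = src[i].y - ms.y;
        const double u = dst[i].x - md.x, v = dst[i].y - md.y;
        num_a += x * u + y * v;
        num_b += x * v - y * u;
        den += x * x + y * y;
    }
    assert(den > 0.0);
    const double a = num_a / den, b = num_b / den;
    return {a, b, md.x - (a * ms.x - b * ms.y), md.y - (b * ms.x + a * ms.y)};
}

P2 apply_similarity(const Similarity& t, P2 p) {
    return {t.a * p.x - t.b * p.y + t.tx, t.b * p.x + t.a * p.y + t.ty};
}
```

The fitted parameters form the 2x3 matrix [[a, -b, tx], [b, a, ty]], which is the layout `cv::warpAffine` expects.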

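4. Face Swapping

4.1 Technical Overview

Face swapping, also called face exchange or face replacement, uses image or video editing techniques to replace one person's facial features with another's. It is widely used in entertainment, film production, security surveillance, and identity verification, but it also raises privacy and ethical concerns.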

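(1) Technical basis:

- Face swapping is usually based on deep learning, especially generative adversarial networks (GANs), which can learn and imitate the features of real faces.

(2) Key components:

- Dataset: a large number of face images is needed to train the model so that it captures different expressions, lighting conditions, and poses.

- Preprocessing: face detection, alignment, and normalization, so that the input suits the model.

- Model training: a deep learning framework is used to train the model to generate realistic face images.

(3) Generative adversarial networks (GANs):

- A GAN consists of two networks, a generator and a discriminator. The generator creates realistic face images and the discriminator judges whether an image is real. The two compete, which pushes the generator to keep improving.

(4) Application scenarios:

- In film and video production, face swapping can replace an actor's face or create virtual characters.

- In security surveillance, it can help identify and track suspects.

- In identity verification systems, it can improve the accuracy of biometric recognition.

(5) Ethical and privacy issues:

- Face swapping can be used to create fake content such as "deepfakes", which raises legal and moral controversy.

- It can infringe on personal privacy, especially when someone's likeness is used without consent.

(6) Technical challenges:

- Keeping generated faces realistic under different lighting and viewing angles.

- Keeping consecutive video frames consistent to avoid flicker and other unnatural effects.

- The speed of forward inference in deployment.

(7) Future development:

- As the technology advances, face swapping will become more refined and realistic.

- Regulations and detection techniques are also evolving to address the risks that face swapping brings.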
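Item (3) above describes the generator and discriminator competing. In the original GAN formulation (Goodfellow et al., 2014) this competition is the minimax objective below, where D(x) is the discriminator's estimated probability that x is real and G(z) is an image generated from noise z. Practical face-swapping models usually add further loss terms, and this article does not state how the models it uses were trained.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$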

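4.2 Code Implementation

The face-swapping model can be downloaded from Facefusion-assets. It has already been converted to ONNX and comes in two versions, the original model and an FP16 (half-precision) model. The FP16 model runs faster than the original but gives slightly worse results. After downloading, the model can be used directly for C++ inference.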
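`SwapFace::read_model` below also reads a `len_feature x len_feature` float matrix from `matrix_path`; how that matrix is applied lies outside this excerpt. In InsightFace's reference inswapper code, the source embedding is multiplied by a matrix stored in the model (`emap`) and then L2-normalized before being fed to the swap network. Assuming the matrix here plays the same role and is stored row-major, that step looks like the sketch below; `project_embedding` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// latent = normalize(embedding * M), M stored row-major as n x n floats (assumed layout).
std::vector<float> project_embedding(const std::vector<float>& emb, const std::vector<float>& M) {
    const std::size_t n = emb.size();
    assert(n > 0 && M.size() == n * n);
    std::vector<float> out(n, 0.f);
    for (std::size_t j = 0; j < n; ++j)
        for (std::size_t i = 0; i < n; ++i)
            out[j] += emb[i] * M[i * n + j];  // row vector times matrix
    double norm = 0.0;
    for (float v : out) norm += double(v) * v;
    norm = std::sqrt(norm);
    assert(norm > 0.0);
    for (float& v : out) v = static_cast<float>(v / norm);  // L2-normalize
    return out;
}
```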

#include"faceswap.h"

SwapFace::SwapFace()

{

}

SwapFace::~SwapFace()

{

delete[] this->model_matrix;

this->model_matrix = nullptr;

this->normed_template.clear();

}

void SwapFace::set_gpu(int gpu_index, int gpu_ram)

{

std::vector<std::string> available_providers = Ort::GetAvailableProviders();

auto cuda_available = std::find(available_providers.begin(),

available_providers.end(), "CUDAExecutionProvider");

if (gpu_index >= 0 && (cuda_available != available_providers.end()))

{

OrtCUDAProviderOptions cuda_options;

cuda_options.device_id = gpu_index;

cuda_options.arena_extend_strategy = 0;

if (gpu_ram == -1)

{

cuda_options.gpu_mem_limit = ~0ULL;

}

else

{

cuda_options.gpu_mem_limit = static_cast<size_t>(gpu_ram) * 1024 * 1024 * 1024; // gpu_ram in GB; widen first to avoid int overflow

}

cuda_options.cudnn_conv_algo_search = OrtCudnnConvAlgoSearch::OrtCudnnConvAlgoSearchExhaustive;

cuda_options.do_copy_in_default_stream = 1;

session_options.AppendExecutionProvider_CUDA(cuda_options);

}

}

void SwapFace::set_num_thread(int num_thread)

{

_num_thread = num_thread;

session_options.SetInterOpNumThreads(num_thread);

session_options.SetIntraOpNumThreads(num_thread);

session_options.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL);

}

bool SwapFace::read_model(const std::string model_path,std::string matrix_path)
{
    try
    {
        // Windows build: ORTCHAR_T is wchar_t, so the path is widened (works for ASCII paths only).
        // On Linux ORTCHAR_T is char; pass model_path.c_str() instead.
        std::wstring widestr = std::wstring(model_path.begin(), model_path.end());
        ort_session = new Ort::Session(env, widestr.c_str(), session_options);
        size_t numInputNodes = ort_session->GetInputCount();
        size_t numOutputNodes = ort_session->GetOutputCount();
        Ort::AllocatorWithDefaultOptions allocator;
        for (size_t i = 0; i < numInputNodes; i++)
        {
            // ONNX Runtime 1.12 API. Newer releases use GetInputNameAllocated instead; keep the returned
            // AllocatedStringPtr alive (e.g. in a member vector) for as long as input_names is used,
            // because storing only .get() of a temporary leaves a dangling pointer.
            input_names.push_back(ort_session->GetInputName(i, allocator));
            Ort::TypeInfo input_type_info = ort_session->GetInputTypeInfo(i);
            auto input_tensor_info = input_type_info.GetTensorTypeAndShapeInfo();
            auto input_dims = input_tensor_info.GetShape();
            input_node_dims.push_back(input_dims);
        }
        for (size_t i = 0; i < numOutputNodes; i++)
        {
            // Newer releases: GetOutputNameAllocated, with the same lifetime caveat
            output_names.push_back(ort_session->GetOutputName(i, allocator));
            Ort::TypeInfo output_type_info = ort_session->GetOutputTypeInfo(i);
            auto output_tensor_info = output_type_info.GetTensorTypeAndShapeInfo();
            auto output_dims = output_tensor_info.GetShape();
            output_node_dims.push_back(output_dims);
        }
        this->input_height = input_node_dims[0][2];
        this->input_width = input_node_dims[0][3];

        // Projection matrix for the source embedding: len_feature x len_feature raw float32 values
        const int length = this->len_feature * this->len_feature;
        this->model_matrix = new float[length];
        FILE* fp = fopen(matrix_path.c_str(), "rb");
        if (fp == nullptr)
        {
            return false;
        }
        const size_t n_read = fread(this->model_matrix, sizeof(float), length, fp);
        fclose(fp);
        if (n_read != static_cast<size_t>(length))
        {
            return false;
        }

        // 5-point alignment template in pixel coordinates of the 128x128 model input
        this->normed_template.emplace_back(cv::Point2f(46.29459968, 51.69629952));
        this->normed_template.emplace_back(cv::Point2f(81.53180032, 51.50140032));
        this->normed_template.emplace_back(cv::Point2f(64.02519936, 71.73660032));
        this->normed_template.emplace_back(cv::Point2f(49.54930048, 92.36550016));
        this->normed_template.emplace_back(cv::Point2f(78.72989952, 92.20409984));
        return true;
    }
    catch (const std::exception&)
    {
        // Ort::Exception derives from std::exception
        return false;
    }
}

void SwapFace::preprocess(const cv::Mat &cv_src, const std::vector<cv::Point2f> &face_landmark_5,
                          const std::vector<float> &source_face_embedding, cv::Mat& affine_matrix, cv::Mat& box_mask)
{
    // Align the target face to the 128x128 template and build the blending mask
    cv::Mat crop_img;
    affine_matrix = warp_face_by_face_landmark_5(cv_src, crop_img, face_landmark_5, this->normed_template, cv::Size(128, 128));
    const int crop_size[2] = {crop_img.cols, crop_img.rows};
    box_mask = create_static_box_mask(crop_size, this->FACE_MASK_BLUR, this->FACE_MASK_PADDING);
    // The memcpy calls below assume the crop has exactly the model input size
    CV_Assert(crop_img.rows == this->input_height && crop_img.cols == this->input_width);

    // Per-channel normalisation: (x / 255 - mean) / std
    std::vector<cv::Mat> bgrChannels(3);
    split(crop_img, bgrChannels);
    for (int c = 0; c < 3; c++)
    {
        bgrChannels[c].convertTo(bgrChannels[c], CV_32FC1, 1 / (255.0*this->INSWAPPER_128_MODEL_STD[c]), -this->INSWAPPER_128_MODEL_MEAN[c]/this->INSWAPPER_128_MODEL_STD[c]);
    }

    // Pack into a planar (CHW) buffer in RGB order; OpenCV stores BGR, so the channels are copied in reverse
    const int image_area = this->input_height * this->input_width;
    this->input_image.resize(3 * image_area);
    size_t single_chn_size = image_area * sizeof(float);
    memcpy(this->input_image.data(), (float *)bgrChannels[2].data, single_chn_size);
    memcpy(this->input_image.data() + image_area, (float *)bgrChannels[1].data, single_chn_size);
    memcpy(this->input_image.data() + image_area * 2, (float *)bgrChannels[0].data, single_chn_size);

    // Source identity: L2-normalise the embedding and project it with model_matrix
    float linalg_norm = 0;
    for (int i = 0; i < this->len_feature; i++)
    {
        linalg_norm += powf(source_face_embedding[i], 2);
    }
    linalg_norm = sqrt(linalg_norm);
    this->input_embedding.resize(this->len_feature);
    for (int i = 0; i < this->len_feature; i++)
    {
        float sum = 0;
        for (int j = 0; j < this->len_feature; j++)
        {
            sum += (source_face_embedding[j] * this->model_matrix[j * this->len_feature + i]);
        }
        this->input_embedding[i] = sum / linalg_norm;
    }
}

cv::Mat SwapFace::process(const cv::Mat &cv_src, const std::vector<float> &source_face_embedding,
                          const std::vector<cv::Point2f> &target_landmark_5)
{
    cv::Mat affine_matrix;
    cv::Mat box_mask;
    this->preprocess(cv_src, target_landmark_5, source_face_embedding, affine_matrix, box_mask);

    // Two inputs: the aligned target face (1x3xHxW) and the projected source embedding (1xlen_feature).
    // CreateTensor wraps the existing buffers without copying them.
    std::vector<Ort::Value> inputs_tensor;
    std::vector<int64_t> input_img_shape = {1, 3, this->input_height, this->input_width};
    inputs_tensor.emplace_back(Ort::Value::CreateTensor<float>(memory_info_handler, this->input_image.data(), this->input_image.size(), input_img_shape.data(), input_img_shape.size()));
    std::vector<int64_t> input_embedding_shape = {1, this->len_feature};
    inputs_tensor.emplace_back(Ort::Value::CreateTensor<float>(memory_info_handler, this->input_embedding.data(), this->input_embedding.size(), input_embedding_shape.data(), input_embedding_shape.size()));

    Ort::RunOptions runOptions;
    std::vector<Ort::Value> ort_outputs = this->ort_session->Run(runOptions, this->input_names.data(), inputs_tensor.data(), inputs_tensor.size(), this->output_names.data(), output_names.size());

    // Output: planar RGB in [0, 1]; wrap each plane without copying
    float* pdata = ort_outputs[0].GetTensorMutableData<float>();
    std::vector<int64_t> outs_shape = ort_outputs[0].GetTensorTypeAndShapeInfo().GetShape();
    const int out_h = outs_shape[2];
    const int out_w = outs_shape[3];
    const int channel_step = out_h * out_w;
    cv::Mat rmat(out_h, out_w, CV_32FC1, pdata);
    cv::Mat gmat(out_h, out_w, CV_32FC1, pdata + channel_step);
    cv::Mat bmat(out_h, out_w, CV_32FC1, pdata + 2 * channel_step);
    for (cv::Mat* m : {&rmat, &gmat, &bmat})
    {
        // Scale to [0, 255] and clamp
        *m *= 255.f;
        m->setTo(0, *m < 0);
        m->setTo(255, *m > 255);
    }

    // Merge back in OpenCV's BGR order
    std::vector<cv::Mat> channel_mats = {bmat, gmat, rmat};
    cv::Mat result;
    merge(channel_mats, result);

    // Clamp the blending mask to [0, 1] and paste the swapped face back into the frame
    box_mask.setTo(0, box_mask < 0);
    box_mask.setTo(1, box_mask > 1);
    cv::Mat dstimg = paste_back(cv_src, result, box_mask, affine_matrix);
    return dstimg;
}
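SwapFace::preprocess does not pass the source embedding to the model unchanged. It divides the embedding by its L2 norm and multiplies it, as a row vector, by the len_feature × len_feature matrix that read_model() loads from matrix_path. The standalone sketch below reproduces only that arithmetic using the standard library, so it can be checked without OpenCV or ONNX Runtime. The function name project_embedding is hypothetical. The matrix is assumed to be stored row-major, which matches the indexing model_matrix[j * len_feature + i] in the original loop.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical standalone version of the embedding step in SwapFace::preprocess:
//   out[i] = (sum_j e[j] * M[j * n + i]) / ||e||_2
// M is an n x n matrix stored row-major (assumed to match how read_model()
// fills model_matrix), so this is the row vector e times M, scaled by 1 / ||e||.
std::vector<float> project_embedding(const std::vector<float>& embedding,
                                     const std::vector<float>& matrix) {
    const std::size_t n = embedding.size();
    assert(matrix.size() == n * n && "matrix must be n x n");

    // L2 norm of the source embedding.
    double sq_sum = 0.0;
    for (float v : embedding) {
        sq_sum += static_cast<double>(v) * v;
    }
    const double norm = std::sqrt(sq_sum);
    assert(norm > 0.0 && "embedding must not be all zeros");

    // Row vector times matrix, then divide by the norm.
    std::vector<float> out(n, 0.0f);
    for (std::size_t i = 0; i < n; ++i) {
        double sum = 0.0;
        for (std::size_t j = 0; j < n; ++j) {
            sum += static_cast<double>(embedding[j]) * matrix[j * n + i];
        }
        out[i] = static_cast<float>(sum / norm);
    }
    return out;
}
```

Consider the embedding {3, 4} with the 2 × 2 identity matrix. The result is just the normalised embedding, {0.6, 0.8}. The sketch accumulates in double rather than float, and it asserts on a zero norm, a case in which the original loop would divide by zero.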

5. Face Restoration

5.1 Face Restoration Algorithms

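Blind face restoration is a highly challenging task: a face image has to be reconstructed from a degraded input that lacks sufficient visual information. A restoration method must improve the mapping from the degraded input to the desired output, and it must also supply the high-quality details that are missing from the input. Codebook-based methods such as CodeFormer learn a discrete codebook prior in a small proxy space. This casts face restoration as a code-prediction task, which reduces the uncertainty and ambiguity of the restoration mapping and provides rich visual building blocks for generating detailed faces.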

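This project uses GFPGAN, an open-source project focused on real-world face restoration. Instead of a codebook, GFPGAN relies on the generative prior of a pretrained face GAN, the well-known StyleGAN2, to restore blurry, damaged, or low-resolution faces. Put simply, GFPGAN acts as a plastic surgeon for digital photos, repairing faces and improving their quality.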

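(1) Practical: GFPGAN targets real-world use rather than only theoretical advances, and it handles a wide range of real-world face images.

(2) Regular model updates: the latest models, V1.3 and V1.4, noticeably improve detail recovery and identity preservation.

(3) Cross-platform: GFPGAN runs on Windows, Linux, and other operating systems.

(4) Online demos: the project provides online demos, so users can try face restoration in the browser without downloading or installing anything.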

Project URL: https://github.com/TencentARC/GFPGAN

5.2 Model Conversion

Download the official pretrained weights, then convert the model to ONNX with the following script:

# -*- coding: utf-8 -*-
import sys
import timeit
import argparse
from collections import OrderedDict

import numpy as np
import torch
import onnxruntime

sys.path.append('.')
sys.path.append('./lib')
# Module providing the GFPGAN network definition used for export (expected in . or ./lib)
from GFPGANReconsitution import GFPGAN

parser = argparse.ArgumentParser("ONNX converter")
parser.add_argument('--src_model_path', type=str, default=None, help='src model path')
parser.add_argument('--dst_model_path', type=str, default=None, help='dst model path')
parser.add_argument('--img_size', type=int, default=512, help='img size')
args = parser.parse_args()

model_path = args.src_model_path
onnx_model_path = args.dst_model_path
img_size = args.img_size

# Export on the CPU; add .cuda() to the model and the dummy input to export on a GPU
model = GFPGAN()
x = torch.rand(1, 3, img_size, img_size)

# Load the EMA weights; map_location lets GPU-saved checkpoints load on a CPU-only machine
state_dict = torch.load(model_path, map_location='cpu')['params_ema']
new_state_dict = OrderedDict()
for k, v in state_dict.items():
    # e.g. stylegan_decoderdotto_rgbsdot1dotmodulated_convdotbias
    if "stylegan_decoder" in k:
        # Store each decoder key twice: with every '.' replaced by 'dot', and with the
        # trailing '.weight'/'.bias' restored; strict=False ignores the unused variant
        k = k.replace('.', 'dot')
        new_state_dict[k] = v
        k = k.replace('dotweight', '.weight')
        k = k.replace('dotbias', '.bias')
        new_state_dict[k] = v
    else:
        new_state_dict[k] = v
model.load_state_dict(new_state_dict, strict=False)
model.eval()

torch.onnx.export(model, x, onnx_model_path,
                  export_params=True, opset_version=11, do_constant_folding=True,
                  input_names=['input'], output_names=[])

# Optional graph optimisation; needs the onnx and onnxoptimizer packages
try:
    import onnx
    import onnxoptimizer
    original_model = onnx.load(onnx_model_path)
    passes = ['fuse_bn_into_conv']
    optimized_model = onnxoptimizer.optimize(original_model, passes)
    onnx.save(optimized_model, onnx_model_path)
except Exception as e:
    print('skip optimize:', e)

# Sanity check: run the exported model once with ONNX Runtime
ort_session = onnxruntime.InferenceSession(onnx_model_path, providers=['CPUExecutionProvider'])
for var in ort_session.get_inputs():
    print(var.name)
for var in ort_session.get_outputs():
    print(var.name)

_, _, input_h, input_w = ort_session.get_inputs()[0].shape
t = timeit.default_timer()
img = np.zeros((1, 3, input_h, input_w), dtype=np.float32)  # NCHW dummy input
ort_inputs = {ort_session.get_inputs()[0].name: img}
ort_outs = ort_session.run(None, ort_inputs)
print('onnxruntime infer time:', timeit.default_timer() - t)
print(ort_outs[0].shape)

# Usage:
# python torch2onnx.py --src_model_path ./experiments/pretrained_models/GFPGANCleanv1-NoCE-C2.pth --dst_model_path ./GFPGAN.onnx --img_size 512
# Newer model versions:
# wget https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth
# python torch2onnx.py --src_model_path ./GFPGANv1.3.pth --dst_model_path ./GFPGANv1.3.onnx --img_size 512
# GFPGANv1.4.pth is also published on the project's releases page:
# python torch2onnx.py --src_model_path ./GFPGANv1.4.pth --dst_model_path ./GFPGANv1.4.onnx --img_size 512
# python torch2onnx.py --src_model_path ./GFPGANCleanv1-NoCE-C2.pth --dst_model_path ./GFPGANv1.2.onnx --img_size 512
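The test run above feeds an all-zero tensor. For real frames, GFPGAN's own preprocessing normalises RGB pixels with mean 0.5 and std 0.5. The exported model therefore expects inputs in [-1, 1] and produces outputs in the same range. Below is a minimal sketch of that mapping and its inverse, as the C++ FaceEnhance code applies them per channel. The function names are hypothetical, and only the standard library is used.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical helpers mirroring the per-pixel arithmetic of FaceEnhance.
// Forward: convertTo(..., alpha = 1 / (255 * 0.5), beta = -1), i.e. x / 127.5 - 1,
// maps 8-bit values [0, 255] onto the model range [-1, 1].
float to_model_range(std::uint8_t pixel) {
    return static_cast<float>(pixel) / 127.5f - 1.0f;
}

// Inverse: clamp to [-1, 1], rescale with (y + 1) / 2 * 255, then round to the
// nearest integer, as the final convertTo(CV_8UC3) does.
std::uint8_t to_pixel(float model_value) {
    const float clamped = std::min(std::max(model_value, -1.0f), 1.0f);
    const float scaled = (clamped + 1.0f) * 127.5f;
    assert(scaled >= 0.0f && scaled <= 255.0f);
    return static_cast<std::uint8_t>(scaled + 0.5f);
}
```

The inverse clamps before rescaling, so out-of-range model outputs saturate to 0 or 255. Every 8-bit value survives the round trip.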

5.3 C++ Model Inference

#include"faceenhancer.h"

using namespace cv;
using namespace std;
using namespace Ort;

FaceEnhance::FaceEnhance(string model_path)
{
    // Try to add the CUDA execution provider on GPU 0. A non-null status means it could not be
    // added; release the status, and the session falls back to the CPU provider.
    OrtStatus* status = OrtSessionOptionsAppendExecutionProvider_CUDA(sessionOptions, 0);
    if (status != nullptr)
    {
        Ort::GetApi().ReleaseStatus(status);
    }
    sessionOptions.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL);

    // Windows build: ORTCHAR_T is wchar_t, so the path is widened (works for ASCII paths only)
    std::wstring widestr = std::wstring(model_path.begin(), model_path.end());
    ort_session = new Session(env, widestr.c_str(), sessionOptions);
    // On Linux ORTCHAR_T is char; use this line instead:
    //ort_session = new Session(env, model_path.c_str(), sessionOptions);

    size_t numInputNodes = ort_session->GetInputCount();
    size_t numOutputNodes = ort_session->GetOutputCount();
    AllocatorWithDefaultOptions allocator;
    for (size_t i = 0; i < numInputNodes; i++)
    {
        // ONNX Runtime 1.12 API. Newer releases use GetInputNameAllocated; keep the returned
        // AllocatedStringPtr alive for as long as input_names is used.
        input_names.push_back(ort_session->GetInputName(i, allocator));
        Ort::TypeInfo input_type_info = ort_session->GetInputTypeInfo(i);
        auto input_tensor_info = input_type_info.GetTensorTypeAndShapeInfo();
        auto input_dims = input_tensor_info.GetShape();
        input_node_dims.push_back(input_dims);
    }
    for (size_t i = 0; i < numOutputNodes; i++)
    {
        // Newer releases: GetOutputNameAllocated, with the same lifetime caveat
        output_names.push_back(ort_session->GetOutputName(i, allocator));
        Ort::TypeInfo output_type_info = ort_session->GetOutputTypeInfo(i);
        auto output_tensor_info = output_type_info.GetTensorTypeAndShapeInfo();
        auto output_dims = output_tensor_info.GetShape();
        output_node_dims.push_back(output_dims);
    }
    this->input_height = input_node_dims[0][2];
    this->input_width = input_node_dims[0][3];

    // 5-point alignment template in pixel coordinates of the 512x512 GFPGAN input
    this->normed_template.emplace_back(Point2f(192.98138112, 239.94707968));
    this->normed_template.emplace_back(Point2f(318.90276864, 240.19360256));
    this->normed_template.emplace_back(Point2f(256.63415808, 314.01934848));
    this->normed_template.emplace_back(Point2f(201.26116864, 371.410432));
    this->normed_template.emplace_back(Point2f(313.0890496, 371.1511808));
}

void FaceEnhance::preprocess(Mat srcimg, const vector<Point2f> face_landmark_5, Mat& affine_matrix, Mat& box_mask)
{
    // Align the face to the 512x512 template and build the blending mask
    Mat crop_img;
    affine_matrix = warp_face_by_face_landmark_5(srcimg, crop_img, face_landmark_5, this->normed_template, Size(512, 512));
    const int crop_size[2] = {crop_img.cols, crop_img.rows};
    box_mask = create_static_box_mask(crop_size, this->FACE_MASK_BLUR, this->FACE_MASK_PADDING);
    // The memcpy calls below assume the crop has exactly the model input size
    CV_Assert(crop_img.rows == this->input_height && crop_img.cols == this->input_width);

    // GFPGAN expects values in [-1, 1]: x / 127.5 - 1
    vector<cv::Mat> bgrChannels(3);
    split(crop_img, bgrChannels);
    for (int c = 0; c < 3; c++)
    {
        bgrChannels[c].convertTo(bgrChannels[c], CV_32FC1, 1 / (255.0*0.5), -1.0);
    }

    // Pack into a planar (CHW) buffer in RGB order; OpenCV stores BGR, so the channels are copied in reverse
    const int image_area = this->input_height * this->input_width;
    this->input_image.resize(3 * image_area);
    size_t single_chn_size = image_area * sizeof(float);
    memcpy(this->input_image.data(), (float *)bgrChannels[2].data, single_chn_size);
    memcpy(this->input_image.data() + image_area, (float *)bgrChannels[1].data, single_chn_size);
    memcpy(this->input_image.data() + image_area * 2, (float *)bgrChannels[0].data, single_chn_size);
}

Mat FaceEnhance::process(Mat target_img, const vector<Point2f> target_landmark_5)
{
    Mat affine_matrix;
    Mat box_mask;
    this->preprocess(target_img, target_landmark_5, affine_matrix, box_mask);

    // Wrap the preprocessed buffer as a 1x3xHxW tensor (no copy) and run the model
    std::vector<int64_t> input_img_shape = {1, 3, this->input_height, this->input_width};
    Value input_tensor_ = Value::CreateTensor<float>(memory_info_handler, this->input_image.data(), this->input_image.size(), input_img_shape.data(), input_img_shape.size());
    Ort::RunOptions runOptions;
    vector<Value> ort_outputs = this->ort_session->Run(runOptions, this->input_names.data(), &input_tensor_, 1, this->output_names.data(), output_names.size());

    // Output: planar RGB in [-1, 1]; wrap each plane without copying
    float* pdata = ort_outputs[0].GetTensorMutableData<float>();
    std::vector<int64_t> outs_shape = ort_outputs[0].GetTensorTypeAndShapeInfo().GetShape();
    const int out_h = outs_shape[2];
    const int out_w = outs_shape[3];
    const int channel_step = out_h * out_w;
    Mat rmat(out_h, out_w, CV_32FC1, pdata);
    Mat gmat(out_h, out_w, CV_32FC1, pdata + channel_step);
    Mat bmat(out_h, out_w, CV_32FC1, pdata + 2 * channel_step);
    for (Mat* m : {&rmat, &gmat, &bmat})
    {
        // Clamp to [-1, 1], then map to [0, 255]: (x + 1) / 2 * 255
        m->setTo(-1, *m < -1);
        m->setTo(1, *m > 1);
        *m = (*m + 1) * 127.5;
    }

    // Back to OpenCV's BGR channel order as an 8-bit image
    vector<Mat> channel_mats = {bmat, gmat, rmat};
    Mat result;
    merge(channel_mats, result);
    result.convertTo(result, CV_8UC3);

    // Clamp the mask to [0, 1], paste the restored face back, then blend_frame mixes it with the original frame
    box_mask.setTo(0, box_mask < 0);
    box_mask.setTo(1, box_mask > 1);
    Mat paste_frame = paste_back(target_img, result, box_mask, affine_matrix);
    Mat dstimg = blend_frame(target_img, paste_frame);
    return dstimg;
}
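Both preprocess functions call warp_face_by_face_landmark_5, which is not listed in this article. It maps the five detected landmarks onto normed_template and returns the affine matrix, which paste_back presumably inverts to put the result back into the frame. A common choice for this step is a similarity transform (uniform scale, rotation, translation) estimated by least squares. OpenCV's cv::estimateAffinePartial2D estimates this kind of transform, with RANSAC by default. The sketch below is the plain closed-form least-squares fit without outlier rejection, written against the standard library only. It is an assumption about what the helper computes, not its actual implementation, and the names Pt and fit_similarity are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };  // hypothetical 2D point type

// Hypothetical least-squares similarity fit mapping src[i] onto dst[i].
// Returns the 2x3 matrix {a, -b, tx, b, a, ty} in row-major order, the layout
// cv::warpAffine expects: x' = a*x - b*y + tx, y' = b*x + a*y + ty.
std::array<double, 6> fit_similarity(const std::vector<Pt>& src,
                                     const std::vector<Pt>& dst) {
    assert(src.size() == dst.size() && src.size() >= 2);
    const double n = static_cast<double>(src.size());

    // Centroids of both point sets.
    Pt cs{0.0, 0.0}, cd{0.0, 0.0};
    for (std::size_t i = 0; i < src.size(); ++i) {
        cs.x += src[i].x; cs.y += src[i].y;
        cd.x += dst[i].x; cd.y += dst[i].y;
    }
    cs.x /= n; cs.y /= n; cd.x /= n; cd.y /= n;

    // Closed-form solution on centred points:
    //   a = sum(s . d) / sum(|s|^2),  b = sum(s x d) / sum(|s|^2)
    double dot = 0.0, cross = 0.0, norm = 0.0;
    for (std::size_t i = 0; i < src.size(); ++i) {
        const double sx = src[i].x - cs.x, sy = src[i].y - cs.y;
        const double dx = dst[i].x - cd.x, dy = dst[i].y - cd.y;
        dot += sx * dx + sy * dy;
        cross += sx * dy - sy * dx;
        norm += sx * sx + sy * sy;
    }
    assert(norm > 0.0 && "source points must not all coincide");
    const double a = dot / norm;
    const double b = cross / norm;

    // Translation maps the source centroid onto the destination centroid.
    const double tx = cd.x - (a * cs.x - b * cs.y);
    const double ty = cd.y - (b * cs.x + a * cs.y);
    return {a, -b, tx, b, a, ty};
}
```

To use it, set src to the five detected landmarks and dst to normed_template. The six returned numbers then form the 2 × 3 matrix that cv::warpAffine takes. Fitting a single scale together with the rotation keeps the face from being sheared, which a full affine fit would not guarantee.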