import math

import matplotlib.pyplot as plt
import numpy as np

from opt_utils import *

# Standard gradient descent

def update_parameters_with_gd(parameters, grads, learning_rate):
    # Each layer stores one W and one b, so the layer count is half the dict size
    L = len(parameters) // 2

    for l in range(1, L + 1):
        parameters[f"W{l}"] = parameters[f"W{l}"] - learning_rate * grads[f"dW{l}"]
        parameters[f"b{l}"] = parameters[f"b{l}"] - learning_rate * grads[f"db{l}"]

    return parameters

# Mini-batch gradient descent

def random_mini_batches(X, Y, mini_batch_size=64, seed=0):
    np.random.seed(seed)
    m = X.shape[1]  # number of examples (one per column)
    mini_batches = []

    # Shuffle: apply the same column permutation to X and Y
    permutation = list(np.random.permutation(m))
    shuffled_X = X[:, permutation]
    shuffled_Y = Y[:, permutation].reshape((1, m))

    # Partition: cut the full-size mini-batches first
    num_complete_minibatches = math.floor(m / mini_batch_size)
    for k in range(0, num_complete_minibatches):
        mini_batch_X = shuffled_X[:, k * mini_batch_size:(k + 1) * mini_batch_size]
        mini_batch_Y = shuffled_Y[:, k * mini_batch_size:(k + 1) * mini_batch_size]
        mini_batch = (mini_batch_X, mini_batch_Y)
        mini_batches.append(mini_batch)

    # The last mini-batch holds the leftover examples when m is not a multiple of mini_batch_size
    if m % mini_batch_size != 0:
        mini_batch_X = shuffled_X[:, num_complete_minibatches * mini_batch_size:]
        mini_batch_Y = shuffled_Y[:, num_complete_minibatches * mini_batch_size:]
        mini_batch = (mini_batch_X, mini_batch_Y)
        mini_batches.append(mini_batch)

    return mini_batches

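# Gradient descent with momentum

def initialize_velocity(parameters):
    L = len(parameters) // 2
    v = {}

    # One zero-initialized velocity per gradient, with the same shape as its parameter
    for l in range(1, L + 1):
        v[f"dW{l}"] = np.zeros_like(parameters[f"W{l}"])
        v[f"db{l}"] = np.zeros_like(parameters[f"b{l}"])

    return v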

def update_parameters_with_momentum(parameters, grads, v, beta, learning_rate):
    L = len(parameters) // 2

    for l in range(1, L + 1):
        # Exponentially weighted average of the gradients
        v[f"dW{l}"] = beta * v[f"dW{l}"] + (1 - beta) * grads[f"dW{l}"]
        v[f"db{l}"] = beta * v[f"db{l}"] + (1 - beta) * grads[f"db{l}"]

        # Step along the averaged gradient instead of the raw one
        parameters[f"W{l}"] = parameters[f"W{l}"] - learning_rate * v[f"dW{l}"]
        parameters[f"b{l}"] = parameters[f"b{l}"] - learning_rate * v[f"db{l}"]

    return parameters, v

# Adam

def initialize_adam(parameters):
    L = len(parameters) // 2
    v = {}  # moving average of the gradients
    s = {}  # moving average of the squared gradients

    for l in range(1, L + 1):
        v[f"dW{l}"] = np.zeros_like(parameters[f"W{l}"])
        v[f"db{l}"] = np.zeros_like(parameters[f"b{l}"])
        s[f"dW{l}"] = np.zeros_like(parameters[f"W{l}"])
        s[f"db{l}"] = np.zeros_like(parameters[f"b{l}"])

    return v, s

def update_parameters_with_adam(parameters, grads, v, s, t, learning_rate=0.01,
                                beta1=0.9, beta2=0.999, epsilon=1e-8):
    L = len(parameters) // 2
    v_corrected = {}
    s_corrected = {}

    for l in range(1, L + 1):
        # First moment: moving average of the gradients, then bias correction
        v[f"dW{l}"] = beta1 * v[f"dW{l}"] + (1 - beta1) * grads[f"dW{l}"]
        v[f"db{l}"] = beta1 * v[f"db{l}"] + (1 - beta1) * grads[f"db{l}"]
        v_corrected[f"dW{l}"] = v[f"dW{l}"] / (1 - np.power(beta1, t))
        v_corrected[f"db{l}"] = v[f"db{l}"] / (1 - np.power(beta1, t))

        # Second moment: moving average of the squared gradients, then bias correction
        s[f"dW{l}"] = beta2 * s[f"dW{l}"] + (1 - beta2) * np.power(grads[f"dW{l}"], 2)
        s[f"db{l}"] = beta2 * s[f"db{l}"] + (1 - beta2) * np.power(grads[f"db{l}"], 2)
        s_corrected[f"dW{l}"] = s[f"dW{l}"] / (1 - np.power(beta2, t))
        s_corrected[f"db{l}"] = s[f"db{l}"] / (1 - np.power(beta2, t))

        # Note: epsilon is added inside the square root here; the original Adam
        # formulation adds it outside, i.e. np.sqrt(s_corrected) + epsilon
        parameters[f"W{l}"] = parameters[f"W{l}"] - learning_rate * v_corrected[f"dW{l}"] / np.sqrt(
            s_corrected[f"dW{l}"] + epsilon)
        parameters[f"b{l}"] = parameters[f"b{l}"] - learning_rate * v_corrected[f"db{l}"] / np.sqrt(
            s_corrected[f"db{l}"] + epsilon)

    return parameters, v, s

def model(X, Y, layers_dims, optimizer, learning_rate=0.0007, mini_batch_size=64, beta=0.9, beta1=0.9, beta2=0.999,
          epsilon=1e-8, num_epochs=10000, print_cost=True):
    costs = []
    t = 0  # Adam step counter, used for bias correction
    seed = 10

    parameters = initialize_parameters(layers_dims)

    # Set up the optimizer state
    if optimizer == "gd":
        pass  # plain gradient descent keeps no state
    elif optimizer == "momentum":
        v = initialize_velocity(parameters)
    elif optimizer == "adam":
        v, s = initialize_adam(parameters)
    else:
        raise ValueError("optimizer must be 'gd', 'momentum' or 'adam'")

    # One epoch is one full pass over the dataset; each epoch consists of several mini-batches
    for i in range(num_epochs):
        # Change the seed every epoch so the data is reshuffled differently each time
        seed = seed + 1
        minibatches = random_mini_batches(X, Y, mini_batch_size, seed)

        for minibatch in minibatches:
            (minibatch_X, minibatch_Y) = minibatch

            a3, caches = forward_propagation(minibatch_X, parameters)
            cost = compute_cost(a3, minibatch_Y)
            grads = backward_propagation(minibatch_X, minibatch_Y, caches)

            if optimizer == "gd":
                parameters = update_parameters_with_gd(parameters, grads, learning_rate)
            elif optimizer == "momentum":
                parameters, v = update_parameters_with_momentum(parameters, grads, v, beta, learning_rate)
            elif optimizer == "adam":
                t = t + 1
                parameters, v, s = update_parameters_with_adam(parameters, grads, v, s, t, learning_rate, beta1, beta2,
                                                               epsilon)

        # The reported cost is that of the last mini-batch in the epoch
        if print_cost and i % 1000 == 0:
            print("Cost after epoch %i: %f" % (i, cost))
        if print_cost and i % 100 == 0:
            costs.append(cost)

    return parameters, costs

def training1(train_X, train_Y, layers_dims):
    # Standard mini-batch gradient descent
    parameters, costs = model(train_X, train_Y, layers_dims, optimizer="gd")

    p = predict_dec(parameters, train_X)
    print("Accuracy: " + str(np.mean((p[0, :] == train_Y[0, :]))))

    plt.subplot(131)
    plt.plot(costs)
    plt.ylabel('cost')
    plt.xlabel('epochs (per 100)')
    plt.title("Learning rate = " + str(0.0007))
    # plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y.ravel())

def training2(train_X, train_Y, layers_dims):
    # Mini-batch gradient descent with momentum
    parameters, costs = model(train_X, train_Y, layers_dims, optimizer="momentum")

    p = predict_dec(parameters, train_X)
    print("Accuracy: " + str(np.mean((p[0, :] == train_Y[0, :]))))

    plt.subplot(132)
    plt.plot(costs)
    plt.ylabel('cost')
    plt.xlabel('epochs (per 100)')
    # plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y.ravel())

def training3(train_X, train_Y, layers_dims):
    # Mini-batch gradient descent with Adam
    parameters, costs = model(train_X, train_Y, layers_dims, optimizer="adam")

    p = predict_dec(parameters, train_X)
    print("Accuracy: " + str(np.mean((p[0, :] == train_Y[0, :]))))

    plt.subplot(133)
    plt.plot(costs)
    plt.ylabel('cost')
    plt.xlabel('epochs (per 100)')
    # plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y.ravel())

if __name__ == "__main__":
    train_X, train_Y = load_dataset()
    layers_dims = [train_X.shape[0], 5, 2, 1]

    print("mini batch with gd:")
    training1(train_X, train_Y, layers_dims)

    print("mini batch with momentum:")
    training2(train_X, train_Y, layers_dims)

    print("mini batch with adam:")
    training3(train_X, train_Y, layers_dims)

    plt.show()
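The shuffle-and-partition step in `random_mini_batches` is easy to get subtly wrong, so a quick sanity check can help. The following is a minimal sketch, not the listing's own code: `split_into_mini_batches` is a hypothetical compact re-implementation (it uses `np.random.default_rng` instead of `np.random.seed`), and the toy arrays are made up so that every column can be tracked. It checks that every example lands in exactly one mini-batch and that X and Y stay aligned.

```python
import numpy as np


def split_into_mini_batches(X, Y, mini_batch_size=64, seed=0):
    """Shuffle the columns of X and Y together, then cut them into batches.

    Hypothetical helper for illustration; same idea as random_mini_batches.
    """
    rng = np.random.default_rng(seed)
    m = X.shape[1]
    permutation = rng.permutation(m)
    shuffled_X, shuffled_Y = X[:, permutation], Y[:, permutation]
    # Slicing past the end is safe in NumPy, so the last batch is simply shorter
    return [
        (shuffled_X[:, start:start + mini_batch_size],
         shuffled_Y[:, start:start + mini_batch_size])
        for start in range(0, m, mini_batch_size)
    ]


# Toy data: 300 examples with 2 features. Row 0 of X and the label row both
# store the original column index, so alignment can be verified after shuffling.
m = 300
X = np.arange(2 * m, dtype=float).reshape(2, m)
Y = np.arange(m).reshape(1, m)

batches = split_into_mini_batches(X, Y, mini_batch_size=64, seed=11)
sizes = [batch_X.shape[1] for batch_X, _ in batches]
seen = np.sort(np.concatenate([batch_Y.ravel() for _, batch_Y in batches]))

print(sizes)                                # batch sizes, last one is the remainder
print(np.array_equal(seen, np.arange(m)))   # True if each example appears exactly once
```

With 300 examples and a batch size of 64, this gives four full batches plus one batch of 44, so each epoch in `model` performs five parameter updates.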

util (opt_utils.py):

import h5py
import matplotlib.pyplot as plt
import numpy as np
import scipy.io
import sklearn
import sklearn.datasets

def sigmoid(x):
    """
    Compute the sigmoid of x

    Arguments:
    x -- A scalar or numpy array of any size.

    Return:
    s -- sigmoid(x)
    """
    s = 1 / (1 + np.exp(-x))
    return s

def relu(x):
    """
    Compute the relu of x

    Arguments:
    x -- A scalar or numpy array of any size.

    Return:
    s -- relu(x)
    """
    s = np.maximum(0, x)
    return s

def load_params_and_grads(seed=1):
    np.random.seed(seed)

    W1 = np.random.randn(2, 3)
    b1 = np.random.randn(2, 1)
    W2 = np.random.randn(3, 3)
    b2 = np.random.randn(3, 1)

    dW1 = np.random.randn(2, 3)
    db1 = np.random.randn(2, 1)
    dW2 = np.random.randn(3, 3)
    db2 = np.random.randn(3, 1)

    return W1, b1, W2, b2, dW1, db1, dW2, db2

def initialize_parameters(layer_dims):
    """
    Arguments:
    layer_dims -- python array (list) containing the dimensions of each layer in our network

    Returns:
    parameters -- python dictionary containing your parameters "W1", "b1", ..., "WL", "bL":
                  Wl -- weight matrix of shape (layer_dims[l], layer_dims[l-1])
                  bl -- bias vector of shape (layer_dims[l], 1)

    Tips:
    - For example: with layer_dims = [2,2,1], W1's shape is (2,2), b1 is (2,1),
      W2 is (1,2) and b2 is (1,1).
    - In the for loop, use parameters['W' + str(l)] to access Wl, where l is the iterative integer.
    """
    np.random.seed(3)
    parameters = {}
    L = len(layer_dims)  # number of layers in the network, including the input layer

    for l in range(1, L):
        # Scale the weights by sqrt(2 / fan_in); start the biases at zero
        parameters['W' + str(l)] = np.random.randn(layer_dims[l], layer_dims[l-1]) * np.sqrt(2 / layer_dims[l-1])
        parameters['b' + str(l)] = np.zeros((layer_dims[l], 1))

        # Compare against shape tuples; assert(cond, msg) would always pass
        assert parameters['W' + str(l)].shape == (layer_dims[l], layer_dims[l-1])
        assert parameters['b' + str(l)].shape == (layer_dims[l], 1)

    return parameters

def compute_cost(a3, Y):
    """
    Implement the cost function

    Arguments:
    a3 -- post-activation, output of forward propagation
    Y -- "true" labels vector, same shape as a3

    Returns:
    cost -- value of the cost function
    """
    m = Y.shape[1]

    # Binary cross-entropy, averaged over the m examples
    logprobs = np.multiply(-np.log(a3), Y) + np.multiply(-np.log(1 - a3), 1 - Y)
    cost = 1. / m * np.sum(logprobs)

    return cost

def forward_propagation(X, parameters):
    """
    Implements forward propagation for a three-layer network.

    Arguments:
    X -- input dataset, of shape (input size, number of examples)
    parameters -- python dictionary containing your parameters "W1", "b1", "W2", "b2", "W3", "b3",
                  with the shapes produced by initialize_parameters():
                  Wl -- weight matrix of shape (layer_dims[l], layer_dims[l-1])
                  bl -- bias vector of shape (layer_dims[l], 1)

    Returns:
    a3 -- output of the final sigmoid activation, of shape (1, number of examples)
    cache -- tuple of intermediate values needed by backward_propagation()
    """
    # retrieve parameters
    W1 = parameters["W1"]
    b1 = parameters["b1"]
    W2 = parameters["W2"]
    b2 = parameters["b2"]
    W3 = parameters["W3"]
    b3 = parameters["b3"]

    # LINEAR -> RELU -> LINEAR -> RELU -> LINEAR -> SIGMOID
    z1 = np.dot(W1, X) + b1
    a1 = relu(z1)
    z2 = np.dot(W2, a1) + b2
    a2 = relu(z2)
    z3 = np.dot(W3, a2) + b3
    a3 = sigmoid(z3)

    cache = (z1, a1, W1, b1, z2, a2, W2, b2, z3, a3, W3, b3)

    return a3, cache

def backward_propagation(X, Y, cache):
    """
    Implement backward propagation for the three-layer network in forward_propagation().

    Arguments:
    X -- input dataset, of shape (input size, number of examples)
    Y -- true "label" vector (0 or 1 for each example)
    cache -- cache output from forward_propagation()

    Returns:
    gradients -- A dictionary with the gradients with respect to each parameter, activation and pre-activation variables
    """
    m = X.shape[1]
    (z1, a1, W1, b1, z2, a2, W2, b2, z3, a3, W3, b3) = cache

    # Output layer: sigmoid with cross-entropy gives dz3 = (a3 - Y) / m
    dz3 = 1. / m * (a3 - Y)
    dW3 = np.dot(dz3, a2.T)
    db3 = np.sum(dz3, axis=1, keepdims=True)

    # Hidden layer 2: the ReLU derivative is 1 where the activation is positive
    da2 = np.dot(W3.T, dz3)
    dz2 = np.multiply(da2, np.int64(a2 > 0))
    dW2 = np.dot(dz2, a1.T)
    db2 = np.sum(dz2, axis=1, keepdims=True)

    # Hidden layer 1
    da1 = np.dot(W2.T, dz2)
    dz1 = np.multiply(da1, np.int64(a1 > 0))
    dW1 = np.dot(dz1, X.T)
    db1 = np.sum(dz1, axis=1, keepdims=True)

    gradients = {"dz3": dz3, "dW3": dW3, "db3": db3,
                 "da2": da2, "dz2": dz2, "dW2": dW2, "db2": db2,
                 "da1": da1, "dz1": dz1, "dW1": dW1, "db1": db1}

    return gradients

def predict(X, y, parameters):
    """
    This function is used to predict the results of the three-layer neural network.

    Arguments:
    X -- data set of examples you would like to label
    y -- true labels for X, used only to report accuracy
    parameters -- parameters of the trained model

    Returns:
    p -- predictions for the given dataset X
    """
    m = X.shape[1]
    # np.int was removed from recent NumPy releases; the builtin int is equivalent here
    p = np.zeros((1, m), dtype=int)

    # Forward propagation
    a3, caches = forward_propagation(X, parameters)

    # convert probas to 0/1 predictions
    for i in range(0, a3.shape[1]):
        if a3[0, i] > 0.5:
            p[0, i] = 1
        else:
            p[0, i] = 0

    # print results
    # print("predictions: " + str(p[0,:]))
    # print("true labels: " + str(y[0,:]))
    print("Accuracy: " + str(np.mean((p[0, :] == y[0, :]))))

    return p

def load_2D_dataset():
    data = scipy.io.loadmat('datasets/data.mat')

    # Transpose so that each column is one example
    train_X = data['X'].T
    train_Y = data['y'].T
    test_X = data['Xval'].T
    test_Y = data['yval'].T

    plt.scatter(train_X[0, :], train_X[1, :], c=train_Y, s=40, cmap=plt.cm.Spectral)

    return train_X, train_Y, test_X, test_Y

def plot_decision_boundary(model, X, y):
    # Set min and max values and give it some padding
    x_min, x_max = X[0, :].min() - 1, X[0, :].max() + 1
    y_min, y_max = X[1, :].min() - 1, X[1, :].max() + 1
    h = 0.01

    # Generate a grid of points with distance h between them
    xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))

    # Predict the function value for the whole grid
    Z = model(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)

    # Plot the contour and training examples
    plt.contourf(xx, yy, Z, cmap=plt.cm.Spectral)
    plt.ylabel('x2')
    plt.xlabel('x1')
    plt.scatter(X[0, :], X[1, :], c=y, cmap=plt.cm.Spectral)
    plt.show()

def predict_dec(parameters, X):
    """
    Used for plotting decision boundary.

    Arguments:
    parameters -- python dictionary containing your parameters
    X -- input data of shape (number of features, number of examples)

    Returns
    predictions -- boolean array of predictions of our model (red: 0 / blue: 1)
    """
    # Predict using forward propagation and a classification threshold of 0.5
    a3, cache = forward_propagation(X, parameters)
    predictions = (a3 > 0.5)

    return predictions

def load_dataset():
    np.random.seed(3)
    train_X, train_Y = sklearn.datasets.make_moons(n_samples=300, noise=.2)

    # Visualize the data
    # plt.scatter(train_X[:, 0], train_X[:, 1], c=train_Y, s=40, cmap=plt.cm.Spectral);

    # Reshape to the (features, examples) and (1, examples) layout used by the network
    train_X = train_X.T
    train_Y = train_Y.reshape((1, train_Y.shape[0]))

    return train_X, train_Y
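The `np.sqrt(2 / layer_dims[l-1])` factor in `initialize_parameters` is the scaling commonly known as He initialization: weights are drawn from a standard normal and rescaled so that their standard deviation is sqrt(2 / fan_in). The sketch below checks that numerically. The layer sizes (400 inputs, 500 units) are made up for illustration and are far larger than the `[2, 5, 2, 1]` network trained here, only so that the sample standard deviation is a stable estimate.

```python
import numpy as np

rng = np.random.default_rng(3)

# Hypothetical layer sizes, chosen large so the sample statistics are stable
fan_in, fan_out = 400, 500

# Same recipe as initialize_parameters: standard normal times sqrt(2 / fan_in)
W = rng.standard_normal((fan_out, fan_in)) * np.sqrt(2 / fan_in)
expected_std = np.sqrt(2 / fan_in)

print(W.shape)
print(W.std(), expected_std)  # the two values should be close
```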

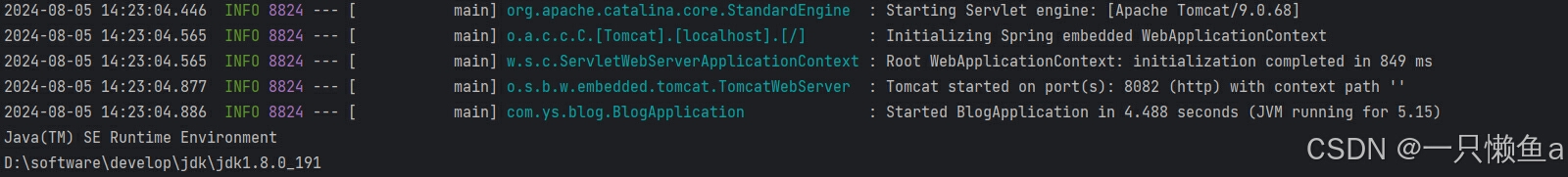

mini batch with gd:

Cost after epoch 0: 0.690736

Cost after epoch 1000: 0.685273

Cost after epoch 2000: 0.647072

Cost after epoch 3000: 0.619525

Cost after epoch 4000: 0.576584

Cost after epoch 5000: 0.607243

Cost after epoch 6000: 0.529403

Cost after epoch 7000: 0.460768

Cost after epoch 8000: 0.465586

Cost after epoch 9000: 0.464518

Accuracy: 0.7966666666666666

mini batch with momentum:

Cost after epoch 0: 0.690741

Cost after epoch 1000: 0.685341

Cost after epoch 2000: 0.647145

Cost after epoch 3000: 0.619594

Cost after epoch 4000: 0.576665

Cost after epoch 5000: 0.607324

Cost after epoch 6000: 0.529476

Cost after epoch 7000: 0.460936

Cost after epoch 8000: 0.465780

Cost after epoch 9000: 0.464740

Accuracy: 0.7966666666666666

mini batch with adam:

Cost after epoch 0: 0.690552

Cost after epoch 1000: 0.185501

Cost after epoch 2000: 0.150830

Cost after epoch 3000: 0.074454

Cost after epoch 4000: 0.125959

Cost after epoch 5000: 0.104344

Cost after epoch 6000: 0.100676

Cost after epoch 7000: 0.031652

Cost after epoch 8000: 0.111973

Cost after epoch 9000: 0.197940

Accuracy: 0.94
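One property of the update in `update_parameters_with_adam` that can be checked by hand: on the very first step (t = 1), bias correction turns the moving averages back into the raw gradient and its square, so each parameter moves by roughly `learning_rate` in the direction opposite its gradient, whatever the gradient's magnitude. The following is a minimal sketch on a plain array rather than the parameter dictionaries; `adam_step` is a hypothetical helper and the gradient values are made up.

```python
import numpy as np


def adam_step(w, grad, v, s, t, learning_rate=0.0007,
              beta1=0.9, beta2=0.999, epsilon=1e-8):
    """One Adam update on a single array (hypothetical helper for illustration)."""
    v = beta1 * v + (1 - beta1) * grad
    s = beta2 * s + (1 - beta2) * grad ** 2
    v_corrected = v / (1 - beta1 ** t)
    s_corrected = s / (1 - beta2 ** t)
    # epsilon inside the square root, matching the listing above
    w = w - learning_rate * v_corrected / np.sqrt(s_corrected + epsilon)
    return w, v, s


# Made-up gradients spanning several orders of magnitude
grad = np.array([0.5, -20.0, 0.01])
w0 = np.zeros(3)

w1, v1, s1 = adam_step(w0, grad, np.zeros(3), np.zeros(3), t=1)
first_step = w1 - w0

# Each entry is close to -sign(grad) once divided by the learning rate
print(first_step / 0.0007)
```

Plain gradient descent with the same learning rate would instead take steps proportional to the gradient, so the three coordinates above would move by very different amounts.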