YOLOv5 Improvement | Multi-Scale Feature Extraction | An Advanced Design Combining the Diverse Branch Block and Branch Fusion

- Introduction

- Principles of the paper

- Network code

- YAML configurations

- Network testing and experimental results

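Introduction

YOLOv5 (You Only Look Once) is widely used for its efficient and accurate real-time object detection. However, as vision tasks grow more complex, the limitations of a single architecture in multi-scale feature extraction become increasingly apparent. This article therefore proposes an improvement that incorporates the Diverse Branch Block (DBB) to further improve YOLOv5's performance.

Multi-scale feature extraction is an important way to improve detection performance, especially for objects of varying sizes against complex backgrounds. Pyramid structures such as the Feature Pyramid Network (FPN) and the Pyramid Attention Module (PAM) make it possible to extract finer-grained features at different scales, which strengthens the network's ability to detect objects of different sizes.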

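Combining the Diverse Branch Block

- The Diverse Branch Block (DBB) is a convolutional building block that trains with multiple branches. After training, it merges those branches into a single convolution layer, so inference costs no more than a plain convolution. Integrating DBB's core module into YOLOv5 can improve accuracy without adding inference cost. The main integration points are:

- Branch convolution fusion: following DBB, several convolution branches with different configurations are fused into one convolution for inference. This keeps the rich multi-scale features learned during training without extra computation at inference time.

- Efficient parameterization: the extra training-time parameters are folded away after training. The deployed model therefore has the same parameter count and computational complexity as the original network.

- Unifying convolution and pooling: DBB expresses convolution, average pooling, and batch normalization as linear operations that can be merged into a single convolution, which keeps the deployed model efficient.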
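To make the linear-transformation point concrete, here is a minimal NumPy sketch. It is a framework-agnostic illustration, not the DBB code itself. It checks that a convolution followed by inference-mode batch normalization equals a single convolution with a folded kernel and bias. The helpers `conv2d`, `batch_norm`, and `fuse_conv_bn` are illustrative names. The folding formula is the same one used by `transI_fusebn`.

```python
import numpy as np


def conv2d(x, w, pad=0):
    """Naive stride-1 cross-correlation with zero padding. x: (C_in, H, W), w: (C_out, C_in, K, K)."""
    x = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    k = w.shape[2]
    h, wd = x.shape[1] - k + 1, x.shape[2] - k + 1
    out = np.empty((w.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            out[:, i, j] = np.tensordot(w, x[:, i:i + k, j:j + k], axes=3)
    return out


def col(v):
    """Reshape a per-channel vector so it broadcasts over (C, H, W)."""
    return v.reshape(-1, 1, 1)


def batch_norm(z, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode BatchNorm using running statistics."""
    return col(gamma) * (z - col(mean)) / np.sqrt(col(var) + eps) + col(beta)


def fuse_conv_bn(w, gamma, beta, mean, var, eps=1e-5):
    """Fold BN into the conv: scale each output kernel and derive a bias."""
    std = np.sqrt(var + eps)
    return w * (gamma / std).reshape(-1, 1, 1, 1), beta - mean * gamma / std


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8))
w = rng.standard_normal((6, 4, 3, 3))
gamma, beta, mean = rng.standard_normal((3, 6))
var = rng.random(6) + 0.5  # variances must be positive

two_step = batch_norm(conv2d(x, w, pad=1), gamma, beta, mean, var)
w_fused, b_fused = fuse_conv_bn(w, gamma, beta, mean, var)
one_step = conv2d(x, w_fused, pad=1) + col(b_fused)
print(np.allclose(two_step, one_step))
```

The equivalence only holds with fixed (running) statistics, which is why fusion is done after training.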

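Paper: https://arxiv.org/pdf/2103.13425

Code: https://github.com/DingXiaoH/DiverseBranchBlock?tab=readme-ov-file

Once the block is added to YOLOv5, fusion removes all extra computation while the model keeps its higher performance. Few papers have used this improvement so far.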

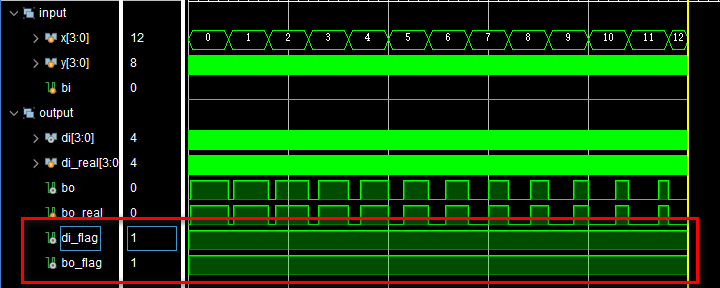

YOLOv5s_DBB summary: 412 layers, 9964797 parameters, 9964797 gradients, 23.1 GFLOPs

Fusing layers...

YOLOv5s_DBB summary: 179 layers, 7225885 parameters, 5187773 gradients, 16.4 GFLOPs
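The drop in parameters after "Fusing layers..." comes from collapsing each block's branches into one convolution. The pure-Python sketch below counts the trainable parameters of one `DiverseBranchBlock` before and after `switch_to_deploy()`, following the branch layout in the code further down. It assumes `groups=1` and the default `internal_channels_1x1_3x3` (equal to `in_channels`). The function names are illustrative. The deployed count (a K×K kernel plus one bias per output channel) matches a YOLOv5 `Conv` whose BN has been folded into a conv bias.

```python
def bn_params(c):
    """BatchNorm2d learns a weight and a bias per channel (running stats are buffers)."""
    return 2 * c


def dbb_train_params(c_in, c_out, k):
    """Trainable parameters of DiverseBranchBlock before fusion (groups=1, default internal channels)."""
    origin = c_out * c_in * k * k + bn_params(c_out)                       # dbb_origin: KxK conv + BN
    one_by_one = c_out * c_in + bn_params(c_out)                            # dbb_1x1: 1x1 conv + BN
    avg = c_out * c_in + bn_params(c_out) + bn_params(c_out)                # dbb_avg: 1x1 conv, BN, avgbn
    seq = c_in * c_in + bn_params(c_in) + c_out * c_in * k * k + bn_params(c_out)  # dbb_1x1_kxk
    return origin + one_by_one + avg + seq


def dbb_deploy_params(c_in, c_out, k):
    """After switch_to_deploy(): a single KxK conv with bias (dbb_reparam)."""
    return c_out * c_in * k * k + c_out


for c in (32, 64, 128):
    print(c, dbb_train_params(c, c, 3), dbb_deploy_params(c, c, 3))
```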

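Principles of the paper

We propose a universal building block for convolutional neural networks (ConvNets) that improves performance without any inference-time cost. The block, named Diverse Branch Block (DBB), enhances the representational capacity of a single convolution by combining diverse branches of different scales and complexities to enrich the feature space. These branches include sequences of convolutions, multi-scale convolutions, and average pooling. After training, a DBB can be equivalently converted into a single conv layer for deployment. Unlike advances in novel ConvNet architectures, DBB complicates the training-time microstructure while keeping the macro-architecture unchanged. It can therefore serve as a drop-in replacement for regular conv layers in any architecture. The model is trained to a higher level of performance and then converted back to the original inference-time structure for inference. DBB improves ConvNets on image classification (up to 1.9% higher top-1 accuracy on ImageNet), object detection, and semantic segmentation.

The Diverse Branch Block (DBB), presented at CVPR 2021, improves the performance of convolutional neural networks (CNNs) without extra inference cost. It trains a complex multi-branch structure and then converts it into a single convolution layer. The main innovations of the paper are as follows:

Main contributions

Branch convolution fusion

DBB's core idea exploits the linearity of convolution. After training, several convolution branches with different configurations are fused into a single convolution layer. This fusion keeps the feature-extraction benefits that the multi-branch structure provided during training, while reducing inference to the cost of one convolution.

Multi-branch structure design

DBB combines several kinds of branches: a K×K convolution (e.g. 3×3), a 1×1 convolution, a 1×1 convolution followed by a K×K convolution, and a 1×1 convolution followed by K×K average pooling. These diverse branches capture features at different scales and of different types, which increases the network's representational capacity.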
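The linearity argument can be checked numerically. In this minimal NumPy sketch (the helper `conv2d` is illustrative, not from the DBB code), two parallel 3×3 branches with biases produce the same output as one 3×3 convolution whose kernel and bias are the sums of the branch kernels and biases. This is the branch-addition idea that `transII_addbranch` relies on.

```python
import numpy as np


def conv2d(x, w, pad=0):
    """Naive stride-1 cross-correlation with zero padding. x: (C_in, H, W), w: (C_out, C_in, K, K)."""
    x = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    k = w.shape[2]
    h, wd = x.shape[1] - k + 1, x.shape[2] - k + 1
    out = np.empty((w.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            out[:, i, j] = np.tensordot(w, x[:, i:i + k, j:j + k], axes=3)
    return out


rng = np.random.default_rng(2)
x = rng.standard_normal((3, 6, 6))
w_a, b_a = rng.standard_normal((4, 3, 3, 3)), rng.standard_normal(4)
w_b, b_b = rng.standard_normal((4, 3, 3, 3)), rng.standard_normal(4)

# Two branches evaluated separately, then summed (training-time view)
two_branches = (conv2d(x, w_a, pad=1) + b_a.reshape(-1, 1, 1)) + (conv2d(x, w_b, pad=1) + b_b.reshape(-1, 1, 1))
# One convolution with summed kernels and biases (inference-time view)
fused = conv2d(x, w_a + w_b, pad=1) + (b_a + b_b).reshape(-1, 1, 1)
print(np.allclose(two_branches, fused))
```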
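Branches with different kernel sizes can still be merged. A 1×1 kernel zero-padded to 3×3 gives the same result as the original 1×1 convolution, provided the 3×3 convolution uses padding 1 and the 1×1 uses padding 0. The NumPy sketch below is an illustrative helper, not the repository's code. It sums a 3×3 branch and a 1×1 branch and compares the result with one 3×3 convolution using the merged kernel.

```python
import numpy as np


def conv2d(x, w, pad=0):
    """Naive stride-1 cross-correlation with zero padding. x: (C_in, H, W), w: (C_out, C_in, K, K)."""
    x = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    k = w.shape[2]
    h, wd = x.shape[1] - k + 1, x.shape[2] - k + 1
    out = np.empty((w.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            out[:, i, j] = np.tensordot(w, x[:, i:i + k, j:j + k], axes=3)
    return out


rng = np.random.default_rng(1)
x = rng.standard_normal((3, 7, 7))
w3 = rng.standard_normal((4, 3, 3, 3))  # 3x3 branch, padding 1
w1 = rng.standard_normal((4, 3, 1, 1))  # 1x1 branch, padding 0

# Zero-pad the 1x1 kernel by one pixel on each side of H and W so that it becomes 3x3
w1_as_3x3 = np.pad(w1, ((0, 0), (0, 0), (1, 1), (1, 1)))

branches = conv2d(x, w3, pad=1) + conv2d(x, w1, pad=0)
merged = conv2d(x, w3 + w1_as_3x3, pad=1)
print(np.allclose(branches, merged))
```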

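Efficient parameterization

DBB relies on structural re-parameterization: the training-time structure (multiple branches) is decoupled from the inference-time structure (a single K×K convolution).

- Training time: the extra branches add parameters and computation, and they give the block a richer feature space to learn from.

- After training: the weights of all branches are converted equivalently into the kernel and bias of one convolution.

- Inference time: the deployed model has exactly the structure and cost of the original network.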

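Equivalent transformations

- Transform I: Conv-BN fusion. A convolution followed by batch normalization (BN) is folded into a single convolution with a bias, which removes the BN computation at inference.

- Transform II: Branch addition. Parallel convolution branches that share the same configuration (kernel size, stride, padding, groups) and whose outputs are summed are merged into one convolution by adding their kernels and biases.

- Transform III: Sequential convolution fusion. A 1×1 convolution with BN followed by a K×K convolution with BN is merged into a single K×K convolution.

- Transform IV: Depth concatenation. As in an Inception unit, concatenating the outputs of several branches along the channel dimension is equivalent to one convolution whose kernel concatenates the branch kernels along the output-channel dimension.

- Transform V: Average pooling. K×K average pooling is a convolution with a fixed kernel (1/K² on the channel diagonal), so it can be fused like any other convolution.

- Transform VI: Multi-scale convolution. A smaller kernel (e.g. 1×1) is zero-padded to K×K so that kernels of different sizes can be merged into a single larger kernel.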
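Transform V can be verified directly. The NumPy sketch below builds the same kind of kernel that `transV_avg` constructs for `groups=1`: 1/K² wherever the output channel equals the input channel, and zero elsewhere. It then checks that a strided convolution with that kernel equals K×K average pooling. `conv2d`, `avg_pool`, and `avg_kernel` are illustrative helpers.

```python
import numpy as np


def conv2d(x, w, stride=1):
    """Naive 'valid' cross-correlation. x: (C_in, H, W), w: (C_out, C_in, K, K)."""
    k = w.shape[2]
    h, wd = (x.shape[1] - k) // stride + 1, (x.shape[2] - k) // stride + 1
    out = np.empty((w.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            r, c = i * stride, j * stride
            out[:, i, j] = np.tensordot(w, x[:, r:r + k, c:c + k], axes=3)
    return out


def avg_pool(x, k, stride=1):
    """Naive KxK average pooling without padding."""
    h, wd = (x.shape[1] - k) // stride + 1, (x.shape[2] - k) // stride + 1
    out = np.empty((x.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            r, c = i * stride, j * stride
            out[:, i, j] = x[:, r:r + k, c:c + k].mean(axis=(1, 2))
    return out


def avg_kernel(channels, k):
    """Average pooling as a conv kernel (groups=1): 1/k^2 on the channel diagonal."""
    w = np.zeros((channels, channels, k, k))
    w[np.arange(channels), np.arange(channels)] = 1.0 / k ** 2
    return w


rng = np.random.default_rng(3)
x = rng.standard_normal((4, 9, 9))
pooled = avg_pool(x, 3, stride=2)
as_conv = conv2d(x, avg_kernel(4, 3), stride=2)
print(np.allclose(pooled, as_conv))
```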

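This block can replace any 3×3 convolution module, but pay attention to the activation function. By default, DiverseBranchBlock applies no activation (nonlinear=None falls back to nn.Identity), whereas YOLOv5's Conv ends with SiLU. The activation may only be applied after the branches are summed, which is what the nonlinear argument does. An activation inside an individual branch would make the branches impossible to fuse.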
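Why the activation has to sit outside the branches: fusion relies on the sum of branch outputs being linear in the input, and a nonlinearity breaks that. This pure-Python sketch uses SiLU and two arbitrary scalar branch outputs, `a` and `b`, to show that applying the activation per branch is not the same as applying it once after the sum.

```python
import math


def silu(v):
    """SiLU(v) = v * sigmoid(v), the activation used by YOLOv5's Conv."""
    return v / (1.0 + math.exp(-v))


a, b = 1.5, -0.7                 # outputs of two hypothetical branches at one pixel
per_branch = silu(a) + silu(b)   # activation inside each branch: cannot be fused
after_sum = silu(a + b)          # activation after summation: what `nonlinear` does
print(per_branch, after_sum)
```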

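Network code

Step 1: Create the following files under models/DBB/ (the import paths in the code assume this location).

Step 2: Paste in the code.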

- diversebranchblock.py

```python
# -*- coding: utf-8 -*-
# @Time : 2024/8/2 21:19
# @Author : sjh
# @Site :
# @File : diversebranchblock.py
# @Comment :
import numpy as np  # needed by IdentityBasedConv1x1 (previously only reachable via the star import)
import torch
import torch.nn as nn
import torch.nn.functional as F

from models.DBB.dbb_transforms import *


def conv_bn(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1,
            padding_mode='zeros'):
    """Conv2d (no bias) followed by BatchNorm2d."""
    conv_layer = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                           stride=stride, padding=padding, dilation=dilation, groups=groups,
                           bias=False, padding_mode=padding_mode)
    bn_layer = nn.BatchNorm2d(num_features=out_channels, affine=True)
    se = nn.Sequential()
    se.add_module('conv', conv_layer)
    se.add_module('bn', bn_layer)
    return se


class IdentityBasedConv1x1(nn.Conv2d):
    """1x1 conv whose effective kernel is identity + a learnable weight initialized to zero."""

    def __init__(self, channels, groups=1):
        super(IdentityBasedConv1x1, self).__init__(in_channels=channels, out_channels=channels, kernel_size=1,
                                                   stride=1, padding=0, groups=groups, bias=False)
        assert channels % groups == 0
        input_dim = channels // groups
        id_value = np.zeros((channels, input_dim, 1, 1))
        for i in range(channels):
            id_value[i, i % input_dim, 0, 0] = 1
        self.id_tensor = torch.from_numpy(id_value).type_as(self.weight)
        nn.init.zeros_(self.weight)

    def forward(self, input):
        kernel = self.weight + self.id_tensor.to(self.weight.device)
        kernel = kernel.to(input.dtype)  # keep dtypes consistent under mixed precision
        result = F.conv2d(input, kernel, None, stride=1, padding=0, dilation=self.dilation, groups=self.groups)
        return result

    def get_actual_kernel(self):
        return self.weight + self.id_tensor.to(self.weight.device)


class BNAndPadLayer(nn.Module):
    """BN, then pad with BN's inference-time response to a zero input, so a following
    KxK conv with padding=0 stays equivalent to zero-padding before the 1x1 conv."""

    def __init__(self, pad_pixels, num_features, eps=1e-5, momentum=0.1, affine=True, track_running_stats=True):
        super(BNAndPadLayer, self).__init__()
        self.bn = nn.BatchNorm2d(num_features, eps, momentum, affine, track_running_stats)
        self.pad_pixels = pad_pixels

    def forward(self, input):
        output = self.bn(input)
        if self.pad_pixels > 0:
            if self.bn.affine:
                pad_values = self.bn.bias.detach() - self.bn.running_mean * self.bn.weight.detach() / torch.sqrt(
                    self.bn.running_var + self.bn.eps)
            else:
                pad_values = - self.bn.running_mean / torch.sqrt(self.bn.running_var + self.bn.eps)
            output = F.pad(output, [self.pad_pixels] * 4)
            pad_values = pad_values.view(1, -1, 1, 1)
            output[:, :, 0:self.pad_pixels, :] = pad_values
            output[:, :, -self.pad_pixels:, :] = pad_values
            output[:, :, :, 0:self.pad_pixels] = pad_values
            output[:, :, :, -self.pad_pixels:] = pad_values
        return output

    @property
    def weight(self):
        return self.bn.weight

    @property
    def bias(self):
        return self.bn.bias

    @property
    def running_mean(self):
        return self.bn.running_mean

    @property
    def running_var(self):
        return self.bn.running_var

    @property
    def eps(self):
        return self.bn.eps


class DiverseBranchBlock(nn.Module):
    """Multi-branch block for training that converts into a single KxK conv (dbb_reparam)."""

    def __init__(self, in_channels, out_channels, kernel_size,
                 stride=1, padding=0, dilation=1, groups=1,
                 internal_channels_1x1_3x3=None,
                 deploy=False, nonlinear=None, single_init=False):
        super(DiverseBranchBlock, self).__init__()
        self.deploy = deploy
        # Activation applied after the branches are summed (no activation by default)
        if nonlinear is None:
            self.nonlinear = nn.Identity()
        else:
            self.nonlinear = nonlinear
        self.kernel_size = kernel_size
        self.out_channels = out_channels
        self.groups = groups
        assert padding == kernel_size // 2

        if deploy:
            self.dbb_reparam = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                         stride=stride, padding=padding, dilation=dilation, groups=groups, bias=True)
        else:
            # Branch: KxK conv + BN
            self.dbb_origin = conv_bn(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                      stride=stride, padding=padding, dilation=dilation, groups=groups)
            # Branch: (1x1 conv + BN) + KxK average pooling + BN
            self.dbb_avg = nn.Sequential()
            if groups < out_channels:
                self.dbb_avg.add_module('conv',
                                        nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=1,
                                                  stride=1, padding=0, groups=groups, bias=False))
                self.dbb_avg.add_module('bn', BNAndPadLayer(pad_pixels=padding, num_features=out_channels))
                self.dbb_avg.add_module('avg', nn.AvgPool2d(kernel_size=kernel_size, stride=stride, padding=0))
                # Branch: 1x1 conv + BN (skipped for depthwise convs)
                self.dbb_1x1 = conv_bn(in_channels=in_channels, out_channels=out_channels, kernel_size=1,
                                       stride=stride, padding=0, groups=groups)
            else:
                self.dbb_avg.add_module('avg', nn.AvgPool2d(kernel_size=kernel_size, stride=stride, padding=padding))
            self.dbb_avg.add_module('avgbn', nn.BatchNorm2d(out_channels))

            # Branch: 1x1 conv + BN followed by KxK conv + BN
            if internal_channels_1x1_3x3 is None:
                internal_channels_1x1_3x3 = in_channels if groups < out_channels else 2 * in_channels  # For mobilenet, it is better to have 2X internal channels
            self.dbb_1x1_kxk = nn.Sequential()
            if internal_channels_1x1_3x3 == in_channels:
                self.dbb_1x1_kxk.add_module('idconv1', IdentityBasedConv1x1(channels=in_channels, groups=groups))
            else:
                self.dbb_1x1_kxk.add_module('conv1', nn.Conv2d(in_channels=in_channels, out_channels=internal_channels_1x1_3x3,
                                                               kernel_size=1, stride=1, padding=0, groups=groups, bias=False))
            self.dbb_1x1_kxk.add_module('bn1', BNAndPadLayer(pad_pixels=padding, num_features=internal_channels_1x1_3x3, affine=True))
            self.dbb_1x1_kxk.add_module('conv2', nn.Conv2d(in_channels=internal_channels_1x1_3x3, out_channels=out_channels,
                                                           kernel_size=kernel_size, stride=stride, padding=0, groups=groups, bias=False))
            self.dbb_1x1_kxk.add_module('bn2', nn.BatchNorm2d(out_channels))

        # The experiments reported in the paper used the default initialization of bn.weight (all as 1). But changing the initialization may be useful in some cases.
        if single_init:
            # Initialize the bn.weight of dbb_origin as 1 and others as 0. This is not the default setting.
            self.single_init()

    def get_equivalent_kernel_bias(self):
        """Apply transforms I-VI to collapse all branches into one kernel and bias."""
        k_origin, b_origin = transI_fusebn(self.dbb_origin.conv.weight, self.dbb_origin.bn)

        if hasattr(self, 'dbb_1x1'):
            k_1x1, b_1x1 = transI_fusebn(self.dbb_1x1.conv.weight, self.dbb_1x1.bn)
            k_1x1 = transVI_multiscale(k_1x1, self.kernel_size)
        else:
            k_1x1, b_1x1 = 0, 0

        if hasattr(self.dbb_1x1_kxk, 'idconv1'):
            k_1x1_kxk_first = self.dbb_1x1_kxk.idconv1.get_actual_kernel()
        else:
            k_1x1_kxk_first = self.dbb_1x1_kxk.conv1.weight
        k_1x1_kxk_first, b_1x1_kxk_first = transI_fusebn(k_1x1_kxk_first, self.dbb_1x1_kxk.bn1)
        k_1x1_kxk_second, b_1x1_kxk_second = transI_fusebn(self.dbb_1x1_kxk.conv2.weight, self.dbb_1x1_kxk.bn2)
        k_1x1_kxk_merged, b_1x1_kxk_merged = transIII_1x1_kxk(k_1x1_kxk_first, b_1x1_kxk_first, k_1x1_kxk_second,
                                                              b_1x1_kxk_second, groups=self.groups)

        k_avg = transV_avg(self.out_channels, self.kernel_size, self.groups)
        k_1x1_avg_second, b_1x1_avg_second = transI_fusebn(k_avg.to(self.dbb_avg.avgbn.weight.device), self.dbb_avg.avgbn)
        if hasattr(self.dbb_avg, 'conv'):
            k_1x1_avg_first, b_1x1_avg_first = transI_fusebn(self.dbb_avg.conv.weight, self.dbb_avg.bn)
            k_1x1_avg_merged, b_1x1_avg_merged = transIII_1x1_kxk(k_1x1_avg_first, b_1x1_avg_first, k_1x1_avg_second,
                                                                  b_1x1_avg_second, groups=self.groups)
        else:
            k_1x1_avg_merged, b_1x1_avg_merged = k_1x1_avg_second, b_1x1_avg_second

        return transII_addbranch((k_origin, k_1x1, k_1x1_kxk_merged, k_1x1_avg_merged),
                                 (b_origin, b_1x1, b_1x1_kxk_merged, b_1x1_avg_merged))

    def switch_to_deploy(self):
        """Fuse all branches into dbb_reparam and delete the training-time branches."""
        if hasattr(self, 'dbb_reparam'):
            return
        kernel, bias = self.get_equivalent_kernel_bias()
        self.dbb_reparam = nn.Conv2d(in_channels=self.dbb_origin.conv.in_channels, out_channels=self.dbb_origin.conv.out_channels,
                                     kernel_size=self.dbb_origin.conv.kernel_size, stride=self.dbb_origin.conv.stride,
                                     padding=self.dbb_origin.conv.padding, dilation=self.dbb_origin.conv.dilation,
                                     groups=self.dbb_origin.conv.groups, bias=True)
        self.dbb_reparam.weight.data = kernel
        self.dbb_reparam.bias.data = bias
        for para in self.parameters():
            para.detach_()
        self.__delattr__('dbb_origin')
        self.__delattr__('dbb_avg')
        if hasattr(self, 'dbb_1x1'):
            self.__delattr__('dbb_1x1')
        self.__delattr__('dbb_1x1_kxk')

    def forward(self, inputs):
        if hasattr(self, 'dbb_reparam'):
            return self.nonlinear(self.dbb_reparam(inputs))

        out = self.dbb_origin(inputs)
        if hasattr(self, 'dbb_1x1'):
            out += self.dbb_1x1(inputs)
        out += self.dbb_avg(inputs)
        out += self.dbb_1x1_kxk(inputs)
        return self.nonlinear(out)

    def init_gamma(self, gamma_value):
        if hasattr(self, "dbb_origin"):
            torch.nn.init.constant_(self.dbb_origin.bn.weight, gamma_value)
        if hasattr(self, "dbb_1x1"):
            torch.nn.init.constant_(self.dbb_1x1.bn.weight, gamma_value)
        if hasattr(self, "dbb_avg"):
            torch.nn.init.constant_(self.dbb_avg.avgbn.weight, gamma_value)
        if hasattr(self, "dbb_1x1_kxk"):
            torch.nn.init.constant_(self.dbb_1x1_kxk.bn2.weight, gamma_value)

    def single_init(self):
        self.init_gamma(0.0)
        if hasattr(self, "dbb_origin"):
            torch.nn.init.constant_(self.dbb_origin.bn.weight, 1.0)
```

- dbb_transforms.py

```python
# -*- coding: utf-8 -*-
# @Time : 2024/8/2 21:19
# @Author : sjh
# @Site :
# @File : dbb_transforms.py
# @Comment :
import numpy as np
import torch
import torch.nn.functional as F


def transI_fusebn(kernel, bn):
    """Transform I: fold a BatchNorm into the preceding conv's kernel and bias."""
    gamma = bn.weight
    std = (bn.running_var + bn.eps).sqrt()
    return kernel * ((gamma / std).reshape(-1, 1, 1, 1)), bn.bias - bn.running_mean * gamma / std


def transII_addbranch(kernels, biases):
    """Transform II: summed parallel branches with the same configuration add their kernels and biases."""
    return sum(kernels), sum(biases)


def transIII_1x1_kxk(k1, b1, k2, b2, groups):
    """Transform III: merge a 1x1 conv (k1, b1) followed by a KxK conv (k2, b2) into one KxK conv."""
    if groups == 1:
        k = F.conv2d(k2, k1.permute(1, 0, 2, 3))
        b_hat = (k2 * b1.reshape(1, -1, 1, 1)).sum((1, 2, 3))
    else:
        k_slices = []
        b_slices = []
        k1_T = k1.permute(1, 0, 2, 3)
        k1_group_width = k1.size(0) // groups
        k2_group_width = k2.size(0) // groups
        for g in range(groups):
            k1_T_slice = k1_T[:, g * k1_group_width:(g + 1) * k1_group_width, :, :]
            k2_slice = k2[g * k2_group_width:(g + 1) * k2_group_width, :, :, :]
            k_slices.append(F.conv2d(k2_slice, k1_T_slice))
            b_slices.append((k2_slice * b1[g * k1_group_width:(g + 1) * k1_group_width].reshape(1, -1, 1, 1)).sum((1, 2, 3)))
        k, b_hat = transIV_depthconcat(k_slices, b_slices)
    return k, b_hat + b2


def transIV_depthconcat(kernels, biases):
    """Transform IV: concatenate kernels and biases along the output-channel dimension."""
    return torch.cat(kernels, dim=0), torch.cat(biases)


def transV_avg(channels, kernel_size, groups):
    """Transform V: KxK average pooling as a KxK conv kernel with 1/K^2 on the channel diagonal."""
    input_dim = channels // groups
    k = torch.zeros((channels, input_dim, kernel_size, kernel_size))
    k[np.arange(channels), np.tile(np.arange(input_dim), groups), :, :] = 1.0 / kernel_size ** 2
    return k


# This has not been tested with non-square kernels (kernel.size(2) != kernel.size(3)) nor even-size kernels
def transVI_multiscale(kernel, target_kernel_size):
    """Transform VI: zero-pad a smaller kernel (e.g. 1x1) to target_kernel_size."""
    H_pixels_to_pad = (target_kernel_size - kernel.size(2)) // 2
    W_pixels_to_pad = (target_kernel_size - kernel.size(3)) // 2
    # F.pad takes (left, right, top, bottom): the last dimension (W) comes first
    return F.pad(kernel, [W_pixels_to_pad, W_pixels_to_pad, H_pixels_to_pad, H_pixels_to_pad])
```
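A quick way to convince yourself that `transIII_1x1_kxk` (groups=1) is exact, including the bias, is to replay it in NumPy. In this sketch, which uses illustrative helpers rather than the file above, the input is zero-padded once before the 1×1 convolution. The border pixels of the intermediate map therefore equal the 1×1 bias, which mirrors what `BNAndPadLayer` fills in. The merged kernel is `sum_m k2[o, m] * k1[m, i]`, and the merged bias adds `k2` summed against `b1`.

```python
import numpy as np


def conv2d(x, w):
    """Naive stride-1 'valid' cross-correlation. x: (C_in, H, W), w: (C_out, C_in, K, K)."""
    k = w.shape[2]
    h, wd = x.shape[1] - k + 1, x.shape[2] - k + 1
    out = np.empty((w.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            out[:, i, j] = np.tensordot(w, x[:, i:i + k, j:j + k], axes=3)
    return out


def merge_1x1_kxk(k1, b1, k2, b2):
    """NumPy analogue of transIII_1x1_kxk for groups=1."""
    k = np.einsum("omhw,mi->oihw", k2, k1[:, :, 0, 0])
    b = b2 + np.einsum("omhw,m->o", k2, b1)
    return k, b


rng = np.random.default_rng(4)
x = rng.standard_normal((3, 6, 6))
k1, b1 = rng.standard_normal((5, 3, 1, 1)), rng.standard_normal(5)
k2, b2 = rng.standard_normal((4, 5, 3, 3)), rng.standard_normal(4)

x_pad = np.pad(x, ((0, 0), (1, 1), (1, 1)))             # pad once, before the 1x1 conv
mid = conv2d(x_pad, k1) + b1.reshape(-1, 1, 1)           # border pixels now equal b1
two_step = conv2d(mid, k2) + b2.reshape(-1, 1, 1)

k, b = merge_1x1_kxk(k1, b1, k2, b2)
one_step = conv2d(x_pad, k) + b.reshape(-1, 1, 1)
print(np.allclose(two_step, one_step))
```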

Modify models/common.py and add the following:

```python
# https://github.com/DingXiaoH/DiverseBranchBlock/blob/main/dbb_transforms.py
# Place this import with the other imports at the top of common.py
from models.DBB.diversebranchblock import DiverseBranchBlock


class Bottleneck_DBB(nn.Module):
    # Standard bottleneck with its 3x3 conv replaced by a DiverseBranchBlock
    def __init__(self, c1, c2, shortcut=True, g=1, e=0.5):
        """Initializes a bottleneck whose 3x3 conv is a DiverseBranchBlock, with an optional shortcut.

        g is accepted for signature compatibility with Bottleneck but is not used.
        """
        super().__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)
        # DBB applies SiLU itself (after summing its branches), like YOLOv5's Conv
        self.cv2 = DiverseBranchBlock(c_, c2, 3, 1, padding=1, nonlinear=nn.SiLU())
        self.add = shortcut and c1 == c2

    def forward(self, x):
        """Applies cv1 then cv2, adding the input when the shortcut is enabled and channels match."""
        # cv2 already ends with SiLU, so no second activation is applied on the shortcut path
        return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))


class C3DBB(C3):
    # C3 module whose bottlenecks use DiverseBranchBlock
    def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):
        """Initializes C3 with n Bottleneck_DBB blocks, keeping C3's channel, group and expansion arguments."""
        super().__init__(c1, c2, n, shortcut, g, e)
        c_ = int(c2 * e)  # hidden channels
        self.m = nn.Sequential(*(Bottleneck_DBB(c_, c_, shortcut, g, e=1.0) for _ in range(n)))
```

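Next, modify models/yolo.py. Locate the corresponding module sets in parse_model() and register C3DBB (alongside C3) and DiverseBranchBlock (alongside Conv).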
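The reason for registering the modules is that parse_model() rewrites each YAML row before calling the constructor. The pure-Python sketch below is a simplified, hypothetical imitation of that step. `build_args`, `CHANNEL_MODULES`, and `REPEAT_MODULES` are illustrative names; the real function works on classes and handles many more cases. The sketch assumes that C3-style modules receive the repeat count `n` as their third argument and that channel counts are scaled by `width_multiple` and rounded up to a multiple of 8. Because `DiverseBranchBlock` asserts `padding == kernel_size // 2`, the padding has to be given explicitly in its YAML arguments.

```python
import math

# Hypothetical registries; in the real yolo.py these are sets of module classes
CHANNEL_MODULES = {"Conv", "C3", "C3DBB", "DiverseBranchBlock"}
REPEAT_MODULES = {"C3", "C3DBB"}  # modules that take the repeat count n as an argument


def make_divisible(x, divisor=8):
    """Round a channel count up to a multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def build_args(module, c1, n, args, gd=0.33, gw=0.50):
    """Turn a YAML row's (n, args) into constructor args, given the input channels c1."""
    n = max(round(n * gd), 1) if n > 1 else n     # depth_multiple scales repeats
    if module in CHANNEL_MODULES:
        c2 = make_divisible(args[0] * gw)          # width_multiple scales channels
        args = [c1, c2, *args[1:]]
        if module in REPEAT_MODULES:
            args.insert(2, n)                      # C3-style modules repeat internally
            n = 1
    return args, n


# DiverseBranchBlock(in, out, kernel_size, stride, padding): padding 1 satisfies 1 == 3 // 2
print(build_args("DiverseBranchBlock", 128, 1, [512, 3, 2, 1]))
print(build_args("C3DBB", 256, 9, [512]))
```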

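YAML configurations

Several backbone variants are possible. The first configuration below replaces every C3 with C3DBB. The second keeps C3 and replaces the P4/16 and P5/32 downsampling convolutions in the backbone with DiverseBranchBlock.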

```yaml
# YOLOv5 🚀 by Ultralytics, AGPL-3.0 license

# Parameters
nc: 80 # number of classes
depth_multiple: 0.33 # model depth multiple
width_multiple: 0.50 # layer channel multiple
anchors:
  - [10, 13, 16, 30, 33, 23] # P3/8
  - [30, 61, 62, 45, 59, 119] # P4/16
  - [116, 90, 156, 198, 373, 326] # P5/32

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  [
    [-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2
    [-1, 1, Conv, [128, 3, 2]], # 1-P2/4
    [-1, 3, C3DBB, [128]],
    [-1, 1, Conv, [256, 3, 2]], # 3-P3/8
    [-1, 6, C3DBB, [256]],
    [-1, 1, Conv, [512, 3, 2]], # 5-P4/16
    [-1, 9, C3DBB, [512]],
    [-1, 1, Conv, [1024, 3, 2]], # 7-P5/32
    [-1, 3, C3DBB, [1024]],
    [-1, 1, SPPF, [1024, 5]], # 9
  ]

# YOLOv5 v6.0 head
head: [
    [-1, 1, Conv, [512, 1, 1]],
    [-1, 1, nn.Upsample, [None, 2, "nearest"]],
    [[-1, 6], 1, Concat, [1]], # cat backbone P4
    [-1, 3, C3DBB, [512, False]], # 13

    [-1, 1, Conv, [256, 1, 1]],
    [-1, 1, nn.Upsample, [None, 2, "nearest"]],
    [[-1, 4], 1, Concat, [1]], # cat backbone P3
    [-1, 3, C3DBB, [256, False]], # 17 (P3/8-small)

    [-1, 1, Conv, [256, 3, 2]],
    [[-1, 14], 1, Concat, [1]], # cat head P4
    [-1, 3, C3DBB, [512, False]], # 20 (P4/16-medium)

    [-1, 1, Conv, [512, 3, 2]],
    [[-1, 10], 1, Concat, [1]], # cat head P5
    [-1, 3, C3DBB, [1024, False]], # 23 (P5/32-large)

    [[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)
  ]
```

```yaml
# YOLOv5 🚀 by Ultralytics, AGPL-3.0 license

# Parameters
nc: 80 # number of classes
depth_multiple: 0.33 # model depth multiple
width_multiple: 0.50 # layer channel multiple
anchors:
  - [10, 13, 16, 30, 33, 23] # P3/8
  - [30, 61, 62, 45, 59, 119] # P4/16
  - [116, 90, 156, 198, 373, 326] # P5/32

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  [
    [-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2
    [-1, 1, Conv, [128, 3, 2]], # 1-P2/4
    [-1, 3, C3, [128]],
    [-1, 1, Conv, [256, 3, 2]], # 3-P3/8
    [-1, 6, C3, [256]],
    [-1, 1, DiverseBranchBlock, [512, 3, 2, 1]], # 5-P4/16 (c2, k, s, padding; DBB requires padding == k // 2)
    [-1, 9, C3, [512]],
    [-1, 1, DiverseBranchBlock, [1024, 3, 2, 1]], # 7-P5/32 (c2, k, s, padding)
    [-1, 3, C3, [1024]],
    [-1, 1, SPPF, [1024, 5]], # 9
  ]

# YOLOv5 v6.0 head
head: [
    [-1, 1, Conv, [512, 1, 1]],
    [-1, 1, nn.Upsample, [None, 2, "nearest"]],
    [[-1, 6], 1, Concat, [1]], # cat backbone P4
    [-1, 3, C3, [512, False]], # 13

    [-1, 1, Conv, [256, 1, 1]],
    [-1, 1, nn.Upsample, [None, 2, "nearest"]],
    [[-1, 4], 1, Concat, [1]], # cat backbone P3
    [-1, 3, C3, [256, False]], # 17 (P3/8-small)

    [-1, 1, Conv, [256, 3, 2]],
    [[-1, 14], 1, Concat, [1]], # cat head P4
    [-1, 3, C3, [512, False]], # 20 (P4/16-medium)

    [-1, 1, Conv, [512, 3, 2]],
    [[-1, 10], 1, Concat, [1]], # cat head P5
    [-1, 3, C3, [1024, False]], # 23 (P5/32-large)

    [[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)
  ]
```

Network testing and experimental results