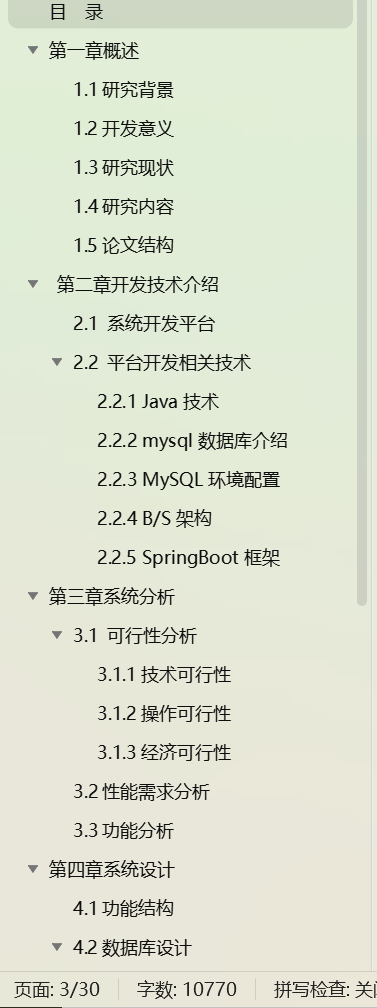

UVC Driver Analysis

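- Introduction to UVC

- Layering of the Linux video framework

- UVC driver registration

- UVC driver entry function

- UVC device probing and initialization

- UVC descriptor parsing

- V4L2 device registration

- UVC control initialization

- UVC video driver registration

- UVC status initialization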

Introduction to UVC

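UVC stands for USB Video Class, a protocol standard defined for USB video capture devices. It was introduced jointly by Microsoft and several device manufacturers and has become one of the standard device classes of the USB-IF.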

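Today's mainstream operating systems (such as Windows XP SP2 and later, Linux 2.6.26 and later, and MacOS 10.5 and later) ship a UVC driver, so UVC-compliant hardware works on the host without installing any driver. Devices that use UVC include webcams, digital cameras, analog video converters, TV sticks, and still-image cameras.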

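At the time of writing, the latest version is UVC 1.5; the specification published by the USB Implementers Forum covers both the base protocol and the payload formats.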

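A UVC device is essentially a video device built on top of the USB stack; in Linux it is a V4L2 device, and upper layers use and control it through the unified API that V4L2 provides. For a UVC device to work properly, three things are therefore required:

- Hardware support in the UVC camera itself

- Driver support: the USB device driver (uvcvideo) and the V4L2 core

- Application support in user space: the application calls the V4L2 API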

Layering of the Linux video framework

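This chapter focuses on studying and summarizing the UVC driver in Linux.

The Linux UVC driver aims to fully support USB video devices. The USB Video Class defines video streaming functionality over USB, so a UVC peripheral needs only one generic host-side driver to work.

In Linux, the V4L2 video framework is organized roughly as follows:

From the figure we can see:

Every sub-device driver must have a v4l2_subdev structure (each real hardware device is abstracted as a v4l2_subdev). It represents a simple sub-device and can also be embedded in a larger structure that keeps more device state alongside it.

In the V4L2 framework, v4l2_device acts as the parent of all v4l2_subdev instances and manages the sub-devices registered under it.

Because sub-devices differ widely, v4l2_device also exposes a standard interface to the layers above, so it can be thought of as an intermediate layer.

The UVC driver is one such v4l2_subdev device. The peripheral on the other side is any UVC-compliant device, such as a USB camera.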
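The parent/child relationship can be illustrated with a toy model. This is not the kernel's data structure: toy_v4l2_device, toy_subdev and the functions below are hypothetical, loosely modeled on the roles of v4l2_device_register_subdev() and v4l2_device_call_all(). The kernel uses struct list_head and an ops table rather than a bare function pointer.

```c
#include <stddef.h>
#include <stdio.h>

/* Hypothetical stand-in for struct v4l2_subdev: a name, one operation and
 * a link to the next sibling. */
struct toy_subdev {
    const char *name;
    int (*s_stream)(struct toy_subdev *sd, int enable);
    struct toy_subdev *next;
};

/* Hypothetical stand-in for struct v4l2_device: the parent that owns the list. */
struct toy_v4l2_device {
    const char *name;
    struct toy_subdev *subdevs;
    unsigned int nsubdevs;
};

/* Attach a child to the parent (the role of v4l2_device_register_subdev()). */
void toy_register_subdev(struct toy_v4l2_device *parent, struct toy_subdev *sd)
{
    sd->next = parent->subdevs;
    parent->subdevs = sd;
    parent->nsubdevs++;
}

/* Invoke s_stream on every child that implements it, ignoring return values,
 * and report how many were called (the role of v4l2_device_call_all()). */
unsigned int toy_call_all_s_stream(struct toy_v4l2_device *parent, int enable)
{
    unsigned int called = 0;

    for (struct toy_subdev *sd = parent->subdevs; sd != NULL; sd = sd->next) {
        if (sd->s_stream != NULL) {
            sd->s_stream(sd, enable);
            called++;
        }
    }
    return called;
}

/* Example operation a child might provide. */
int toy_log_s_stream(struct toy_subdev *sd, int enable)
{
    printf("%s: stream %s\n", sd->name, enable ? "on" : "off");
    return 0;
}
```

The point of the middle layer is visible in toy_call_all_s_stream(): the caller talks only to the parent, and children that do not implement an operation are simply skipped.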

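UVC driver registration

Now we get into the UVC driver itself, starting with how the driver is loaded.

UVC driver entry function

First, the driver needs an entry function and an exit function.

Since UVC devices are USB devices, the entry function registers a usb_driver: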

// kernel/linux-4.9.y/drivers/media/usb/uvc/uvc_driver.c

struct uvc_driver uvc_driver = {

.driver = {

.name = "uvcvideo",

.probe = uvc_probe, // called when a supported USB video device is plugged in

.disconnect = uvc_disconnect,

.suspend = uvc_suspend,

.resume = uvc_resume,

.reset_resume = uvc_reset_resume,

.id_table = uvc_ids,

.supports_autosuspend = 1,

},

};

// Entry function of the UVC driver

static int __init uvc_init(void)

{

int ret;

uvc_debugfs_init();

// Register a USB interface driver

ret = usb_register(&uvc_driver.driver);

if (ret < 0) {

uvc_debugfs_cleanup();

return ret;

}

printk(KERN_INFO DRIVER_DESC " (" DRIVER_VERSION ")\n");

return 0;

}

// Exit function of the UVC driver

static void __exit uvc_cleanup(void)

{

usb_deregister(&uvc_driver.driver);

uvc_debugfs_cleanup();

}

module_init(uvc_init);

module_exit(uvc_cleanup);

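When the driver is loaded with insmod, the entry function registers the usb_driver structure directly. The USB core compares the information reported by each device with the entries in id_table; a match means the driver supports that USB device. Most entries of the UVC id_table are built from USB interface information (class, subclass, protocol), as shown below: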

/*

* The Logitech cameras listed below have their interface class set to

* VENDOR_SPEC because they don't announce themselves as UVC devices, even

* though they are compliant.

*/

static struct usb_device_id uvc_ids[] = {

/* LogiLink Wireless Webcam */

{ .match_flags = USB_DEVICE_ID_MATCH_DEVICE

| USB_DEVICE_ID_MATCH_INT_INFO,

.idVendor = 0x0416,

.idProduct = 0xa91a,

.bInterfaceClass = USB_CLASS_VIDEO,

.bInterfaceSubClass = 1,

.bInterfaceProtocol = 0,

.driver_info = UVC_QUIRK_PROBE_MINMAX },

...

...

/* Generic USB Video Class */

{ USB_INTERFACE_INFO(USB_CLASS_VIDEO, 1, UVC_PC_PROTOCOL_UNDEFINED) },

{ USB_INTERFACE_INFO(USB_CLASS_VIDEO, 1, UVC_PC_PROTOCOL_15) },

{}

};

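When a matching device is detected, the USB core calls the probe function uvc_driver.driver.probe (that is, uvc_probe), which starts a series of initialization steps.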
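The matching rule can be sketched in user space: an entry matches when every field enabled in its flags equals the device's value, and the first matching entry wins, which is why device-specific entries come before the generic class entries. The flag names, structures, and the quirk value below are hypothetical simplifications, not the kernel's usb_device_id or usb_match_id().

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, simplified match flags (own names and values). */
#define MATCH_VENDOR       0x01u
#define MATCH_PRODUCT      0x02u
#define MATCH_INT_CLASS    0x04u
#define MATCH_INT_SUBCLASS 0x08u
#define MATCH_INT_PROTOCOL 0x10u
#define MATCH_INT_INFO (MATCH_INT_CLASS | MATCH_INT_SUBCLASS | MATCH_INT_PROTOCOL)

#define CLASS_VIDEO 0x0e /* USB video interface class code */

struct id_entry {
    unsigned int flags;
    uint16_t vendor, product;
    uint8_t if_class, if_subclass, if_protocol;
    unsigned long driver_info; /* e.g. quirk bits handed to probe */
};

struct iface_info {
    uint16_t vendor, product;
    uint8_t if_class, if_subclass, if_protocol;
};

/* Return the first entry whose enabled fields all match, or NULL.
 * The table ends with an all-zero entry, like uvc_ids[]. */
const struct id_entry *match_id(const struct id_entry *table,
                                const struct iface_info *in)
{
    for (; table->flags != 0; table++) {
        if ((table->flags & MATCH_VENDOR) && table->vendor != in->vendor)
            continue;
        if ((table->flags & MATCH_PRODUCT) && table->product != in->product)
            continue;
        if ((table->flags & MATCH_INT_CLASS) && table->if_class != in->if_class)
            continue;
        if ((table->flags & MATCH_INT_SUBCLASS) && table->if_subclass != in->if_subclass)
            continue;
        if ((table->flags & MATCH_INT_PROTOCOL) && table->if_protocol != in->if_protocol)
            continue;
        return table;
    }
    return NULL;
}

/* Toy table in the spirit of uvc_ids[]: one device-specific entry first,
 * then generic entries (subclass 1 = VideoControl, protocol 0 or 1). */
const struct id_entry toy_ids[] = {
    { MATCH_VENDOR | MATCH_PRODUCT | MATCH_INT_INFO, 0x0416, 0xa91a,
      CLASS_VIDEO, 1, 0, 0x1 /* pretend quirk bit */ },
    { MATCH_INT_INFO, 0, 0, CLASS_VIDEO, 1, 0, 0 },
    { MATCH_INT_INFO, 0, 0, CLASS_VIDEO, 1, 1, 0 },
    { 0 }
};
```

Only the VideoControl interface (subclass 1) matches here; the VideoStreaming interfaces of the same device are claimed later from uvc_parse_streaming() via usb_driver_claim_interface().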

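UVC device probing and initialization

When a USB device is plugged in, the generic USB core handles it first. After confirming it is a USB device, it reads the configuration descriptor to determine which USB device class it belongs to (HID, Mass Storage, UVC, and so on). Once the class is known from the configuration, the USB core matches the interfaces against the registered USB interface drivers. When a UVC device is matched, the probe function of the corresponding interface driver, i.e. uvc_driver.driver.probe, is called to register the device.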

static int uvc_probe(struct usb_interface *intf,

const struct usb_device_id *id)

{

struct usb_device *udev = interface_to_usbdev(intf);

struct uvc_device *dev;

int ret;

/* ... (omitted) ... */

if ((dev = kzalloc(sizeof *dev, GFP_KERNEL)) == NULL)//【1】

return -ENOMEM;

/* ... (omitted) ... */

dev->udev = usb_get_dev(udev);//【2】

dev->intf = usb_get_intf(intf);

dev->intfnum = intf->cur_altsetting->desc.bInterfaceNumber;

dev->quirks = (uvc_quirks_param == -1)

? id->driver_info : uvc_quirks_param;

/* ... (omitted) ... */

/* Parse the Video Class control descriptor. */

if (uvc_parse_control(dev) < 0) {//【3】

uvc_trace(UVC_TRACE_PROBE, "Unable to parse UVC "

"descriptors.\n");

goto error;

}

/* ... (omitted) ... */

if (v4l2_device_register(&intf->dev, &dev->vdev) < 0)//【4】

goto error;

/* Initialize controls. */

if (uvc_ctrl_init_device(dev) < 0)//【5】

goto error;

/* Scan the device for video chains. */

if (uvc_scan_device(dev) < 0)

goto error;

/* Register video device nodes. */

if (uvc_register_chains(dev) < 0)//【6】

goto error;

/* ... (omitted) ... */

/* Initialize the interrupt URB. */

if ((ret = uvc_status_init(dev)) < 0) {//【7】status events are delivered through the interrupt endpoint

/* ... (omitted) ... */

return 0;

error:

uvc_unregister_video(dev);

return -ENODEV;

}

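The function is long, so parts have been omitted, but its main steps are:

【1】Allocate a struct uvc_device (dev).

【2】Fill in dev's fields, such as dev->udev = usb_get_dev(udev) and dev->intf.

【3】Call uvc_parse_control() to parse the device's control descriptors.

【4】Call v4l2_device_register() to initialize the v4l2_device embedded in dev (dev->vdev).

【5】Call uvc_ctrl_init_device() to initialize the UVC controls.

【6】After uvc_scan_device() has scanned the device for video chains, call uvc_register_chains() to register all of them.

【7】Call uvc_status_init() to initialize status handling.

Let's go through the probe flow step by step.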

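UVC descriptor parsing

Once inside probe, the first task is to parse the descriptors and extract their information. This is done mainly by uvc_parse_control().

【3】: the call to uvc_parse_control()

The call hierarchy: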

uvc_parse_control(dev)

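// parses the class-specific VC descriptors and finds the VS interface descriptors

uvc_parse_standard_control(dev, buffer, buflen)

// parses the VS interface descriptors and the class-specific VS interface descriptors

uvc_parse_streaming(dev, intf)

// parses the formats and frames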

uvc_parse_format()

Let's follow uvc_parse_control():

static int uvc_parse_control(struct uvc_device *dev)

{

// First, get the currently selected alternate setting (the interface descriptor)

struct usb_host_interface *alts = dev->intf->cur_altsetting;

// Then get the extra descriptors. alts->extra holds the class-specific descriptors, here the class-specific VC interface descriptors

unsigned char *buffer = alts->extra;

int buflen = alts->extralen;

int ret;

/* Parse the default alternate setting only, as the UVC specification

* defines a single alternate setting, the default alternate setting

* zero.

*/

while (buflen > 2) {

if (uvc_parse_vendor_control(dev, buffer, buflen) ||

buffer[1] != USB_DT_CS_INTERFACE)

goto next_descriptor;

// Parse the class-specific VC interface descriptors one by one, i.e. all the video functions:

// UVC_VC_HEADER, UVC_VC_INPUT_TERMINAL, UVC_VC_OUTPUT_TERMINAL, UVC_VC_SELECTOR_UNIT,

// UVC_VC_PROCESSING_UNIT, UVC_VC_EXTENSION_UNIT

if ((ret = uvc_parse_standard_control(dev, buffer, buflen)) < 0)

return ret;

next_descriptor:

buflen -= buffer[0];

buffer += buffer[0];

}

/* Check if the optional status endpoint is present. Built-in iSight

* webcams have an interrupt endpoint but spit proprietary data that

* don't conform to the UVC status endpoint messages. Don't try to

* handle the interrupt endpoint for those cameras.

*/

if (alts->desc.bNumEndpoints == 1 &&

!(dev->quirks & UVC_QUIRK_BUILTIN_ISIGHT)) {

struct usb_host_endpoint *ep = &alts->endpoint[0];

struct usb_endpoint_descriptor *desc = &ep->desc;

if (usb_endpoint_is_int_in(desc) &&

le16_to_cpu(desc->wMaxPacketSize) >= 8 &&

desc->bInterval != 0) {

uvc_trace(UVC_TRACE_DESCR, "Found a Status endpoint "

"(addr %02x).\n", desc->bEndpointAddress);

dev->int_ep = ep;

}

}

return 0;

}
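The loop in uvc_parse_control() is the standard way to walk concatenated USB descriptors: byte 0 is bLength, byte 1 is bDescriptorType (0x24 for CS_INTERFACE), and for class-specific descriptors byte 2 is the subtype. Here is a user-space sketch of the same walk with a hypothetical helper name; the bLength sanity check is an addition of this sketch and is not in the kernel excerpt above.

```c
#include <stdint.h>

#define DT_CS_INTERFACE 0x24 /* bDescriptorType of class-specific interface descriptors */

/* Count the class-specific interface descriptors with the given subtype
 * (e.g. 0x01 VC_HEADER, 0x02 INPUT_TERMINAL, 0x03 OUTPUT_TERMINAL). */
int count_cs_subtype(const uint8_t *buf, int buflen, uint8_t subtype)
{
    int count = 0;

    while (buflen > 2) {
        int len = buf[0];                  /* bLength */
        if (len < 2 || len > buflen)       /* sketch-only guard against a bad bLength */
            break;
        if (len >= 3 && buf[1] == DT_CS_INTERFACE && buf[2] == subtype)
            count++;
        buflen -= len;                     /* same advance as the kernel loop */
        buf += len;
    }
    return count;
}
```

Without the guard, a descriptor with bLength = 0 would make the walk loop forever, so real parsers usually validate it.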

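Next, let's look at uvc_parse_standard_control(dev, buffer, buflen).

It parses all the video functions (terminals and units) described by the class-specific VideoControl interface.

The function is long, so we only follow the UVC_VC_HEADER case.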

static int uvc_parse_standard_control(struct uvc_device *dev,

const unsigned char *buffer, int buflen)

{

struct usb_device *udev = dev->udev;

struct uvc_entity *unit, *term;

struct usb_interface *intf;

struct usb_host_interface *alts = dev->intf->cur_altsetting;

unsigned int i, n, p, len;

__u16 type;

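// As we saw above, buffer points into the class-specific VC descriptors and buffer[2] is the subtype of the current one. The first one is normally the VC_HEADER.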

switch (buffer[2]) {

case UVC_VC_HEADER:

// Number of VideoStreaming (VS) interfaces of this UVC device (bInCollection)

n = buflen >= 12 ? buffer[11] : 0;

if (buflen < 12 + n) {

uvc_trace(UVC_TRACE_DESCR, "device %d videocontrol "

"interface %d HEADER error\n", udev->devnum,

alts->desc.bInterfaceNumber);

return -EINVAL;

}

// Extract the fields of the VC_HEADER descriptor

dev->uvc_version = get_unaligned_le16(&buffer[3]);

dev->clock_frequency = get_unaligned_le32(&buffer[7]);

/* Parse all USB Video Streaming interfaces. */

// Walk and parse every VS interface listed in the header

for (i = 0; i < n; ++i) {

// Look up the interface by its number 'baInterfaceNr[i]'

intf = usb_ifnum_to_if(udev, buffer[12+i]);

if (intf == NULL) {

uvc_trace(UVC_TRACE_DESCR, "device %d "

"interface %d doesn't exists\n",

udev->devnum, i);

continue;

}

// Parse the video streaming interface, mainly its class-specific VS interface descriptors

uvc_parse_streaming(dev, intf);

}

break;

case UVC_VC_INPUT_TERMINAL:

...

break;

case UVC_VC_OUTPUT_TERMINAL:

...

break;

case UVC_VC_SELECTOR_UNIT:

...

break;

case UVC_VC_PROCESSING_UNIT:

...

break;

case UVC_VC_EXTENSION_UNIT:

...

break;

default:

uvc_trace(UVC_TRACE_DESCR, "Found an unknown CS_INTERFACE "

"descriptor (%u)\n", buffer[2]);

break;

}

return 0;

}
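The VC_HEADER offsets used above (bcdUVC at 3, dwClockFrequency at 7, bInCollection at 11, baInterfaceNr[] from 12) can be exercised outside the kernel. In this sketch, rd_le16/rd_le32 stand in for get_unaligned_le16/32, and the struct and function names are made up for illustration.

```c
#include <stdint.h>

/* Little-endian readers with the same effect as get_unaligned_le16/32. */
static uint16_t rd_le16(const uint8_t *p) { return (uint16_t)(p[0] | (p[1] << 8)); }
static uint32_t rd_le32(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

struct vc_header {
    uint16_t uvc_version;      /* bcdUVC, e.g. 0x0100 for UVC 1.0 */
    uint32_t clock_frequency;  /* dwClockFrequency in Hz */
    uint8_t  n_streaming;      /* bInCollection */
    const uint8_t *intf_nums;  /* baInterfaceNr[] */
};

/* Parse a VC_HEADER with the same length check as the driver
 * (buflen < 12 + n is an error). Returns 0 on success, -1 otherwise. */
int parse_vc_header(const uint8_t *buf, int buflen, struct vc_header *h)
{
    int n = buflen >= 12 ? buf[11] : 0;

    if (buflen < 12 + n || buf[2] != 0x01 /* VC_HEADER */)
        return -1;
    h->uvc_version = rd_le16(&buf[3]);
    h->clock_frequency = rd_le32(&buf[7]);
    h->n_streaming = (uint8_t)n;
    h->intf_nums = &buf[12];
    return 0;
}
```

For a header announcing UVC 1.0, a 48 MHz clock and one streaming interface, the bytes are 13, 0x24, 0x01, 0x00, 0x01, (wTotalLength), 0x00, 0x6c, 0xdc, 0x02, 1, 1; each baInterfaceNr entry is what uvc_parse_standard_control() passes to usb_ifnum_to_if().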

Next, let's continue with uvc_parse_streaming(dev, intf).

From the class-specific VS interface descriptors that accompany the Video Streaming Interface Descriptor, it parses the Input or Output Header Descriptor of the video stream, and from there the video formats (Format Descriptors) and frame formats (Frame Descriptors).

The implementation is very long, so we'll go through it step by step:

// First, intf is a struct usb_interface; its altsetting array holds the VideoStreaming interface descriptors (struct usb_host_interface)

static int uvc_parse_streaming(struct uvc_device *dev,

struct usb_interface *intf)

{

struct uvc_streaming *streaming = NULL;

struct uvc_format *format;

struct uvc_frame *frame;

// Get the VS interface descriptor (alternate setting 0)

struct usb_host_interface *alts = &intf->altsetting[0];

// and the class-specific VS interface descriptors that belong to it

unsigned char *_buffer, *buffer = alts->extra;

int _buflen, buflen = alts->extralen;

unsigned int nformats = 0, nframes = 0, nintervals = 0;

unsigned int size, i, n, p;

__u32 *interval;

__u16 psize;

int ret = -EINVAL;

// Check that this is a VideoStreaming interface

if (intf->cur_altsetting->desc.bInterfaceSubClass

!= UVC_SC_VIDEOSTREAMING) {

uvc_trace(UVC_TRACE_DESCR, "device %d interface %d isn't a "

"video streaming interface\n", dev->udev->devnum,

intf->altsetting[0].desc.bInterfaceNumber);

return -EINVAL;

}

if (usb_driver_claim_interface(&uvc_driver.driver, intf, dev)) {

uvc_trace(UVC_TRACE_DESCR, "device %d interface %d is already "

"claimed\n", dev->udev->devnum,

intf->altsetting[0].desc.bInterfaceNumber);

return -EINVAL;

}

// struct uvc_streaming is important: it holds the information of this VS interface, including most parameters such as the supported formats and frames

streaming = kzalloc(sizeof *streaming, GFP_KERNEL);

if (streaming == NULL) {

usb_driver_release_interface(&uvc_driver.driver, intf);

return -EINVAL;

}

mutex_init(&streaming->mutex);

streaming->dev = dev;

streaming->intf = usb_get_intf(intf);

// Number of the current interface

streaming->intfnum = intf->cur_altsetting->desc.bInterfaceNumber;

/* The Pico iMage webcam has its class-specific interface descriptors

* after the endpoint descriptors.

*/

if (buflen == 0) {

for (i = 0; i < alts->desc.bNumEndpoints; ++i) {

struct usb_host_endpoint *ep = &alts->endpoint[i];

if (ep->extralen == 0)

continue;

if (ep->extralen > 2 &&

ep->extra[1] == USB_DT_CS_INTERFACE) {

uvc_trace(UVC_TRACE_DESCR, "trying extra data "

"from endpoint %u.\n", i);

buffer = alts->endpoint[i].extra;

buflen = alts->endpoint[i].extralen;

break;

}

}

}

/* Skip the standard interface descriptors. */

while (buflen > 2 && buffer[1] != USB_DT_CS_INTERFACE /* 0x24 */) {

buflen -= buffer[0];

buffer += buffer[0];

}

if (buflen <= 2) {

uvc_trace(UVC_TRACE_DESCR, "no class-specific streaming "

"interface descriptors found.\n");

goto error;

}

/* Parse the header descriptor. */

switch (buffer[2]) {

case UVC_VS_OUTPUT_HEADER:

streaming->type = V4L2_BUF_TYPE_VIDEO_OUTPUT;

size = 9;

break;

// A UVC camera is an input (capture) device

case UVC_VS_INPUT_HEADER:

streaming->type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

// The fixed-size part of INPUT_HEADER is 13 bytes

size = 13;

break;

default:

uvc_trace(UVC_TRACE_DESCR, "device %d videostreaming interface "

"%d HEADER descriptor not found.\n", dev->udev->devnum,

alts->desc.bInterfaceNumber);

goto error;

}

// Number of formats supported by this interface (bNumFormats)

p = buflen >= 4 ? buffer[3] : 0;

// Size of each bmaControls entry ('bControlSize')

n = buflen >= size ? buffer[size-1] : 0;

if (buflen < size + p*n) {

uvc_trace(UVC_TRACE_DESCR, "device %d videostreaming "

"interface %d HEADER descriptor is invalid.\n",

dev->udev->devnum, alts->desc.bInterfaceNumber);

goto error;

}

// Number of formats supported by this interface

streaming->header.bNumFormats = p;

streaming->header.bEndpointAddress = buffer[6];

// Fields taken from the Input Header descriptor

if (buffer[2] == UVC_VS_INPUT_HEADER) {

streaming->header.bmInfo = buffer[7];

streaming->header.bTerminalLink = buffer[8];

streaming->header.bStillCaptureMethod = buffer[9];

streaming->header.bTriggerSupport = buffer[10];

streaming->header.bTriggerUsage = buffer[11];

} else {

streaming->header.bTerminalLink = buffer[7];

}

streaming->header.bControlSize = n;

streaming->header.bmaControls = kmemdup(&buffer[size], p * n,

GFP_KERNEL);

if (streaming->header.bmaControls == NULL) {

ret = -ENOMEM;

goto error;

}

// Skip the Input Header Descriptor; the format and frame descriptors normally follow it

buflen -= buffer[0];

buffer += buffer[0];

// Temporary copies used to count the format and frame descriptors

_buffer = buffer;

_buflen = buflen;

/* Count the format and frame descriptors. */

while (_buflen > 2 && _buffer[1] == USB_DT_CS_INTERFACE) {

switch (_buffer[2]) {

case UVC_VS_FORMAT_UNCOMPRESSED:

case UVC_VS_FORMAT_MJPEG:

case UVC_VS_FORMAT_FRAME_BASED:

nformats++;

break;

case UVC_VS_FORMAT_DV:

/* DV format has no frame descriptor. We will create a

* dummy frame descriptor with a dummy frame interval.

*/

nformats++;

nframes++;

nintervals++;

break;

case UVC_VS_FORMAT_MPEG2TS:

case UVC_VS_FORMAT_STREAM_BASED:

uvc_trace(UVC_TRACE_DESCR, "device %d videostreaming "

"interface %d FORMAT %u is not supported.\n",

dev->udev->devnum,

alts->desc.bInterfaceNumber, _buffer[2]);

break;

case UVC_VS_FRAME_UNCOMPRESSED:

case UVC_VS_FRAME_MJPEG:

nframes++;

if (_buflen > 25)

nintervals += _buffer[25] ? _buffer[25] : 3;

break;

case UVC_VS_FRAME_FRAME_BASED:

nframes++;

if (_buflen > 21)

nintervals += _buffer[21] ? _buffer[21] : 3;

break;

}

_buflen -= _buffer[0];

_buffer += _buffer[0];

}

if (nformats == 0) {

uvc_trace(UVC_TRACE_DESCR, "device %d videostreaming interface "

"%d has no supported formats defined.\n",

dev->udev->devnum, alts->desc.bInterfaceNumber);

goto error;

}

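// The while loop above counted the formats, frames and intervals of this interface; now allocate one block for all of them (formats, then frames, then intervals)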

size = nformats * sizeof *format + nframes * sizeof *frame

+ nintervals * sizeof *interval;

format = kzalloc(size, GFP_KERNEL);

if (format == NULL) {

ret = -ENOMEM;

goto error;

}

frame = (struct uvc_frame *)&format[nformats];

interval = (__u32 *)&frame[nframes];

streaming->format = format;

streaming->nformats = nformats;

/* Parse the format descriptors. */

// Parse every format descriptor of this interface in turn

// buffer now points to the descriptor after the Input Header, i.e. the first Format Descriptor

while (buflen > 2 && buffer[1] == USB_DT_CS_INTERFACE) {

switch (buffer[2]) {

case UVC_VS_FORMAT_UNCOMPRESSED:

case UVC_VS_FORMAT_MJPEG:

case UVC_VS_FORMAT_DV:

case UVC_VS_FORMAT_FRAME_BASED:

format->frame = frame;

// Parse the format descriptor

ret = uvc_parse_format(dev, streaming, format,

&interval, buffer, buflen);

if (ret < 0)

goto error;

frame += format->nframes;

format++;

buflen -= ret;

buffer += ret;

continue;

default:

break;

}

buflen -= buffer[0];

buffer += buffer[0];

}

if (buflen)

uvc_trace(UVC_TRACE_DESCR, "device %d videostreaming interface "

"%d has %u bytes of trailing descriptor garbage.\n",

dev->udev->devnum, alts->desc.bInterfaceNumber, buflen);

/* Parse the alternate settings to find the maximum bandwidth. */

for (i = 0; i < intf->num_altsetting; ++i) {

struct usb_host_endpoint *ep;

alts = &intf->altsetting[i];

ep = uvc_find_endpoint(alts,

streaming->header.bEndpointAddress);

if (ep == NULL)

continue;

psize = le16_to_cpu(ep->desc.wMaxPacketSize);

psize = (psize & 0x07ff) * (1 + ((psize >> 11) & 3));

if (psize > streaming->maxpsize)

streaming->maxpsize = psize;

}

// Finally, add streaming to the dev->streams list

list_add_tail(&streaming->list, &dev->streams);

return 0;

error:

usb_driver_release_interface(&uvc_driver.driver, intf);

usb_put_intf(intf);

kfree(streaming->format);

kfree(streaming->header.bmaControls);

kfree(streaming);

return ret;

}
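Two calculations in uvc_parse_streaming() are easy to check by hand. The VS input header has a 13-byte fixed part followed by bNumFormats × bControlSize bytes of bmaControls, and the endpoint's wMaxPacketSize packs the packet size in bits 10..0 and the number of additional transactions per microframe in bits 12..11. A sketch of both, using hypothetical helper names and the offsets from the code above:

```c
#include <stdint.h>

struct vs_input_header {
    uint8_t num_formats;    /* bNumFormats */
    uint8_t endpoint;       /* bEndpointAddress */
    uint8_t terminal_link;  /* bTerminalLink */
    uint8_t control_size;   /* bControlSize: bytes per bmaControls entry */
};

/* Decode the fixed part of a VS_INPUT_HEADER with the same length check
 * as the driver. Returns 0, or -1 if the descriptor is too short. */
int parse_vs_input_header(const uint8_t *buf, int buflen, struct vs_input_header *h)
{
    const int size = 13;                 /* fixed part of the input header */
    int p = buflen >= 4 ? buf[3] : 0;
    int n = buflen >= size ? buf[size - 1] : 0;

    if (buflen < size || buf[2] != 0x01 /* VS_INPUT_HEADER */ || buflen < size + p * n)
        return -1;
    h->num_formats = (uint8_t)p;
    h->endpoint = buf[6];
    h->terminal_link = buf[8];
    h->control_size = (uint8_t)n;
    return 0;
}

/* Bytes an endpoint can move per (micro)frame, as computed for maxpsize:
 * packet size times (1 + additional transactions). */
unsigned int max_payload(uint16_t wMaxPacketSize)
{
    return (wMaxPacketSize & 0x07ffu) * (1u + ((wMaxPacketSize >> 11) & 3u));
}
```

For example, wMaxPacketSize = 0x1400 decodes to 1024 bytes × 3 transactions = 3072 bytes. The driver keeps the largest such value across all alternate settings as streaming->maxpsize.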

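Finally, let's look at uvc_parse_format(). It parses a Format Descriptor and then the Frame Descriptors that belong to it, records them in the corresponding uvc_format and uvc_frame structures, and leaves them under streaming for later use.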
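For uncompressed and frame-based formats, uvc_parse_format() identifies the pixel format by the 16-byte GUID at offset 5, via uvc_format_by_guid(). The YUY2 and NV12 GUIDs used in this sketch start with the FourCC bytes and share a common 12-byte tail, so the lookup is a table scan with memcmp(). It is a two-entry sketch with hypothetical names; the driver's own table is larger.

```c
#include <stdint.h>
#include <string.h>

#define FOURCC(a, b, c, d) \
    ((uint32_t)(a) | ((uint32_t)(b) << 8) | ((uint32_t)(c) << 16) | ((uint32_t)(d) << 24))

/* Trailing 12 bytes shared by the two GUIDs below
 * ({xxxxxxxx-0000-0010-8000-00AA00389B71} in byte order). */
#define GUID_TAIL 0x00, 0x00, 0x10, 0x00, 0x80, 0x00, 0x00, 0xaa, 0x00, 0x38, 0x9b, 0x71

struct format_desc {
    const char *name;
    uint8_t guid[16];
    uint32_t fcc;   /* V4L2-style FourCC */
};

static const struct format_desc formats[] = {
    { "YUV 4:2:2 (YUYV)", { 'Y', 'U', 'Y', '2', GUID_TAIL }, FOURCC('Y', 'U', 'Y', 'V') },
    { "YUV 4:2:0 (NV12)", { 'N', 'V', '1', '2', GUID_TAIL }, FOURCC('N', 'V', '1', '2') },
};

/* Return the entry whose GUID equals the 16 bytes at guid, or NULL
 * (the driver then names the format after the raw GUID and sets fcc = 0). */
const struct format_desc *format_by_guid(const uint8_t guid[16])
{
    for (size_t i = 0; i < sizeof(formats) / sizeof(formats[0]); i++)
        if (memcmp(formats[i].guid, guid, 16) == 0)
            return &formats[i];
    return NULL;
}
```

Note that the GUID tag ("YUY2") and the V4L2 FourCC ("YUYV") differ, which is why a table is needed rather than copying the first four bytes.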

/* ------------------------------------------------------------------------

* Descriptors parsing

*/

static int uvc_parse_format(struct uvc_device *dev,

struct uvc_streaming *streaming, struct uvc_format *format,

__u32 **intervals, unsigned char *buffer, int buflen)

{

struct usb_interface *intf = streaming->intf;

struct usb_host_interface *alts = intf->cur_altsetting;

struct uvc_format_desc *fmtdesc;

struct uvc_frame *frame;

const unsigned char *start = buffer;

unsigned int width_multiplier = 1;

unsigned int interval;

unsigned int i, n;

__u8 ftype;

// buffer must point to a Format Descriptor; each format type is defined in its own UVC payload specification document

format->type = buffer[2];

format->index = buffer[3];

// Match the format type and extract its fields; this part is straightforward

switch (buffer[2]) {

case UVC_VS_FORMAT_UNCOMPRESSED:

case UVC_VS_FORMAT_FRAME_BASED:

n = buffer[2] == UVC_VS_FORMAT_UNCOMPRESSED ? 27 : 28;

if (buflen < n) {

uvc_trace(UVC_TRACE_DESCR, "device %d videostreaming "

"interface %d FORMAT error\n",

dev->udev->devnum,

alts->desc.bInterfaceNumber);

return -EINVAL;

}

/* Find the format descriptor from its GUID. */

fmtdesc = uvc_format_by_guid(&buffer[5]);

if (fmtdesc != NULL) {

strlcpy(format->name, fmtdesc->name,

sizeof format->name);

format->fcc = fmtdesc->fcc;

} else {

uvc_printk(KERN_INFO, "Unknown video format %pUl\n",

&buffer[5]);

snprintf(format->name, sizeof(format->name), "%pUl\n",

&buffer[5]);

format->fcc = 0;

}

format->bpp = buffer[21];

/* Some devices report a format that doesn't match what they

* really send.

*/

if (dev->quirks & UVC_QUIRK_FORCE_Y8) {

if (format->fcc == V4L2_PIX_FMT_YUYV) {

strlcpy(format->name, "Greyscale 8-bit (Y8 )",

sizeof(format->name));

format->fcc = V4L2_PIX_FMT_GREY;

format->bpp = 8;

width_multiplier = 2;

}

}

if (buffer[2] == UVC_VS_FORMAT_UNCOMPRESSED) {

ftype = UVC_VS_FRAME_UNCOMPRESSED;

} else {

ftype = UVC_VS_FRAME_FRAME_BASED;

if (buffer[27])

format->flags = UVC_FMT_FLAG_COMPRESSED;

}

break;

case UVC_VS_FORMAT_MJPEG:

if (buflen < 11) {

uvc_trace(UVC_TRACE_DESCR, "device %d videostreaming "

"interface %d FORMAT error\n",

dev->udev->devnum,

alts->desc.bInterfaceNumber);

return -EINVAL;

}

strlcpy(format->name, "MJPEG", sizeof format->name);

format->fcc = V4L2_PIX_FMT_MJPEG;

format->flags = UVC_FMT_FLAG_COMPRESSED;

format->bpp = 0;

ftype = UVC_VS_FRAME_MJPEG;

break;

case UVC_VS_FORMAT_DV:

...

break;

case UVC_VS_FORMAT_MPEG2TS:

case UVC_VS_FORMAT_STREAM_BASED:

/* Not supported yet. */

default:

uvc_trace(UVC_TRACE_DESCR, "device %d videostreaming "

"interface %d unsupported format %u\n",

dev->udev->devnum, alts->desc.bInterfaceNumber,

buffer[2]);

return -EINVAL;

}

uvc_trace(UVC_TRACE_DESCR, "Found format %s.\n", format->name);

// Move to the next descriptor

buflen -= buffer[0];

buffer += buffer[0];

/* Parse the frame descriptors. Only uncompressed, MJPEG and frame

* based formats have frame descriptors.

*/

// Parse all frame descriptors that belong to this format

while (buflen > 2 && buffer[1] == USB_DT_CS_INTERFACE &&

buffer[2] == ftype) {

frame = &format->frame[format->nframes];

...

...

...

// The frames of a format are, in practice, the resolutions it supports

format->nframes++;

buflen -= buffer[0];

buffer += buffer[0];

}

...

...

...

return buffer - start;

}
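The frame loop is elided above, but the interval counting in uvc_parse_streaming() ("buffer[25] ? buffer[25] : 3") hints at the layout of an uncompressed/MJPEG frame descriptor: wWidth at offset 5, wHeight at 7, dwDefaultFrameInterval at 21, bFrameIntervalType at 25, then the intervals. A bFrameIntervalType of 0 means a continuous range stored as three values (min, max, step). This sketch follows that layout as given in the UVC specification, not the omitted kernel code; the names and the 8-entry cap are assumptions. Intervals are in 100 ns units, so 333333 corresponds to 30 fps.

```c
#include <stdint.h>

static uint16_t le16(const uint8_t *p) { return (uint16_t)(p[0] | (p[1] << 8)); }
static uint32_t le32(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

struct frame_info {
    uint16_t width, height;
    uint32_t default_interval;   /* 100 ns units */
    unsigned int ninterval;      /* entries stored in interval[] */
    uint32_t interval[8];        /* small fixed cap for this sketch */
};

/* Parse an uncompressed/MJPEG frame descriptor. Returns 0, or -1 if the
 * buffer is too short or has more intervals than this sketch stores. */
int parse_frame(const uint8_t *buf, int buflen, struct frame_info *f)
{
    if (buflen < 26)
        return -1;
    unsigned int n = buf[25] ? buf[25] : 3;   /* discrete count, or min/max/step */
    if (n > 8 || buflen < 26 + 4 * (int)n)
        return -1;
    f->width = le16(&buf[5]);
    f->height = le16(&buf[7]);
    f->default_interval = le32(&buf[21]);
    f->ninterval = n;
    for (unsigned int i = 0; i < n; i++)
        f->interval[i] = le32(&buf[26 + 4 * i]);
    return 0;
}

/* Integer frames per second for an interval in 100 ns units. */
unsigned int interval_to_fps(uint32_t interval)
{
    return interval ? 10000000u / interval : 0;
}
```

A 640x480 frame descriptor listing the two discrete intervals 333333 and 666666 thus advertises 30 fps and 15 fps.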

After parsing, all the video streaming information is recorded in struct uvc_device:

// Finally, add streaming to the dev->streams list

list_add_tail(&streaming->list, &dev->streams);

struct uvc_device {

struct usb_device *udev;

struct usb_interface *intf;

unsigned long warnings;

__u32 quirks;

int intfnum;

char name[32];

...

...

...

/* Video Streaming interfaces */

struct list_head streams; // all the stream information parsed above

atomic_t nstreams;

...

...

};

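That completes step 【3】. Now for step 【4】:

V4L2 device registration

Simple, not much to say: it initializes the v4l2_dev->subdevs list of sub-device instances, takes a reference on the parent device, sets the name, and sets dev->driver_data if it is still empty.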

int v4l2_device_register(struct device *dev, struct v4l2_device *v4l2_dev)

{

INIT_LIST_HEAD(&v4l2_dev->subdevs); // manages the subdev instances under this v4l2_device

spin_lock_init(&v4l2_dev->lock);

v4l2_prio_init(&v4l2_dev->prio);

kref_init(&v4l2_dev->ref);

get_device(dev);

v4l2_dev->dev = dev;

if (!v4l2_dev->name[0])

snprintf(v4l2_dev->name, sizeof(v4l2_dev->name), "%s %s",

dev->driver->name, dev_name(dev));

if (!dev_get_drvdata(dev)) // if dev->driver_data is NULL,

dev_set_drvdata(dev, v4l2_dev); // point it at v4l2_dev

return 0;

}

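UVC control initialization

Now step 【5】, the call to uvc_ctrl_init_device().

uvc_ctrl_init_device() initializes the controls: it walks the dev->entities list of UVC entities and reads each entity's control bitmap (bmControls) and the bitmap size in bytes (bControlSize).

For each supported control it then calls uvc_ctrl_init_ctrl() to initialize controls such as backlight compensation, white balance temperature, and so on.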

int uvc_ctrl_init_device(struct uvc_device *dev)

{

/* ... (omitted) ... */

list_for_each_entry(entity, &dev->entities, list) {

bmControls = entity->extension.bmControls; // control bitmap

bControlSize = entity->extension.bControlSize; // bitmap size in bytes

entity->controls = kcalloc(ncontrols, sizeof(*ctrl),

GFP_KERNEL); // allocate ncontrols struct uvc_control entries

if (entity->controls == NULL)

return -ENOMEM;

entity->ncontrols = ncontrols; // number of UVC controls

/* Initialize all supported controls */

ctrl = entity->controls; // point at the control array

for (i = 0; i < bControlSize * 8; ++i) {

if (uvc_test_bit(bmControls, i) == 0) // skip controls whose bit is 0

continue;

ctrl->entity = entity;

ctrl->index = i; // bit index of this control

uvc_ctrl_init_ctrl(dev, ctrl); // initialize the UVC control

ctrl++; // move to the next entry of the control array

}

}

return 0;

}
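The bitmap walk can be checked in isolation: bit i of bmControls lives in byte i/8, least-significant bit first, which is what uvc_test_bit() tests. The sketch below lists the indices of the supported controls, i.e. the values that end up in ctrl->index; the function names are hypothetical.

```c
#include <stdint.h>

/* Same bit order as uvc_test_bit(): byte bit/8, LSB first. */
static int test_bit(const uint8_t *data, unsigned int bit)
{
    return (data[bit >> 3] >> (bit & 7)) & 1;
}

/* Store the indices of all set bits into out[] (capacity max) and return
 * how many bits are set, i.e. how many controls the entity implements. */
unsigned int list_controls(const uint8_t *bmControls, unsigned int bControlSize,
                           unsigned int *out, unsigned int max)
{
    unsigned int n = 0;

    for (unsigned int i = 0; i < bControlSize * 8; ++i) {
        if (!test_bit(bmControls, i))
            continue;             /* control not implemented by this entity */
        if (n < max)
            out[n] = i;           /* would become ctrl->index in the driver */
        n++;
    }
    return n;
}
```

With bmControls = { 0x03, 0x01 } and bControlSize = 2, bits 0, 1 and 8 are set, so three uvc_control entries would be needed, which is consistent with ncontrols being the number of set bits.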

UVC video driver registration

Next, step 【6】, the call to uvc_register_chains():

Call hierarchy:

uvc_register_chains

uvc_register_terms(dev, chain)

uvc_stream_by_id

uvc_register_video

uvc_mc_register_entities(chain)

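uvc_stream_by_id() finds the stream by matching the id passed in against header.bTerminalLink of each entry in the dev->streams list.

That is not the main point; the main point is uvc_register_video(), which registers the stream once it has been found: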

static int uvc_register_video(struct uvc_device *dev,

struct uvc_streaming *stream)

{

/* ... (omitted) ... */

struct video_device *vdev = &stream->vdev;

ret = uvc_queue_init(&stream->queue, stream->type, !uvc_no_drop_param); // initialize the buffer queue

ret = uvc_video_init(stream); // initialize the video stream

uvc_debugfs_init_stream(stream);

vdev->v4l2_dev = &dev->vdev;

vdev->fops = &uvc_fops; // V4L2 file operations

vdev->ioctl_ops = &uvc_ioctl_ops; // the actual ioctl operations

vdev->release = uvc_release; // release callback

vdev->prio = &stream->chain->prio;

strlcpy(vdev->name, dev->name, sizeof vdev->name);

video_set_drvdata(vdev, stream); // store the UVC stream as the video device's driver data

ret = video_register_device(vdev, VFL_TYPE_GRABBER, -1); // register the video device

return 0;

}

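At this point the video device is actually registered and the user-space operation handlers are in place; a node /dev/videoX is created under /dev.

The operation functions and the registration flow in detail are analyzed in the next chapter.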

UVC status initialization

【7】 the call to uvc_status_init()

int uvc_status_init(struct uvc_device *dev)

{

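/* ... (omitted) ... */

struct usb_host_endpoint *ep = dev->int_ep; // the interrupt endpoint found in uvc_parse_control()

uvc_input_init(dev); // initialize the UVC input device (registers an input device)

dev->status = kzalloc(UVC_MAX_STATUS_SIZE, GFP_KERNEL); // allocate the status buffer for the URB

dev->int_urb = usb_alloc_urb(0, GFP_KERNEL); // allocate the URB

pipe = usb_rcvintpipe(dev->udev, ep->desc.bEndpointAddress); // pipe for the interrupt IN endpoint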

usb_fill_int_urb(dev->int_urb, dev->udev, pipe,

dev->status, UVC_MAX_STATUS_SIZE, uvc_status_complete,

dev, interval); // fill in the interrupt URB

return 0;

}

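The rest is URB plumbing; a general understanding is enough here. Note that the URB is only filled in at this point; it is submitted later, by uvc_status_start().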
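uvc_status_complete(), the completion handler passed to usb_fill_int_urb(), receives status packets from the interrupt endpoint. Per the UVC specification, the low nibble of byte 0 is the status type (1 = VideoControl, 2 = VideoStreaming) and byte 1 is the originator. A VideoStreaming event 0 carries a button state, which the driver reports through the input device registered by uvc_input_init(). This is a decoding sketch with hypothetical names, not the driver's code.

```c
#include <stddef.h>
#include <stdint.h>

enum status_kind { STATUS_UNKNOWN, STATUS_CONTROL, STATUS_BUTTON };

struct status_event {
    enum status_kind kind;
    uint8_t originator;   /* bOriginator: entity or interface that raised it */
    uint8_t selector;     /* control selector (control events only) */
    uint8_t attribute;    /* 0 value, 1 info, 2 failure change (control only) */
    int pressed;          /* button state (button events only) */
};

/* Classify one status packet as received by the interrupt URB. */
struct status_event decode_status(const uint8_t *data, size_t len)
{
    struct status_event ev = { STATUS_UNKNOWN, 0, 0, 0, 0 };

    if (len < 2)
        return ev;
    ev.originator = data[1];
    switch (data[0] & 0x0f) {
    case 1: /* VideoControl: bEvent, bSelector, bAttribute, bValue... */
        if (len >= 5 && data[2] == 0x00) {
            ev.kind = STATUS_CONTROL;
            ev.selector = data[3];
            ev.attribute = data[4];
        }
        break;
    case 2: /* VideoStreaming: bEvent 0 = button press, then bValue */
        if (len >= 4 && data[2] == 0x00) {
            ev.kind = STATUS_BUTTON;
            ev.pressed = data[3] != 0;
        }
        break;
    }
    return ev;
}
```

Packets that are too short or carry an unexpected type are left as STATUS_UNKNOWN rather than guessed at.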