RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control

RT-2: 用互联网知识训练的视觉语言模型融入到机器人控制中

RT1 论文翻译:

https://blog.csdn.net/weixin_43334869/article/details/135850410

文章目录

- RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control

- RT-2: 用互联网知识训练的视觉语言模型融入到机器人控制中

-

- 1.Introduction

- 1.介绍

- 2.Related Work

- 2.相关工作

- 3.Vision-Language-Action Models

- 3.视觉语言动作模型

-

- 3.1. Pre-Trained Vision-Language Models

- 3.1. 预训练的视觉语言模型

- 3.2. Robot-Action Fine-tuning

- 3.2. 机器人动作微调

- 3.3. Real-Time Inference

- 3.3. 实时推理

- 3.1. Pre-Trained Vision-Language Models

- 4. Experiments

- 4. 实验

-

- 4.1. How does RT-2 perform on seen tasks and more importantly, generalize over new objects, backgrounds, and environments?

- 4.1. RT-2在已见任务上的表现如何,更重要的是,它在新的对象、背景和环境中如何泛化?

- 4.2. Can we observe and measure any emergent capabilities of RT-2?

- 4.2. 我们能观察和衡量RT-2的任何新能力吗?

- 4.3. How does the generalization vary with parameter count and other design decisions?

- 4.3. 泛化如何随参数数量和其他设计决策而变化?

- 4.4. Can RT-2 exhibit signs of chain-of-thought reasoning similarly to vision-language models?

- 4.4. RT-2是否能展现类似于视觉语言模型的思维链推理?

- 4.1. How does RT-2 perform on seen tasks and more importantly, generalize over new objects, backgrounds, and environments?

- 5. Limitations

- 5.限制

- 6. Conclusions

- 6.结论

- Acknowledgments

- 致谢

- A. Contributions

- A. 贡献

- B. Datasets

- B. 数据集

- C. Baselines

- C. 基线

- D. VLMs for RT-2

- D. 用于RT-2的VLMs

- E. Training Details

- E. 训练细节

- F. Evaluation Details

- F. 评估细节

-

- F.1. Evaluation Scenarios

- F.1. 评估场景

- F.2. Evaluation Instructions

- F.2. 评估指令

- F.1. Evaluation Scenarios

- G. Example Failure Cases

- G. 示例失败案例

- H.Quantitative Experimental Results

- H.定量实验结果

-

- H.1. Overall Performance, for Section 4.1

- H.1.整体性能,见第4.1节

- H.2. Emergent Evaluation, for Section 4.2

- H.2.新能力评估,见第4.2节

- H.1. Overall Performance, for Section 4.1

- Additional Chain-Of-Thought Reasoning Results

- 额外的链式思考推理结果

- 1.Introduction

We study how vision-language models trained on Internet-scale data can be incorporated directly into end-to-end robotic control to boost generalization and enable emergent semantic reasoning. Our goal is to enable a single end-to-end trained model to both learn to map robot observations to actions and enjoy the benefits of large-scale pretraining on language and vision-language data from the web. To this end, we propose to co-fine-tune state-of-the-art vision-language models on both robotic trajectory data and Internet-scale vision-language tasks, such as visual question answering. In contrast to other approaches, we propose a simple, general recipe to achieve this goal: in order to fit both natural language responses and robotic actions into the same format, we express the actions as text tokens and incorporate them directly into the training set of the model in the same way as natural language tokens. We refer to such category of models as vision-language-action models (VLA) and instantiate an example of such a model, which we call RT-2. Our extensive evaluation (6k evaluation trials) shows that our approach leads to performant robotic policies and enables RT-2 to obtain a range of emergent capabilities from Internet-scale training. This includes significantly improved generalization to novel objects, the ability to interpret commands not present in the robot training data (such as placing an object onto a particular number or icon), and the ability to perform rudimentary reasoning in response to user commands (such as picking up the smallest or largest object, or the one closest to another object). We further show that incorporating chain of thought reasoning allows RT-2 to perform multi-stage semantic reasoning, for example figuring out which object to pick up for use as an improvised hammer (a rock), or which type of drink is best suited for someone who is tired (an energy drink).

我们研究了如何将在互联网规模数据上训练的视觉语言模型直接融入端到端的机器人控制,以提升泛化能力并实现新兴的语义推理。我们的目标是使单一的端到端训练模型既能学会将机器人观察映射到动作,又能享受来自Web的语言和视觉语言数据的大规模预训练的益处。为此,我们提出在机器人轨迹数据和互联网规模的视觉语言任务(如视觉问答)上共同微调最先进的视觉语言模型。与其他方法不同,我们提出了一个简单而通用的方法来实现这一目标:为了将自然语言响应和机器人动作适应于相同的格式,我们将动作表达为文本标记(tokens),并将其直接纳入模型的训练集中,与自然语言标记相同。我们将这类模型称为视觉语言行动模型(VLA),并实例化了一个这样的模型,我们称之为RT-2。我们进行了广泛的评估(6,000次评估试验),结果表明我们的方法可以带来高性能的机器人策略,并使RT-2能够从互联网规模的训练中获得一系列新的能力。这包括对新物体的显着改进的泛化能力,解释机器人训练数据中不存在的命令(例如将物体放在特定的数字或图标上),以及对用户命令做出初步推理的能力(例如拾取最小或最大的物体,或离其他物体最近的物体)。我们进一步展示,纳入思维链(chain of thought)推理使RT-2能够进行多阶段语义推理,例如找出哪个物体可以用作临时的锤子(一块岩石),或者对于疲劳的人来说哪种类型的饮料最适合(能量饮料)。

1.Introduction

1.介绍

High-capacity models pretrained on broad web-scale datasets provide an effective and powerful platform for a wide range of downstream tasks: large language models can enable not only fluent text generation (Anil et al., 2023; Brohan et al., 2022; OpenAI, 2023) but emergent problem-solving (Cobbe et al., 2021; Lewkowycz et al., 2022; Polu et al., 2022) and creative generation of prose (Brown et al., 2020; OpenAI, 2023) and code (Chen et al., 2021), while vision-language models enable open-vocabulary visual recognition (Kirillov et al., 2023; Minderer et al., 2022; Radford et al., 2021) and can even make complex inferences about object-agent interactions in images (Alayrac et al., 2022; Chen et al., 2023a,b; Driess et al., 2023; Hao et al., 2022; Huang et al., 2023; Wang et al., 2022). Such semantic reasoning, problem solving, and visual interpretation capabilities would be tremendously useful for generalist robots that must perform a variety of tasks in real-world environments. However, it is unclear how robots should acquire such capabilities. While a brute force approach might entail collecting millions of robotic interaction trials, the most capable language and vision-language models are trained on billions of tokens and images from the web (Alayrac et al., 2022; Chen et al., 2023a,b; Huang et al., 2023) – an amount unlikely to be matched with robot data in the near future. On the other hand, directly applying such models to robotic tasks is also difficult: such models reason about semantics, labels, and textual prompts, whereas robots require grounded low-level actions, such as Cartesian end-effector commands. While a number of recent works have sought to incorporate language models (LLMs) and vision-language models (VLMs) into robotics (Ahn et al., 2022; Driess et al., 2023; Vemprala et al., 2023), such methods generally address only the “higher level” aspects of robotic planning, essentially taking the role of a state machine that interprets commands and parses them into individual primitives (such as picking and placing objects), which are then executed by separate low-level controllers that themselves do not benefit from the rich semantic knowledge of Internet-scale models during training. Therefore, in this paper we ask: can large pretrained visionlanguage models be integrated directly into low-level robotic control to boost generalization and enable emergent semantic reasoning?

在广泛的Web规模数据集上预训练的高容量模型为各种下游任务提供了有效且强大的平台:大型语言模型不仅可以实现流畅的文本生成(Anil等,2023;Brohan等,2022;OpenAI,2023),还能实现新兴的问题解决(Cobbe等,2021;Lewkowycz等,2022;Polu等,2022)以及散文(Brown等,2020;OpenAI,2023)和代码(Chen等,2021)的创造性生成,而视觉语言模型则实现了开放词汇的视觉识别(Kirillov等,2023;Minderer等,2022;Radford等,2021),甚至可以对图像中的物体-代理交互进行复杂推理(Alayrac等,2022;Chen等,2023a,b;Driess等,2023;Hao等,2022;Huang等,2023;Wang等,2022)。这种语义推理、问题解决和视觉解释的能力对于必须在真实环境中执行各种任务的通用型机器人将是非常有用的。然而,尚不清楚机器人应该如何获得这些能力。虽然蛮力方法可能包括收集数百万次机器人交互试验,但最有能力的语言和视觉语言模型是在来自Web的数十亿个标记和图像上训练的(Alayrac等,2022;Chen等,2023a,b;Huang等,2023) - 在可预见的未来内,机器人数据可能无法匹配这一数量。另一方面,直接将这些模型应用于机器人任务也很困难:此类模型对语义、标签和文本提示进行推理,而机器人需要基于实际低级动作,如笛卡尔末端执行器命令。虽然最近的一些工作尝试将语言模型(LLMs)和视觉语言模型(VLMs)纳入机器人(Ahn等,2022;Driess等,2023;Vemprala等,2023),但这些方法通常只涉及机器人规划的“更高层”方面,基本上扮演状态机的角色,解释命令并将其解析为单个基元(例如拾取和放置物体),然后由单独的低级控制器执行,这些低级控制器本身在训练期间并不受益于互联网规模模型的丰富语义知识。因此,在本文中,我们提出一个问题:能否将大型预训练的视觉语言模型直接整合到低级机器人控制中,以提升泛化能力并实现新兴的语义推理?

To this end, we explore an approach that is both simple and surprisingly effective: we directly train vision-language models designed for open-vocabulary visual question answering and visual dialogue to output low-level robot actions, along with solving other Internet-scale vision-language tasks. Although such models are typically trained to produce natural language tokens, we can train them on robotic trajectories by tokenizing the actions into text tokens and creating “multimodal sentences” (Driess et al., 2023) that “respond” to robotic instructions paired with camera observations by producing corresponding actions. In this way, vision-language models can be directly trained to act as instruction following robotic policies. This simple approach is in contrast with prior alternatives for incorporating VLMs into robot policies (Shridhar et al., 2022a) or designing new vision-languageaction architectures from scratch (Reed et al., 2022): instead, pre-existing vision-language models, with already-amortized significant compute investment, are trained without any new parameters to output text-encoded actions. We refer to this category of models as vision-language-action (VLA) models. We instantiate VLA models by building on the protocol proposed for RT-1 (Brohan et al., 2022), using a similar dataset, but expanding the model to use a large vision-language backbone. Hence we refer to our model as RT-2 (Robotics Transformer 2). We provide an overview in Figure 1.

为此,我们探索了一种既简单又出奇地有效的方法:我们直接训练设计用于开放词汇的视觉问答和视觉对话的视觉语言模型,使其输出低级机器人动作,同时解决其他互联网规模的视觉语言任务。尽管这些模型通常是为生成自然语言标记而训练的,但我们可以通过将动作标记成文本标记并创建“多模态句子”(Driess等,2023)来对机器人轨迹进行训练,这些句子通过生成相应的动作来“响应”与相机观察配对的机器人指令。通过这种方式,视觉语言模型可以直接训练成为遵循指令的机器人策略。这种简单的方法与以前将VLMs纳入机器人策略(Shridhar等,2022a)或从头开始设计新的视觉语言动作架构(Reed等,2022)的替代方法形成鲜明对比。相反,我们使用已经进行了大量计算投资的现有视觉语言模型进行训练,而无需引入任何新的参数,以输出文本编码的动作。我们将这类模型称为视觉语言动作(VLA)模型。我们通过在RT-1提出的协议基础上构建VLA模型,使用类似的数据集,扩展模型以使用大型视觉语言骨干,因此我们将我们的模型称为RT-2(Robotics Transformer 2)。我们在图1中提供了一个概述。

Figure 1 | RT-2 overview: we represent robot actions as another language, which can be cast into text tokens and trained together with Internet-scale vision-language datasets. During inference, the text tokens are de-tokenized into robot actions, enabling closed loop control. This allows us to leverage the backbone and pretraining of vision-language models in learning robotic policies, transferring some of their generalization, semantic understanding, and reasoning to robotic control. We demonstrate examples of RT-2 execution on the project website: robotics-transformer2.github.io.

图1 | RT-2概述:我们将机器人动作表示为另一种语言,可以转换为文本标记并与互联网规模的视觉语言数据集一起训练。在推理过程中,文本标记被解标记为机器人动作,实现闭环控制。这使我们能够在学习机器人策略时利用视觉语言模型的骨干结构和预训练,将它们的泛化、语义理解和推理部分转移到机器人控制。我们在项目网站上展示了RT-2执行的示例:robotics-transformer2.github.io。

We observe that robotic policies derived from such vision-language models exhibit a range of remarkable capabilities, combining the physical motions learned from the robot data with the ability to interpret images and text learned from web data into a single model. Besides the expected benefit of dramatically improving generalization to novel objects and semantically varied instructions, we observe a number of emergent capabilities. While the model’s physical skills are still limited to the distribution of skills seen in the robot data, the model acquires the ability to deploy those skills in new ways by interpreting images and language commands using knowledge gleaned from the web. Some example highlights are shown in Figure 2. The model is able to re-purpose pick and place skills learned from robot data to place objects near semantically indicated locations, such as specific numbers or icons, despite those cues not being present in the robot data. The model can also interpret relations between objects to determine which object to pick and where to place it, despite no such relations being provided in the robot demonstrations. Furthermore, if we augment the command with chain of thought prompting, the model is able to make even more complex semantic inferences, such as figuring out which object to pick up for use as an improvised hammer (a rock), or which type of drink is best suited for someone who is tired (an energy drink).

我们观察到,从这些视觉语言模型派生的机器人策略展示了一系列引人注目的能力,将从机器人数据学到的物理运动与从Web数据中学到的图像和文本解释能力结合成一个单一的模型。除了显著提高对新颖对象和语义多样指令的泛化能力的预期益处之外,我们还观察到一些新兴的能力。虽然模型的物理技能仍然受限于机器人数据中看到的技能分布,但通过使用从Web获取的知识解释图像和语言命令,模型获得了以新方式运用这些技能的能力。图2中展示了一些示例亮点。该模型能够重新利用从机器人数据中学到的拾取和放置技能,将物体放置在语义指示的位置附近,例如特定的数字或图标,尽管在机器人数据中不存在这些提示。模型还可以解释物体之间的关系,确定应该拾取哪个物体以及放置在哪里,尽管机器人演示中未提供这些关系。此外,如果我们用思维链提示来增强命令,模型能够进行更复杂的语义推理,例如找出哪个物体可以用作临时的锤子(一块岩石),或者哪种类型的饮料最适合疲劳的人(能量饮料)。

Figure 2 | RT-2 is able to generalize to a variety of real-world situations that require reasoning, symbol understanding, and human recognition. We study these challenging scenarios in detail in Section 4.

图2 | RT-2 能够推广到需要推理、符号理解和人类识别的各种现实场景。我们将在第4节详细研究这些具有挑战性的情景。

Our main contribution is RT-2, a family of models derived from fine-tuning large vision-language models trained on web-scale data to directly act as generalizable and semantically aware robotic policies. Our experiments investigate models with up to 55B parameters trained on Internet data and instruction-annotated robotic trajectories from previous work (Brohan et al., 2022). Over the course of 6k robotic evaluations, we show that RT-2 enable significant improvements to generalization over objects, scenes, and instructions, and exhibit a breadth of emergent capabilities inherited from web-scale vision-language pretraining.

我们的主要贡献是RT-2,这是一系列从在Web规模数据上训练的大型视觉语言模型微调而来的模型,直接作为通用且语义感知的机器人策略。我们的实验涉及在Internet数据和先前工作中(Brohan等,2022)的指令注释机器人轨迹上训练的具有最多55B参数的模型。在6,000次机器人评估的过程中,我们展示了RT-2在对象、场景和指令的泛化方面取得了显著的改进,并展示了从Web规模视觉语言预训练中继承的新兴能力的广度。

2.Related Work

2.相关工作

Vision-language models. There are several categories of Vision-Language Models (VLMs) (Gan et al., 2022), with perhaps two most relevant: (1) representation-learning models, e.g. CLIP (Radford et al., 2021), which learn common embeddings for both modalities, and (2) visual language models of the form {vision, text} → {text} which learn to take vision and language as input and provide free-form text. Both categories have been used to provide pretraining for a wide variety of applied to downstream applications such as object classification (Radford et al., 2021), detection (Gu et al., 2021), and segmentation (Ghiasi et al., 2021). In this work, we focus on the latter category (Alayrac et al., 2022; Chen et al., 2023a,b; Driess et al., 2023; Hao et al., 2022; Li et al., 2023, 2019; Lu et al., 2019). These models are generally trained on many different tasks, such as image captioning, vision-question answering (VQA), and general language tasks on multiple datasets at the same time. While prior works study VLMs for a wide range of problems and settings including in robotics, our focus is on how the capabilities of VLMs can be extended to robotics closed-loop control by endowing them with the ability to predict robot actions, thus leveraging the knowledge already present in VLMs to enable new levels of generalization.

视觉语言模型。

有几类视觉语言模型(VLMs)(Gan等,2022),其中可能最相关的是两类:(1) 表征学习模型,例如 CLIP(Radford等,2021),它学习两种模态的共同嵌入,以及(2) 形式为 {视觉,文本} → {文本} 的视觉语言模型,它们学习将视觉和语言作为输入,输出为自由格式文本的模型。这两类模型已被用于为各种下游应用提供预训练,如对象分类(Radford等,2021),检测(Gu等,2021)和分割(Ghiasi等,2021)。在这项工作中,我们关注后一类模型(Alayrac等,2022;Chen等,2023a,b;Driess等,2023;Hao等,2022;Li等,2023, 2019;Lu等,2019)。这些模型通常在多个数据集上同时进行训练,涉及图像字幕、视觉问答(VQA)和多个通用语言任务。尽管以前的研究,研究了VLMs在广泛的问题和设置中的应用,包括在机器人学中,我们的焦点是如何通过赋予它们预测机器人动作的能力,将VLMs的能力扩展到机器人闭环控制,从而利用VLMs中已有的知识以实现新的泛化水平。

Generalization in robot learning. Developing robotic controllers that can broadly succeed in a variety of scenarios is a long-standing goal in robotics research (Kaelbling, 2020; Smith and Coles, 1973). A promising approach for enabling generalization in robotic manipulation is by learning from large and diverse datasets (Dasari et al., 2019; Levine et al., 2018; Pinto and Gupta, 2016). By doing so, prior methods have demonstrated how robots can generalize to novel object instances (Finn and Levine, 2017; Levine et al., 2018; Mahler et al., 2017; Pinto and Gupta, 2016; Young et al., 2021), to tasks involving novel combinations of objects and skills (Dasari and Gupta, 2021; Finn et al., 2017; James et al., 2018; Jang et al., 2021; Yu et al., 2018), to new goals or language instructions (Jang et al., 2021; Jiang et al., 2022; Liu et al., 2022; Mees et al., 2022; Nair et al., 2022a; Pong et al.,2019), to tasks with novel semantic object categories (Shridhar et al., 2021; Stone et al., 2023), and to unseen environments (Cui et al., 2022; Du et al., 2023a; Hansen et al., 2020). Unlike most of these prior works, we aim to develop and study a single model that can generalize to unseen conditions along all of these axes. A key ingredient of our approach is to leverage pre-trained models that have been exposed to data that is much broader than the data seen by the robot.

机器人学习中的泛化。

开发能够在各种场景中取得广泛成功的机器人控制器是机器人研究中的长期目标(Kaelbling, 2020;Smith and Coles, 1973)。在机器人操纵中实现泛化的一个有前途的方法是通过从大规模且多样化的数据集中学习(Dasari等,2019;Levine等,2018;Pinto和Gupta,2016)。通过这样做,先前的方法已经展示了机器人如何对新颖的对象实例(Finn和Levine,2017;Levine等,2018;Mahler等,2017;Pinto和Gupta,2016;Young等,2021)、涉及新颖对象和技能组合的任务(Dasari和Gupta,2021;Finn等,2017;James等,2018;Jang等,2021;Yu等,2018)、新目标或语言指令(Jang等,2021;Jiang等,2022;Liu等,2022;Mees等,2022;Nair等,2022a;Pong等,2019)、具有新的语义对象类别的任务(Shridhar等,2021;Stone等,2023)以及未见过的环境(Cui等,2022;Du等,2023a;Hansen等,2020)进行泛化。与大多数这些先前的工作不同,我们的目标是开发和研究一个能够在所有这些轴上,对未见条件进行泛化的单一模型。我们方法的一个关键要素是利用经过预训练的模型,这些模型相比机器人看到的数据,可以接触到更广泛的数据。

Pre-training for robotic manipulation. Pre-training has a long history in robotic learning. Most works focus on pre-trained visual representations that can be used to initialize the encoder of the robot’s camera observations, either via supervised ImageNet classification (Shah and Kumar, 2021), data augmentation (Kostrikov et al., 2020; Laskin et al., 2020a,b; Pari et al., 2021) or objectives that are tailored towards robotic control (Karamcheti et al., 2023; Ma et al., 2022; Majumdar et al., 2023b; Nair et al., 2022b; Xiao et al., 2022b). Other works have incorporated pre-trained language models, often either as an instruction encoder (Brohan et al., 2022; Hill et al., 2020; Jang et al., 2021; Jiang et al., 2022; Lynch and Sermanet, 2020; Nair et al., 2022a; Shridhar et al., 2022b) or for high-level planning (Ahn et al., 2022; Driess et al., 2023; Huang et al., 2022; Mu et al., 2023; Singh et al., 2023; Wu et al., 2023). Rather than using pre-training vision models or pre-trained language models, we specifically consider the use of pre-trained vision-language models (VLMs), which provide rich, grounded knowledge about the world. Prior works have studied the use of VLMs for robotics (Driess et al., 2023; Du et al., 2023b; Gadre et al., 2022; Karamcheti et al., 2023; Shah et al., 2023; Shridhar et al., 2021; Stone et al., 2023), and form part of the inspiration for this work. These prior approaches use VLMs for visual state representations (Karamcheti et al., 2023), for identifying objects (Gadre et al., 2022; Stone et al., 2023), for high-level planning (Driess et al., 2023), or for providing supervision or success detection (Du et al., 2023b; Ma et al., 2023; Sumers et al., 2023; Xiao et al., 2022a; Zhang et al., 2023). While CLIPort (Shridhar et al., 2021) and MOO (Stone et al., 2023) integrate pre-trained VLMs into end-to-end visuomotor manipulation policies, both incorporate significant structure into the policy that limits their applicability. Notably, our work does not rely on a restricted 2D action space and does not require a calibrated camera. Moreover, a critical distinction is that, unlike these works, we leverage VLMs that generate language, and the unified output space of our formulation enables model weights to be entirely shared across language and action tasks, without introducing action-only model layer components.

机器人操作的预训练模型。

预训练在机器人学习中有着悠久的历史。大多数研究关注的是可以用于初始化机器人摄像头观察的编码器的预训练视觉表示,可以通过监督式ImageNet分类(Shah和Kumar,2021)、数据增强(Kostrikov等,2020;Laskin等,2020a,b;Pari等,2021)或专为机器人控制定制的目标(Karamcheti等,2023;Ma等,2022;Majumdar等,2023b;Nair等,2022b;Xiao等,2022b)来实现。其他作品已经整合了预训练的语言模型,通常是作为指令编码器(Brohan等,2022;Hill等,2020;Jang等,2021;Jiang等,2022;Lynch和Sermanet,2020;Nair等,2022a;Shridhar等,2022b)或用于高层规划(Ahn等,2022;Driess等,2023;Huang等,2022;Mu等,2023;Singh等,2023;Wu等,2023)。与使用预训练视觉模型或预训练语言模型不同,我们专门考虑了预训练的视觉语言模型(VLMs)的使用,这提供了关于世界的丰富而基础的知识。先前的研究已经研究了在机器人学中使用VLMs的方法(Driess等,2023;Du等,2023b;Gadre等,2022;Karamcheti等,2023;Shah等,2023;Shridhar等,2021;Stone等,2023),并且这些研究部分启发了这项工作。这些先前的方法使用VLMs进行视觉状态表示(Karamcheti等,2023)、识别对象(Gadre等,2022;Stone等,2023)、高层规划(Driess等,2023)或提供监督或成功检测(Du等,2023b;Ma等,2023;Sumers等,2023;Xiao等,2022a;Zhang等,2023)。虽然 CLIPort(Shridhar等,2021)和 MOO(Stone等,2023)将预训练的VLMs整合到端到端的视觉运动操作策略中,但两者都在策略中整合了显著的结构,限制了它们的适用性。值得注意的是,我们的工作不依赖于受限的2D动作空间,并且不需要校准的摄像头。而且,一个关键的区别是,与这些作品不同,我们利用生成语言的VLMs,并且我们的公式的统一输出空间使模型权重可以在语言和动作任务之间完全共享,而无需引入仅用于动作的模型层组件。

3.Vision-Language-Action Models

3.视觉语言动作模型

In this section, we present our model family and the design choices for enabling training VLMs to directly perform closed-loop robot control. First, we describe the general architecture of our models and how they can be derived from models that are commonly used for vision-language tasks. Then, we introduce the recipe and challenges of fine-tuning large VLMs that are pre-trained on web-scale data to directly output robot actions, becoming VLA models. Finally, we describe how to make these models practical for robot tasks, addressing challenges with model size and inference speed to enable real-time control.

在本节中,我们介绍我们的模型系列以及设计选择,使得训练VLMs能够直接执行闭环机器人控制成为可能。首先,我们描述我们模型的通用架构以及它们如何可以从通常用于视觉语言任务的模型中派生出来。然后,我们介绍了对大型 VLM 进行微调的方法和挑战,这些 VLM 在网络规模数据上进行了预训练,以直接输出机器人动作,形成 VLA 模型。最后,我们描述了如何使这些模型适用于机器人任务,解决模型大小和推理速度方面的挑战以实现实时控制。

3.1. Pre-Trained Vision-Language Models

3.1. 预训练的视觉语言模型

The vision-language models (Chen et al., 2023a; Driess et al., 2023) that we build on in this work take as input one or more images and produce a sequence of tokens, which conventionally represents natural language text. Such models can perform a wide range of visual interpretation and reasoning tasks, from inferring the composition of an image to answering questions about individual objects and their relations to other objects (Alayrac et al., 2022; Chen et al., 2023a; Driess et al., 2023; Huang et al., 2023). Representing the knowledge necessary to perform such a wide range of tasks requires large models and web-scale datasets. In this work, we adapt two previously proposed VLMs to act as VLA models: PaLI-X (Chen et al., 2023a) and PaLM-E (Driess et al., 2023). We will refer to vision-language-action versions of these models as RT-2-PaLI-X and RT-2-PaLM-E. We leverage instantiations of these models that range in size from billions to tens of billions of parameters. We provide a detailed description of the architecture of these two models in Appendix D.

我们在这项工作中构建的视觉语言模型(Chen等,2023a;Driess等,2023)以一个或多个图像作为输入,并生成一系列标记,这系列标记通常代表自然语言文本。这样的模型可以执行各种视觉解释和推理任务:从推断图像的组成,到回答有关个别对象及其与其他对象关系的问题(Alayrac等,2022;Chen等,2023a;Driess等,2023;Huang等,2023)。代表执行如此广泛任务所需的知识,需要大型模型和Web规模数据集。在这项工作中,我们调整了两个先前提出的VLMs,使其充当VLA模型:PaLI-X(Chen等,2023a)和PaLM-E(Driess等,2023)。我们将这些模型的视觉语言动作版本称为RT-2-PaLI-X和RT-2-PaLM-E。我们利用这些模型的实例,其参数范围从数十亿到数百亿。我们在附录D中提供了对这两个模型架构的详细描述。

3.2. Robot-Action Fine-tuning

3.2. 机器人动作微调

To enable vision-language models to control a robot, they must be trained to output actions. We take a direct approach to this problem, representing actions as tokens in the model’s output, which are treated in the same way as language tokens. We base our action encoding on the discretization proposed by Brohan et al. (2022) for the RT-1 model. The action space consists of 6-DoF positional and rotational displacement of the robot end-effector, as well as the level of extension of the robot gripper and a special discrete command for terminating the episode, which should be triggered by the policy to signal successful completion. The continuous dimensions (all dimensions except for the discrete termination command) are discretized into 256 bins uniformly. Thus, the robot action can be represented using ordinals of the discrete bins as 8 integer numbers. In order to use these discretized actions to finetune a vision-language into a vision-language-action model, we need to associate tokens from the model’s existing tokenization with the discrete action bins. This requires reserving 256 tokens to serve as action tokens. Which tokens to choose depends on the particular tokenization used by each VLM, which we discuss later in this section. In order to define a target for VLM fine-tuning we convert the action vector into a single string by simply concatenating action tokens for each dimension with a space character:

“terminate Δpos𝑥 Δpos𝑦 Δpos𝑧 Δrot𝑥 Δrot𝑦 Δrot𝑧 gripper_extension”.

为了使视觉语言模型能够控制机器人,它们必须经过训练以输出动作。我们采取了一种直接的方法来解决这个问题,将动作表示为模型输出中的标记,这些标记与语言标记相同处理。我们基于Brohan等人(2022)为RT-1模型提出的离散化方法来进行动作编码。动作空间包括机器人末端执行器的6个自由度的位置和旋转位移,以及机器人夹持器的伸展程度,还有一个用于终止事件的特殊离散命令,应由策略触发以表示成功完成。连续维度(除离散终止命令之外的所有维度)统一离散化为 256 个箱子(区间?)。因此,机器人动作可以使用这些离散箱子的序数表示为8个整数。为了使用这些离散动作来微调视觉语言成为视觉语言动作模型,我们需要将模型现有的标记化中的标记与离散动作箱子相关联。这需要保留256个标记作为动作标记。选择哪些标记取决于每个VLM使用的特定标记化,我们将在本节后面讨论。为了定义VLM微调的目标,我们将动作向量转换为单个字符串,方法是简单地连接每个维度的动作标记,使用空格字符:

A possible instantiation of such a target could be: “1 128 91 241 5 101 127”. The two VLMs that we finetune in our experiments, PaLI-X (Chen et al., 2023a) and PaLM-E (Driess et al., 2023), use different tokenizations. For PaLI-X, integers up to 1000 each have a unique token, so we simply associate the action bins to the token representing the corresponding integer. For the PaLM-E model, which does not provide this convenient representation of numbers, we simply overwrite the 256 least frequently used tokens to represent the action vocabulary. It is worth noting that training VLMs to override existing tokens with action tokens is a form of symbol tuning (Wei et al., 2023), which has been shown to work well for VLMs in prior work.

这样的目标的一个可能实例可能是:“1 128 91 241 5 101 127”。我们在实验中微调的两个VLMs,PaLI-X(Chen等,2023a)和PaLM-E(Driess等,2023),使用不同的标记化。对于PaLI-X模型,它的标记化方式是,每个整数从1到1000都有一个唯一的标记,因此我们简单地将动作箱子与表示相应整数的标记相关联。对于PaLM-E模型,它没有提供这种方便的数字表示,我们只需覆盖256个最不常用的标记以表示动作词汇。值得注意的是,训练VLMs覆盖现有标记的动作标记是符号调整(Wei等,2023)的一种形式,已经在先前的工作中证明对VLMs很有效。

Taking the action representation described above, we convert our robot data to be suitable for VLM model fine-tuning, where our inputs include robot camera image and textual task description (using standard VQA format “Q: what action should the robot take to [task instruction]? A:”), and our output is formatted as a string of numbers/least frequently used tokens representing a robot action.

采用上述描述的动作表示,我们将机器人数据转换为适用于VLM模型微调的格式。输入包括机器人摄像头图像和文本任务描述(使用标准的VQA格式,“Q:机器人应该采取什么行动来完成[任务说明]?A:”),输出格式为一串数字/最不常用的标记,表示机器人的动作。

Co-Fine-Tuning. As we will show in our experiments, a key technical detail of the training recipe that improves robot performance is co-fine-tuning robotics data with the original web data instead of naïve finetuning on robot data only. We notice that co-fine-tuning leads to more generalizable policies since the policies are exposed to both abstract visual concepts from web scale data and low level robot actions during fine-tuning, instead of just robot actions. During co-fine-tuning we balance the ratios of robot and web data in each training batch by increasing the sampling weight on the robot dataset.

联合微调。

正如我们在实验中将要展示的,改进机器人性能的训练方法的一个关键技术细节是共同微调机器人数据和原始网络数据,而不是仅在机器人数据上进行简单微调。我们注意到,联合微调导致了更具一般性的策略,因为在微调过程中,策略既暴露于来自网络规模数据的抽象视觉概念,又暴露于低级机器人动作,而不仅仅是机器人动作。在共同微调过程中,我们通过增加对机器人数据的采样权重来平衡每个训练批次中机器人和网络数据的比例。

Output Constraint. One important distinction between RT-2 and standard VLMs is that RT-2 is required to output valid action tokens for execution on the real robot. Thus, to ensure that RT-2 outputs valid action tokens during decoding, we constrain its output vocabulary via only sampling valid action tokens when the model is prompted with a robot-action task, whereas the model is still allowed to output the full range of natural language tokens on standard vision-language tasks.

输出约束。

RT-2和标准VLM之间的一个重要区别是,RT-2必须输出用于在真实机器人上执行的有效动作标记。因此,为了确保在解码过程中RT-2输出有效的动作标记,我们通过在模型受到机器人动作任务提示时,仅对有效的动作标记进行采样来限制其输出词汇。而在标准视觉语言任务中,模型仍然被允许输出完整范围的自然语言标记。

3.3. Real-Time Inference

3.3. 实时推理

The size of modern VLMs can reach tens or hundreds of billions of parameters (Chen et al., 2023a; Driess et al., 2023). The largest model trained in this work uses 55B parameters. It is infeasible to directly run such models on the standard desktop-style machines or on-robot GPUs commonly used for real-time robot control. To the best of our knowledge, our model is the largest ever, by over an order of magnitude, used for direct closed-loop robotic control, and therefore requires a new set of solutions to enable efficient real-time inference. We develop a protocol that allows us to run RT-2 models on robots by deploying them in a multi-TPU cloud service and querying this service over the network. With this solution, we can achieve a suitable frequency of control and also serve multiple robots using the same cloud service. The largest model we evaluated, the 55B parameter RT-2-PaLI-X-55B model, can run at a frequency of 1-3 Hz. The smaller version of that model, consisting of 5B parameters, can run at a frequency of around 5 Hz.

当前VLM的规模可以达到数百亿或数千亿参数(Chen et al., 2023a; Driess et al., 2023)。本文中训练的最大模型使用了55B参数。直接在标准桌面式机器,或通常用于实时机器人控制的机器人GPU上运行这样的模型是不可行的。据我们所知,我们的模型是迄今为止用于直接闭环机器人控制的规模最大的模型,其参数数量超过一个数量级,因此需要一套新的解决方案来实现高效的实时推断。我们制定了一个协议,使我们能够在机器人上运行RT-2模型,通过在多个TPU云服务上部署它们并通过网络查询此服务来实现。借助这个解决方案,我们可以实现适当的控制频率,并且可以使用同一云服务为多个机器人提供服务。我们评估的最大模型,即55B参数的RT-2-PaLI-X-55B模型,可以以1-3 Hz的频率运行。该模型的较小版本,由5B参数组成,可以以约5 Hz的频率运行。

4. Experiments

4. 实验

Our experiments focus on real-world generalization and emergent capabilities of RT-2 and aim to answer the following questions:

我们的实验重点关注RT-2的真实世界泛化和新兴能力,并旨在回答以下问题:

1.How does RT-2 perform on seen tasks and more importantly, generalize over new objects, backgrounds, and environments?

2.Can we observe and measure any emergent capabilities of RT-2?

3.How does the generalization vary with parameter count and other design decisions?

-

Can RT-2 exhibit signs of chain-of-thought reasoning similarly to vision-language models?

-

RT-2在已见任务上表现如何,更重要的是,它在新的对象、背景和环境中如何泛化?

-

我们是否可以观察和测量RT-2的任何新兴能力?

-

泛化效果如何随参数数量和其他设计决策而变化?

-

RT-2是否能像视觉语言模型一样展现出链式思维推理的迹象?

We evaluate our approach and several baselines with about 6,000 evaluation trajectories in a variety of conditions, which we describe in the following sections. Unless specified otherwise, we use a 7DoF mobile manipulator with the action space described in Sec. 3.2. We also demonstrate examples of RT-2 execution on the project website: robotics-transformer2.github.io. We train two specific instantiations of RT-2 that leverage pre-trained VLMs: (1) RT-2-PaLI-X is built from 5B and 55B PaLI-X (Chen et al., 2023a), and (2) RT-2-PaLM-E is built from 12B PaLM-E (Driess et al., 2023).

我们通过大约6000个评估轨迹对我们的方法和几个基线进行评估,涵盖了各种条件,我们将在以下章节中进行描述。除非另有说明,我们使用一个具有7个自由度的移动机械手,其动作空间在第3.2节中描述。我们还在项目网站上演示了RT-2执行的示例:robotics-transformer2.github.io。我们训练了两个特定的RT-2实例,利用了预训练的VLMs:(1)RT-2-PaLI-X是由5B和55B PaLI-X (Chen et al., 2023a) 构建的,(2)RT-2-PaLM-E是由12B PaLM-E (Driess et al., 2023) 构建的。

For training, we leverage the original web scale data from Chen et al. (2023a) and Driess et al. (2023), which consists of visual question answering, captioning, and unstructured interwoven image and text examples. We combine it with the robot demonstration data from Brohan et al. (2022), which was collected with 13 robots over 17 months in an office kitchen environment. Each robot demonstration trajectory is annotated with a natural language instruction that describes the task performed, consisting of a verb describing the skill (e.g., “pick”, ”open”, “place into”) and one or more nouns describing the objects manipulated (e.g., “7up can”, “drawer”, “napkin”) (see Appendix B for more details on the used datasets). For all RT-2 training runs we adopt the hyperparameters from the original PaLI-X (Chen et al., 2023a) and PaLM-E (Driess et al., 2023) papers, including learning rate schedules and regularizations. More training details can be found in Appendix E.

对于训练,我们利用了Chen等人(2023a)和Driess等人(2023)的原始Web规模数据,其中包括视觉问答、字幕和无结构的交织图像和文本示例。我们将其与Brohan等人(2022)的机器人演示数据相结合,该数据是在办公室厨房环境中使用13台机器人在17个月内收集的。每个机器人演示轨迹都用自然语言指令进行注释,描述执行的任务,包括描述技能的动词(例如,“拾取”、“打开”、“放入”)和描述被操作对象的一个或多个名词(例如,“7up罐”、“抽屉”、“餐巾纸”)(有关使用的数据集的更多详细信息,请参见附录B)。对于所有RT-2训练运行,我们采用了原始PaLI-X(Chen et al., 2023a)和PaLM-E(Driess et al., 2023)论文的超参数,包括学习率调度和正则化。更多的训练细节可以在附录E中找到。

Baselines. We compare our method to multiple state-of-the-art baselines that challenge different aspects of our method. All of the baselines use the exact same robotic data. To compare against a state-of-the-art policy, we use RT-1 (Brohan et al., 2022), a 35M parameter transformer-based model. To compare against state-of-the-art pretrained representations, we use VC-1 (Majumdar et al., 2023a) and R3M (Nair et al., 2022b), with policies implemented by training an RT-1 backbone to take their representations as input. To compare against other architectures for using VLMs, we use MOO (Stone et al., 2023), which uses a VLM to create an additional image channel for a semantic map, which is then fed into an RT-1 backbone. More information is provided in Appendix C.

基线。

我们将我们的方法与多个挑战我们方法不同方面的最新基线进行比较。所有基线使用相同的机器人数据。为了与最先进的策略进行比较,我们使用RT-1(Brohan et al., 2022),一个基于Transformer的3500万参数的模型。为了与最先进的预训练表示进行比较,我们使用VC-1(Majumdar et al., 2023a)和R3M(Nair et al., 2022b),通过训练RT-1骨干以接受它们的表示作为输入来实现策略。为了与使用VLM的其他架构进行比较,我们使用MOO(Stone et al., 2023),该方法使用VLM创建一个用于语义映射的附加图像通道,然后将其输入RT-1骨干。附录C提供了更多信息。

4.1. How does RT-2 perform on seen tasks and more importantly, generalize over new objects, backgrounds, and environments?

4.1. RT-2在已见任务上的表现如何,更重要的是,它在新的对象、背景和环境中如何泛化?

To evaluate in-distribution performance as well as generalization capabilities, we compare the RT-2-PaLI-X and RT-2-PaLM-E models to the four baselines listed in the previous sections. For the seen tasks category, we use the same suite of seen instructions as in RT-1 (Brohan et al., 2022), which include over 200 tasks in this evaluation: 36 for picking objects, 35 for knocking objects, 35 for placing things upright, 48 for moving objects, 18 for opening and closing various drawers, and 36 for picking out of and placing objects into drawers. Note, however, that these “in-distribution” evaluations still vary the placement of objects and factors such as time of day and robot position, requiring the skills to generalize to realistic variability in the environment.

为了评估分布内性能以及泛化能力,我们将RT-2-PaLI-X和RT-2-PaLM-E模型与前几节列出的四个基线进行比较。对于已见任务类别,我们使用与RT-1 (Brohan et al., 2022) 相同的已见指令套件,该套件在本次评估中包括超过200个任务:36个用于拾取物体,35个用于击倒物体,35个用于将物品放直,48个用于移动物体,18个用于打开和关闭各种抽屉,以及36个用于从抽屉中取出和放入物品。然而,请注意,这些“分布内”评估仍然改变了物体的放置位置以及时间和机器人位置等因素,要求技能能够泛化到环境中的实际变化。

Figure 3 shows example generalization evaluations, which are split into unseen categories (objects, backgrounds and environments), and are additionally split into easy and hard cases. For unseen objects, hard cases include harder-to-grasp and more unique objects (such as toys). For unseen backgrounds, hard cases include more varied backgrounds and novel objects. Lastly, for unseen environments, hard cases correspond to a more visually distinct office desk environment with monitors and accessories, while the easier environment is a kitchen sink. These evaluations consists of over 280 tasks that focus primarily on pick and placing skills in many diverse scenarios. The list of instructions for unseen categories is specified in Appendix F.2.

图3显示了示例泛化评估,这些评估分为未见类别(对象、背景和环境),并且另外分为简单和困难情况。对于未见对象,困难情况包括难以抓取和更独特的对象(例如玩具)。对于未见背景,困难情况包括更多样化的背景和新颖的对象。最后,对于未见环境,困难情况对应于一个在视觉上更为独特的办公桌环境,其中包括监视器和配件,而较容易的环境是一个厨房水槽。这些评估包括超过280个主要关注在许多不同场景中进行拾取和放置技能的任务。未见类别的指令列表在附录F.2中指定。

Figure 3 | Example generalization scenarios used for evaluation in Figures 4 and 6b and Tables 4 and 6.

图3 | 用于在图4和6b以及表4和6中评估的示例泛化场景。

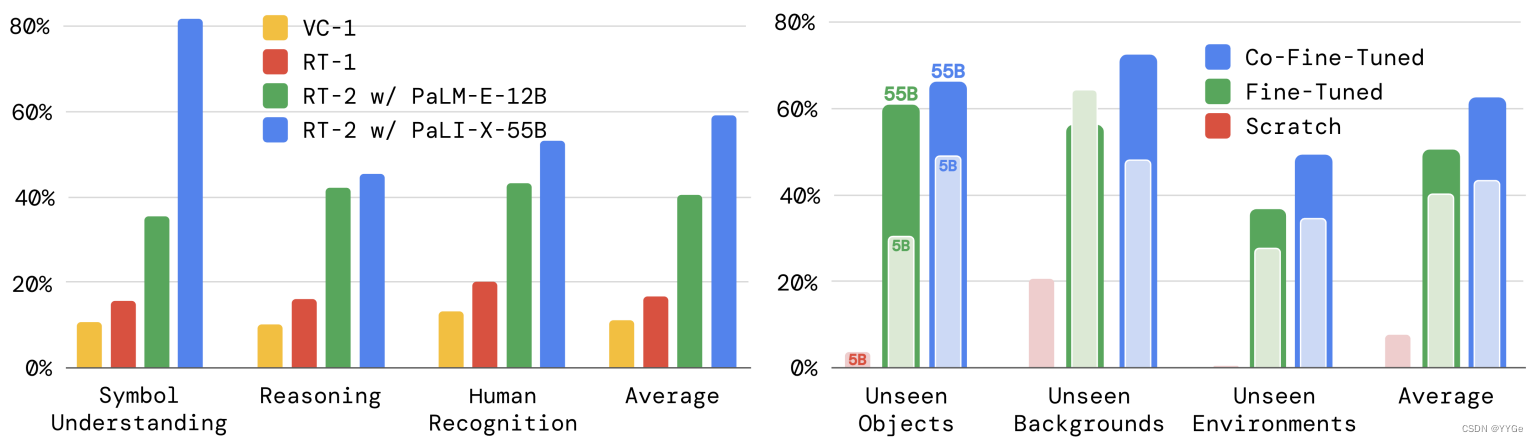

The evaluation results are shown in Figure 4 and Appendix Table 4. The performance on seen tasks is similar between the RT-2 models and RT-1, with other baselines attaining a lower success rate. The difference between the RT-2 models and the baseline is most pronounced in the various generalization experiments, suggesting that the strength of vision-language-action models lies in transferring more generalizable visual and semantic concepts from their Internet-scale pretraining data. Here, on average, both instantiations of RT-2 perform similarly, resulting in ∼2x improvement over the next two baselines, RT-1 and MOO, and ∼6x better than the other baselines. The PaLM-E version of RT-2 seems to perform better than the RT-2-PaLI-X in harder versions of generalization scenarios while under-performing on easier ones, resulting in a similar average performance.

评估结果显示在图4和附录表4中。在已见任务上,RT-2模型和RT-1的性能相似,而其他基线的成功率较低。RT-2模型和基线之间的差异在各种泛化实验中最为显著,表明视觉语言动作模型的强项在于从其互联网规模的预训练数据中传递更具泛化性的视觉和语义概念。在这里,两个RT-2实例的表现平均相似,相对于接下来的两个基线RT-1和MOO,提高了约2倍,比其他基线提高了约6倍。RT-2-PaLM-E版本似乎在泛化场景的困难版本中表现比RT-2-PaLI-X更好,而在简单场景中表现较差,导致平均性能相似。

Figure 4 | Overall performance of two instantiations of RT-2 and baselines across seen training tasks as well as unseen evaluations measuring generalization to novel objects, novel backgrounds, and novel environments. Appendix Table 4 details the full results.

图4 | 两个RT-2实例和基线在已见训练任务以及未见评估中的总体表现,衡量对新对象、新背景和新环境的泛化。附录表4详细说明了完整的结果。

Open Source Language Table Benchmark. To provide an additional point of comparison using open-source baselines and environments, we leverage the open-source Language-Table simulation environment from Lynch et al. (2022). We co-fine-tune a smaller PaLI 3B model on several prediction tasks, including in-domain VQA tasks, for the Language-Table dataset, and evaluate the resulting policy in simulation. For the action prediction task, we discretize and encode actions as text in the format “X Y”, where X and Y range between {-10, -9, . . . , +9, +10}, and represent delta 2D cartesian setpoints of the end effector. Due to its reduced size, the resulting model can run inference at a similar rate (5 Hz) as the other baselines. The results of this experiment are presented in Table 1. We observe a significant performance boost when using our model compared to the baselines, indicating that the VLM-based pre-training together with the expressiveness of the large PaLI model can be beneficial in other scenarios, in this case, simulation with a different robot. We also show qualitative real-world out-of-distribution behaviors behaviors in Figure 5, demonstrating novel pushing tasks and targeting objects not before seen in this environment. More details about the Language Table experiments can be found in Appendix B and D.

开源语言-表格基准。

为了提供使用开源基线和环境的额外比较点,我们利用了Lynch等人(2022)的开源语言-表格仿真环境。我们在Language-Table数据集上使用较小的PaLI 3B模型进行多个预测任务的协同微调,包括域内VQA任务,并在模拟中评估生成的策略。对于动作预测任务,我们将动作离散化并编码为文本格式“X Y”,其中X和Y在{-10,-9,…,+9,+10}范围内,表示末端执行器的二维笛卡尔设定点的增量。由于其较小的尺寸,生成的模型可以以与其他基线相似的速率(5 Hz)进行推断。这个实验的结果呈现在表1中。我们观察到在使用我们的模型时相对于基线有显著的性能提升,表明基于VLM的预训练与大型PaLI模型的表达能力在其他场景中也是有益的,即在模拟环境中与不同的机器人。我们还展示了图5中的实际世界超分布行为,演示了新颖的推动任务和以前在此环境中未见过的对象。有关Language Table实验的更多细节,请参见附录B和D。

Figure 5 | Real-world out-of-distribution behaviors in the Language Table environment. Identical RT-2-PaLI-3B model checkpoint is used as in Tab. 1.

图5 | Language Table环境中实际超分布行为。使用与表1相同的RT-2-PaLI-3B模型检查点。

Table 1 | Performance on the simulated Language-Table tasks (Lynch and Sermanet, 2020).

表1 | 在模拟的Language-Table任务上的性能(Lynch and Sermanet, 2020)。

4.2. Can we observe and measure any emergent capabilities of RT-2?

4.2. 我们能观察和衡量RT-2的任何新能力吗?

In addition to evaluating the generalization capabilities of vision-language-action models, we also aim to evaluate the degree to which such models can enable new capabilities beyond those demonstrated in the robot data by transferring knowledge from the web. We refer to such capabilities as emergent, in the sense that they emerge by transferring Internet-scale pretraining. We do not expect such transfer to enable new robotic motions, but we do expect semantic and visual concepts, including relations and nouns, to transfer effectively, even in cases where those concepts were not seen in the robot data.

除了评估视觉-语言-动作模型的泛化能力之外,我们还旨在评估这些模型能够通过从Web中传递知识来实现新能力的程度,超越了机器人数据中所展示的能力。我们将这样的能力称为新兴能力,意味着它们通过互联网规模的预训练而出现。我们不期望这种转移能够实现新的机器人动作,但我们确实期望语义和视觉概念,包括关系和名词,能够在机器人数据中未看到这些概念的情况下有效传递。

Qualitative Evaluations.

First, we experiment with our RT-2-PaLI-X model to determine various emergent capabilities transferred from vision-language concepts. We demonstrate some examples of such interactions in Figure 2. We find through our explorations that RT-2 inherits novel capabilities in terms of semantic understanding and basic reasoning in the context of the scene. For example accomplishing the task “put strawberry into the correct bowl” requires a nuanced understanding of not only what a strawberry and bowl are, but also reasoning in the context the scene to know the strawberry should go with the like fruits. For the task “pick up the bag about to fall off the table,” RT-2 demonstrates physical understanding to disambiguate between two bags and recognize the precariously placed object. All the interactions tested in these scenarios have never been seen in the robot data, which points to the transfer of semantic knowledge from vision-language data.

**定性评估。**首先,我们使用RT-2-PaLI-X模型进行实验,以确定从视觉-语言概念中传递的各种新兴能力。我们在图2中展示了一些此类交互的示例。通过我们的探索,我们发现RT-2在场景语境中的语义理解和基本推理方面继承了新颖的能力。例如,完成任务“将草莓放入正确的碗中”不仅需要对草莓和碗的理解,还需要在场景背景下进行推理,知道草莓应该与相似的水果一起放置。对于任务“拿起即将从桌子上掉下的袋子”,RT-2展示了对两个袋子进行物理理解以消除歧义,并识别处于不稳定位置的物体。在这些场景中测试的所有交互在机器人数据中从未见过,这表明了从视觉-语言数据中传递语义知识。

Quantitative Evaluations.

To quantify these emergent capabilities, we take the top two baselines from the previous evaluations, RT-1 and VC-1, and compare them against our two models: RT-2-PaLI-X and RT-2-PaLM-E. To reduce the variance of these experiment, we evaluate all of the methods using the A/B testing framework (Fisher, 1936), where all four models are evaluated one after another in the exact same conditions.

**定量评估。**为了量化这些新兴能力,我们选择了前述评估中排名前两位的基线,RT-1和VC-1,并将它们与我们的两个模型进行比较:RT-2-PaLI-X和RT-2-PaLM-E。为了减少这些实验的方差,我们使用A/B测试框架(Fisher,1936),其中四个模型在完全相同的条件下依次进行评估。

We’ split the emergent capabilities of RT-2 into three categories covering axes of reasoning and semantic understanding (with examples of each shown in Appendix Figure 8). The first we term symbol understanding, which explicitly tests whether the RT-2 policy transfers semantic knowledge from vision-language pretraining that was not present in any of the robot data. Example instructions in this category are “move apple to 3” or “push coke can on top of heart”. The second category we term reasoning, which demonstrates the ability to apply various aspects of reasoning of the underlying VLM to control tasks. These tasks require visual reasoning (“move the apple to cup with same color”), math (“move X near the sum of two plus one”), and multilingual understanding (“mueve la manzana al vaso verde”). We refer to the last category as human recognition tasks, which include tasks such as “move the coke can to the person with glasses”, to demonstrate human-centric understanding and recognition. The full list of instructions used for this evaluation is specified in Appendix F.2.

我们将RT-2的新兴能力分为三个类别,涵盖了推理和语义理解的轴(每个类别的示例在附录图8中显示)。我们将第一个类别称为符号理解,明确测试RT-2策略是否从视觉-语言预训练中传递了在任何机器人数据中都不存在的语义知识。在这个类别中的示例指令包括“将苹果移动到3”或“推动可乐罐放在心脏上方”。第二个类别我们称为推理,它展示了将底层VLM的各个推理方面应用于控制任务的能力。这些任务涉及视觉推理(“将苹果移动到颜色相同的杯子”),数学推理(“将X移近两加一的和”)和多语言理解(“mueve la manzana al vaso verde”)。我们将最后一类称为人类识别任务,其中包括任务,如“将可乐罐移动到戴眼镜的人”,以展示对人类的理解和识别。用于此评估的完整指令列表在附录F.2中指定。

We present the results of this experiment in Figure 6a with all the numerical results in Appendix H.2. We observe that our VLA models significantly outperform the baselines across all categories, with our best RT-2-PaLI-X model achieving more than 3x average success rate over the next best baseline (RT-1). We also note that while the larger PaLI-X-based model results in better symbol understanding, reasoning and person recognition performance on average, the smaller PaLM-E-based model has an edge on tasks that involve math reasoning. We attribute this interesting result to the different pre-training mixture used in PaLM-E, which results in a model that is more capable at math calculation than the mostly visually pre-trained PaLI-X.

我们在图6a中呈现了这个实验的结果,所有的数值结果都在附录H.2中。我们观察到我们的VLA模型在所有类别上都显著优于基线,我们最好的RT-2-PaLI-X模型在平均成功率上超过下一个最佳基线(RT-1)3倍以上。我们还注意到,虽然基于较大的PaLI-X的模型在平均符号理解、推理和人员识别性能上表现更好,但基于较小的PaLM-E的模型在涉及数学推理的任务上更具优势。我们将这一有趣的结果归因于PaLM-E中使用的不同的预训练混合,这导致该模型在数学计算方面比大部分基于视觉预训练的PaLI-X更为强大。

(a) Performance comparison on various emergent skill evaluations (Figure 8) between RT-2 and two baselines.

(b) Ablations of RT-2-PaLI-X showcasing the impact of parameter count and training strategy on generalization.

(a)左图:RT-2与两个基线在各种新兴技能评估(图8)上的性能比较。

(b)右图:RT-2-PaLI-X的消融展示了参数数量和训练策略对泛化的影响。

Figure 6 | Quantitative performance of RT-2 across (6a) emergent skills and (6b) size and training ablations. Appendix Tables 5 and 6 detail the full numerical results.

图6 | RT-2在新兴技能(6a)和尺寸以及训练消融(6b)方面的定量性能。附录表5和表6详细说明了完整的数值结果。

4.3. How does the generalization vary with parameter count and other design decisions?

4.3. 泛化如何随参数数量和其他设计决策而变化?

For this comparison, we use RT-2-PaLI-X model because of its flexibility in terms of the model size (due to the nature of PaLM-E, RT-2-PaLM-E is restricted to only certain sizes of PaLM and ViT models). In particular, we compare two different model sizes, 5B and 55B, as well as three different training routines: training a model from scratch, without using any weights from the VLM pre-training; fine-tuning a pre-trained model using robot action data only; and co-fine-tuning (co-training with fine-tuning), the primary method used in this work where we use both the original VLM training data as well as robotic data for VLM fine-tuning. Since we are mostly interested in the generalization aspects of these models, we remove the seen tasks evaluation from this set of experiments.

对于这个比较,我们使用RT-2-PaLI-X模型,因为它在模型大小方面具有灵活性(由于PaLM-E的性质,RT-2-PaLM-E仅限于PaLM和ViT模型的某些大小)。特别是,我们比较了两种不同的模型大小,即5B和55B,以及三种不同的训练例程:从头开始训练一个模型,不使用任何来自VLM预训练的权重;仅使用机器人动作数据对预训练模型进行微调;和联合微调(与微调一起训练),这是本文中使用的主要方法,我们在VLM微调中同时使用原始的VLM训练数据和机器人数据。由于我们主要关注这些模型的泛化方面,因此我们在这组实验中排除了已见任务的评估。

The results of the ablations are presented in Figure 6b and Appendix Table 6. First, we observe that training a very large model from scratch results in a very poor performance even for the 5B model. Given this result, we decide to skip the evaluation of an even bigger 55B PaLI-X model when trained from scratch. Second, we notice that co-fine-tuning a model (regardless of its size) results in a better generalization performance than simply fine-tuning it with robotic data. We attribute this to the fact that keeping the original data around the fine-tuning part of training, allows the model to not forget its previous concepts learned during the VLM training. Lastly, somewhat unsurprisingly, we notice that the increased size of the model results in a better generalization performance

消融实验的结果显示在图6b中,附录表6详细说明了完整的结果。首先,我们观察到从头开始训练一个非常大的模型,即使对于5B模型也会导致非常差的性能。鉴于这个结果,我们决定跳过从头开始训练一个更大的55B PaLI-X模型的评估。其次,我们注意到联合微调模型(无论其大小如何)会导致比仅使用机器人数据微调更好的泛化性能。我们将这归因于在训练的微调部分保留原始数据,允许模型不忘记在VLM训练期间学到的先前概念。最后,或多或少地,我们注意到模型的增加尺寸导致更好的泛化性能。

4.4. Can RT-2 exhibit signs of chain-of-thought reasoning similarly to vision-language models?

4.4. RT-2是否能展现类似于视觉语言模型的思维链推理?

Inspired by the chain-of-thought prompting method in LLMs (Wei et al., 2022), we fine-tune a variant of RT-2 with PaLM-E for just a few hundred gradient steps to increase its capability of utilizing language and actions jointly with the hope that it will elicit a more sophisticated reasoning behavior. We augment the data to include an additional “Plan” step, which describes the purpose of the action that the robot is about to take in natural language first, which is then followed by the actual action tokens, e.g. “Instruction: I’m hungry. Plan: pick rxbar chocolate. Action: 1 128 124 136 121 158 111 255.” This data augmentation scheme acts as a bridge between VQA datasets (visual reasoning) and manipulation datasets (generating actions).

受LLMs(Wei等人,2022)中的思维链提示方法的启发,我们微调了RT-2的PaLM-E变体,只进行了几百个梯度步骤,以增加其同时利用语言和动作的能力,希望它能引发更复杂的推理行为。我们扩充数据,增加了一个额外的“计划”步骤,该步骤首先用自然语言,描述机器人即将采取的动作的目的,然后是实际的动作标记,例如“指令:我饿了。计划:拿rxbar巧克力。动作:1 128 124 136 121 158 111 255。”这种数据扩充方案充当了VQA数据集(视觉推理)和操作数据集(生成动作)之间的桥梁。

We qualitatively observe that RT-2 with chain-of-thought reasoning is able to answer more sophisticated commands due to the fact that it is given a place to plan its actions in natural language first. This is a promising direction that provides some initial evidence that using LLMs or VLMs as planners (Ahn et al., 2022; Driess et al., 2023) can be combined with low-level policies in a single VLA model. Rollouts of RT-2 with chain-of-thought reasoning are shown in Figure 7 and in Appendix I.

我们在定性上观察到,具有思维链推理的RT-2能够更好地回答更复杂的命令,因为它首先用自然语言规划其动作的地方。这是一个有希望的方向,为一些初步证据,即使用LLMs或VLMs作为计划者(Ahn等人,2022;Driess等人,2023)可以与单个VLA模型中的低级策略相结合。RT-2带有思维链推理的轨迹显示在图7中,并在附录I中。

Figure 7 | Rollouts of RT-2 with chain-of-thought reasoning, where RT-2 generates both a plan and an action.

图 7 | RT-2 的带有思维链推理的推出,其中用 RT-2 生成计划和行动。

5. Limitations

5.限制

Even though RT-2 exhibits promising generalization properties, there are multiple limitations of this approach. First, although we show that including web-scale pretraining via VLMs boosts generalization over semantic and visual concepts, the robot does not acquire any ability to perform new motions by virtue of including this additional experience. The model’s physical skills are still limited to the distribution of skills seen in the robot data (see Appendix G), but it learns to deploy those skills in new ways. We believe this is a result of the dataset not being varied enough along the axes of skills. An exciting direction for future work is to study how new skills could be acquired through new data collection paradigms such as videos of humans.

尽管RT-2展现出有希望的泛化特性,但这种方法存在多个局限性。首先,尽管我们展示了通过VLMs进行Web规模预训练可以提升对语义和视觉概念的泛化能力,但机器人并没有通过包含这种额外经验而获得执行新动作的能力。模型的物理技能仍然局限于在机器人数据中看到的技能分布(参见附录G),但它学会了以新的方式运用这些技能。我们认为这是数据集在技能轴上变化不足的结果。未来研究的一个激动人心的方向是研究如何通过新的数据收集范例(例如人类的视频)获得新技能。

Second, although we showed we could run large VLA models in real time, the computation cost of these models is high, and as these methods are applied to settings that demand high-frequency control, real-time inference may become a major bottleneck. An exciting direction for future research is to explore quantization and distillation techniques that might enable such models to run at higher rates or on lower-cost hardware. This is also connected to another current limitation in that there are only a small number of generally available VLM models that can be used to create RT-2. We hope that more open-sourced models will become available (e.g. https://llava-vl.github.io/) and the proprietary ones will open up their fine-tuning APIs, which is a sufficient requirement to build VLA models.

其次,尽管我们展示了可以实时运行大型VLA模型,但这些模型的计算成本很高,随着这些方法被应用于需要高频控制的场景,实时推理可能成为一个主要瓶颈。未来研究的一个激动人心的方向是探索量化和蒸馏技术,这可能使这些模型以更高的速率运行或在更低成本的硬件上运行。这也与另一个当前的限制相关,即目前只有很少数量的通用可用的VLM模型可以用于创建RT-2。我们希望更多的开源模型将变得可用(例如https://llava-vl.github.io/),并且专有的模型将开放它们的微调API,这是构建VLA模型的足够条件。

6. Conclusions

6.结论

In this paper, we described how vision-language-action (VLA) models could be trained by combining vision-language model (VLM) pretraining with robotic data. We then presented two instantiations of VLAs based on PaLM-E and PaLI-X, which we call RT-2-PaLM-E and RT-2-PaLI-X. These models are cofine-tuned with robotic trajectory data to output robot actions, which are represented as text tokens. We showed that our approach results in very performant robotic policies and, more importantly, leads to a significantly better generalization performance and emergent capabilities inherited from web-scale vision-language pretraining. We believe that this simple and general approach shows a promise of robotics directly benefiting from better vision-language models, which puts the field of robot learning in a strategic position to further improve with advancements in other fields.

在本文中,我们描述了如何通过将视觉语言模型(VLM)的预训练与机器人数据相结合来训练视觉语言动作(VLA)模型。然后,我们提出了两个基于PaLM-E和PaLI-X的VLA实例,我们称之为RT-2-PaLM-E和RT-2-PaLI-X。这些模型与机器人轨迹数据一起进行了协同微调,以输出机器人动作,这些动作被表示为文本标记。我们展示了我们的方法导致了非常有效的机器人策略,并且更重要的是,具有显著更好的泛化性能和从Web规模视觉语言预训练中继承的新能力。我们相信,这种简单而通用的方法显示了机器人学习,在其他领域的进展中将会直接受益于更好的视觉语言模型,这使得机器人学领域在进一步改进方面处于战略位置。

Acknowledgments

致谢

We would like to acknowledge Fred Alcober, Jodi Lynn Andres, Carolina Parada, Joseph Dabis, Rochelle Dela Cruz, Jessica Gomez, Gavin Gonzalez, John Guilyard, Tomas Jackson, Jie Tan, Scott Lehrer, Dee M, Utsav Malla, Sarah Nguyen, Jane Park, Emily Perez, Elio Prado, Jornell Quiambao, Clayton Tan, Jodexty Therlonge, Eleanor Tomlinson, Wenxuan Zhou, and the greater Google DeepMind team for their feedback and contributions.

我们要感谢Fred Alcober、Jodi Lynn Andres、Carolina Parada、Joseph Dabis、Rochelle Dela Cruz、Jessica Gomez、Gavin Gonzalez、John Guilyard、Tomas Jackson、Jie Tan、Scott Lehrer、Dee M、Utsav Malla、Sarah Nguyen、Jane Park、Emily Perez、Elio Prado、Jornell Quiambao、Clayton Tan、Jodexty Therlonge、Eleanor Tomlinson、Wenxuan Zhou以及Google DeepMind团队的其他成员,感谢他们的反馈和贡献。

A. Contributions

A. 贡献

• 训练与评估(设计和执行模型训练程序、在模拟和真实环境中评估模型、对算法设计选择运行割离实验):Yevgen Chebotar、Krzysztof Choromanski、Tianli Ding、Danny Driess、Avinava Dubey、Pete Florence、Chuyuan Fu、Montse Gonzalez Arenas、Keerthana Gopalakrishnan、Kehang Han、Alexander Herzog、Brian Ichter、Alex Irpan、Isabel Leal、Lisa Lee、Yao Lu、Henryk Michalewski、Igor Mordatch、Karl Pertsch、Michael Ryoo、Anikait Singh、Quan Vuong、Ayzaan Wahid、Paul Wohlhart、Fei Xia、Ted Xiao 和 Tianhe Yu。

• 网络架构(设计和实现模型网络模块、处理动作的标记化、在实验中启用模型网络的推断):Yevgen Chebotar、Xi Chen、Krzysztof Choromanski、Danny Driess、Pete Florence、Keerthana Gopalakrishnan、Kehang Han、Karol Hausman、Brian Ichter、Alex Irpan、Isabel Leal、Lisa Lee、Henryk Michalewski、Igor Mordatch、Kanishka Rao、Michael Ryoo、Anikait Singh、Quan Vuong、Ayzaan Wahid、Jialin Wu、Fei Xia、Ted Xiao 和 Tianhe Yu。

• 数据收集(在真实机器人上收集数据、运行真实机器人评估、执行运行真实机器人所需的操作):Noah Brown、Justice Carbajal、Tianli Ding、Krista Reymann、Grecia Salazar、Pierre Sermanet、Jaspiar Singh、Huong Tran、Stefan Welker 和 Sichun Xu。

• 领导(领导项目工作、管理项目团队、为项目方向提供建议):Yevgen Chebotar、Chelsea Finn、Karol Hausman、Brian Ichter、Sergey Levine、Yao Lu、Igor Mordatch、Kanishka Rao、Pannag Sanketi、Radu Soricut、Vincent Vanhoucke 和 Tianhe Yu。

• 论文(撰写论文手稿、设计论文可视化和图表):Yevgen Chebotar、Danny Driess、Chelsea Finn、Pete Florence、Karol Hausman、Brian Ichter、Lisa Lee、Sergey Levine、Igor Mordatch、Karl Pertsch、Quan Vuong、Fei Xia、Ted Xiao 和 Tianhe Yu。

• 基础设施(为模型训练、运行实验、存储和访问数据所需的基础设施和代码基础工作):Anthony Brohan、Yevgen Chebotar、Danny Driess、Kehang Han、Jasmine Hsu、Brian Ichter、Alex Irpan、Nikhil Joshi、Ryan Julian、Dmitry Kalashnikov、Yuheng Kuang、Isabel Leal、Lisa Lee、Tsang-Wei Edward Lee、Yao Lu、Igor Mordatch、Quan Vuong、Ayzaan Wahid、Fei Xia、Ted Xiao、Peng Xu 和 Tianhe Yu。

B. Datasets

B. 数据集

The vision-language datasets are based on the dataset mixtures from Chen et al. (2023b) and Driess et al. (2023). The bulk of this data consists of the WebLI dataset, which is around 10B image-text pairs across 109 languages, filtered to the top 10% scoring cross-modal similarity examples to give 1B training examples. Many other captioning and vision question answering datasets are included as well, and more info on the dataset mixtures can be found in Chen et al. (2023b) for RT-2-PaLI-X, and Driess et al. (2023) for RT-2-PaLM-E. When co-fine-tuning RT-2-PaLI-X, we do not use the Episodic WebLI dataset described by Chen et al. (2023a).

视觉语言数据集基于Chen等人(2023b)和Driess等人(2023)的混合数据集。这些数据主要由WebLI数据集组成,包含大约10亿个图像文本对,跨109种语言,经过筛选,选取了最高得分的跨模态相似性示例的前10%,得到了10亿个训练示例。还包括许多其他字幕和视觉问题回答数据集,有关数据集混合的更多信息可以在Chen等人(2023b)的RT-2-PaLI-X和Driess等人(2023)的RT-2-PaLM-E中找到。在对RT-2-PaLI-X进行共同微调时,我们不使用Chen等人(2023a)描述的Episodic WebLI数据集。

The robotics dataset is based on the dataset from Brohan et al. (2022). This consists of demonstration episodes collected with a mobile manipulation robot. Each demonstration is annotated with a natural language instruction from one of seven skills: “Pick Object”, “Move Object Near Object”, “Place Object Upright”, “Knock Object Over”, “Open Drawer”, “Close Drawer”, “Place Object into Receptacle”, and “Pick Object from Receptacle and place on the counter”. Further details can be found in Brohan et al. (2022).

机器人数据集基于Brohan等人(2022)的数据集。这包括使用移动操纵机器人收集的演示片段。每个演示都用自然语言指令注释,其中包含七种技能之一:“Pick Object”、“Move Object Near Object”、“Place Object Upright”、“Knock Object Over”、“Open Drawer”、“Close Drawer”、“Place Object into Receptacle” 和 “Pick Object from Receptacle and place on the counter”。有关详细信息,请参阅Brohan等人(2022)。

RT-2-PaLI-X weights the robotics dataset such that it makes up about 50% of the training mixture for co-fine-tuning. RT-2-PaLM-E weights the robotics dataset to be about 66% of the training mixture.

RT-2-PaLI-X对机器人数据集进行权重处理,使其在共同微调的训练混合中占约50%。RT-2-PaLM-E对机器人数据集进行权重处理,使其在训练混合中占约66%。

For the results on Language-Table in Table 1, our model is trained on the Language-Table datasets from Lynch et al. (2022). Our model is co-fine-tuned on several prediction tasks: (1) predict the action, given two consecutive image frames and a text instruction; (2) predict the instruction, given image frames; (3) predict the robot arm position, given image frames; (4) predict the number of timesteps between given image frames; and (5) predict whether the task was successful, given image frames and the instruction.

对于表格1中的Language-Table结果,我们的模型在Lynch等人(2022)的Language-Table数据集上进行训练。我们的模型在几个预测任务上进行了共同微调:

(1)给定两个连续的图像帧和文本指令,预测动作;

(2)给定图像帧,预测指令;

(3)给定图像帧,预测机器人手臂位置;

(4)给定图像帧,预测两个图像帧之间的时间步数;

(5)给定图像帧和指令,预测任务是否成功。

C. Baselines

C. 基线

We compare our method to multiple state-of-the-art baselines that challenge different aspects of our method. All of the baselines use the exact same robotic data.

我们将我们的方法与多个挑战我们方法不同方面的最新基线进行比较。所有的基线都使用相同的机器人数据。

• RT-1: Robotics Transformer 1 Brohan et al. (2022) is a transformer-based model that achieved state-of-the-art performance on a similar suite of tasks when it was published. The model does not use VLM-based pre-training so it provides an important data point demonstrating whether VLM-based pre-training matters.

RT-1: 机器人Transformer 1 Brohan等人(2022)是一种基于Transformer的模型,在发布时在类似的任务套件上取得了最新的性能。该模型不使用基于VLM的预训练,因此它提供了一个重要的数据点,证明了基于VLM的预训练是否重要。

VC-1: VC-1 Majumdar et al. (2023a) is a visual foundation model that uses pre-trained visual representations specifically designed for robotics tasks. We use pre-trained representations from the VC-1 ViT-L model. Since VC-1 does not include language conditioning, we add this by separately embedding the language command via Universal Sentence Encoder Cer et al. (2018) to enable comparison to our method. In particular, we concatenate the resulting language embedding tokens to the image tokens produced by VC-1, and pass the concatenated token sequences through token learner Ryoo et al. (2021). The token sequences produced by token learner are then consumed by an RT-1 decoder-only transformer model to predict robot action tokens. We train the VC-1 baseline end-to-end and unfreeze the VC-1 weights during training, since this led to far better results than using frozen VC-1 weights.

VC-1: VC-1 Majumdar等人(2023a)是一个视觉基础模型,使用专门设计用于机器人任务的预训练视觉表示。我们使用VC-1 ViT-L模型的预训练表示。由于VC-1不包括语言调节,我们通过单独嵌入语言命令(通过Universal Sentence Encoder Cer等人(2018))来添加这一点,以便与我们的方法进行比较。特别地,我们将由VC-1生成的图像标记的结果与由语言嵌入标记生成的标记序列连接起来,并通过令牌学习器Ryoo等人(2021)将连接的令牌序列传递。令牌学习器产生的标记序列然后由一个仅包含解码器的RT-1 Transformer模型消耗,以预测机器人动作标记。我们端对端地训练VC-1基线,并在训练期间解冻VC-1权重,因为这比使用冻结的VC-1权重效果要好得多。

• R3M: R3M Nair et al. (2022b) is a similar method to VC-1 in that R3M uses pre-trained visual-language representations to improve policy training. In this case the authors use Ego4D dataset Grauman et al. (2022) of human activities to learn the representation that is used by the policy. Both VC-1 and R3M test different state-of-the-art representation learning methods as an alternative to using a VLM. To obtain a language-conditioned policy from the R3M pretrained representation, we follow the same procedure as described above for VC-1, except we use the R3M ResNet50 model to obtain the image tokens, and unfreeze it during training.

R3M: R3M Nair等人(2022b)是一种与VC-1类似的方法,因为R3M使用预训练的视觉语言表示来改善策略训练。在这种情况下,作者使用了人类活动的Ego4D数据集Grauman等人(2022)来学习由策略使用的表示。VC-1和R3M测试了与使用VLM相比的不同最新表示学习方法。为了从R3M预训练的表示中获得语言调节策略,我们按照上述VC-1的相同过程进行操作,只是我们使用R3M ResNet50模型来获取图像标记,并在训练期间解冻它。

• MOO: MOO Stone et al. (2023) is an object-centric approach, where a VLM is first used to specify the object of interest in a form of a single, colored pixel in the original image. This pixelmodified image is then trained with an end-to-end policy to accomplish a set of manipulation tasks. This baseline corresponds to a situation where a VLM is used as a separate module that enhances perception but its representations are not used for policy learning.

MOO: MOO Stone等人(2023)是一种以对象为中心的方法,其中首先使用VLM来指定原始图像中感兴趣的对象,形成一个单一的着色像素。然后,通过端到端策略对修改后的图像进行训练,以完成一组操纵任务。这个基线对应于使用VLM作为一个独立模块来增强感知,但其表示不用于策略学习的情况。

D. VLMs for RT-2

D. 用于RT-2的VLMs

The PaLI-X model architecture consists of a ViT-22B Dehghani et al. (2023) to process images, which can accept sequences of 𝑛 images, leading to 𝑛× 𝑘 tokens per image, where 𝑘 is the number of patches per image. The image tokens passing over a projection layer is then consumed by an encoder-decoder backbone of 32B parameters and 50 layers, similar to UL2 Tay et al. (2023), which jointly processes text and images as embeddings to generate output tokens in an auto-regressive manner. The text input usually consists of the type of task and any additional context (e.g., “Generate caption in ⟨lang⟩” for captioning tasks or “Answer in ⟨lang⟩: question” for VQA tasks).

PaLI-X模型架构包括一个ViT-22B(Dehghani等人,2023)来处理图像,可以接受𝑛个图像的序列,每个图像有𝑛× 𝑘个标记,其中𝑘是每个图像的块数。经过投影层的图像标记然后由一个包含32B参数和50个层的编码器-解码器主干(类似于UL2 Tay等人,2023)消耗,该主干以自回归方式联合处理文本和图像,生成输出标记。文本输入通常包括任务的类型和任何额外的上下文(例如,对于标题任务,文本输入可能是"Generate caption in ⟨lang⟩“,对于VQA任务,文本输入可能是"Answer in ⟨lang⟩: question”)。

The PaLI-3B model trained on Language-Table (Table 1) uses a smaller ViT-G/14 (Zhai et al., 2022) (2B parameters) to process images, and UL2-3B (Tay et al., 2023) for the encoder-decoder network.

在Language-Table上训练的PaLI-3B模型(表1)使用较小的ViT-G/14(Zhai等人,2022)(2B参数)来处理图像,并使用UL2-3B(Tay等人,2023)进行编码器-解码器网络。

The PaLM-E model is based on a decoder-only LLM that projects robot data such as images and text into the language token space and outputs text such as high-level plans. In the case of the used PaLM-E-12B, the visual model used to project images to the language embedding space is a ViT-4B Chen et al. (2023b). The concatenation of continuous variables to textual input allows PaLM-E to be fully multimodal, accepting a wide variety of inputs such as multiple sensor modalities, object-centric representations, scene representations and object entity referrals.

PaLM-E模型基于一个仅解码的LLM,将机器人数据(例如图像和文本)投影到语言令牌空间,并输出文本(例如高级计划)。在使用的PaLM-E-12B中,用于将图像投影到语言嵌入空间的视觉模型是ViT-4B(Chen等人,2023b)。将连续变量连接到文本输入允许PaLM-E完全多模态,接受各种输入,例如多个传感器模态,以物体为中心的表示,场景表示和物体实体引用。

E. Training Details

E. 训练细节

We perform co-fine-tuning on pre-trained models from the PaLI-X (Chen et al., 2023a) 5B & 55B model, PaLI (Chen et al., 2023b) 3B model and the PaLM-E (Driess et al., 2023) 12B model. For RT-2-PaLI-X-55B, we use learning rate 1e-3 and batch size 2048 and co-fine-tune the model for 80K gradient steps whereas for RT-2-PaLI-X-5B, we use the same learning rate and batch size and co-fine-tune the model for 270K gradient steps. For RT-2-PaLM-E-12B, we use learning rate 4e-4 and batch size 512 to co-fine-tune the model for 1M gradient steps. Both models are trained with the next token prediction objective, which corresponds to the behavior cloning loss in robot learning. For RT-2-PaLI-3B model used for Language-Table results in Table 1, we use learning rate 1e-3 and batch size 128 to co-fine-tune the model for 300K gradient steps.

我们对来自PaLI-X(Chen等人,2023a)5B和55B模型、PaLI(Chen等人,2023b)3B模型以及PaLM-E(Driess等人,2023)12B模型的预训练模型进行了共同微调。对于RT-2-PaLI-X-55B,我们使用学习率1e-3和批大小2048,对模型进行80K个梯度步的共同微调;而对于RT-2-PaLI-X-5B,我们使用相同的学习率和批大小,对模型进行270K个梯度步的共同微调。对于RT-2-PaLM-E-12B,我们使用学习率4e-4和批大小512进行100万个梯度步的共同微调。这两个模型都使用下一个标记预测目标进行训练,这对应于机器人学习中的行为克隆损失。对于在表1中显示的用于Language-Table结果的RT-2-PaLI-3B模型,我们使用学习率1e-3和批大小128进行了30万个梯度步的共同微调。

F. Evaluation Details

F. 评估细节

F.1. Evaluation Scenarios

F.1. 评估场景

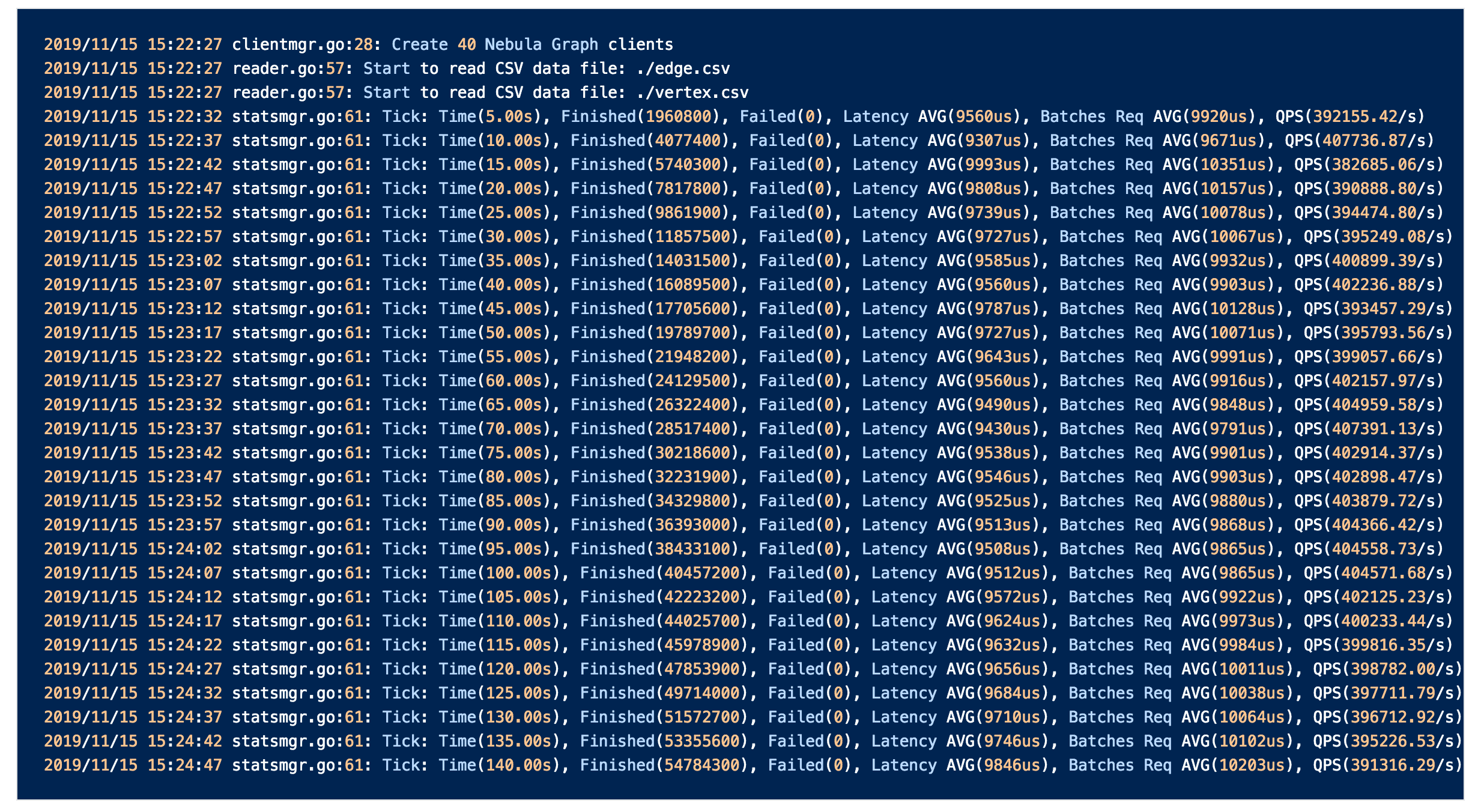

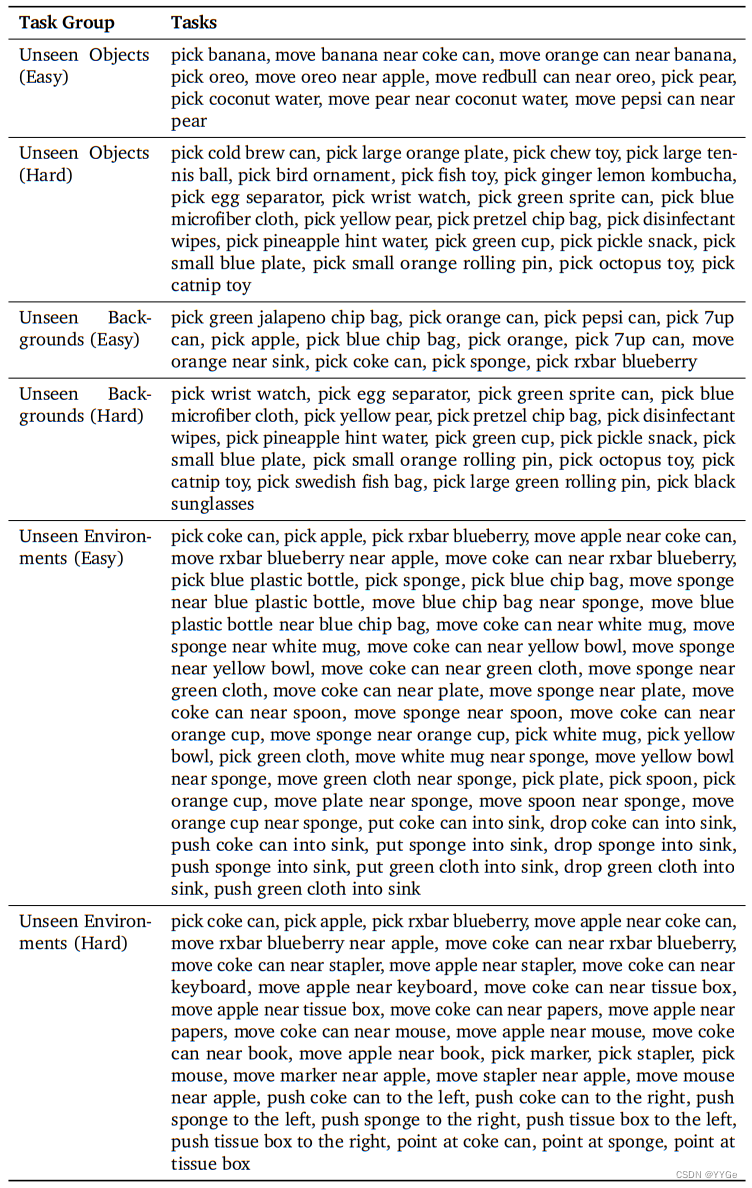

For studying the emergent capabilities of RT-2 in a quantitative manner, we study various challenging semantic evaluation scenarios that aim to measure capabilities such as reasoning, symbol understanding, and human recognition. A visual overview of a subset of these scenes is provided in Figure 8, and the full list of instructions used for quantiative evalution is shown in Table 3.

为了定量研究RT-2的新能力,我们研究了各种具有挑战性的语义评估场景,旨在测量推理、符号理解和人类识别等能力。这些场景的一个子集的视觉概述在图8中提供,用于定量评估的完整指令列表在表3中显示。

F.2. Evaluation Instructions

F.2. 评估指令

Table 2 lists natural language instructions used in model evaluations for unseen objects, backgrounds, and environments. Each instruction was run between 1-5 times, depending on the number of total instructions in that evaluation set. Table 3 lists natural language instructions used to evaluate quantitative emergent evals. Each instruction was run 5 times.

表2(文章末尾)列出了用于模型评估的自然语言指令,用于评估看不见的对象、背景和环境。每个指令运行1-5次,具体取决于该评估集中的指令总数。表3列出了用于评估定量新能力的自然语言指令。每个指令运行5次。

Figure 8 | An overview of some of the evaluation scenarios used to study the emergent capabilities of RT-2. They focus on three broad categories, which are (a) reasoning, (b) symbol understanding, and © human recognition. The visualized instructions are a subset of the full instructions, which are listed in Appendix F.2.

Table 3 | Natural language instructions used for quantitative emergent evalutions.

表3 | 用于定量新能力评估的自然语言指令。

G. Example Failure Cases

G. 示例失败案例

In Fig. 9 we provide examples of a notable type of failure case in the Language Table setting, with the RT-2 model not generalizing to unseen object dynamics. In these cases, although the model is able to correctly attend to the language instruction and move to the first correct object, it is not able to control the challenging dynamics of these objects, which are significantly different than the small set of block objects that have been seen in this environment Lynch et al. (2022). Then pen simply rolls off the table (Fig. 9, left), while the banana’s center-of-mass is far from where the robot makes contact (Fig. 9, right). We note that pushing dynamics are notoriously difficult to predict and control Yu et al. (2016). We hypothesize that greater generalization in robot-environment interaction dynamics may be possible by further scaling the datasets across diverse environments and objects – for example, in this case, datasets that include similar types of more diverse pushing dynamics Dasari et al. (2019).

在图9中,我们提供了在语言表设置中一种显著类型的失败案例,其中RT-2模型未能推广到看不见的对象动态。在这些情况下,尽管模型能够正确关注语言指令并移动到第一个正确的对象,但它无法控制这些对象的具有挑战性的动态,这与在此环境中看到的小块对象的小集合明显不同Lynch et al. (2022)。然后,笔只是滚落桌子(图9,左),而香蕉的质心远离机器人接触的地方(图9,右)。我们注意到,推动动态通常很难预测和控制Yu et al. (2016)。我们假设通过在各种环境和对象之间进一步扩展数据集,例如在这种情况下,包括类似更多样化的推动动态Dasari et al. (2019)的数据集,可以实现对机器人-环境交互动态更广泛的推广。

In addition, despite RT-2’s promising performance on real world manipulation tasks in qualitative and quantitative emergent evaluations, we still find numerous notable failure cases. For example, with the current training dataset composition and training method, RT-2 seemed to perform poorly at:

此外,尽管RT-2在定性和定量新能力评估中在现实世界的操纵任务上表现出色,我们仍然发现了许多显著的失败案例。例如,使用当前的训练数据集组成和训练方法,RT-2在以下方面表现不佳:

• Grasping objects by specific parts, such as the handle

• Novel motions beyond what was seen in the robot data, such as wiping with a towel or tool use

• Dexterous or precise motions, such as folding a towel • Extended reasoning requiring multiple layers of indirection

• 通过特定部分抓取物体,如把手。

• 超出机器人数据中所见的新动作,如用毛巾擦拭或使用工具。

• 灵巧或精准的动作,如折叠毛巾 • 需要多层间接的扩展推理。

Figure 9 | Qualitative example failure cases in the real-world failing to generalize to unseen object dynamics.

图9 | 真实世界中定性失败案例的示例,未能推广到看不见的对象动态。

H.Quantitative Experimental Results

H.定量实验结果

H.1. Overall Performance, for Section 4.1

H.1.整体性能,见第4.1节

Table 4 lists our quantitative overall evaluation results. We find that RT-2 performs as well or better than baselines on seen tasks and significantly outperforms baselines on generalization to unseen objects, backgrounds, and environments.

表4列出了我们的整体性能定量评估结果。我们发现RT-2在已见任务上的表现与或优于基线,在对未见对象、背景和环境进行泛化方面明显优于基线。

Table 4 | Overall performance of two instantiations of RT-2 and baselines across seen training tasks as well as unseen evaluations measuring generalization to novel objects, novel backgrounds, and novel environments.

表4 | RT-2两个实例和基线在已见训练任务以及对新颖对象、新颖背景和新颖环境泛化进行定量评估的整体性能。

H.2. Emergent Evaluation, for Section 4.2

H.2.新能力评估,见第4.2节

Table 5 lists all of our quantitative emergent evaluation results. We find that RT-2 performs 2x to 3x better than RT-1 on these new instructions, without any additional robotic demonstrations. This showcases how our method allows us to leverage capabilities from pretraining on web-scale vision-language datasets.

表5列出了我们的所有定量新能力评估结果。我们发现RT-2在这些新指令上的表现比RT-1好2倍到3倍,而没有额外的机器人演示。这展示了我们的方法如何允许我们利用在Web规模的视觉语言数据集上进行预训练的能力。

Table 5 | Performance of RT-2 and baselines on quantitative emergent evaluations.

表5 | RT-2和基线在定量新能力评估中的表现。

H.3. Size and Training Ablations, for Section 4.3

H.3. 模型大小和训练策略消融实验,见第4.3节

Table 6 details quantitative results for ablations across model size and training approach. Across each, we see that model size plays an important role in performance and that co-fine-tuning outperforms fine-tuning, which outperforms training from scratch.

表6详细说明了在模型大小和训练方法方面的消融实验的定量结果。在每个实验中,我们发现模型大小在性能方面起着重要作用,而联合微调优于微调,微调优于从头开始训练。

Table 6 | Ablations of RT-2 showcasing the impact of parameter count and training strategy on generalization.

表6 | RT-2参数数量和训练策略对泛化性能的影响的消融实验。

Additional Chain-Of-Thought Reasoning Results

额外的链式思考推理结果

1The original pre-training data mixture used in PaLM-E-12B (as described in Driess et al. (2023)) includes robot images

for high-level VQA planning tasks that can be similar to images encountered in generalization scenarios. However, none of

those training examples include low-level actions that are evaluated in this experiment.

I. 注解:PaLM-E-12B的原始预训练数据混合(如Driess等人(2023)中所述)包括用于高级VQA规划任务的机器人图像,这些图像可能类似于在泛化场景中遇到的图像。然而,这些训练示例中没有包含在此实验中评估的低级动作。

We present additional examples of chain-of-thought reasoning rollouts accomplished with RT-2-PaLME, as described in Sec. 4.4, in Figure 10.

我们在图10中提供了RT-2-PaLME完成的额外链式思考推理展示,如第4.4节所述。

Figure 10 | Additional examples of RT-2 with chain-of-thought reasoning

图10 | RT-2带链式思考推理的额外示例

Table 2 | Natural language instructions used for evaluations testing controlled distribution shifts along the dimension of novel objects, novel environments, and novel backgrounds. For each category, we introduce evaluation settings with smaller distribution shifts as well as larger distribution shifts. A visualization of these scenarios if shown in Figure 3.

表2 | 用于测试在新颖对象、新颖环境和新颖背景维度上的受控分布变化的评估的自然语言指令。对于每个类别,我们引入了具有较小和较大分布变化的评估设置。这些场景的可视化如图3所示。