1 Import the required libraries

import warnings

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
import missingno as msno
import xgboost as xgb
import tensorflow as tf

from sklearn.model_selection import train_test_split, cross_val_score, KFold, RandomizedSearchCV
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.metrics import confusion_matrix, classification_report, accuracy_score
from sklearn.preprocessing import StandardScaler
from sklearn.tree import plot_tree
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.regularizers import l2  # L2 weight regularization

# Suppress warnings (optional); this silences all Python warnings, not only Matplotlib's
warnings.filterwarnings("ignore")

# Use a font that can render Chinese characters, and display minus signs correctly
plt.rcParams['font.sans-serif'] = ['SimHei']
plt.rcParams['axes.unicode_minus'] = False

2 Load and preprocess the data

df = pd.read_excel('10.xls')

# Encode the categorical columns as integer codes
for column in ['Fault type', 'lithology']:
    df[column] = pd.factorize(df[column])[0]
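To make the encoding step concrete, here is a minimal sketch of what `pd.factorize` returns. The sample values are hypothetical stand-ins; the actual contents of the `Fault type` column in `10.xls` are not shown in this article.

```python
import pandas as pd

# Hypothetical sample values; the real 'Fault type' column may contain different labels
fault_type = pd.Series(['normal', 'reverse', 'normal', 'strike-slip', None])

# factorize returns (codes, uniques): one integer code per row, plus the distinct labels
codes, uniques = pd.factorize(fault_type)

print(codes.tolist())   # integer code for each row; missing values are coded as -1
print(list(uniques))    # the label at position i corresponds to code i
```

Codes are assigned in order of first appearance, so they carry no physical meaning, yet the network will treat them as ordinary numbers. If that is a concern, one-hot encoding (for example with `pd.get_dummies`) is a common alternative. It is also worth checking for `-1` codes, since they indicate missing values in the original column.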

df

3 Model construction

# Assumes df has already been loaded and preprocessed

# Separate the features from the target
X = df.drop('magnitude', axis=1)
y = df['magnitude']

# Split into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Scale the features: fit on the training set only, then apply the same transform to the test set
scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)

# Build the model with L2 regularization
model = Sequential([
    Dense(64, activation='relu', input_shape=(X_train_scaled.shape[1],), kernel_regularizer=l2(0.01)),  # L2 penalty on the first Dense layer's weights
    Dense(32, activation='relu', kernel_regularizer=l2(0.01)),  # L2 penalty on the second Dense layer's weights as well
    Dense(1, activation='linear')  # regression task, so the output layer uses a linear activation
])

# Compile the model with a regression loss
model.compile(optimizer='adam', loss='mean_squared_error', metrics=['mean_absolute_error'])

# Train the model
history = model.fit(X_train_scaled, y_train, epochs=100, validation_split=0.2, verbose=1)

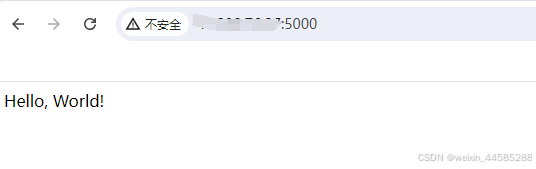

Figure 3-1
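The `kernel_regularizer=l2(0.01)` argument used in the model adds, for each regularized layer, a penalty equal to 0.01 times the sum of the squared kernel weights to the training loss. The sketch below reproduces that arithmetic in NumPy. The weight matrix `W` and the helper `l2_penalty` are made up for illustration and are not taken from the trained model.

```python
import numpy as np

def l2_penalty(weights, factor=0.01):
    """Return factor * sum(w^2), the form of penalty that Keras' l2 regularizer applies."""
    return factor * np.sum(np.square(weights))

# Hypothetical 2x2 kernel; a real Dense(64) kernel here would have shape (n_features, 64)
W = np.array([[1.0, -2.0],
              [0.5,  0.0]])

penalty = l2_penalty(W)  # 0.01 * (1.0 + 4.0 + 0.25)
print(penalty)
```

Since this penalty is part of the loss that Keras reports during training, the `loss` and `val_loss` values are expected to sit somewhat above the plain mean squared error, while the `mean_absolute_error` metric is not affected by it.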

4 Model evaluation

# Assumes the model has been trained with model.fit() and the history object is available

# Plot the training and validation loss
plt.figure(figsize=(10, 3))
plt.plot(history.history['loss'], color='lime', label='Training Loss')
plt.plot(history.history['val_loss'], color='black', label='Validation Loss')
plt.title('Training and Validation Loss')
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.legend()
plt.grid(True)

# The mean absolute error (MAE) can be plotted only if it was passed to model.compile as a metric
if 'mean_absolute_error' in history.history:
    plt.figure(figsize=(10, 3))
    plt.plot(history.history['mean_absolute_error'], color='red', label='Training MAE')
    plt.plot(history.history['val_mean_absolute_error'], color='lime', label='Validation MAE')
    plt.title('Training and Validation Mean Absolute Error')
    plt.xlabel('Epoch')
    plt.ylabel('MAE')
    plt.legend()
    plt.grid(True)

# Show the figures
plt.show()

Figure 4-1
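The curves above track the training run, but they do not measure performance on the held-out test set. One possible follow-up, sketched here with `mean_squared_error` and `r2_score` from scikit-learn, is to score predictions against the test targets. To keep the example self-contained, `y_true` and `y_pred` are made-up stand-in arrays. In the workflow of this article they would presumably be `y_test` and `model.predict(X_test_scaled).ravel()`.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score

# Stand-in values for illustration only; not results from the trained model
y_true = np.array([5.0, 6.0, 7.0, 8.0])
y_pred = np.array([5.5, 5.5, 7.5, 7.5])

mse = mean_squared_error(y_true, y_pred)  # mean of the squared errors
rmse = np.sqrt(mse)                       # same units as the target (magnitude)
r2 = r2_score(y_true, y_pred)             # 1 - SS_res / SS_tot

print(f"MSE: {mse:.3f}, RMSE: {rmse:.3f}, R^2: {r2:.3f}")
```

RMSE is often easier to interpret than MSE because it is expressed in the same units as the target, and R^2 indicates how much of the variance in the target the predictions account for.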
