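Electricity Demand Forecasting Challenge

Competition link: https://challenge.xfyun.cn/topic/info?type=electricity-demand&option=ssgy&ch=dw24_uGS8Gs

Study guide: https://datawhaler.feishu.cn/wiki/CuhBw9vBaiG1nJklIPkcRhqVnmk

The task in one sentence:

[Train a time-series forecasting model to support electricity demand forecasting]

Accurate electricity demand forecasts are essential for stable grid operation, effective energy management, and the integration of renewable energy.

Task

Given N days of historical electricity consumption series (plus related information) for multiple houses, predict each house's electricity consumption.

Data overview

The data consists of a training set and a test set. To keep the competition fair, the daily dates are anonymized and labeled 1 to N.

Day 1 is the most recent day in the dataset, and days 1-10 form the test set.

The fields are id (house id), dt (day label), type (house type), and target (actual electricity consumption).

Baseline code

All code below was run on Kaggle, which is convenient because no environment setup is needed and a GPU is available.

Task1

A moving-average prediction. It scores around 373, which is not great, so I recommend starting from Task2.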

import pandas as pd
import numpy as np

train = pd.read_csv('/kaggle/input/2024abcd/train.csv')
test = pd.read_csv('/kaggle/input/2024abcd/test.csv')

# Per-id mean of target over the most recent training days (dt 11-20)
target_mean = train[train['dt']<=20].groupby(['id'])['target'].mean().reset_index()

# Merge target_mean into the test set as the prediction
test = test.merge(target_mean, on=['id'], how='left')

# Save the submission file locally
test[['id','dt','target']].to_csv('submit1.csv', index=None)

Task2: Getting started with LightGBM and feature engineering

import numpy as np
import pandas as pd
import lightgbm as lgb
from sklearn.metrics import mean_squared_log_error, mean_absolute_error, mean_squared_error
import tqdm  # progress bars for loops
import sys
import os
import gc  # interface to the garbage collector, useful for manual memory management
import argparse  # command-line argument parsing
import warnings
warnings.filterwarnings('ignore')

train = pd.read_csv('/kaggle/input/2024abcd/train.csv')
test = pd.read_csv('/kaggle/input/2024abcd/test.csv')

train.head(),train.shape,test.head(),test.shape

( id dt type target

0 00037f39cf 11 2 44.050

1 00037f39cf 12 2 50.672

2 00037f39cf 13 2 39.042

3 00037f39cf 14 2 35.900

4 00037f39cf 15 2 53.888,

(2877305, 4),

id dt type

0 00037f39cf 1 2

1 00037f39cf 2 2

2 00037f39cf 3 2

3 00037f39cf 4 2

4 00037f39cf 5 2,

(58320, 3))

Visual inspection

# Bar chart of the mean target for each type
import matplotlib.pyplot as plt

type_target_df = train.groupby('type')['target'].mean().reset_index()
plt.figure(figsize=(8, 4))
plt.bar(type_target_df['type'], type_target_df['target'], color=['blue', 'green'])
plt.xlabel('Type')
plt.xticks([i for i in range(0,19)])
plt.ylabel('Average Target Value')
plt.title('Bar Chart of Target by Type')
plt.show()

# Line chart of target over dt for id 00037f39cf
specific_id_df = train[train['id'] == '00037f39cf']
plt.figure(figsize=(10, 5))
plt.plot(specific_id_df['dt'], specific_id_df['target'], marker='o', linestyle='-')
plt.xlabel('DateTime')
plt.ylabel('Target Value')
plt.title("Line Chart of Target for ID '00037f39cf'")
plt.show()

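Feature engineering

Two kinds of features are built here: lag (historical shift) features and window statistics.

- Lag features: shift the values from earlier time points onto the current time point, so that each row carries information about its own past. The lags start at 10 because the test set spans 10 days, so lag 10 is the smallest lag that is available for every test row.

- Window statistics: choose a window size and compute statistics such as the mean, maximum, minimum, median, and variance over that window, which reflect how the series has changed recently. The code below averages three lagged values (lags 10, 11, and 12) and uses the result as a feature for day d.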
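To make the direction of the shift concrete, here is a minimal sketch on toy data. The frame `toy`, the lags 2 and 3, and the column names `lag2`, `lag3`, and `win2_mean` are made up for illustration; only the sort-then-shift pattern mirrors the baseline.

```python
import pandas as pd

# Toy data for one house. As in the competition, dt=1 is the most recent day.
# dt 1-2 have no target and play the role of the test set.
toy = pd.DataFrame({
    "id": ["a"] * 6,
    "dt": [1, 2, 3, 4, 5, 6],
    "target": [None, None, 30.0, 20.0, 10.0, 40.0],
})

# Sort by dt descending so older days come first within each id.
# shift(k) then gives each row the target from k days earlier (dt + k).
toy = toy.sort_values(["id", "dt"], ascending=False).reset_index(drop=True)

for lag in (2, 3):
    toy[f"lag{lag}"] = toy.groupby("id")["target"].shift(lag)

# A window statistic built from the lag columns: the mean of lag 2 and lag 3.
toy["win2_mean"] = (toy["lag2"] + toy["lag3"]) / 2

print(toy)
```

With a 2-day "test set" the smallest usable lag is 2, in the same way that the 10-day test set in the competition makes 10 the smallest usable lag.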

# Concatenate the training and test data, then sort
data = pd.concat([test, train], axis=0, ignore_index=True)
data = data.sort_values(['id','dt'], ascending=False).reset_index(drop=True)

# Lag features
for i in range(10,30):
    data[f'last{i}_target'] = data.groupby(['id'])['target'].shift(i)

# Window statistics
data['win3_mean_target'] = (data['last10_target'] + data['last11_target'] + data['last12_target']) / 3

# Split back into training and test sets
train = data[data.target.notnull()].reset_index(drop=True)
test = data[data.target.isnull()].reset_index(drop=True)

# Select the input features
train_cols = [f for f in data.columns if f not in ['id','target']]

test.head()

| | id | dt | type | target | last10_target | last11_target | last12_target | last13_target | last14_target | last15_target | ... | last21_target | last22_target | last23_target | last24_target | last25_target | last26_target | last27_target | last28_target | last29_target | win3_mean_target |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | fff81139a7 | 10 | 5 | NaN | 29.571 | 33.691 | 27.034 | 33.063 | 30.109 | 36.227 | ... | 32.513 | 32.984 | 31.413 | 34.685 | 23.518 | 29.392 | 31.081 | 29.185 | 30.631 | 30.098667 |

| 1 | fff81139a7 | 9 | 5 | NaN | 28.677 | 29.571 | 33.691 | 27.034 | 33.063 | 30.109 | ... | 28.664 | 32.513 | 32.984 | 31.413 | 34.685 | 23.518 | 29.392 | 31.081 | 29.185 | 30.646333 |

| 2 | fff81139a7 | 8 | 5 | NaN | 21.925 | 28.677 | 29.571 | 33.691 | 27.034 | 33.063 | ... | 26.588 | 28.664 | 32.513 | 32.984 | 31.413 | 34.685 | 23.518 | 29.392 | 31.081 | 26.724333 |

| 3 | fff81139a7 | 7 | 5 | NaN | 29.603 | 21.925 | 28.677 | 29.571 | 33.691 | 27.034 | ... | 33.816 | 26.588 | 28.664 | 32.513 | 32.984 | 31.413 | 34.685 | 23.518 | 29.392 | 26.735000 |

| 4 | fff81139a7 | 6 | 5 | NaN | 30.279 | 29.603 | 21.925 | 28.677 | 29.571 | 33.691 | ... | 37.080 | 33.816 | 26.588 | 28.664 | 32.513 | 32.984 | 31.413 | 34.685 | 23.518 | 27.269000 |

5 rows × 25 columns

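Model training and test-set prediction

Pay attention to how the training and validation sets are built: because the data is a time series, the split must strictly follow time order.

- Rows with dt >= 31 (the older days) are used as training data, and rows with dt <= 30 (the most recent labeled days) are used as validation data.

- This avoids leakage, because future data is never used to predict the past.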
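As a small illustration of that rule, the sketch below splits a toy frame on the day label. The helper name `time_split` and the cutoff of 13 are hypothetical; the baseline itself uses a cutoff of 30 inline.

```python
import pandas as pd

def time_split(df, cutoff, dt_col="dt"):
    """Split on the day label. Larger dt means older, so dt > cutoff is the past."""
    train_part = df[df[dt_col] > cutoff]
    valid_part = df[df[dt_col] <= cutoff]
    return train_part, valid_part

toy = pd.DataFrame({
    "id": ["a"] * 6,
    "dt": [11, 12, 13, 14, 15, 16],
    "target": [5.0, 6.0, 7.0, 8.0, 9.0, 10.0],
})

trn, val = time_split(toy, cutoff=13)

# Every training day is older than every validation day, so nothing leaks backwards.
print(sorted(trn["dt"]), sorted(val["dt"]))
```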

def time_model(lgb, train_df, test_df, cols):
    # Split into training and validation sets by time
    trn_x, trn_y = train_df[train_df.dt>=31][cols], train_df[train_df.dt>=31]['target']
    val_x, val_y = train_df[train_df.dt<=30][cols], train_df[train_df.dt<=30]['target']
    # Build the model input data
    train_matrix = lgb.Dataset(trn_x, label=trn_y)
    valid_matrix = lgb.Dataset(val_x, label=val_y)
    # LightGBM parameters
    lgb_params = {
        'boosting_type': 'gbdt',
        'objective': 'regression',
        'metric': 'mse',
        'min_child_weight': 5,
        'num_leaves': 2 ** 5,
        'lambda_l2': 10,
        'feature_fraction': 0.8,
        'bagging_fraction': 0.8,
        'bagging_freq': 4,
        'learning_rate': 0.05,
        'seed': 2024,
        'nthread' : 16,
        'verbose' : -1,
        'device': 'gpu',
        'gpu_platform_id': 0,
        'gpu_device_id': 0
    }
    # Train the model
    model = lgb.train(lgb_params, train_matrix, 50000, valid_sets=[train_matrix, valid_matrix],
                      categorical_feature=[], callbacks=[lgb.early_stopping(500), lgb.log_evaluation(500)])
    # Predict on the validation and test sets
    val_pred = model.predict(val_x, num_iteration=model.best_iteration)
    test_pred = model.predict(test_df[cols], num_iteration=model.best_iteration)
    # Offline score (y_true first, then y_pred)
    score = mean_squared_error(val_y, val_pred)
    print(score)
    return val_pred, test_pred

lgb_oof, lgb_test = time_model(lgb, train, test, train_cols)

# Save the submission file locally
test['target'] = lgb_test
test[['id','dt','target']].to_csv('submit2.csv', index=None)

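Task3: Trying a deep learning approach

Feature optimization

Three kinds of features are built here: lag features, difference features, and window statistics. Each has a clear rationale:

(1) Lag features: shifting the history gives each row the values from the previous period;

(2) Difference features: capture the change between adjacent periods and describe whether the series is rising or falling. On top of these you can also build ratios between adjacent values, second-order differences, and so on;

(3) Window statistics: choose different window sizes and compute the mean, maximum, minimum, median, and variance over each window, which reflect how the series has changed recently.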
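The difference, second-order difference, and ratio features mentioned in (2) can be sketched on toy data as follows. The frame `toy` and the column names `diff1`, `diff2`, and `ratio1` are invented for this example and are not part of the baseline.

```python
import pandas as pd

# Two toy houses with four consecutive values each.
toy = pd.DataFrame({
    "id": ["a"] * 4 + ["b"] * 4,
    "value": [10.0, 12.0, 15.0, 15.0, 5.0, 4.0, 8.0, 16.0],
})

grouped = toy.groupby("id")["value"]

# First-order difference: change from the previous row within the same id.
toy["diff1"] = grouped.diff(1)

# Second-order difference: the difference of the first-order differences.
toy["diff2"] = toy.groupby("id")["diff1"].diff(1)

# Ratio of each value to the previous one within the same id.
toy["ratio1"] = toy["value"] / grouped.shift(1)

print(toy)
```

Grouping by id matters here: the first row of house b gets NaN instead of a difference against the last row of house a.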

import numpy as np
import pandas as pd
import lightgbm as lgb
from sklearn.metrics import mean_squared_log_error, mean_absolute_error, mean_squared_error
import tqdm  # progress bars for loops
import sys
import os
import gc  # interface to the garbage collector, useful for manual memory management
import argparse  # command-line argument parsing
import warnings
warnings.filterwarnings('ignore')

train = pd.read_csv('/kaggle/input/2024abcd/train.csv')
test = pd.read_csv('/kaggle/input/2024abcd/test.csv')

train.head()

| | id | dt | type | target |

|---|---|---|---|---|

| 0 | 00037f39cf | 11 | 2 | 44.050 |

| 1 | 00037f39cf | 12 | 2 | 50.672 |

| 2 | 00037f39cf | 13 | 2 | 39.042 |

| 3 | 00037f39cf | 14 | 2 | 35.900 |

| 4 | 00037f39cf | 15 | 2 | 53.888 |

# Concatenate the training and test data
data = pd.concat([train, test], axis=0).reset_index(drop=True)  # drop the old index instead of adding it as a new column
data = data.sort_values(['id','dt'], ascending=False).reset_index(drop=True)

# Lag features
for i in range(10,36):
    data[f'target_shift{i}'] = data.groupby('id')['target'].shift(i)

# Lag + difference features
for i in range(1,4):
    data[f'target_shift10_diff{i}'] = data.groupby('id')['target_shift10'].diff(i)

# Window statistics
# groupby(...).rolling(...) returns rows ordered by ascending id, while data is sorted by
# descending id. Dropping the id level lets pandas align on the original row index
# instead of assigning .values by position.
for win in [15,30,50,70]:
    roll = data.groupby('id')['target'].rolling(window=win, min_periods=3, closed='left')
    data[f'target_win{win}_mean'] = roll.mean().reset_index(level=0, drop=True)
    data[f'target_win{win}_max'] = roll.max().reset_index(level=0, drop=True)
    data[f'target_win{win}_min'] = roll.min().reset_index(level=0, drop=True)
    data[f'target_win{win}_std'] = roll.std().reset_index(level=0, drop=True)

# Lag + window statistics
for win in [7,14,28,35,50,70]:
    roll = data.groupby('id')['target_shift10'].rolling(window=win, min_periods=3, closed='left')
    data[f'target_shift10_win{win}_mean'] = roll.mean().reset_index(level=0, drop=True)
    data[f'target_shift10_win{win}_max'] = roll.max().reset_index(level=0, drop=True)
    data[f'target_shift10_win{win}_min'] = roll.min().reset_index(level=0, drop=True)
    data[f'target_shift10_win{win}_sum'] = roll.sum().reset_index(level=0, drop=True)
    data[f'target_shift710win{win}_std'] = roll.std().reset_index(level=0, drop=True)

# Split back into training and test sets
train = data[data.target.notnull()].reset_index(drop=True)
test = data[data.target.isnull()].reset_index(drop=True)

# Select the input features
train_cols = [f for f in data.columns if f not in ['id','target']]

test.head()

| | id | dt | type | target | target_shift10 | target_shift11 | target_shift12 | target_shift13 | target_shift14 | target_shift15 | ... | target_shift10_win50_mean | target_shift10_win50_max | target_shift10_win50_min | target_shift10_win50_sum | target_shift710win50_std | target_shift10_win70_mean | target_shift10_win70_max | target_shift10_win70_min | target_shift10_win70_sum | target_shift710win70_std |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | fff81139a7 | 10 | 5 | NaN | 29.571 | 33.691 | 27.034 | 33.063 | 30.109 | 36.227 | ... | 36.15146 | 57.527 | 22.631 | 1807.573 | 6.716722 | 35.678629 | 57.527 | 10.779 | 2497.504 | 7.157341 |

| 1 | fff81139a7 | 9 | 5 | NaN | 28.677 | 29.571 | 33.691 | 27.034 | 33.063 | 30.109 | ... | 36.35390 | 57.527 | 22.631 | 1817.695 | 6.812526 | 36.159657 | 57.527 | 22.631 | 2531.176 | 6.566787 |

| 2 | fff81139a7 | 8 | 5 | NaN | 21.925 | 28.677 | 29.571 | 33.691 | 27.034 | 33.063 | ... | 36.02730 | 57.527 | 22.631 | 1801.365 | 6.929592 | 36.068243 | 57.527 | 22.631 | 2524.777 | 6.669118 |

| 3 | fff81139a7 | 7 | 5 | NaN | 29.603 | 21.925 | 28.677 | 29.571 | 33.691 | 27.034 | ... | 35.87510 | 57.527 | 22.631 | 1793.755 | 7.063492 | 35.954057 | 57.527 | 22.631 | 2516.784 | 6.770835 |

| 4 | fff81139a7 | 6 | 5 | NaN | 30.279 | 29.603 | 21.925 | 28.677 | 29.571 | 33.691 | ... | 36.05080 | 57.527 | 22.631 | 1802.540 | 7.086920 | 35.977400 | 57.527 | 22.631 | 2518.418 | 6.786546 |

5 rows × 79 columns

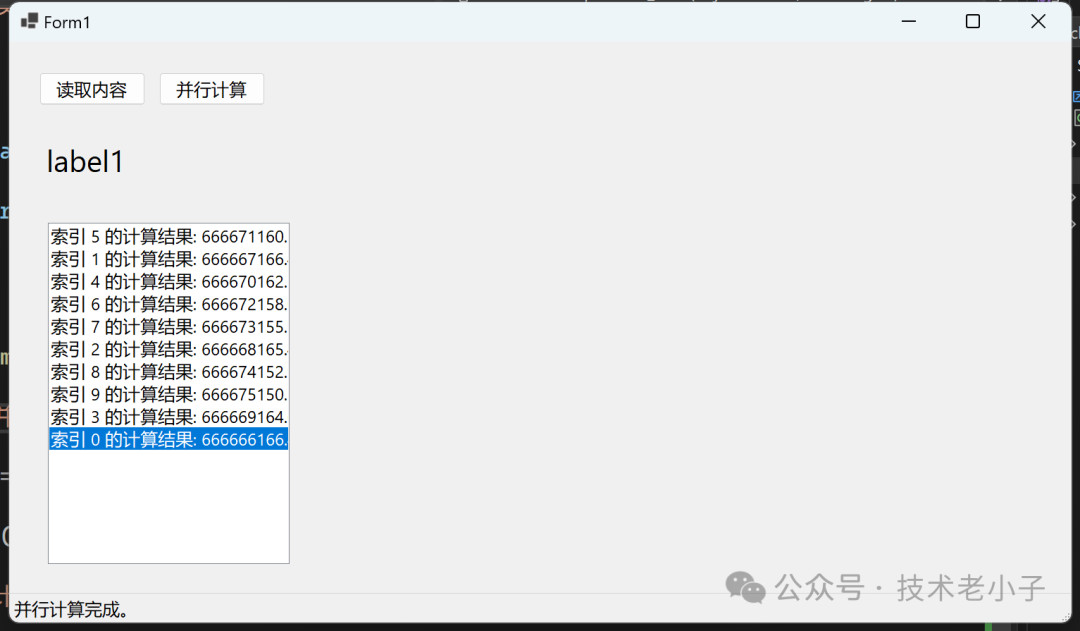

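Model fusion

Model fusion requires the outputs of several models, for example one prediction each from catboost, xgboost, and lightgbm. The three results can then be combined, most commonly with a direct weighted average.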
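A weighted average of several prediction vectors can be sketched like this. The function name `weighted_blend`, the toy predictions, and the weights are all assumptions made for illustration; the code later in this post uses a plain equal-weight average.

```python
import numpy as np

def weighted_blend(preds, weights):
    """Blend model predictions with weights that are normalized to sum to 1."""
    preds = np.asarray(preds, dtype=float)      # shape (n_models, n_samples)
    weights = np.asarray(weights, dtype=float)  # shape (n_models,)
    weights = weights / weights.sum()
    return weights @ preds

# Toy predictions from three hypothetical models for two samples.
lgb_pred = np.array([10.0, 20.0])
xgb_pred = np.array([12.0, 18.0])
cat_pred = np.array([14.0, 22.0])

blend = weighted_blend([lgb_pred, xgb_pred, cat_pred], weights=[2, 1, 1])
equal = weighted_blend([lgb_pred, xgb_pred, cat_pred], weights=[1, 1, 1])
print(blend, equal)
```

In practice the weights would usually be chosen from validation scores rather than fixed by hand.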

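Below we build a cv_model function that can use lightgbm, xgboost, or catboost internally. We run the three models in turn and then average their results.

Each model is evaluated offline with classic K-fold cross-validation. The overall procedure is:

- K-fold cross-validation randomly splits the samples into K parts;

- In each round, K-1 parts are used as the training set and the remaining part as the validation set;

- Once a round finishes, a different part is held out for validation, until every part has served as the validation set once;

- Finally, the test-set predictions of the K folds are averaged to give the final submission.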
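The procedure above can be sketched without any boosting library. In this dependency-light example the function `kfold_oof`, the least-squares stand-in model, and the toy data are all assumptions for illustration; the actual code in this post uses sklearn's KFold with the three boosting models.

```python
import numpy as np

def fit_predict(X_fit, y_fit, X_new):
    """Stand-in model: ordinary least squares with an intercept."""
    A = np.column_stack([X_fit, np.ones(len(X_fit))])
    coef, *_ = np.linalg.lstsq(A, y_fit, rcond=None)
    return np.column_stack([X_new, np.ones(len(X_new))]) @ coef

def kfold_oof(X, y, X_test, k=5, seed=2024):
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(X)), k)  # K random parts
    oof = np.zeros(len(X))               # out-of-fold predictions on the training rows
    test_pred = np.zeros(len(X_test))    # average of the K test predictions
    for i, valid_idx in enumerate(folds):
        # Train on the other K-1 parts and validate on part i.
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        oof[valid_idx] = fit_predict(X[train_idx], y[train_idx], X[valid_idx])
        test_pred += fit_predict(X[train_idx], y[train_idx], X_test) / k
    return oof, test_pred

# Noise-free linear toy data, so the stand-in model can recover it exactly.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 2))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 1.0
X_test = rng.normal(size=(5, 2))

oof, test_pred = kfold_oof(X, y, X_test)
print(np.abs(oof - y).max())
```

Each training row receives exactly one out-of-fold prediction, which is what later makes these predictions usable as stacking features.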

# This code takes a very long time to run, almost half an hour!
from sklearn.model_selection import StratifiedKFold, KFold, GroupKFold
import lightgbm as lgb
import xgboost as xgb
from catboost import CatBoostRegressor
from sklearn.metrics import mean_squared_error, mean_absolute_error

def cv_model(clf, train_x, train_y, test_x, clf_name, seed=2024):
    '''
    clf: the model to call (LightGBM, XGBoost, or CatBoost)
    train_x: training data
    train_y: labels for the training data
    test_x: test data
    clf_name: name of the model to use
    seed: random seed
    '''
    folds = 5
    kf = KFold(n_splits=folds, shuffle=True, random_state=seed)
    oof = np.zeros(train_x.shape[0])
    test_predict = np.zeros(test_x.shape[0])
    cv_scores = []

    for i, (train_index, valid_index) in enumerate(kf.split(train_x, train_y)):
        print('************************************ {} ************************************'.format(str(i+1)))
        # Use positional indexing for both features and labels
        trn_x, trn_y = train_x.iloc[train_index], train_y.iloc[train_index]
        val_x, val_y = train_x.iloc[valid_index], train_y.iloc[valid_index]

        if clf_name == "lgb":
            train_matrix = lgb.Dataset(trn_x, label=trn_y)
            valid_matrix = lgb.Dataset(val_x, label=val_y)
            params = {
                'boosting_type': 'gbdt',
                'objective': 'regression',
                'metric': 'mae',
                'min_child_weight': 6,
                'num_leaves': 2 ** 6,
                'lambda_l2': 10,
                'feature_fraction': 0.8,
                'bagging_fraction': 0.8,
                'bagging_freq': 4,
                'learning_rate': 0.1,
                'seed': 2024,
                'nthread': 16,
                'verbose': -1,
                'device': 'gpu'
            }
            model = lgb.train(params, train_matrix, 1000, valid_sets=[train_matrix, valid_matrix],
                              categorical_feature=[], callbacks=[lgb.early_stopping(100), lgb.log_evaluation(200)])
            val_pred = model.predict(val_x, num_iteration=model.best_iteration)
            test_pred = model.predict(test_x, num_iteration=model.best_iteration)

        if clf_name == "xgb":
            xgb_params = {
                'booster': 'gbtree',
                'objective': 'reg:squarederror',
                'eval_metric': 'mae',
                'max_depth': 5,
                'lambda': 10,
                'subsample': 0.7,
                'colsample_bytree': 0.7,
                'colsample_bylevel': 0.7,
                'eta': 0.1,
                'tree_method': 'gpu_hist',
                'seed': 520,
                'nthread': 16
            }
            train_matrix = xgb.DMatrix(trn_x, label=trn_y)
            valid_matrix = xgb.DMatrix(val_x, label=val_y)
            test_matrix = xgb.DMatrix(test_x)
            watchlist = [(train_matrix, 'train'), (valid_matrix, 'eval')]
            model = xgb.train(xgb_params, train_matrix, num_boost_round=1000, evals=watchlist, verbose_eval=200, early_stopping_rounds=100)
            val_pred = model.predict(valid_matrix)
            test_pred = model.predict(test_matrix)

        if clf_name == "cat":
            # The original dict listed random_seed twice (2023, then 11); only the last value
            # takes effect in a Python dict literal, so it is kept once here.
            params = {'learning_rate': 0.1, 'depth': 5, 'bootstrap_type': 'Bernoulli',
                      'od_type': 'Iter', 'od_wait': 100, 'random_seed': 11, 'allow_writing_files': False, 'task_type': 'GPU'}
            model = CatBoostRegressor(iterations=1000, **params)
            model.fit(trn_x, trn_y, eval_set=(val_x, val_y),
                      metric_period=200,
                      use_best_model=True,
                      cat_features=[],
                      verbose=1)
            val_pred = model.predict(val_x)
            test_pred = model.predict(test_x)

        oof[valid_index] = val_pred
        test_predict += test_pred / kf.n_splits

        score = mean_absolute_error(val_y, val_pred)
        cv_scores.append(score)
        print(cv_scores)

    return oof, test_predict

# Run the lightgbm model
lgb_oof, lgb_test = cv_model(lgb, train[train_cols], train['target'], test[train_cols], 'lgb')

# Run the xgboost model
xgb_oof, xgb_test = cv_model(xgb, train[train_cols], train['target'], test[train_cols], 'xgb')

# Run the catboost model
cat_oof, cat_test = cv_model(CatBoostRegressor, train[train_cols], train['target'], test[train_cols], 'cat')

# Fuse by simple averaging
final_test = (lgb_test + xgb_test + cat_test) / 3

# Save the submission file locally
test['target'] = final_test
test[['id','dt','target']].to_csv('submit3.1.csv', index=None)

# If the models above have not changed, save the current results so they can be read back later
# without rerunning the code above
import numpy as np
import pandas as pd

# Convert each numpy.ndarray to a pandas.DataFrame
lgb_oof_df = pd.DataFrame(lgb_oof, columns=['oof'])
lgb_test_df = pd.DataFrame(lgb_test, columns=['test'])
xgb_oof_df = pd.DataFrame(xgb_oof, columns=['oof'])
xgb_test_df = pd.DataFrame(xgb_test, columns=['test'])
cat_oof_df = pd.DataFrame(cat_oof, columns=['oof'])
cat_test_df = pd.DataFrame(cat_test, columns=['test'])

# Save the DataFrames to CSV files
lgb_oof_df.to_csv('lgb_oof.csv', index=False)
lgb_test_df.to_csv('lgb_test.csv', index=False)
xgb_oof_df.to_csv('xgb_oof.csv', index=False)
xgb_test_df.to_csv('xgb_test.csv', index=False)
cat_oof_df.to_csv('cat_oof.csv', index=False)
cat_test_df.to_csv('cat_test.csv', index=False)

import pandas as pd

# Read the CSV files back as 1-D numpy.ndarray objects (same shape as before saving)
lgb_oof = pd.read_csv('lgb_oof.csv')['oof'].values
lgb_test = pd.read_csv('lgb_test.csv')['test'].values
xgb_oof = pd.read_csv('xgb_oof.csv')['oof'].values
xgb_test = pd.read_csv('xgb_test.csv')['test'].values
cat_oof = pd.read_csv('cat_oof.csv')['oof'].values
cat_test = pd.read_csv('cat_test.csv')['test'].values

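The other approach is stacking. Stacking (stacking ensemble) is an ensemble learning method that combines several different machine learning models to improve overall predictive performance. The predictions of multiple base models are used as input to train a new model (called the meta-model or second-level model), which exploits the diversity and different learning capacities of the base models to improve the final prediction.

Main steps:

- Train the base models (Level-0 models):

  - Split the training data into K folds.

  - For each fold, train the base model on the other K-1 folds and predict on the current fold.

  - For each base model, keep the predictions on every fold (called out-of-fold predictions) as well as the predictions on the test set.

- Build the new training set:

  - Use the out-of-fold predictions of all base models as features to build a new training set.

  - The labels of the new training set are still the labels of the original training set.

- Train the meta-model (Level-1 model):

  - Train a new model on the new training set; this is the meta-model or second-level model.

  - The meta-model can be any machine learning model. A simple and robust one is usually chosen, such as linear regression or a decision tree.

- Final prediction:

  - Predict on the test set with the base models and use all of their predictions as features to build a new test set.

  - Predict on the new test set with the trained meta-model to obtain the final prediction.

Stacking reference code: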

from sklearn.model_selection import RepeatedKFold
from sklearn.linear_model import Ridge, LinearRegression
from sklearn.metrics import mean_absolute_error
import numpy as np
import pandas as pd

def stack_model(oof_1, oof_2, oof_3, predictions_1, predictions_2, predictions_3, y):
    train_stack = pd.concat([oof_1, oof_2, oof_3], axis=1)  # (len(train), 3)
    test_stack = pd.concat([predictions_1, predictions_2, predictions_3], axis=1)  # (len(test), 3)

    # Candidate meta-models
    models = {
        'Ridge': Ridge(random_state=42),
        'LinearRegression': LinearRegression(),
    }

    scores = {}
    oof_dict = {}
    predictions_dict = {}

    folds = RepeatedKFold(n_splits=5, n_repeats=2, random_state=42)

    for model_name, model in models.items():
        oof = np.zeros((train_stack.shape[0],))
        predictions = np.zeros((test_stack.shape[0],))
        fold_scores = []

        for fold_, (trn_idx, val_idx) in enumerate(folds.split(train_stack, y)):
            print(f"Model: {model_name}, Fold {fold_+1}")
            trn_data, trn_y = train_stack.iloc[trn_idx], y.iloc[trn_idx]
            val_data, val_y = train_stack.iloc[val_idx], y.iloc[val_idx]

            model.fit(trn_data, trn_y)
            oof[val_idx] = model.predict(val_data)
            # Average the test predictions over all 5 * 2 fits
            predictions += model.predict(test_stack) / (5 * 2)

            score_single = mean_absolute_error(val_y, oof[val_idx])
            fold_scores.append(score_single)
            print(f'Fold {fold_+1}/{5*2}', score_single)

        mean_score = np.mean(fold_scores)
        scores[model_name] = mean_score
        oof_dict[model_name] = oof
        predictions_dict[model_name] = predictions
        print(f'{model_name} Mean Score:', mean_score)

    # Pick the meta-model with the lowest mean score (MAE)
    best_model_name = min(scores, key=scores.get)
    print(f'Best model: {best_model_name}')

    return oof_dict[best_model_name], predictions_dict[best_model_name]

# Assumes lgb_oof, xgb_oof, cat_oof, lgb_test, xgb_test, cat_test are already defined and train['target'] is the target
stack_oof, stack_pred = stack_model(pd.DataFrame(lgb_oof), pd.DataFrame(xgb_oof), pd.DataFrame(cat_oof),
                                    pd.DataFrame(lgb_test), pd.DataFrame(xgb_test), pd.DataFrame(cat_test), train['target'])

# Save the submission file locally
test['target'] = stack_pred
test[['id','dt','target']].to_csv('submit3.2.csv', index=None)

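After several submissions on the official site, the approximate scores for some of the results above are as follows, for reference.

Deep learning attempt

I have not studied LSTM yet, and the run took so long that it never finished. I am leaving it as is for now and will revise it once I have learned more.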

import tensorflow as tf

# Check for available GPU devices
gpus = tf.config.list_physical_devices('GPU')
if gpus:
    print(f"GPUs detected: {gpus}")
else:
    print("No GPU detected.")

# Check how many GPUs TensorFlow can see
print("Num GPUs Available: ", len(tf.config.list_physical_devices('GPU')))

GPUs detected: [PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU'), PhysicalDevice(name='/physical_device:GPU:1', device_type='GPU')]

Num GPUs Available: 2

import numpy as np
import pandas as pd
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dense, Dropout
from tensorflow.keras.callbacks import EarlyStopping

print("Reading data...")
# Read the data
train_data = pd.read_csv('/kaggle/input/2024abcd/train.csv')
test_data = pd.read_csv('/kaggle/input/2024abcd/test.csv')
print("Data loaded.")

print("Preparing training data...")
# Prepare the training data, dropping the non-numeric columns
X_train = train_data.drop(columns=['target', 'id'])
y_train = train_data['target'].values
# Prepare the test data, dropping the non-numeric columns
X_test = test_data.drop(columns=['target', 'id'], errors='ignore')
test_dt = test_data['dt'].values  # keep the dt column of the test data
print("Training data ready.")

print("Reshaping data for LSTM input...")
# Reshape the data for LSTM input
def create_sequences(X, y, seq_length):
    sequences = []
    targets = []
    for i in range(len(X) - seq_length):
        seq = X.iloc[i:i+seq_length].values
        target = y[i+seq_length]
        sequences.append(seq)
        targets.append(target)
    return np.array(sequences), np.array(targets)

SEQ_LENGTH = 10
X_train_seq, y_train_seq = create_sequences(X_train, y_train, SEQ_LENGTH)

# Convert the data to float32
X_train_seq = X_train_seq.astype(np.float32)
y_train_seq = y_train_seq.astype(np.float32)

# The test data has no target values, so only the input windows are built
X_test_seq = np.array([X_test.iloc[i:i+SEQ_LENGTH].values for i in range(len(X_test) - SEQ_LENGTH)])
X_test_seq = X_test_seq.astype(np.float32)  # convert to float32
print("Data reshaped.")

# Check the data dimensions
print(f'X_train_seq shape: {X_train_seq.shape}')
print(f'y_train_seq shape: {y_train_seq.shape}')

print("Building the LSTM model...")
# Build the LSTM model
model = Sequential()
model.add(LSTM(50, activation='relu', input_shape=(SEQ_LENGTH, X_train_seq.shape[2]), return_sequences=True))
model.add(Dropout(0.2))
model.add(LSTM(50, activation='relu'))
model.add(Dropout(0.2))
model.add(Dense(1))
model.compile(optimizer='adam', loss='mean_squared_error')
print("LSTM model built.")

# Early-stopping callback
early_stopping = EarlyStopping(monitor='val_loss', patience=3, restore_best_weights=True)

print("Training the model...")
# Train the model
history = model.fit(X_train_seq, y_train_seq, epochs=10, batch_size=128, validation_split=0.2,
                    callbacks=[early_stopping], verbose=1)
print("Model training finished.")

print("Evaluating the model...")
# Evaluate the model
mse = model.evaluate(X_train_seq, y_train_seq)
print(f'Train MSE: {mse}')

print("Predicting on the test set...")
# Predict on the test set
predictions = model.predict(X_test_seq)
print("Prediction finished.")

print("Saving predictions...")
# Save the predictions
submission = pd.DataFrame({
    'id': test_data['id'].values[SEQ_LENGTH:],
    'dt': test_dt[SEQ_LENGTH:],
    'target': predictions.flatten()
})
submission.to_csv('/kaggle/working/submission.csv', index=False)
print("Predictions saved.")
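One likely reason the attempt above is so slow is that create_sequences walks over every row with iloc in a Python loop. It also slides across the boundary between one house and the next, and its inputs contain only dt and type, not the past consumption. Below is a minimal sketch of an alternative that builds windows of past target values per id with NumPy. The function `make_windows` and the toy frame are assumptions for illustration, not a tested replacement. It only sets up one-step-ahead samples, whereas the competition needs a 10-day horizon.

```python
import numpy as np
import pandas as pd
from numpy.lib.stride_tricks import sliding_window_view

def make_windows(df, seq_length, id_col="id", dt_col="dt", target_col="target"):
    """Build (samples, seq_length, 1) inputs and next-step targets, one id at a time."""
    X_parts, y_parts = [], []
    for _, group in df.groupby(id_col, sort=False):
        # Larger dt is older, so sorting by dt descending gives chronological order.
        series = group.sort_values(dt_col, ascending=False)[target_col].to_numpy(dtype=np.float32)
        if len(series) <= seq_length:
            continue  # not enough history for a single window
        windows = sliding_window_view(series, seq_length + 1)
        X_parts.append(windows[:, :-1])  # the seq_length past values
        y_parts.append(windows[:, -1])   # the value that follows them
    X = np.concatenate(X_parts)[..., np.newaxis]
    y = np.concatenate(y_parts)
    return X, y

toy = pd.DataFrame({
    "id": ["a"] * 5 + ["b"] * 4,
    "dt": [5, 4, 3, 2, 1, 4, 3, 2, 1],
    "target": [1.0, 2.0, 3.0, 4.0, 5.0, 10.0, 20.0, 30.0, 40.0],
})

X, y = make_windows(toy, seq_length=3)
print(X.shape, y)
```

Because the windows are built inside each group, no sample mixes values from two different houses.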