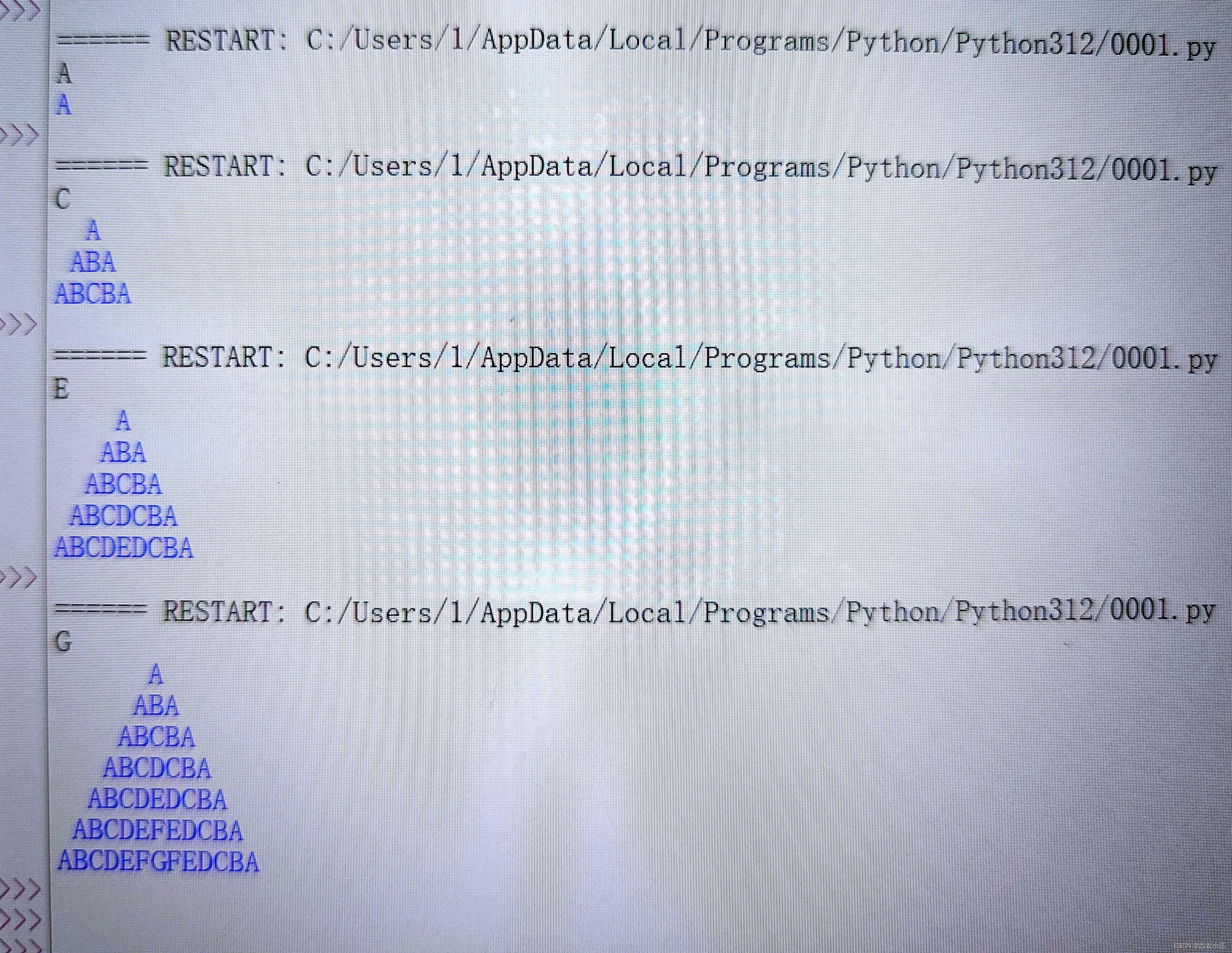

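In this study session we plot the loss and accuracy curves statically: the statistics are recorded during training and plotted only after the model has finished training.

Training on the MNIST dataset serves as the example: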
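The record-then-plot idea can be sketched without PyTorch. `EpochMeter` below is a hypothetical helper that does not appear in the original script, and the numbers fed into it are placeholders rather than real training results. It only illustrates one bookkeeping detail: a loss that is already a per-batch mean is averaged over batches, while accuracy is averaged over samples.

```python
class EpochMeter:
    """Accumulates per-batch statistics and reports per-epoch averages."""

    def __init__(self):
        self.loss_sum = 0.0  # sum of per-batch mean losses
        self.correct = 0     # number of correctly classified samples
        self.batches = 0
        self.samples = 0

    def update(self, batch_loss, batch_correct, batch_size):
        self.loss_sum += batch_loss
        self.correct += batch_correct
        self.batches += 1
        self.samples += batch_size

    def averages(self):
        # Loss is averaged over batches, accuracy over samples.
        return self.loss_sum / self.batches, self.correct / self.samples


history = {"loss": [], "acc": []}

# Placeholder values: two epochs, two batches each,
# given as (batch_loss, batch_correct, batch_size).
fake_epochs = [
    [(0.9, 40, 64), (0.7, 48, 64)],
    [(0.5, 56, 64), (0.3, 60, 64)],
]

for batches in fake_epochs:
    meter = EpochMeter()
    for batch_loss, batch_correct, batch_size in batches:
        meter.update(batch_loss, batch_correct, batch_size)
    epoch_loss, epoch_acc = meter.averages()
    history["loss"].append(epoch_loss)
    history["acc"].append(epoch_acc)

print(history)
```

Once training ends, the lists in `history` hold one value per epoch and can be passed straight to `plt.plot`.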

import torch
from torch import nn
from torch.utils.data import DataLoader
from torchvision import datasets
from torchvision.transforms import ToTensor
import matplotlib.pyplot as plt
import numpy as np

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

# Download MNIST if needed and convert the images to tensors
trainning_data = datasets.MNIST(root="data", train=True, transform=ToTensor(), download=True)
# train=False selects the held-out test split instead of reusing the training images
test_data = datasets.MNIST(root="data", train=False, transform=ToTensor(), download=False)

train_loader = DataLoader(trainning_data, batch_size=64, shuffle=True)
# Shuffling is not needed for evaluation
test_loader = DataLoader(test_data, batch_size=64, shuffle=False)

print(len(train_loader))    # number of batches
print(len(trainning_data))  # total number of images

# for x, y in train_loader:
#     print(x.shape)
#     print(y.shape)

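class MinistNet(nn.Module):
    def __init__(self):
        super().__init__()
        # self.flat = nn.Flatten()
        # 1 input channel, 1 output channel, 3x3 kernel, stride 1, padding 1:
        # the output keeps the 28x28 spatial size
        self.conv1 = nn.Conv2d(1, 1, 3, 1, 1)
        self.hideLayer1 = nn.Linear(28 * 28, 256)
        self.hideLayer2 = nn.Linear(256, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = x.view(-1, 28 * 28)  # flatten each image to a 784-element vector
        x = self.hideLayer1(x)
        x = torch.sigmoid(x)
        x = self.hideLayer2(x)
        # No final activation: nn.CrossEntropyLoss expects raw logits
        return x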

model = MinistNet()
model = model.to(device)
cuda = next(model.parameters()).device  # device the parameters live on (for inspection only)
print(model)

criterion = nn.CrossEntropyLoss()
optimer = torch.optim.RMSprop(model.parameters(), lr=0.001)

def train():
    train_losses = []
    train_acces = []
    eval_losses = []
    eval_acces = []

    for epoch in range(10):
        # Training: re-enable train mode every epoch, because the evaluation
        # step below switches the model to eval mode
        model.train()
        train_loss = 0
        train_acc = 0
        for x, y in train_loader:
            # Use the shared device so the script also runs without CUDA
            x = x.to(device)
            y = y.to(device)
            pred_y = model(x)
            loss = criterion(pred_y, y)
            optimer.zero_grad()
            loss.backward()
            optimer.step()
            train_acc += (pred_y.argmax(1) == y).type(torch.float).sum().item()
            train_loss += loss.item()
            # print("loss: ", loss.item(), " ", epoch)
        # criterion returns the mean over a batch, so average over the number of batches
        train_losses.append(train_loss / len(train_loader))
        # train_acc counts correct samples, so divide by the number of samples
        train_acces.append(train_acc / len(trainning_data))

        # Evaluation
        model.eval()
        with torch.no_grad():
            num_batch = len(test_loader)  # number of batches
            numSize = len(test_data)      # number of samples
            test_loss, test_correct = 0, 0
            for x, y in test_loader:
                x = x.to(device)
                y = y.to(device)
                pred_y = model(x)
                test_loss += criterion(pred_y, y).item()
                test_correct += (pred_y.argmax(1) == y).type(torch.float).sum().item()
            test_loss /= num_batch
            test_correct /= numSize
            eval_losses.append(test_loss)
            eval_acces.append(test_correct)
            print("test result:", 100 * test_correct, "% avg loss:", test_loss)

        # Save a checkpoint after every epoch
        PATH = "dict_model_%d_dict.pth" % (epoch)
        torch.save({"epoch": epoch,
                    "model_state_dict": model.state_dict(), }, PATH)

    # Training is finished: plot the recorded statistics
    plt.plot(np.arange(len(train_losses)), train_losses, label="train loss")
    plt.plot(np.arange(len(train_acces)), train_acces, label="train acc")
    plt.plot(np.arange(len(eval_losses)), eval_losses, label="valid loss")
    plt.plot(np.arange(len(eval_acces)), eval_acces, label="valid acc")
    plt.legend()  # show the legend
    plt.xlabel('epochs')
    # plt.ylabel("epoch")
    plt.title('Model accuracy&loss')
    plt.show()

    torch.save(model, "mode_con_line2.pth")  # save the whole model (structure and parameters)
    torch.save(model.state_dict(), "model_dict.pth")  # save only the parameters


if __name__ == '__main__':
    train()
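The script writes a checkpoint dictionary with the keys `epoch` and `model_state_dict` after each epoch. Reading such a file back could look roughly like the sketch below. The `nn.Linear` model and the temporary file path are stand-ins chosen to keep the example small. With the script above, the model would be a `MinistNet` instance and the path one of the `dict_model_%d_dict.pth` files.

```python
import os
import tempfile

import torch
from torch import nn

# Stand-in model (an assumption for illustration; the script uses MinistNet).
model = nn.Linear(4, 2)

# Save a checkpoint with the same dictionary layout as the training script.
path = os.path.join(tempfile.mkdtemp(), "checkpoint.pth")
torch.save({"epoch": 3, "model_state_dict": model.state_dict()}, path)

# Rebuild the same architecture, then load the stored parameters into it.
restored = nn.Linear(4, 2)
checkpoint = torch.load(path, map_location="cpu")
restored.load_state_dict(checkpoint["model_state_dict"])
restored.eval()  # switch to inference mode before evaluating

# Check that every restored tensor matches the original one.
same = all(
    torch.equal(a, b)
    for a, b in zip(model.state_dict().values(), restored.state_dict().values())
)
print(checkpoint["epoch"], same)
```

A `state_dict` holds only the parameters, so the class definition must be available when loading. A file written with `torch.save(model, ...)` stores the pickled module object instead.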

The resulting plot is shown below:

![Loss and accuracy curves](https://i-blog.csdnimg.cn/direct/7630ecf3cb8d4e17bb7c041d9fade0de.png)
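The script draws loss and accuracy against a single y-axis, although the two quantities can sit on quite different scales. One possible variation, which is not what the original script does, gives accuracy its own right-hand axis via `twinx`. The function name `plot_curves`, the `history` dictionary layout, and the numbers in it are assumptions made up for this sketch.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so no display is required
import matplotlib.pyplot as plt


def plot_curves(history, out_path):
    """Plot loss on the left y-axis and accuracy on the right y-axis."""
    epochs = list(range(1, len(history["train_loss"]) + 1))
    fig, ax_loss = plt.subplots()
    ax_acc = ax_loss.twinx()  # second y-axis sharing the same x-axis

    lines = []
    lines += ax_loss.plot(epochs, history["train_loss"], color="tab:blue", label="train loss")
    lines += ax_loss.plot(epochs, history["valid_loss"], color="tab:blue", linestyle="--", label="valid loss")
    lines += ax_acc.plot(epochs, history["train_acc"], color="tab:orange", label="train acc")
    lines += ax_acc.plot(epochs, history["valid_acc"], color="tab:orange", linestyle="--", label="valid acc")

    ax_loss.set_xlabel("epoch")
    ax_loss.set_ylabel("loss")
    ax_acc.set_ylabel("accuracy")
    ax_loss.set_title("Model accuracy & loss")
    # Merge the lines of both axes into a single legend.
    ax_loss.legend(lines, [line.get_label() for line in lines], loc="center right")

    fig.savefig(out_path)
    plt.close(fig)
    return out_path


# Placeholder values, not measured results.
history = {
    "train_loss": [0.60, 0.35, 0.25],
    "valid_loss": [0.45, 0.32, 0.28],
    "train_acc": [0.82, 0.90, 0.93],
    "valid_acc": [0.87, 0.91, 0.92],
}
saved = plot_curves(history, os.path.join(tempfile.mkdtemp(), "curves.png"))
print(saved)
```

In the training script, the four lists `train_losses`, `eval_losses`, `train_acces`, and `eval_acces` would take the place of the placeholder values.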