Text Decoding Principles: A MindNLP Example

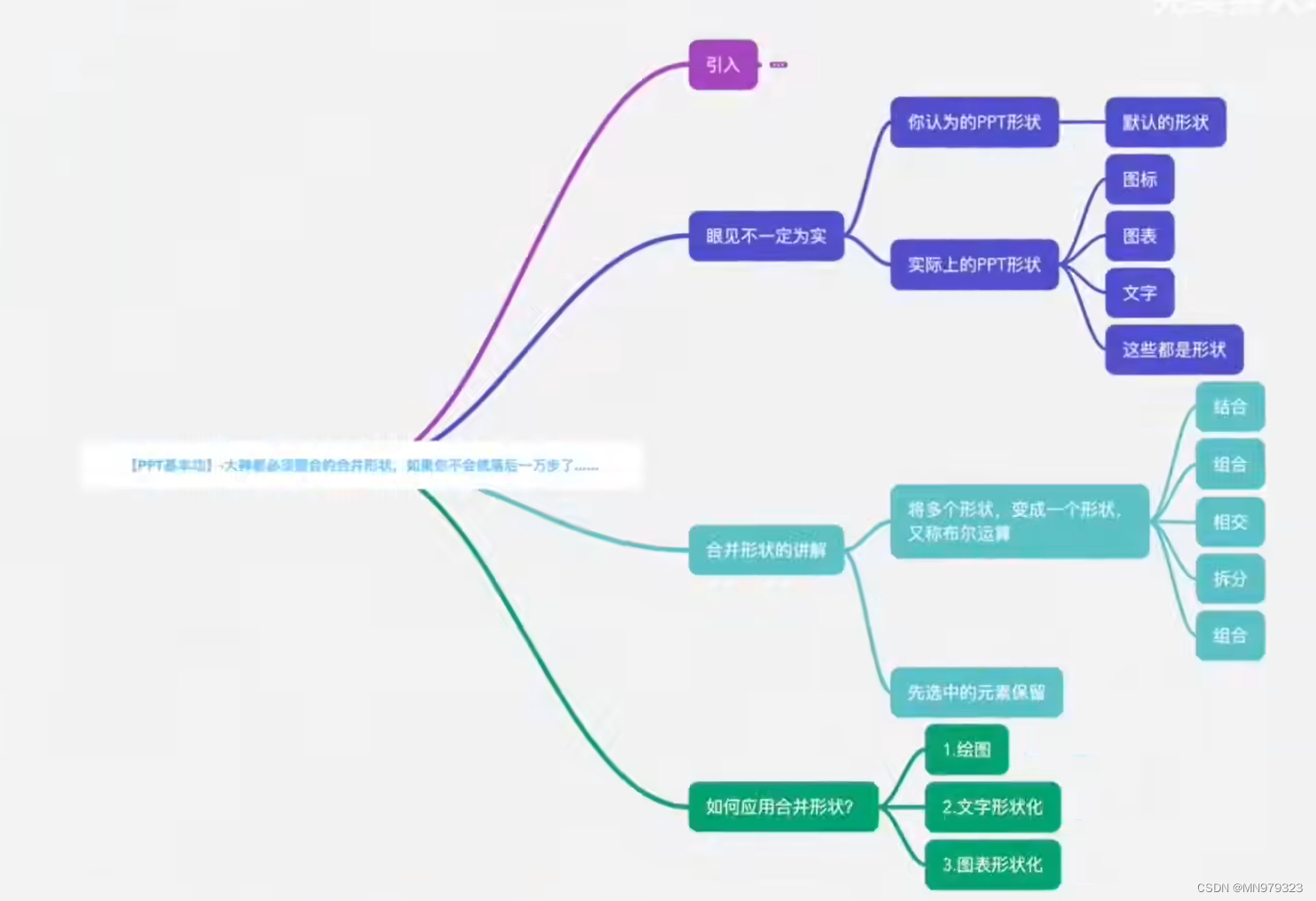

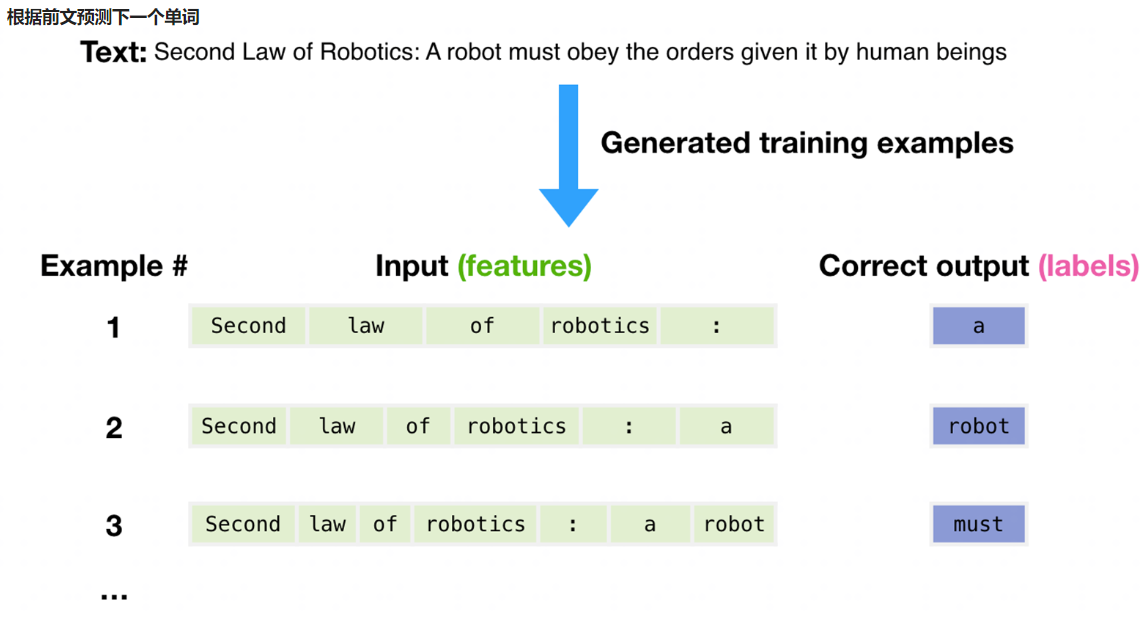

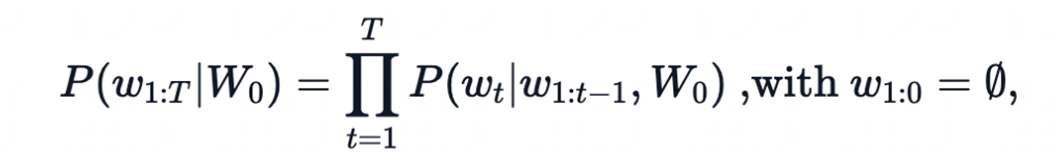

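Review: Autoregressive Language Models

Predict the next word from the preceding text.

The probability of a text sequence factorizes into the product of each word's conditional probability given its preceding context.

- 𝑊_0: the initial context word sequence

- 𝑇: the number of time steps (the length of the generated sequence)

- Generation stops when the EOS token is produced.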
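Written out, the factorization described above is the standard chain rule of probability. The notation below is an assumption about what the accompanying figure shows: 𝑤_{1:0} denotes the empty sequence.

```latex
P(w_{1:T} \mid W_0) = \prod_{t=1}^{T} P(w_t \mid w_{1:t-1}, W_0),
\qquad w_{1:0} = \emptyset
```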

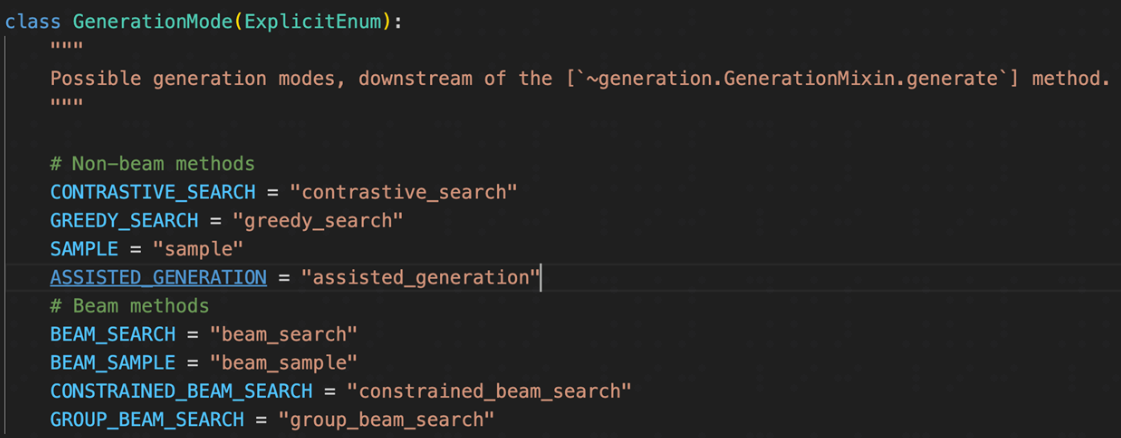

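Text generation methods provided by MindNLP / Hugging Face Transformers

Greedy search

At each time step 𝑡, simply select the word with the highest probability as the current output:

𝑤_𝑡 = argmax_𝑤 𝑃(𝑤 | 𝑤_(1:𝑡−1))

With greedy search, the output sequence ("The", "nice", "woman") has a conditional probability of 0.5 × 0.4 = 0.2.

Drawback: it misses high-probability words hidden behind a lower-probability word. For example, "has" (0.9) follows "dog" (0.4), which greedy search skips in favor of "nice" (0.5).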
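A minimal sketch of greedy search on the toy example above. The probabilities for "nice", "dog", "woman" and "has" are the ones quoted in the text; every other entry in `TOY_TREE`, and the helper name `greedy_decode`, are illustrative assumptions chosen only so that each distribution sums to 1.

```python
# Toy next-word distributions keyed by the words generated so far.
# "nice", "dog", "woman" and "has" use the probabilities quoted in the text;
# all other entries are made-up fillers so each distribution sums to 1.
TOY_TREE = {
    ("The",): {"nice": 0.5, "dog": 0.4, "car": 0.1},
    ("The", "nice"): {"woman": 0.4, "house": 0.3, "guy": 0.3},
    ("The", "dog"): {"has": 0.9, "runs": 0.05, "and": 0.05},
}


def greedy_decode(tree, prefix, steps):
    """Pick the single most probable next word at every step."""
    sequence = tuple(prefix)
    probability = 1.0
    for _ in range(steps):
        distribution = tree.get(sequence)
        if distribution is None:  # no continuation known for this prefix
            break
        word = max(distribution, key=distribution.get)
        probability *= distribution[word]
        sequence += (word,)
    return sequence, probability


sequence, probability = greedy_decode(TOY_TREE, ("The",), steps=2)
print(sequence, round(probability, 2))  # ('The', 'nice', 'woman') 0.2
```

Greedy search commits to "nice" at the first step, so it never sees the 0.9 continuation behind "dog".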

Environment setup

%%capture captured_output

# The lab environment comes with mindspore==2.2.14 preinstalled; to use a different MindSpore version, change the version number below

!pip uninstall mindspore -y

!pip install -i https://pypi.mirrors.ustc.edu.cn/simple mindspore==2.2.14

!pip uninstall mindvision -y

!pip uninstall mindinsight -y

Found existing installation: mindvision 0.1.0

Uninstalling mindvision-0.1.0:

Successfully uninstalled mindvision-0.1.0

WARNING: Skipping mindinsight as it is not installed.

# This example was adapted for mindnlp 0.3.1; if it fails to run, pin the version with `!pip install mindnlp==0.3.1`

!pip install mindnlp

Successfully installed addict-2.4.0 aiohttp-3.9.5 aiosignal-1.3.1 async-timeout-4.0.3 datasets-2.20.0 evaluate-0.4.2 frozenlist-1.4.1 fsspec-2024.5.0 hypothesis-6.108.2 jieba-0.42.1 mindnlp-0.3.1 ml-dtypes-0.4.0 multidict-6.0.5 multiprocess-0.70.16 pyarrow-16.1.0 pyarrow-hotfix-0.6 pyctcdecode-0.5.0 pygtrie-2.5.0 pytest-7.2.0 regex-2024.5.15 safetensors-0.4.3 sentencepiece-0.2.0 sortedcontainers-2.4.0 tokenizers-0.19.1 xxhash-3.4.1 yarl-1.9.4

#greedy_search

from mindnlp.transformers import GPT2Tokenizer, GPT2LMHeadModel

tokenizer = GPT2Tokenizer.from_pretrained("iiBcai/gpt2", mirror='modelscope')

# add the EOS token as PAD token to avoid warnings

model = GPT2LMHeadModel.from_pretrained("iiBcai/gpt2", pad_token_id=tokenizer.eos_token_id, mirror='modelscope')

# encode context the generation is conditioned on

input_ids = tokenizer.encode('I enjoy walking with my cute dog', return_tensors='ms')

# generate text until the output length (which includes the context length) reaches 50

greedy_output = model.generate(input_ids, max_length=50)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(greedy_output[0], skip_special_tokens=True))


Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog, but I'm not sure if I'll ever be able to walk with my dog. I'm not sure if I'll ever be able to walk with my dog.

I'm not sure if I'll

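Beam search

Beam search reduces the risk of missing hidden high-probability sequences by keeping the num_beams most likely hypotheses at each time step and finally choosing the hypothesis with the highest overall probability. Taking num_beams=2 as in the figure:

("The", "dog", "has"): 0.4 × 0.9 = 0.36

("The", "nice", "woman"): 0.5 × 0.4 = 0.20

Advantage: preserves the best path to some extent

Drawbacks: 1. it does not solve the repetition problem; 2. it performs poorly on open-ended generation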
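A minimal sketch of beam search on the same toy example, with num_beams=2. As before, only the probabilities for "nice", "dog", "woman" and "has" come from the text; the other entries in `TOY_TREE` and the helper name `beam_search` are illustrative assumptions.

```python
# Toy next-word distributions keyed by the words generated so far.
# "nice", "dog", "woman" and "has" use the probabilities quoted in the text;
# all other entries are made-up fillers so each distribution sums to 1.
TOY_TREE = {
    ("The",): {"nice": 0.5, "dog": 0.4, "car": 0.1},
    ("The", "nice"): {"woman": 0.4, "house": 0.3, "guy": 0.3},
    ("The", "dog"): {"has": 0.9, "runs": 0.05, "and": 0.05},
}


def beam_search(tree, prefix, steps, num_beams):
    """Keep the `num_beams` most probable hypotheses at every step."""
    beams = [(tuple(prefix), 1.0)]
    for _ in range(steps):
        # Expand every surviving hypothesis by every known next word.
        candidates = [
            (sequence + (word,), probability * p)
            for sequence, probability in beams
            for word, p in tree.get(sequence, {}).items()
        ]
        if not candidates:
            break
        candidates.sort(key=lambda item: item[1], reverse=True)
        beams = candidates[:num_beams]
    return beams


beams = beam_search(TOY_TREE, ("The",), steps=2, num_beams=2)
for sequence, probability in beams:
    print(sequence, round(probability, 2))
# ('The', 'dog', 'has') 0.36
# ('The', 'nice', 'woman') 0.2
```

Because "dog" survives the first step as the second beam, the 0.36 path is found even though its first word is not the most probable one.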

from mindnlp.transformers import GPT2Tokenizer, GPT2LMHeadModel

tokenizer = GPT2Tokenizer.from_pretrained("iiBcai/gpt2", mirror='modelscope')

# add the EOS token as PAD token to avoid warnings

model = GPT2LMHeadModel.from_pretrained("iiBcai/gpt2", pad_token_id=tokenizer.eos_token_id, mirror='modelscope')

# encode context the generation is conditioned on

input_ids = tokenizer.encode('I enjoy walking with my cute dog', return_tensors='ms')

# activate beam search and early_stopping

beam_output = model.generate(

input_ids,

max_length=50,

num_beams=5,

early_stopping=True

)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(beam_output[0], skip_special_tokens=True))

print(100 * '-')

# set no_repeat_ngram_size to 2

beam_output = model.generate(

input_ids,

max_length=50,

num_beams=5,

no_repeat_ngram_size=2,

early_stopping=True

)

print("Beam search with ngram, Output:\n" + 100 * '-')

print(tokenizer.decode(beam_output[0], skip_special_tokens=True))

print(100 * '-')

# set num_return_sequences > 1

beam_outputs = model.generate(

input_ids,

max_length=50,

num_beams=5,

no_repeat_ngram_size=2,

num_return_sequences=5,

early_stopping=True

)

# now we have 5 output sequences

print("return_num_sequences, Output:\n" + 100 * '-')

for i, beam_output in enumerate(beam_outputs):

    print("{}: {}".format(i, tokenizer.decode(beam_output, skip_special_tokens=True)))

print(100 * '-')

Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog, but I don't think I'll ever be able to walk with her again."

"I don't think I'll ever be able to walk with her again."

"I don't think I

----------------------------------------------------------------------------------------------------

Beam search with ngram, Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog, but I don't think I'll ever be able to walk with her again."

"I'm not sure what to say to that," she said. "I mean, it's not like I'm

----------------------------------------------------------------------------------------------------

return_num_sequences, Output:

----------------------------------------------------------------------------------------------------

0: I enjoy walking with my cute dog, but I don't think I'll ever be able to walk with her again."

"I'm not sure what to say to that," she said. "I mean, it's not like I'm

1: I enjoy walking with my cute dog, but I don't think I'll ever be able to walk with her again."

"I'm not sure what to say to that," she said. "I mean, it's not like she's

2: I enjoy walking with my cute dog, but I don't think I'll ever be able to walk with her again."

"I'm not sure what to say to that," she said. "I mean, it's not like we're

3: I enjoy walking with my cute dog, but I don't think I'll ever be able to walk with her again."

"I'm not sure what to say to that," she said. "I mean, it's not like I've

4: I enjoy walking with my cute dog, but I don't think I'll ever be able to walk with her again."

"I'm not sure what to say to that," she said. "I mean, it's not like I can

----------------------------------------------------------------------------------------------------

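Beam search issues

Drawbacks: 1. it does not solve the repetition problem; 2. it performs poorly on open-ended generation

Repetition problem

n-gram penalty:

Set to 0 the probability of any candidate word that would create an n-gram that has already appeared

Setting no_repeat_ngram_size=2 ensures that no 2-gram appears twice

Note: real text sometimes needs legitimate repetition, so apply this penalty with care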
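The n-gram penalty can be sketched in plain Python as follows. This is an illustration of the idea under stated assumptions, not the library's implementation: the helper names `banned_next_tokens` and `apply_ngram_penalty`, the word-level tokens, and the example probabilities are all hypothetical.

```python
def banned_next_tokens(tokens, ngram_size):
    """Return the tokens that would repeat an n-gram already in `tokens`."""
    if ngram_size < 1 or len(tokens) < ngram_size:
        return set()
    # The last (n - 1) tokens are the prefix of the n-gram about to be completed.
    prefix = tuple(tokens[len(tokens) - (ngram_size - 1):])
    banned = set()
    for start in range(len(tokens) - ngram_size + 1):
        if tuple(tokens[start:start + ngram_size - 1]) == prefix:
            banned.add(tokens[start + ngram_size - 1])
    return banned


def apply_ngram_penalty(probs, tokens, ngram_size):
    """Set banned words to probability 0 and renormalize the rest."""
    banned = banned_next_tokens(tokens, ngram_size)
    total = sum(p for word, p in probs.items() if word not in banned)
    if total == 0:  # every candidate is banned
        return {word: 0.0 for word in probs}
    return {word: 0.0 if word in banned else p / total for word, p in probs.items()}


# Hypothetical history and next-word distribution.
history = ["I", "enjoy", "walking", "and", "I"]
next_word_probs = {"enjoy": 0.6, "like": 0.3, "walk": 0.1}

banned = banned_next_tokens(history, ngram_size=2)
penalized = apply_ngram_penalty(next_word_probs, history, ngram_size=2)
print(banned)
print(penalized)
```

Here the 2-gram ("I", "enjoy") has already appeared, so "enjoy" is banned after the second "I" and its probability mass is redistributed to the other candidates.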

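Sampling

Randomly pick the output word 𝑤_𝑡 according to the current conditional probability distribution:

("car") ~ P(w | "The")

("drives") ~ P(w | "The", "car")

Advantage: high diversity in the generated text

Drawback: the generated text can be incoherent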
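A minimal sketch of sampling a next word from a conditional distribution, using only the Python standard library. The distribution below and the helper name `sample_next_word` are illustrative assumptions; a real model would supply the probabilities.

```python
import random


def sample_next_word(probs, rng):
    """Draw one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so the run is reproducible
next_word_probs = {"nice": 0.5, "dog": 0.4, "car": 0.1}  # hypothetical P(w | "The")

samples = [sample_next_word(next_word_probs, rng) for _ in range(1000)]
counts = {word: samples.count(word) for word in next_word_probs}
print(counts)
```

Unlike greedy search, even the low-probability word "car" is chosen some of the time, which is where both the diversity and the occasional incoherence come from.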

import mindspore

from mindnlp.transformers import GPT2Tokenizer, GPT2LMHeadModel

tokenizer = GPT2Tokenizer.from_pretrained("iiBcai/gpt2", mirror='modelscope')

# add the EOS token as PAD token to avoid warnings

model = GPT2LMHeadModel.from_pretrained("iiBcai/gpt2", pad_token_id=tokenizer.eos_token_id, mirror='modelscope')

# encode context the generation is conditioned on

input_ids = tokenizer.encode('I enjoy walking with my cute dog', return_tensors='ms')

mindspore.set_seed(0)

# activate sampling and deactivate top_k by setting top_k sampling to 0

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_k=0

)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(sample_output[0], skip_special_tokens=True))

Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog Neddy as much as I'd like. Keep up the good work Neddy!"

I realized what Neddy meant when he first launched the website. "Thank you so much for joining."

I

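Temperature

Lowering the softmax temperature makes the distribution P(w | w_(1:t−1)) sharper,

increasing the likelihood of high-probability words and decreasing the likelihood of low-probability words.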
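The effect of temperature can be checked with a small softmax sketch that divides each logit by the temperature before normalizing. The logits and the helper name `softmax_with_temperature` are illustrative assumptions.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Softmax over logits divided by `temperature` (must be positive)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.0]  # hypothetical scores for three candidate words
base = softmax_with_temperature(logits, temperature=1.0)
cold = softmax_with_temperature(logits, temperature=0.7)
print([round(p, 3) for p in base])
print([round(p, 3) for p in cold])
```

With temperature 0.7 the top word gains probability and the bottom word loses it, compared with temperature 1.0.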

import mindspore

from mindnlp.transformers import GPT2Tokenizer, GPT2LMHeadModel

tokenizer = GPT2Tokenizer.from_pretrained("iiBcai/gpt2", mirror='modelscope')

# add the EOS token as PAD token to avoid warnings

model = GPT2LMHeadModel.from_pretrained("iiBcai/gpt2", pad_token_id=tokenizer.eos_token_id, mirror='modelscope')

# encode context the generation is conditioned on

input_ids = tokenizer.encode('I enjoy walking with my cute dog', return_tensors='ms')

mindspore.set_seed(1234)

# activate sampling, deactivate top_k by setting it to 0, and lower the temperature to 0.7

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_k=0,

temperature=0.7

)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(sample_output[0], skip_special_tokens=True))

Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog and have never had a problem with her until now.

A large dog named Chucky managed to get a few long stretches of grass on her back and ran around with it for about 5 minutes, ran around

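Top-K sampling

Select the K most probable words, renormalize their probabilities, and sample from those K words

Top-K sampling problems

Limiting the sampling pool to a fixed size K:

- can produce gibberish when the distribution is sharp

- can limit the model's creativity when the distribution is flat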
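A minimal sketch of the Top-K filtering step: keep the K most probable words and renormalize. The distribution and the helper name `top_k_filter` are illustrative assumptions; sampling would then draw from the returned dictionary.

```python
def top_k_filter(probs, k):
    """Keep the k most probable words and renormalize them to sum to 1."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = ranked[:k]
    total = sum(p for _, p in kept)
    return {word: p / total for word, p in kept}


next_word_probs = {"a": 0.5, "b": 0.3, "c": 0.15, "d": 0.05}  # hypothetical
filtered = top_k_filter(next_word_probs, k=2)
print(filtered)
```

The pool size stays at K no matter how the probability mass is spread, which is the fixed-size limitation described above.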

import mindspore

from mindnlp.transformers import GPT2Tokenizer, GPT2LMHeadModel

tokenizer = GPT2Tokenizer.from_pretrained("iiBcai/gpt2", mirror='modelscope')

# add the EOS token as PAD token to avoid warnings

model = GPT2LMHeadModel.from_pretrained("iiBcai/gpt2", pad_token_id=tokenizer.eos_token_id, mirror='modelscope')

# encode context the generation is conditioned on

input_ids = tokenizer.encode('I enjoy walking with my cute dog', return_tensors='ms')

mindspore.set_seed(0)

# activate sampling and set top_k to 50

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_k=50

)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(sample_output[0], skip_special_tokens=True))

Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog.

She's always up for some action, so I have seen her do some stuff with it.

Then there's the two of us.

The two of us I'm talking about were

Top-P sampling

Sample from the smallest set of words whose cumulative probability exceeds p, after renormalizing over that set
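A minimal sketch of the Top-P (nucleus) filtering step. The distribution and the helper name `top_p_filter` are illustrative assumptions, and the cutoff used here (stop as soon as the cumulative probability reaches or exceeds p) is one reasonable reading of the rule, not necessarily the library's exact behavior.

```python
def top_p_filter(probs, p):
    """Keep the smallest set of top words whose cumulative probability reaches p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for word, prob in ranked:
        kept.append((word, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {word: prob / total for word, prob in kept}


next_word_probs = {"a": 0.5, "b": 0.3, "c": 0.15, "d": 0.05}  # hypothetical
nucleus = top_p_filter(next_word_probs, p=0.92)
print(nucleus)
```

Unlike Top-K, the number of kept words adapts to the distribution: a sharp distribution keeps few words, a flat one keeps many.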

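import mindspore

from mindnlp.transformers import GPT2Tokenizer, GPT2LMHeadModel

tokenizer = GPT2Tokenizer.from_pretrained("iiBcai/gpt2", mirror='modelscope')

# add the EOS token as PAD token to avoid warnings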

model = GPT2LMHeadModel.from_pretrained("iiBcai/gpt2", pad_token_id=tokenizer.eos_token_id, mirror='modelscope')

# encode context the generation is conditioned on

input_ids = tokenizer.encode('I enjoy walking with my cute dog', return_tensors='ms')

mindspore.set_seed(0)

# deactivate top_k sampling and sample only from 92% most likely words

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_p=0.92,

top_k=0

)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(sample_output[0], skip_special_tokens=True))

Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog Neddy as much as I'd like. Keep up the good work Neddy!"

I realized what Neddy meant when he first launched the website. "Thank you so much for joining."

I

top_k_top_p

import mindspore

from mindnlp.transformers import GPT2Tokenizer, GPT2LMHeadModel

tokenizer = GPT2Tokenizer.from_pretrained("iiBcai/gpt2", mirror='modelscope')

# add the EOS token as PAD token to avoid warnings

model = GPT2LMHeadModel.from_pretrained("iiBcai/gpt2", pad_token_id=tokenizer.eos_token_id, mirror='modelscope')

# encode context the generation is conditioned on

input_ids = tokenizer.encode('I enjoy walking with my cute dog', return_tensors='ms')

mindspore.set_seed(0)

# set top_k = 5 and set top_p = 0.95 and num_return_sequences = 3

sample_outputs = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_k=5,

top_p=0.95,

num_return_sequences=3

)

print("Output:\n" + 100 * '-')

for i, sample_output in enumerate(sample_outputs):

    print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

Output:

----------------------------------------------------------------------------------------------------

0: I enjoy walking with my cute dog.

"My dog loves the smell of the dog. I'm so happy that she's happy with me.

"I love to walk with my dog. I'm so happy that she's happy

1: I enjoy walking with my cute dog. I'm a big fan of my cat and her dog, but I don't have the same enthusiasm for her. It's hard not to like her because it is my dog.

My husband, who

2: I enjoy walking with my cute dog, but I'm also not sure I would want my dog to walk alone with me."

She also told The Daily Beast that the dog is very protective.

"I think she's very protective of

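Takeaways

1. N-gram models and the other approaches here are all probabilistic models. N-gram models need less training data but require that data to cover the target language well, while GPT needs large amounts of training data and compute to perform well.

2. Besides N-gram and GPT, other types of probabilistic models are used in natural language processing, for example:

- Hidden Markov model (HMM): used for sequence modeling and labeling tasks, such as speech recognition and machine translation.

- Conditional random field (CRF): used for sequence labeling tasks; it handles local features and global dependencies better.

- Bayesian network: used for tasks such as text classification and relation extraction; it can represent knowledge and inferential relationships in text.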