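Preface:

Nova is arguably the most important OpenStack component: it is the compute core of the cloud. Its main components are described in the official overview: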

OpenStack Docs: Compute service overview

The key ones are nova-api, libvirt, nova-placement-api, nova-api-metadata, and nova-compute.

nova-api service

Accepts and responds to end user compute API calls. The service supports the OpenStack Compute API. It enforces some policies and initiates most orchestration activities, such as running an instance.

nova-api-metadata service

Accepts metadata requests from instances. The nova-api-metadata service is generally used when you run in multi-host mode with nova-network installations. For details, see Metadata service in the Compute Administrator Guide.

nova-compute service

A worker daemon that creates and terminates virtual machine instances through hypervisor APIs. For example:

XenAPI for XenServer/XCP

libvirt for KVM or QEMU

VMwareAPI for VMware

Processing is fairly complex. Basically, the daemon accepts actions from the queue and performs a series of system commands such as launching a KVM instance and updating its state in the database.

nova-placement-api service

Tracks the inventory and usage of each provider. For details, see Placement API.

nova-scheduler service

Takes a virtual machine instance request from the queue and determines on which compute server host it runs.

nova-conductor module

Mediates interactions between the nova-compute service and the database. It eliminates direct accesses to the cloud database made by the nova-compute service. The nova-conductor module scales horizontally. However, do not deploy it on nodes where the nova-compute service runs. For more information, see the conductor section in the Configuration Options.

nova-consoleauth daemon

Authorizes tokens for users that console proxies provide. See nova-novncproxy and nova-xvpvncproxy. This service must be running for console proxies to work. You can run proxies of either type against a single nova-consoleauth service in a cluster configuration. For information, see About nova-consoleauth.

Deprecated since version 18.0.0: nova-consoleauth is deprecated since 18.0.0 (Rocky) and will be removed in an upcoming release. See workarounds.enable_consoleauth for details.

nova-novncproxy daemon

Provides a proxy for accessing running instances through a VNC connection. Supports browser-based novnc clients.

nova-spicehtml5proxy daemon

Provides a proxy for accessing running instances through a SPICE connection. Supports browser-based HTML5 client.

nova-xvpvncproxy daemon

Provides a proxy for accessing running instances through a VNC connection. Supports an OpenStack-specific Java client.

The queue

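A central hub for passing messages between daemons. Usually implemented with RabbitMQ, also can be implemented with another AMQP message queue, such as ZeroMQ.

A nova deployment is split between a controller node and compute nodes. There is a single controller node, and there can be any number of compute nodes. Overall, nova is considerably harder to install and deploy than keystone or glance, because it involves more components and also requires adding nodes to the deployment.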

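OK, let's start with the nova deployment on the controller node.

I.

Official documentation: OpenStack Docs: Install and configure controller node for Red Hat Enterprise Linux and CentOS

Create the databases, service credentials, and API endpoints

In this example, everything is done on the server 192.168.123.130, whose hostname is openstack1.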

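1)

Create the databases with the following SQL statements:

Note: each password is the same as the database user name (nova/nova and placement/placement)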

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

CREATE DATABASE placement;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'nova';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'nova';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'nova';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'nova';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'nova';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'nova';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'placement';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'placement';

FLUSH PRIVILEGES;

2)

Register the nova service in keystone

# Create the nova user in keystone

openstack user create --domain default --password=nova nova

# Output:

[root@openstack1 ~]# openstack user create --domain default --password=nova nova

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 013b5c3530a144628fedabd7a158b08f |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Give the nova user the admin role in the service project

openstack role add --project service --user nova admin

# Note: this command produces no output

3) Create the nova compute service entity

openstack service create --name nova --description "OpenStack Compute" compute

Output:

[root@openstack1 ~]# openstack service create --name nova --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 83721cda2dd94e8bbfad43e34657a6da |

| name | nova |

| type | compute |

+-------------+----------------------------------+

4)

Create the nova-api endpoints

openstack endpoint create --region RegionOne compute public http://openstack1:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://openstack1:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://openstack1:8774/v2.1

5)

Register the placement user and service in keystone

Note: the password here is placement

openstack user create --domain default --password=placement placement

openstack role add --project service --user placement admin

openstack service create --name placement --description "Placement API" placement

6)

Create the placement service endpoints:

openstack endpoint create --region RegionOne placement public http://openstack1:8778

openstack endpoint create --region RegionOne placement internal http://openstack1:8778

openstack endpoint create --region RegionOne placement admin http://openstack1:8778

Now list all services and endpoints. There should be 4 services and 12 endpoints:

[root@openstack1 ~]# openstack service list

+----------------------------------+-----------+-----------+

| ID | Name | Type |

+----------------------------------+-----------+-----------+

| 629d817aa28d4579b08663529efc63e4 | placement | placement |

| 7aa0d862c3dc4ae884e7f02551b07630 | glance | image |

| 83721cda2dd94e8bbfad43e34657a6da | nova | compute |

| c187cea7ed9c46668a229a3278b1e434 | keystone | identity |

+----------------------------------+-----------+-----------+

[root@openstack1 ~]# openstack endpoint list

+----------------------------------+-----------+--------------+--------------+---------+-----------+-----------------------------+

| ID | Region | Service Name | Service Type | Enabled | Interface | URL |

+----------------------------------+-----------+--------------+--------------+---------+-----------+-----------------------------+

| 010363cc3b224811ab1c45d67f56d475 | RegionOne | placement | placement | True | public | http://openstack1:8778 |

| 09b682984f4d446b9624de291b27ba43 | RegionOne | keystone | identity | True | internal | http://openstack1:5000/v3/ |

| 168e152a5ecd471183d5772b0d582039 | RegionOne | glance | image | True | public | http://openstack1:9292 |

| 1d267eb74ab245958730f80b75c1abf3 | RegionOne | nova | compute | True | internal | http://openstack1:8774/v2.1 |

| 4cbde990b9ac4e5d8cb58ecea6591361 | RegionOne | placement | placement | True | admin | http://openstack1:8778 |

| 632410fddc98491496f54d93a9d13a96 | RegionOne | keystone | identity | True | public | http://openstack1:5000/v3/ |

| 63cf103027204a5d845c9da6a08f36e0 | RegionOne | nova | compute | True | public | http://openstack1:8774/v2.1 |

| 8bbfa274e32f4a069b172976a0e209e4 | RegionOne | placement | placement | True | internal | http://openstack1:8778 |

| 9f46fdd5d8a7498d8a12b047f21095ab | RegionOne | glance | image | True | admin | http://openstack1:9292 |

| a3610b51395e49d8898463136d24cec3 | RegionOne | nova | compute | True | admin | http://openstack1:8774/v2.1 |

| a57efb7be1664e9bae2ad823bef3ea5a | RegionOne | glance | image | True | internal | http://openstack1:9292 |

| a9e0562e0f5241b49c9106dadcf88db7 | RegionOne | keystone | identity | True | admin | http://openstack1:5000/v3/ |

+----------------------------------+-----------+--------------+--------------+---------+-----------+-----------------------------+
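The keystone registration above follows the same pattern for nova and for placement: create a user, grant it the admin role in the service project, create the service entity, then create three endpoints. As a rough sketch only, that pattern could be wrapped in a shell function. The name register_service and its argument order are hypothetical; the openstack commands inside are the ones already used in this article.

```shell
# Hypothetical helper: registers one service in keystone the way steps 2) to 6) do.
# $1 = user and service name, $2 = password, $3 = service type,
# $4 = description, $5 = endpoint URL (used for all three interfaces)
register_service() {
    openstack user create --domain default --password="$2" "$1" &&
    openstack role add --project service --user "$1" admin &&
    openstack service create --name "$1" --description "$4" "$3" &&
    for iface in public internal admin; do
        # Stop at the first endpoint that cannot be created
        openstack endpoint create --region RegionOne "$3" "$iface" "$5" || return 1
    done
}
```

With admin credentials loaded, the placement registration would then be `register_service placement placement placement "Placement API" http://openstack1:8778`. Do not run it for a service that is already registered, because it would create duplicate endpoints.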

II.

Install the nova services and edit the configuration file

1,

Install nova with yum:

Six packages:

yum install openstack-nova-api openstack-nova-conductor \

openstack-nova-console openstack-nova-novncproxy \

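openstack-nova-scheduler openstack-nova-placement-api -y

2,

Edit the configuration file quickly

# To stress this once more: the RabbitMQ password is RABBIT_PASS and the hostname is openstack1, not controller as in the official documentation

# By default, the Compute service uses its built-in firewall driver. Because the Networking service includes a firewall service, the built-in Compute firewall must be disabled by using the ``nova.virt.firewall.NoopFirewallDriver`` driver (this is the fourth command below)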

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.123.130

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@openstack1

openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:nova@openstack1/nova_api

openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:nova@openstack1/nova

openstack-config --set /etc/nova/nova.conf placement_database connection mysql+pymysql://placement:placement@openstack1/placement

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://openstack1:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers openstack1:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password nova

openstack-config --set /etc/nova/nova.conf vnc enabled true

openstack-config --set /etc/nova/nova.conf vnc server_listen '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf glance api_servers http://openstack1:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://openstack1:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password placement

openstack-config --set /etc/nova/nova.conf scheduler discover_hosts_in_cells_interval 300

The resulting configuration looks like this:

[root@openstack1 ~]# grep '^[a-z]' /etc/nova/nova.conf

enabled_apis = osapi_compute,metadata

my_ip = 192.168.123.130

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

transport_url = rabbit://openstack:RABBIT_PASS@openstack1

auth_strategy = keystone

connection = mysql+pymysql://nova:nova@openstack1/nova_api

connection = mysql+pymysql://nova:nova@openstack1/nova

api_servers = http://openstack1:9292

auth_url = http://openstack1:5000/v3

memcached_servers = openstack1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = nova

lock_path = /var/lib/nova/tmp

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://openstack1:5000/v3

username = placement

password = placement

connection = mysql+pymysql://placement:placement@openstack1/placement

discover_hosts_in_cells_interval = 300

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip
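The grep output above drops the section headers, so repeated keys such as connection, auth_url, and username cannot be told apart. A minimal sketch of an alternative view is shown here; the function name show_conf is hypothetical. It prints each active option under its section and skips sections that have no active options.

```shell
# Hypothetical helper: prints the active options of an INI-style file, grouped by section.
# $1 = path to the configuration file
show_conf() {
    awk '
        /^\[/ { section = $0; next }      # remember the most recent section header
        /^[a-z]/ {                        # an active (uncommented) option line
            if (section != printed) {     # print the header once, before its first option
                print section
                printed = section
            }
            print "  " $0
        }
    ' "$1"
}
```

Running `show_conf /etc/nova/nova.conf` on the controller should show, for example, the three connection lines under [api_database], [database], and [placement_database] respectively.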

3,

Because of a bug in one of the packages, the virtual host configuration file must be edited to add the following:

vim /etc/httpd/conf.d/00-nova-placement-api.conf

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

After the change, restart the httpd service:

systemctl restart httpd

Check port 8778. If httpd is listening on it, the placement API is up:

[root@openstack1 ~]# netstat -antup |grep 8778

tcp6 0 0 :::8778 :::* LISTEN 5522/httpd
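For scripted checks, the same test can be expressed as a small filter over the netstat output. This is only a sketch: the function name is_listening is hypothetical, and it assumes the column layout shown above (local address in column 4, state in column 6).

```shell
# Hypothetical helper: succeeds if the netstat output on stdin shows a listener on the port.
# $1 = TCP port number
is_listening() {
    awk -v port="$1" '
        # column 4 is the local address, column 6 is the socket state
        $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit !found }
    '
}
```

For example, `netstat -antup | is_listening 8778 && echo "placement API port is open"`.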

4)

Create the database tables

# nova_api has 32 tables, placement has 32, nova_cell0 has 110, and nova also has 110

Populate the nova-api and placement databases:

su -s /bin/sh -c "nova-manage api_db sync" nova

# Note: this command prints nothing, so check its exit status with echo $?

Register the cell0 database:

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

# Note: this command prints nothing, so check its exit status with echo $?

Create the cell1 cell:

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

# Note: this command prints the UUID of the new cell; in this example it is d8257cfe-e583-474c-b97c-03de5eba2b0e

Populate the nova database:

su -s /bin/sh -c "nova-manage db sync" nova

# Note: this command prints warnings, which can be ignored. Check its exit status with echo $? to confirm that it succeeded

# The warnings look like this:

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1831, u'Duplicate index `block_device_mapping_instance_uuid_virtual_name_device_name_idx`. This is deprecated and will be disallowed in a future release.')

result = self._query(query)

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1831, u'Duplicate index `uniq_instances0uuid`. This is deprecated and will be disallowed in a future release.')

result = self._query(query)

Confirm that nova cell0 and cell1 are registered correctly:

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

# Output like the following means the cells are registered correctly

[root@openstack1 ~]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@openstack1/nova_cell0 | False |

| cell1 | d8257cfe-e583-474c-b97c-03de5eba2b0e | rabbit://openstack:****@openstack1 | mysql+pymysql://nova:****@openstack1/nova | False |

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

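Check that each database has the expected number of tables (two with 110, two with 32). Each count below is one higher because the mysql output includes a header line: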

[root@openstack1 ~]# mysql -h192.168.123.130 -unova -pnova -e "use nova;show tables;" |wc -l

111

[root@openstack1 ~]# mysql -h192.168.123.130 -unova -pnova -e "use nova_cell0;show tables;" |wc -l

111

[root@openstack1 ~]# mysql -h192.168.123.130 -unova -pnova -e "use nova_api;show tables;" |wc -l

33

[root@openstack1 ~]# mysql -h192.168.123.130 -uplacement -pplacement -e "use placement;show tables;" |wc -l

33
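The off-by-one in the counts above comes from the column header that mysql prints in batch mode. A minimal sketch that returns the exact count is shown here. The function name count_tables is hypothetical; -N is the short form of mysql's --skip-column-names option, and the host is the controller address used in this article.

```shell
# Hypothetical helper: prints the number of tables in one database.
# $1 = user, $2 = password, $3 = database name
count_tables() {
    # -N suppresses the header line, so wc -l counts only table names
    mysql -h192.168.123.130 -u"$1" -p"$2" -N -e "SHOW TABLES" "$3" | wc -l | tr -d ' '
}
```

For example, `count_tables nova nova nova_api` is expected to print 32 on this setup, and `count_tables placement placement placement` likewise.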

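III.

Start the nova services on the controller node and enable them at boot:

Only five services are started here, although six packages were installed with yum. Why?

nova-placement-api has no systemd unit of its own: it runs inside httpd, which was restarted above. Also note that nova-consoleauth is deprecated since 18.0.0 (Rocky) and will be removed in an upcoming release, so a fresh installation does not strictly need it. The commands below still enable and start it.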

systemctl enable openstack-nova-api.service openstack-nova-consoleauth.service \

openstack-nova-scheduler.service openstack-nova-conductor.service \

openstack-nova-novncproxy.service

systemctl start openstack-nova-api.service openstack-nova-consoleauth.service \

openstack-nova-scheduler.service openstack-nova-conductor.service \

openstack-nova-novncproxy.service

systemctl status openstack-nova-api.service openstack-nova-consoleauth.service \

openstack-nova-scheduler.service openstack-nova-conductor.service \

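openstack-nova-novncproxy.service

OK. Check the service status; once all services are confirmed to be running, the nova installation on the controller node is complete. Next, nova is installed on the compute node.

Nova is now deployed on the server 192.168.123.131. This is the compute node, which means libvirt, qemu-kvm, and related software are installed on this node.

The base environment is set up the same way as on the controller; for the detailed procedure, see: 云计算|OpenStack|社区版OpenStack安装部署文档(二---OpenStack运行环境搭建)_晚风_END的博客-CSDN博客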
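Reading the full systemctl status output for five units is tedious, so the controller-side status check can also be reduced to a list of units that are not running. This is a sketch under the assumption that the unit names are the ones used in this article; the function name inactive_units is hypothetical.

```shell
# Hypothetical helper: prints every unit from the argument list that is not active.
inactive_units() {
    for unit in "$@"; do
        # `systemctl is-active --quiet` exits 0 only when the unit is active
        systemctl is-active --quiet "$unit" || echo "$unit"
    done
}
```

An empty result from `inactive_units openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service` means all five controller services are up.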

1,

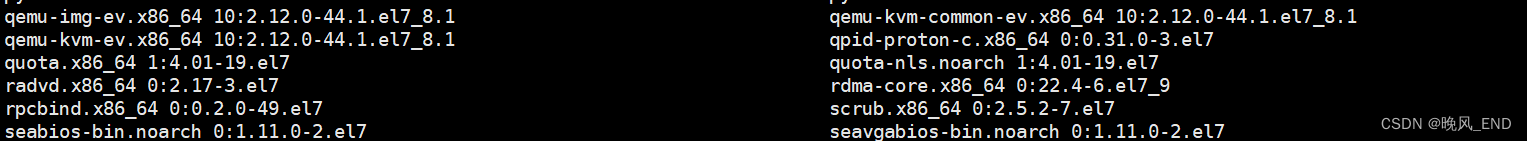

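Install nova-compute with yum

yum install openstack-nova-compute python-openstackclient openstack-utils -y

The virtualization packages (libvirt, qemu-kvm, and so on) are installed as dependencies.

2,

Edit the configuration file quickly (/etc/nova/nova.conf)

my_ip, the first address set below, is 192.168.123.131. Everything else still uses the hostname openstack1, for example RabbitMQ, which is deployed on 192.168.123.130.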

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.123.131

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@openstack1

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://openstack1:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers openstack1:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password nova

openstack-config --set /etc/nova/nova.conf vnc enabled True

openstack-config --set /etc/nova/nova.conf vnc server_listen 0.0.0.0

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://openstack1:6080/vnc_auto.html

openstack-config --set /etc/nova/nova.conf glance api_servers http://openstack1:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://openstack1:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password placement

The resulting configuration looks like this:

ain_name = default

user_domain_name = default

project_name = service

username = nova

password = nova

lock_path = /var/lib/nova/tmp

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://openstack1:5000/v3

username = placement

enabled = True

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://openstack1:6080/vnc_auto.html

3,

Start the nova services and enable them at boot

systemctl enable libvirtd.service openstack-nova-compute.service

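systemctl start libvirtd.service openstack-nova-compute.service

4,

Add the compute node to the cell database

Important

Run the following commands on the controller node, that is, on the server 192.168.123.130:

Source the admin credentials to gain access to admin-only CLI commands, then confirm that there are compute hosts in the database: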

openstack compute service list --service nova-compute

# Output like the following means the compute node was found

[root@openstack1 ~]# openstack compute service list --service nova-compute

+----+--------------+------------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+------------+------+---------+-------+----------------------------+

| 10 | nova-compute | openstack2 | nova | enabled | up | 2023-02-01T06:42:35.000000 |

+----+--------------+------------+------+---------+-------+----------------------------+

5,

Manually register the compute node's cell information in the nova database:

[root@openstack1 ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

# Output:

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': d8257cfe-e583-474c-b97c-03de5eba2b0e

Found 0 unmapped computes in cell: d8257cfe-e583-474c-b97c-03de5eba2b0e
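When discover_hosts is run from a script, the number of newly found hosts can be pulled out of the verbose output. The sketch below assumes the message format shown above; the function name unmapped_computes is hypothetical.

```shell
# Hypothetical helper: reads `nova-manage cell_v2 discover_hosts --verbose` output
# on stdin and prints the total number of unmapped compute hosts it reported.
unmapped_computes() {
    # Sum the N from every "Found N unmapped computes in cell: ..." line
    awk '/^Found [0-9]+ unmapped computes/ { total += $2 } END { print total + 0 }'
}
```

For the run shown above, `su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova | unmapped_computes` would print 0, meaning no host was left to map at that point.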

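6,

Verify that nova is installed correctly (still on the controller node, 192.168.123.130)

openstack compute service list

# Output:

Three services run on 192.168.123.130 (openstack1) and one nova-compute runs on 192.168.123.131 (openstack2)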

[root@openstack1 ~]# openstack compute service list

+----+------------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+------------------+------------+----------+---------+-------+----------------------------+

| 1 | nova-consoleauth | openstack1 | internal | enabled | up | 2023-02-01T06:47:42.000000 |

| 2 | nova-scheduler | openstack1 | internal | enabled | up | 2023-02-01T06:47:48.000000 |

| 6 | nova-conductor | openstack1 | internal | enabled | up | 2023-02-01T06:47:44.000000 |

| 10 | nova-compute | openstack2 | nova | enabled | up | 2023-02-01T06:47:45.000000 |

+----+------------------+------------+----------+---------+-------+----------------------------+

List the API endpoints in the Identity service to verify connectivity

openstack catalog list

# Output:

There are four services, each with three endpoints

[root@openstack1 ~]# openstack catalog list

+-----------+-----------+-----------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+-----------------------------------------+

| placement | placement | RegionOne |

| | | public: http://openstack1:8778 |

| | | RegionOne |

| | | admin: http://openstack1:8778 |

| | | RegionOne |

| | | internal: http://openstack1:8778 |

| | | |

| glance | image | RegionOne |

| | | public: http://openstack1:9292 |

| | | RegionOne |

| | | admin: http://openstack1:9292 |

| | | RegionOne |

| | | internal: http://openstack1:9292 |

| | | |

| nova | compute | RegionOne |

| | | internal: http://openstack1:8774/v2.1 |

| | | RegionOne |

| | | public: http://openstack1:8774/v2.1 |

| | | RegionOne |

| | | admin: http://openstack1:8774/v2.1 |

| | | |

| keystone | identity | RegionOne |

| | | internal: http://openstack1:5000/v3/ |

| | | RegionOne |

| | | public: http://openstack1:5000/v3/ |

| | | RegionOne |

| | | admin: http://openstack1:5000/v3/ |

| | | |

+-----------+-----------+-----------------------------------------+
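The rule that every service has a public, an internal, and an admin endpoint can be checked mechanically instead of by eye. The following is a sketch only: the function name missing_endpoints is hypothetical, and it expects one "service interface" pair per line on stdin, which is what `openstack endpoint list` prints with the value formatter and the two columns named in the endpoint table earlier in this article.

```shell
# Hypothetical helper: reads "service interface" pairs on stdin and prints
# each service/interface combination that is missing.
missing_endpoints() {
    awk '
        { seen[$1 " " $2] = 1; services[$1] = 1 }
        END {
            n = split("public internal admin", want, " ")
            for (s in services)
                for (i = 1; i <= n; i++)
                    if (!((s " " want[i]) in seen))
                        print s, want[i]
        }
    ' | sort
}
```

With admin credentials loaded, `openstack endpoint list -f value -c "Service Name" -c Interface | missing_endpoints` should print nothing for the deployment described here.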

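Check that the placement API and cells are working properly

nova-status upgrade check

# Output is shown below. Ideally every check reports Success; in this run, Resource Providers reports a Warning because no compute node has reported to the Placement service yet:

[root@openstack1 ~]# nova-status upgrade check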

+-------------------------------------------------------------------+

| Upgrade Check Results |

+-------------------------------------------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+-------------------------------------------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+-------------------------------------------------------------------+

| Check: Resource Providers |

| Result: Warning |

| Details: There are no compute resource providers in the Placement |

| service but there are 1 compute nodes in the deployment. |

| This means no compute nodes are reporting into the |

| Placement service and need to be upgraded and/or fixed. |

| See |

| https://docs.openstack.org/nova/latest/user/placement.html |

| for more details. |

+-------------------------------------------------------------------+

| Check: Ironic Flavor Migration |

| Result: Success |

| Details: None |

+-------------------------------------------------------------------+

| Check: API Service Version |

| Result: Success |

| Details: None |

+-------------------------------------------------------------------+

| Check: Request Spec Migration |

| Result: Success |

| Details: None |

+-------------------------------------------------------------------+

| Check: Console Auths |

| Result: Success |

| Details: None |

+-------------------------------------------------------------------+
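In a long result table it is easy to miss a single non-Success row, such as the Resource Providers warning above. A minimal sketch that lists only those rows is shown here. The function name non_success_checks is hypothetical, and it assumes the "Check:" and "Result:" row layout shown in the output above.

```shell
# Hypothetical helper: reads `nova-status upgrade check` output on stdin and
# prints "check name: result" for every check whose result is not Success.
non_success_checks() {
    awk -F': *' '
        /^[|] *Check:/  { name = $2; sub(/ *[|]? *$/, "", name) }   # strip the table border
        /^[|] *Result:/ { res = $2;  sub(/ *[|]? *$/, "", res)
                          if (res != "Success") print name ": " res }
    '
}
```

For the run above, `nova-status upgrade check | non_success_checks` would print the line "Resource Providers: Warning".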

OK, the two-node nova installation and configuration is now complete.