Current cluster state

- As planned in the K8s cluster setup article:

| | Master | node01 | node02 |
|---|---|---|---|
| IP | 192.168.100.100 | 192.168.100.101 | 192.168.100.102 |
| OS | CentOS 7.9 | CentOS 7.9 | CentOS 7.9 |

- Plan: add a new node, node03

| | Master | node01 | node02 | node03 |
|---|---|---|---|---|
| IP | 192.168.100.100 | 192.168.100.101 | 192.168.100.102 | 192.168.100.103 |
| OS | CentOS 7.9 | CentOS 7.9 | CentOS 7.9 | CentOS 7.9 |

1. Install the virtual machine

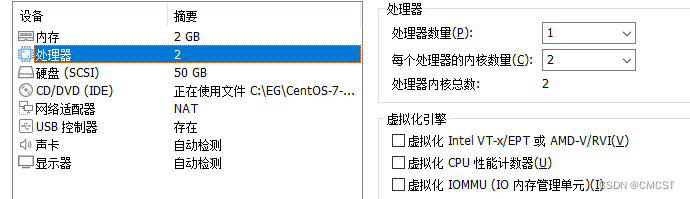

1.1 Hardware allocation

- CPU: 1 processor with 2 cores

- Memory: 2048 MB

- Disk: 50 GB

- Everything else: defaults

1.2 Install the OS

CentOS 7.9 ISO: Aliyun mirror link

- In the installer, choose the basic server installation

Check the installed release:

```
[root@Master ~]# cat /etc/redhat-release
CentOS Linux release 7.9.2009 (Core)
```

1.3 Configure the network interface

1.3.1 node03

| Operation | Key | Value | Line in the default file |
|---|---|---|---|
| Modify | BOOTPROTO | none | 4 |
| Modify | ONBOOT | yes | 15 |
| Add | IPADDR | 192.168.100.103 | |
| Add | PREFIX | 24 | |
| Add | GATEWAY | 192.168.100.2 | |
| Add | DNS1 | 223.5.5.5 | |
| Add | IPV6_PRIVACY | no | |

```
[root@node03 ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33
```

```
TYPE=Ethernet
PROXY_METHOD=none
BROWSER_ONLY=no
BOOTPROTO=none
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
IPV6INIT=yes
IPV6_AUTOCONF=yes
IPV6_DEFROUTE=yes
IPV6_FAILURE_FATAL=no
IPV6_ADDR_GEN_MODE=stable-privacy
NAME=ens33
UUID=eb4335b9-98b7-4c63-ba0d-a25fa63aa5f5
DEVICE=ens33
ONBOOT=yes
IPADDR=192.168.100.103
PREFIX=24
GATEWAY=192.168.100.2
DNS1=223.5.5.5
IPV6_PRIVACY=no
```
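The edits in the table can also be applied without an editor. This is a minimal sketch that assumes bash and GNU sed. `set_ifcfg_key` and `configure_node03_nic` are hypothetical helpers, not part of CentOS. Each key's existing `KEY=` line is replaced, and a new line is appended only if the key is absent, so rerunning the script is harmless.

```shell
#!/bin/bash
# Hypothetical helper: set KEY=VALUE in an ifcfg-style file.
# Replaces an existing "KEY=..." line, or appends one if the key is absent.
set_ifcfg_key() {
    local file=$1 key=$2 value=$3
    if grep -q "^${key}=" "$file"; then
        # Rewrite the existing line in place (GNU sed -i)
        sed -i "s|^${key}=.*|${key}=${value}|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Hypothetical helper: apply the table's changes (defaults to the real file on node03)
configure_node03_nic() {
    local f=${1:-/etc/sysconfig/network-scripts/ifcfg-ens33}
    set_ifcfg_key "$f" BOOTPROTO none
    set_ifcfg_key "$f" ONBOOT yes
    set_ifcfg_key "$f" IPADDR 192.168.100.103
    set_ifcfg_key "$f" PREFIX 24
    set_ifcfg_key "$f" GATEWAY 192.168.100.2
    set_ifcfg_key "$f" DNS1 223.5.5.5
    set_ifcfg_key "$f" IPV6_PRIVACY no
}

# Usage on node03:
#   configure_node03_nic && systemctl restart network
```

On CentOS 7, `systemctl restart network` re-reads the ifcfg files so the static address takes effect.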

1.4 Edit the hosts file

1.4.1 Master

```
[root@Master ~]# vim /etc/hosts
```

```
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.100.100 Master
192.168.100.101 node01
192.168.100.102 node02
192.168.100.103 node03
```

1.4.2 node01

```
[root@node01 ~]# vim /etc/hosts
```

```
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.100.100 Master
192.168.100.101 node01
192.168.100.102 node02
192.168.100.103 node03
```

1.4.3 node02

```
[root@node02 ~]# vim /etc/hosts
```

```
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.100.100 Master
192.168.100.101 node01
192.168.100.102 node02
192.168.100.103 node03
```

1.4.4 node03

```
[root@node03 ~]# vim /etc/hosts
```

```
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.100.100 Master
192.168.100.101 node01
192.168.100.102 node02
192.168.100.103 node03
```
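All four nodes need the same entries, so the edit can be scripted and run on each node, or in all XShell sessions at once. This is a minimal sketch that assumes bash. `add_cluster_hosts` is a hypothetical helper. It only checks for exact line matches, so an existing entry written with different spacing would be added a second time.

```shell
#!/bin/bash
# Hypothetical helper: make sure every "IP hostname" pair below is in a hosts file.
# Lines that already exist are left alone, so running it twice adds nothing.
add_cluster_hosts() {
    local hosts_file=${1:-/etc/hosts}
    local entry
    for entry in \
        "192.168.100.100 Master" \
        "192.168.100.101 node01" \
        "192.168.100.102 node02" \
        "192.168.100.103 node03"; do
        # -x: whole-line match, -F: fixed string (the dots are literal)
        grep -qxF "$entry" "$hosts_file" || echo "$entry" >> "$hosts_file"
    done
}

# Usage on each node:
#   add_cluster_hosts && cat /etc/hosts
```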

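2. Kernel and system configuration

Installing XShell is recommended (XShell official website).

This time, the commands in 2.2 to 2.7 are run only on node03; 2.1 is the exception.

- To type into every session at once in XShell: right-click -> Send key input to -> All sessions

2.1 Time synchronization

It is recommended to run the commands in 2.1 on Master, node01, node02 and node03 at the same time.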

```
# Start the chronyd service
[root@node03 ~]# systemctl start chronyd
# Start chronyd automatically at boot
[root@node03 ~]# systemctl enable chronyd
# Check the time
[root@node03 ~]# date
```
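`date` only shows the local clock. To confirm that chronyd has actually synchronised, use `chronyc tracking`. Its `Leap status` line reads `Not synchronised` while the clock is unsynchronised and normally reads `Normal` once it is synchronised. This is a minimal sketch that assumes bash and awk. `chrony_synced` is a hypothetical helper that reads that output on stdin and succeeds only when the status is `Normal`.

```shell
#!/bin/bash
# Hypothetical helper: read `chronyc tracking` output on stdin and
# succeed only if the "Leap status" field is "Normal".
chrony_synced() {
    local status
    # Lines look like "Leap status     : Normal"; split on ':' and trim the spaces
    status=$(awk -F':' '/^Leap status/ {gsub(/^[ \t]+|[ \t]+$/, "", $2); print $2}')
    [ "$status" = "Normal" ]
}

# Usage on any node:
#   chronyc tracking | chrony_synced && echo "clock synchronised"
```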

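2.2 Disable iptables & firewalld

```
[root@node03 ~]# systemctl stop firewalld
[root@node03 ~]# systemctl disable firewalld
# A default CentOS 7 install has no iptables service (package iptables-services),
# so the next two commands may report that the unit does not exist; that is harmless
[root@node03 ~]# systemctl stop iptables
[root@node03 ~]# systemctl disable iptables
```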

2.3 Disable SELinux

- Run `getenforce` to check whether SELinux is enabled

```
# Change only the SELINUX value, to disabled
[root@node03 ~]# vim /etc/selinux/config
```

```
# This file controls the state of SELinux on the system.
# SELINUX= can take one of these three values:
# enforcing - SELinux security policy is enforced.
# permissive - SELinux prints warnings instead of enforcing.
# disabled - No SELinux policy is loaded.
# SELINUX=enforcing
SELINUX=disabled
# SELINUXTYPE= can take one of three values:
# targeted - Targeted processes are protected,
# minimum - Modification of targeted policy. Only selected processes are protected.
# mls - Multi Level Security protection.
SELINUXTYPE=targeted
```
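The same edit can be made non-interactively. This is a minimal sketch that assumes GNU sed. `disable_selinux_config` is a hypothetical helper. Changing the file only takes effect after a reboot. In the meantime, `setenforce 0` switches the running system to permissive mode.

```shell
#!/bin/bash
# Hypothetical helper: set the active SELINUX= line to "disabled".
# The ^ anchor leaves commented lines such as "# SELINUX=enforcing" untouched,
# and "SELINUXTYPE=" does not match because it has no '=' right after "SELINUX".
disable_selinux_config() {
    local cfg=${1:-/etc/selinux/config}
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage on node03:
#   disable_selinux_config
#   setenforce 0    # permissive until the reboot
#   getenforce
```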

2.4 Disable swap

```
# Comment out the last line (the swap entry)
[root@node03 ~]# vim /etc/fstab
```

```
#
# /etc/fstab
# Created by anaconda on Sun Jun 23 11:17:14 2024
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
/dev/mapper/centos-root / xfs defaults 0 0
UUID=83ed761a-3ce3-46d3-889c-e4078b53b0c1 /boot xfs defaults 0 0
# /dev/mapper/centos-swap swap swap defaults 0 0
```

```
# Check whether swap is off. A change made just now only takes effect after a reboot;
# do not reboot yet, that happens in 2.7
[root@node03 ~]# free -m
```
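Commenting out the entry can also be scripted. Running `swapoff -a` turns swap off immediately, so `free -m` shows no swap without waiting for the reboot. This is a minimal sketch that assumes GNU sed, whose `\s` and `\S` escapes are GNU extensions. `comment_out_swap` is a hypothetical helper. It prefixes `#` to any uncommented fstab line whose third field (the filesystem type) is `swap`.

```shell
#!/bin/bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Only lines not already starting with '#' and whose third field is "swap" change,
# so a second run leaves the file as it is.
comment_out_swap() {
    local fstab=${1:-/etc/fstab}
    sed -ri 's/^([^#]\S*\s+\S+\s+swap\s)/#\1/' "$fstab"
}

# Usage on node03:
#   comment_out_swap && swapoff -a && free -m
```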

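2.5 Set Linux kernel parameters

```
# Enable bridge filtering and IP forwarding:
# add the following to /etc/sysctl.d/kubernetes.conf
[root@node03 ~]# vim /etc/sysctl.d/kubernetes.conf
```

```
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
```

```
# Load the bridge netfilter module first; the net.bridge.* keys do not exist until it is loaded
[root@node03 ~]# modprobe br_netfilter
# Check that the module loaded
[root@node03 ~]# lsmod | grep br_netfilter
# Reload the configuration; plain `sysctl -p` only reads /etc/sysctl.conf, so name the file
[root@node03 ~]# sysctl -p /etc/sysctl.d/kubernetes.conf
```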
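Each sysctl key maps to a file under `/proc/sys`, with the dots replaced by slashes. For example, `net.bridge.bridge-nf-call-iptables` is `/proc/sys/net/bridge/bridge-nf-call-iptables`. This is a minimal sketch that assumes bash. `check_k8s_sysctls` is a hypothetical helper that reports any of the three settings from `kubernetes.conf` that is not `1`. Its optional root argument exists only so it can be tried against a scratch directory.

```shell
#!/bin/bash
# Hypothetical helper: verify that the three kubernetes.conf settings are 1.
# $1 is an optional root prefix (empty by default, meaning the real /proc/sys).
check_k8s_sysctls() {
    local root=${1:-} key path ok=0
    for key in net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.ipv4.ip_forward; do
        # sysctl key -> /proc/sys path: every '.' becomes '/'
        path="$root/proc/sys/$(tr . / <<< "$key")"
        if [ "$(cat "$path" 2>/dev/null)" != "1" ]; then
            echo "not set: $key" >&2
            ok=1
        fi
    done
    return $ok
}

# Usage on node03:
#   check_k8s_sysctls && echo "kernel parameters OK"
```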

2.6 Configure IPVS

You may need to configure a yum repository here first:

```
# Back up the existing yum repo files
[root@node03 ~]# mkdir -p /etc/yum.repos.d/backup/
[root@node03 ~]# mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/backup/
# Use the Aliyun CentOS 7 repository
[root@node03 ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
[root@node03 ~]# yum clean all
[root@node03 ~]# yum makecache
```

In Kubernetes, Services can be proxied in two main modes:

- iptables-based

- IPVS-based

I use IPVS, so load the IPVS kernel modules manually:

```
[root@node03 ~]# yum install ipset ipvsadm -y
[root@node03 ~]# vim /etc/sysconfig/modules/ipvs.modules
```

```
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
```

```
# Keep the .modules suffix: CentOS 7 runs executable /etc/sysconfig/modules/*.modules at boot
[root@node03 ~]# chmod +x /etc/sysconfig/modules/ipvs.modules
[root@node03 ~]# /bin/bash /etc/sysconfig/modules/ipvs.modules
[root@node03 ~]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4
```
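A module that failed to load is easy to overlook in `lsmod | grep` output, because it simply does not appear. This is a minimal sketch that assumes bash and awk. `missing_modules` is a hypothetical helper. It reads `lsmod` output on stdin, prints every requested module name that is not in the first column, and fails if any are missing.

```shell
#!/bin/bash
# Hypothetical helper: print each module named in the arguments that does not
# appear in the first column of the `lsmod` output read from stdin.
missing_modules() {
    local loaded mod rc=0
    # Column 1 of lsmod is the module name; skip the "Module Size Used by" header
    loaded=$(awk 'NR > 1 {print $1}')
    for mod in "$@"; do
        if ! grep -qxF "$mod" <<< "$loaded"; then
            echo "$mod"
            rc=1
        fi
    done
    return $rc
}

# Usage on node03:
#   lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 \
#     && echo "all IPVS modules loaded"
```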

2.7 Reboot

```
[root@node03 ~]# reboot
```

Note: the commands in 2.2 to 2.7 are run only on node03; 2.1 is the exception (run on all nodes at the same time).

3. Install Docker

The commands in 3.1 to 3.5 are run only on node03.

3.1 Switch to the Aliyun Docker CE repository

```
[root@node03 ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
```

3.2 List the Docker versions available in the repository

```
[root@node03 ~]# yum list docker-ce --showduplicates
```
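`yum list --showduplicates` prints the version in its second column, sometimes with an epoch prefix such as `3:`. One way to form the name passed to `yum install` is `docker-ce-` followed by that version with the epoch dropped, which yields names like `docker-ce-18.06.3.ce-3.el7`. This is a minimal sketch that assumes bash and awk. `docker_pkg_names` is a hypothetical helper that reads the listing on stdin.

```shell
#!/bin/bash
# Hypothetical helper: turn `yum list docker-ce --showduplicates` output (stdin)
# into installable package names such as docker-ce-18.06.3.ce-3.el7.
docker_pkg_names() {
    # Only rows for the docker-ce package itself (not docker-ce-cli, headers, etc.)
    awk '$1 ~ /^docker-ce\./ {
        ver = $2
        sub(/^[0-9]+:/, "", ver)   # drop an epoch prefix like "3:" if present
        print "docker-ce-" ver
    }'
}

# Usage on node03:
#   yum list docker-ce --showduplicates | docker_pkg_names
```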

3.3 Install docker-ce

- Without the `--setopt=obsoletes=0` option, yum may automatically install a newer version than the one requested

```
[root@node03 ~]# yum install --setopt=obsoletes=0 docker-ce-18.06.3.ce-3.el7 -y
```

3.4 Add the configuration file

- Docker uses cgroupfs as its cgroup driver by default

- Kubernetes recommends systemd instead of cgroupfs

```
[root@node03 ~]# mkdir -p /etc/docker
[root@node03 ~]# vim /etc/docker/daemon.json
```

```
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://kn0t2bca.mirror.aliyuncs.com"]
}
```

3.5 Start Docker

```
[root@node03 ~]# systemctl restart docker
[root@node03 ~]# systemctl enable docker
# Check that Docker now reports "Cgroup Driver: systemd"
[root@node03 ~]# docker info
```
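`docker info` prints a lot of output, and the line that matters here is `Cgroup Driver: systemd`. This is a minimal sketch that assumes bash and awk. `cgroup_driver` is a hypothetical helper that extracts just that value from `docker info` output on stdin.

```shell
#!/bin/bash
# Hypothetical helper: print the value of the "Cgroup Driver:" line
# from `docker info` output read on stdin (leading indentation is tolerated).
cgroup_driver() {
    awk -F': ' '/Cgroup Driver:/ {print $2; exit}'
}

# Usage on node03:
#   [ "$(docker info 2>/dev/null | cgroup_driver)" = systemd ] && echo "cgroup driver OK"
```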

Note: the commands in 3.1 to 3.5 are run only on node03.

4. Install the Kubernetes components

The commands in 4.1 to 4.4 are run only on node03.

4.1 Switch the Kubernetes yum repository to the Aliyun mirror

```
[root@node03 ~]# vim /etc/yum.repos.d/kubernetes.repo
```

```
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
```

4.2 Install kubeadm, kubelet and kubectl

- Use the same version as the existing cluster (1.17.4 here)

```
[root@node03 ~]# yum install --setopt=obsoletes=0 kubeadm-1.17.4-0 kubelet-1.17.4-0 kubectl-1.17.4-0 -y
```

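4.3 Configure the kubelet cgroup driver

```
# Configure the kubelet cgroup driver
[root@node03 ~]# vim /etc/sysconfig/kubelet
```

```
KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"
KUBE_PROXY_MODE="ipvs"
```

Note: kubeadm's kubelet systemd drop-in only passes `KUBELET_EXTRA_ARGS` from this file to kubelet. kube-proxy takes its mode from the `kube-proxy` ConfigMap in `kube-system`, not from this file.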

4.4 Enable kubelet at boot

```
[root@node03 ~]# systemctl enable kubelet
```

Note: the commands in 4.1 to 4.4 are run only on node03.

5. Prepare the cluster images

The commands in 5.1 and 5.2 are run only on node03.

5.1 List the images the cluster needs

```
[root@node03 ~]# kubeadm config images list
```
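`kubeadm config images list` prints full names such as `k8s.gcr.io/kube-apiserver:v1.17.4`. The pull script in 5.2 only needs the `name:tag` part. It pulls each image from the Aliyun `google_containers` mirror and re-tags it under `k8s.gcr.io`. This is a minimal sketch that assumes bash and sed. `strip_registry` is a hypothetical helper that produces entries for that script's `images=( ... )` array.

```shell
#!/bin/bash
# Hypothetical helper: remove the "k8s.gcr.io/" prefix from each image name on stdin,
# leaving "name:tag" entries like those in the images=( ... ) array of 5.2.
strip_registry() {
    sed 's#^k8s\.gcr\.io/##'
}

# Usage on node03:
#   kubeadm config images list 2>/dev/null | strip_registry
```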

5.2 Write a script to pull the images

```
[root@node03 ~]# vim k8s.repo
```

```
#!/bin/bash
# Pull each image from the Aliyun google_containers mirror,
# re-tag it with the k8s.gcr.io name kubeadm expects, then drop the mirror tag
images=(
  kube-apiserver:v1.17.4
  kube-controller-manager:v1.17.4
  kube-scheduler:v1.17.4
  kube-proxy:v1.17.4
  pause:3.1
  etcd:3.4.3-0
  coredns:1.6.5
)

for imageName in "${images[@]}"; do
  docker pull "registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName"
  docker tag "registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName" "k8s.gcr.io/$imageName"
  docker rmi "registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName"
done
```

```
[root@node03 ~]# chmod +x k8s.repo
[root@node03 ~]# ./k8s.repo
[root@node03 ~]# docker images
```

Note: the commands in 5.1 and 5.2 are run only on node03.

6. Join node03 to the cluster

6.1 Load the images on node03

- node03 needs every image that the existing worker nodes currently have

- "Worker nodes" here means node01, node02 and node03
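One way to copy the images is to run `docker save` on an existing worker and pipe the archive into `docker load` on node03. This is a minimal sketch that assumes bash, root SSH access from node01 to node03, and that every tagged image on node01 should be copied. `image_refs` is a hypothetical helper that drops untagged `<none>` entries, because `docker save` cannot address those by name.

```shell
#!/bin/bash
# Hypothetical helper: read "repository:tag" lines on stdin and drop
# untagged entries ("<none>"), keeping one line per image reference.
image_refs() {
    grep -v '<none>' | sort -u
}

# Usage on node01 (assumes `ssh root@node03` works without a password prompt):
#   docker save $(docker images --format '{{.Repository}}:{{.Tag}}' | image_refs) \
#     | ssh root@node03 'docker load'
```

`docker save` writes a tar archive to stdout when no `-o` file is given, and `docker load` reads one from stdin. Because of this, no temporary file is needed on either node.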

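6.2 Run the join on node03

The join command itself is run only on node03.

6.2.1 Case 1: the join token is less than 24 h old

- kubeadm bootstrap tokens expire 24 h after creation by default. If the last node was added with a token that is still valid, find the join command in that node's shell history and run it on node03, for example:

```
kubeadm join 192.168.100.100:6443 --token gkdzgt.v73dx3hhiczgmeud --discovery-token-ca-cert
```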
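Finding that command in the history can be scripted. This is a minimal sketch that assumes bash and that root's history on the most recently joined node is in the default `~/.bash_history` file. `last_join_cmd` is a hypothetical helper that prints the last `kubeadm join` line from a history file. It only sees history that bash has already written to the file. If the command was typed over several lines with `\`, only the first line is printed.

```shell
#!/bin/bash
# Hypothetical helper: print the most recent "kubeadm join" command in a history file.
last_join_cmd() {
    local hist=${1:-$HOME/.bash_history}
    grep -E '^[[:space:]]*kubeadm join ' "$hist" | tail -n 1
}

# Usage on the node that joined last, then run the printed command on node03:
#   last_join_cmd /root/.bash_history
```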

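6.2.2 Case 2: the join token is more than 24 h old

- The old token has expired. On Master, create a new token and print the complete join command, then run the printed command on node03:

```
# Run on Master
kubeadm token create --print-join-command
```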

6.3 Verify that node03 has joined the cluster

Run only on Master.

```
kubectl get nodes -o wide
```
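Right after joining, node03 may show `NotReady` until its network plugin pod is running on it. This is a minimal sketch that assumes bash and awk. `node_ready` is a hypothetical helper that reads `kubectl get nodes` output on stdin and succeeds only if the named node's STATUS column is exactly `Ready`. The extra columns added by `-o wide` come after STATUS, so they do not affect it.

```shell
#!/bin/bash
# Hypothetical helper: succeed if node $1 has STATUS "Ready" in
# `kubectl get nodes` output read from stdin (NAME is column 1, STATUS column 2).
node_ready() {
    awk -v node="$1" 'NR > 1 && $1 == node && $2 == "Ready" {found = 1} END {exit !found}'
}

# Usage on Master, polling every 10 seconds:
#   until kubectl get nodes | node_ready node03; do sleep 10; done
```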