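The previous two sections covered how the various Source types are created and how the extractor service starts. This section uses local playback as the example and traces how GenericSource creates an extractor. The extractor is created during prepareAsync(); to keep things short, we start directly from GenericSource's onPrepareAsync().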

onPrepareAsync()

Android ships with many native extractors; the analysis below is based on the MP4 extractor.

//frameworks/av/media/libmediaplayerservice/nuplayer/GenericSource.cpp

void NuPlayer::GenericSource::onPrepareAsync() {

mDisconnectLock.lock();

// delayed data source creation

if (mDataSource == NULL) {

// set to false first, if the extractor

// comes back as secure, set it to true then.

mIsSecure = false;

if (!mUri.empty()) {

// omitted

} else {

// Part 1

if (property_get_bool("media.stagefright.extractremote", true) &&

!PlayerServiceFileSource::requiresDrm(

mFd.get(), mOffset, mLength, nullptr /* mime */)) {

sp<IBinder> binder =

defaultServiceManager()->getService(String16("media.extractor"));

if (binder != nullptr) {

ALOGD("FileSource remote");

sp<IMediaExtractorService> mediaExService(

interface_cast<IMediaExtractorService>(binder));

sp<IDataSource> source;

mediaExService->makeIDataSource(base::unique_fd(dup(mFd.get())), mOffset, mLength, &source);

ALOGV("IDataSource(FileSource): %p %d %lld %lld",

source.get(), mFd.get(), (long long)mOffset, (long long)mLength);

if (source.get() != nullptr) {

mDataSource = CreateDataSourceFromIDataSource(source);

}

// omitted

}

// omitted

}

// omitted

}

// omitted

mDisconnectLock.unlock();

// Part 2

// init extractor from data source

status_t err = initFromDataSource();

if (err != OK) {

ALOGE("Failed to init from data source!");

notifyPreparedAndCleanup(err);

return;

}

if (mVideoTrack.mSource != NULL) {

sp<MetaData> meta = getFormatMeta_l(false /* audio */);

sp<AMessage> msg = new AMessage;

err = convertMetaDataToMessage(meta, &msg);

if(err != OK) {

notifyPreparedAndCleanup(err);

return;

}

notifyVideoSizeChanged(msg);

}

notifyFlagsChanged(

// FLAG_SECURE will be known if/when prepareDrm is called by the app

// FLAG_PROTECTED will be known if/when prepareDrm is called by the app

FLAG_CAN_PAUSE |

FLAG_CAN_SEEK_BACKWARD |

FLAG_CAN_SEEK_FORWARD |

FLAG_CAN_SEEK);

// Part 3

finishPrepareAsync();

ALOGV("onPrepareAsync: Done");

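}

The code above omits the paths that are not taken when playing an MP4 file and keeps only the main flow. I have split onPrepareAsync() into three parts, which are analyzed one by one below.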

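Creating the DataSource

Initially GenericSource's mDataSource is empty, so one has to be created from the file fd/offset/length passed down by setDataSource(). The steps are:

- Obtain a local proxy for the "media.extractor" service so that its interface can be called.

- Create a RemoteDataSource from the fd/offset/length of the opened MP4 file, and return its Bp side (BpDataSource).

- Wrap the BpDataSource into a TinyCacheSource and store it in mDataSource.

The first step needs no explanation, so let's go straight to the second: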

//frameworks/av/services/mediaextractor/MediaExtractorService.cpp

::android::binder::Status MediaExtractorService::makeIDataSource(

base::unique_fd fd,

int64_t offset,

int64_t length,

::android::sp<::android::IDataSource>* _aidl_return) {

sp<DataSource> source = DataSourceFactory::getInstance()->CreateFromFd(fd.release(), offset, length);

*_aidl_return = CreateIDataSourceFromDataSource(source);

return binder::Status::ok();

}

//frameworks/av/media/libdatasource/DataSourceFactory.cpp

sp<DataSourceFactory> DataSourceFactory::getInstance() {

Mutex::Autolock l(sInstanceLock);

if (!sInstance) {

sInstance = new DataSourceFactory();

}

return sInstance;

}

sp<DataSource> DataSourceFactory::CreateFromFd(int fd, int64_t offset, int64_t length) {

sp<FileSource> source = new FileSource(fd, offset, length);

return source->initCheck() != OK ? nullptr : source;

}

//frameworks/av/media/libstagefright/InterfaceUtils.cpp

sp<IDataSource> CreateIDataSourceFromDataSource(const sp<DataSource> &source) {

if (source == nullptr) {

return nullptr;

}

return RemoteDataSource::wrap(source);

}

//frameworks/av/media/libstagefright/include/media/stagefright/RemoteDataSource.h

static sp<IDataSource> wrap(const sp<DataSource> &source) {

if (source.get() == nullptr) {

return nullptr;

}

if (source->getIDataSource().get() != nullptr) {

return source->getIDataSource();

}

return new RemoteDataSource(source);

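}

This calls the extractor service's makeIDataSource() directly. The method first builds a FileSource instance, through which the file content can be read. The FileSource is then wrapped in a RemoteDataSource, and what arrives back at GenericSource over binder is already the Bp side.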
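The wrap() logic shown above has one detail worth isolating: a source that is already backed by a remote interface is not wrapped a second time. The following is a minimal sketch of that idea only, without binder. All type names (RemoteHandle, LocalSource, FileLike, RemoteAdapter, ProxyBacked) are hypothetical stand-ins, not AOSP classes.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// Hypothetical stand-in for IDataSource (the interface that crosses processes).
struct RemoteHandle {
    virtual ~RemoteHandle() = default;
};

// Hypothetical stand-in for DataSource (the in-process reading interface).
struct LocalSource {
    virtual ~LocalSource() = default;
    // Returns the remote handle this source is built on, or nullptr if the
    // source reads a local resource directly.
    virtual std::shared_ptr<RemoteHandle> remoteHandle() const { return nullptr; }
};

// Plays the role of FileSource: purely local, no remote handle.
struct FileLike : LocalSource {};

// Plays the role of RemoteDataSource: exposes a local source remotely.
struct RemoteAdapter : RemoteHandle {
    explicit RemoteAdapter(std::shared_ptr<LocalSource> s) : source(std::move(s)) {
        assert(source != nullptr);
    }
    std::shared_ptr<LocalSource> source;
};

// Plays the role of the client-side wrappers: a local view over a remote handle.
struct ProxyBacked : LocalSource {
    explicit ProxyBacked(std::shared_ptr<RemoteHandle> h) : handle(std::move(h)) {}
    std::shared_ptr<RemoteHandle> remoteHandle() const override { return handle; }
    std::shared_ptr<RemoteHandle> handle;
};

// Reuse an existing remote handle when there is one, otherwise create an adapter.
std::shared_ptr<RemoteHandle> wrap(const std::shared_ptr<LocalSource>& source) {
    if (!source) return nullptr;
    if (auto existing = source->remoteHandle()) return existing;
    return std::make_shared<RemoteAdapter>(source);
}
```

Under these assumptions, wrapping a FileLike yields a new RemoteAdapter, while wrapping a ProxyBacked built on that adapter returns the same handle again instead of adding another layer.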

Next is the third step:

//frameworks/av/media/libstagefright/InterfaceUtils.cpp

sp<DataSource> CreateDataSourceFromIDataSource(const sp<IDataSource> &source) {

if (source == nullptr) {

return nullptr;

}

return new TinyCacheSource(new CallbackDataSource(source));

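}

As the code shows, the BpDataSource is wrapped in two layers (first a CallbackDataSource, then a TinyCacheSource), and what is finally returned is the TinyCacheSource instance.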
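Why put a small cache in front of a remote source? Each read on the Bp side is a cross-process call, so serving many small reads from one fetched window saves round trips. The sketch below illustrates that decorator idea only. It is loosely modeled on the layering above and is not the AOSP implementation; ByteSource, CountingSource, SmallReadCache and the 16-byte window are all hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <utility>
#include <vector>

// Hypothetical minimal read interface, standing in for DataSource::readAt().
struct ByteSource {
    virtual ~ByteSource() = default;
    // Copies up to `size` bytes into `out`; returns the count (0 at end of data).
    virtual size_t readAt(size_t offset, void* out, size_t size) = 0;
};

// Stand-in for the expensive source; counts how often it is actually hit.
class CountingSource : public ByteSource {
public:
    explicit CountingSource(std::vector<uint8_t> data) : mData(std::move(data)) {}
    size_t readAt(size_t offset, void* out, size_t size) override {
        ++calls;
        if (offset >= mData.size()) return 0;
        size_t n = std::min(size, mData.size() - offset);
        std::memcpy(out, mData.data() + offset, n);
        return n;
    }
    int calls = 0;
private:
    std::vector<uint8_t> mData;
};

// Decorator that serves small reads from a single cached window.
class SmallReadCache : public ByteSource {
public:
    static constexpr size_t kWindow = 16;  // illustrative size only
    explicit SmallReadCache(ByteSource* inner) : mInner(inner) {
        assert(inner != nullptr);
    }
    size_t readAt(size_t offset, void* out, size_t size) override {
        if (size >= kWindow) {
            return mInner->readAt(offset, out, size);  // large reads bypass the cache
        }
        bool hit = offset >= mStart && offset + size <= mStart + mValid;
        if (!hit) {
            // Refill the window starting at the requested offset.
            mStart = offset;
            mValid = mInner->readAt(offset, mBuf, kWindow);
        }
        size_t rel = offset - mStart;
        if (rel >= mValid) return 0;
        size_t n = std::min(size, mValid - rel);
        std::memcpy(out, mBuf + rel, n);
        return n;
    }
private:
    ByteSource* mInner;
    uint8_t mBuf[kWindow];
    size_t mStart = 0;
    size_t mValid = 0;
};
```

With a 64-byte CountingSource behind a SmallReadCache, three consecutive 4-byte reads at offsets 0, 4 and 8 reach the inner source only once; a read outside the window triggers one refill.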

That concludes Part 1.

initFromDataSource()

Part 2 is the key part: this is where the extractor is created.

//frameworks/av/media/libmediaplayerservice/nuplayer/GenericSource.cpp

status_t NuPlayer::GenericSource::initFromDataSource() {

sp<IMediaExtractor> extractor;

sp<DataSource> dataSource;

{

Mutex::Autolock _l_d(mDisconnectLock);

dataSource = mDataSource;

}

CHECK(dataSource != NULL);

// This might take long time if data source is not reliable.

extractor = MediaExtractorFactory::Create(dataSource, NULL);

// omitted

sp<MetaData> fileMeta = extractor->getMetaData();

size_t numtracks = extractor->countTracks();

// omitted

mFileMeta = fileMeta;

// omitted

for (size_t i = 0; i < numtracks; ++i) {

sp<IMediaSource> track = extractor->getTrack(i);

if (track == NULL) {

continue;

}

sp<MetaData> meta = extractor->getTrackMetaData(i);

// omitted

// Do the string compare immediately with "mime",

// we can't assume "mime" would stay valid after another

// extractor operation, some extractors might modify meta

// during getTrack() and make it invalid.

if (!strncasecmp(mime, "audio/", 6)) {

if (mAudioTrack.mSource == NULL) {

mAudioTrack.mIndex = i;

mAudioTrack.mSource = track;

mAudioTrack.mPackets =

new AnotherPacketSource(mAudioTrack.mSource->getFormat());

if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_VORBIS)) {

mAudioIsVorbis = true;

} else {

mAudioIsVorbis = false;

}

mMimes.add(String8(mime));

}

} else if (!strncasecmp(mime, "video/", 6)) {

if (mVideoTrack.mSource == NULL) {

mVideoTrack.mIndex = i;

mVideoTrack.mSource = track;

mVideoTrack.mPackets =

new AnotherPacketSource(mVideoTrack.mSource->getFormat());

// video always at the beginning

mMimes.insertAt(String8(mime), 0);

}

}

// omitted

}

// omitted

return OK;

}

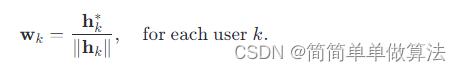

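The code above keeps only the main flow. It does the following:

- Creates a RemoteMediaExtractor and returns its Bp side (BpMediaExtractor). This step is fairly involved and is expanded in detail below.

- Calls getMetaData() through the BpMediaExtractor to read and parse the MP4 file's metadata, and stores it in mFileMeta.

- Calls countTracks() to get the number of tracks in the MP4 file.

- Iterates over the tracks and, based on each track's MIME type, classifies it as a video or an audio track and stores it in mVideoTrack/mAudioTrack. Each of mVideoTrack/mAudioTrack also creates and keeps an AnotherPacketSource, which presumably supplies data to the decoders later on.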
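The track selection rule in the loop above can be summarized as: keep the first audio track and the first video track, and keep the video MIME type at the front of the list. A minimal sketch of just that rule follows. Selection, selectTracks and hasPrefixNoCase are hypothetical names, and the real code works on MetaData and IMediaSource objects rather than plain strings.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <string>
#include <vector>

// Case-insensitive prefix test, similar in spirit to
// strncasecmp(mime, "audio/", 6) == 0 in the original code.
bool hasPrefixNoCase(const std::string& s, const std::string& prefix) {
    if (s.size() < prefix.size()) return false;
    for (size_t i = 0; i < prefix.size(); ++i) {
        if (std::tolower(static_cast<unsigned char>(s[i])) !=
            std::tolower(static_cast<unsigned char>(prefix[i]))) {
            return false;
        }
    }
    return true;
}

struct Selection {
    int audioIndex = -1;             // index of the first audio track, or -1
    int videoIndex = -1;             // index of the first video track, or -1
    std::vector<std::string> mimes;  // video first, mirroring mMimes
};

Selection selectTracks(const std::vector<std::string>& trackMimes) {
    Selection sel;
    for (size_t i = 0; i < trackMimes.size(); ++i) {
        const std::string& mime = trackMimes[i];
        if (hasPrefixNoCase(mime, "audio/")) {
            if (sel.audioIndex < 0) {  // only the first audio track is kept
                sel.audioIndex = static_cast<int>(i);
                sel.mimes.push_back(mime);
            }
        } else if (hasPrefixNoCase(mime, "video/")) {
            if (sel.videoIndex < 0) {  // only the first video track is kept
                sel.videoIndex = static_cast<int>(i);
                sel.mimes.insert(sel.mimes.begin(), mime);  // video always first
            }
        }
    }
    return sel;
}
```

For a file whose tracks are audio, video, audio, text (in that order), this picks track 0 for audio and track 1 for video, and the MIME list starts with the video type.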

MediaExtractorFactory::Create()

Now let's walk through MediaExtractorFactory::Create().

//frameworks/av/media/libstagefright/MediaExtractorFactory.cpp

sp<IMediaExtractor> MediaExtractorFactory::Create(

const sp<DataSource> &source, const char *mime) {

ALOGV("MediaExtractorFactory::Create %s", mime);

// remote extractor

ALOGV("get service manager");

sp<IBinder> binder = defaultServiceManager()->getService(String16("media.extractor"));

if (binder != 0) {

sp<IMediaExtractorService> mediaExService(

interface_cast<IMediaExtractorService>(binder));

sp<IMediaExtractor> ex;

mediaExService->makeExtractor(

CreateIDataSourceFromDataSource(source),

mime ? std::optional<std::string>(mime) : std::nullopt,

&ex);

return ex;

}

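}

This calls the extractor service's makeExtractor() to create the extractor. Before that, the BpDataSource has to be pulled back out of the TinyCacheSource object, because the BpDataSource is what gets passed across binder.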

//frameworks/av/media/libstagefright/InterfaceUtils.cpp

sp<IDataSource> CreateIDataSourceFromDataSource(const sp<DataSource> &source) {

if (source == nullptr) {

return nullptr;

}

return RemoteDataSource::wrap(source);

}

//frameworks/av/media/libstagefright/include/media/stagefright/RemoteDataSource.h

static sp<IDataSource> wrap(const sp<DataSource> &source) {

if (source.get() == nullptr) {

return nullptr;

}

if (source->getIDataSource().get() != nullptr) {

return source->getIDataSource();

}

return new RemoteDataSource(source);

}

Now let's look at makeExtractor():

//frameworks/av/services/mediaextractor/MediaExtractorService.cpp

::android::binder::Status MediaExtractorService::makeExtractor(

const ::android::sp<::android::IDataSource>& remoteSource,

const ::std::optional< ::std::string> &mime,

::android::sp<::android::IMediaExtractor>* _aidl_return) {

ALOGV("@@@ MediaExtractorService::makeExtractor for %s", mime ? mime->c_str() : nullptr);

sp<DataSource> localSource = CreateDataSourceFromIDataSource(remoteSource);

MediaBuffer::useSharedMemory();

sp<IMediaExtractor> extractor = MediaExtractorFactory::CreateFromService(

localSource,

mime ? mime->c_str() : nullptr);

ALOGV("extractor service created %p (%s)",

extractor.get(),

extractor == nullptr ? "" : extractor->name());

if (extractor != nullptr) {

registerMediaExtractor(extractor, localSource, mime ? mime->c_str() : nullptr);

}

*_aidl_return = extractor;

return binder::Status::ok();

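}

Here remoteSource has crossed binder and is now on the extractor service side, so it is the RemoteDataSource object itself. On the service side, CreateDataSourceFromIDataSource() wraps the RemoteDataSource in another TinyCacheSource object. This TinyCacheSource is a different object from the one on the GenericSource side, but both ultimately point at the RemoteDataSource that lives in the extractor service.

Now the extractor itself is actually created.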

//frameworks/av/media/libstagefright/MediaExtractorFactory.cpp

sp<IMediaExtractor> MediaExtractorFactory::CreateFromService(

const sp<DataSource> &source, const char *mime) {

ALOGV("MediaExtractorFactory::CreateFromService %s", mime);

void *meta = nullptr;

void *creator = NULL;

FreeMetaFunc freeMeta = nullptr;

float confidence;

sp<ExtractorPlugin> plugin;

uint32_t creatorVersion = 0;

creator = sniff(source, &confidence, &meta, &freeMeta, plugin, &creatorVersion);

if (!creator) {

ALOGV("FAILED to autodetect media content.");

return NULL;

}

MediaExtractor *ex = nullptr;

if (creatorVersion == EXTRACTORDEF_VERSION_NDK_V1 ||

creatorVersion == EXTRACTORDEF_VERSION_NDK_V2) {

CMediaExtractor *ret = ((CreatorFunc)creator)(source->wrap(), meta);

if (meta != nullptr && freeMeta != nullptr) {

freeMeta(meta);

}

ex = ret != nullptr ? new MediaExtractorCUnwrapper(ret) : nullptr;

}

ALOGV("Created an extractor '%s' with confidence %.2f",

ex != nullptr ? ex->name() : "<null>", confidence);

return CreateIMediaExtractorFromMediaExtractor(ex, source, plugin);

}

void *MediaExtractorFactory::sniff(

const sp<DataSource> &source, float *confidence, void **meta,

FreeMetaFunc *freeMeta, sp<ExtractorPlugin> &plugin, uint32_t *creatorVersion) {

*confidence = 0.0f;

*meta = nullptr;

std::shared_ptr<std::list<sp<ExtractorPlugin>>> plugins;

{

Mutex::Autolock autoLock(gPluginMutex);

if (!gPluginsRegistered) {

return NULL;

}

plugins = gPlugins;

}

void *bestCreator = NULL;

for (auto it = plugins->begin(); it != plugins->end(); ++it) {

ALOGV("sniffing %s", (*it)->def.extractor_name);

float newConfidence;

void *newMeta = nullptr;

FreeMetaFunc newFreeMeta = nullptr;

void *curCreator = NULL;

if ((*it)->def.def_version == EXTRACTORDEF_VERSION_NDK_V1) {

curCreator = (void*) (*it)->def.u.v2.sniff(

source->wrap(), &newConfidence, &newMeta, &newFreeMeta);

} else if ((*it)->def.def_version == EXTRACTORDEF_VERSION_NDK_V2) {

curCreator = (void*) (*it)->def.u.v3.sniff(

source->wrap(), &newConfidence, &newMeta, &newFreeMeta);

}

if (curCreator) {

if (newConfidence > *confidence) {

*confidence = newConfidence;

if (*meta != nullptr && *freeMeta != nullptr) {

(*freeMeta)(*meta);

}

*meta = newMeta;

*freeMeta = newFreeMeta;

plugin = *it;

bestCreator = curCreator;

*creatorVersion = (*it)->def.def_version;

} else {

if (newMeta != nullptr && newFreeMeta != nullptr) {

newFreeMeta(newMeta);

}

}

}

}

return bestCreator;

}

//frameworks/av/media/libstagefright/InterfaceUtils.cpp

sp<IMediaExtractor> CreateIMediaExtractorFromMediaExtractor(

MediaExtractor *extractor,

const sp<DataSource> &source,

const sp<RefBase> &plugin) {

if (extractor == nullptr) {

return nullptr;

}

return RemoteMediaExtractor::wrap(extractor, source, plugin);

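}

Here is what CreateFromService() does:

- It calls its own sniff() method to walk through gPlugins (the list of ExtractorPlugin objects registered in the system) and calls each extractor's sniff() implementation on the file. An extractor that recognizes the file returns a confidence value, and the extractor with the highest confidence is chosen. In this article that is libmp4extractor.

- When sniff() finishes, it returns libmp4extractor's CreateExtractor function pointer. CreateExtractor() is then called directly, which creates an MPEG4Extractor and returns it wrapped as a CMediaExtractor.

- The CMediaExtractor is further wrapped in a MediaExtractorCUnwrapper object.

- To make it usable across binder, CreateIMediaExtractorFromMediaExtractor() then wraps the MediaExtractorCUnwrapper in a RemoteMediaExtractor object.

At this point you can see that the RemoteMediaExtractor is tied to the MPEG4Extractor created inside libmp4extractor.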
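The selection rule inside sniff() is small enough to sketch on its own: every plugin gets a chance to inspect the data, and the one reporting the highest confidence wins. The code below is a simplified illustration under stated assumptions. Plugin, pickPlugin, sniffFtyp and sniffAnything are hypothetical, the confidence values are made up, and the real function additionally manages the creator pointer, metadata and plugin version.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <functional>
#include <string>
#include <vector>

using Bytes = std::vector<uint8_t>;

struct Plugin {
    std::string name;
    // Returns true and sets *confidence if the plugin recognizes the data.
    std::function<bool(const Bytes&, float*)> sniff;
};

// Returns the plugin with the highest confidence, or nullptr if none matched.
// The strict '>' means that on a tie the plugin registered earlier wins.
const Plugin* pickPlugin(const std::vector<Plugin>& plugins, const Bytes& data,
                         float* confidence) {
    assert(confidence != nullptr);
    *confidence = 0.0f;
    const Plugin* best = nullptr;
    for (const Plugin& p : plugins) {
        float c = 0.0f;
        if (p.sniff(data, &c) && c > *confidence) {
            *confidence = c;
            best = &p;
        }
    }
    return best;
}

// Hypothetical sniffer: looks for an 'ftyp' box type at bytes 4..7.
bool sniffFtyp(const Bytes& d, float* c) {
    if (d.size() >= 8 && std::memcmp(d.data() + 4, "ftyp", 4) == 0) {
        *c = 0.5f;
        return true;
    }
    return false;
}

// Hypothetical low-confidence fallback that accepts any non-empty input.
bool sniffAnything(const Bytes& d, float* c) {
    if (d.empty()) return false;
    *c = 0.25f;
    return true;
}
```

With both sniffers registered, data that starts with an ftyp box selects the ftyp plugin even if the fallback comes first in the list, because its confidence is higher.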

The sniff() and CreateExtractor() code of MPEG4Extractor is not pasted here; it lives under frameworks/av/media/extractors/mp4/ if you want to read it.

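Extractor operations

With the analysis above done, the extractor exists. Next come the four operations performed in initFromDataSource():

- getMetaData()

- countTracks()

- getTrack()

- getTrackMetaData()

The names make it fairly clear what each of these four interfaces does. All four end up calling readMetaData().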

//frameworks/av/media/extractors/mp4/MPEG4Extractor.cpp

status_t MPEG4Extractor::readMetaData() {

if (mInitCheck != NO_INIT) {

return mInitCheck;

}

off64_t offset = 0;

status_t err;

bool sawMoovOrSidx = false;

while (!((mHasMoovBox && sawMoovOrSidx && (mMdatFound || mMoofFound)) ||

(mIsHeif && (mPreferHeif || !mHasMoovBox) &&

(mItemTable != NULL) && mItemTable->isValid()))) {

off64_t orig_offset = offset;

err = parseChunk(&offset, 0);

if (err != OK && err != UNKNOWN_ERROR) {

break;

} else if (offset <= orig_offset) {

// only continue parsing if the offset was advanced,

// otherwise we might end up in an infinite loop

ALOGE("did not advance: %lld->%lld", (long long)orig_offset, (long long)offset);

err = ERROR_MALFORMED;

break;

} else if (err == UNKNOWN_ERROR) {

sawMoovOrSidx = true;

}

}

if ((mIsAvif || mIsHeif) && (mItemTable != NULL) && (mItemTable->countImages() > 0)) {

// AVIF/HEIF image handling, omitted

}

if (mInitCheck == OK) {

if (findTrackByMimePrefix("video/") != NULL) {

AMediaFormat_setString(mFileMetaData,

AMEDIAFORMAT_KEY_MIME, MEDIA_MIMETYPE_CONTAINER_MPEG4);

} else if (findTrackByMimePrefix("audio/") != NULL) {

AMediaFormat_setString(mFileMetaData,

AMEDIAFORMAT_KEY_MIME, "audio/mp4");

} else if (findTrackByMimePrefix(

MEDIA_MIMETYPE_IMAGE_ANDROID_HEIC) != NULL) {

AMediaFormat_setString(mFileMetaData,

AMEDIAFORMAT_KEY_MIME, MEDIA_MIMETYPE_CONTAINER_HEIF);

} else if (findTrackByMimePrefix(

MEDIA_MIMETYPE_IMAGE_AVIF) != NULL) {

AMediaFormat_setString(mFileMetaData,

AMEDIAFORMAT_KEY_MIME, MEDIA_MIMETYPE_IMAGE_AVIF);

} else {

AMediaFormat_setString(mFileMetaData,

AMEDIAFORMAT_KEY_MIME, "application/octet-stream");

}

} else {

mInitCheck = err;

}

CHECK_NE(err, (status_t)NO_INIT);

// copy pssh data into file metadata

// pssh (DRM decryption) handling, omitted

return mInitCheck;

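}

The core here is the while loop and the parseChunk() call inside it. The name parseChunk() does not feel quite right to me; parseBox() would be better and less likely to mislead beginners (when I first read it, I assumed it referred to the chunk concept in media data).

parseChunk() is very long, so it is not pasted here. Briefly, this is what it does:

It is a recursive function. The outer while loop starts parseChunk() at the beginning of the MP4 file and parses each box in turn. If a box is a container box, parseChunk() recurses into the next level of boxes until there is no deeper level. The parsed information is stored in MPEG4Extractor's member variables.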
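The recursion is easier to see on a stripped-down example. The sketch below walks boxes in a byte buffer using the basic box header (a 4-byte big-endian size followed by a 4-byte type) and descends into a few container types. It is an illustration only, not the AOSP code: parseBox, parseFile, makeBox and BoxInfo are hypothetical, the container list is a small subset, and the special size values 0 (box extends to end of file) and 1 (64-bit size follows) are simply rejected.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <set>
#include <string>
#include <vector>

struct BoxInfo {
    std::string type;
    int depth;
    size_t offset;
    size_t size;
};

static uint32_t readU32BE(const std::vector<uint8_t>& d, size_t pos) {
    return (uint32_t(d[pos]) << 24) | (uint32_t(d[pos + 1]) << 16) |
           (uint32_t(d[pos + 2]) << 8) | uint32_t(d[pos + 3]);
}

// Illustrative subset of container boxes.
static bool isContainer(const std::string& type) {
    static const std::set<std::string> kContainers = {"moov", "trak", "mdia", "minf", "stbl"};
    return kContainers.count(type) != 0;
}

// Parses the box at *offset (which must be < end), recurses into containers,
// and advances *offset past the box. Returns false on malformed input.
bool parseBox(const std::vector<uint8_t>& data, size_t* offset, size_t end,
              int depth, std::vector<BoxInfo>* out) {
    size_t start = *offset;
    if (end - start < 8) return false;  // not enough room for a header
    uint32_t size = readU32BE(data, start);
    std::string type(reinterpret_cast<const char*>(&data[start + 4]), 4);
    // size >= 8 also guarantees that the offset always advances.
    if (size < 8 || size > end - start) return false;
    out->push_back(BoxInfo{type, depth, start, size});
    if (isContainer(type)) {
        size_t child = start + 8;
        size_t boxEnd = start + size;
        while (child < boxEnd) {
            if (!parseBox(data, &child, boxEnd, depth + 1, out)) return false;
        }
    }
    *offset = start + size;
    return true;
}

// Outer loop: parse top-level boxes until the data ends or an error occurs.
std::vector<BoxInfo> parseFile(const std::vector<uint8_t>& data) {
    std::vector<BoxInfo> boxes;
    size_t offset = 0;
    while (offset < data.size()) {
        if (!parseBox(data, &offset, data.size(), 0, &boxes)) break;
    }
    return boxes;
}

// Helper for building sample data: header plus payload.
std::vector<uint8_t> makeBox(const std::string& type, const std::vector<uint8_t>& payload) {
    assert(type.size() == 4);
    uint32_t size = static_cast<uint32_t>(8 + payload.size());
    std::vector<uint8_t> box = {uint8_t(size >> 24), uint8_t(size >> 16),
                                uint8_t(size >> 8), uint8_t(size)};
    box.insert(box.end(), type.begin(), type.end());
    box.insert(box.end(), payload.begin(), payload.end());
    return box;
}
```

Feeding parseFile() a buffer made of ftyp, moov (containing trak, which contains tkhd) and mdat yields five entries, with trak at depth 1 and tkhd at depth 2. The size check plays the same role as the "did not advance" guard in readMetaData(): it prevents an endless loop on malformed input.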

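As an aside, if you can get hold of an analysis tool for the video format you are studying, it is well worth installing one. I use "MP4 Inspector". Real extractor development and maintenance also relies on a good number of specs.

Opening my MP4 file in this tool shows the following very clearly:

Back to the main topic: parseChunk() reads the file through mDataSource->readAt(), which ultimately reads via the FileSource created earlier.

That concludes Part 2. AnotherPacketSource is not covered in this section; I will come back to it in a later chapter once I have studied it.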

Before moving on to Part 3, a quick note on notifyVideoSizeChanged() and notifyFlagsChanged():

- notifyVideoSizeChanged() reports the video width and height read from the file to NuPlayer.

- notifyFlagsChanged() reports the four flags FLAG_CAN_PAUSE/FLAG_CAN_SEEK_BACKWARD/FLAG_CAN_SEEK_FORWARD/FLAG_CAN_SEEK to NuPlayer, where they are stored in mPlayerFlags. When the Java layer calls getMetadata(), NuPlayer builds a Metadata object from mPlayerFlags and returns it.
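The flags are a plain bitmask: the source ORs the capabilities together, and the receiver stores the value and later tests individual bits. A minimal sketch of that pattern is shown here. The enum values and the PlayerState class are illustrative assumptions, not the constants or classes from the NuPlayer headers.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative values only; the real constants are defined in NuPlayer's sources.
enum PlayerFlags : uint32_t {
    kCanPause        = 1u << 0,
    kCanSeekBackward = 1u << 1,
    kCanSeekForward  = 1u << 2,
    kCanSeek         = 1u << 3,
};

// The capability set reported for a local file in this article.
uint32_t localPlaybackFlags() {
    return kCanPause | kCanSeekBackward | kCanSeekForward | kCanSeek;
}

// Hypothetical receiver that stores the mask and answers capability queries.
class PlayerState {
public:
    void onFlagsChanged(uint32_t flags) { mFlags = flags; }
    bool can(PlayerFlags f) const {
        assert(f != 0 && (f & (f - 1)) == 0);  // expects exactly one flag bit
        return (mFlags & f) != 0;
    }
private:
    uint32_t mFlags = 0;
};
```

After onFlagsChanged(localPlaybackFlags()), every can(...) query for the four flags returns true; before the notification they all return false.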

finishPrepareAsync()

//frameworks/av/media/libmediaplayerservice/nuplayer/GenericSource.cpp

void NuPlayer::GenericSource::finishPrepareAsync() {

ALOGV("finishPrepareAsync");

status_t err = startSources();

if (err != OK) {

ALOGE("Failed to init start data source!");

notifyPreparedAndCleanup(err);

return;

}

if (mIsStreaming) {

mCachedSource->resumeFetchingIfNecessary();

mPreparing = true;

schedulePollBuffering();

} else {

notifyPrepared();

}

if (mAudioTrack.mSource != NULL) {

postReadBuffer(MEDIA_TRACK_TYPE_AUDIO);

}

if (mVideoTrack.mSource != NULL) {

postReadBuffer(MEDIA_TRACK_TYPE_VIDEO);

}

}

status_t NuPlayer::GenericSource::startSources() {

// Start the selected A/V tracks now before we start buffering.

// Widevine sources might re-initialize crypto when starting, if we delay

// this to start(), all data buffered during prepare would be wasted.

// (We don't actually start reading until start().)

//

// TODO: this logic may no longer be relevant after the removal of widevine

// support

if (mAudioTrack.mSource != NULL && mAudioTrack.mSource->start() != OK) {

ALOGE("failed to start audio track!");

return UNKNOWN_ERROR;

}

if (mVideoTrack.mSource != NULL && mVideoTrack.mSource->start() != OK) {

ALOGE("failed to start video track!");

return UNKNOWN_ERROR;

}

return OK;

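}

The two functions to focus on here are startSources() and postReadBuffer(). For reasons of length the code is not expanded; here is a brief description of each:

- startSources(): despite the name, nothing has really started yet. This step mainly allocates and creates MediaBuffers and puts them under management.

- postReadBuffer(): this is where reading media data from the video file actually begins.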

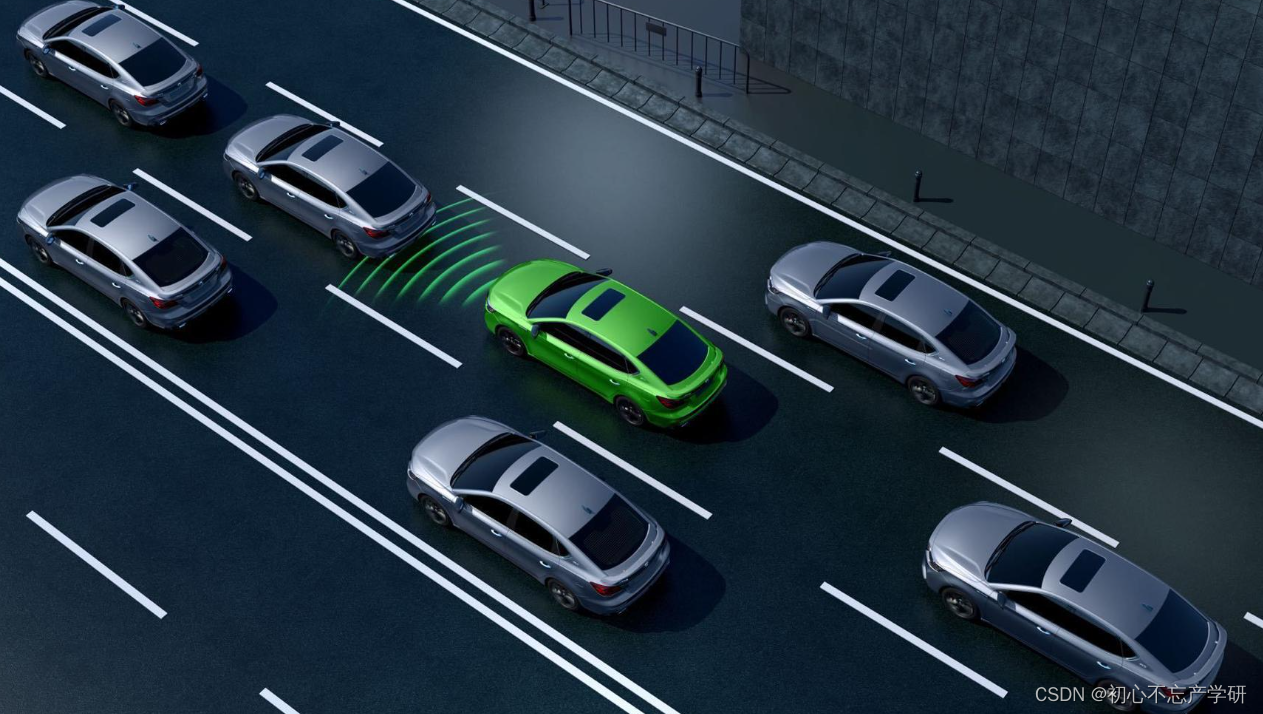

Summary

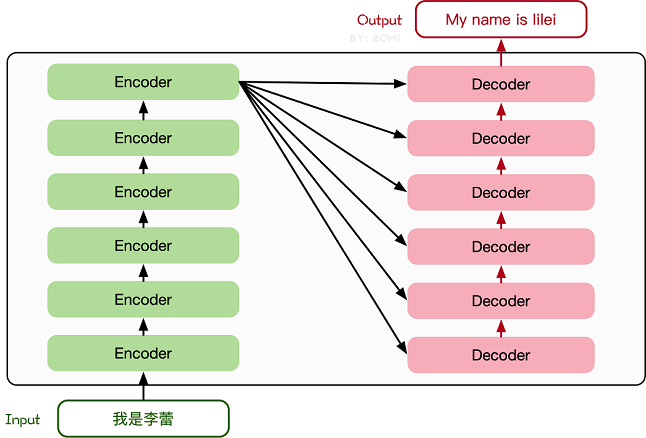

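onPrepareAsync() ends here. The main points have all been covered; only the MediaBuffer part is still missing for now. As usual, the diagrams below summarize this section:

Figure 1: onPrepareAsync() execution flow

Figure 2: MP4 extractor architecture

From the code alone the impression is not that strong, but the architecture diagram in Figure 2 shows how well NuPlayer's architecture was designed. The parts in green boxes are what the MP4 extractor implements itself; other extractors follow the same pattern and simply replace what is inside the boxes. This plugin design is really elegant.

Figure 3: common MP4 boxes

Figure 3 is a rough sketch I drew after skimming the spec. It only covers the common boxes and is neither rigorous nor guaranteed to be correct; it is mainly there for my own review.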