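Table of contents

- Preface
- Introduction to the Orange Pi AIpro
- Hands-on with the Orange Pi AIpro
- Flashing the Linux image to a TF card
- Detecting objects in video with YOLO
- Lung CT recognition
- Summary of the Orange Pi AIpro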

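Preface

The Orange Pi AIpro is the first AI development board built on Ascend technology. It integrates a graphics processor, ships with 8 GB or 16 GB of LPDDR4X memory, supports external storage modules, and offers dual 4K HDMI output along with strong AI compute.

Introduction to the Orange Pi AIpro

I could hardly wait to unbox the Orange Pi AIpro. It looks great: the first things that catch the eye are the orange logo and the slogan "Born for AI".

The board is well protected. It ships in a foam-lined box, and the board itself is wrapped in a plastic bag. Full marks for the packaging.

The build quality and the finish are both excellent. The board has a distinctly high-tech look that makes it hard to put down.

The board offers a rich set of interfaces, including HDMI, GPIO, Type-C power, an M.2 slot, and USB. Part of the official specification is listed below: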

| Component | Specification |
|---|---|
| CPU | 4-core 64-bit processor + AI processor |
| GPU | Integrated graphics processor |
| AI compute | 8-12 TOPS |
| Memory | LPDDR4X, 8 GB/16 GB (optional), 3200 Mbps |
| Storage | 32 MB SPI flash; SATA/NVMe SSD (M.2 2280); eMMC socket: 32 GB/64 GB/128 GB/256 GB (optional), eMMC 5.1 HS400; TF card slot |
| Wi-Fi + Bluetooth | Dual-band Wi-Fi 5 (2.4 GHz and 5 GHz); BT 4.2/BLE |
| Ethernet transceiver | 10/100/1000 Mbps Ethernet |
| Display | 2x HDMI 2.0 Type-A TX, 4K@60FPS; 1x 2-lane MIPI DSI via FPC connector |
| Camera | 2x 2-lane MIPI CSI camera interfaces, compatible with Raspberry Pi cameras |

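The official interface diagram shows the details:

As you can see, the board is packed with hardware. The Ascend AI processor was introduced by Huawei in 2018 to meet the compute demands of rapidly developing deep neural networks. It provides efficient compute for integer (INT8, INT4) and floating-point (FP16) data, and its hardware architecture is optimized for deep neural networks, so it can run the forward pass of a network very efficiently. This gives it strong prospects in smart-device applications.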

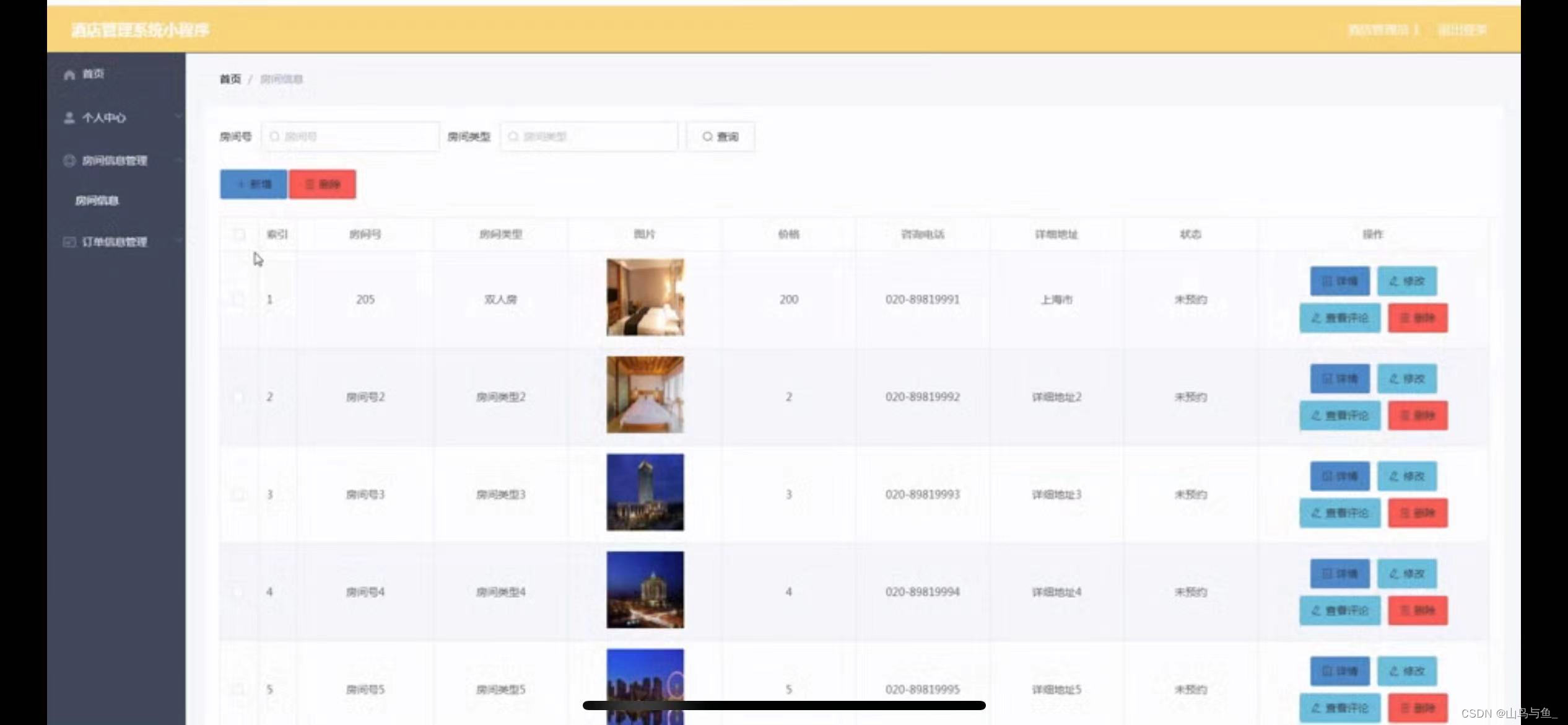
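To make the INT8 point more concrete, here is a minimal sketch of symmetric 8-bit quantization, the kind of arithmetic that lets integer hardware stand in for floating-point math. It is a generic illustration only: the function names and the sample weights are hypothetical, and it does not describe how the Ascend toolchain actually quantizes a model.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.4]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per value is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 values:", q)
print("scale:", scale, "max error:", max_error)
```

Each weight is stored in one byte instead of four, at the cost of a small, bounded rounding error.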

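Hands-on with the Orange Pi AIpro

Flashing the Linux image to a TF card

Open the official Orange Pi website.

Select the matching product. From there you can download the official documentation or the official images as needed; here we go straight for the Ubuntu image.

The link leads to Baidu Netdisk, where we choose the desktop image.

We pick the desktop image because it comes with the Linux desktop, CANN, and the AI sample code preinstalled; the minimal image includes none of these.

After a patient wait, the download finishes.

Download balenaEtcher from the balenaEtcher website by clicking Download Etcher,

then pick the build that matches your operating system.

Insert the TF card into a card reader and plug the reader into the computer, ready for flashing.

Open balenaEtcher and choose "Flash from file".

After selecting the appropriate options, click "Flash!".

A purple progress bar on the left means flashing is in progress.

A green progress bar on the left means the written image is being verified.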
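The idea behind a verification pass can be sketched as follows: read back as many bytes from the target as the image contains and compare checksums. This is an illustration under stated assumptions, not balenaEtcher's actual implementation; `sha256_of` and `written_correctly` are hypothetical helpers, and temporary files stand in for the image and the TF card.

```python
import hashlib
import os
import tempfile


def sha256_of(path, length=None, chunk_size=1024 * 1024):
    """Hash the first `length` bytes of a file (the whole file if length is None)."""
    digest = hashlib.sha256()
    remaining = length
    with open(path, "rb") as f:
        while remaining is None or remaining > 0:
            size = chunk_size if remaining is None else min(chunk_size, remaining)
            chunk = f.read(size)
            if not chunk:
                break
            digest.update(chunk)
            if remaining is not None:
                remaining -= len(chunk)
    return digest.hexdigest()


def written_correctly(image_path, device_path):
    """Compare the image with the same number of bytes read back from the target."""
    image_size = os.path.getsize(image_path)
    return sha256_of(image_path) == sha256_of(device_path, length=image_size)


# Demo: temporary files stand in for the downloaded image and the TF card.
with tempfile.TemporaryDirectory() as tmp:
    image = os.path.join(tmp, "image.img")
    card = os.path.join(tmp, "card.bin")
    payload = os.urandom(4096)
    with open(image, "wb") as f:
        f.write(payload)
    with open(card, "wb") as f:
        f.write(payload + b"\x00" * 1024)  # the card is larger than the image
    result = written_correctly(image, card)
    print("verified:", result)
```

Only the first `image_size` bytes of the target are hashed, because a card is normally larger than the image written to it.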

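Detecting objects in video with YOLO

First connect a monitor and log in. The default user is HwHiAiUser and the default password is Mind@123.

Connect to Wi-Fi from the top-right corner of the desktop.

The storage totals 32 GB, and the preinstalled operating system is Ubuntu 22.04. The system plus the preinstalled development software takes up about 17 GB, leaving roughly 15 GB free.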
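The same figures can be checked from Python with the standard library. This is a small sketch; `disk_report` is a hypothetical helper name, and the root path `/` is assumed to be the partition of interest.

```python
import shutil


def disk_report(path="/"):
    """Return total, used, and free space for the filesystem holding `path`, in GiB."""
    usage = shutil.disk_usage(path)
    gib = 1024 ** 3
    return {
        "total_gib": usage.total / gib,
        "used_gib": usage.used / gib,
        "free_gib": usage.free / gib,
    }


report = disk_report("/")
print("total: {total_gib:.1f} GiB, used: {used_gib:.1f} GiB, free: {free_gib:.1f} GiB".format(**report))
```

On a small card it is worth running this before downloading datasets or model weights.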

HwHiAiUser@orangepiaipro:~$ cd samples

HwHiAiUser@orangepiaipro:~/samples$ ./start_notebook.sh

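After the script runs, the terminal prints the Jupyter Lab URL; copy it into a browser to open it.

Select yolov5, open main.ipynb, and click Run.

The results show that the model detects essentially all the cars in the image. Only a fully occluded car is missed, which is to be expected.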
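For background on how a detector ends up with one box per object, YOLO-style detectors commonly apply non-maximum suppression (NMS) to their raw predictions. The sketch below is a plain-Python illustration of that general technique with made-up boxes and a hypothetical threshold; whether the sample notebook post-processes its output exactly this way is an assumption.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(detections, iou_threshold=0.45):
    """Keep the highest-scoring box of each overlapping group.

    `detections` is a list of (box, score) pairs.
    """
    kept = []
    for det in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(det[0], k[0]) <= iou_threshold for k in kept):
            kept.append(det)
    return kept


detections = [
    ((10, 10, 110, 60), 0.90),   # a car
    ((12, 12, 112, 62), 0.75),   # near-duplicate box for the same car
    ((200, 30, 300, 80), 0.80),  # a second car elsewhere in the frame
]
kept = nms(detections)
print(len(kept), "boxes kept:", [score for _, score in kept])
```

A side effect of this step is that two objects whose boxes overlap heavily can collapse into a single detection.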

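Throughout the run the fan kept up well and cooling was effective: when I picked up the board it was close to room temperature, with no noticeable heat.

Lung CT recognition

Let's run one more project on the board: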

    import math

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.utils.model_zoo as model_zoo
    from torch.nn import init

    __all__ = ['xception']

    model_urls = {
        'xception': 'http://data.lip6.fr/cadene/pretrainedmodels/xception-43020ad28.pth'
    }


    class SeparableConv2d(nn.Module):
        """Depthwise convolution followed by a 1x1 pointwise convolution."""

        def __init__(self, in_channels, out_channels, kernel_size=1, stride=1, padding=0, dilation=1, bias=False):
            super(SeparableConv2d, self).__init__()
            # groups=in_channels makes this a depthwise convolution
            self.conv1 = nn.Conv2d(in_channels, in_channels, kernel_size, stride, padding, dilation,
                                   groups=in_channels, bias=bias)
            self.pointwise = nn.Conv2d(in_channels, out_channels, 1, 1, 0, 1, 1, bias=bias)

        def forward(self, x):
            x = self.conv1(x)
            x = self.pointwise(x)
            return x


    class Block(nn.Module):
        """Stack of separable convolutions with a residual (skip) connection."""

        def __init__(self, in_filters, out_filters, reps, strides=1, start_with_relu=True, grow_first=True):
            super(Block, self).__init__()

            # Project the input with a 1x1 convolution when the shape changes
            if out_filters != in_filters or strides != 1:
                self.skip = nn.Conv2d(in_filters, out_filters, 1, stride=strides, bias=False)
                self.skipbn = nn.BatchNorm2d(out_filters)
            else:
                self.skip = None

            self.relu = nn.ReLU(inplace=True)
            rep = []

            filters = in_filters
            if grow_first:
                rep.append(self.relu)
                rep.append(SeparableConv2d(in_filters, out_filters, 3, stride=1, padding=1, bias=False))
                rep.append(nn.BatchNorm2d(out_filters))
                filters = out_filters

            for i in range(reps - 1):
                rep.append(self.relu)
                rep.append(SeparableConv2d(filters, filters, 3, stride=1, padding=1, bias=False))
                rep.append(nn.BatchNorm2d(filters))

            if not grow_first:
                rep.append(self.relu)
                rep.append(SeparableConv2d(in_filters, out_filters, 3, stride=1, padding=1, bias=False))
                rep.append(nn.BatchNorm2d(out_filters))

            if not start_with_relu:
                rep = rep[1:]
            else:
                # The first ReLU must not be in-place: the input is reused by the skip path
                rep[0] = nn.ReLU(inplace=False)

            if strides != 1:
                rep.append(nn.MaxPool2d(3, strides, 1))
            self.rep = nn.Sequential(*rep)

        def forward(self, inp):
            x = self.rep(inp)

            if self.skip is not None:
                skip = self.skip(inp)
                skip = self.skipbn(skip)
            else:
                skip = inp

            x += skip
            return x


    class Xception(nn.Module):
        """
        Xception optimized for the ImageNet dataset, as specified in
        https://arxiv.org/pdf/1610.02357.pdf
        """

        def __init__(self, num_classes=38):
            """ Constructor
            Args:
                num_classes: number of classes
            """
            super(Xception, self).__init__()
            self.num_classes = num_classes

            # Entry flow
            self.conv1 = nn.Conv2d(3, 32, 3, 2, 0, bias=False)
            self.bn1 = nn.BatchNorm2d(32)
            self.relu = nn.ReLU(inplace=True)

            self.conv2 = nn.Conv2d(32, 64, 3, bias=False)
            self.bn2 = nn.BatchNorm2d(64)
            # do relu here

            self.block1 = Block(64, 128, 2, 2, start_with_relu=False, grow_first=True)
            self.block2 = Block(128, 256, 2, 2, start_with_relu=True, grow_first=True)
            self.block3 = Block(256, 728, 2, 2, start_with_relu=True, grow_first=True)

            # Middle flow: eight identical 728-channel blocks
            self.block4 = Block(728, 728, 3, 1, start_with_relu=True, grow_first=True)
            self.block5 = Block(728, 728, 3, 1, start_with_relu=True, grow_first=True)
            self.block6 = Block(728, 728, 3, 1, start_with_relu=True, grow_first=True)
            self.block7 = Block(728, 728, 3, 1, start_with_relu=True, grow_first=True)

            self.block8 = Block(728, 728, 3, 1, start_with_relu=True, grow_first=True)
            self.block9 = Block(728, 728, 3, 1, start_with_relu=True, grow_first=True)
            self.block10 = Block(728, 728, 3, 1, start_with_relu=True, grow_first=True)
            self.block11 = Block(728, 728, 3, 1, start_with_relu=True, grow_first=True)

            # Exit flow
            self.block12 = Block(728, 1024, 2, 2, start_with_relu=True, grow_first=False)

            self.conv3 = SeparableConv2d(1024, 1536, 3, 1, 1)
            self.bn3 = nn.BatchNorm2d(1536)

            # do relu here
            self.conv4 = SeparableConv2d(1536, 2048, 3, 1, 1)
            self.bn4 = nn.BatchNorm2d(2048)

            self.fc = nn.Linear(2048, num_classes)

            # ------- init weights --------
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                    m.weight.data.normal_(0, math.sqrt(2. / n))
                elif isinstance(m, nn.BatchNorm2d):
                    m.weight.data.fill_(1)
                    m.bias.data.zero_()
            # -----------------------------

        def forward(self, x):
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)

            x = self.conv2(x)
            x = self.bn2(x)
            x = self.relu(x)

            x = self.block1(x)
            x = self.block2(x)
            x = self.block3(x)
            x = self.block4(x)
            x = self.block5(x)
            x = self.block6(x)
            x = self.block7(x)
            x = self.block8(x)
            x = self.block9(x)
            x = self.block10(x)
            x = self.block11(x)
            x = self.block12(x)

            x = self.conv3(x)
            x = self.bn3(x)
            x = self.relu(x)

            x = self.conv4(x)
            x = self.bn4(x)
            x = self.relu(x)

            # Global average pooling, then flatten to (batch, 2048)
            x = F.adaptive_avg_pool2d(x, (1, 1))
            x = x.view(x.size(0), -1)
            x = self.fc(x)

            return x


    def xception(pretrained=False, **kwargs):
        """
        Construct Xception.
        """
        model = Xception(**kwargs)
        if pretrained:
            # The checkpoint is an ImageNet model, so its classifier shape is
            # expected to match only when num_classes equals the ImageNet class count.
            model.load_state_dict(model_zoo.load_url(model_urls['xception']))
        return model
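The motivation for the separable convolutions used throughout this model is parameter count. The following sketch compares a standard 3x3 convolution with its depthwise-separable counterpart for a 728-channel layer, the width of the middle blocks above. The helper names are hypothetical and bias terms are ignored, matching `bias=False` in the model code.

```python
def conv_params(in_ch, out_ch, k):
    """Weights in a standard k x k convolution (no bias)."""
    return k * k * in_ch * out_ch


def separable_conv_params(in_ch, out_ch, k):
    """Weights in a depthwise k x k convolution plus a 1x1 pointwise convolution."""
    depthwise = k * k * in_ch      # one k x k filter per input channel
    pointwise = in_ch * out_ch     # 1x1 convolution that mixes channels
    return depthwise + pointwise


standard = conv_params(728, 728, 3)
separable = separable_conv_params(728, 728, 3)
print("standard: ", standard)
print("separable:", separable)
print("reduction factor:", standard / separable)
```

For this layer the separable form needs roughly one ninth of the weights, which is part of why the architecture suits a board with limited compute.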

The inference script loads the trained weights and classifies a single image (it imports the model definition above from model.py):

    import matplotlib.pyplot as plt
    import torch
    from PIL import Image
    from torchvision.transforms import transforms

    from model import xception

    # Only needed when labels contain Chinese characters
    plt.rcParams['font.sans-serif'] = ['SimHei']  # avoid garbled Chinese text
    plt.rcParams['axes.unicode_minus'] = False  # render the minus sign on axes correctly

    # Preprocessing should match what was used during training
    data_transform = transforms.Compose(
        [transforms.ToTensor(),
         transforms.Resize((224, 224)),
         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

    # Path from the author's machine; point it at a test image on your own system
    img = Image.open(r"F:\SqueezeNet\dataset\test\PNEUMONIA\person1_virus_8.jpeg")
    img = img.convert("RGB")
    plt.imshow(img)
    img = data_transform(img)
    img = torch.unsqueeze(img, dim=0)  # add the batch dimension

    name = ['Normal', 'Pneumonia']
    model_weight_path = "Xception_last.pth"
    model = xception(num_classes=2)
    # map_location lets weights saved on a GPU load on a CPU-only machine
    model.load_state_dict(torch.load(model_weight_path, map_location="cpu"))
    model.eval()
    with torch.no_grad():
        output = torch.squeeze(model(img))
        predict = torch.softmax(output, dim=0)
        # Index of the most likely class, as a plain Python int
        predict_cla = torch.argmax(predict).item()
        print('Index:', predict_cla)
        print('Prediction: {}, confidence: {}'.format(name[predict_cla], predict[predict_cla].item()))
        plt.suptitle("Prediction: {}".format(name[predict_cla]))
        plt.show()
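The "confidence" printed by the script is simply the softmax probability of the winning class. Here is a minimal plain-Python sketch of that step; the logits are made-up values, not output from the trained model.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["Normal", "Pneumonia"]
logits = [-1.2, 2.3]  # hypothetical raw outputs of the final linear layer

probs = softmax(logits)
best = max(range(len(probs)), key=probs.__getitem__)  # argmax
print("Prediction: {}, confidence: {:.3f}".format(labels[best], probs[best]))
```

Because the two probabilities always sum to 1, a high value here says the model prefers one class over the other, not that the prediction is clinically reliable.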

Model prediction result:

The training script:

    import torch
    import torch.nn as nn
    import torchvision.transforms as transforms
    from torch.utils import data
    import torch.optim as optim

    from model import xception
    # LoadData comes from the author's own DataLoader.py, which is not shown in this post
    from DataLoader import LoadData

    # ============ device, model, preprocessing, data loader ============
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    print(device)

    net = xception(num_classes=2).to(device=device)

    # Defined here but not passed to LoadData in this script
    transform = transforms.Compose(
        [transforms.ToTensor(),
         transforms.Resize((224, 224)),
         transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))])

    # Path from the author's machine; point it at your own file list
    train_data = LoadData(r"F:\SqueezeNet\Xception\train.txt", True)
    train_loader = torch.utils.data.DataLoader(train_data, batch_size=8, pin_memory=True,
                                               shuffle=True, num_workers=0)

    loss_function = nn.CrossEntropyLoss()  # loss function
    optimizer = torch.optim.Adam(net.parameters(), lr=0.001)

    for epoch in range(2):  # loop over the dataset multiple times
        print('Epoch {} started'.format(epoch + 1))
        running_loss = 0.0  # loss accumulated since the last report
        for i, (inputs, labels) in enumerate(train_loader):
            # move the batch to the training device
            inputs, labels = inputs.to(device), labels.to(device)

            optimizer.zero_grad()  # clear gradients from the previous step

            # forward + backward + optimize
            outputs = net(inputs)
            loss = loss_function(outputs, labels)
            loss.backward()  # backpropagation
            optimizer.step()  # parameter update

            # print statistics
            running_loss += loss.item()
            if i % 50 == 49:  # report every 50 mini-batches
                # Accuracy on the current training batch; no separate validation set is used
                predict_y = torch.max(outputs, dim=1)[1]
                accuracy = torch.eq(predict_y, labels).sum().item() / len(labels)
                print('[%d %5d] train_loss: %.3f train_accuracy: %.3f' %
                      (epoch + 1, i + 1, running_loss / 50, accuracy))
                running_loss = 0.0  # reset so the next report averages only its own 50 batches

    print('Finished Training')

    save_path = './Xception_last.pth'
    torch.save(net.state_dict(), save_path)
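A loss that is reported every N batches is only an average over those N batches if the accumulator is cleared after each report. The sketch below isolates that bookkeeping in plain Python; `WindowedAverage` is a hypothetical helper and the loss values are made up.

```python
class WindowedAverage:
    """Accumulates values and returns their mean, resetting after each report."""

    def __init__(self):
        self.total = 0.0
        self.count = 0

    def add(self, value):
        self.total += value
        self.count += 1

    def pop_mean(self):
        """Return the mean of the values added since the last call, then reset."""
        mean = self.total / self.count if self.count else 0.0
        self.total = 0.0
        self.count = 0
        return mean


losses = [0.9, 0.7, 0.5, 0.3]  # hypothetical per-batch training losses
report_every = 2

meter = WindowedAverage()
reported = []
for step, loss in enumerate(losses, start=1):
    meter.add(loss)
    if step % report_every == 0:
        reported.append(meter.pop_mean())

print(reported)
```

Without the reset, the second report would divide the sum of all four losses by two and overstate the loss even though training is improving.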

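Training visualization:

After training, the model's predictions are quite accurate.

Summary of the Orange Pi AIpro

From my own experience, the Orange Pi AIpro has the following strengths:

- Rich interfaces: besides the standard USB, HDMI, and MIPI interfaces, it has an M.2 slot for SATA/NVMe 2280 SSDs and accepts external 32 GB/64 GB/128 GB/256 GB eMMC modules.
- Multiple operating systems: besides the preinstalled Ubuntu, it also supports openEuler, covering different development needs.
- Ascend AI technology stack: it follows Huawei's Ascend AI roadmap, offering high performance at low power consumption.

Weaknesses:

- Limited AI compute: although 8 TOPS and 20 TOPS versions are available, more complex AI applications may still need more compute.

The Orange Pi AIpro is a high-performance AI development board with strong hardware and rich interfaces. For users who need strong AI compute and plenty of interfaces, the 20T version is a good choice despite its higher price. For users on a tight budget, the 8T version offers good value for money.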
