Tip: 如果你在进行深度学习、自动驾驶、模型推理、微调或AI绘画出图等任务,并且需要GPU资源,可以考虑使用UCloud云计算旗下的Compshare的GPU算力云平台。他们提供高性价比的4090 GPU,按时收费每卡2.6元,月卡只需要1.7元每小时,并附带200G的免费磁盘空间。通过链接注册并联系客服,可以获得20元代金券(相当于6-7H的免费GPU资源)。欢迎大家体验一下~

0. 简介

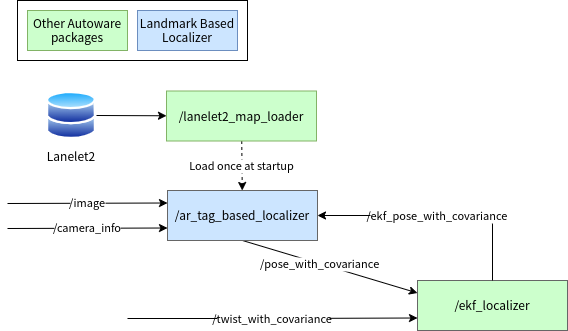

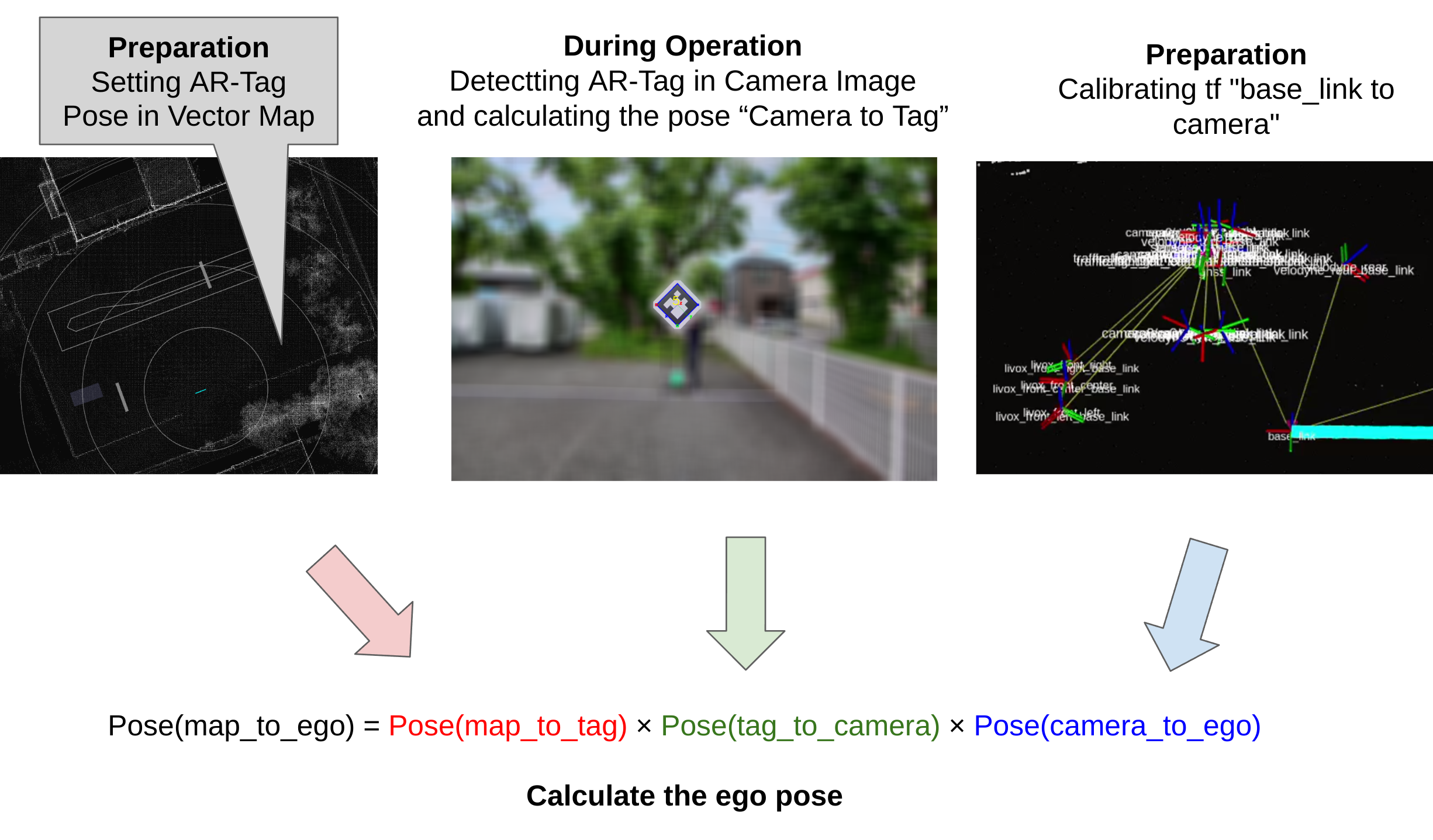

这一讲按照《Autoware 技术代码解读(三)》梳理的顺序,我们来说一说Autoware中基于地标的定位,这里的地标可以是:相机检测到的AR标签,还可以是通过LiDAR检测到的强度特征的板块。由于这些地标易于检测和估计姿态,如果地图上预先标注了地标的姿态,那么可以从检测到的地标的姿态计算出自我姿态。

1. 代码阅读

1.1 基于ar_tag的定位功能

该代码实现了一个基于ArUco标记的定位器节点,其中包含了构造函数和设置节点参数、日志记录、tf变换、图像传输、订阅者和发布者等功能。设置函数setup()用于初始化节点参数、日志记录、tf变换、图像传输、订阅者和发布者等。通过订阅地图数据和图像数据,进行ArUco标记的检测,并将检测到的标记位置信息发布出去。同时,还会根据相机信息进行相机参数的设置,并根据EKF姿态信息发布位置信息。

setup()函数中,通过声明节点参数、设置检测模式、初始化tf变换的缓冲区、监听器和广播器、初始化图像传输、设置订阅者和发布者等,完成了节点的初始化工作。在地图数据回调函数中,解析特定类型的地标信息并发布可视化消息;在图像数据回调函数中,进行ArUco标记的检测,并在图像中绘制检测到的标记,然后发布处理后的图像和诊断信息;相机信息回调函数用于设置相机参数;EKF姿态信息回调函数用于保存最新的姿态信息。最后,根据ArUco标记的位置信息,将位置信息发布为tf变换,并进行位置信息的计算和发布。这个流程主要依赖以下三个函数:

- map_bin_callback()函数:当接收到地图数据时,解析地图中的特定类型的地标信息,并将其转换为可视化消息后发布出去。

- image_callback()函数:当接收到图像数据时,进行ArUco标记的检测,并在图像中绘制检测到的标记,然后发布处理后的图像和诊断信息。

- publish_pose_as_base_link()函数:将相机到ArUco标记的位置信息发布为tf变换,并计算位置信息。

/// @brief 构造函数

/// @param options ros2节点选项

ArTagBasedLocalizer::ArTagBasedLocalizer(const rclcpp::NodeOptions & options)

: Node("ar_tag_based_localizer", options), cam_info_received_(false)

{

}

/// @brief 用于设置节点参数、日志记录、tf变换、图像传输、订阅者和发布者

/// @return

bool ArTagBasedLocalizer::setup()

{

/*

Declare node parameters

*/

marker_size_ = static_cast<float>(this->declare_parameter<double>("marker_size"));

target_tag_ids_ = this->declare_parameter<std::vector<std::string>>("target_tag_ids");

base_covariance_ = this->declare_parameter<std::vector<double>>("base_covariance");

distance_threshold_squared_ = std::pow(this->declare_parameter<double>("distance_threshold"), 2);

ekf_time_tolerance_ = this->declare_parameter<double>("ekf_time_tolerance");

ekf_position_tolerance_ = this->declare_parameter<double>("ekf_position_tolerance");

std::string detection_mode = this->declare_parameter<std::string>("detection_mode");//设置 ArUco 检测器的检测模式

float min_marker_size = static_cast<float>(this->declare_parameter<double>("min_marker_size"));

if (detection_mode == "DM_NORMAL") {

detector_.setDetectionMode(aruco::DM_NORMAL, min_marker_size);

} else if (detection_mode == "DM_FAST") {

detector_.setDetectionMode(aruco::DM_FAST, min_marker_size);

} else if (detection_mode == "DM_VIDEO_FAST") {

detector_.setDetectionMode(aruco::DM_VIDEO_FAST, min_marker_size);

} else {

// Error

RCLCPP_ERROR_STREAM(this->get_logger(), "Invalid detection_mode: " << detection_mode);

return false;

}

/*

Log parameter info

*/

RCLCPP_INFO_STREAM(this->get_logger(), "min_marker_size: " << min_marker_size);

RCLCPP_INFO_STREAM(this->get_logger(), "detection_mode: " << detection_mode);

RCLCPP_INFO_STREAM(this->get_logger(), "thresMethod: " << detector_.getParameters().thresMethod);

RCLCPP_INFO_STREAM(this->get_logger(), "marker_size_: " << marker_size_);

/*

初始化 tf 变换的缓冲区、监听器和广播器

*/

tf_buffer_ = std::make_unique<tf2_ros::Buffer>(this->get_clock());

tf_listener_ = std::make_unique<tf2_ros::TransformListener>(*tf_buffer_);

tf_broadcaster_ = std::make_unique<tf2_ros::TransformBroadcaster>(this);

/*

Initialize image transport

*/

it_ = std::make_unique<image_transport::ImageTransport>(shared_from_this());

/*

Subscribers

*/

map_bin_sub_ = this->create_subscription<HADMapBin>(

"~/input/lanelet2_map", rclcpp::QoS(10).durability(rclcpp::DurabilityPolicy::TransientLocal),

std::bind(&ArTagBasedLocalizer::map_bin_callback, this, std::placeholders::_1));

//初始化图像传输

rclcpp::QoS qos_sub(rclcpp::QoSInitialization::from_rmw(rmw_qos_profile_default));

qos_sub.best_effort();

image_sub_ = this->create_subscription<Image>(

"~/input/image", qos_sub,

std::bind(&ArTagBasedLocalizer::image_callback, this, std::placeholders::_1));

cam_info_sub_ = this->create_subscription<CameraInfo>(

"~/input/camera_info", qos_sub,

std::bind(&ArTagBasedLocalizer::cam_info_callback, this, std::placeholders::_1));

ekf_pose_sub_ = this->create_subscription<PoseWithCovarianceStamped>(

"~/input/ekf_pose", qos_sub,

std::bind(&ArTagBasedLocalizer::ekf_pose_callback, this, std::placeholders::_1));

/*

Publishers

*/

rclcpp::QoS qos_marker = rclcpp::QoS(rclcpp::KeepLast(10));

qos_marker.transient_local();

qos_marker.reliable();

marker_pub_ = this->create_publisher<MarkerArray>("~/debug/marker", qos_marker);

rclcpp::QoS qos_pub(rclcpp::QoSInitialization::from_rmw(rmw_qos_profile_default));

image_pub_ = it_->advertise("~/debug/result", 1);

pose_pub_ =

this->create_publisher<PoseWithCovarianceStamped>("~/output/pose_with_covariance", qos_pub);

diag_pub_ = this->create_publisher<DiagnosticArray>("/diagnostics", qos_pub);

RCLCPP_INFO(this->get_logger(), "Setup of ar_tag_based_localizer node is successful!");

return true;

}

/// @brief 当接收到地图数据时,该回调函数会解析地图中的特定类型的地标信息,并将其转换为可视化消息后发布出去

/// @param msg 类型为 HADMapBin::ConstSharedPtr 的地图数据指针

void ArTagBasedLocalizer::map_bin_callback(const HADMapBin::ConstSharedPtr & msg)

{

const std::vector<landmark_manager::Landmark> landmarks =

landmark_manager::parse_landmarks(msg, "apriltag_16h5", this->get_logger());//提取特定类型的地标信息,并将其转换为 Landmark 结构体的向量

for (const landmark_manager::Landmark & landmark : landmarks) {

landmark_map_[landmark.id] = landmark.pose;//解析得到的地标信息存储到 landmark_map_ 中

}

const MarkerArray marker_msg = landmark_manager::convert_landmarks_to_marker_array_msg(landmarks);

marker_pub_->publish(marker_msg);

}

/// @brief 当接收到图像数据时,该回调函数会进行 ArUco 标记的检测,并在图像中绘制检测到的标记,然后发布处理后的图像和诊断信息。

/// @param msg 类型为 Image::ConstSharedPtr 的图像数据指针

void ArTagBasedLocalizer::image_callback(const Image::ConstSharedPtr & msg)

{

//检查是否有订阅者,如果没有则不进行 ArUco 标记的检测

if ((image_pub_.getNumSubscribers() == 0) && (pose_pub_->get_subscription_count() == 0)) {

RCLCPP_DEBUG(this->get_logger(), "No subscribers, not looking for ArUco markers");

return;

}

//检查是否已接收到相机信息

if (!cam_info_received_) {

RCLCPP_DEBUG(this->get_logger(), "No cam_info has been received.");

return;

}

//将图像数据转换为 OpenCV 格式,并进行 ArUco 标记的检测

const builtin_interfaces::msg::Time curr_stamp = msg->header.stamp;

cv_bridge::CvImagePtr cv_ptr;

try {

cv_ptr = cv_bridge::toCvCopy(*msg, sensor_msgs::image_encodings::RGB8);

} catch (cv_bridge::Exception & e) {

RCLCPP_ERROR(this->get_logger(), "cv_bridge exception: %s", e.what());

return;

}

cv::Mat in_image = cv_ptr->image;

// 检测结果将存储在 markers 中

std::vector<aruco::Marker> markers;

// ok, let's detect

detector_.detect(in_image, markers, cam_param_, marker_size_, false);

// 每个检测到的标记都会发布位置信息

for (const aruco::Marker & marker : markers) {

const tf2::Transform tf_cam_to_marker = aruco_marker_to_tf2(marker);//位置信息发布为 tf 变换,并绘制在图像中

TransformStamped tf_cam_to_marker_stamped;

tf2::toMsg(tf_cam_to_marker, tf_cam_to_marker_stamped.transform);

tf_cam_to_marker_stamped.header.stamp = curr_stamp;

tf_cam_to_marker_stamped.header.frame_id = msg->header.frame_id;

tf_cam_to_marker_stamped.child_frame_id = "detected_marker_" + std::to_string(marker.id);

tf_broadcaster_->sendTransform(tf_cam_to_marker_stamped);//发布 tf 变换

PoseStamped pose_cam_to_marker;

tf2::toMsg(tf_cam_to_marker, pose_cam_to_marker.pose);//根据 tf 变换计算位置信息

pose_cam_to_marker.header.stamp = curr_stamp;

pose_cam_to_marker.header.frame_id = msg->header.frame_id;

publish_pose_as_base_link(pose_cam_to_marker, std::to_string(marker.id));//发布位置信息

// 画出检测到的标记

marker.draw(in_image, cv::Scalar(0, 0, 255), 2);//在图像中绘制检测到的标记

}

// 画出每个标记的 3D 立方体

if (cam_param_.isValid()) {

for (aruco::Marker & marker : markers) {

aruco::CvDrawingUtils::draw3dAxis(in_image, marker, cam_param_);

}

}

if (image_pub_.getNumSubscribers() > 0) {

// 将处理后的图像发布出去

cv_bridge::CvImage out_msg;

out_msg.header.stamp = curr_stamp;

out_msg.encoding = sensor_msgs::image_encodings::RGB8;

out_msg.image = in_image;

image_pub_.publish(out_msg.toImageMsg());

}

const int detected_tags = static_cast<int>(markers.size());

diagnostic_msgs::msg::DiagnosticStatus diag_status;

// 根据检测到的标记数量发布诊断信息

if (detected_tags > 0) {

diag_status.level = diagnostic_msgs::msg::DiagnosticStatus::OK;

diag_status.message = "AR tags detected. The number of tags: " + std::to_string(detected_tags);

} else {

diag_status.level = diagnostic_msgs::msg::DiagnosticStatus::WARN;

diag_status.message = "No AR tags detected.";

}

diag_status.name = "localization: " + std::string(this->get_name());

diag_status.hardware_id = this->get_name();

diagnostic_msgs::msg::KeyValue key_value;

key_value.key = "Number of Detected AR Tags";

key_value.value = std::to_string(detected_tags);

diag_status.values.push_back(key_value);

DiagnosticArray diag_msg;//发布诊断信息

diag_msg.header.stamp = this->now();

diag_msg.status.push_back(diag_status);

diag_pub_->publish(diag_msg);

}

// wait for one camera info, then shut down that subscriber

/// @brief 当接收到相机信息后,将其转换为OpenCV矩阵并存储

/// @param msg 相机信息

void ArTagBasedLocalizer::cam_info_callback(const CameraInfo & msg)

{

if (cam_info_received_) {

return;

}

//将输入的相机信息 msg 转换成 OpenCV 的矩阵格式

cv::Mat camera_matrix(3, 4, CV_64FC1, 0.0);

camera_matrix.at<double>(0, 0) = msg.p[0];

camera_matrix.at<double>(0, 1) = msg.p[1];

camera_matrix.at<double>(0, 2) = msg.p[2];

camera_matrix.at<double>(0, 3) = msg.p[3];

camera_matrix.at<double>(1, 0) = msg.p[4];

camera_matrix.at<double>(1, 1) = msg.p[5];

camera_matrix.at<double>(1, 2) = msg.p[6];

camera_matrix.at<double>(1, 3) = msg.p[7];

camera_matrix.at<double>(2, 0) = msg.p[8];

camera_matrix.at<double>(2, 1) = msg.p[9];

camera_matrix.at<double>(2, 2) = msg.p[10];

camera_matrix.at<double>(2, 3) = msg.p[11];

cv::Mat distortion_coeff(4, 1, CV_64FC1);

for (int i = 0; i < 4; ++i) {

distortion_coeff.at<double>(i, 0) = 0;

}

const cv::Size size(static_cast<int>(msg.width), static_cast<int>(msg.height));

cam_param_ = aruco::CameraParameters(camera_matrix, distortion_coeff, size);

cam_info_received_ = true;

}

/// @brief 更新最新的扩展卡尔曼滤波器(EKF)姿态估计值

/// @param msg 带协方差的姿态消息

void ArTagBasedLocalizer::ekf_pose_callback(const PoseWithCovarianceStamped & msg)

{

//直接将输入的姿态消息 msg 存储在 latest_ekf_pose_ 变量中

latest_ekf_pose_ = msg;

}

/// @brief 发布基于基准链接的位置信息

/// @param sensor_to_tag 传感器到标签的姿态信息

/// @param tag_id 标签ID

void ArTagBasedLocalizer::publish_pose_as_base_link(

const PoseStamped & sensor_to_tag, const std::string & tag_id)

{

// Check tag_id

if (std::find(target_tag_ids_.begin(), target_tag_ids_.end(), tag_id) == target_tag_ids_.end()) {//检查是否在目标标签ID中

RCLCPP_INFO_STREAM(this->get_logger(), "tag_id(" << tag_id << ") is not in target_tag_ids");

return;

}

if (landmark_map_.count(tag_id) == 0) {//检查是否已经接收到地图信息

RCLCPP_INFO_STREAM(this->get_logger(), "tag_id(" << tag_id << ") is not in landmark_map_");

return;

}

// Range filter

const double distance_squared = sensor_to_tag.pose.position.x * sensor_to_tag.pose.position.x +

sensor_to_tag.pose.position.y * sensor_to_tag.pose.position.y +

sensor_to_tag.pose.position.z * sensor_to_tag.pose.position.z;

if (distance_threshold_squared_ < distance_squared) {//检查是否超出距离阈值

return;

}

// Transform to base_link

PoseStamped base_link_to_tag;

try {

const TransformStamped transform =

tf_buffer_->lookupTransform("base_link", sensor_to_tag.header.frame_id, tf2::TimePointZero);

tf2::doTransform(sensor_to_tag, base_link_to_tag, transform);//将传感器到标签的姿态信息转换为基准链接到标签的姿态信息

base_link_to_tag.header.frame_id = "base_link";

} catch (tf2::TransformException & ex) {

RCLCPP_INFO(this->get_logger(), "Could not transform base_link to camera: %s", ex.what());

return;

}

// (1) map_to_tag

const Pose & map_to_tag = landmark_map_.at(tag_id);

const Eigen::Affine3d map_to_tag_affine = pose_to_affine3d(map_to_tag);

// (2) tag_to_base_link

const Eigen::Affine3d base_link_to_tag_affine = pose_to_affine3d(base_link_to_tag.pose);

const Eigen::Affine3d tag_to_base_link_affine = base_link_to_tag_affine.inverse();

// calculate map_to_base_link

// 计算地图到基准链接的姿态变换

const Eigen::Affine3d map_to_base_link_affine = map_to_tag_affine * tag_to_base_link_affine;

const Pose map_to_base_link = tf2::toMsg(map_to_base_link_affine);

// 如果当前姿态与最新的 EKF 姿态估计值的差异超过 ekf_time_tolerance_,则不会发布

const rclcpp::Duration diff_time =

rclcpp::Time(sensor_to_tag.header.stamp) - rclcpp::Time(latest_ekf_pose_.header.stamp);

if (diff_time.seconds() > ekf_time_tolerance_) {//计算地图到基准链接的姿态变换,并进行时间戳和姿态差异的检查

RCLCPP_INFO(

this->get_logger(),

"latest_ekf_pose_ is older than %f seconds compared to current frame. "

"latest_ekf_pose_.header.stamp: %d.%d, sensor_to_tag.header.stamp: %d.%d",

ekf_time_tolerance_, latest_ekf_pose_.header.stamp.sec, latest_ekf_pose_.header.stamp.nanosec,

sensor_to_tag.header.stamp.sec, sensor_to_tag.header.stamp.nanosec);

return;

}

// 如果当前姿态与最新的 EKF 姿态估计值的差异超过 ekf_position_tolerance_,则不会发布

const Pose curr_pose = map_to_base_link;

const Pose latest_ekf_pose = latest_ekf_pose_.pose.pose;

const double diff_position = norm(curr_pose.position, latest_ekf_pose.position);

if (diff_position > ekf_position_tolerance_) {

RCLCPP_INFO(

this->get_logger(),

"curr_pose differs from latest_ekf_pose_ by more than %f m. "

"curr_pose: (%f, %f, %f), latest_ekf_pose: (%f, %f, %f)",

ekf_position_tolerance_, curr_pose.position.x, curr_pose.position.y, curr_pose.position.z,

latest_ekf_pose.position.x, latest_ekf_pose.position.y, latest_ekf_pose.position.z);

return;

}

// Construct output message

PoseWithCovarianceStamped pose_with_covariance_stamped;

pose_with_covariance_stamped.header.stamp = sensor_to_tag.header.stamp;

pose_with_covariance_stamped.header.frame_id = "map";

pose_with_covariance_stamped.pose.pose = curr_pose;

// ~5[m]: base_covariance

// 5~[m]: scaling base_covariance by std::pow(distance/5, 3)

//构造输出消息,并根据距离调整协方差

const double distance = std::sqrt(distance_squared);

const double scale = distance / 5;

const double coeff = std::max(1.0, std::pow(scale, 3));

for (int i = 0; i < 36; i++) {

pose_with_covariance_stamped.pose.covariance[i] = coeff * base_covariance_[i];

}

pose_pub_->publish(pose_with_covariance_stamped);

}

/// @brief 将 ArUco 标记转换为 tf 变换

/// @param marker ArUco 标记

/// @return

tf2::Transform ArTagBasedLocalizer::aruco_marker_to_tf2(const aruco::Marker & marker)

{

cv::Mat rot(3, 3, CV_64FC1);//存储标记的旋转矩阵

cv::Mat r_vec64;

marker.Rvec.convertTo(r_vec64, CV_64FC1);//将其转换为 CV_64FC1(即双精度浮点数)类型的 r_vec64

cv::Rodrigues(r_vec64, rot);

cv::Mat tran64;//存储标记的平移矩阵

marker.Tvec.convertTo(tran64, CV_64FC1);

tf2::Matrix3x3 tf_rot(

rot.at<double>(0, 0), rot.at<double>(0, 1), rot.at<double>(0, 2), rot.at<double>(1, 0),

rot.at<double>(1, 1), rot.at<double>(1, 2), rot.at<double>(2, 0), rot.at<double>(2, 1),

rot.at<double>(2, 2));

tf2::Vector3 tf_orig(tran64.at<double>(0, 0), tran64.at<double>(1, 0), tran64.at<double>(2, 0));

return tf2::Transform(tf_rot, tf_orig);

}

1.2 landmark管理

landmark_manager是一个实用程序包,用于从地图中加载地标。

- 转换:地标的中心是四个顶点的中心

- 旋转:让顶点编号为1、2、3、4,按逆时针方向如下一节所示。方向定义为从1到2的向量和从2到3的向量的叉积。

用户可以将地标定义为Lanelet2的4个顶点多边形。在这种情况下,可能定义一种排列,其中四个顶点不能被认为在同一平面上。在这种情况下,地标的方向很难计算。因此,如果将4个顶点视为构成一个四面体,并且其体积超过volume_threshold参数,则地标将不会发布tf_static

这个文件中包含了两个函数:parse_landmarks和convert_landmarks_to_marker_array_msg。这两个函数用于处理地图数据中特定类型和子类型的地标信息,并将其转换为自定义的Landmark结构体,同时将Landmark结构体的地标信息转换为可视化消息。

在parse_landmarks函数中,首先使用日志记录器输出地图数据的基本信息,然后将地图数据转换为lanelet_map_ptr。接着遍历lanelet_map_ptr中的多边形图层,提取类型为"pose_marker"且子类型为目标子类型的地标信息。对于每个符合条件的多边形,计算其体积、中心点、坐标轴、旋转矩阵和四元数,并将这些信息存储在Landmark结构体中,最终返回包含Landmark结构体的向量。

在convert_landmarks_to_marker_array_msg函数中,将Landmark结构体的地标信息转换为可视化消息。根据每个地标信息创建立方体标记和文本标记,并将它们添加到标记数组中,最终返回包含标记数组的可视化消息。