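This post records how to use the LoFTR_TRT project to export the LoFTR model to ONNX and then convert the ONNX model to a TensorRT (trt) engine. It also analyzes the main code differences between LoFTR_TRT and LoFTR; judging by the final images, the results are essentially the same as the official demo (see the previous post for details). Finally, it shows how to use the ONNX model (feature point extraction, image overlap extraction). On a 3060 GPU with cuda12.1 + ort17.1 and a 320x320 input, one image pair takes about 30 ms.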

Project: https://github.com/Kolkir/LoFTR_TRT

Models: https://drive.google.com/drive/folders/1DOcOPZb3-5cWxLqn256AhwUVjBPifhuf

1. Basic environment setup

This assumes that torch (GPU build) and onnxruntime-gpu are already installed.

1.1 Download and install the TensorRT inference library

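1. Download the latest TensorRT release that matches your CUDA version, extract it, and set the environment variables.

TensorRT download page: https://developer.nvidia.com/nvidia-tensorrt-8x-download

The environment variable settings are shown below:

2. Check your Python version.

3. Install the TensorRT Python package that matches that Python version.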
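Steps 2 and 3 come down to matching the interpreter version to the wheel you install. A minimal sketch, assuming the extracted TensorRT package contains Python wheels whose filenames carry a CPython tag such as cp310 (the helper name python_wheel_tag is made up for illustration):

```python
import sys


def python_wheel_tag(version_info=sys.version_info):
    """Return the CPython tag (for example 'cp310') for a version tuple."""
    major, minor = version_info[0], version_info[1]
    return f"cp{major}{minor}"


if __name__ == "__main__":
    print("Python:", sys.version.split()[0])
    print("Pick the wheel whose filename contains:", python_wheel_tag())
```

After installing the wheel with pip, running `import tensorrt` in the same interpreter is a quick way to confirm that the versions match.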

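1.2 Download the LoFTR_TRT project and models

Download the project from https://github.com/Kolkir/LoFTR_TRT

Download the pretrained models from https://drive.google.com/drive/folders/1DOcOPZb3-5cWxLqn256AhwUVjBPifhuf

Extract the downloaded models and place them in the project root, organized as shown below.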

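2. Analysis of the code changes

A stock LoFTR model cannot be exported to ONNX, so the LoFTR_TRT-main code is analyzed here. Its README states "einsum and einops were removed", i.e. the einsum and einops operations were taken out.

2.1 Main flow

In loftr\loftr.py of LoFTR_TRT-main, forward differs from the original LoFTR model. The original LoFTR model has the following three extra steps:

self.fine_preprocess = FinePreprocess(config)

self.loftr_fine = LocalFeatureTransformer(config["fine"])

self.fine_matching = FineMatching()

The modified LoFTR drops these three steps, and the rearrange calls are rewritten with plain torch operations. Of the image features feat_c0, feat_f0, feat_c1, feat_f1, only feat_c0 and feat_c1 are used. The model output is only conf_matrix, an intermediate result of the original LoFTR, so it needs dedicated post-processing.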

import torch
import torch.nn as nn

from .backbone import build_backbone
from .utils.position_encoding import PositionEncodingSine
from .loftr_module import LocalFeatureTransformer
from .utils.coarse_matching import CoarseMatching


class LoFTR(nn.Module):
    def __init__(self, config):
        super().__init__()
        # Misc
        self.config = config

        # Modules
        self.backbone = build_backbone(config)
        self.pos_encoding = PositionEncodingSine(
            config['coarse']['d_model'],
            temp_bug_fix=config['coarse']['temp_bug_fix'])
        self.loftr_coarse = LocalFeatureTransformer(config['coarse'])
        self.coarse_matching = CoarseMatching(config['match_coarse'])

        self.data = dict()

    def backbone_forward(self, img0, img1):
        """
            'img0': (torch.Tensor): (N, 1, H, W)
            'img1': (torch.Tensor): (N, 1, H, W)
        """
        # we assume that data['hw0_i'] == data['hw1_i'] - faster & better BN convergence
        feats_c, feats_i, feats_f = self.backbone(torch.cat([img0, img1], dim=0))
        feats_c, feats_f = self.backbone.complete_result(feats_c, feats_i, feats_f)
        bs = 1
        (feat_c0, feat_c1), (feat_f0, feat_f1) = feats_c.split(bs), feats_f.split(bs)
        return feat_c0, feat_f0, feat_c1, feat_f1

    def forward(self, img0, img1):
        """
            'img0': (torch.Tensor): (N, 1, H, W)
            'img1': (torch.Tensor): (N, 1, H, W)
        """
        # 1. Local Feature CNN
        feat_c0, feat_f0, feat_c1, feat_f1 = self.backbone_forward(img0, img1)

        # 2. coarse-level loftr module
        # add featmap with positional encoding, then flatten it to sequence [N, HW, C]
        # feat_c0 = rearrange(self.pos_encoding(feat_c0), 'n c h w -> n (h w) c')
        # feat_c1 = rearrange(self.pos_encoding(feat_c1), 'n c h w -> n (h w) c')
        feat_c0 = torch.flatten(self.pos_encoding(feat_c0), 2, 3).permute(0, 2, 1)
        feat_c1 = torch.flatten(self.pos_encoding(feat_c1), 2, 3).permute(0, 2, 1)
        feat_c0, feat_c1 = self.loftr_coarse(feat_c0, feat_c1)

        # 3. match coarse-level
        conf_matrix = self.coarse_matching(feat_c0, feat_c1)
        return conf_matrix

    def load_state_dict(self, state_dict, *args, **kwargs):
        for k in list(state_dict.keys()):
            if k.startswith('matcher.'):
                state_dict[k.replace('matcher.', '', 1)] = state_dict.pop(k)
        return super().load_state_dict(state_dict, *args, **kwargs)
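To make the rearrange replacement in forward concrete, here is a small sketch of the same index mapping, 'n c h w -> n (h w) c'. NumPy is used as a stand-in for torch so the snippet runs without a GPU stack; reshape plus transpose here plays the role of torch.flatten(x, 2, 3).permute(0, 2, 1), and the helper name to_sequence is made up for illustration.

```python
import numpy as np


def to_sequence(feat):
    """(N, C, H, W) -> (N, H*W, C): flatten the spatial dims, then move channels last."""
    n, c, h, w = feat.shape
    return feat.reshape(n, c, h * w).transpose(0, 2, 1)


# Toy feature map with N=2, C=3, H=4, W=5 (values are just running integers).
feat = np.arange(2 * 3 * 4 * 5).reshape(2, 3, 4, 5)
seq = to_sequence(feat)

# Token p = i * W + j holds the C-dimensional feature of pixel (row i, column j).
i, j = 2, 3
assert seq.shape == (2, 20, 3)
assert (seq[1, i * 5 + j] == feat[1, :, i, j]).all()
```

This row-major token order is also what the post-processing relies on when it turns a token index back into a pixel position.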

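2.2 Post-processing

Analysis of webcam.py in the LoFTR_TRT project shows that the model output has to go through the get_coarse_match function before mkpts0, mkpts1 and mconf can be extracted.

It is called as follows:

mkpts0, mkpts1, mconf = get_coarse_match(conf_matrix, img_size[1], img_size[0], loftr_coarse_resolution)

get_coarse_match is defined as follows. It has one important parameter, resolution, the downsampling factor of the coarse feature map; the inference script in Section 5.2 sets it to 8, matching _CN.RESOLUTION = (8, 2) in the export config. For a C++ deployment this code has to be ported to C++; NumCpp can serve as a reference for the port.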

def get_coarse_match(conf_matrix, input_height, input_width, resolution):
    """
    Predicts coarse matches from conf_matrix
    Args:
        resolution: image
        input_width:
        input_height:
        conf_matrix: [N, L, S]
    Returns:
        mkpts0_c: [M, 2]
        mkpts1_c: [M, 2]
        mconf: [M]
    """
    hw0_i = (input_height, input_width)
    hw0_c = (input_height // resolution, input_width // resolution)
    hw1_c = hw0_c  # input images have the same resolution
    feature_num = hw0_c[0] * hw0_c[1]

    # 3. find all valid coarse matches
    # this only works when at most one `True` in each row
    mask = conf_matrix
    all_j_ids = mask.argmax(axis=2)
    j_ids = all_j_ids.squeeze(0)
    b_ids = np.zeros_like(j_ids, dtype=np.long)
    i_ids = np.arange(feature_num, dtype=np.long)
    mconf = conf_matrix[b_ids, i_ids, j_ids]

    # 4. Update with matches in original image resolution
    scale = hw0_i[0] / hw0_c[0]
    mkpts0_c = np.stack(
        [i_ids % hw0_c[1], np.trunc(i_ids / hw0_c[1])],
        axis=1) * scale
    mkpts1_c = np.stack(
        [j_ids % hw1_c[1], np.trunc(j_ids / hw1_c[1])],
        axis=1) * scale

    return mkpts0_c, mkpts1_c, mconf
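For anyone porting this to C++, the core of step 4 is plain integer arithmetic: a coarse token index is split into a row and a column of the coarse grid and scaled back to pixels. A minimal pure-Python sketch of that mapping (the function name coarse_index_to_xy is hypothetical; the 40-cell width and scale 8 correspond to a 320x320 input with resolution 8):

```python
def coarse_index_to_xy(index, coarse_width, scale):
    """Map a flattened coarse-grid index to (x, y) pixel coordinates."""
    row, col = divmod(index, coarse_width)  # row = index // W_c, col = index % W_c
    return (col * scale, row * scale)       # x comes first, as in np.stack([...]) above


coarse_width, scale = 320 // 8, 8
print(coarse_index_to_xy(0, coarse_width, scale))     # (0, 0)
print(coarse_index_to_xy(41, coarse_width, scale))    # (8, 8)
print(coarse_index_to_xy(1599, coarse_width, scale))  # (312, 312)
```

The returned point is the top-left corner of the coarse cell, so the matches lie on a grid with a spacing of `scale` pixels; this is the precision that is lost by skipping the fine matching stage.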

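3. Model export

Install the dependencies:

pip install yacs

pip install pycuda  # required for running with TensorRT; can be skipped for onnxruntime

3.1 Export configuration

loftr\utils\cvpr_ds_config.py holds the parameters of the exported model, mainly the image width and height, BORDER_RM, DSMAX_TEMPERATURE and so on.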

from yacs.config import CfgNode as CN


def lower_config(yacs_cfg):
    if not isinstance(yacs_cfg, CN):
        return yacs_cfg
    return {k.lower(): lower_config(v) for k, v in yacs_cfg.items()}


_CN = CN()
_CN.BACKBONE_TYPE = 'ResNetFPN'
_CN.RESOLUTION = (8, 2)  # options: [(8, 2)]
_CN.INPUT_WIDTH = 640
_CN.INPUT_HEIGHT = 480

# 1. LoFTR-backbone (local feature CNN) config
_CN.RESNETFPN = CN()
_CN.RESNETFPN.INITIAL_DIM = 128
_CN.RESNETFPN.BLOCK_DIMS = [128, 196, 256]  # s1, s2, s3

# 2. LoFTR-coarse module config
_CN.COARSE = CN()
_CN.COARSE.D_MODEL = 256
_CN.COARSE.D_FFN = 256
_CN.COARSE.NHEAD = 8
_CN.COARSE.LAYER_NAMES = ['self', 'cross'] * 4
_CN.COARSE.TEMP_BUG_FIX = False

# 3. Coarse-Matching config
_CN.MATCH_COARSE = CN()
_CN.MATCH_COARSE.BORDER_RM = 2
_CN.MATCH_COARSE.DSMAX_TEMPERATURE = 0.1

default_cfg = lower_config(_CN)
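The input width and height in this config fix the size of the exported model's output. A rough sketch of the relationship, assuming the coarse stride is the first entry of _CN.RESOLUTION (8) and that conf_matrix has shape [N, L, S] with L = S = number of coarse cells, as the get_coarse_match docstring suggests (the helper coarse_grid is made up for illustration):

```python
def coarse_grid(input_height, input_width, coarse_stride=8):
    """Return (rows, cols, cells) of the coarse feature grid for a given input size."""
    rows = input_height // coarse_stride
    cols = input_width // coarse_stride
    return rows, cols, rows * cols


# The three input sizes used in this post, given as (width, height).
for width, height in [(640, 480), (320, 240), (320, 320)]:
    rows, cols, cells = coarse_grid(height, width)
    print(f"{width}x{height}: coarse grid {cols}x{rows}, conf_matrix (1, {cells}, {cells})")
```

Since the matrix has cells x cells entries, halving both image dimensions shrinks it by a factor of 16, which is one reason the smaller inputs used later run noticeably faster.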

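3.2 Export the ONNX model

Run export_onnx.py to export the model. Change the weights argument to choose which pretrained weights to export; setting default=True on the prune argument exports a pruned model.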

import argparse
from loftr import LoFTR, default_cfg
import torch
import torch.nn.utils.prune as prune


def main():
    parser = argparse.ArgumentParser(description='LoFTR demo.')
    parser.add_argument('--weights', type=str, default='weights/outdoor_ds.ckpt',
                        help='Path to network weights.')
    parser.add_argument('--device', type=str, default='cuda',
                        help='cpu or cuda')
    parser.add_argument('--prune', default=False, help='Do unstructured pruning')

    opt = parser.parse_args()
    print(opt)

    device = torch.device(opt.device)

    print('Loading pre-trained network...')
    model = LoFTR(config=default_cfg)
    checkpoint = torch.load(opt.weights)
    if checkpoint is not None:
        missed_keys, unexpected_keys = model.load_state_dict(checkpoint['state_dict'], strict=False)
        if len(missed_keys) > 0:
            print('Checkpoint is broken')
            return 1
        print('Successfully loaded pre-trained weights.')
    else:
        print('Failed to load checkpoint')
        return 1

    if opt.prune:
        print('Model pruning')
        for name, module in model.named_modules():
            # prune connections in all 2D-conv layers
            if isinstance(module, torch.nn.Conv2d):
                prune.l1_unstructured(module, name='weight', amount=0.5)
                prune.remove(module, 'weight')
            # prune connections in all linear layers
            elif isinstance(module, torch.nn.Linear):
                prune.l1_unstructured(module, name='weight', amount=0.5)
                prune.remove(module, 'weight')

        weight_total_sum = 0
        weight_total_num = 0
        for name, module in model.named_modules():
            # count zeroed and total weights over all 2D-conv and linear layers
            # (the listing as posted counted zeros only for conv layers and
            # totals only for linear layers, which skews the reported ratio)
            if isinstance(module, (torch.nn.Conv2d, torch.nn.Linear)):
                weight_total_sum += torch.sum(module.weight == 0)
                weight_total_num += module.weight.nelement()
        print(f'Global sparsity: {100. * weight_total_sum / weight_total_num:.2f}')

    print(f'Moving model to device: {device}')
    model = model.eval().to(device=device)

    with torch.no_grad():
        dummy_image = torch.randn(1, 1, default_cfg['input_height'], default_cfg['input_width'], device=device)
        torch.onnx.export(model, (dummy_image, dummy_image), 'loftr.onnx', verbose=True, opset_version=11)


if __name__ == "__main__":
    main()

After the script runs successfully, loftr.onnx is generated in the project root.
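One detail about the prune option: it is declared without a type or action, so any value passed on the command line (even the string False) arrives as a non-empty string and is treated as true. That is presumably why the post edits the default instead. A minimal sketch of an alternative definition, assuming you are free to change the argument (this is not how the LoFTR_TRT script is written):

```python
import argparse


def build_parser():
    parser = argparse.ArgumentParser(description='LoFTR export (sketch).')
    parser.add_argument('--weights', type=str, default='weights/outdoor_ds.ckpt',
                        help='Path to network weights.')
    # store_true makes --prune a real on/off switch: absent -> False, present -> True
    parser.add_argument('--prune', action='store_true', help='Do unstructured pruning')
    return parser


print(build_parser().parse_args([]).prune)           # False
print(build_parser().parse_args(['--prune']).prune)  # True
```

With this definition the pruned export would be requested as `python export_onnx.py --prune`, without touching the source.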

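3.3 Convert the ONNX model to a trt model

First open the exported ONNX model in https://netron.app/ to inspect it; it turns out to be a static model (fixed input shapes).

Conversion command: trtexec --onnx=loftr.onnx --workspace=4096 --saveEngine=loftr.trt --fp16

The result is shown below; loftr.trt is generated.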
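Because the model is static, the conversion has to be repeated every time the export resolution changes. A small sketch for scripting that step from Python; it only assembles the same command shown above (the function name build_trtexec_command is hypothetical, and actually running it assumes trtexec is on PATH):

```python
import shlex


def build_trtexec_command(onnx_path, engine_path, workspace_mb=4096, fp16=True):
    """Assemble the trtexec argument list used in this post."""
    cmd = [
        "trtexec",
        f"--onnx={onnx_path}",
        f"--workspace={workspace_mb}",
        f"--saveEngine={engine_path}",
    ]
    if fp16:
        cmd.append("--fp16")
    return cmd


cmd = build_trtexec_command("loftr.onnx", "loftr.trt")
print(shlex.join(cmd))
# To run the conversion for real:
#   import subprocess; subprocess.run(cmd, check=True)
```

Passing the arguments as a list rather than a single string avoids quoting problems when the model path contains spaces.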

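4. Running the model

4.1 Required changes

Change 1: in utils.py, replace np.long with np.int64 (np.long no longer exists in recent NumPy releases), i.e. change lines 30 and 31 to the following: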

b_ids = np.zeros_like(j_ids, dtype=np.int64)

i_ids = np.arange(feature_num, dtype=np.int64)

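If the machine has no camera, the following additional changes are needed.

Change 1: in webcam.py, change the type of the camid argument to str and set its default to a video file you have prepared.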

def main():
    parser = argparse.ArgumentParser(description='LoFTR demo.')
    parser.add_argument('--weights', type=str, default='weights/outdoor_ds.ckpt',
                        help='Path to network weights.')
    # parser.add_argument('--camid', type=int, default=0,
    #                     help='OpenCV webcam video capture ID, usually 0 or 1.')
    parser.add_argument('--camid', type=str, default=r"C:\Users\Administrator\Videos\风景视频素材分享_202477135455.mp4",
                        help='OpenCV webcam video capture ID, usually 0 or 1.')

Change 2: modify __init__ in camera.py as follows so that it can also read video files.

class Camera(object):
    def __init__(self, index):
        if isinstance(index, int):  # open a camera stream
            self.cap = cv2.VideoCapture(index, cv2.CAP_V4L2)
        else:  # open a video file
            self.cap = cv2.VideoCapture(index)
        if not self.cap.isOpened():
            print('Failed to open camera {0}'.format(index))
            exit(-1)
        # self.cap.set(cv2.CAP_PROP_FRAME_WIDTH, 1920)
        # self.cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 1080)
        # initialise the shared state before the reader thread starts,
        # so the thread's first frame is not overwritten with None
        self.status = False
        self.frame = None
        self.thread = Thread(target=self.update, args=())
        self.thread.daemon = True
        self.thread.start()
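With camid declared as str, a camera index typed on the command line (for example 0) reaches Camera as the string "0" and no longer takes the isinstance(index, int) branch. A small sketch of a converter that keeps both uses working; the name parse_capture_source is hypothetical and not part of LoFTR_TRT:

```python
def parse_capture_source(value):
    """Return an int camera index for digit-only input, otherwise the path unchanged."""
    text = str(value).strip()
    return int(text) if text.isdigit() else text


print(parse_capture_source("0"))          # 0
print(parse_capture_source("demo.mp4"))   # demo.mp4
```

argparse accepts any callable as type, so this could be wired in as `parser.add_argument('--camid', type=parse_capture_source, default=0)`.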

Change the inference resolution to 320x240: set img_size in webcam.py to (320, 240) and update the corresponding settings in loftr\utils\cvpr_ds_config.py:

_CN.INPUT_WIDTH = 320

_CN.INPUT_HEIGHT = 240

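Then run webcam.py. The frame rate is about 20 fps on a 3060 GPU. This agrees with the analysis in https://hpg123.blog.csdn.net/article/details/140235431, where one image takes about 40 ms to process.

4.2 Running the torch model

Run webcam.py directly; the frame rate is about 5 fps.

4.3 Running the trt model

Edit the trt option in webcam.py and give it the default value loftr.trt, as shown in the last line of the code below.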

def main():
    parser = argparse.ArgumentParser(description='LoFTR demo.')
    parser.add_argument('--weights', type=str, default='weights/outdoor_ds.ckpt',
                        help='Path to network weights.')
    # parser.add_argument('--camid', type=int, default=0,
    #                     help='OpenCV webcam video capture ID, usually 0 or 1.')
    parser.add_argument('--camid', type=str, default=r"C:\Users\Administrator\Videos\风景视频素材分享_202477135455.mp4",
                        help='OpenCV webcam video capture ID, usually 0 or 1.')
    parser.add_argument('--device', type=str, default='cuda',
                        help='cpu or cuda')
    parser.add_argument('--trt', type=str, default='loftr.trt', help='TensorRT model engine path')

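The result is shown below: the frame rate is only about 2 fps, which is lower than running with torch.

5. ONNX model inference

Section 4 used the original author's inference code; here inference is implemented based on the code in https://hpg123.blog.csdn.net/article/details/137381647.

5.1 Dependencies and preparation

Step 1:

Save the code from the post https://hpg123.blog.csdn.net/article/details/124824892 as imgutils.py

Step 2:

Change the width and height in cvpr_ds_config.py to 320x320, then run export_onnx.py again to export the ONNX model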

_CN.INPUT_WIDTH = 320

_CN.INPUT_HEIGHT = 320

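The structure of the resulting model is shown below.

5.2 Running the code

Save the following code as onnx_infer.py. A few lines only measure the run time and can be deleted.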

from imgutils import *
import onnxruntime as ort
import numpy as np
import cv2
import time


def get_coarse_match(conf_matrix, input_height, input_width, resolution):
    """
    Predicts coarse matches from conf_matrix
    Args:
        resolution: image
        input_width:
        input_height:
        conf_matrix: [N, L, S]
    Returns:
        mkpts0_c: [M, 2]
        mkpts1_c: [M, 2]
        mconf: [M]
    """
    hw0_i = (input_height, input_width)
    hw0_c = (input_height // resolution, input_width // resolution)
    hw1_c = hw0_c  # input images have the same resolution
    feature_num = hw0_c[0] * hw0_c[1]

    # 3. find all valid coarse matches
    # this only works when at most one `True` in each row
    mask = conf_matrix
    all_j_ids = mask.argmax(axis=2)
    j_ids = all_j_ids.squeeze(0)
    b_ids = np.zeros_like(j_ids, dtype=np.int64)
    i_ids = np.arange(feature_num, dtype=np.int64)
    mconf = conf_matrix[b_ids, i_ids, j_ids]

    # 4. Update with matches in original image resolution
    scale = hw0_i[0] / hw0_c[0]
    mkpts0_c = np.stack(
        [i_ids % hw0_c[1], np.trunc(i_ids / hw0_c[1])],
        axis=1) * scale
    mkpts1_c = np.stack(
        [j_ids % hw1_c[1], np.trunc(j_ids / hw1_c[1])],
        axis=1) * scale

    return mkpts0_c, mkpts1_c, mconf


model_name = "loftr.onnx"
model = ort.InferenceSession(model_name, providers=['CUDAExecutionProvider'])
img_size = (320, 320)
loftr_coarse_resolution = 8
tensor2a, img2a = read_img_as_tensor_gray(r"C:\Users\Administrator\Pictures\t1.jpg", img_size, device='cpu')
tensor2b, img2b = read_img_as_tensor_gray(r"C:\Users\Administrator\Pictures\t2.jpg", img_size, device='cpu')
data = {'img0': tensor2a.numpy(), 'img1': tensor2b.numpy()}
conf_matrix = model.run(None, data)[0]
mkpts0, mkpts1, confidence = get_coarse_match(conf_matrix, img_size[1], img_size[0], loftr_coarse_resolution)

# ----------- measure the run time (optional) -----------
times = 10
st = time.time()
for i in range(times):
    conf_matrix = model.run(None, data)[0]
    mkpts0, mkpts1, confidence = get_coarse_match(conf_matrix, img_size[1], img_size[0], loftr_coarse_resolution)
et = time.time()
runtime = (et - st) / times
print(f"{img_size} inference time per image pair: {runtime:.4f} s")

# myimshows([img2a, img2b], size=12)

# ----------- draw the matches on a side-by-side canvas -----------
pt_num = mkpts0.shape[0]
im_dst, im_res = img2a, img2b
gap = 10  # blank columns between the two images
img = np.zeros((max(im_dst.shape[0], im_res.shape[0]), im_dst.shape[1] + im_res.shape[1] + gap, 3))
# index columns by width (shape[1]); the posted version used shape[0], which is only right for square images
img[:im_dst.shape[0], :im_dst.shape[1]] = im_dst
img[:im_res.shape[0], -im_res.shape[1]:] = im_res
img = img.astype(np.uint8)
x_offset = im_dst.shape[1] + gap  # column where the right-hand image starts
match_threshold = 0.6
for i in range(0, pt_num):
    if confidence[i] > match_threshold:
        pt0 = mkpts0[i].astype(np.int32)
        pt1 = mkpts1[i].astype(np.int32)
        # cv2.circle(img, (int(pt0[0]), int(pt0[1])), 1, (0, 0, 255), 2)
        # cv2.circle(img, (int(pt1[0]) + x_offset, int(pt1[1])), 1, (0, 0, 255), 2)
        cv2.line(img, (int(pt0[0]), int(pt0[1])), (int(pt1[0]) + x_offset, int(pt1[1])), (0, 255, 0), 1)
myimshow(img, size=12)


def getGoodMatchPoint(mkpts0, mkpts1, confidence, match_threshold: float = 0.5):
    print(mkpts0.shape, mkpts1.shape)
    n = min(mkpts0.shape[0], mkpts1.shape[0])
    srcImage1_matchedKPs, srcImage2_matchedKPs = [], []
    if match_threshold > 1 or match_threshold < 0:
        print("match_threshold error!")
    for i in range(n):
        kp0 = mkpts0[i]
        kp1 = mkpts1[i]
        pt0 = (kp0[0].item(), kp0[1].item())
        pt1 = (kp1[0].item(), kp1[1].item())
        c = confidence[i].item()
        if c > match_threshold:
            srcImage1_matchedKPs.append(pt0)
            srcImage2_matchedKPs.append(pt1)
    return np.array(srcImage1_matchedKPs), np.array(srcImage2_matchedKPs)


pts_src, pts_dst = getGoodMatchPoint(mkpts0, mkpts1, confidence)
h1, status = cv2.findHomography(pts_src, pts_dst, cv2.RANSAC, 8)
im_out1 = cv2.warpPerspective(im_dst, h1, (im_dst.shape[1], im_dst.shape[0]))
# pass the inverse-map flag (value 16) by keyword: the 4th positional argument of warpPerspective is dst, not flags
im_out2 = cv2.warpPerspective(im_res, h1, (im_dst.shape[1], im_dst.shape[0]),
                              flags=cv2.INTER_LINEAR | cv2.WARP_INVERSE_MAP)
# at this point im_res and im_out1 are strictly registered (aligned)
myimshowsCL([im_dst, im_out1, im_res, im_out2], rows=2, cols=2, size=6)
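The per-point loop in getGoodMatchPoint can also be written as a single boolean mask, which is easier to port and faster for large match counts. A minimal sketch, assuming mkpts0 and mkpts1 are [M, 2] arrays and confidence is an [M] array as returned by get_coarse_match (filter_matches is a made-up name and the demo values are invented, not model output):

```python
import numpy as np


def filter_matches(mkpts0, mkpts1, confidence, match_threshold=0.5):
    """Keep only the point pairs whose confidence exceeds the threshold."""
    if not 0.0 <= match_threshold <= 1.0:
        raise ValueError("match_threshold must be in [0, 1]")
    keep = confidence > match_threshold  # boolean mask of shape [M]
    return mkpts0[keep], mkpts1[keep]


# Invented demo data: three candidate matches, two of them confident.
demo_pts0 = np.array([[0.0, 0.0], [8.0, 16.0], [24.0, 8.0]])
demo_pts1 = np.array([[8.0, 0.0], [16.0, 16.0], [32.0, 8.0]])
demo_conf = np.array([0.9, 0.2, 0.7])
src, dst = filter_matches(demo_pts0, demo_pts1, demo_conf)
print(src.shape, dst.shape)  # (2, 2) (2, 2)
```

cv2.findHomography needs at least four point pairs, so it is worth checking `len(src) >= 4` before calling it on the filtered result.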

The project directory now looks as shown below; the files in the red boxes are the ones this script depends on.

5.3 Results

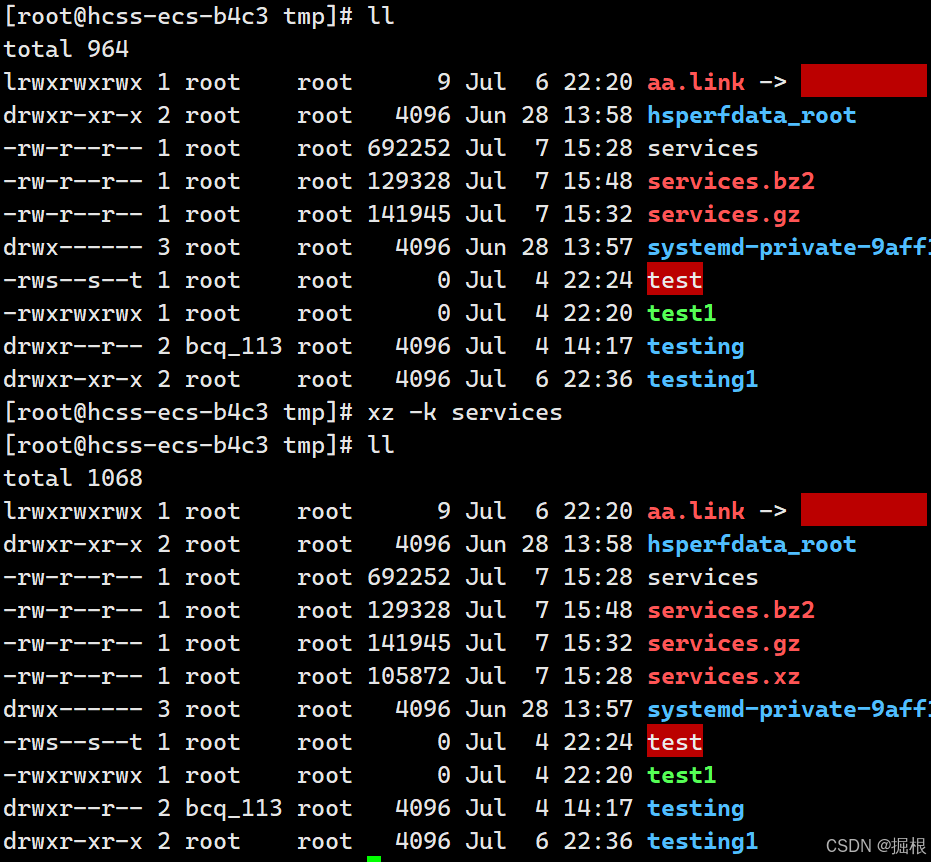

The timing output is shown below: about 30 ms per image pair (3060 GPU, cuda12, ort17.1 [self-compiled]).
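When reproducing this number, it helps to separate warm-up calls from the timed ones, since the first calls on a GPU session usually include one-off initialization. A small stdlib-only sketch of such a timer (time_per_call is a hypothetical helper; the workload in the demo is a placeholder, not the model):

```python
import time


def time_per_call(fn, warmup=3, repeats=10):
    """Average wall-clock seconds per call of fn, excluding warm-up calls."""
    for _ in range(warmup):
        fn()
    start = time.perf_counter()
    for _ in range(repeats):
        fn()
    return (time.perf_counter() - start) / repeats


# Placeholder workload standing in for model.run(...)
seconds = time_per_call(lambda: sum(range(10000)))
print(f"{seconds * 1000:.3f} ms per call")
```

In onnx_infer.py the same helper could wrap the inference step, for example `time_per_call(lambda: model.run(None, data))`.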

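The point matches are shown below. Near and far parts of the scene produce different groups of matches; for data like this, good image stitching or overlap extraction is not possible.

The overlap extraction result is shown below.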