1. Introduction

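SAHI (Slicing Aided Hyper Inference) improves the detection of small objects by slicing an image into smaller tiles and running the detector on each tile. SAHI currently supports YOLOv5, YOLOv8, MMDetection, Detectron2 and other frameworks. This post experiments with making SAHI work with YOLOv10 by loading the model in ONNX format.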
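The slicing idea can be sketched in a few lines. The function below is a hypothetical, simplified helper (compute_slice_boxes is not a SAHI API; SAHI's own slicing logic lives in its sahi.slicing module). It tiles an image with fixed-size windows and a fractional overlap, and shifts the last row and column of windows back inside the image instead of letting them overrun the border.

```python
from typing import List, Tuple


def compute_slice_boxes(
    image_h: int, image_w: int, slice_h: int, slice_w: int,
    overlap_h_ratio: float, overlap_w_ratio: float,
) -> List[Tuple[int, int, int, int]]:
    """Return (x1, y1, x2, y2) tiles covering the whole image (hypothetical helper)."""
    # Distance between the top-left corners of neighbouring tiles
    step_y = max(1, int(slice_h * (1 - overlap_h_ratio)))
    step_x = max(1, int(slice_w * (1 - overlap_w_ratio)))
    boxes = []
    y = 0
    while True:
        # Clamp the row so the tile stays inside the image (or starts at 0 if the image is small)
        y1 = max(0, min(y, image_h - slice_h))
        x = 0
        while True:
            x1 = max(0, min(x, image_w - slice_w))
            boxes.append((x1, y1, min(x1 + slice_w, image_w), min(y1 + slice_h, image_h)))
            if x + slice_w >= image_w:  # this tile already reaches the right border
                break
            x += step_x
        if y + slice_h >= image_h:  # this row already reaches the bottom border
            break
        y += step_y
    return boxes


if __name__ == "__main__":
    print(compute_slice_boxes(1000, 1000, 640, 640, 0.05, 0.05))
    # → [(0, 0, 640, 640), (360, 0, 1000, 640), (0, 360, 640, 1000), (360, 360, 1000, 1000)]
```

Shifting the border tiles inward keeps every tile at the full slice size, so the detector always sees inputs of the size it was trained on; the cost is a larger overlap at the right and bottom edges.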

2. Code

(1) Convert the model

First, create a virtual environment with conda, set up the YOLOv10 environment in it, and then install SAHI with pip:

```bash
pip install sahi
```

Next, export the YOLOv10 model to an ONNX file: in a terminal, cd to the directory that contains the model file and run:

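```bash
yolo export model=vitrolite_best.pt format=onnx opset=11 simplify
```

If the export succeeds, an ONNX file with the same base name (vitrolite_best.onnx) is written to the same directory.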
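Before wiring the model into SAHI, it can help to confirm the exported file's input and output shapes. The sketch below assumes onnxruntime is installed and the file is named vitrolite_best.onnx; summarize_io is a hypothetical helper that only reads the session's get_inputs()/get_outputs() metadata. For a YOLOv10 export at the default 640 size, the output would be expected to look like (1, 300, 6), which is the layout the modified post-processing in step (3) assumes.

```python
from typing import Dict, List, Tuple


def summarize_io(session) -> Dict[str, List[Tuple]]:
    """Collect input/output shapes from an onnxruntime.InferenceSession
    (or any object exposing get_inputs()/get_outputs() with a .shape attribute)."""
    return {
        "inputs": [tuple(node.shape) for node in session.get_inputs()],
        "outputs": [tuple(node.shape) for node in session.get_outputs()],
    }


# Usage (assumes onnxruntime, or onnxruntime-gpu, is installed and the file exists):
#   import onnxruntime as ort
#   sess = ort.InferenceSession("vitrolite_best.onnx", providers=["CPUExecutionProvider"])
#   print(summarize_io(sess))
```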

(2) Inference code that loads the ONNX model

This code is adapted from SAHI's demo notebook inference_for_yolov8_onnx.ipynb. The slice size and the overlap ratios are configurable.
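With a nonzero overlap (here 640 × 0.05 = 32 pixels between neighbouring slices), an object lying in the overlap band can be detected by two slices. After running the model on every slice, get_sliced_prediction shifts each slice's boxes by the slice's top-left corner into full-image coordinates and merges the duplicates; in recent SAHI versions its default merge is GREEDYNMM with an IOS match metric. The sketch below is a simplified stand-in that swaps in plain IoU-based NMS; shift_boxes and nms are hypothetical helpers, not SAHI functions.

```python
from typing import List, Tuple

import numpy as np


def shift_boxes(boxes: np.ndarray, offset_xy: Tuple[int, int]) -> np.ndarray:
    """Move slice-local (x1, y1, x2, y2) boxes into full-image coordinates."""
    ox, oy = offset_xy
    return boxes + np.array([ox, oy, ox, oy], dtype=boxes.dtype)


def nms(boxes: np.ndarray, scores: np.ndarray, iou_thr: float = 0.5) -> List[int]:
    """Greedy IoU-based non-maximum suppression; returns indices of the kept boxes."""
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    order = np.argsort(scores)[::-1]  # highest score first
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # Intersection of the current best box with every remaining box
        xx1 = np.maximum(boxes[i, 0], boxes[rest, 0])
        yy1 = np.maximum(boxes[i, 1], boxes[rest, 1])
        xx2 = np.minimum(boxes[i, 2], boxes[rest, 2])
        yy2 = np.minimum(boxes[i, 3], boxes[rest, 3])
        inter = np.clip(xx2 - xx1, 0, None) * np.clip(yy2 - yy1, 0, None)
        iou = inter / (areas[i] + areas[rest] - inter)
        order = rest[iou <= iou_thr]  # drop boxes that overlap the kept one too much
    return keep


if __name__ == "__main__":
    # The same defect seen by a slice at (0, 0) and by its neighbour at (608, 0)
    a = shift_boxes(np.array([[610, 100, 630, 130]], dtype=np.float32), (0, 0))
    b = shift_boxes(np.array([[3, 101, 23, 131]], dtype=np.float32), (608, 0))
    c = np.array([[100, 100, 120, 120]], dtype=np.float32)  # an unrelated detection
    print(nms(np.vstack([a, b, c]), np.array([0.9, 0.8, 0.5])))  # → [0, 2]
```

Plain NMS discards the weaker duplicate, whereas SAHI's greedy NMM merges matched boxes; the IOS metric also catches a small partial box from a slice border that sits inside a larger one, which IoU can miss.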

```python
from sahi import AutoDetectionModel
from sahi.utils.cv import read_image
from sahi.predict import get_prediction, get_sliced_prediction

if __name__ == '__main__':
    yolov8_onnx_model_path = "runs\\detect\\train_v102\\weights\\vitrolite_best.onnx"  # path to your own ONNX model file
    # yolov8_onnx_model_path = "D:\\Project\\yolov8\\weights\\yolov8x.onnx"

    category_mapping = {'0': 'p0', '1': 'p1', '2': 'p2', '3': 'p3'}  # class id -> class name; replace with your own classes

    detection_model = AutoDetectionModel.from_pretrained(
        model_type='yolov8onnx',
        model_path=yolov8_onnx_model_path,
        confidence_threshold=0.3,
        category_mapping=category_mapping,
        device='cuda:0',  # run on the GPU here (CUDA needs onnxruntime-gpu); use 'cpu' otherwise
    )

    # img_path = "datasets\\vitrolite\\images\\val\\c144846_5_10.png"  # path of the image to run inference on
    # result = get_prediction(read_image(img_path), detection_model)  # the first GPU run is slow, so warm up once first

    # Sliced inference
    result = get_sliced_prediction(
        "D:\\Project\\vitroliteDefect\\tile_round1_train_20201231\\train_imgs\\197_2_t20201119084924170_CAM1.jpg",
        detection_model,
        slice_height=640,
        slice_width=640,
        overlap_height_ratio=0.05,
        overlap_width_ratio=0.05,
    )

    result.export_visuals(export_dir="demo_data/")
```

(3) Modify SAHI's model interface file

Find where pip installed SAHI (pip show sahi prints the install location) and edit sahi/models/yolov8onnx.py.
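The same lookup can be done from Python, run inside the conda environment that has SAHI installed. This is a minimal sketch; locate_sahi_yolov8onnx is a hypothetical helper, and it assumes the file sits at sahi/models/yolov8onnx.py, which is where SAHI versions that ship the yolov8onnx model type keep it.

```python
import importlib.util
import os
from typing import Optional


def locate_sahi_yolov8onnx() -> Optional[str]:
    """Return the path of sahi/models/yolov8onnx.py in this environment, or None."""
    spec = importlib.util.find_spec("sahi")  # locates the package without importing it
    if spec is None or not spec.submodule_search_locations:
        return None  # sahi is not installed here
    package_dir = list(spec.submodule_search_locations)[0]
    path = os.path.join(package_dir, "models", "yolov8onnx.py")
    return path if os.path.isfile(path) else None  # None if this SAHI version lacks the file


if __name__ == "__main__":
    print(locate_sahi_yolov8onnx())
```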

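Modify the _post_process function. This is needed mainly because YOLOv8 and YOLOv10 produce outputs of different shapes: the YOLOv8 export outputs (1, 4 + number of classes, 8400) candidate boxes at a 640 input, which still have to go through NMS, while YOLOv10 is NMS-free and outputs (1, 300, 6) final detections.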
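The layout difference can be illustrated on small synthetic arrays. decode_yolov8 and decode_yolov10 are hypothetical helpers, not SAHI functions. The YOLOv8 branch follows the steps of SAHI's original post-processing (transpose, best class score from column 4 onward, center-format boxes converted to corners, NMS still required afterwards); the YOLOv10 branch assumes each row is x1, y1, x2, y2, score, class_id, as the modified code treats it. A real YOLOv10 output has 300 rows; the example uses 3.

```python
import numpy as np


def decode_yolov8(output: np.ndarray, conf_thr: float):
    """(1, 4 + nc, N) -> xyxy boxes, scores, class ids. NMS is still required afterwards."""
    preds = np.squeeze(output, axis=0).T  # (N, 4 + nc): one candidate per row
    cls_scores = preds[:, 4:]
    scores = cls_scores.max(axis=1)  # best class score per candidate
    keep = scores > conf_thr
    preds, scores = preds[keep], scores[keep]
    class_ids = cls_scores[keep].argmax(axis=1)
    cx, cy, w, h = preds[:, 0], preds[:, 1], preds[:, 2], preds[:, 3]
    boxes = np.stack([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2], axis=1)  # xywh -> xyxy
    return boxes, scores, class_ids


def decode_yolov10(output: np.ndarray, conf_thr: float):
    """(1, 300, 6) -> xyxy boxes, scores, class ids. Already NMS-free: just threshold."""
    preds = np.squeeze(output, axis=0)  # (300, 6), no transpose needed
    preds = preds[preds[:, 4] > conf_thr]
    return preds[:, :4], preds[:, 4], preds[:, 5].astype(np.int32)


if __name__ == "__main__":
    # Same two detections expressed in both layouts (2 classes, 3 candidates)
    v8 = np.array([[50, 50, 20, 10, 0.9, 0.1],
                   [10, 10, 4, 4, 0.1, 0.2],
                   [100, 80, 10, 10, 0.2, 0.7]]).T[None]  # (1, 6, 3)
    v10 = np.array([[[40, 45, 60, 55, 0.9, 0],
                     [1, 1, 2, 2, 0.1, 3],
                     [95, 75, 105, 85, 0.7, 1]]])  # (1, 3, 6)
    print(decode_yolov8(v8, 0.3)[0])
    print(decode_yolov10(v10, 0.3)[0])
```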

```python
# Method of the ONNX detection model class in sahi/models/yolov8onnx.py.
# np, torch, Tuple and List are already imported at the top of that file.
def _post_process(
    self, outputs: np.ndarray, input_shape: Tuple[int, int], image_shape: Tuple[int, int]
) -> List[torch.Tensor]:
    image_h, image_w = image_shape
    input_w, input_h = input_shape

    # YOLOv10 output is (1, 300, 6) -> (300, 6) after squeeze; no .T transpose needed.
    # Each row: x1, y1, x2, y2, score, class_id
    predictions = np.squeeze(outputs[0])

    # Changes start here (by zjy); self.confidence_threshold is 0.3 in the inference script
    scores = predictions[:, 4]  # 5th column (index 4) is the confidence score
    keep = scores > self.confidence_threshold
    predictions = predictions[keep, :]
    scores = scores[keep]

    # Boxes are in model-input pixels; rescale them to the size of the image passed in.
    # The factor is 1 when slice size == model input size (640 here), but get_sliced_prediction
    # also runs a full-image pass by default (perform_standard_pred=True), which needs this scaling.
    boxes = predictions[:, :4] / np.array([input_w, input_h, input_w, input_h], dtype=np.float32)
    boxes *= np.array([image_w, image_h, image_w, image_h], dtype=np.float32)
    boxes = boxes.round().astype(np.int32)

    class_ids = predictions[:, 5].astype(np.int32)

    # Format the results (YOLOv10 is NMS-free, so no non-max suppression here)
    prediction_result = []
    for bbox, score, label in zip(boxes, scores, class_ids):
        bbox = bbox.tolist()
        cls_id = int(label)
        prediction_result.append([bbox[0], bbox[1], bbox[2], bbox[3], score, cls_id])

    """
    Original YOLOv8 post-processing, kept for reference (not executed):

    # Filter out object confidence scores below threshold
    scores = np.max(predictions[:, 4:], axis=1)
    predictions = predictions[scores > self.confidence_threshold, :]
    scores = scores[scores > self.confidence_threshold]
    class_ids = np.argmax(predictions[:, 4:], axis=1)
    boxes = predictions[:, :4]

    # Scale boxes to original dimensions
    input_shape = np.array([input_w, input_h, input_w, input_h])
    boxes = np.divide(boxes, input_shape, dtype=np.float32)
    boxes *= np.array([image_w, image_h, image_w, image_h])
    boxes = boxes.astype(np.int32)

    # Convert from xywh to xyxy
    boxes = xywh2xyxy(boxes).round().astype(np.int32)

    # Perform non-max suppression
    indices = non_max_supression(boxes, scores, self.iou_threshold)

    # Format the results
    prediction_result = []
    for bbox, score, label in zip(boxes[indices], scores[indices], class_ids[indices]):
        bbox = bbox.tolist()
        cls_id = int(label)
        prediction_result.append([bbox[0], bbox[1], bbox[2], bbox[3], score, cls_id])
    """

    prediction_result = [torch.tensor(prediction_result)]
    return prediction_result
```

3. Results

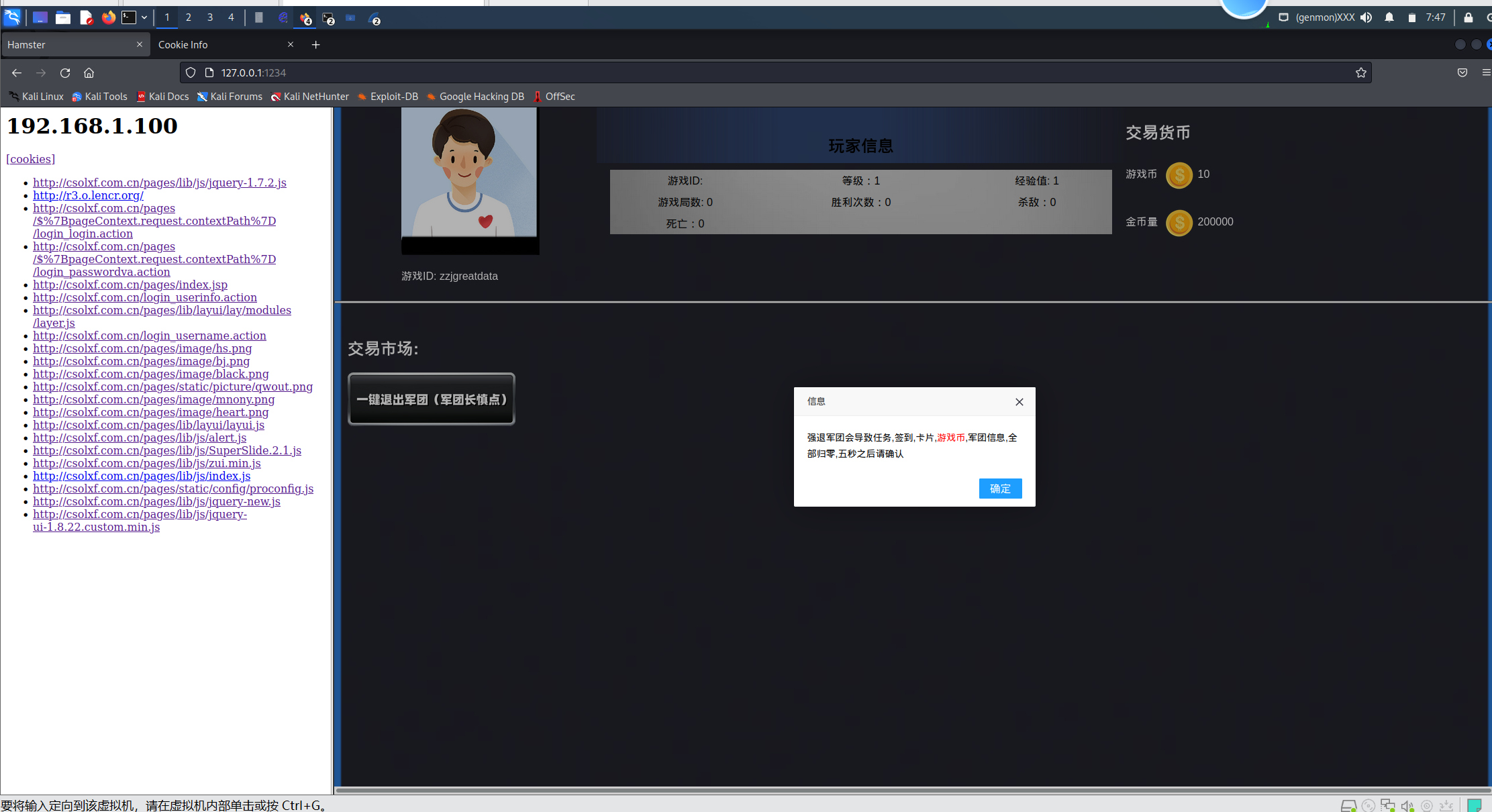

Running the inference code writes the result image to the demo_data folder. The test image is a high-resolution ceramic tile image.