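Contents

I. Model preparation

II. Modifying the structure

1. Add a layer to the network

2. Add a linear layer to the classifier node

3. Modify a layer in the network (using the features node as an example)

4. Replace a layer in the network (similar to point 3)

5. Get the number of input and output features of a fully connected layer

6. Delete network layers

7. Freeze a specific layer

8. Freeze in bulk (train only the last layer)

9. Check which layers are frozen and which are not

10. Load network weights

III. Loading a custom dataset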

I. Model preparation

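import torch
import torch.nn as nn
from torchvision import models

# Build VGG11 with randomly initialised weights (nothing is downloaded).
# On torchvision >= 0.13 `pretrained` is deprecated; use weights=None instead.
model = models.vgg11(pretrained=False)

View the model structure: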

print(model)

VGG(
  (features): Sequential(
    (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU(inplace=True)
    (2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (3): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (4): ReLU(inplace=True)
    (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (6): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (7): ReLU(inplace=True)
    (8): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (9): ReLU(inplace=True)
    (10): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (11): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (12): ReLU(inplace=True)
    (13): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (14): ReLU(inplace=True)
    (15): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (16): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (17): ReLU(inplace=True)
    (18): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (19): ReLU(inplace=True)
    (20): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (avgpool): AdaptiveAvgPool2d(output_size=(7, 7))
  (classifier): Sequential(
    (0): Linear(in_features=25088, out_features=4096, bias=True)
    (1): ReLU(inplace=True)
    (2): Dropout(p=0.5, inplace=False)
    (3): Linear(in_features=4096, out_features=4096, bias=True)
    (4): ReLU(inplace=True)
    (5): Dropout(p=0.5, inplace=False)
    (6): Linear(in_features=4096, out_features=1000, bias=True)
  )
)

II. Modifying the structure

1. Add a layer to the network

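# add_module appends the new layer to the end of the features Sequential, under the name 'last_layer'
model.features.add_module('last_layer', nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1))
print(model)

2. Add a linear layer to the classifier node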

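# The classifier ends with Linear(4096, 1000), so the appended layer takes 1000 inputs
model.classifier.add_module('Linear', nn.Linear(1000, 10))
print(model)

3. Modify a layer in the network (using the features node as an example)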

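# features[8] is Conv2d(256, 256, ...); the replacement must keep 256 input and 256 output channels
model.features[8] = nn.Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
print(model)

4. Replace a layer in the network (similar to point 3)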

Access the layer by its attribute name and assign a new module to it:

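import torch
import torch.nn as nn
from torchvision import models

# Create a ResNet-50 network
model = models.resnet50(num_classes=1000)
print(model)

# Replace the final fully connected layer with a 2-class output, keeping its input size
fc_in_features = model.fc.in_features  # 2048 for ResNet-50
model.fc = torch.nn.Linear(fc_in_features, 2, bias=True)

5. Get the number of input and output features of a fully connected layer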

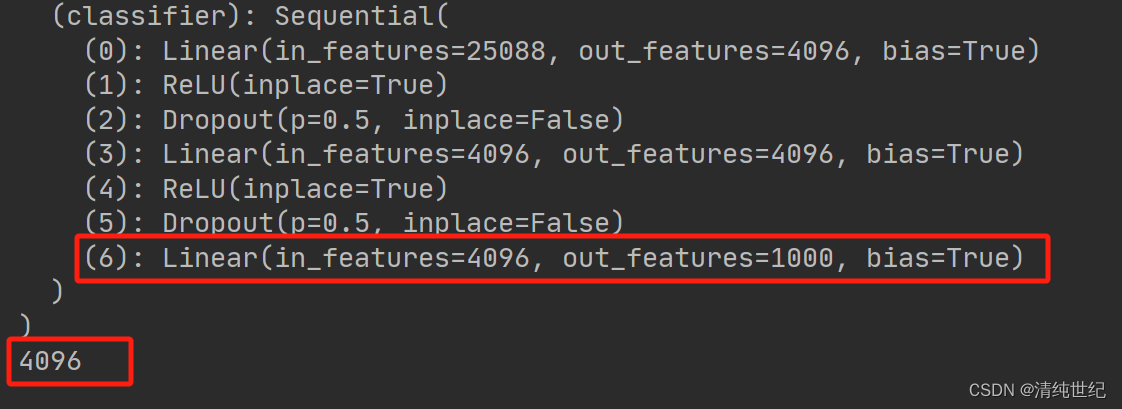

Check the number of inputs and outputs of the last fully connected layer:

import torch
import torch.nn as nn
from torchvision import models

model = models.vgg11(pretrained=False)
print(model)

print(model.classifier[6].in_features)   # 4096
print(model.classifier[6].out_features)  # 1000

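Other layers work the same way. Read the attribute named after the layer's constructor argument. For example, a fully connected (nn.Linear) layer has in_features and out_features, and an nn.Conv2d layer has in_channels and out_channels.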

6. Delete network layers

Replace the layer with an empty container, nn.Sequential(), which passes its input through unchanged:

model.classifier[6] = nn.Sequential()
print(model)

Alternatively, remove layers by slicing (here, the last 4 layers of features are removed):

model.features = nn.Sequential(*list(model.features.children())[:-4])

The same pattern applies to other nodes. Use model.classifier.children() to slice model.classifier, and model.features.children() to slice model.features.

7. Freeze a specific layer

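# Freeze the pretrained parameters of a specific layer
# (set the flag on the bias as well, otherwise the bias keeps training)
model.classifier[3].weight.requires_grad = False
model.classifier[3].bias.requires_grad = False

8. Freeze in bulk (train only the last layer)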

import torch

import torch.nn as nn

from torchvision import models

model = models.vgg11(pretrained=False)

print(model)

for param in model.parameters():

param.requires_grad_(False)

fc_in_features = model.classifier[6].in_features

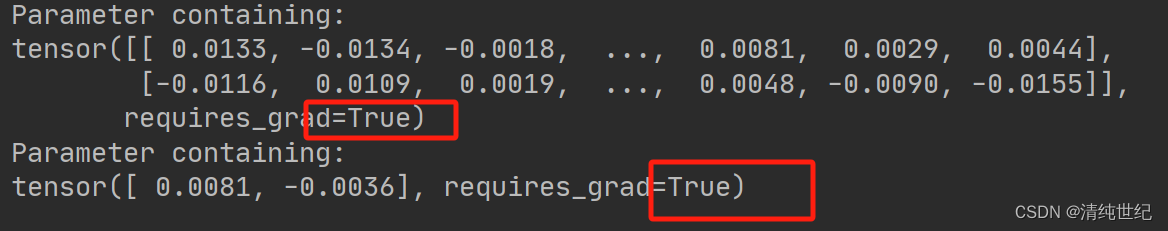

model.classifier[6] = nn.Linear(fc_in_features, 2, bias=True)9、查看哪些层冻结哪些没有

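# Print the name of every parameter that will still be updated during training
# (after step 8 this prints classifier.6.weight and classifier.6.bias)
for name, param in model.named_parameters():
    if param.requires_grad:
        print(name)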

10. Load network weights
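`load_state_dict` expects a dictionary produced by `state_dict()` on a model with the same layer names and shapes. The round-trip sketch below uses an in-memory buffer instead of a real file. It also uses a small hypothetical model (`make_model`) in place of VGG or ResNet.

```python
import io

import torch
import torch.nn as nn


def make_model(num_classes: int) -> nn.Module:
    # Hypothetical small model standing in for the real network
    return nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, num_classes))


src = make_model(10)
buffer = io.BytesIO()  # stands in for a file such as 'model.pth'
torch.save(src.state_dict(), buffer)
buffer.seek(0)  # rewind before reading

dst = make_model(10)
dst.load_state_dict(torch.load(buffer))  # keys and shapes match exactly
print(torch.equal(src[0].weight, dst[0].weight))  # True
```

If the weights were saved on a GPU and are loaded on a CPU-only machine, the usual fix is to pass `map_location='cpu'` to `torch.load`.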

model.load_state_dict(torch.load('model.pth'))  # load the network parameters

III. Loading a custom dataset

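from torch.utils.data import DataLoader, Dataset

class MyDataset(Dataset):
    def __init__(self, images, labels, transform=None):
        # Hypothetical arguments: samples, their class labels, an optional per-sample transform
        super(MyDataset, self).__init__()
        self.images = images
        self.labels = labels
        self.transform = transform

    def __len__(self):
        # Number of samples in the dataset
        return len(self.images)

    def __getitem__(self, idx):
        # Return the sample at position idx together with its class label
        image = self.images[idx]
        if self.transform is not None:
            image = self.transform(image)
        classes = self.labels[idx]
        return image, classes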
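After `__len__` and `__getitem__` are defined, `DataLoader` takes care of batching and shuffling. Here is a self-contained sketch in which a hypothetical dataset of random tensors (`RandomImages`) stands in for real images:

```python
import torch
from torch.utils.data import DataLoader, Dataset


class RandomImages(Dataset):
    """Hypothetical dataset: n random 3x32x32 'images' with labels 0..num_classes-1."""

    def __init__(self, n: int, num_classes: int = 2):
        super().__init__()
        self.images = torch.randn(n, 3, 32, 32)
        self.labels = torch.arange(n) % num_classes

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        return self.images[idx], self.labels[idx]


# 10 samples with batch_size=4 give batches of 4, 4 and 2
loader = DataLoader(RandomImages(10), batch_size=4, shuffle=True)
for images, labels in loader:
    print(images.shape, labels.shape)
```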