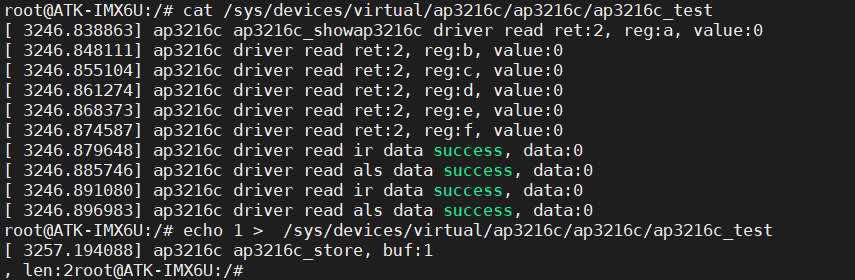

现象

某个查询ES接口慢调用告警,如图,接口P999的耗时都在2500ms:

基本耗时都在查询ES阶段:

场景与ES设定

慢调用接口为输入多个条件分页查询,慢调用接口调用的ES索引为 express_order_info,该索引通过DTS(数据同步服务)聚合了 订单服务的一张MySQL表 和 分班服务的一张MySQL表 的相关数据:

- 一个subClazzNumber (用户查询必填) :来自分班服务,辅导班编号;

- endIdx(查询必填,由后端自动填充):来自分班服务,班级权益相关,查询条件值固定等于-1;

- 一个userId(用户选填):来自分班服务,用户id;

- 一个orderNumber(用户选填):来自订单服务,订单编号

- 订单下单payTime(用户选填):来自订单服务,订单下单时间

ES版本:7.7.1,ES集群有3个节点。order_info索引有3个主分片,2个副本,已存有数据5亿条,每个主分片大小20G。mapping如下:

{

"express_order_info" : {

"mappings" : {

"properties" : {

...

"businessTime" : {

"type" : "date",

"format" : "yyyy-MM-dd HH:mm:ss||yyyy-MM-dd||epoch_millis"

},

"endIdx" : {

"type" : "integer"

},

"orderNumber" : {

"type" : "long"

},

"subClazzNumber" : {

"type" : "long"

},

"userAddressId" : {

"type" : "long"

},

"userId" : {

"type" : "long"

}

}

}

}

}

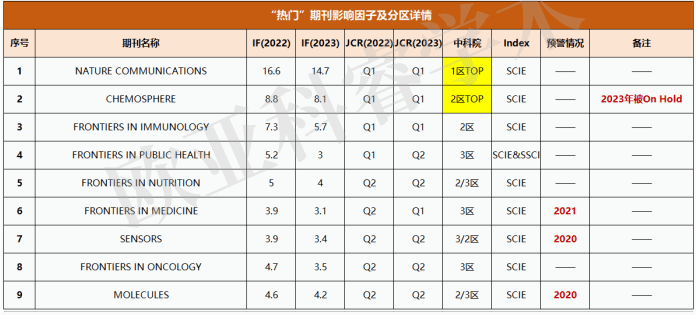

优化方案

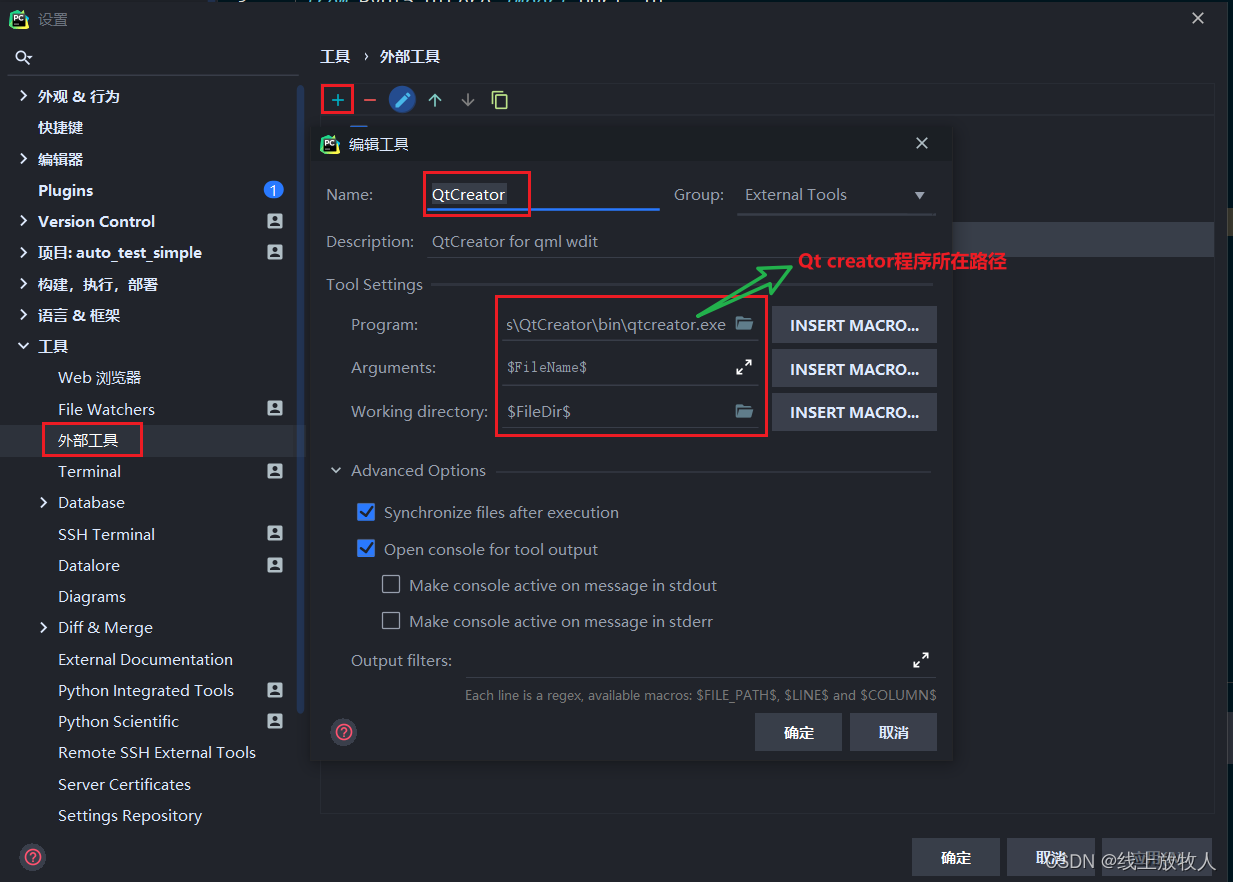

滚动索引方案

首先想到的是不是索引过大导致的查询效率低下。同时,组内订单服务使用的ES使用 Index Template + RollOver + Index Alias 方案稳定运行,该方案每个月会根据Index Template新建物理索引,并更新Index Alias指向该物理索引,所以有效避免了索引过大的情况,且使用别名上层调用无感知。

而慢查询的express_order_info没有任何措施预防索引膨胀问题,考虑新增滚动索引来解决该问题,整体方案如下:

滚动索引可以参考:ElasticSearch中使用Index Template、Index Alias和Rollover 维护索引

方案可参考:

- 使用索引别名和Rollover滚动创建索引

- Aliases API

- Index templates

- Rollover API

- Roll over an index alias with a write index

- Elasticsearch multindex performance

新增滚动索引涉及到 增量数据同步索引切换 和 存量数据的索引迁移。老索引数据量较大,迁移十分繁杂,也不好协调基础架构的同事;如果不迁移,仍然有查询瓶颈,因为一个索引别名对应多个物理索引时,ES会对每个物理索引都查一遍,只能达到不让慢查询变得更慢的程度。

Feign接口调用替换ES

由于ES存储字段较少,查询不复杂,考虑使用Feign直接调用服务接口,在内存中聚合,但发现不行,无法支持根据订单下单时间进行筛选查询(分班服务没存下单时间),不考虑改方案。

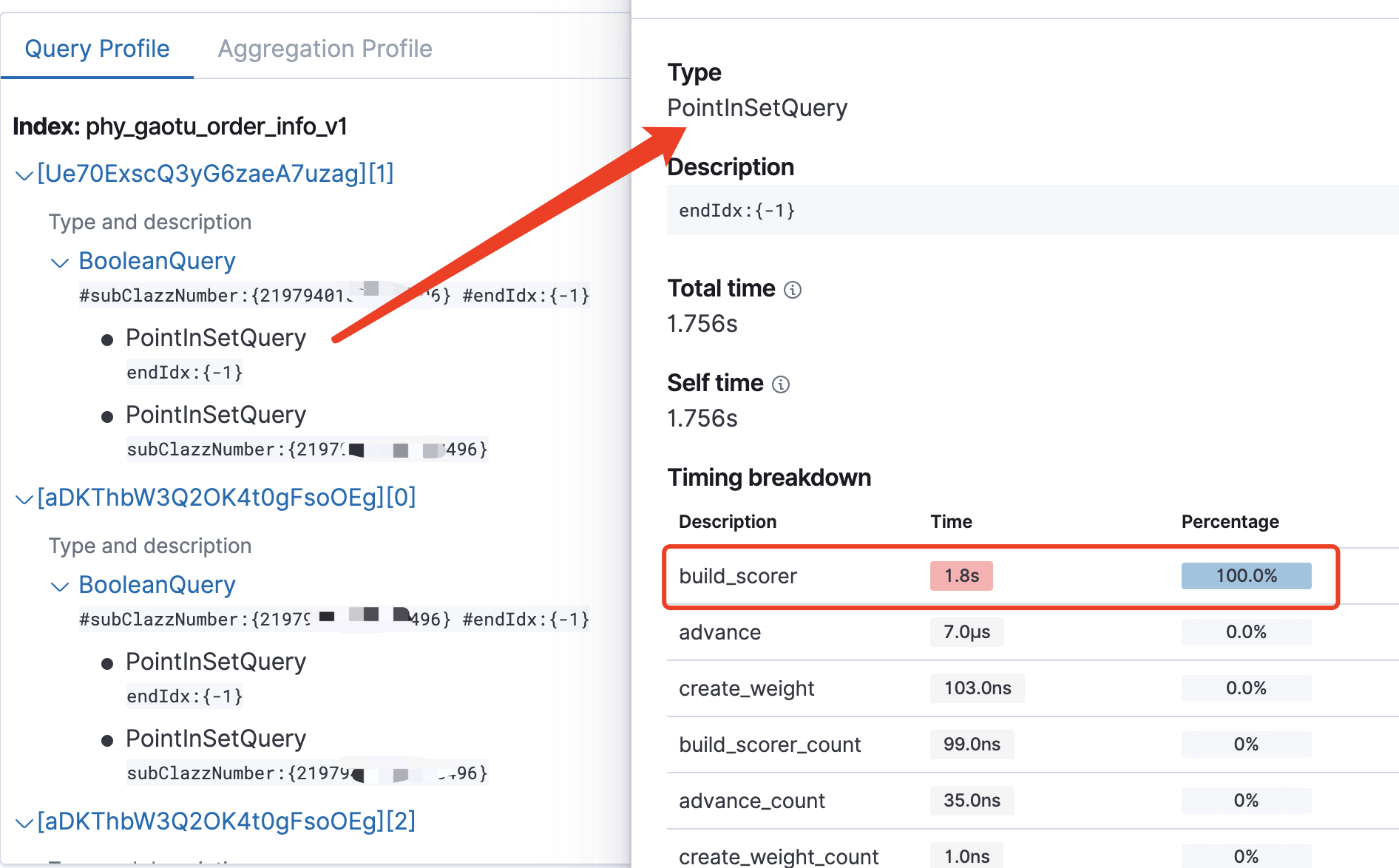

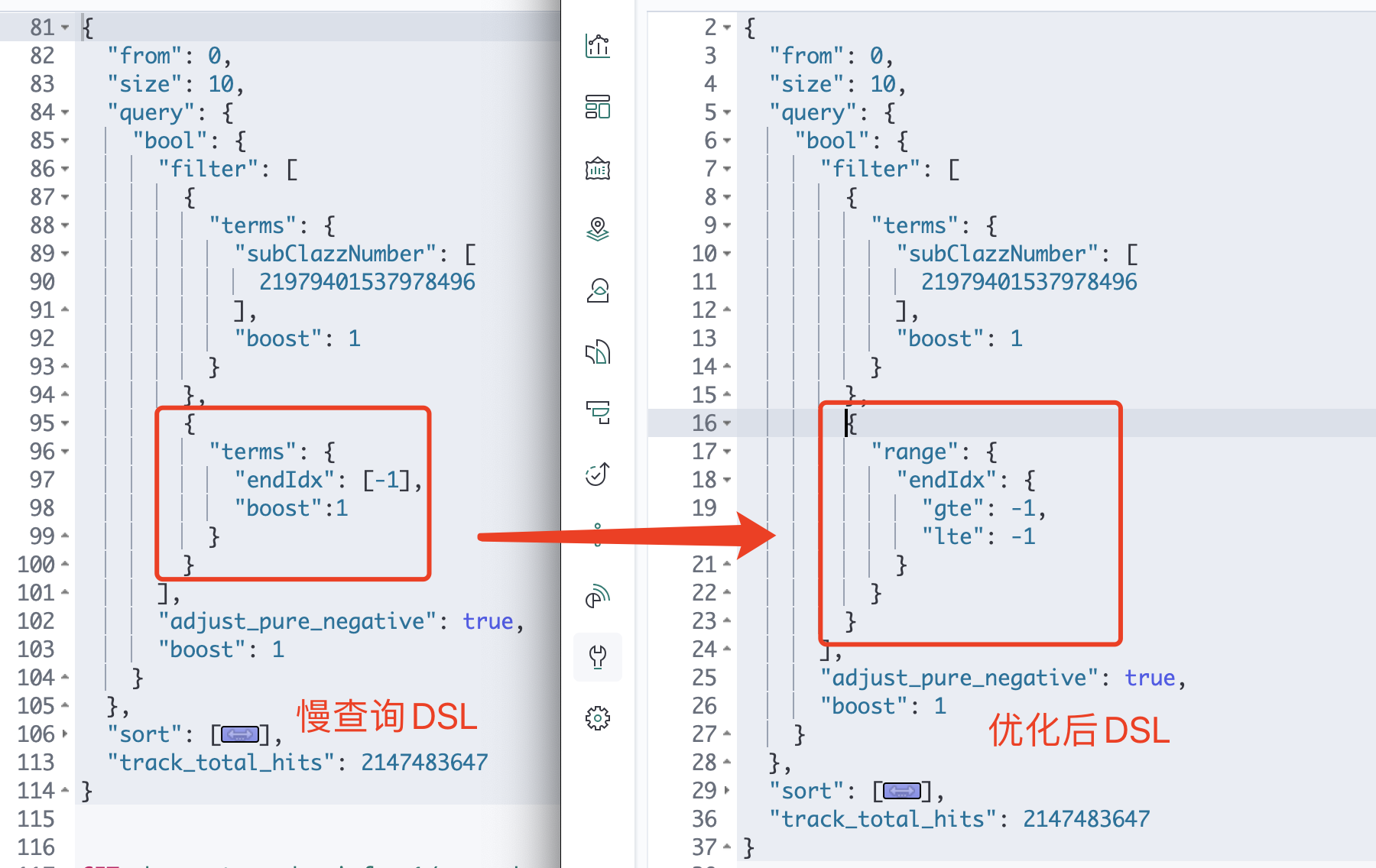

优化查询语句

Java应用端构建的DSL如下:

GET express_order_info/_search

{

"from": 0,

"size": 10,

"query": {

"bool": {

"filter": [

{

"terms": {

"subClazzNumber": [

219794********8496

],

"boost": 1

}

},

{

"terms": {

"endIdx": [

-1

],

"boost": 1

}

}

],

"adjust_pure_negative": true,

"boost": 1

}

},

"sort": [

{

"businessTime": {

"order": "desc"

}

}

],

"track_total_hits": 2147483647

}

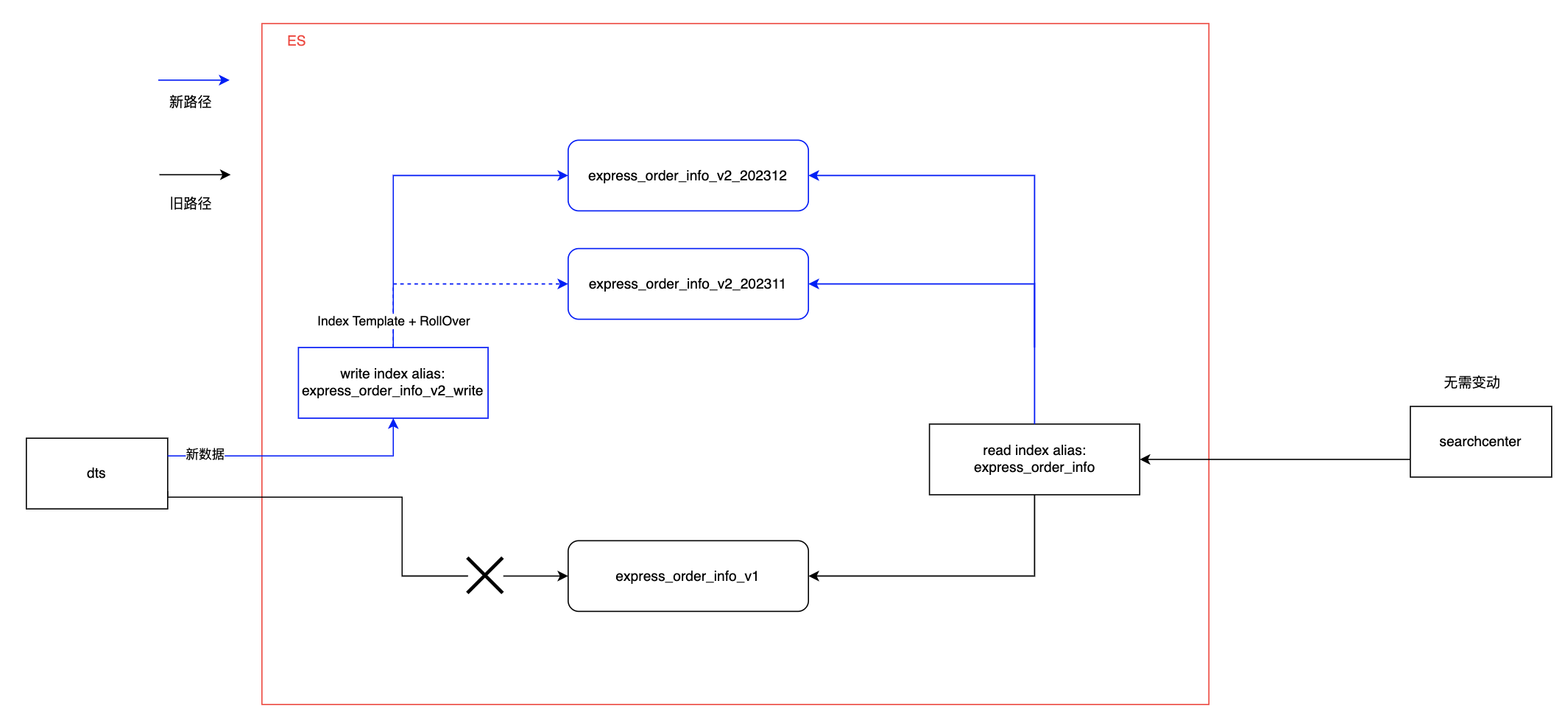

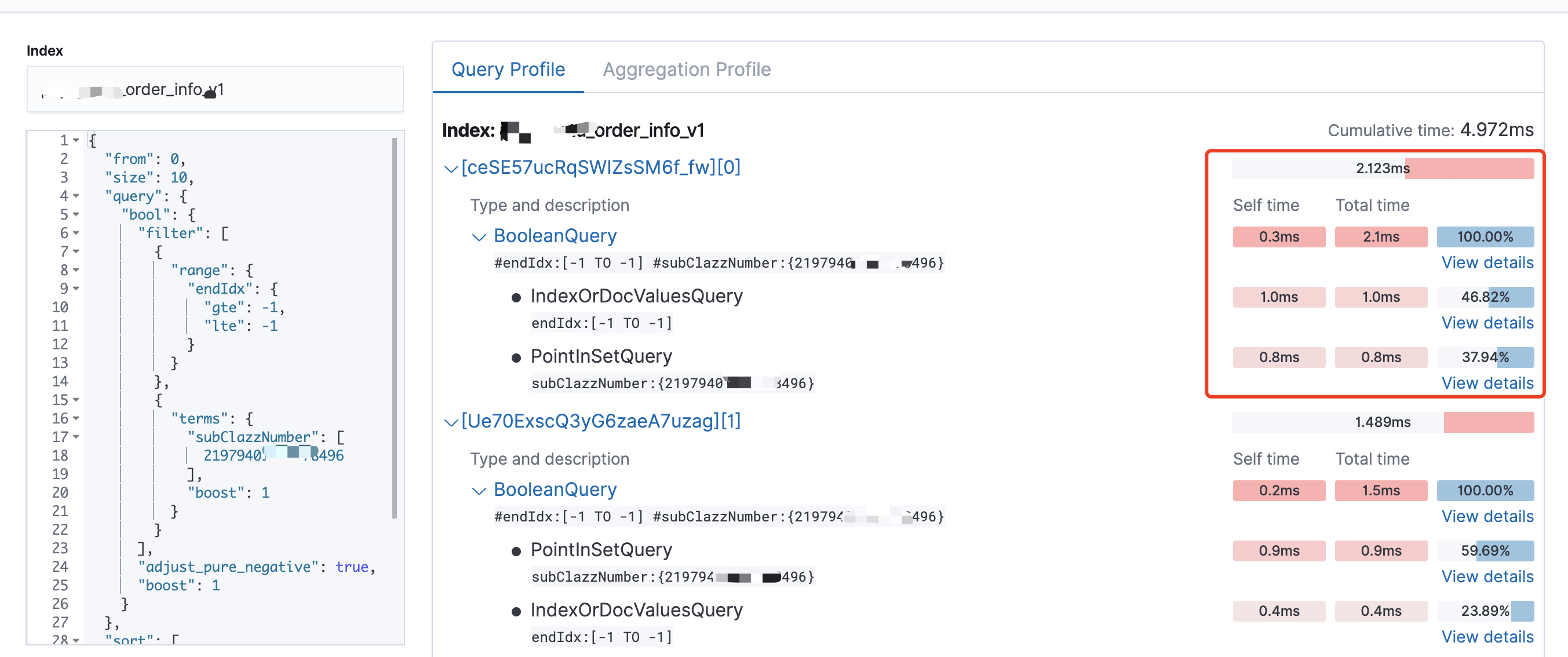

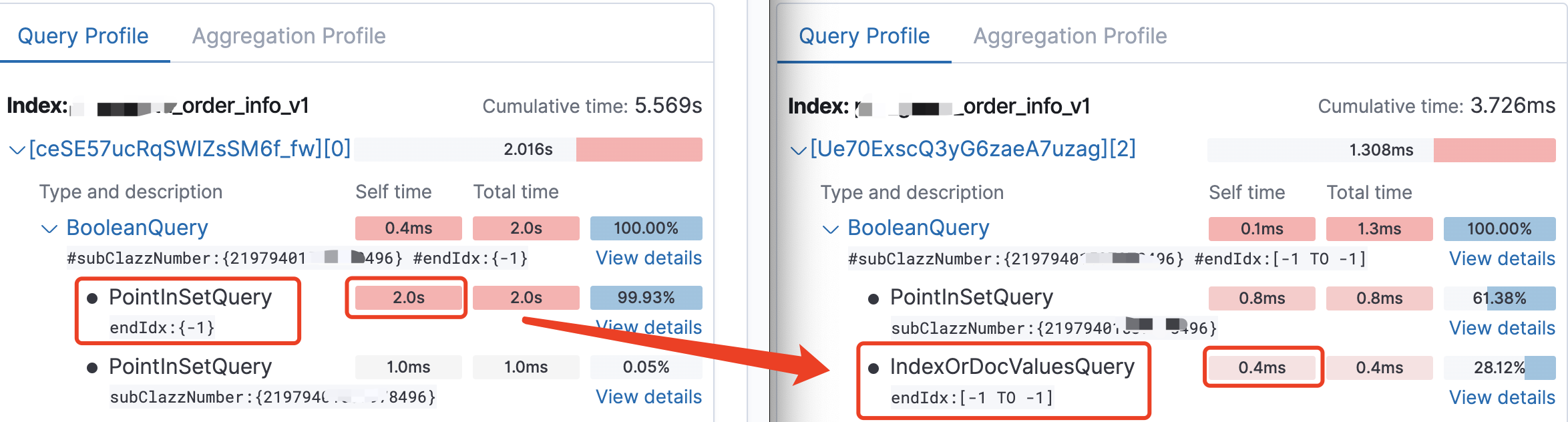

使用 Search Profiler定位慢的地方:

查询耗时集中在endIdx属性查询的build_scorer阶段。

参考网上相似案例:

- Elasticsearch-spend-all-time-in-build-scorer

- Elastic对类似枚举数据的搜索性能优化

- number?keyword?傻傻分不清楚

- 如何解释将标识字段映射为keyword比integer更好

- Elasitcsearch 底层系列 Lucene 内核解析之Point索引

现有查询DSL可做出以下优化:

- endIdx属性type改为keyword

- 使用range查询endIdx

由于索引mapping不可修改,暂时使用第二种优化,优化后的DSL:

GET express_order_info/_search

{

"from": 0,

"size": 10,

"query": {

"bool": {

"filter": [

{

"range": {

"endIdx": {

"gte": -1,

"lte": -1

}

}

},

{

"terms": {

"subClazzNumber": [

21979*******78496

],

"boost": 1

}

}

],

"adjust_pure_negative": true,

"boost": 1

}

},

"sort": [

{

"businessTime": {

"order": "desc"

}

}

],

"track_total_hits": 2147483647

}

查询性能提升明显。

优化语句上线后P999为1300ms,性能提升1倍:

原理

慢查询耗时主要集中在endIdx的build_scorer阶段,切换后从PointInSetQuery变为IndexOrDocValusQuery

ES的Query/Filter可以概括为分别为每个查询条件找到满足的docID,然后将各个查询条件的结果进行合并(Conjunction)

参考:

In which order are my Elasticsearch queries/filters executed?

ES在检索在各个查询条件符合的doc之后进行build_scorer,关于build_scorer阶段:

build_scorer:This parameter shows how long it takes to build a Scorer for the query. A Scorer is the mechanism that iterates over matching documents and generates a score per-document (e.g. how well does “foo” match the document?). Note, this records the time required to generate the Scorer object, not actually score the documents. Some queries have faster or slower initialization of the Scorer, depending on optimizations, complexity, etc.

This may also show timing associated with caching, if enabled and/or applicable for the query

注意,build_scorer记录了生成记分器(Scorer)对象而不是对文档进行评分所需的时间,Scorer Object通过迭代所有匹配的doc的方式对doc进行打分和排序,但是filter query不是不进行score吗?关于这个问题,elasticsearch官方的回答是:

Scorers are created for queries and filters, the name is misleading.

The build_scorer operation in Elasticsearch is used to build a scorer for each document in the index. A scorer is used to calculate the relevance score of a document for a given query. In a filter context, no scoring is applied, but Elasticsearch still builds a scorer for each document, which can slow down searches.

This behavior is due to the fact that Elasticsearch is designed to handle both scoring and non-scoring operations in a uniform way. When a query is executed, Elasticsearch builds a scorer for each document in the index, regardless of whether scoring is actually needed for that query. This is because Elasticsearch needs to prepare for the possibility that a scoring operation might be needed later on in the query execution.

If you are dealing with numeric fields, consider using the keyword type instead of the default numeric type. This can improve the performance of TermQuery operations, which are faster than RangeQuery operations.

具体可参考:

Scorers are being created under filter context, while they shouldn’t

Filter context and Build Scorers

Scorer以迭代方式进行打分,那么build_scorer过程如何构建Scorer以支持迭代呢?倒排列表 或 BitSet.

The build_scorer function constructs an iterator internally. This iterator can traverse all matched documents. The construction of the iterator is a time-consuming operation as it involves building inverted lists or bitsets for the result set of docIds from subqueries, and doing conjunction to generate the final iterable docId bitset or inverted list

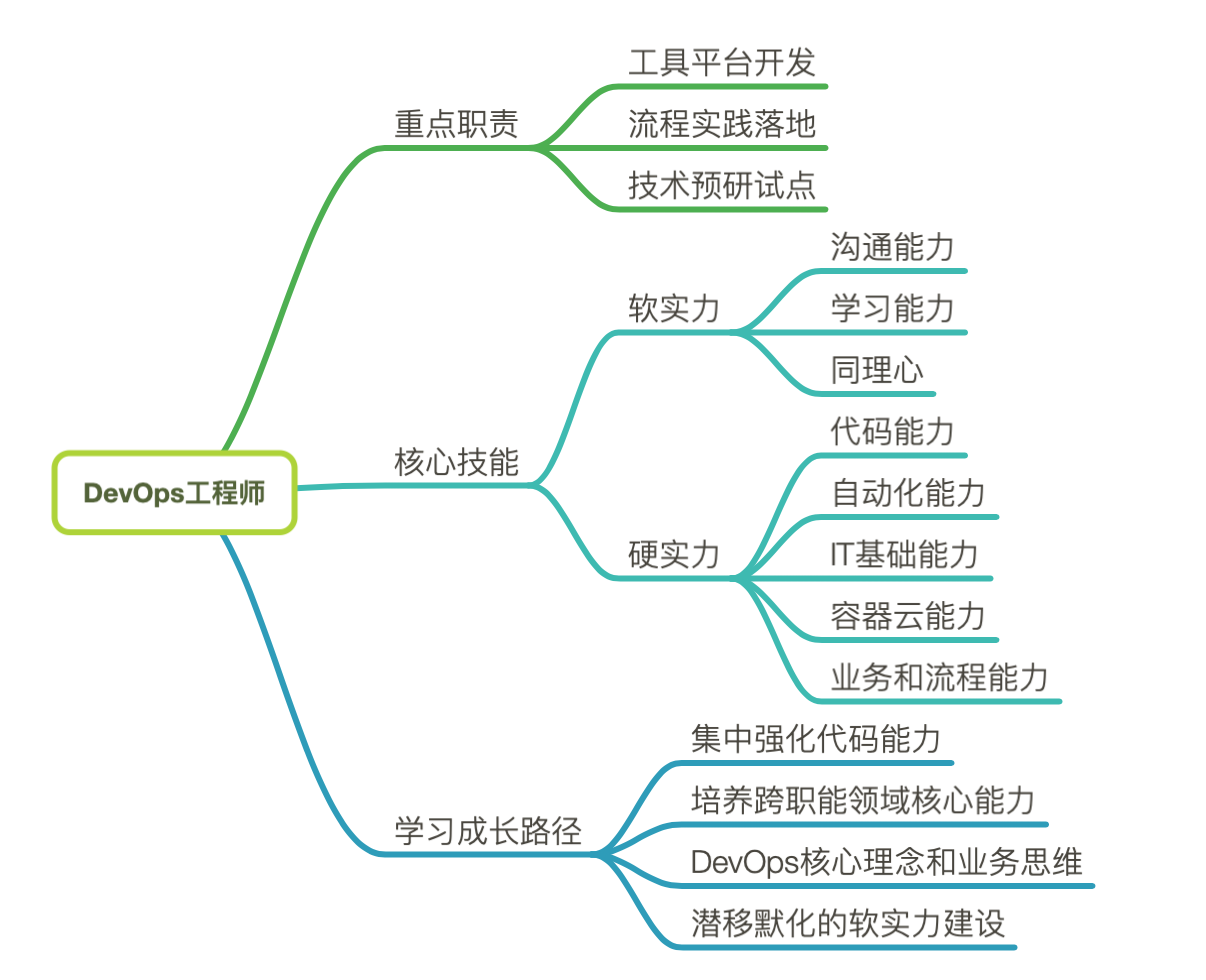

Lucene6.0以后取消了对数值类型进行倒排索引,而是使用下图的BKD Tree的方式记录doc_id来优化数值类型的Range Query,其中block位于磁盘,其他位于内存。BKD Tree也是以倒排方式查询,即拿着value找doc。

但也带来了2个问题:

- 数据量大时的数值类型的Term Query查询慢

- 数据量大时Range Query查询慢。

为什么慢?以上都涉及到数据量大的问题:

- 数值类型的TermQuery会被转成PointInSetQuery。BKD-Tree查找到的docId集合在磁盘上并非向 Postlings list 那样按照 docid 顺序存放,也就无法利用 BKD tree 的算法优势了。而此时要实现对 docid 集合的快速 advance 操作,只能将 docid 集合拿出来,然后构造成一个代表 docid 集合的 BitSet。build_scorer阶段创建Scorer的过程即为构造该BitSet,这个过程是导致查询缓慢的罪魁祸首。

- Range Query的结果集范围非常大的话,advance操作耗费的时间也会越长。

其中,第一个问题就是本文integer类型的endIdx属性进行Term Query时遇到的问题,这里没法直接优化,要么直接用Keyword进行Term Query,要么改造成Range Query。

为什么可以改造成Range Query来提升查询性能? 但是在数据量很大时,Range Query也会很慢啊,因为ES从5.4版本中通过IndexOrDocValuesQuery对Range Query进一步做了优化。

首先,考虑引入IndexOrDocValuesQuery的动机:

Range Query的过程分为以下两个步骤:

- 找到所有符合的doc;

- 筛选符合的doc中满足其他查询条件的doc(conjunction过程).

针对numeric类型,numeric类型根据BKD Tree(organized by Value,即倒排)能在第1步快速找到符合条件的doc。但是第2步如果用倒排:比如我要在步骤1中的结果里筛选subClazzNumber=xxx的doc,我只能拿着subClazzNumber=xxx去倒排索引查符合subClazzNumber条件的doc,然后把这两个条件的doc做个交集(通过构造BitSet完成)。太慢了,显然,检验endIdx=-1的doc中是否满足subClazzNumber=xxx 使用正排索引更高效,因此,ES引入Doc values数据结构来优化conjunction过程。

Numerics are indexed using a tree structure, called “points”, that is organized by value: it can help you find matching documents for a given range of value, but if you want to verify whether a particular document matches, you have no choice but to compute the set of matching documents and test whether it contains your document.Elasticsearch also has doc values enabled on numeric fields. Doc values are a per-field lookup structure that associates each document with the set of values it contains. This is great for sorting or aggregations, but we could also use them in order to verify matches: this is the right data-structure for it.

关于IndexOrDocValuesQuery,参考:

Better Query Planning for Range Queries in Elasticsearch

再提IndexOrDocValuesQuery的问题

All About Elasticsearch Filter BitSets

即我们endIdx改为Range Query后通过IndexOrDocValuesQuery来查询,IndexOrDocValuesQuery不会去查询所有文档中符合endIdx=-1的,而是在subClazzNumber=xxx的Term Query (lead the iteration)后的doc_id结果中进行确认(verify matches as follow iteration),这样Scorer对象的构建就不用对所有满足endIdx=-1的doc_id生成BitSet。注意只有IndexOrDocValuesQuery为follow iteration时才会使用正排方式筛选结果,即conjuntion从其他查询条件的结果开始,IndexOrDocValuesQuery针对别人的结果进行正排筛选。

也就是ES 5.4版本后,numeric类型在不同的查询场景下时使用不同的Query:

- Term Query: PointInSetQuery (数据量大会导致慢)

- Range Query: IndexOrDocValuesQuery (很快啊)

Elasticsearch干货(三):对于数值类型索引优化

K-d Tree是什么

Bkd-Tree: A Dynamic Scalable kd-Tree

总结

在设置mapping时请结合查询场景合理设置type:

numeric 类型在数据区分度很高、量不大(如订单编号这种唯一属性)时,可以使用Term Query;在区分度低、数据量大(如枚举值、状态值)时不要设为numeric类型而是设为Keyword类型进行Term Query,若已经设为numeric类型请使用Range Query,参考本文endIdx查询DSL的优化。