提醒:本例涉及到三个 Python 文件,即 two_layer_net.py,train_neuralnet.py,mnist.py 等。

显然,要进行神经网络的学习,必须先构建神经网络。

因此,本文先构建了一个2层神经网络。代码详见 two_layer_net.py,内容如下:

import numpy as np

import sys,os

sys.path.append(os.pardir)

def sigmoid(x):

return 1/(1+np.exp(-x))

def sigmoid_grad(x):

return (1.0-sigmoid(x))*sigmoid(x)

def softmax(x):

if x.ndim==2:

x=x.T

x=x-np.max(x,axis=0)

y=np.exp(x)/np.sum(np.exp(x),axis=0)

return y.T

x=x-np.max(x)

return np.exp(x)/np.sum(np.exp(x))

def cross_entropy_error(y,t):

if y.ndim==1:

t=t.reshape(1,t.size)

y=y.reshape(1,y.size)

if t.size==y.size:

t=t.argmax(axis=1)

batch_size=y.shape[0]

return -np.sum(np.log(y[np.arange(batch_size),t]+1e-7))/batch_size

def numerical_gradient_no_batch(f,x):

h=1e-4

grad=np.zeros_like(x)

for idx in range(x.size):

tmp_val=x[idx]

x[idx]=float(tmp_val)+h

fxh1=f(x)

x[idx]=tmp_val-h

fxh2=f(x)

grad[idx]=(fxh1-fxh2)/(2*h)

x[idx]=tmp_val

return grad

def numerical_gradient(f,X):

if X.ndim==1:

return numerical_gradient_no_batch(f,X)

else:

grad=np.zeros_like(X)

for idx,x in enumerate(X):

grad[idx]=numerical_gradient_no_batch(f,x)

return grad

class TwoLayerNet:

def __init__(self,input_size,hidden_size,output_size,weight_init_std=0.01):

# 初始化权重

self.params={}

self.params['W1']=weight_init_std*np.random.randn(input_size,hidden_size)

self.params['b1']=np.zeros(hidden_size)

self.params['W2']=weight_init_std*np.random.randn(hidden_size,output_size)

self.params['b2']=np.zeros(output_size)

def predict(self,x):

W1,W2=self.params['W1'],self.params['W2']

b1,b2=self.params['b1'],self.params['b2']

a1=np.dot(x,W1)+b1

z1=sigmoid(a1)

a2=np.dot(z1,W2)+b2

y=softmax(a2)

return y

# x:输入数据,t:监督数据

def loss(self,x,t):

y=self.predict(x)

return cross_entropy_error(y,t)

def accuracy(self,x,t):

y=self.predict(x)

y=np.argmax(y,axis=1)

t=np.argmax(t,axis=1)

accuracy=np.sum(y == t)/float(x.shape[0])

return accuracy

# x:输入数据,t:监督数据

def numerical_gradient(self,x,t):

loss_W=lambda W: self.loss(x,t)

grads={}

grads['W1']=numerical_gradient(loss_W,self.params['W1'])

grads['b1']=numerical_gradient(loss_W,self.params['b1'])

grads['W2']=numerical_gradient(loss_W,self.params['W2'])

grads['b2']=numerical_gradient(loss_W,self.params['b2'])

return grads

def gradient(self,x,t):

W1,W2=self.params['W1'],self.params['W2']

b1,b2=self.params['b1'],self.params['b2']

grads={}

batch_num=x.shape[0]

# forward

a1=np.dot(x,W1)+b1

z1=sigmoid(a1)

a2=np.dot(z1,W2)+b2

y=softmax(a2)

# backward

dy=(y-t)/batch_num

grads['W2']=np.dot(z1.T,dy)

grads['b2']=np.sum(dy,axis=0)

da1=np.dot(dy,W2.T)

dz1=sigmoid_grad(a1)*da1

grads['W1']=np.dot(x.T,dz1)

grads['b1']=np.sum(dz1,axis=0)

return grads紧接着,要对上文所构建的神经网络进行学习,其参见神经网络学习的一般步骤如下:

● 前提

神经网络存在合适的权重和偏置,调整权重和偏置以便拟合训练数据的过程称为“学习”。神经网络的学习分成下面4个步骤。

● 步骤1(mini-batch)

从训练数据中随机选出一部分数据,这部分数据称为mini-batch。我们的目标是减小mini-batch的损失函数的值。

● 步骤2(计算梯度)

为了减小mini-batch的损失函数的值,需要求出各个权重参数的梯度。梯度表示损失函数的值减小最多的方向。

● 步骤3(更新参数)

将权重参数沿梯度方向进行微小更新。

● 步骤4(重复)

重复步骤1、步骤2、步骤3。

之后,按照上面步骤,对上文代码 two_layer_net.py 所构建的神经网络进行学习的代码 train_neuralnet.py 的内容如下:

import sys,os

sys.path.append(os.pardir)

import numpy as np

import matplotlib.pyplot as plt

from mnist import load_mnist

from two_layer_net import TwoLayerNet

# 读入数据

(x_train,t_train),(x_test,t_test)=load_mnist(normalize=True,one_hot_label=True)

network=TwoLayerNet(input_size=784,hidden_size=50,output_size=10)

iters_num=10000 # 适当设定循环的次数

train_size=x_train.shape[0]

batch_size=100

learning_rate=0.1

train_loss_list=[]

train_acc_list=[]

test_acc_list=[]

# 平均每个epoch的重复次数

iter_per_epoch=max(train_size/batch_size,1)

for i in range(iters_num):

batch_mask=np.random.choice(train_size,batch_size)

x_batch=x_train[batch_mask]

t_batch=t_train[batch_mask]

# 计算梯度

#grad=network.numerical_gradient(x_batch,t_batch)

grad=network.gradient(x_batch,t_batch)

# 更新参数

for key in ('W1','b1','W2','b2'):

network.params[key]-=learning_rate*grad[key]

loss=network.loss(x_batch,t_batch)

train_loss_list.append(loss)

# 计算每个epoch的识别精度

if i % iter_per_epoch==0:

train_acc=network.accuracy(x_train,t_train)

test_acc=network.accuracy(x_test,t_test)

train_acc_list.append(train_acc)

test_acc_list.append(test_acc)

print("train acc,test acc | "+str(train_acc)+","+str(test_acc))

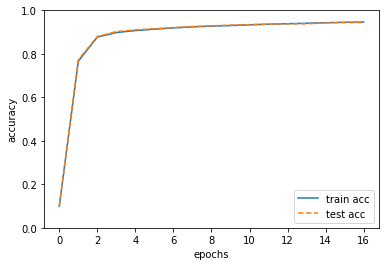

# 绘制图形

markers={'train': 'o','test': 's'}

x=np.arange(len(train_acc_list))

plt.plot(x,train_acc_list,label='train acc')

plt.plot(x,test_acc_list,label='test acc',linestyle='--')

plt.xlabel("epochs")

plt.ylabel("accuracy")

plt.ylim(0,1.0)

plt.legend(loc='lower right')

plt.show()运行代码 train_neuralnet.py 后的结果,每次都有差异。其中一次的运行结果如下:

train acc,test acc | 0.09915,0.1009

train acc,test acc | 0.7660833333333333,0.7735

train acc,test acc | 0.8770666666666667,0.8797

train acc,test acc | 0.8981666666666667,0.9027

train acc,test acc | 0.907,0.9103

train acc,test acc | 0.9135666666666666,0.9156

train acc,test acc | 0.91935,0.9208

train acc,test acc | 0.924,0.9257

train acc,test acc | 0.9277833333333333,0.929

train acc,test acc | 0.9300666666666667,0.9324

train acc,test acc | 0.9336,0.9343

train acc,test acc | 0.93635,0.9362

train acc,test acc | 0.9387333333333333,0.9378

train acc,test acc | 0.9407333333333333,0.9384

train acc,test acc | 0.9430833333333334,0.9421

train acc,test acc | 0.9452666666666667,0.9437

train acc,test acc | 0.9463666666666667,0.9438

顺便提一下,代码 train_neuralnet.py 中会用到代码 mnist.py,其内容可详见:

https://blog.csdn.net/hnjzsyjyj/article/details/128721706

为方便起见,此处再把 mnist.py 的内容复写如下:

try:

import urllib.request

except ImportError:

raise ImportError('You should use Python 3.x')

import os.path

import gzip

import pickle

import os

import numpy as np

url_base = 'http://yann.lecun.com/exdb/mnist/'

key_file = {

'train_img':'train-images-idx3-ubyte.gz',

'train_label':'train-labels-idx1-ubyte.gz',

'test_img':'t10k-images-idx3-ubyte.gz',

'test_label':'t10k-labels-idx1-ubyte.gz'

}

dataset_dir = os.path.dirname(os.path.abspath('__file__'))

save_file = dataset_dir + "/mnist.pkl"

train_num = 60000

test_num = 10000

img_dim = (1, 28, 28)

img_size = 784

def _download(file_name):

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

print("Done")

def download_mnist():

for v in key_file.values():

_download(v)

def _load_label(file_name):

file_path = dataset_dir + "/" + file_name

print("Converting " + file_name + " to NumPy Array ...")

with gzip.open(file_path, 'rb') as f:

labels = np.frombuffer(f.read(), np.uint8, offset=8)

print("Done")

return labels

def _load_img(file_name):

file_path = dataset_dir + "/" + file_name

print("Converting " + file_name + " to NumPy Array ...")

with gzip.open(file_path, 'rb') as f:

data = np.frombuffer(f.read(), np.uint8, offset=16)

data = data.reshape(-1, img_size)

print("Done")

return data

def _convert_numpy():

dataset = {}

dataset['train_img'] = _load_img(key_file['train_img'])

dataset['train_label'] = _load_label(key_file['train_label'])

dataset['test_img'] = _load_img(key_file['test_img'])

dataset['test_label'] = _load_label(key_file['test_label'])

return dataset

def init_mnist():

download_mnist()

dataset = _convert_numpy()

print("Creating pickle file ...")

with open(save_file, 'wb') as f:

pickle.dump(dataset, f, -1)

print("Done!")

def _change_one_hot_label(X):

T = np.zeros((X.size, 10))

for idx, row in enumerate(T):

row[X[idx]] = 1

return T

def load_mnist(normalize=True, flatten=True, one_hot_label=False):

"""读入MNIST数据集

Parameters

----------

normalize : 将图像的像素值正规化为0.0~1.0

one_hot_label :

one_hot_label为True的情况下,标签作为one-hot数组返回

one-hot数组是指[0,0,1,0,0,0,0,0,0,0]这样的数组

flatten : 是否将图像展开为一维数组

Returns

-------

(训练图像, 训练标签), (测试图像, 测试标签)

"""

if not os.path.exists(save_file):

init_mnist()

with open(save_file, 'rb') as f:

dataset = pickle.load(f)

if normalize:

for key in ('train_img', 'test_img'):

dataset[key] = dataset[key].astype(np.float32)

dataset[key] /= 255.0

if one_hot_label:

dataset['train_label'] = _change_one_hot_label(dataset['train_label'])

dataset['test_label'] = _change_one_hot_label(dataset['test_label'])

if not flatten:

for key in ('train_img', 'test_img'):

dataset[key] = dataset[key].reshape(-1, 1, 28, 28)

return (dataset['train_img'], dataset['train_label']), (dataset['test_img'], dataset['test_label'])

if __name__ == '__main__':

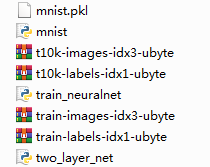

init_mnist()本文所涉及的神经网络学习的所有文件如下图所示:

当然,运行此代码前,可将之前 https://blog.csdn.net/hnjzsyjyj/article/details/128721706 例子中下载的 MNIST 数据集的 'train-images-idx3-ubyte.gz'、'train-labels-idx1-ubyte.gz'、't10k-images-idx3-ubyte.gz'、't10k-labels-idx1-ubyte.gz' 等四个文件,以及生成的 mnist.pkl 文件直接复制过来。

【参考文献】

https://blog.csdn.net/hnjzsyjyj/article/details/128759703

https://blog.csdn.net/hnjzsyjyj/article/details/128721706