Table of contents

- 1. Problem
- 2. Approach
- 2.1 Learning a single 5 × 5 filter kernel
- 2.2 Learning per-channel filter kernels and a separable convolution
- 3. Analysis

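Learning a filter kernel from an image pair

I previously looked at generating a 3D LUT from an image pair, and at generating color-transform coefficients from one.

Here we use an image pair to learn a filter.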

1. 问题

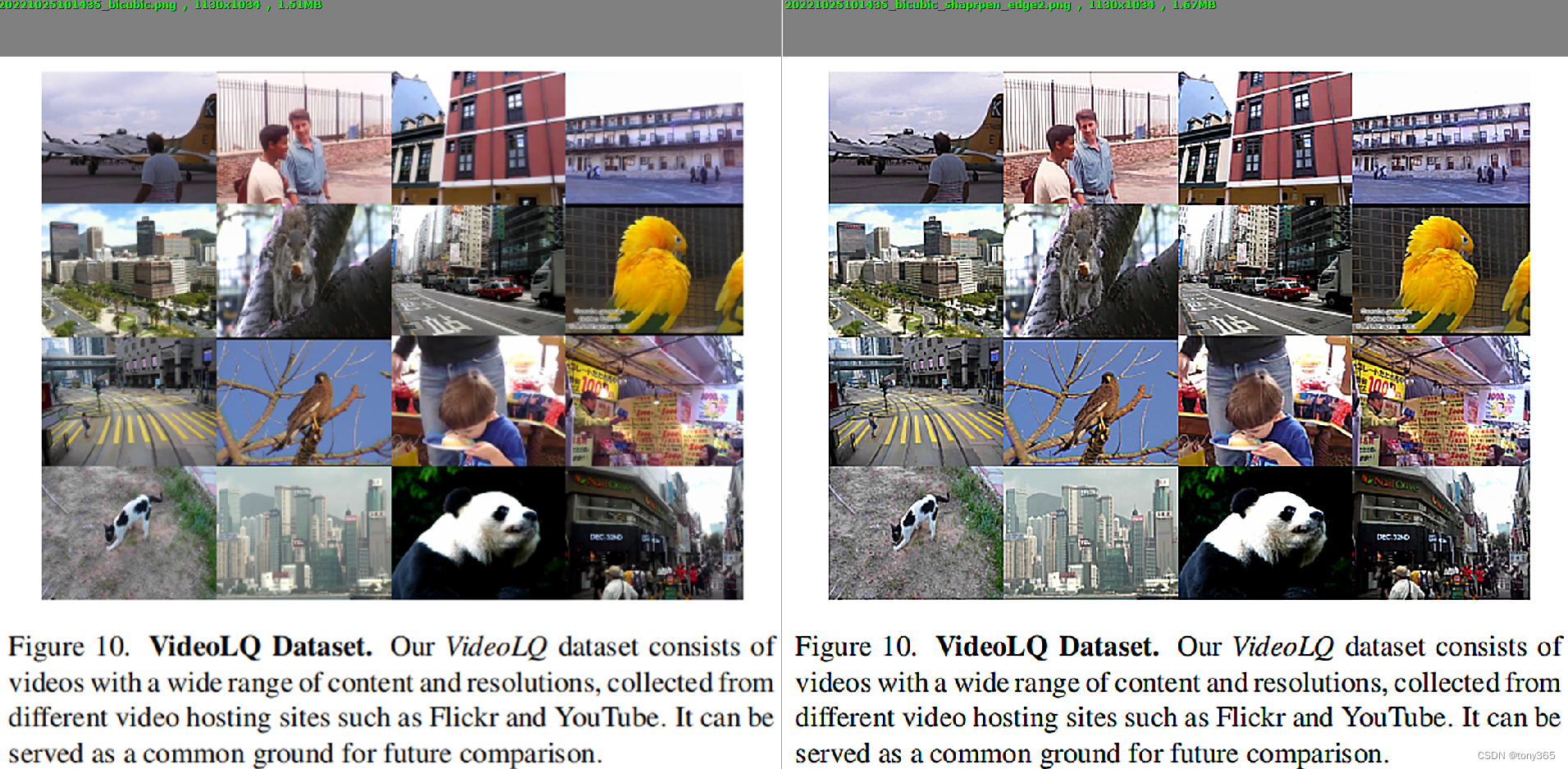

遇到的问题是这样的,已知一个图像和经过邻域滤波处理后的图像,想要求解这两个图像的滤波核

2. Approach

Solve for the kernel with least squares.
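As a sketch, the objective can be written as follows. The symbols $I_{\mathrm{src}}$, $I_{\mathrm{tgt}}$ and $k$ are notation introduced here for illustration, and the sum is written as a correlation over a 5 × 5 window, which is what `cv2.filter2D` computes.

```latex
\hat{k} \;=\; \arg\min_{k \in \mathbb{R}^{5 \times 5}}
\sum_{x,\,y,\,c}
\Big(
  \sum_{i=-2}^{2} \sum_{j=-2}^{2}
    k_{i,j}\, I_{\mathrm{src}}(x+i,\; y+j,\; c)
  \;-\; I_{\mathrm{tgt}}(x,\; y,\; c)
\Big)^{2}
```

Each residual is linear in the 25 coefficients $k_{i,j}$, so this is an ordinary linear least-squares problem.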

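We take a single image pair as the example: src is the image before sharpening and target is the image after sharpening.

The ground-truth filter kernel is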

```python
kernel_sharpen = np.array([[-1, -1, -1, -1, -1],
                           [-1,  2,  2,  2, -1],
                           [-1,  2,  8,  2, -1],
                           [-1,  2,  2,  2, -1],
                           [-1, -1, -1, -1, -1]])
kernel_sharpen = kernel_sharpen / np.sum(kernel_sharpen)
im2 = cv2.filter2D(im1, -1, kernel_sharpen)
```

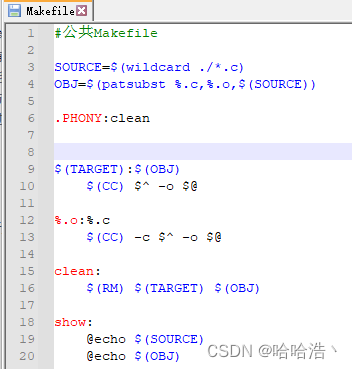
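The division by `np.sum(kernel_sharpen)` makes the coefficients add up to 1, so flat regions keep their brightness. The NumPy-only check below is a minimal sketch: `correlate_valid` is a hypothetical helper that only evaluates interior pixels and does no border handling, unlike `cv2.filter2D`.

```python
import numpy as np

kernel_sharpen = np.array([[-1, -1, -1, -1, -1],
                           [-1,  2,  2,  2, -1],
                           [-1,  2,  8,  2, -1],
                           [-1,  2,  2,  2, -1],
                           [-1, -1, -1, -1, -1]], dtype=np.float64)
raw_sum = kernel_sharpen.sum()             # sum of the integer coefficients
kernel_sharpen = kernel_sharpen / raw_sum  # normalized kernel sums to 1


def correlate_valid(img, k):
    """Hypothetical helper: correlate a 2-D image with k, interior pixels only."""
    windows = np.lib.stride_tricks.sliding_window_view(img, k.shape)
    return np.einsum('xyij,ij->xy', windows, k)


flat = np.full((9, 9), 0.5)                # a constant gray patch
out = correlate_valid(flat, kernel_sharpen)
print(raw_sum, kernel_sharpen.sum(), out.min(), out.max())
```

On a constant patch the sharpened result equals the input, because the positive and negative coefficients cancel except for the unit gain.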

2.1 Learning a single 5 × 5 filter kernel

The idea is simple: solve directly with the least_squares function from scipy.optimize.

The code is as follows:

```python
import cv2
import numpy as np
import colour  # colour-science, only used by calc_deltaE
from scipy.optimize import least_squares


def min_fun_model_sr(x, im1, im2):
    """Residuals between the filtered source image and the target."""
    x = x.reshape([5, 5])
    t = cv2.filter2D(im1, -1, x) - im2
    return t.reshape(-1)  # least_squares expects a 1-D residual vector


def calc_deltaE(input_sRGB, ref_sRGB):
    """Calculate delta E 2000 against a reference.

    :input_sRGB: target sRGB, gamma-encoded (apply_cctf_decoding=True linearizes it), float [0, 1]
    :ref_sRGB: reference sRGB, gamma-encoded, float [0, 1]
    :returns: delta E 2000 between target and reference
    """
    XYZ = colour.sRGB_to_XYZ(input_sRGB, apply_cctf_decoding=True)
    ref_XYZ = colour.sRGB_to_XYZ(ref_sRGB, apply_cctf_decoding=True)
    Lab = colour.XYZ_to_Lab(XYZ)
    ref_Lab = colour.XYZ_to_Lab(ref_XYZ)
    delta_E = colour.delta_E(Lab, ref_Lab)
    # print('deltaE 2000: {}'.format(delta_E))
    return delta_E


if __name__ == "__main__":
    src = r'D:\superresolution\sharpen\learn_filter\20221025101435_bicubic.png'
    target = r'D:\superresolution\sharpen\learn_filter\20221025101435_bicubic_shaprpen_edge2.png'
    im1 = cv2.imread(src)
    h, w, c = im1.shape
    print(h, w, c)
    im2 = cv2.imread(target)

    # # generating the kernels
    # kernel_sharpen = np.array([[-1, -1, -1, -1, -1],
    #                            [-1,  2,  2,  2, -1],
    #                            [-1,  2,  8,  2, -1],
    #                            [-1,  2,  2,  2, -1],
    #                            [-1, -1, -1, -1, -1]])
    # kernel_sharpen = kernel_sharpen / np.sum(kernel_sharpen)
    # im2 = cv2.filter2D(im1, -1, kernel_sharpen)
    # im3 = cv2.resize(im1, (w // 2, h // 2))  # dsize is (width, height)
    # im4 = cv2.resize(im2, (w // 2, h // 2))
    print(im1.shape, im2.shape)

    # Median-filter first to suppress noisy pixels. After this filtering, the image
    # produced by the generated 3D LUT may look slightly blurred.
    # rgb = cv2.medianBlur(im3, 3).reshape(-1, 3)
    # srgb = cv2.medianBlur(im4, 3).reshape(-1, 3)
    rgb = im1 / 255
    srgb = im2 / 255

    print('lq method ret:\n')
    ksize = 5  # renamed from `len`, which shadowed the built-in
    x0 = np.random.uniform(0, 1, [ksize, ksize]).reshape(-1)  # random initial kernel
    print('init filter:', x0)
    res = least_squares(min_fun_model_sr, x0, bounds=(-1, 2), args=(rgb, srgb), verbose=1)
    kernel = res.x.reshape(ksize, ksize)

    # Apply the learned kernel to the source image.
    # file = r'd.jpeg'
    # im0 = cv2.imread(file)
    im_t = im1 / 255
    im_t1 = cv2.filter2D(im_t, -1, kernel)
    a3 = np.clip(im_t1 * 255, 0, 255).astype(np.uint8)

    np.set_printoptions(suppress=True)
    cv2.imwrite(src[:-4] + '_filter_gen.png', a3)
    np.savetxt(src[:-4] + '_filter_gen.txt', kernel, fmt='%.05f')
    print(np.round(kernel, 3), np.sum(kernel))
```
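Because the residual is linear in the 25 kernel coefficients, the same fit can also be obtained in closed form. The sketch below swaps the iterative, bounded `least_squares` call for an unbounded `np.linalg.lstsq` solve. It assumes a single-channel float image and uses interior pixels only; `patch_matrix` and `solve_kernel` are hypothetical helper names, and the data is synthetic.

```python
import numpy as np


def patch_matrix(img, ksize=5):
    """Stack every ksize x ksize window of a 2-D image as one row."""
    windows = np.lib.stride_tricks.sliding_window_view(img, (ksize, ksize))
    return windows.reshape(-1, ksize * ksize)


def solve_kernel(src, dst, ksize=5):
    """Kernel k minimizing ||correlate(src, k) - dst||^2 over interior pixels."""
    r = ksize // 2
    A = patch_matrix(src, ksize)           # (num_pixels, ksize * ksize)
    b = dst[r:-r, r:-r].reshape(-1)        # matching interior target pixels
    k, *_ = np.linalg.lstsq(A, b, rcond=None)
    return k.reshape(ksize, ksize)


# Synthetic check: filter a random image with the ground-truth kernel, then recover it.
true_kernel = np.array([[-1, -1, -1, -1, -1],
                        [-1,  2,  2,  2, -1],
                        [-1,  2,  8,  2, -1],
                        [-1,  2,  2,  2, -1],
                        [-1, -1, -1, -1, -1]], dtype=np.float64) / 8.0
rng = np.random.default_rng(0)
src = rng.random((40, 48))
dst = np.zeros_like(src)                   # border left at zero; it is not used by the fit
dst[2:-2, 2:-2] = (patch_matrix(src) @ true_kernel.reshape(-1)).reshape(36, 44)

est = solve_kernel(src, dst)
print(np.round(est * 8, 3))
```

For a color image with one shared kernel, the rows of all three channels can be stacked into the same system. Restricting the fit to interior pixels also sidesteps the border mode used by `cv2.filter2D`.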

2.2 Learning per-channel filter kernels and a separable convolution

```python
import cv2
import numpy as np
from scipy import ndimage
from scipy.optimize import least_squares


# Per-channel convolution: each channel gets its own 5x5 kernel.
def model(kernel, im):
    filtered_image2 = np.zeros_like(im)
    # print(kernel.shape, im.shape)
    kernel = kernel.reshape(5, 5, 3)
    # ndimage.convolve flips the kernel, whereas cv2.filter2D computes a correlation.
    filtered_image2[..., 0] = ndimage.convolve(im[..., 0], kernel[..., 0], mode='nearest')
    filtered_image2[..., 1] = ndimage.convolve(im[..., 1], kernel[..., 1], mode='nearest')
    filtered_image2[..., 2] = ndimage.convolve(im[..., 2], kernel[..., 2], mode='nearest')
    return filtered_image2  # H x W x 3


# Per-channel convolution followed by a merge across channels, similar to a depth-wise convolution.
def model2(para, im):
    ks = 5
    k_int = 3
    k_out = 3
    t1 = ks * ks * k_int * k_out
    kernel = para[:t1]
    kernel = kernel.reshape(ks, ks, k_int, k_out)
    kernel1 = kernel[..., 0]
    out1 = model(kernel1, im)
    kernel2 = kernel[..., 1]
    out2 = model(kernel2, im)
    kernel3 = kernel[..., 2]
    out3 = model(kernel3, im)

    rat = para[t1:]  # 9 mixing weights, 3 per output channel
    ret = np.zeros_like(im)
    ret[..., 0] = rat[0] * out1[..., 0] + rat[1] * out1[..., 1] + rat[2] * out1[..., 2]
    ret[..., 1] = rat[3] * out2[..., 0] + rat[4] * out2[..., 1] + rat[5] * out2[..., 2]
    ret[..., 2] = rat[6] * out3[..., 0] + rat[7] * out3[..., 1] + rat[8] * out3[..., 2]
    return ret  # H x W x 3


# Residual functions. There is no need to write the loss yourself:
# the loss type can be specified when calling least_squares.
def min_fun_model_sr_split_conv(x, rgb, target):
    t = model(x, rgb)
    t -= target
    # t = np.linalg.norm(t, ord=2, axis=1)
    return t.reshape(-1)  # must be a 1-D array


def min_fun_model_sr_split_conv_merge(x, rgb, target):
    t = model2(x, rgb) - target
    # t = np.linalg.norm(t, ord=2, axis=1)
    return t.reshape(-1)  # must be a 1-D array


def min_fun_model_sr(x, im1, im2):
    x = x.reshape([5, 5])
    t = cv2.filter2D(im1, -1, x) - im2
    return t.reshape(-1)


if __name__ == "__main__":
    src = r'D:\superresolution\sharpen\cubic\20221025101435_bicubic.png'
    target = r'D:\superresolution\sharpen\cubic\20221025101435_bicubic_shaprpen_edge.png'
    im1 = cv2.imread(src)
    h, w, c = im1.shape
    print(h, w, c)
    im2 = cv2.imread(target)  # overwritten below by the synthetically sharpened image

    # generating the kernels
    # The fourth row starts with -2, unlike the kernel shown in section 2.
    kernel_sharpen = np.array([[-1, -1, -1, -1, -1],
                               [-1,  2,  2,  2, -1],
                               [-1,  2,  8,  2, -1],
                               [-2,  2,  2,  2, -1],
                               [-1, -1, -1, -1, -1]])
    kernel_sharpen = kernel_sharpen / np.sum(kernel_sharpen)

    # process and output the image
    im2 = cv2.filter2D(im1, -1, kernel_sharpen)
    # cv2.resize takes dsize as (width, height); the original passed (h//2, w//2).
    im3 = cv2.resize(im1, (w // 2, h // 2))
    im4 = cv2.resize(im2, (w // 2, h // 2))
    print(im1.shape, im2.shape)

    # Median-filter first to suppress noisy pixels. After this filtering, the image
    # produced by the generated 3D LUT may look slightly blurred.
    # rgb = cv2.medianBlur(im3, 3).reshape(-1, 3) / 255
    # srgb = cv2.medianBlur(im4, 3).reshape(-1, 3) / 255
    rgb = im3 / 255
    srgb = im4 / 255

    print('lq method ret:\n')
    ksize = 5  # renamed from `len`, which shadowed the built-in
    # x0 = np.random.uniform(0, 1, [ksize, ksize]).reshape(-1)
    # print('init filter:', x0)
    # res = least_squares(min_fun_model_sr, x0, bounds=(-1, 2), args=(rgb, srgb), verbose=1)

    # 5*5*3*3 kernel coefficients plus 9 mixing weights
    x0 = np.random.uniform(0, 1, [ksize * ksize * 3 * 3 + 9]).reshape(-1)
    print('init filter:', x0)
    res = least_squares(min_fun_model_sr_split_conv_merge, x0, bounds=(-1, 2), args=(rgb, srgb), verbose=1)
    para = res.x

    # Apply the learned parameters to the full-resolution source image.
    # file = r'd.jpeg'
    # im0 = cv2.imread(file)
    im_t = im1 / 255
    # method 1
    # im_t1 = cv2.filter2D(im_t, -1, kernel)
    # method 2
    # im_t1 = model(kernel, im_t)
    # method 3
    im_t1 = model2(para, im_t)
    a3 = np.clip(im_t1 * 255, 0, 255).astype(np.uint8)
    cv2.imwrite(src[:-4] + '_filter_gen_split_merge.png', a3)

    np.set_printoptions(suppress=True)
    print(np.round(para, 3), np.sum(para[:ksize * ksize * 3 * 3]))
    print(kernel_sharpen, np.sum(kernel_sharpen))
```
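One property of the merged model is worth noting: each mixing weight only rescales one 5 × 5 kernel, so the 9 weights can be folded into the 225 kernel coefficients without changing the output, and the parameters are therefore not unique. The sketch below illustrates this with NumPy only. `merged_model` is a hypothetical stand-in with the same parameter layout as `model2`, but it uses a plain correlation on interior pixels in place of `ndimage.convolve(..., mode='nearest')`.

```python
import numpy as np


def correlate_valid(channel, k):
    """Hypothetical helper: correlate one channel with k, interior pixels only."""
    windows = np.lib.stride_tricks.sliding_window_view(channel, k.shape)
    return np.einsum('xyij,ij->xy', windows, k)


def merged_model(kernels, mix, im):
    """kernels: (ks, ks, 3 in, 3 out); mix: (3 out, 3 in); im: (H, W, 3)."""
    ks = kernels.shape[0]
    h, w, _ = im.shape
    out = np.zeros((h - ks + 1, w - ks + 1, 3))
    for o in range(3):          # output channel
        for c in range(3):      # input channel
            out[..., o] += mix[o, c] * correlate_valid(im[..., c], kernels[:, :, c, o])
    return out


rng = np.random.default_rng(0)
im = rng.random((16, 16, 3))
kernels = rng.normal(size=(5, 5, 3, 3))
mix = rng.uniform(0.5, 1.5, size=(3, 3))

out_a = merged_model(kernels, mix, im)

# Fold each mixing weight into its kernel; the mixing weights become all ones.
folded = kernels * mix.T[None, None, :, :]
out_b = merged_model(folded, np.ones((3, 3)), im)

print(np.abs(out_a - out_b).max())
```

In the bounded fit used above, a folded kernel may fall outside `bounds=(-1, 2)`, so the equivalence is exact only when the bounds are not active.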

3. 分析

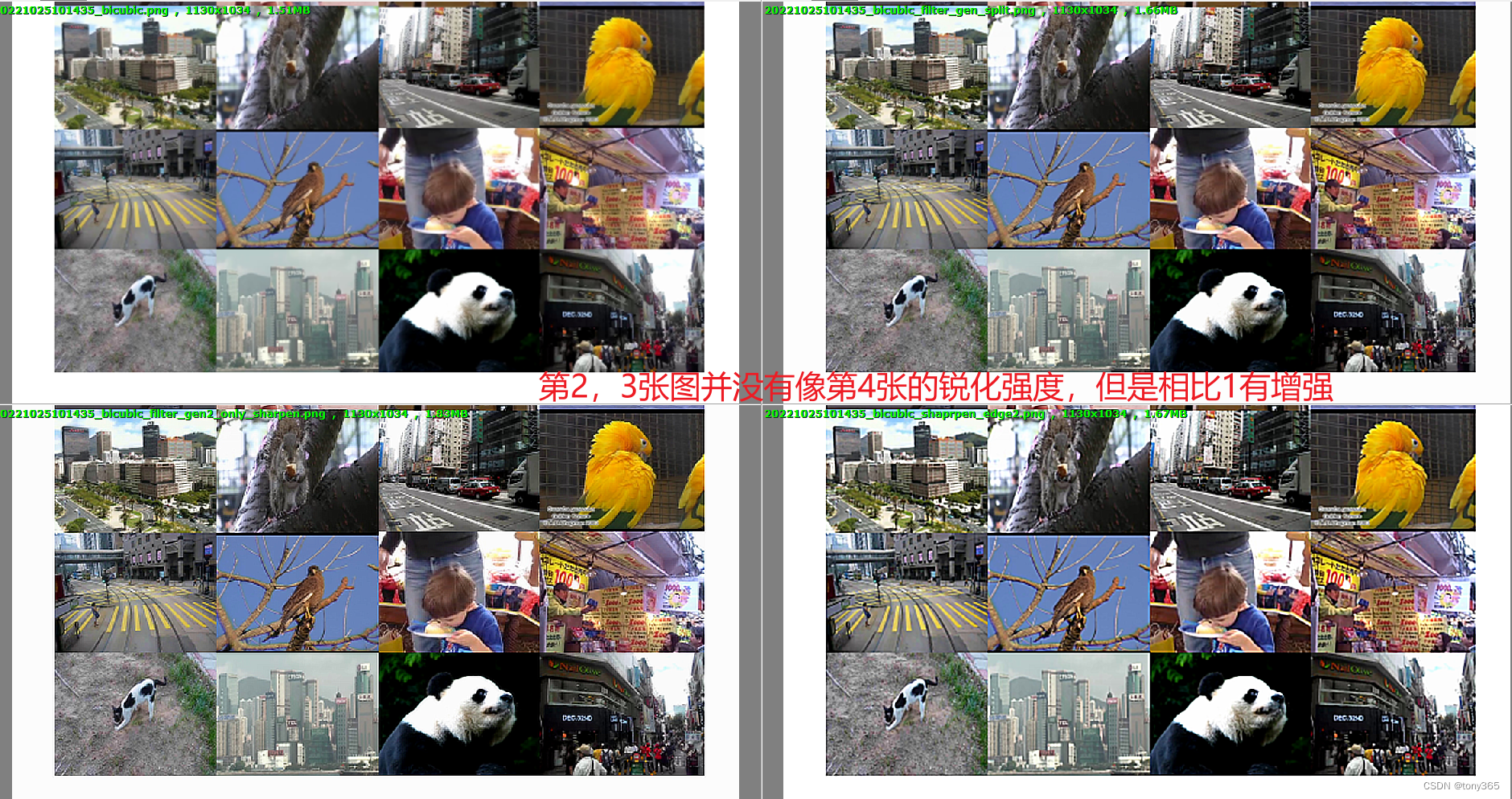

通过以上3个model都可以优化得到相关参数,3个model的结果差距不大,和groundtruth 效果比较锐化效果减弱。

因此训练的时候,1. 对target 进行增强 2. 可以利用更多图像来 学习 filter.
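For the second point, one possible way to use several image pairs with a single shared kernel is to accumulate the normal equations over all pairs and solve once. This is a sketch under assumptions, not the method used above: it handles single-channel float images, uses interior pixels only, has no bounds, and `patch_matrix` and `fit_kernel_from_pairs` are hypothetical names. The pairs here are synthetic.

```python
import numpy as np


def patch_matrix(img, ksize=5):
    """Stack every ksize x ksize window of a 2-D image as one row."""
    windows = np.lib.stride_tricks.sliding_window_view(img, (ksize, ksize))
    return windows.reshape(-1, ksize * ksize)


def fit_kernel_from_pairs(pairs, ksize=5):
    """Solve one shared kernel from several (src, dst) pairs via normal equations."""
    n = ksize * ksize
    r = ksize // 2
    AtA = np.zeros((n, n))
    Atb = np.zeros(n)
    for src, dst in pairs:
        A = patch_matrix(src, ksize)
        b = dst[r:-r, r:-r].reshape(-1)
        AtA += A.T @ A          # accumulate without storing every patch matrix
        Atb += A.T @ b
    return np.linalg.solve(AtA, Atb).reshape(ksize, ksize)


true_kernel = np.array([[-1, -1, -1, -1, -1],
                        [-1,  2,  2,  2, -1],
                        [-1,  2,  8,  2, -1],
                        [-1,  2,  2,  2, -1],
                        [-1, -1, -1, -1, -1]], dtype=np.float64) / 8.0

rng = np.random.default_rng(1)
pairs = []
for shape in [(32, 32), (24, 40), (48, 20)]:
    src = rng.random(shape)
    dst = np.zeros_like(src)    # border left at zero; it is not used by the fit
    dst[2:-2, 2:-2] = (patch_matrix(src) @ true_kernel.reshape(-1)).reshape(
        shape[0] - 4, shape[1] - 4)
    pairs.append((src, dst))

est = fit_kernel_from_pairs(pairs)
print(np.round(est * 8, 3))
```

Memory stays at a 25 × 25 matrix regardless of how many images are added, since only `AtA` and `Atb` are kept between pairs.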