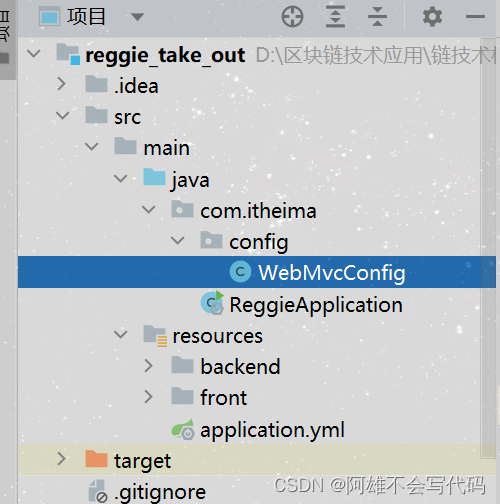

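Installing and Running Ollama

Installation and Configuration

Download Ollama from the official website, ollama.com.

Ollama installs to the C: drive by default. Once installation succeeds, the Ollama logo appears in the Windows taskbar.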

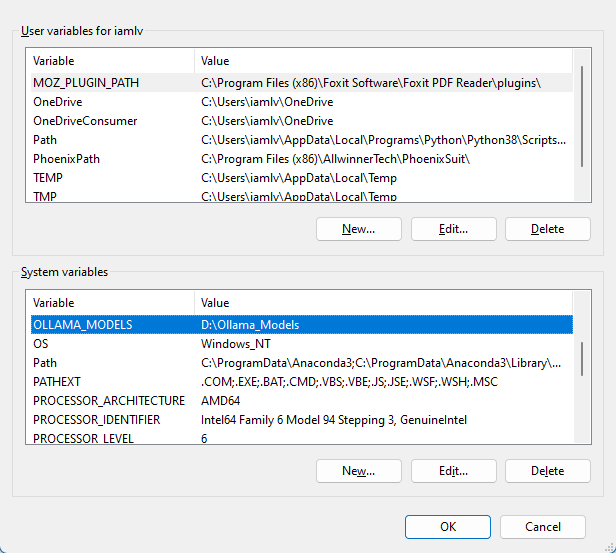

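To keep model files from taking up more space on the C: drive, change the model download location by setting the `OLLAMA_MODELS` environment variable: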
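Ollama reads its model folder from `OLLAMA_MODELS` and otherwise falls back to `.ollama\models` under the user's home folder (for example `C:\Users\<name>\.ollama\models`). The variable can be set in System Properties > Environment Variables or with `setx OLLAMA_MODELS "D:\ollama\models"`. That `D:\` path is a hypothetical example. The small Python sketch below mirrors this lookup so you can check which folder applies. It approximates the documented behavior and is not Ollama's own code. After you change the variable, quit Ollama from the taskbar icon and start it again so the new setting takes effect.

```python
import os
from pathlib import Path


def ollama_models_dir() -> Path:
    """Return the folder Ollama is expected to store models in.

    Approximates the documented lookup (not Ollama's own code):
    use OLLAMA_MODELS when it is set and non-empty, otherwise fall back
    to .ollama/models inside the current user's home folder.
    """
    custom = os.environ.get("OLLAMA_MODELS", "").strip()
    if custom:
        return Path(custom)
    return Path.home() / ".ollama" / "models"


if __name__ == "__main__":
    # Prints the folder that would be used with the current environment.
    print(ollama_models_dir())
```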

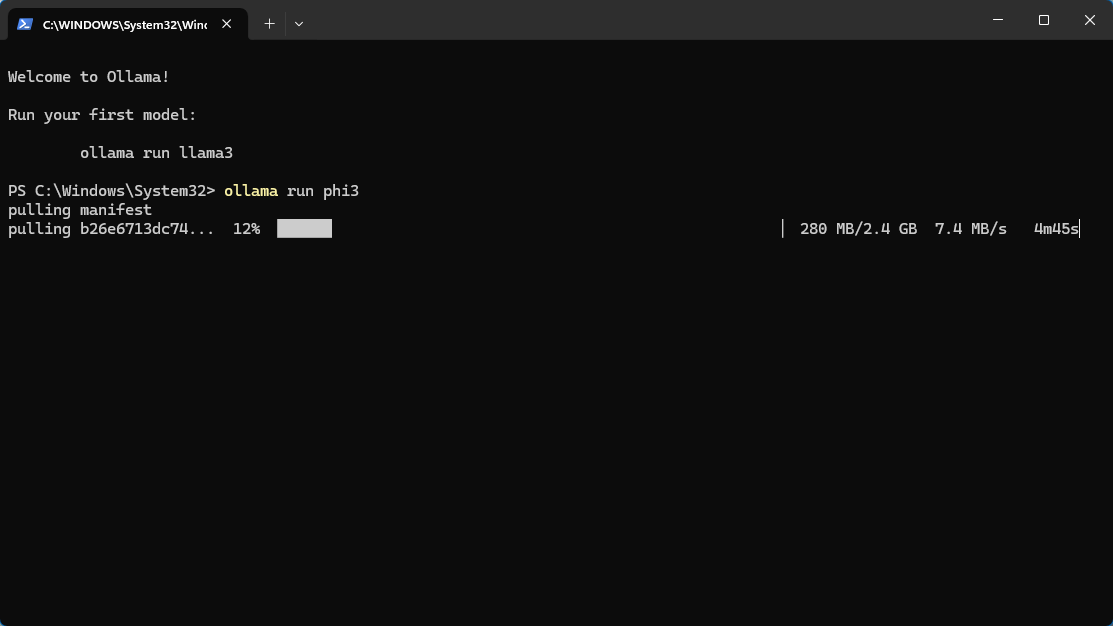

Downloading a model

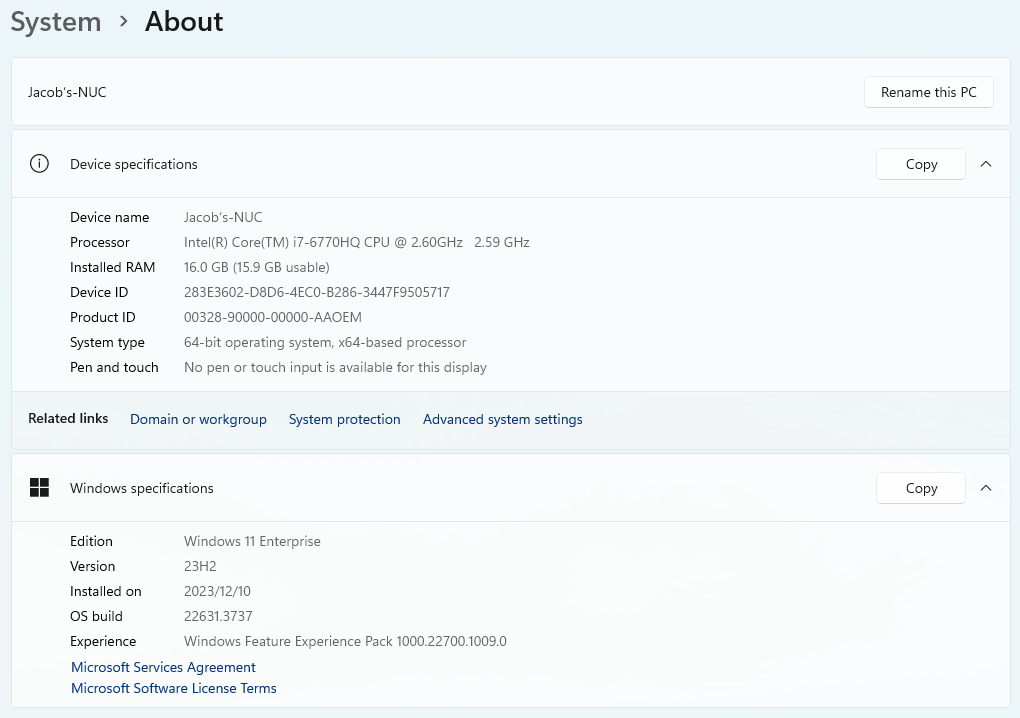

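My computer has a 6th-generation Intel CPU with integrated graphics and no discrete GPU. I therefore chose a small 3B-class model, because a larger model would respond too slowly.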
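A rough rule of thumb shows why model size matters so much on a machine without a GPU: the weights take about parameters × bits-per-weight ÷ 8 bytes. This rule is an assumption. Ollama does not report this figure. The sketch below applies the formula. Real downloads differ somewhat, because quantized formats store extra scale factors and metadata, and running a model also needs memory for the context.

```python
def approx_weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough size of a model's weights in GB (1 GB = 1e9 bytes).

    bytes = parameters * bits_per_weight / 8, so with parameters given in
    billions the result is directly in GB. Ignores quantization scales,
    file metadata and the runtime context (KV cache).
    """
    if params_billion <= 0 or bits_per_weight <= 0:
        raise ValueError("parameter count and bits per weight must be positive")
    return params_billion * bits_per_weight / 8


if __name__ == "__main__":
    # Compare a 3.8B model with an 8B model, both at 4 bits per weight.
    for name, params in [("3.8B", 3.8), ("8B", 8.0)]:
        print(name, round(approx_weights_gb(params, 4), 1), "GB")
```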

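Enter the following command in Windows PowerShell or Windows Terminal:

```
ollama run phi3
```

This uses Microsoft's Phi-3 model, the 3B-class version (Phi-3 mini, 3.8B parameters), which is about 2.4 GB.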

When the download finishes on this first run, Ollama drops you straight into an interactive prompt for the model.

To run the model again later:

```
PS C:\Users\iamlv> ollama run phi3
>>>
```

The response speed is shown in the screenshot below.
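You can measure response speed instead of judging it by eye. The final JSON object returned by Ollama's local REST API (`/api/generate` or `/api/chat`) includes `eval_count`, the number of generated tokens, and `eval_duration`, the generation time in nanoseconds. The helper below converts those two fields into tokens per second. The sample numbers are made-up illustration data, not a measurement from this machine. Recent Ollama versions can also print an "eval rate" directly with `ollama run phi3 --verbose`.

```python
def eval_rate(response: dict) -> float:
    """Tokens per second from an Ollama API response.

    Uses eval_count (generated tokens) and eval_duration (nanoseconds)
    from the final JSON object of a generate/chat call.
    """
    count = response.get("eval_count")
    duration_ns = response.get("eval_duration")
    if not count or not duration_ns:
        raise ValueError("eval_count/eval_duration missing or zero")
    return count / (duration_ns / 1e9)


if __name__ == "__main__":
    # Illustrative numbers only: 120 tokens generated in 24 seconds.
    sample = {"eval_count": 120, "eval_duration": 24_000_000_000}
    print(f"{eval_rate(sample):.1f} tokens/s")  # prints 5.0 tokens/s
```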

I also installed and ran the Llama 3 model (the 8B version):

```
PS C:\Users\iamlv> ollama run llama3
>>>
```

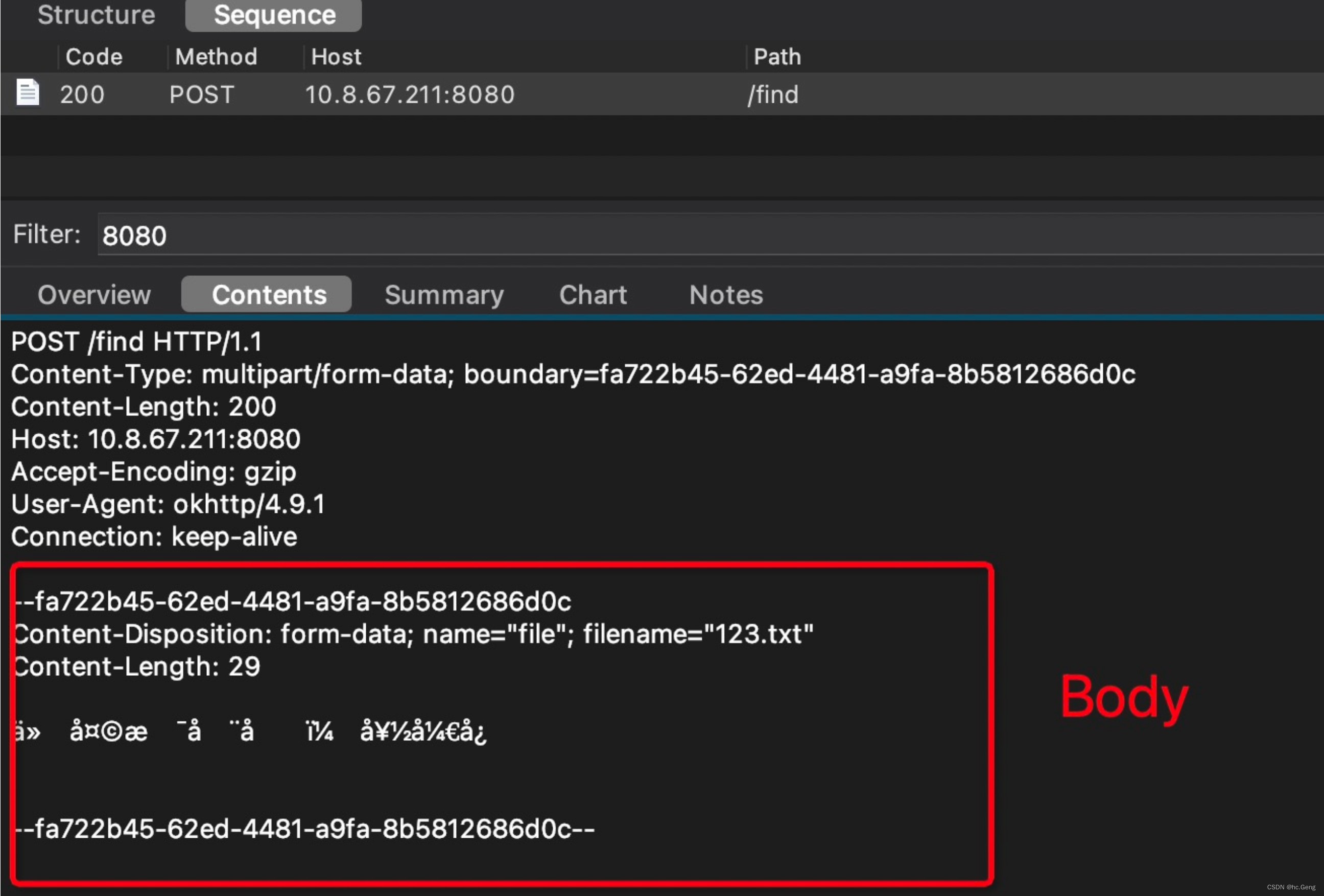
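Behind `ollama run`, a local Ollama server listens on `http://localhost:11434`, and Open WebUI connects to that same server. The code below is a minimal sketch that uses only the Python standard library. It sends one prompt to the `/api/generate` endpoint with streaming turned off and returns the generated text. It assumes the server is running on the default port and the model has already been pulled. The function names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default address of the local Ollama server


def build_generate_request(model: str, prompt: str, host: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, host: str = OLLAMA_URL, timeout: float = 300) -> str:
    """Send the prompt and return the model's full reply text."""
    req = build_generate_request(model, prompt, host)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["response"]  # the generated text
```

Usage, with the server running: `print(generate("phi3", "Explain what a large language model is in one sentence."))`. A generous timeout is worth keeping on a CPU-only machine like the one above.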

Interacting through a web page

Open WebUI provides a browser-based chat interface.

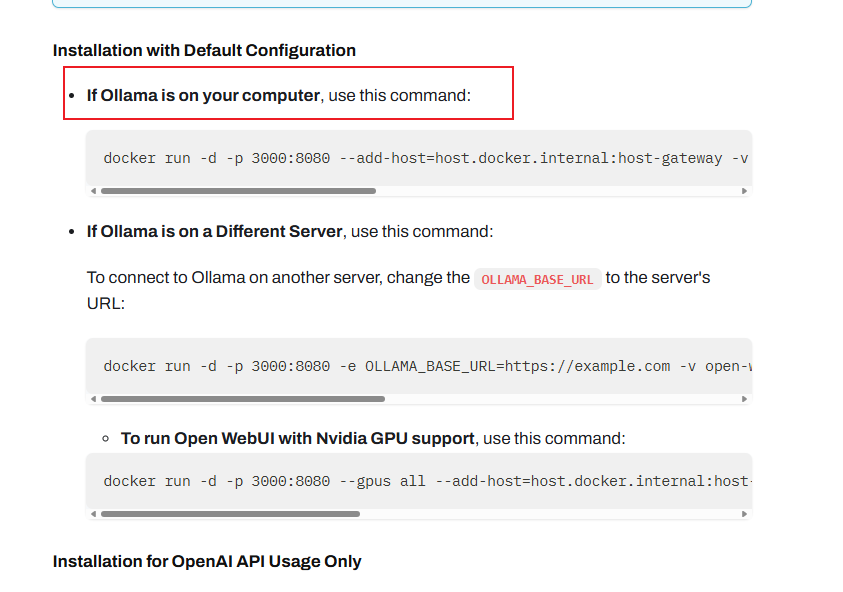

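There are several ways to install Open WebUI, all described in the guide below. Installing it as a Docker container is the recommended method.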

🚀 Getting Started | Open WebUI

Install Docker Desktop for Windows, then confirm that it works:

```
PS C:\Users\iamlv> docker version
Client:
 Cloud integration: v1.0.35+desktop.13
 Version:           26.1.1
 API version:       1.45
 Go version:        go1.21.9
 Git commit:        4cf5afa
 Built:             Tue Apr 30 11:48:43 2024
 OS/Arch:           windows/amd64
 Context:           default

Server: Docker Desktop 4.30.0 (149282)
 Engine:
  Version:          26.1.1
  API version:      1.45 (minimum version 1.24)
  Go version:       go1.21.9
  Git commit:       ac2de55
  Built:            Tue Apr 30 11:48:28 2024
  OS/Arch:          linux/amd64
  Experimental:     false
 containerd:
  Version:          1.6.31
  GitCommit:        e377cd56a71523140ca6ae87e30244719194a521
 runc:
  Version:          1.1.12
  GitCommit:        v1.1.12-0-g51d5e94
 docker-init:
  Version:          0.19.0
  GitCommit:        de40ad0
```

Next, pull and start the Open WebUI image with Docker. The command below assumes Ollama runs on this same computer. The Open WebUI guide gives different commands if Ollama runs on another server or if Open WebUI should use an Nvidia GPU.

```
PS C:\Users\iamlv> docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
Unable to find image 'ghcr.io/open-webui/open-webui:main' locally
main: Pulling from open-webui/open-webui
2cc3ae149d28: Pull complete
87c0edd565e2: Pull complete
3df7545512d5: Pull complete
8f26d42ebf67: Pull complete
8951c7adce45: Pull complete
e68b3f3b28fc: Pull complete
4f4fb700ef54: Pull complete
04910e925ba2: Pull complete
e00576178709: Pull complete
7b74341f8bd7: Pull complete
46aff8bb649e: Pull complete
d2f7110849c1: Pull complete
672fa8e030d8: Pull complete
90d4d9484fcc: Pull complete
4889567b5c13: Pull complete
4ec90e217655: Pull complete
Digest: sha256:0fa56a9d947413cba22e4029df88e8c47acc78c013f68df303619ed05e45d9cf
Status: Downloaded newer image for ghcr.io/open-webui/open-webui:main
47b3c067e2d2b254336194add3431dd151664c8d4095f3ae4f5b3b5fb7acf139
PS C:\Users\iamlv>
```
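The long `docker run` command is easier to follow when you split it into its parts. The sketch below rebuilds the same argument list in Python, with a comment for each flag, and can launch it through `subprocess`. It assumes Docker Desktop is running and `docker` is on PATH. The function names are illustrative. The port mapping explains why the interface opens at http://localhost:3000 even though Open WebUI listens on port 8080 inside the container.

```python
import subprocess
from typing import List


def open_webui_docker_args(host_port: int = 3000) -> List[str]:
    """Argument list equivalent to the docker run command shown above."""
    return [
        "docker", "run",
        "-d",                        # run detached, in the background
        "-p", f"{host_port}:8080",   # host port -> port 8080 inside the container
        # make host.docker.internal resolve to the host (where Ollama listens)
        "--add-host=host.docker.internal:host-gateway",
        # named volume so data survives removing and re-creating the container
        "-v", "open-webui:/app/backend/data",
        "--name", "open-webui",
        "--restart", "always",       # restart whenever it stops or Docker starts
        "ghcr.io/open-webui/open-webui:main",
    ]


def start_open_webui(host_port: int = 3000) -> str:
    """Run the container and return the container ID that docker prints."""
    result = subprocess.run(
        open_webui_docker_args(host_port), capture_output=True, text=True, check=True
    )
    return result.stdout.strip()
```

If a container named `open-webui` already exists, `start_open_webui()` raises `CalledProcessError` because the name is taken. In that case, `docker start open-webui` restarts the existing container.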

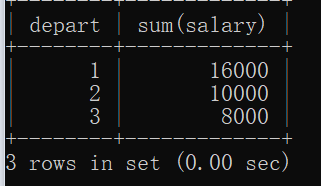

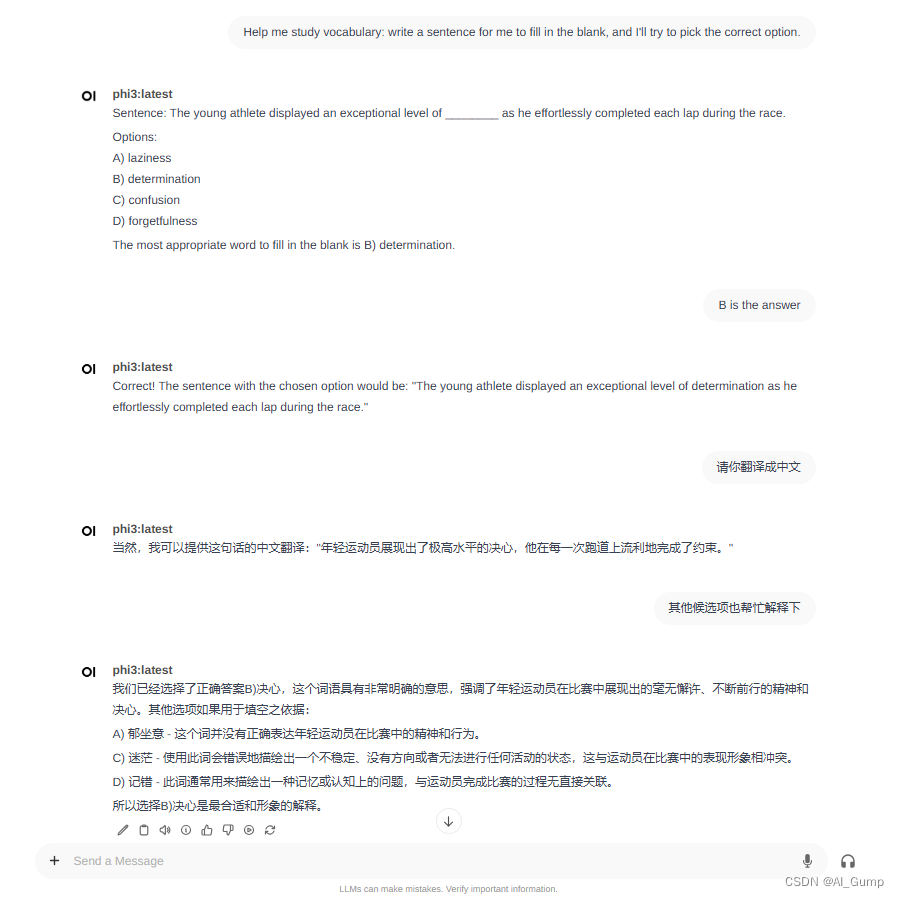

Test: an English exercise, plus a request to translate and explain it.
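To see which models Open WebUI should be able to offer, you can ask the Ollama server on the host directly. The sketch below queries Ollama's `/api/tags` endpoint, which lists locally pulled models, and returns `None` when the server cannot be reached. It assumes the default port 11434 on the host. Inside the container, the same server is addressed as `host.docker.internal`, thanks to the `--add-host` flag in the `docker run` command. The function names are illustrative.

```python
import json
import urllib.request
from typing import List, Optional


def model_names(tags: dict) -> List[str]:
    """Extract model names (e.g. "phi3:latest") from an /api/tags response body."""
    return [entry["name"] for entry in tags.get("models", [])]


def list_local_models(host: str = "http://localhost:11434", timeout: float = 5.0) -> Optional[List[str]]:
    """Return the models the Ollama server reports, or None if it cannot be reached."""
    try:
        with urllib.request.urlopen(f"{host}/api/tags", timeout=timeout) as resp:
            return model_names(json.load(resp))
    except OSError:
        # URLError, refused connections and timeouts are all OSError subclasses
        return None
```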

Appendix

```
PS C:\Users\jacob> ollama -h
Large language model runner

Usage:
  ollama [flags]
  ollama [command]

Available Commands:
  serve       Start ollama
  create      Create a model from a Modelfile
  show        Show information for a model
  run         Run a model
  pull        Pull a model from a registry
  push        Push a model to a registry
  list        List models
  ps          List running models
  cp          Copy a model
  rm          Remove a model
  help        Help about any command

Flags:
  -h, --help      help for ollama
  -v, --version   Show version information

Use "ollama [command] --help" for more information about a command.
```
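The subcommands listed in the help output can also be scripted. The code below is a minimal sketch that assumes `ollama` is on PATH. It accepts only the subcommands shown in the help text, builds the argument list, and runs it with `subprocess`, returning the captured output. The helper names are illustrative and are not part of Ollama.

```python
import subprocess
from typing import List

# Subcommands listed by `ollama -h` in the help output
KNOWN_COMMANDS = {"serve", "create", "show", "run", "pull", "push", "list", "ps", "cp", "rm", "help"}


def ollama_argv(command: str, *args: str) -> List[str]:
    """Build an ollama command line, rejecting subcommands not in the help text."""
    if command not in KNOWN_COMMANDS:
        raise ValueError(f"unknown ollama command: {command!r}")
    return ["ollama", command, *args]


def ollama(command: str, *args: str, timeout: float = 600) -> str:
    """Run an ollama subcommand and return its standard output."""
    result = subprocess.run(
        ollama_argv(command, *args), capture_output=True, text=True, check=True, timeout=timeout
    )
    return result.stdout
```

Some example calls:

- `print(ollama("list"))` shows the pulled models.
- `ollama("pull", "llama3")` downloads a model.
- `ollama("rm", "phi3")` deletes a model to free disk space.

`serve` does not return until the server stops, and `run` without a prompt argument is interactive, so start both of those directly in a terminal instead.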