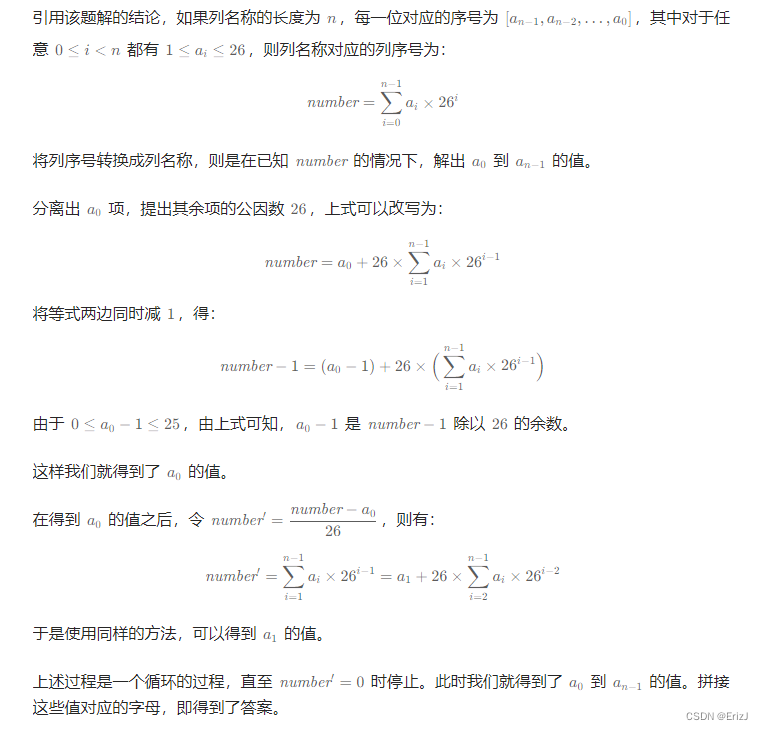

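The experiments use turbofan engine degradation data simulated with NASA's Commercial Modular Aero-Propulsion System Simulation (C-MAPSS). The data include parameters of components such as the fan, turbine, and compressor. The C-MAPSS datasets cover altitudes from sea level to 42,000 ft, speeds from Mach 0 to 0.9, and throttle lever angles from 60 to 100. In each simulated run, a specified fault is introduced starting at some point in time and persists for the remaining cycles, so the moment the fault appears is known. For this reason the dataset is widely used as a benchmark for predicting the remaining useful life (RUL) of turbofan engines.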

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn import linear_model

from sklearn.metrics import mean_squared_error, r2_score,mean_absolute_percentage_error

from sklearn.model_selection import cross_val_score

from sklearn.ensemble import RandomForestRegressor

from sklearn.neighbors import KNeighborsRegressor

from sklearn.tree import DecisionTreeRegressor

from sklearn.ensemble import GradientBoostingRegressor

import mplcyberpunk as cyberpunk

Importing Data

index_names = ['id', 'cycle']

setting_names = ['setting_1', 'setting_2', 'setting_3']

sensor_list=[ "(Fan inlet temperature) (◦R)",

"(LPC outlet temperature) (◦R)",

"(HPC outlet temperature) (◦R)",

"(LPT outlet temperature) (◦R)",

"(Fan inlet Pressure) (psia)",

"(bypass-duct pressure) (psia)",

"(HPC outlet pressure) (psia)",

"(Physical fan speed) (rpm)",

"(Physical core speed) (rpm)",

"(Engine pressure ratio(P50/P2)",

"(HPC outlet Static pressure) (psia)",

"(Ratio of fuel flow to Ps30) (pps/psia)",

"(Corrected fan speed) (rpm)",

"(Corrected core speed) (rpm)",

"(Bypass Ratio) ",

"(Burner fuel-air ratio)",

"(Bleed Enthalpy)",

"(Required fan speed)",

"(Required fan conversion speed)",

"(High-pressure turbines Cool air flow)",

"(Low-pressure turbines Cool air flow)" ]

columns = index_names + setting_names + sensor_list

#print(len(columns))

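# The raw files are whitespace-separated with no header row; a raw string avoids the invalid "\s" escape warning
train = pd.read_csv('train_FD001.txt', sep=r'\s+', header=None, names=columns)

test = pd.read_csv('test_FD001.txt', sep=r'\s+', header=None, index_col=False, names=columns)

test_res = pd.read_csv('RUL_FD001.txt', sep=r'\s+', header=None)

Data Cleaning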

df_train=train.copy()

df_test=test.copy()

print("Shape of dataset",df_train.shape)

print("Null values in dataset",df_train.isnull().sum())

Shape of dataset (20631, 26)

Null values in dataset id 0

cycle 0

setting_1 0

setting_2 0

setting_3 0

(Fan inlet temperature) (◦R) 0

(LPC outlet temperature) (◦R) 0

(HPC outlet temperature) (◦R) 0

(LPT outlet temperature) (◦R) 0

(Fan inlet Pressure) (psia) 0

(bypass-duct pressure) (psia) 0

(HPC outlet pressure) (psia) 0

(Physical fan speed) (rpm) 0

(Physical core speed) (rpm) 0

(Engine pressure ratio(P50/P2) 0

(HPC outlet Static pressure) (psia) 0

(Ratio of fuel flow to Ps30) (pps/psia) 0

(Corrected fan speed) (rpm) 0

(Corrected core speed) (rpm) 0

(Bypass Ratio) 0

(Burner fuel-air ratio) 0

(Bleed Enthalpy) 0

(Required fan speed) 0

(Required fan conversion speed) 0

(High-pressure turbines Cool air flow) 0

(Low-pressure turbines Cool air flow) 0

dtype: int64

x = df_train[index_names].groupby('id').max()

#print(x)

plt.figure(figsize=(20,70))

ax= x['cycle'].plot(kind='barh',width=0.8)

plt.title('Engines LifeTime',size=40)

plt.xlabel('Cycles',size=30)

plt.xticks(size=15)

plt.ylabel('Engine_ID',size=30)

plt.yticks(size=15)

plt.grid(True)

plt.tight_layout()

plt.show()

sns.set_theme(style="whitegrid")

sns.displot(x['cycle'], kde=True, bins=20, height=6, aspect=2, color='blue')

plt.gca().lines[0].set_color('lime')

cyberpunk.make_lines_glow()

plt.xlabel('Max Cycle')

plt.show()

Data Filtering and Treatment

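n = len(df_train)

# Last recorded cycle of each engine (engine IDs run 1..100, so engine id is stored at index id-1)
y = list(df_train.groupby(['id'])['cycle'].max())

for i in range(len(y)):
    y[i] = int(y[i])

z = list(df_train['cycle'])

for i in range(n):
    z[i] = int(z[i])

# RUL of each row = last cycle of its engine - current cycle
ans = [0]*(n)

for i in range(n):
    ans[i] = y[df_train.iloc[i,0]-1] - z[i]

df_train['RUL'] = ans

train['RUL'] = ans

train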
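The loop above depends on engine IDs being numbered contiguously from 1, because it indexes `y` with `id - 1`. The following is a minimal vectorized sketch that assumes a DataFrame with the same `id` and `cycle` columns. It computes the same label with `groupby(...).transform("max")`. The helper name `add_rul` is hypothetical.

```python
import pandas as pd

def add_rul(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df with an RUL column: last cycle of the engine minus current cycle."""
    out = df.copy()
    # Maximum cycle of each row's own engine, aligned back to the original row index
    max_cycle = out.groupby("id")["cycle"].transform("max")
    out["RUL"] = max_cycle - out["cycle"]
    return out

# Tiny synthetic example: engine 1 runs 3 cycles, engine 2 runs 2 cycles
demo = pd.DataFrame({"id": [1, 1, 1, 2, 2], "cycle": [1, 2, 3, 1, 2]})
print(add_rul(demo)["RUL"].tolist())  # [2, 1, 0, 1, 0]
```

`transform` does not use the ID values as positions, so this version also works when the IDs are not numbered 1..N.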

# Collect columns whose correlation with RUL is (almost) zero or undefined
rem_col = list()

for i in range(df_train.shape[1]-1):   # the last column is RUL itself
    cor = df_train["RUL"].corr(df_train.iloc[:,i])
    if -0.001<=cor<=0.001 or np.isnan(cor):
        rem_col.append(df_train.columns[i])

print(rem_col)

['setting_3', '(Fan inlet temperature) (◦R)', '(Fan inlet Pressure) (psia)', '(Engine pressure ratio(P50/P2)', '(Burner fuel-air ratio)', '(Required fan speed)', '(Required fan conversion speed)']

plt.figure(figsize=(18,18))

sns.set_style("whitegrid", {"axes.facecolor": ".0"})

df_cluster2 = df_train.corr()

plot_kws={"s": 1}

sns.heatmap(train.corr(),

cmap='RdYlBu',

annot=True,

linecolor='lightgrey').set_facecolor('white')

Since the correlation of these columns with RUL is almost zero (or undefined, for constant columns), we remove them from the dataset to obtain a better training model.
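The same near-zero-correlation filter can be written without an explicit loop. The sketch below uses `DataFrame.corrwith` and assumes the target column is named `RUL`. It applies the same ±0.001 threshold as the loop above. `low_corr_columns` is a hypothetical helper name.

```python
import pandas as pd

def low_corr_columns(df: pd.DataFrame, target: str = "RUL", tol: float = 0.001) -> list:
    """Columns whose Pearson correlation with `target` is within ±tol or undefined (NaN)."""
    features = df.drop(columns=[target])
    corr = features.corrwith(df[target])
    # Constant columns have zero variance, so their correlation is NaN and they are flagged too
    mask = corr.isna() | (corr.abs() <= tol)
    return corr[mask].index.tolist()

demo = pd.DataFrame({
    "const": [5.0, 5.0, 5.0, 5.0],    # constant -> NaN correlation
    "signal": [4.0, 3.0, 2.0, 1.0],   # perfectly correlated with RUL
    "RUL": [3.0, 2.0, 1.0, 0.0],
})
print(low_corr_columns(demo))  # ['const']
```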

df_train = df_train.drop(columns=rem_col)

df_train

Heatmap after removing unwanted columns

plt.figure(figsize=(18,18))

sns.set_style("whitegrid", {"axes.facecolor": ".0"})

df_cluster2 = df_train.corr()

plot_kws={"s": 1}

sns.heatmap(df_train.corr(),

cmap='RdYlBu',

annot=True,

linecolor='lightgrey').set_facecolor('white')

Heatmap of the dataset showing only correlations whose absolute value is at least 0.9.

threshold = 0.90

plt.figure(figsize=(10,10))

sns.set_style("whitegrid", {"axes.facecolor": ".0"})

df_cluster2 = df_train.corr()

mask = df_cluster2.where((abs(df_cluster2) >= threshold)).isna()

plot_kws={"s": 1}

sns.heatmap(df_cluster2,

cmap='RdYlBu',

annot=True,

mask=mask,

linewidths=0.2,

linecolor='lightgrey').set_facecolor('white')

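# For every pair of features with |correlation| >= 0.9, mark the later column for removal
rem_col_new = []

features = df_train.columns.drop('RUL')   # the target itself is not a removal candidate

for i in range(len(features)):
    for j in range(i+1, len(features)):
        corr = df_train[features[i]].corr(df_train[features[j]])
        if abs(corr)>=0.9 and features[j] not in rem_col_new:
            rem_col_new.append(features[j])

rem_col_new

df_train = df_train.drop(columns=rem_col_new)

df_train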
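Another common vectorized form of the pairwise check keeps only the upper triangle of the absolute correlation matrix. Each pair is then examined once, and each column is listed at most once. This is a sketch that assumes the target column is named `RUL` and excludes it. `high_corr_columns` is a hypothetical name.

```python
import numpy as np
import pandas as pd

def high_corr_columns(df: pd.DataFrame, target: str = "RUL", threshold: float = 0.9) -> list:
    """Later column of each feature pair whose |Pearson r| >= threshold."""
    corr = df.drop(columns=[target]).corr().abs()
    # Keep only entries strictly above the diagonal (pairs i < j); the rest become NaN
    upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    return [col for col in upper.columns if (upper[col] >= threshold).any()]

demo = pd.DataFrame({
    "a": [1.0, 2.0, 3.0, 4.0],
    "b": [2.0, 4.0, 6.0, 8.1],     # almost a scaled copy of a
    "c": [1.0, -1.0, 1.0, -1.0],
    "RUL": [3.0, 2.0, 1.0, 0.0],
})
print(high_corr_columns(demo))  # ['b']
```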

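Columns that take exactly two distinct values are also removed from the dataset. In the code, x1 and x2 are the shares of rows taking each of the two values, and the column is dropped if x1/x2 > 0.95 or x2/x1 > 0.95. This test is satisfied by every two-valued column, because the larger share divided by the smaller one is always at least 1.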

uniq = list(df_train.nunique())

rem_column = []

for i in range(len(uniq)):
    if uniq[i]==2:
        x_un = df_train.iloc[:,i].unique()
        # Share of rows taking each of the two values
        x1 = ((df_train[df_train.columns[i]]==x_un[0]).sum())/df_train.shape[0]
        x2 = ((df_train[df_train.columns[i]]==x_un[1]).sum())/df_train.shape[0]
        if x1/x2>0.95 or x2/x1>0.95:
            rem_column.append(df_train.columns[i])

rem_column

df_train = df_train.drop(columns=rem_column)

df_train
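If the intent is to drop *quasi-constant* columns, a common alternative is to check the share of the most frequent value. The sketch below assumes a 0.95 share threshold. It is not the filter used for the results reported below. `quasi_constant_columns` is a hypothetical helper.

```python
import pandas as pd

def quasi_constant_columns(df: pd.DataFrame, share: float = 0.95) -> list:
    """Columns whose most frequent value covers at least `share` of the rows."""
    # value_counts sorts by frequency, so iloc[0] is the share of the dominant value
    dominant = df.apply(lambda s: s.value_counts(normalize=True, dropna=False).iloc[0])
    return dominant[dominant >= share].index.tolist()

demo = pd.DataFrame({
    "mostly_one": [1] * 49 + [2],   # 98% of rows share one value
    "balanced": [0, 1] * 25,        # two values, 50/50
    "varied": list(range(50)),
})
print(quasi_constant_columns(demo))  # ['mostly_one']
```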

from sklearn.metrics import mean_squared_error, r2_score

def error(test_res, y_pred):
    """Print MSE, RMSE and R2 of the per-engine predictions and return [R2, RMSE]."""
    mse = mean_squared_error(test_res, y_pred)
    rmse = np.sqrt(mse)
    r2 = r2_score(test_res, y_pred)
    print(f"Mean squared error: {mse}")
    print(f"Root mean squared error: {rmse}")
    print(f"R-squared score: {r2}")
    return [r2,rmse]

# Scores collected for every model: R2, RMSE and a short method label
r_2_score = []

rmse = []

Method = []

Linear Regression without dropping features/columns.

drop_col = ['RUL']

X_train=train.drop(columns=drop_col).copy()

Y_train = df_train["RUL"]

reg = linear_model.LinearRegression()

reg.fit(X_train, Y_train)

LinearRegression()

X_test=test.copy()

ans = reg.predict(X_test)

df_test["Pred_RUL_LR_wrf"] = ans

rem = ["Pred_RUL_LR_wrf"]

# For each engine, the smallest predicted RUL over its cycles is used as the prediction
y_pred_LR_wrf = list(df_test.groupby(['id'])['Pred_RUL_LR_wrf'].min())

error_LR = error(test_res, y_pred_LR_wrf)

r_2_score.append(error_LR[0])

rmse.append(error_LR[1])

Method.append("LR_wrf")

Mean squared error: 894.8305578921604

Root mean squared error: 29.91371855674517

R-squared score: 0.48181926539666886
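The RUL file gives one target per test engine, measured at that engine's last recorded cycle. The code above approximates this target with the minimum prediction over all of the engine's cycles. An alternative is to take the prediction at the last cycle itself. The sketch below does this on a hypothetical frame with `id`, `cycle`, and a prediction column. `last_cycle_prediction` is a hypothetical name, and this approach was not used for the scores reported here.

```python
import pandas as pd

def last_cycle_prediction(df: pd.DataFrame, pred_col: str) -> list:
    """Prediction at each engine's highest cycle, ordered by engine id."""
    last_rows = df.sort_values(["id", "cycle"]).groupby("id").tail(1)
    return last_rows.sort_values("id")[pred_col].tolist()

demo = pd.DataFrame({
    "id": [1, 1, 1, 2, 2],
    "cycle": [1, 2, 3, 1, 2],
    "pred": [50.0, 40.0, 45.0, 30.0, 25.0],
})
print(last_cycle_prediction(demo, "pred"))        # [45.0, 25.0]
print(demo.groupby("id")["pred"].min().tolist())  # [40.0, 25.0]
```

The two rules agree only when predictions decrease monotonically over an engine's cycles, as they do for engine 2 in the demo.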

Applying Linear Regression after removing features.

x_train=df_train.drop(columns=['RUL']).copy()

y_train = df_train["RUL"]

reg = linear_model.LinearRegression()

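reg.fit(x_train, y_train)

# Test features: drop the earlier prediction column and every column removed from the training data
y_test = df_test.drop(columns = rem+rem_col+rem_col_new+rem_column).copy()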

lin_pre = reg.predict(y_test)

df_test["Pred_RUL_LR_arf"] = lin_pre

y_pred_LR_arf = list(df_test.groupby(['id'])['Pred_RUL_LR_arf'].min())

f = error(test_res, y_pred_LR_arf)

r_2_score.append(f[0])

rmse.append(f[1])

Method.append("LR_arf")

rem.append("Pred_RUL_LR_arf")

Mean squared error: 890.8276472071307

Root mean squared error: 29.846735955664073

R-squared score: 0.48413728100423403

Random Forest Regression before removing any features.

rf = RandomForestRegressor(max_features = "log2")

rf.fit(X_train, Y_train)

ans = rf.predict(X_test)

df_test["Pred_RUL_RF_wrf"] = ans

rem.append("Pred_RUL_RF_wrf")

y_pred_RF_wrf = list(df_test.groupby(['id'])['Pred_RUL_RF_wrf'].min())

f = error(test_res, y_pred_RF_wrf)

r_2_score.append(f[0])

rmse.append(f[1])

Method.append("RF_wrf")

Mean squared error: 576.1797359999999

Root mean squared error: 24.00374420793556

R-squared score: 0.6663443863972125

Applying Random Forest Regression after removing features

rf = RandomForestRegressor(max_features = "log2")

rf.fit(x_train, y_train)

pre_rf = rf.predict(y_test)

df_test["Pred_RUL_RF_arf"] = pre_rf

y_pred_RF_arf = list(df_test.groupby(['id'])['Pred_RUL_RF_arf'].min())

f = error(test_res, y_pred_RF_arf)

r_2_score.append(f[0])

rmse.append(f[1])

Method.append("RF_arf")

rem.append("Pred_RUL_RF_arf")

Mean squared error: 651.5577000000001

Root mean squared error: 25.525628297849988

R-squared score: 0.6226943250376287
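`cross_val_score` is imported at the top but never used. One way to use it here relies on scikit-learn's `GroupKFold`, which keeps all cycles of an engine in the same fold. This prevents near-duplicate rows from the same engine from inflating the validation score. The sketch below runs on synthetic data, and the engine counts and model parameters are illustrative assumptions.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GroupKFold, cross_val_score

rng = np.random.default_rng(0)
n_engines, cycles = 10, 30
groups = np.repeat(np.arange(n_engines), cycles)          # engine id of each row
cycle = np.tile(np.arange(cycles), n_engines)             # cycle counter within each engine
rul = (cycles - 1 - cycle).astype(float)                  # label: cycles remaining
X = np.column_stack([cycle, rng.normal(size=groups.size)])  # one informative and one noise feature

model = RandomForestRegressor(n_estimators=50, random_state=0)
# Each fold holds out whole engines, never individual cycles of a training engine
scores = cross_val_score(model, X, rul, groups=groups, cv=GroupKFold(n_splits=5), scoring="r2")
print(scores.round(3))
```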

KNN before removing features

model=KNeighborsRegressor(n_neighbors = 24)

model.fit(X_train, Y_train)

ans = model.predict(X_test)

df_test["Pred_RUL_KNN_wrf"] = ans

rem.append("Pred_RUL_KNN_wrf")

y_pred_KNN_wrf = list(df_test.groupby(['id'])['Pred_RUL_KNN_wrf'].min())

f = error(test_res, y_pred_KNN_wrf)

r_2_score.append(f[0])

rmse.append(f[1])

Method.append("KNN_wrf")

Mean squared error: 969.0081597222222

Root mean squared error: 31.128895896292597

R-squared score: 0.4388643127875884

KNN after removing features

model = KNeighborsRegressor(n_neighbors = 78)

model.fit(x_train, y_train)

pre_KNN = model.predict(y_test)

df_test["Pred_RUL_KNN_arf"] = pre_KNN

y_pred_KNN_arf = list(df_test.groupby(['id'])['Pred_RUL_KNN_arf'].min())

f = error(test_res, y_pred_KNN_arf)

r_2_score.append(f[0])

rmse.append(f[1])

Method.append("KNN_arf")

rem.append("Pred_RUL_KNN_arf")

Mean squared error: 940.5797731755424

Root mean squared error: 30.66887303399886

R-squared score: 0.45532669451385177
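KNN relies on Euclidean distances, so features with large numeric ranges (such as speeds in rpm) dominate features with small ranges. The sketch below scales the features inside a scikit-learn pipeline, assuming `StandardScaler` is an acceptable preprocessing choice. It runs on synthetic data, and scaling was not used for the KNN scores above.

```python
import numpy as np
from sklearn.neighbors import KNeighborsRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(1)
informative = rng.uniform(0, 1, size=200)      # small-range feature that drives the target
nuisance = rng.uniform(0, 10_000, size=200)    # large-range feature unrelated to the target
X = np.column_stack([informative, nuisance])
y = 100 * informative

# Without scaling, neighbours are chosen almost entirely by the nuisance feature
raw = KNeighborsRegressor(n_neighbors=5).fit(X[:150], y[:150])
# With scaling, both features contribute on the same scale to the distance
scaled = make_pipeline(StandardScaler(), KNeighborsRegressor(n_neighbors=5)).fit(X[:150], y[:150])

print("R2 without scaling:", round(raw.score(X[150:], y[150:]), 3))
print("R2 with scaling:   ", round(scaled.score(X[150:], y[150:]), 3))
```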

Gradient Boosting Regression before removing features

model=GradientBoostingRegressor(loss = "absolute_error",criterion = "squared_error",max_features = "sqrt")

model.fit(X_train, Y_train)

ans = model.predict(X_test)

df_test["Pred_RUL_GB_wrf"] = ans

rem.append("Pred_RUL_GB_wrf")

y_pred_GB_wrf = list(df_test.groupby(['id'])['Pred_RUL_GB_wrf'].min())

f = error(test_res, y_pred_GB_wrf)

r_2_score.append(f[0])

rmse.append(f[1])

Method.append("GB_wrf")

Mean squared error: 549.1271065841099

Root mean squared error: 23.433461259150555

R-squared score: 0.6820100912170148

Gradient Boosting Regression after removing features

model=GradientBoostingRegressor(loss = "absolute_error",criterion = "squared_error",max_features = "log2")

model.fit(x_train, y_train)

pre_GB = model.predict(y_test)

df_test["Pred_RUL_GBR_arf"] = pre_GB

y_pred_GB_arf = list(df_test.groupby(['id'])['Pred_RUL_GBR_arf'].min())

f = error(test_res, y_pred_GB_arf)

r_2_score.append(f[0])

rmse.append(f[1])

Method.append("GBR_arf")

rem.append("Pred_RUL_GBR_arf")

Mean squared error: 571.646562441639

Root mean squared error: 23.909131361085436

R-squared score: 0.6689694679658271

Deciding the final regression method for prediction

import numpy as np

import matplotlib.pyplot as plt

method = ["LR","RF","KNN","GB"]

X_axis = np.arange(len(method))

r2_score_wr = r_2_score[::2]

r2_score_r = r_2_score[1::2]

plt.figure(figsize = (11,8))

plt.bar(X_axis - 0.1, r2_score_wr, 0.2, label='Without removing features')

plt.bar(X_axis + 0.1, r2_score_r, 0.2, label='After removing features')

plt.xticks(X_axis, method)

plt.xlabel("Methods")

plt.ylabel("R2-score")

plt.title("R2-score of applied method")

plt.legend(facecolor='white')

for i in range(len(X_axis)):

plt.text(X_axis[i] - 0.1, r2_score_wr[i] + 0.01, "{:.2f}".format(r2_score_wr[i]), ha='center', va='bottom')

plt.text(X_axis[i] + 0.1, r2_score_r[i] + 0.01, "{:.2f}".format(r2_score_r[i]), ha='center', va='bottom')

plt.gca().set_facecolor('white')

plt.show()

maxi = r_2_score.index(max(r_2_score))

print("Maximum R2-score",max(r_2_score))

print("Best Regression method for this dataset is:",Method[maxi])

# Engine IDs 1..N for the x-axis
x = list(range(1, len(test_res)+1))

import matplotlib.pyplot as plt

plt.figure(figsize = (16,8))

plt.xlabel('Engine ID')

plt.ylabel('RUL')

plt.plot(x[30:40], test_res.iloc[30:40,0], label='Actual RUL',linestyle='dotted',marker='o')

plt.plot(x[30:40], y_pred_LR_wrf[30:40], label='Linear Regression',marker='o')

plt.plot(x[30:40], y_pred_RF_wrf[30:40], label='Random Forest',marker='o')

plt.plot(x[30:40], y_pred_KNN_wrf[30:40], label='KNN',marker='o')

plt.plot(x[30:40], y_pred_GB_wrf[30:40], label='Gradient Boosting',marker='o')

plt.legend(facecolor='white')

plt.gca().set_facecolor('white')

plt.show()
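The three parallel lists `Method`, `r_2_score`, and `rmse` can also be collected into a single table sorted by R², which is easier to read than the printed logs. The sketch below fills the lists with the scores printed above, rounded to four decimals. Random forest scores will vary between runs because no `random_state` was set.

```python
import pandas as pd

Method = ["LR_wrf", "LR_arf", "RF_wrf", "RF_arf", "KNN_wrf", "KNN_arf", "GB_wrf", "GBR_arf"]
r_2_score = [0.4818, 0.4841, 0.6663, 0.6227, 0.4389, 0.4553, 0.6820, 0.6690]
rmse = [29.9137, 29.8467, 24.0037, 25.5256, 31.1289, 30.6689, 23.4335, 23.9091]

# One row per model, best R2 first
summary = (
    pd.DataFrame({"Method": Method, "R2": r_2_score, "RMSE": rmse})
    .sort_values("R2", ascending=False)
    .reset_index(drop=True)
)
print(summary)
print("Best method:", summary.loc[0, "Method"])  # GB_wrf
```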

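Ph.D. in Engineering; reviewer for journals including Mechanical Systems and Signal Processing, Proceedings of the CSEE, and Control and Decision. Areas of expertise: modern signal processing, machine learning, deep learning, digital twins, time-series analysis, equipment defect detection, equipment anomaly detection, and intelligent fault diagnosis and prognostics and health management (PHM) of equipment.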