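After installing Ultralytics, you can read a camera stream locally and run a YOLO model on it in real time. In most cases, though, shipping a local application is costly: it has to be compiled, packaged, and so on. It is much more convenient if the browser can show the video directly and display the detected object types in real time. This article uses plain JavaScript plus a backend YOLO detection service to display the camera feed in the browser and label detected objects in real time.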

Backend service

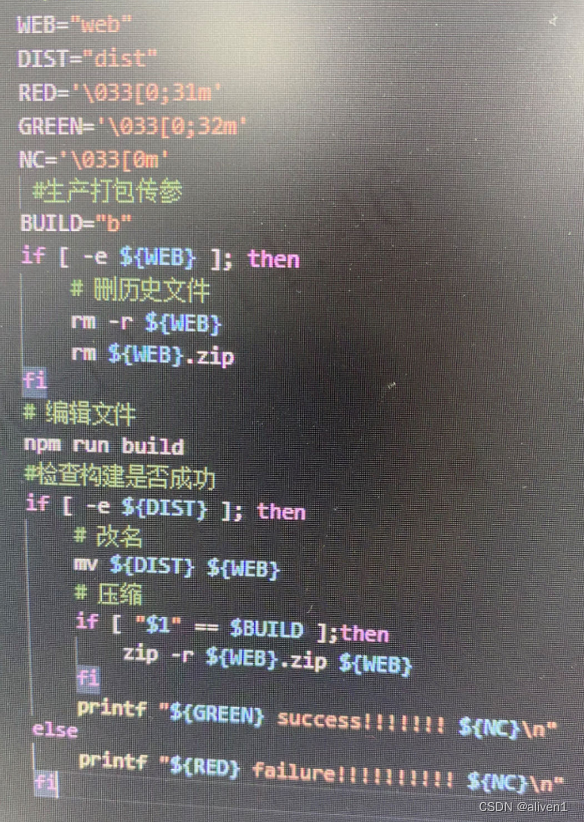

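The backend uses the Python Flask framework to expose image detection as a REST API. Before development, set up the Ultralytics environment first: we use the official Docker image as the base image and install the required dependencies on top of it.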

Dockerfile

    # Use the official ultralytics/ultralytics image as the base
    FROM ultralytics/ultralytics:latest

    # Update package lists and install vim
    RUN apt-get update && apt-get install -y vim

    # Install Flask and flask-cors using pip
    RUN pip install flask flask-cors

    # Set the working directory
    WORKDIR /app

    # Copy the current directory contents into the container at /app
    COPY . /app

    # Expose port 5000 for Flask
    EXPOSE 5000

    # Command to run the Flask application
    CMD ["python3", "App.py"]

App.py

The backend REST API, /detect, parses a base64-encoded image and returns the class and position of each detected object.

    import base64

    import cv2
    import numpy as np
    from flask import Flask, request, jsonify
    from flask_cors import CORS
    from ultralytics import YOLO

    # Initialize Flask app and allow cross-origin requests from the browser page
    app = Flask(__name__)
    CORS(app)

    # Load YOLOv8 model
    model = YOLO('yolov8n.pt')  # You can change 'yolov8n.pt' to other versions like 'yolov8m.pt' or 'yolov8x.pt'


    # Function to perform object detection
    def detect_objects(image):
        results = model(image)
        detections = []
        for result in results:
            for box in result.boxes:
                # box.xyxy has shape (1, 4); take the first row before converting
                x1, y1, x2, y2 = (int(v) for v in box.xyxy[0].tolist())
                class_id = int(box.cls[0])
                confidence = float(box.conf[0])
                detections.append({
                    'class_id': class_id,
                    'label': model.names[class_id],
                    'confidence': confidence,
                    'bbox': [x1, y1, x2, y2]
                })
        return detections


    # Route for object detection
    @app.route('/detect', methods=['POST'])
    def detect():
        # The frontend posts JSON of the form {"image": "<base64 JPEG>"}
        data = request.get_json(silent=True)
        if not data or 'image' not in data:
            return jsonify({'error': 'No image provided'}), 400

        # Decode the base64 string into an OpenCV image
        try:
            raw = base64.b64decode(data['image'])
        except (ValueError, TypeError):
            return jsonify({'error': 'Invalid base64 image data'}), 400
        image = cv2.imdecode(np.frombuffer(raw, np.uint8), cv2.IMREAD_COLOR)
        if image is None:
            return jsonify({'error': 'Could not decode image'}), 400

        # Perform detection
        detections = detect_objects(image)
        return jsonify(detections)


    # Run Flask app
    if __name__ == '__main__':
        app.run(host='0.0.0.0', port=5000)
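To try the endpoint without the browser, a small client can post a single frame in the same JSON shape the frontend sends, assuming the endpoint accepts a body of the form `{"image": "<base64>"}`. The following is a minimal sketch using only the Python standard library. The helper names `build_payload` and `post_image`, the URL `http://localhost:5000/detect`, and the file name `test.jpg` are assumptions for illustration and not part of the original service.

```python
import base64
import json
import urllib.request


def build_payload(image_bytes: bytes) -> bytes:
    """Encode raw image bytes as the JSON body {"image": "<base64>"}."""
    encoded = base64.b64encode(image_bytes).decode('ascii')
    return json.dumps({'image': encoded}).encode('utf-8')


def post_image(url: str, image_bytes: bytes, timeout: float = 10.0):
    """POST one image to the detection endpoint and return the parsed JSON reply."""
    request = urllib.request.Request(
        url,
        data=build_payload(image_bytes),
        headers={'Content-Type': 'application/json'},
        method='POST',
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode('utf-8'))


# Example usage (hypothetical URL and file name):
#   with open('test.jpg', 'rb') as f:
#       for det in post_image('http://localhost:5000/detect', f.read()):
#           print(det['label'], det['confidence'], det['bbox'])
```

Checking the service this way first separates backend problems (model loading, image decoding) from browser-side ones such as camera permissions or cross-origin errors.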

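Frontend page

The page shows the camera feed, sends frames to the backend for detection in real time, and draws the returned bounding boxes on a canvas.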

    <!DOCTYPE html>
    <html lang="en">
    <head>
      <meta charset="UTF-8">
      <meta name="viewport" content="width=device-width, initial-scale=1.0">
      <title>Real-Time Object Detection with YOLO</title>
      <style>
        body {
          display: flex;
          justify-content: center;
          align-items: center;
          height: 100vh;
          margin: 0;
          background-color: #f0f0f0;
          overflow: hidden;
        }
        #camera, #canvas {
          position: absolute;
          width: 50%;
          height: 50%;
          object-fit: cover;
        }
        #camera {
          z-index: 1;
        }
        #canvas {
          z-index: 2;
        }
      </style>
    </head>
    <body>
      <video id="camera" autoplay playsinline></video>
      <canvas id="canvas"></canvas>
      <script>
        // One color per class, assigned the first time the class is seen
        const classColors = {};

        function getRandomColor() {
          const letters = '0123456789ABCDEF';
          let color = '#';
          for (let i = 0; i < 6; i++) {
            color += letters[Math.floor(Math.random() * 16)];
          }
          return color;
        }

        function isMobileDevice() {
          return /Mobi|Android/i.test(navigator.userAgent);
        }

        async function setupCamera() {
          const video = document.getElementById('camera');
          const facingMode = isMobileDevice() ? 'environment' : 'user'; // Use back camera for mobile, front camera for PC
          try {
            const stream = await navigator.mediaDevices.getUserMedia({
              video: {
                facingMode: { ideal: facingMode }
              }
            });
            video.srcObject = stream;
            return new Promise((resolve) => {
              video.onloadedmetadata = () => {
                resolve(video);
              };
            });
          } catch (error) {
            console.error('Error accessing camera:', error);
            alert('Error accessing camera: ' + error.message);
          }
        }

        async function sendFrameToBackend(imageData) {
          const response = await fetch('https://c.hawk.leedar360.com/api/detect', {
            method: 'POST',
            headers: {
              'Content-Type': 'application/json'
            },
            body: JSON.stringify({ image: imageData })
          });
          if (!response.ok) {
            throw new Error(`Detection request failed with status ${response.status}`);
          }
          return await response.json();
        }

        // Capture the current video frame as a base64 JPEG (without the data URL prefix)
        function getBase64Image(video) {
          const canvas = document.createElement('canvas');
          canvas.width = video.videoWidth;
          canvas.height = video.videoHeight;
          const ctx = canvas.getContext('2d');
          ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
          return canvas.toDataURL('image/jpeg').split(',')[1];
        }

        function renderDetections(detections, canvas, video) {
          const ctx = canvas.getContext('2d');
          ctx.clearRect(0, 0, canvas.width, canvas.height);
          ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
          detections.forEach(det => {
            const { bbox, confidence, class_id, label } = det;
            if (confidence > 0.5) { // Only show detections with confidence > 0.5
              // The backend returns corner coordinates: top-left (x1, y1), bottom-right (x2, y2)
              const [x1, y1, x2, y2] = bbox;
              if (!classColors[class_id]) {
                classColors[class_id] = getRandomColor();
              }
              const color = classColors[class_id];
              ctx.beginPath();
              ctx.rect(x1, y1, x2 - x1, y2 - y1);
              ctx.lineWidth = 2;
              ctx.strokeStyle = color;
              ctx.fillStyle = color;
              ctx.stroke();
              ctx.font = '24px Arial'; // Set font size to 24px
              ctx.fillText(
                `${label} (${Math.round(confidence * 100)}%)`,
                x1,
                y1 > 24 ? y1 - 10 : 24 // Keep the label inside the canvas near the top edge
              );
            }
          });
        }

        async function main() {
          const video = await setupCamera();
          if (!video) {
            console.error('Camera setup failed');
            return;
          }
          video.play();
          const canvas = document.getElementById('canvas');
          canvas.width = video.videoWidth;
          canvas.height = video.videoHeight;

          async function processFrame() {
            try {
              const imageData = getBase64Image(video);
              const detections = await sendFrameToBackend(imageData);
              renderDetections(detections, canvas, video);
            } catch (error) {
              // Log and keep going so one failed request does not stop the loop
              console.error('Detection failed:', error);
            }
            requestAnimationFrame(processFrame);
          }

          processFrame();
        }

        main();

        // Handle orientation change and resizing
        function syncCanvasSize() {
          const video = document.getElementById('camera');
          const canvas = document.getElementById('canvas');
          canvas.width = video.videoWidth;
          canvas.height = video.videoHeight;
        }
        window.addEventListener('resize', syncCanvasSize);
        window.addEventListener('orientationchange', syncCanvasSize);
      </script>
    </body>
    </html>
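The drawing step in `renderDetections` comes down to two small operations: drop detections at or below the confidence threshold, and convert each `[x1, y1, x2, y2]` box from the backend into the origin-plus-size form that `ctx.rect` expects. Below is a sketch of that arithmetic, written in Python so it can be checked outside the browser. The function names `to_rect` and `visible_detections` and the sample detections are made up for this example.

```python
def to_rect(bbox):
    """Convert [x1, y1, x2, y2] corner coordinates to (x, y, width, height)."""
    x1, y1, x2, y2 = bbox
    return (x1, y1, x2 - x1, y2 - y1)


def visible_detections(detections, threshold=0.5):
    """Keep detections whose confidence is strictly above the threshold."""
    return [d for d in detections if d['confidence'] > threshold]


# Hypothetical response from /detect, in the same shape the backend returns
sample = [
    {'label': 'person', 'confidence': 0.91, 'bbox': [40, 30, 200, 380]},
    {'label': 'cup', 'confidence': 0.32, 'bbox': [250, 210, 300, 280]},
]

for det in visible_detections(sample):
    print(det['label'], to_rect(det['bbox']))
# prints: person (40, 30, 160, 350)
```

Because the canvas is sized to `videoWidth` by `videoHeight`, the boxes can be drawn in the frame's own pixel coordinates without any extra scaling.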

Summary

The feature is easy to implement, but the results still need tuning: in testing, an Apple charger was not recognized.