LLM Fundamentals: Implementing a Transformer from Scratch (1) - CSDN Blog

1. Preface

The previous two articles covered the Transformer's Embedding, Tokenizer, Attention, and Position Encoding.

This article continues with the remaining components of the Transformer.

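2. Normalization

2.1 Layer Normalization

Layer Normalization (LayerNorm) is a normalization method proposed for sequence data. It normalizes along the layer (feature) dimension: for each position in the sequence, the d features of that position's vector are normalized together, independently of the other positions and of the other samples in the batch.

LayerNorm computes the mean and standard deviation of all activations in a layer (here, the d features at one position) and uses them to normalize those activations.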

$$\mu = \frac{1}{d}\sum_{i=1}^{d} x_i$$

$$\sigma = \sqrt{\frac{1}{d}\sum_{i=1}^{d} (x_i - \mu)^2}$$

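The normalized activations are:

$$y = \frac{x - \mu}{\sigma + \epsilon}\,\gamma + \beta$$

Here $\gamma$ and $\beta$ are trainable model parameters. $\gamma$ is the scale parameter, so the new distribution has variance $\gamma^2$; $\beta$ is the shift parameter, so the new distribution has mean $\beta$. $\epsilon$ is a small constant added to the denominator to avoid division by zero.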
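To make the formulas concrete, here is a minimal worked example on a single feature vector, written in plain Python so that each step maps onto one formula. The function name `layer_norm` and the sample values are assumptions chosen for illustration; they are not part of the original article.

```python
import math


def layer_norm(x, gamma, beta, eps=1e-6):
    """Normalize one feature vector x (a list of floats) with the formulas above."""
    d = len(x)
    mu = sum(x) / d                                          # mean over the d features
    sigma = math.sqrt(sum((xi - mu) ** 2 for xi in x) / d)   # population std (divide by d)
    return [g * (xi - mu) / (sigma + eps) + b for xi, g, b in zip(x, gamma, beta)]


x = [1.0, 2.0, 3.0, 4.0]  # mu = 2.5, sigma = sqrt(1.25)

# gamma = 1, beta = 0: output has mean 0 and standard deviation close to 1
y = layer_norm(x, gamma=[1.0] * 4, beta=[0.0] * 4)
print([round(v, 4) for v in y])  # [-1.3416, -0.4472, 0.4472, 1.3416]

# gamma = 2, beta = 1: output has mean 1 and standard deviation close to 2
y_scaled = layer_norm(x, gamma=[2.0] * 4, beta=[1.0] * 4)
mean_scaled = sum(y_scaled) / len(y_scaled)
std_scaled = math.sqrt(sum((v - mean_scaled) ** 2 for v in y_scaled) / len(y_scaled))
print(round(mean_scaled, 4), round(std_scaled, 4))
```

The second call illustrates the statement about $\gamma$ and $\beta$: the normalized values are rescaled to a standard deviation of about $\gamma$ (variance $\gamma^2$) and shifted to a mean of $\beta$.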

2.2 LayerNorm Implementation

```python
import torch
import torch.nn as nn


class LayerNorm(nn.Module):
    def __init__(self, num_features, eps=1e-6):
        super().__init__()
        # Learnable scale (gamma) and shift (beta), one value per feature
        self.gamma = nn.Parameter(torch.ones(num_features))
        self.beta = nn.Parameter(torch.zeros(num_features))
        self.eps = eps

    def forward(self, x):
        """
        Args:
            x (Tensor): (batch_size, seq_length, d_model)

        Returns:
            Tensor: (batch_size, seq_length, d_model)
        """
        # Statistics are computed over the last (feature) dimension, per position
        mean = x.mean(dim=-1, keepdim=True)
        std = x.std(dim=-1, keepdim=True, unbiased=False)
        normalized_x = (x - mean) / (std + self.eps)
        return self.gamma * normalized_x + self.beta


if __name__ == '__main__':
    batch_size = 2
    seqlen = 3
    hidden_dim = 4

    # Create a random input tensor
    x = torch.randn(batch_size, seqlen, hidden_dim)
    print(x)

    # Apply our LayerNorm
    layer_norm = LayerNorm(num_features=hidden_dim)
    output_tensor = layer_norm(x)
    print("output after layer norm:\n", output_tensor)

    # Compare with PyTorch's built-in LayerNorm. It divides by sqrt(var + eps)
    # with a default eps of 1e-5, so the two outputs agree only approximately.
    torch_layer_norm = torch.nn.LayerNorm(normalized_shape=hidden_dim)
    torch_output_tensor = torch_layer_norm(x)
    print("output after torch layer norm:\n", torch_output_tensor)
```

3. Residual Connections

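A residual connection (also called a skip connection; the unit it forms is often called a residual block) is quite simple.

Let x be the input to a network layer, and let F(x) denote that layer, which contains a nonlinear activation function. The residual connection adds the input back onto the layer's output:

$$y = x + F(x)$$

A simple implementation in code:

```python
x = x + layer(x)
```

4. Feed-Forward Network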

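4.1 Position-wise Feed Forward

The Position-wise Feed Forward network (FFN) is simply a fully connected feed-forward network. Its purpose is to add nonlinearity and strengthen the model's representational capacity.

It is a simple two-layer fully connected network. Rather than collapsing the whole sequence of embeddings into a single vector, it processes the embedding at each position independently, using the same weights at every position. This is why it is called a position-wise feed-forward layer. It can also be viewed as a one-dimensional convolution with kernel size 1.

The idea is to project the input into an intermediate space of dimension d_ff (typically larger than d_model) and then project it back to the input dimension.

The FFN is defined as:

$$\mathrm{FFN}(x) = f(xW_1 + b_1)W_2 + b_2$$

This formula describes the vector transformation performed by the FFN, where f is a nonlinear activation function.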
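The two claims above (each position is processed independently, and the FFN is equivalent to a 1D convolution with kernel size 1) can be checked numerically. The following is a small sketch under stated assumptions: the tensor sizes are arbitrary, ReLU is used as f, dropout is omitted, and the names `ffn`, `conv1`, and `conv2` are hypothetical helpers introduced only for this check.

```python
import torch
from torch import nn

torch.manual_seed(0)
batch_size, seq_length, d_model, d_ff = 2, 5, 8, 32

ff1 = nn.Linear(d_model, d_ff)   # x W1 + b1
ff2 = nn.Linear(d_ff, d_model)   # (.) W2 + b2


def ffn(x):
    # FFN(x) = f(x W1 + b1) W2 + b2 with f = ReLU
    return ff2(torch.relu(ff1(x)))


x = torch.randn(batch_size, seq_length, d_model)

# 1) Whole sequence at once
full = ffn(x)

# 2) One position at a time, then stacked back along the sequence dimension
per_position = torch.stack([ffn(x[:, t, :]) for t in range(seq_length)], dim=1)

# 3) Conv1d with kernel_size=1 that reuses the same weights.
#    Linear weight is (out, in); Conv1d weight is (out, in, kernel_size).
conv1 = nn.Conv1d(d_model, d_ff, kernel_size=1)
conv2 = nn.Conv1d(d_ff, d_model, kernel_size=1)
with torch.no_grad():
    conv1.weight.copy_(ff1.weight.unsqueeze(-1))
    conv1.bias.copy_(ff1.bias)
    conv2.weight.copy_(ff2.weight.unsqueeze(-1))
    conv2.bias.copy_(ff2.bias)

# Conv1d expects (batch, channels, length), so move d_model to the channel axis
conv_out = conv2(torch.relu(conv1(x.transpose(1, 2)))).transpose(1, 2)

same_per_position = torch.allclose(full, per_position, atol=1e-4)
same_as_conv = torch.allclose(full, conv_out, atol=1e-4)
print(same_per_position, same_as_conv)
```

Both comparisons should agree up to floating-point tolerance, since all three paths apply the same affine maps and the same activation to each position's vector.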

4.2 FFN Implementation

```python
from torch import nn, Tensor
from torch.nn import functional as F


class PositonWiseFeedForward(nn.Module):
    def __init__(self, d_model: int, d_ff: int, dropout: float = 0.1) -> None:
        '''
        :param d_model: dimension of embeddings
        :param d_ff: dimension of feed-forward network
        :param dropout: dropout ratio
        '''
        super().__init__()
        self.ff1 = nn.Linear(d_model, d_ff)   # expand: d_model -> d_ff
        self.ff2 = nn.Linear(d_ff, d_model)   # project back: d_ff -> d_model
        self.dropout = nn.Dropout(dropout)

    def forward(self, x: Tensor) -> Tensor:
        '''
        :param x: (batch_size, seq_length, d_model) output from attention
        :return: (batch_size, seq_length, d_model)
        '''
        # Linear -> ReLU -> Dropout -> Linear, applied to every position
        return self.ff2(self.dropout(F.relu(self.ff1(x))))
```

5. Transformer Encoder Block

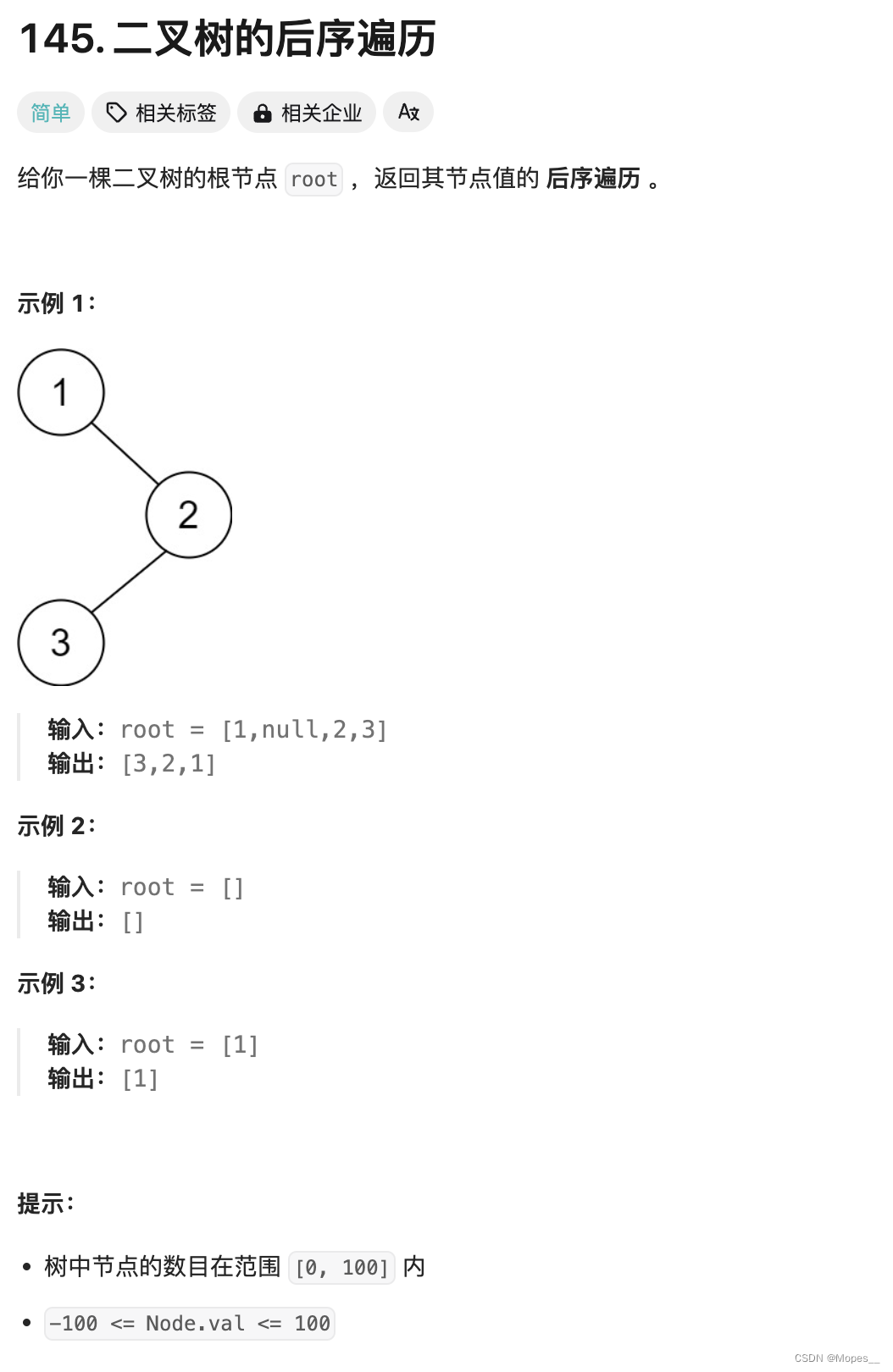

As the figure shows, the Encoder is a stack of N Encoder Blocks. We implement them in turn.

```python
from typing import Optional

from torch import nn, Tensor

# Components implemented earlier
from llm_base.attention.MultiHeadAttention1 import MultiHeadAttention
from llm_base.layer_norm.normal_layernorm import LayerNorm
from llm_base.ffn.PositionWiseFeedForward import PositonWiseFeedForward


class EncoderBlock(nn.Module):
    def __init__(self,
                 d_model: int,
                 n_heads: int,
                 d_ff: int,
                 dropout: float,
                 norm_first: bool = False):
        '''
        :param d_model: dimension of embeddings
        :param n_heads: number of heads
        :param d_ff: dimension of inner feed-forward network
        :param dropout: dropout ratio
        :param norm_first: if True, layer norm is done prior to attention and
            feedforward operations (Pre-Norm). Otherwise it's done after
            (Post-Norm). Defaults to False.
        '''
        super().__init__()
        self.norm_first = norm_first

        self.attention = MultiHeadAttention(d_model, n_heads, dropout)
        self.norm1 = LayerNorm(d_model)

        self.ff = PositonWiseFeedForward(d_model, d_ff, dropout)
        self.norm2 = LayerNorm(d_model)

        self.dropout1 = nn.Dropout(dropout)
        self.dropout2 = nn.Dropout(dropout)

    # self-attention sub-layer
    def _self_attention_sub_layer(self, x: Tensor, attn_mask: Optional[Tensor],
                                  keep_attentions: bool) -> Tensor:
        x = self.attention(x, x, x, attn_mask, keep_attentions)
        return self.dropout1(x)

    # ffn sub-layer
    def _ffn_sub_layer(self, x: Tensor) -> Tensor:
        x = self.ff(x)
        return self.dropout2(x)

    def forward(self, src: Tensor, src_mask: Optional[Tensor] = None,
                keep_attentions: bool = False) -> Tensor:
        '''
        :param src: (batch_size, seq_length, d_model)
        :param src_mask: (batch_size, 1, seq_length)
        :param keep_attentions: whether to keep attention weights or not. Defaults to False.
        :return: (batch_size, seq_length, d_model) output of encoder block
        '''
        # pass through multi-head attention
        # src (batch_size, seq_length, d_model)
        # attn_score (batch_size, n_heads, seq_length, k_length)
        x = src

        # post LN or pre LN
        if self.norm_first:
            # pre LN: normalize the sub-layer input, then add the residual
            x = x + self._self_attention_sub_layer(self.norm1(x), src_mask, keep_attentions)
            x = x + self._ffn_sub_layer(self.norm2(x))
        else:
            # post LN: add the residual, then normalize the sum
            x = self.norm1(x + self._self_attention_sub_layer(x, src_mask, keep_attentions))
            x = self.norm2(x + self._ffn_sub_layer(x))

        return x
```
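The block above is a single layer; the encoder itself stacks N of them. The sketch below shows one way the stacking could look. Because `MultiHeadAttention` comes from an earlier article and is not reproduced here, this sketch substitutes PyTorch's built-in `nn.TransformerEncoderLayer` as a stand-in for `EncoderBlock`. The class name `Encoder` and the parameter `n_layers` are assumptions made for illustration.

```python
import torch
from torch import nn, Tensor


class Encoder(nn.Module):
    """Hypothetical stack of N encoder blocks (built-in layers used as stand-ins)."""

    def __init__(self, d_model: int, n_heads: int, d_ff: int, n_layers: int,
                 dropout: float = 0.1, norm_first: bool = False):
        super().__init__()
        # nn.ModuleList registers each block so its parameters are tracked
        self.layers = nn.ModuleList([
            nn.TransformerEncoderLayer(
                d_model, n_heads, dim_feedforward=d_ff, dropout=dropout,
                batch_first=True, norm_first=norm_first)
            for _ in range(n_layers)
        ])

    def forward(self, src: Tensor) -> Tensor:
        x = src
        # Each block consumes the previous block's output; the shape never changes
        for layer in self.layers:
            x = layer(x)
        return x


encoder = Encoder(d_model=16, n_heads=4, d_ff=32, n_layers=3, dropout=0.0)
src = torch.randn(2, 5, 16)   # (batch_size, seq_length, d_model)
out = encoder(src)
print(out.shape)              # torch.Size([2, 5, 16])
```

Because every block maps (batch_size, seq_length, d_model) to the same shape, any number of blocks can be chained. Swapping the stand-in for the `EncoderBlock` defined above would only require passing the mask and `keep_attentions` arguments through the loop.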

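5.1 Post Norm vs. Pre Norm

Difference in the formulas

With $F_t$ denoting the sub-layer (self-attention or FFN), Pre Norm and Post Norm are, respectively:

$$\text{Pre Norm:}\quad x_{t+1} = x_t + F_t(\mathrm{Norm}(x_t))$$

$$\text{Post Norm:}\quad x_{t+1} = \mathrm{Norm}(x_t + F_t(x_t))$$

Difference in large models

Post-LN: the normalization scheme used in the original Transformer. The input first passes through the sub-layer (for example, self-attention or the feed-forward network), the result is added to the residual, and Layer Normalization is applied to the sum.

Pre-LN: the input is layer-normalized first and then passed to the sub-layer, and the residual is added afterwards without further normalization. This ordering may be more stable when training deeper networks, because normalized inputs help mitigate vanishing and exploding gradients during training.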
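The difference between the two orderings can be seen on a toy sub-layer. This is a minimal sketch, assuming a single `nn.Linear` as a hypothetical stand-in for attention or the FFN and PyTorch's built-in `nn.LayerNorm`. The function names `post_ln` and `pre_ln` are introduced only for this illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)
d_model = 8
norm = nn.LayerNorm(d_model)
sublayer = nn.Linear(d_model, d_model)  # hypothetical stand-in for attention / FFN


def post_ln(x):
    # Post-LN: sub-layer, add residual, then normalize the sum
    return norm(x + sublayer(x))


def pre_ln(x):
    # Pre-LN: normalize the sub-layer input, then add the untouched residual
    return x + sublayer(norm(x))


x = torch.randn(2, 3, d_model) * 5.0  # deliberately large-scale input

post_out = post_ln(x)
pre_out = pre_ln(x)

# Post-LN output is re-normalized at every position: mean ~ 0, variance ~ 1
print(post_out.mean(dim=-1).abs().max().item())
print(post_out.var(dim=-1, unbiased=False).mean().item())

# Pre-LN keeps an identity path: subtracting the branch recovers x exactly
identity_path_kept = torch.allclose(pre_out - sublayer(norm(x)), x, atol=1e-5)
print(identity_path_kept)
```

In Post-LN the residual sum itself goes through the normalization, so the output is rescaled at every layer. In Pre-LN the input x reaches the output unchanged through the skip path, which is the property usually credited for its more stable training in deep stacks.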

5.2 Why Pre Norm Performs Worse Than Post Norm