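Contents

1. Environment Overview

1.1 Lab environment

1.2 Lab topology

1.3 Lab requirements

1.4 Approach

2. System Environment Preparation

2.1 Host configuration

2.2 Install Docker

2.3 Configure the firewall

2.4 Disable SELinux

3. Generate TLS Certificates

3.1 Generate the CA certificate

3.2 Generate the server certificate

3.3 Generate the admin certificate

3.4 Generate the proxy certificate

4. Deploy the Etcd Cluster

4.1 Deploy an Etcd member on k8s-master

4.2 Deploy Etcd members on k8s-node1 and k8s-node2

4.3 Check the Etcd cluster status

5. Deploy the Flannel Network

5.1 Write the subnet allocation to Etcd

5.2 Configure Flannel

5.3 Start Flannel

5.4 Verify the flanneld installation

6. Deploy the Kubernetes Master Components

6.1 Add the kubectl command

6.2 Create the TLS Bootstrapping Token

6.3 Create the kubelet kubeconfig

1) Set the cluster parameters

2) Set the client authentication parameters

3) Set the context parameters

4) Set the default context

6.4 Create the kube-proxy kubeconfig

6.5 Deploy kube-apiserver

6.6 Deploy kube-controller-manager

6.7 Deploy kube-scheduler

6.8 Check that the components are running

7. Deploy the Kubernetes Node Components

7.1 Prepare the environment

7.2 Deploy kubelet

7.3 Deploy kube-proxy

7.4 Check that the node components are installed

8. Check the Automatically Issued Certificates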

一、环境介绍

1.1、本节实验环境

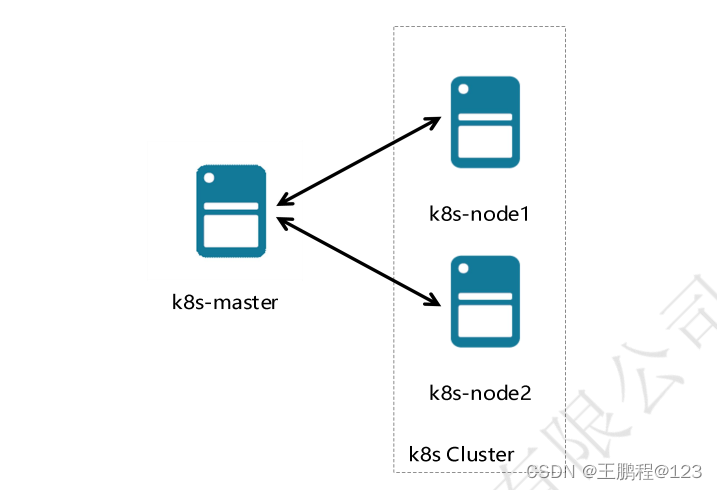

本节采用二进制方式来安装 Kubernetes 集群,集群结果同前面 Kubeadm 方式安装类似。实验环境包括一台 master 节点,两台 node 节点,具体的配置要求和角色分配如下表所示。

| Host | OS | Hostname / IP address | Main software |
| --- | --- | --- | --- |
| Server | CentOS 7.9 | k8s-master / 192.168.23.210 | Docker-ce-19.03.15 |
| Server | CentOS 7.9 | k8s-node1 / 192.168.23.211 | Docker-ce-19.03.15 |
| Server | CentOS 7.9 | k8s-node2 / 192.168.23.212 | Docker-ce-19.03.15 |

System environment for the binary k8s installation

| IP address | Hostname | Roles and services |
| --- | --- | --- |
| 192.168.23.210 | k8s-master | Master, kube-apiserver, kube-controller-manager, kube-scheduler, kubectl, Flannel, Etcd |
| 192.168.23.211 | k8s-node1 | Node, kubelet, kube-proxy, Flannel, Etcd |
| 192.168.23.212 | k8s-node2 | Node, kubelet, kube-proxy, Flannel, Etcd |

Role assignment

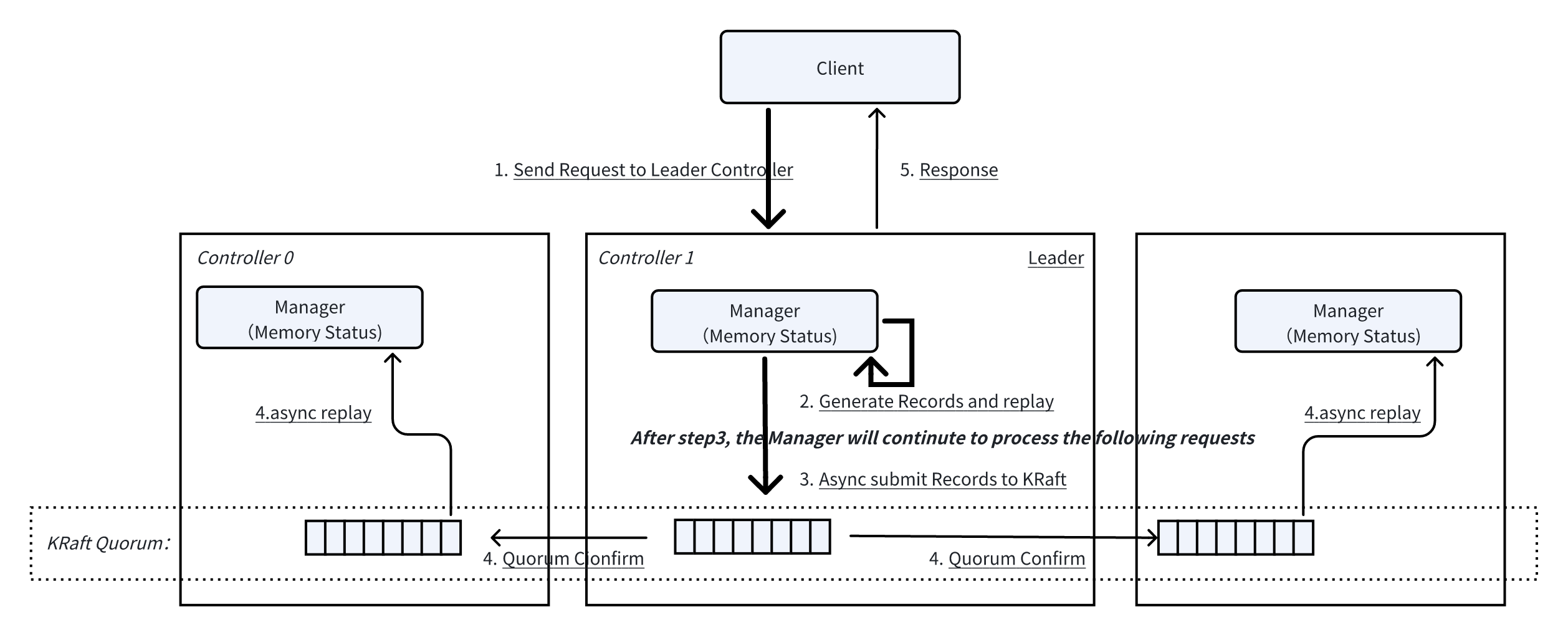

1.2 Lab topology

Lab topology for the binary k8s installation


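1.3 Lab requirements

- Deploy the Etcd cluster.

- Deploy the Flannel network for cross-host container communication.

- Deploy the K8S cluster.

1.4 Approach

- Prepare the K8S system environment.

- Create self-signed TLS certificates to encrypt communication.

- Deploy the Etcd cluster.

- Deploy the Flannel network.

- Deploy the K8S master components.

- Deploy the K8S node components.

- Check the automatically issued certificates to confirm that the K8S cluster was deployed successfully.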

2. System Environment Preparation

2.1 Host configuration

Set the hostname on each of the three hosts:

[root@centos7-10 ~]# hostnamectl set-hostname k8s-master    # run on 192.168.23.210

[root@centos7-10 ~]# bash

[root@k8s-master ~]#

[root@centos7-11 ~]# hostnamectl set-hostname k8s-node1    # run on 192.168.23.211

[root@centos7-11 ~]# bash

[root@k8s-node1 ~]#

[root@centos7-12 ~]# hostnamectl set-hostname k8s-node2    # run on 192.168.23.212

[root@centos7-12 ~]# bash

[root@k8s-node2 ~]#

On all three hosts, add name-resolution entries to /etc/hosts. The example below uses k8s-master.

[root@k8s-master ~]# cat <<EOF>> /etc/hosts

192.168.23.210 k8s-master

192.168.23.211 k8s-node1

192.168.23.212 k8s-node2

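EOF

On all hosts, add a public DNS server to the network configuration. You can also use a DNS server that suits your local network. Restart the network service afterwards (systemctl restart network) to apply the change. The example below uses k8s-master. Only the DNS setting is shown; the rest of the file is omitted.

[root@k8s-master ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens32

DNS1="202.96.128.86"

2.2 Install Docker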

Install and configure Docker on all hosts. The example below uses k8s-master.

[root@k8s-master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@k8s-master ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-master ~]# yum -y install docker-ce-19.03.15 docker-ce-cli-19.03.15

[root@k8s-master ~]# mkdir /etc/docker

[root@k8s-master ~]# cat <<EOF>> /etc/docker/daemon.json

> {

> "registry-mirrors": ["https://z1qbjqql.mirror.aliyuncs.com"]

> }

> EOF

[root@k8s-master ~]# systemctl enable docker

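[root@k8s-master ~]# systemctl start docker

2.3 Configure the firewall

Stop and disable firewalld and iptables on every host (k8s-master is shown). The iptables service only exists if the iptables-services package is installed. Otherwise, the last two commands just report that the unit is not loaded.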

[root@k8s-master ~]# systemctl stop firewalld

[root@k8s-master ~]# systemctl disable firewalld

[root@k8s-master ~]# systemctl stop iptables

[root@k8s-master ~]# systemctl disable iptables

2.4 Disable SELinux

[root@k8s-master ~]# sed -i '/^SELINUX=/s/enforcing/disabled/' /etc/selinux/config

[root@k8s-master ~]# getenforce

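Disabled

Do this on every host. The change to /etc/selinux/config only takes effect after a reboot; getenforce reports Disabled once the host has been rebooted. To stop enforcement immediately, run setenforce 0. This switches SELinux to permissive mode until the next boot.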
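The sed command above only rewrites the value if it is currently `enforcing`. Below is a slightly more general sketch, assuming GNU sed as shipped with CentOS 7. It sets the value to `disabled` regardless of the previous value. The function name `set_selinux_disabled` is hypothetical. The path is a parameter, so you can try it on a copy of the file first.

```shell
# Hypothetical helper: force SELINUX=disabled in an SELinux config file,
# whether the current value is enforcing or permissive.
set_selinux_disabled() {
    local cfg="${1:-/etc/selinux/config}"
    # Replace the whole value of the SELINUX= line. SELINUXTYPE= is not
    # touched because the pattern requires "=" right after "SELINUX".
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
    # Print the resulting line so the change can be checked.
    grep '^SELINUX=' "$cfg"
}
```

On a real host, run `set_selinux_disabled` followed by `setenforce 0`.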

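3. Generate TLS Certificates

Kubernetes components encrypt their communication with TLS certificates. This lab uses CFSSL, CloudFlare's PKI toolkit, to generate the Certificate Authority (CA) and the other certificates.

3.1 Generate the CA certificate

Create a directory for the certificates and install the certificate tools: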

[root@k8s-master ~]# mkdir -p /root/software/ssl

[root@k8s-master ~]# cd /root/software/ssl/

[root@k8s-master ssl]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@k8s-master ssl]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

[root@k8s-master ssl]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

[root@k8s-master ssl]# chmod +x *    # make the downloaded files executable

[root@k8s-master ssl]# mv cfssl_linux-amd64 /usr/local/bin/cfssl

[root@k8s-master ssl]# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

[root@k8s-master ssl]# mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

[root@k8s-master ssl]# cfssl --help

Usage:

Available commands:

gencrl

selfsign

info

certinfo

sign

revoke

ocspsign

ocspserve

print-defaults

serve

gencert

genkey

ocspdump

ocsprefresh

scan

bundle

version

Top-level flags:

-allow_verification_with_non_compliant_keys

Allow a SignatureVerifier to use keys which are technically non-compliant with RFC6962.

-loglevel int

Log level (0 = DEBUG, 5 = FATAL) (default 1)

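Run the following commands to create two files: the CA signing configuration (ca-config.json) and the CA certificate signing request (ca-csr.json).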

[root@k8s-master ssl]# cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

[root@k8s-master ssl]# cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Generate the CA certificate:

[root@k8s-master ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2024/06/06 09:43:39 [INFO] generating a new CA key and certificate from CSR

2024/06/06 09:43:39 [INFO] generate received request

2024/06/06 09:43:39 [INFO] received CSR

2024/06/06 09:43:39 [INFO] generating key: rsa-2048

2024/06/06 09:43:40 [INFO] encoded CSR

2024/06/06 09:43:40 [INFO] signed certificate with serial number 711794927464372250650137203459547973931389568678

3.2 Generate the server certificate

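Create server-csr.json and generate the server certificate. The IP addresses in the file are those of the hosts that use this certificate; adjust them to your environment. 10.10.10.1 is the ClusterIP of the built-in kubernetes Service. It must be the first address of the Service IP range that kube-apiserver is configured with.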

[root@k8s-master ssl]# cat << EOF >server-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.23.210",

"192.168.23.211",

"192.168.23.212",

"10.10.10.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

2024/06/06 09:48:19 [INFO] generate received request

2024/06/06 09:48:19 [INFO] received CSR

2024/06/06 09:48:19 [INFO] generating key: rsa-2048

2024/06/06 09:48:20 [INFO] encoded CSR

2024/06/06 09:48:20 [INFO] signed certificate with serial number 603776903512835511379739614600806552987803011977

2024/06/06 09:48:20 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").
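Every address that clients use to reach Etcd or kube-apiserver must appear in the hosts list of server-csr.json. Otherwise, TLS verification fails for that address. A rough pre-check is a plain text search for each quoted address in the CSR file. It is not a JSON parser, so a match anywhere in the file counts. The helper name `missing_csr_hosts` is hypothetical. Run it before the cleanup step at the end of 3.4, which deletes the json files.

```shell
# Hypothetical helper: print each given address that does not appear as a
# quoted string anywhere in the CSR file.
missing_csr_hosts() {
    local csr="$1" h
    shift
    for h in "$@"; do
        # -F: fixed string, so the dots in IP addresses are not regex wildcards.
        # The surrounding quotes stop "192.168.23.21" from matching "192.168.23.210".
        grep -qF "\"$h\"" "$csr" || echo "$h"
    done
}
```

The following command prints nothing when all addresses are listed:

`missing_csr_hosts server-csr.json 127.0.0.1 192.168.23.210 192.168.23.211 192.168.23.212 10.10.10.1`

To inspect the certificate that was actually issued, run `cfssl-certinfo -cert server.pem`. It prints the certificate details, including its SANs.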

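3.3 Generate the admin certificate

Create admin-csr.json and generate the admin certificate. Its O (organization) field is system:masters. This places the certificate's user in the group that Kubernetes binds to the cluster-admin role by default.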

[root@k8s-master ssl]# cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin    # administrators use the admin certificate to access the cluster

2024/06/06 09:50:18 [INFO] generate received request

2024/06/06 09:50:18 [INFO] received CSR

2024/06/06 09:50:18 [INFO] generating key: rsa-2048

2024/06/06 09:50:19 [INFO] encoded CSR

2024/06/06 09:50:19 [INFO] signed certificate with serial number 361778712728728623116210992282914399385926688561

2024/06/06 09:50:19 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

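3.4 Generate the proxy certificate

Create kube-proxy-csr.json and generate the certificate. The CN system:kube-proxy matches the user that Kubernetes' default system:node-proxier cluster role binding refers to.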

[root@k8s-master ssl]# cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2024/06/06 09:52:15 [INFO] generate received request

2024/06/06 09:52:15 [INFO] received CSR

2024/06/06 09:52:15 [INFO] generating key: rsa-2048

2024/06/06 09:52:16 [INFO] encoded CSR

2024/06/06 09:52:16 [INFO] signed certificate with serial number 722599743697609779626650310952890015058740567842

2024/06/06 09:52:16 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-master ssl]# ls | grep -v pem | xargs -I {} rm {}    # delete the json and csr files, keeping only the .pem files

[root@k8s-master ssl]# ll

total 32

-rw------- 1 root root 1675 Jun  6 09:50 admin-key.pem

-rw-r--r-- 1 root root 1399 Jun  6 09:50 admin.pem

-rw------- 1 root root 1675 Jun  6 09:43 ca-key.pem

-rw-r--r-- 1 root root 1359 Jun  6 09:43 ca.pem

-rw------- 1 root root 1679 Jun  6 09:52 kube-proxy-key.pem

-rw-r--r-- 1 root root 1403 Jun  6 09:52 kube-proxy.pem

-rw------- 1 root root 1675 Jun  6 09:48 server-key.pem

-rw-r--r-- 1 root root 1627 Jun  6 09:48 server.pem
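The cleanup command above deletes files by pattern. Before moving on, confirm that each certificate still has its matching private key. Below is a minimal sketch; the helper name `missing_pem_files` is hypothetical.

```shell
# Hypothetical helper: for each certificate base name, print NAME.pem or
# NAME-key.pem if that file is missing from the given directory.
missing_pem_files() {
    local dir="$1" n f
    shift
    for n in "$@"; do
        for f in "$n.pem" "$n-key.pem"; do
            [ -f "$dir/$f" ] || echo "$f"
        done
    done
}
```

`missing_pem_files /root/software/ssl ca server admin kube-proxy` prints nothing when all eight files listed above are present.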

4. Deploy the Etcd Cluster

Create the configuration directories:

[root@k8s-master ~]# mkdir /opt/kubernetes

[root@k8s-master ~]# mkdir /opt/kubernetes/{bin,cfg,ssl}

Upload the etcd-v3.3.18-linux-amd64.tar.gz package, extract it, and move the binaries into place:

[root@k8s-master ~]# tar zxf etcd-v3.3.18-linux-amd64.tar.gz

[root@k8s-master ~]# cd etcd-v3.3.18-linux-amd64

[root@k8s-master etcd-v3.3.18-linux-amd64]# mv etcd /opt/kubernetes/bin/

[root@k8s-master etcd-v3.3.18-linux-amd64]# mv etcdctl /opt/kubernetes/bin/

With the directories and the Etcd binaries in place, configure the Etcd cluster as follows.

4.1 Deploy an Etcd member on k8s-master

Create the Etcd configuration file:

[root@k8s-master ~]# vim /opt/kubernetes/cfg/etcd

#[Member]

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.23.210:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.23.210:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.23.210:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.23.210:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.23.210:2380,etcd02=https://192.168.23.211:2380,etcd03=https://192.168.23.212:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

Create the systemd unit file:

[root@k8s-master ~]# vim /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/opt/kubernetes/cfg/etcd

ExecStart=/opt/kubernetes/bin/etcd \

--name=${ETCD_NAME} \

--data-dir=${ETCD_DATA_DIR} \

--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=new \

--cert-file=/opt/kubernetes/ssl/server.pem \

--key-file=/opt/kubernetes/ssl/server-key.pem \

--peer-cert-file=/opt/kubernetes/ssl/server.pem \

--peer-key-file=/opt/kubernetes/ssl/server-key.pem \

--trusted-ca-file=/opt/kubernetes/ssl/ca.pem \

--peer-trusted-ca-file=/opt/kubernetes/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Copy the certificates that Etcd needs at startup:

[root@k8s-master ~]# cd /root/software/

[root@k8s-master software]# cp ssl/server*pem ssl/ca*.pem /opt/kubernetes/ssl/

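Start the Etcd member on the master. If the start command appears to hang, press Ctrl+C. The etcd process is already running, but it times out while connecting to the other two members, which have not been started yet.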

[root@k8s-master ~]# systemctl enable etcd

[root@k8s-master ~]# systemctl start etcd

Check that Etcd has started:

[root@k8s-master ~]# ps -ef | grep etcd

root 17932 1 7 10:39 ? 00:00:01 /opt/kubernetes/bin/etcd --name=etcd01 --data-dir=/var/lib/etcd/default.etcd --listen-peer-urls=https://192.168.23.210:2380 --listen-client-urls=https://192.168.23.210:2379,http://127.0.0.1:2379 --advertise-client-urls=https://192.168.23.210:2379 --initial-advertise-peer-urls=https://192.168.23.210:2380 --initial-cluster=etcd01=https://192.168.23.210:2380,etcd02=https://192.168.23.211:2380,etcd03=https://192.168.23.212:2380 --initial-cluster-token=etcd01=https://192.168.23.210:2380,etcd02=https://192.168.23.211:2380,etcd03=https://192.168.23.212:2380 --initial-cluster-state=new --cert-file=/opt/kubernetes/ssl/server.pem --key-file=/opt/kubernetes/ssl/server-key.pem --peer-cert-file=/opt/kubernetes/ssl/server.pem --peer-key-file=/opt/kubernetes/ssl/server-key.pem --trusted-ca-file=/opt/kubernetes/ssl/ca.pem --peer-trusted-ca-file=/opt/kubernetes/ssl/ca.pem

root 17941 1703 0 10:39 pts/0 00:00:00 grep --color=auto etcd

4.2 Deploy Etcd members on k8s-node1 and k8s-node2

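Copy /opt/kubernetes (binaries, configuration, and certificates) to each worker node. Then change the member name and IP addresses in that node's Etcd configuration file: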

[root@k8s-master ~]# rsync -avcz /opt/kubernetes/* 192.168.23.211:/opt/kubernetes/

[root@k8s-node1 ~]# vim /opt/kubernetes/cfg/etcd

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.23.211:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.23.211:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.23.211:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.23.211:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.23.210:2380,etcd02=https://192.168.23.211:2380,etcd03=https://192.168.23.212:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@k8s-master ~]# rsync -avcz /opt/kubernetes/* 192.168.23.212:/opt/kubernetes/

[root@k8s-node2 ~]# vim /opt/kubernetes/cfg/etcd

#[Member]

ETCD_NAME="etcd03"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.23.212:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.23.212:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.23.212:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.23.212:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.23.210:2380,etcd02=https://192.168.23.211:2380,etcd03=https://192.168.23.212:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
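The three Etcd configuration files differ only in the member name and IP address, so you can generate them instead of editing each by hand. The sketch below hard-codes this lab's three-member list as an assumption; `gen_etcd_cfg` is a hypothetical helper.

```shell
# Hypothetical helper: print the /opt/kubernetes/cfg/etcd file for one member.
# The cluster member list is this lab's and is hard-coded as an assumption.
gen_etcd_cfg() {
    local name="$1" ip="$2"
    local cluster="etcd01=https://192.168.23.210:2380,etcd02=https://192.168.23.211:2380,etcd03=https://192.168.23.212:2380"
    # Unquoted EOF on purpose: ${name}, ${ip} and ${cluster} must be expanded.
    cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

For example, run `gen_etcd_cfg etcd02 192.168.23.211 > /opt/kubernetes/cfg/etcd` on k8s-node1.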

Copy the systemd unit file:

[root@k8s-master ~]# scp /usr/lib/systemd/system/etcd.service 192.168.23.211:/usr/lib/systemd/system/

[root@k8s-master ~]# scp /usr/lib/systemd/system/etcd.service 192.168.23.212:/usr/lib/systemd/system/

Start Etcd on the worker nodes:

[root@k8s-node1 ~]# systemctl enable etcd

[root@k8s-node1 ~]# systemctl start etcd

[root@k8s-node2 ~]# systemctl enable etcd

[root@k8s-node2 ~]# systemctl start etcd

4.3 Check the Etcd cluster status

Add /opt/kubernetes/bin to PATH:

[root@k8s-master ~]# vim /etc/profile

...... (other content omitted)

export PATH=$PATH:/opt/kubernetes/bin

[root@k8s-master ~]# source /etc/profile

Check the Etcd cluster status:

[root@k8s-master ~]# cd /root/software/ssl/

[root@k8s-master ssl]# etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.23.210:2379,https://192.168.23.211:2379,https://192.168.23.212:2379" cluster-health

member 84b74cdd714893b1 is healthy: got healthy result from https://192.168.23.212:2379

member c2af9fc3ae6df536 is healthy: got healthy result from https://192.168.23.210:2379

member f4df9e97a5989f60 is healthy: got healthy result from https://192.168.23.211:2379

cluster is healthy

This completes the Etcd cluster deployment.
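The same comma-separated list of Etcd endpoints is typed again for flanneld (5.2) and apiserver.sh (6.5). A small helper can build it from the member IPs to avoid typos. `join_endpoints` is a hypothetical name.

```shell
# Hypothetical helper: join IPs into "https://IP:PORT,https://IP:PORT,..."
join_endpoints() {
    local port="$1" out="" ip
    shift
    for ip in "$@"; do
        # ${out:+$out,} expands to the list so far plus a comma, or to nothing
        # when the list is still empty.
        out="${out:+$out,}https://${ip}:${port}"
    done
    echo "$out"
}
```

First build the list:

`ENDPOINTS=$(join_endpoints 2379 192.168.23.210 192.168.23.211 192.168.23.212)`

Then pass it to etcdctl:

`etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="$ENDPOINTS" cluster-health`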

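5. Deploy the Flannel Network

Flannel is an overlay network. It encapsulates the original packets inside another network packet for routing and forwarding. It currently supports several backends, including UDP, VXLAN, AWS VPC, and GCE routing. Other mainstream options for multi-host container networking include tunnel-based solutions (Weave, Open vSwitch) and routing-based solutions (Calico).

5.1 Write the subnet allocation to Etcd

On the master, write the subnet range into Etcd for flanneld to use. Flannel v0.12 reads this key through the Etcd v2 API. That is also the API etcdctl 3.3 uses by default, so the set command below stores the key where flanneld looks for it.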

[root@k8s-master ssl]# etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.23.210:2379,https://192.168.23.211:2379,https://192.168.23.212:2379" set /coreos.com/network/config '{"Network":"172.17.0.0/16","Backend":{"Type":"vxlan"} }'

{"Network":"172.17.0.0/16","Backend":{"Type":"vxlan"} }
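The value stored under /coreos.com/network/config is plain JSON with two parts:

- Network is the address range that flanneld splits into per-host subnets.
- Backend.Type selects the encapsulation (vxlan here).

Below is a sketch of a hypothetical helper, `flannel_net_config`, that prints the same JSON for a given range and backend.

```shell
# Hypothetical helper: print the flannel network config JSON.
# $1 = pod network CIDR, $2 = backend type (defaults to vxlan, as in this lab).
flannel_net_config() {
    local network="$1" backend="${2:-vxlan}"
    printf '{"Network":"%s","Backend":{"Type":"%s"}}\n' "$network" "$backend"
}
```

You can pass its output to the set command above as `"$(flannel_net_config 172.17.0.0/16 vxlan)"`. To read the stored value back, run `etcdctl get /coreos.com/network/config` with the same TLS and endpoint flags.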

Upload the flannel-v0.12.0-linux-amd64.tar.gz package, extract the Flannel binaries, and copy them to each node:

[root@k8s-master ~]# tar zxf flannel-v0.12.0-linux-amd64.tar.gz

[root@k8s-master ~]# scp flanneld mk-docker-opts.sh 192.168.23.211:/opt/kubernetes/bin/

[root@k8s-master ~]# scp flanneld mk-docker-opts.sh 192.168.23.212:/opt/kubernetes/bin/

[root@k8s-master ~]# mv flanneld mk-docker-opts.sh /opt/kubernetes/bin/

5.2 Configure Flannel

On k8s-master, create the flanneld configuration file:

[root@k8s-master ~]# cat >> /opt/kubernetes/cfg/flanneld <<EOF

FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.23.210:2379,https://192.168.23.211:2379,https://192.168.23.212:2379 --etcd-cafile=/opt/kubernetes/ssl/ca.pem --etcd-certfile=/opt/kubernetes/ssl/server.pem --etcd-keyfile=/opt/kubernetes/ssl/server-key.pem"

EOF

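On k8s-master, create the flanneld.service unit file to manage flanneld. Quote the heredoc delimiter ('EOF'). Otherwise, the shell expands $FLANNEL_OPTIONS to an empty string while writing the file, and flanneld starts without its Etcd options.

[root@k8s-master ~]# cat <<'EOF' >/usr/lib/systemd/system/flanneld.service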

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

Copy the files to k8s-node1 and k8s-node2:

[root@k8s-master ~]# scp /opt/kubernetes/cfg/flanneld root@192.168.23.211:/opt/kubernetes/cfg/flanneld

[root@k8s-master ~]# scp /opt/kubernetes/cfg/flanneld root@192.168.23.212:/opt/kubernetes/cfg/flanneld

[root@k8s-master ~]# scp /usr/lib/systemd/system/flanneld.service root@192.168.23.211:/usr/lib/systemd/system/flanneld.service

[root@k8s-master ~]# scp /usr/lib/systemd/system/flanneld.service root@192.168.23.212:/usr/lib/systemd/system/flanneld.service

On k8s-master, edit the Docker unit file so that Docker uses the subnet assigned by Flannel:

[root@k8s-master ~]# vim /usr/lib/systemd/system/docker.service

...... (other content omitted)

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

# Add the following line:

EnvironmentFile=/run/flannel/subnet.env

# Append $DOCKER_NETWORK_OPTIONS to the end of the existing ExecStart line:

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

...... (other content omitted)

Copy the file to k8s-node1 and k8s-node2:

[root@k8s-master ~]# scp /usr/lib/systemd/system/docker.service root@192.168.23.211:/usr/lib/systemd/system/docker.service

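[root@k8s-master ~]# scp /usr/lib/systemd/system/docker.service root@192.168.23.212:/usr/lib/systemd/system/docker.service

5.3 Start Flannel

Start flanneld on all three hosts, then reload systemd and restart Docker so that Docker picks up the Flannel subnet: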

[root@k8s-master ~]# systemctl enable flanneld

[root@k8s-master ~]# systemctl start flanneld

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl restart docker

[root@k8s-master ~]# ifconfig    # check that flannel.1 and docker0 are in the same subnet

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.24.1 netmask 255.255.255.0 broadcast 172.17.24.255

ether 02:42:b0:4c:11:b2 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.24.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::1051:73ff:feae:1f6e prefixlen 64 scopeid 0x20<link>

ether 12:51:73:ae:1f:6e txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

[root@k8s-node1 ~]# systemctl enable flanneld

[root@k8s-node1 ~]# systemctl start flanneld

[root@k8s-node1 ~]# systemctl daemon-reload

[root@k8s-node1 ~]# systemctl restart docker

[root@k8s-node1 ~]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.33.1 netmask 255.255.255.0 broadcast 172.17.33.255

ether 02:42:3a:23:bf:b1 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.33.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::40b9:77ff:fe79:9af2 prefixlen 64 scopeid 0x20<link>

ether 42:b9:77:79:9a:f2 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

[root@k8s-node2 ~]# systemctl enable flanneld

[root@k8s-node2 ~]# systemctl start flanneld

[root@k8s-node2 ~]# systemctl daemon-reload

[root@k8s-node2 ~]# systemctl restart docker

[root@k8s-node2 ~]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.23.1 netmask 255.255.255.0 broadcast 172.17.23.255

ether 02:42:84:94:6c:4e txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.23.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::64b1:86ff:fe71:41ba prefixlen 64 scopeid 0x20<link>

ether 66:b1:86:71:41:ba txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0
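The ifconfig check above is done by eye, but it can also be scripted. flanneld records the subnet it leased to the host as FLANNEL_SUBNET in /run/flannel/subnet.env. In this lab, mk-docker-opts.sh is told to write its output (-d) into that same file, so the file may instead contain only the derived --bip=... option. The sketch below accepts either form. It assumes a /24 lease, as in the output above, and compares only the first three octets. `docker0_in_flannel_subnet` is a hypothetical name.

```shell
# Hypothetical helper: succeed if docker0's IP is in the /24 leased by flanneld.
docker0_in_flannel_subnet() {
    local env_file="$1" docker0_ip="$2" subnet
    # Take the first address/prefix found after FLANNEL_SUBNET= or --bip=
    subnet=$(sed -n -e 's|^FLANNEL_SUBNET=||p' \
                    -e 's|.*--bip=\([0-9./]*\).*|\1|p' "$env_file" | head -n 1)
    [ -n "$subnet" ] || return 1
    # Drop the last octet (and the /prefix) and compare, e.g.
    # "172.17.24.1/24" -> "172.17.24" and "172.17.24.1" -> "172.17.24".
    [ "${subnet%.*}" = "${docker0_ip%.*}" ]
}
```

On a host, get the docker0 address and pass it in:

`docker0_in_flannel_subnet /run/flannel/subnet.env "$(ip -4 addr show docker0 | awk '/inet /{print $2}' | cut -d/ -f1)" && echo OK`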

5.4 Verify the flanneld installation

From k8s-master, ping the docker0 bridge addresses of k8s-node1 and k8s-node2. Replies like the following show that flanneld is working.

[root@k8s-master ~]# ping 172.17.33.1

PING 172.17.33.1 (172.17.33.1) 56(84) bytes of data.

64 bytes from 172.17.33.1: icmp_seq=1 ttl=64 time=0.944 ms

64 bytes from 172.17.33.1: icmp_seq=2 ttl=64 time=0.794 ms

64 bytes from 172.17.33.1: icmp_seq=3 ttl=64 time=1.48 ms

[root@k8s-master ~]# ping 172.17.23.1

PING 172.17.23.1 (172.17.23.1) 56(84) bytes of data.

64 bytes from 172.17.23.1: icmp_seq=1 ttl=64 time=0.941 ms

64 bytes from 172.17.23.1: icmp_seq=2 ttl=64 time=0.643 ms

64 bytes from 172.17.23.1: icmp_seq=3 ttl=64 time=3.31 ms

This completes the Flannel configuration.

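6. Deploy the Kubernetes Master Components

The binaries for a Kubernetes binary installation can be downloaded from https://github.com/kubernetes/kubernetes/releases. Choose the required version, then download the binaries from its CHANGELOG page. Because of network restrictions, the installation packages are distributed together with this document.

Perform the following steps on the k8s-master host to deploy the Kubernetes master components.

6.1 Add the kubectl command

Upload the kubernetes-server-linux-amd64.tar.gz package, extract it, and install kubectl: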

[root@k8s-master ~]# tar zxf kubernetes-server-linux-amd64.tar.gz

[root@k8s-master ~]# cd kubernetes/server/bin/

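[root@k8s-master bin]# cp kubectl /opt/kubernetes/bin/

6.2 Create the TLS Bootstrapping Token

Run the following commands to create the TLS Bootstrapping Token. Each line of token.csv uses the static token file format token,user,uid,"group".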

[root@k8s-master ~]# cd /opt/kubernetes/

[root@k8s-master kubernetes]# export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

[root@k8s-master kubernetes]# cat > token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

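EOF

6.3 Create the kubelet kubeconfig

Run the following commands to create the kubelet bootstrap kubeconfig (bootstrap.kubeconfig). Use the same shell session as in 6.2, because these commands rely on the $BOOTSTRAP_TOKEN variable exported there.

[root@k8s-master kubernetes]# export KUBE_APISERVER="https://192.168.23.210:6443"

1) Set the cluster parameters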

[root@k8s-master kubernetes]# cd /root/software/ssl/

[root@k8s-master ssl]# kubectl config set-cluster kubernetes \

> --certificate-authority=./ca.pem \

> --embed-certs=true \

> --server=${KUBE_APISERVER} \

> --kubeconfig=bootstrap.kubeconfig

Cluster "kubernetes" set.

2) Set the client authentication parameters

[root@k8s-master ssl]# kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=bootstrap.kubeconfig

User "kubelet-bootstrap" set.

3) Set the context parameters

[root@k8s-master ssl]# kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=bootstrap.kubeconfig

Context "default" created.

4) Set the default context

[root@k8s-master ssl]# kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

Switched to context "default".

6.4 Create the kube-proxy kubeconfig

Run the following commands to create the kube-proxy kubeconfig:

[root@k8s-master ssl]# kubectl config set-cluster kubernetes --certificate-authority=./ca.pem --embed-certs=true --server=${KUBE_APISERVER} --kubeconfig=kube-proxy.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master ssl]# kubectl config set-credentials kube-proxy --client-certificate=./kube-proxy.pem --client-key=./kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

User "kube-proxy" set.

[root@k8s-master ssl]# kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

Context "default" created.

[root@k8s-master ssl]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Switched to context "default".
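$BOOTSTRAP_TOKEN only exists in the shell session where it was exported. If bootstrap.kubeconfig is regenerated from another session, its embedded token can end up empty or different from the one in token.csv. kube-apiserver would then reject kubelet's bootstrap requests. Below is a sketch of a hypothetical check, `bootstrap_token_matches`, that compares the two files.

```shell
# Hypothetical helper: succeed only if the token in token.csv (first CSV
# field of the first line) equals the token stored in the kubeconfig.
bootstrap_token_matches() {
    local csv="$1" kubeconfig="$2" a b
    a=$(head -n 1 "$csv" | cut -d, -f1)
    # kubectl config set-credentials --token=... stores it as "token: <value>"
    b=$(awk '$1 == "token:" {print $2; exit}' "$kubeconfig")
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

`bootstrap_token_matches /opt/kubernetes/token.csv /root/software/ssl/bootstrap.kubeconfig && echo "token OK"`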

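6.5 Deploy kube-apiserver

Run the following commands to deploy kube-apiserver. apiserver.sh (from master.zip) takes two arguments: the master IP address and the comma-separated list of Etcd endpoints.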

[root@k8s-master ssl]# cd /root/kubernetes/server/bin/

[root@k8s-master bin]# cp kube-controller-manager kube-scheduler kube-apiserver /opt/kubernetes/bin/

[root@k8s-master bin]# cp /opt/kubernetes/token.csv /opt/kubernetes/cfg/

[root@k8s-master bin]# cd /opt/kubernetes/bin/

## Upload master.zip

[root@k8s-master bin]# unzip master.zip

[root@k8s-master bin]# chmod +x *.sh

[root@k8s-master bin]# ./apiserver.sh 192.168.23.210 https://192.168.23.210:2379,https://192.168.23.211:2379,https://192.168.23.212:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

6.6 Deploy kube-controller-manager

Run the following command to deploy kube-controller-manager:

[root@k8s-master bin]# sh controller-manager.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

6.7 Deploy kube-scheduler

Run the following command to deploy kube-scheduler:

[root@k8s-master bin]# sh scheduler.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

6.8 Check that the components are running

Run the following command to check the status of the components:

[root@k8s-master bin]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

7. Deploy the Kubernetes Node Components

After the master components are deployed, deploy the node components by following the steps below.

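7.1 Prepare the environment

Run the following commands on the k8s-master host to prepare the node deployment:

1. Copy the kubeconfig files and the kubelet and kube-proxy binaries to the worker nodes.
2. Bind the kubelet-bootstrap user (defined in token.csv) to the system:node-bootstrapper cluster role, so that kubelet can submit certificate signing requests during bootstrap.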

[root@k8s-master ~]# cd /root/software/ssl/

[root@k8s-master ssl]# scp *kubeconfig 192.168.23.211:/opt/kubernetes/cfg/

[root@k8s-master ssl]# scp *kubeconfig 192.168.23.212:/opt/kubernetes/cfg/

[root@k8s-master ssl]# cd /root/kubernetes/server/bin/

[root@k8s-master bin]# scp kubelet kube-proxy 192.168.23.211:/opt/kubernetes/bin/

[root@k8s-master bin]# scp kubelet kube-proxy 192.168.23.212:/opt/kubernetes/bin/

[root@k8s-master bin]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

[root@k8s-master bin]# kubectl describe clusterrolebinding kubelet-bootstrap

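7.2 Deploy kubelet

Run the following commands on each worker node to deploy kubelet. kubelet.sh (from node.zip) takes two arguments. The first is the node IP address. The second is passed to kubelet as --cluster-dns, as the process listing in 7.4 shows.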

[root@k8s-node1 ~]# cd /opt/kubernetes/bin/

## Upload node.zip

[root@k8s-node1 bin]# unzip node.zip

[root@k8s-node1 bin]# chmod +x *.sh

[root@k8s-node1 bin]# sh kubelet.sh 192.168.23.211 192.168.23.100

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@k8s-node2 ~]# cd /opt/kubernetes/bin/

## Upload node.zip

[root@k8s-node2 bin]# unzip node.zip

[root@k8s-node2 bin]# chmod +x *.sh

[root@k8s-node2 bin]# sh kubelet.sh 192.168.23.212 192.168.23.100

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

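7.3 Deploy kube-proxy

Run the following command on each worker node to deploy kube-proxy. Pass the node's own IP address, which becomes kube-proxy's --hostname-override (see 7.4).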

[root@k8s-node1 bin]# sh proxy.sh 192.168.23.211

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@k8s-node2 bin]# sh proxy.sh 192.168.23.212

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

7.4 Check that the node components are installed

Run the following command to check that the node components are running:

[root@k8s-node1 bin]# ps -ef | grep kube

root 17789 1 4 12:48 ? 00:06:00 /opt/kubernetes/bin/etcd --name=etcd02 --data-dir=/var/lib/etcd/default.etcd --listen-peer-urls=https://192.168.23.211:2380 --listen-client-urls=https://192.168.23.211:2379,http://127.0.0.1:2379 --advertise-client-urls=https://192.168.23.211:2379 --initial-advertise-peer-urls=https://192.168.23.211:2380 --initial-cluster=etcd01=https://192.168.23.210:2380,etcd02=https://192.168.23.211:2380,etcd03=https://192.168.23.212:2380 --initial-cluster-token=etcd01=https://192.168.23.210:2380,etcd02=https://192.168.23.211:2380,etcd03=https://192.168.23.212:2380 --initial-cluster-state=new --cert-file=/opt/kubernetes/ssl/server.pem --key-file=/opt/kubernetes/ssl/server-key.pem --peer-cert-file=/opt/kubernetes/ssl/server.pem --peer-key-file=/opt/kubernetes/ssl/server-key.pem --trusted-ca-file=/opt/kubernetes/ssl/ca.pem --peer-trusted-ca-file=/opt/kubernetes/ssl/ca.pem

root 17902 1 0 14:11 ? 00:00:05 /opt/kubernetes/bin/flanneld --ip-masq

root 21531 1 0 14:58 ? 00:00:00 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --address=192.168.23.211 --hostname-override=192.168.23.211 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --cert-dir=/opt/kubernetes/ssl --cluster-dns=192.168.23.100 --cluster-domain=cluster.local --fail-swap-on=false --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

root 21770 1 0 15:00 ? 00:00:00 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=192.168.23.211 --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig

root 21920 1664 0 15:01 pts/0 00:00:00 grep --color=auto kube

[root@k8s-node2 bin]# ps -ef | grep kube

root 17763 1 5 12:49 ? 00:06:56 /opt/kubernetes/bin/etcd --name=etcd03 --data-dir=/var/lib/etcd/default.etcd --listen-peer-urls=https://192.168.23.212:2380 --listen-client-urls=https://192.168.23.212:2379,http://127.0.0.1:2379 --advertise-client-urls=https://192.168.23.212:2379 --initial-advertise-peer-urls=https://192.168.23.212:2380 --initial-cluster=etcd01=https://192.168.23.210:2380,etcd02=https://192.168.23.211:2380,etcd03=https://192.168.23.212:2380 --initial-cluster-token=etcd01=https://192.168.23.210:2380,etcd02=https://192.168.23.211:2380,etcd03=https://192.168.23.212:2380 --initial-cluster-state=new --cert-file=/opt/kubernetes/ssl/server.pem --key-file=/opt/kubernetes/ssl/server-key.pem --peer-cert-file=/opt/kubernetes/ssl/server.pem --peer-key-file=/opt/kubernetes/ssl/server-key.pem --trusted-ca-file=/opt/kubernetes/ssl/ca.pem --peer-trusted-ca-file=/opt/kubernetes/ssl/ca.pem

root 17883 1 0 14:12 ? 00:00:05 /opt/kubernetes/bin/flanneld --ip-masq

root 21564 1 0 14:59 ? 00:00:01 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --address=192.168.23.212 --hostname-override=192.168.23.212 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --cert-dir=/opt/kubernetes/ssl --cluster-dns=192.168.23.100 --cluster-domain=cluster.local --fail-swap-on=false --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

root 21704 1 0 15:00 ? 00:00:00 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=192.168.23.212 --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig

root 21828 1640 0 15:01 pts/0 00:00:00 grep --color=auto kube
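The ps | grep check above can be reduced to a list of the processes that are missing. The sketch below reads process names from /proc/<pid>/comm, so it is Linux only. The kernel truncates these names to 15 characters. That is fine for the four node processes, but on the master a name like kube-controller-manager would have to be given as kube-controller. `missing_procs` is a hypothetical helper.

```shell
# Hypothetical helper: print each given process name that is not running.
missing_procs() {
    local running p
    # Names of all current processes, one per line. Processes that exit
    # while we read are skipped, and "|| true" keeps a partial read non-fatal.
    running=$(cat /proc/[0-9]*/comm 2>/dev/null || true)
    for p in "$@"; do
        # -x whole line, -F fixed string, so "kube-proxy" is matched literally
        printf '%s\n' "$running" | grep -qxF -- "$p" || echo "$p"
    done
}
```

On a healthy node, `missing_procs etcd flanneld kubelet kube-proxy` prints nothing.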

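8. Check the Automatically Issued Certificates

Once the node components are deployed, the master receives a certificate signing request (CSR) from each node's kubelet. Approve the requests to let the nodes join the cluster.

[root@k8s-master ~]# kubectl get csr    # list the certificate signing requests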

NAME AGE REQUESTOR CONDITION

node-csr-3oY8GiY4wPcnysZAho2QDTWrLZtMWd8-XrkxfDbHlo0 3m39s kubelet-bootstrap Pending

node-csr-qvn_AVGkbVibHivJ3g2_OVTB-jhwrZS33r3h2_gF-eo 5m16s kubelet-bootstrap Pending

[root@k8s-master ~]# kubectl certificate approve node-csr-3oY8GiY4wPcnysZAho2QDTWrLZtMWd8-XrkxfDbHlo0    # approve the request so the node can join; replace with your own CSR name

certificatesigningrequest.certificates.k8s.io/node-csr-3oY8GiY4wPcnysZAho2QDTWrLZtMWd8-XrkxfDbHlo0 approved

[root@k8s-master ~]# kubectl certificate approve node-csr-qvn_AVGkbVibHivJ3g2_OVTB-jhwrZS33r3h2_gF-eo

certificatesigningrequest.certificates.k8s.io/node-csr-qvn_AVGkbVibHivJ3g2_OVTB-jhwrZS33r3h2_gF-eo approved

[root@k8s-master ~]# kubectl get nodes    # check that the nodes have joined

NAME STATUS ROLES AGE VERSION

192.168.23.211 Ready <none> 112s v1.17.3

192.168.23.212 Ready <none> 2m9s v1.17.3
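With more nodes, approving each CSR by name becomes tedious. The sketch below picks the names of Pending requests out of `kubectl get csr` output. It relies only on NAME being the first column and CONDITION the last. `pending_csrs` is a hypothetical helper.

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# names of requests whose CONDITION (last column) is Pending.
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" {print $1}'
}
```

To approve them all, run `kubectl get csr | pending_csrs | xargs -r -n 1 kubectl certificate approve`. This approves every pending request without inspecting it, which is only reasonable in a lab like this one.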

The k8s cluster deployment is now complete.

To also use k8s-master as a worker node, run the following commands:

[root@k8s-master ~]# cp /root/software/ssl/*kubeconfig /opt/kubernetes/cfg/

[root@k8s-master ~]# cp /root/kubernetes/server/bin/kubelet /opt/kubernetes/bin/

[root@k8s-master ~]# cp /root/kubernetes/server/bin/kube-proxy /opt/kubernetes/bin/

[root@k8s-master ~]# cd /opt/kubernetes/bin/

## Upload node.zip

[root@k8s-master bin]# unzip node.zip

[root@k8s-master bin]# chmod +x *.sh

[root@k8s-master bin]# sh kubelet.sh 192.168.23.210 192.168.23.100

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@k8s-master bin]# sh proxy.sh 192.168.23.210

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@k8s-master bin]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-3oY8GiY4wPcnysZAho2QDTWrLZtMWd8-XrkxfDbHlo0 18m kubelet-bootstrap Approved,Issued

node-csr-VSdVgMtozEulCQJ9n_Dhf71cZOkhvw9WL8RETZByoEo 28s kubelet-bootstrap Pending

node-csr-qvn_AVGkbVibHivJ3g2_OVTB-jhwrZS33r3h2_gF-eo 20m kubelet-bootstrap Approved,Issued

[root@k8s-master bin]# kubectl certificate approve node-csr-VSdVgMtozEulCQJ9n_Dhf71cZOkhvw9WL8RETZByoEo

certificatesigningrequest.certificates.k8s.io/node-csr-VSdVgMtozEulCQJ9n_Dhf71cZOkhvw9WL8RETZByoEo approved

[root@k8s-master bin]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.23.210 Ready <none> 113s v1.17.3

192.168.23.211 Ready <none> 15m v1.17.3

192.168.23.212 Ready <none> 15m v1.17.3
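As a final check, you can filter the `kubectl get nodes` output down to the nodes that are not Ready. The sketch below assumes STATUS is the second column, as in the output above. A status such as Ready,SchedulingDisabled is also reported, because it is not exactly Ready. `not_ready_nodes` is a hypothetical helper.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" {print $1}'
}
```

`kubectl get nodes | not_ready_nodes` prints nothing when every node is Ready.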