2.4.2 Tfidf计算

2.4.2.1 目的

- 计算出每篇文章的词语的TFIDF结果用于抽取画像

2.4.2.2TFIDF模型的训练步骤

- 读取N篇文章数据

- 文章数据进行分词处理

- TFIDF模型训练保存,spark使用count与idf进行计算

- 利用模型计算N篇文章数据的TFIDF值

2.4.2.3 实现

想要用TFIDF进行计算,需要训练一个模型保存结果

- 新建一个compute_tfidf.ipynb的文件

import os

import sys

# 如果当前代码文件运行测试需要加入修改路径,避免出现后导包问题

BASE_DIR = os.path.dirname(os.path.dirname(os.getcwd()))

sys.path.insert(0, os.path.join(BASE_DIR))

PYSPARK_PYTHON = "/miniconda2/envs/reco_sys/bin/python"

# 当存在多个版本时,不指定很可能会导致出错

os.environ["PYSPARK_PYTHON"] = PYSPARK_PYTHON

os.environ["PYSPARK_DRIVER_PYTHON"] = PYSPARK_PYTHON

from offline import SparkSessionBase

class KeywordsToTfidf(SparkSessionBase):

SPARK_APP_NAME = "keywordsByTFIDF"

SPARK_EXECUTOR_MEMORY = "7g"

ENABLE_HIVE_SUPPORT = True

def __init__(self):

self.spark = self._create_spark_session()

ktt = KeywordsToTfidf()

- 读取文章原始数据

ktt.spark.sql("use article")

article_dataframe = ktt.spark.sql("select * from article_data limit 20")

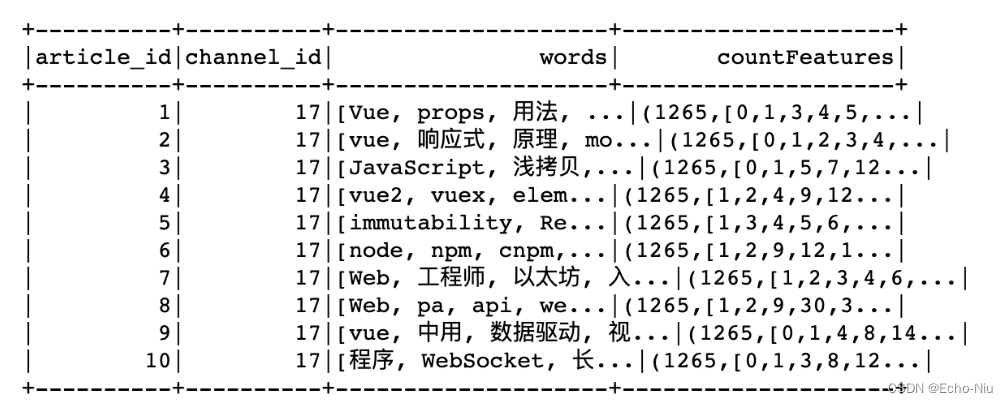

words_df = article_dataframe.rdd.mapPartitions(segmentation).toDF(["article_id", "channel_id", "words"])

- 文章数据处理函数

- 结巴词典,以及停用词(词典文件,三台Centos都需要上传一份,目录相同)

- ITKeywords.txt, stopwords.txt

scp -r ./words/ root@192.168.19.138:/root/words/

scp -r ./words/ root@192.168.19.139:/root/words/

分词部分代码:

# 分词

def segmentation(partition):

import os

import re

import jieba

import jieba.analyse

import jieba.posseg as pseg

import codecs

abspath = "/root/words"

# 结巴加载用户词典

userDict_path = os.path.join(abspath, "ITKeywords.txt")

jieba.load_userdict(userDict_path)

# 停用词文本

stopwords_path = os.path.join(abspath, "stopwords.txt")

def get_stopwords_list():

"""返回stopwords列表"""

stopwords_list = [i.strip()

for i in codecs.open(stopwords_path).readlines()]

return stopwords_list

# 所有的停用词列表

stopwords_list = get_stopwords_list()

# 分词

def cut_sentence(sentence):

"""对切割之后的词语进行过滤,去除停用词,保留名词,英文和自定义词库中的词,长度大于2的词"""

# print(sentence,"*"*100)

# eg:[pair('今天', 't'), pair('有', 'd'), pair('雾', 'n'), pair('霾', 'g')]

seg_list = pseg.lcut(sentence)

seg_list = [i for i in seg_list if i.flag not in stopwords_list]

filtered_words_list = []

for seg in seg_list:

# print(seg)

if len(seg.word) <= 1:

continue

elif seg.flag == "eng":

if len(seg.word) <= 2:

continue

else:

filtered_words_list.append(seg.word)

elif seg.flag.startswith("n"):

filtered_words_list.append(seg.word)

elif seg.flag in ["x", "eng"]: # 是自定一个词语或者是英文单词

filtered_words_list.append(seg.word)

return filtered_words_list

for row in partition:

sentence = re.sub("<.*?>", "", row.sentence) # 替换掉标签数据

words = cut_sentence(sentence)

yield row.article_id, row.channel_id, words

- 训练模型,得到每个文章词的频率Counts结果

# 词语与词频统计

from pyspark.ml.feature import CountVectorizer

# 总词汇的大小,文本中必须出现的次数

cv = CountVectorizer(inputCol="words", outputCol="countFeatures", vocabSize=200*10000, minDF=1.0)

# 训练词频统计模型

cv_model = cv.fit(words_df)

cv_model.write().overwrite().save("hdfs://hadoop-master:9000/headlines/models/CV.model")

- 训练idf模型,保存

# 词语与词频统计

from pyspark.ml.feature import CountVectorizerModel

cv_model = CountVectorizerModel.load("hdfs://hadoop-master:9000/headlines/models/CV.model")

# 得出词频向量结果

cv_result = cv_model.transform(words_df)

# 训练IDF模型

from pyspark.ml.feature import IDF

idf = IDF(inputCol="countFeatures", outputCol="idfFeatures")

idfModel = idf.fit(cv_result)

idfModel.write().overwrite().save("hdfs://hadoop-master:9000/headlines/models/IDF.model")

得到了两个少量文章测试的模型,以这两个模型为例子查看结果,所有不同的词

cv_model.vocabulary

['this',

'pa',

'node',

'data',

'数据',

'let',

'keys',

'obj',

'组件',

'npm',

'child',

'节点',

'log',

'属性',

'key',

'console',

'value',

'var',

'return',

'div']

# 每个词的逆文档频率,在历史13万文章当中是固定的值,也作为后面计算TFIDF依据

idfModel.idf.toArray()[:20]

array([0.6061358 , 0. , 0.6061358 , 0.6061358 , 0.45198512,

0.78845736, 1.01160091, 1.01160091, 1.01160091, 0.78845736,

1.29928298, 1.70474809, 0.31845373, 1.01160091, 0.78845736,

0.45198512, 0.78845736, 0.78845736, 0.45198512, 1.70474809])

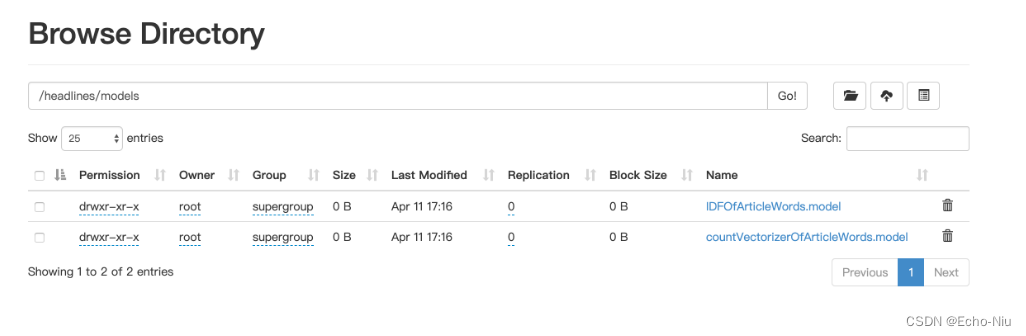

上传训练13万文章的模型

- 20篇文章,计算出代表20篇文章中N个词的IDF以及每个文档的词频,最终得到的是这20片文章的TFIDF

两个模型训练需要很久,所以在这里我们上传已经训练好的模型到指定路径

hadoop dfs -mkdir /headlines

hadoop dfs -mkdir /headlines/models/

hadoop dfs -put modelsbak/countVectorizerOfArticleWords.model/ /headlines/models/

hadoop dfs -put modelsbak/IDFOfArticleWords.model/ /headlines/models/

最终:

2.4.2.3 计算N篇文章数据的TFIDF值

- 步骤:

- 1、获取两个模型相关参数,将所有的13万文章中的关键字对应的idf值和索引保存

- 为什么要保存这些值?并且存入数据库当中?

- 2、模型计算得出N篇文章的TFIDF值选取TOPK,与IDF索引查询得到词

- 1、获取两个模型相关参数,将所有的13万文章中的关键字对应的idf值和索引保存

获取两个模型相关参数,将所有的关键字对应的idf值和索引保存

-

后续计算tfidf画像需要使用,避免放入内存中占用过多,持久化使用

-

建立表

CREATE TABLE idf_keywords_values(

keyword STRING comment "article_id",

idf DOUBLE comment "idf",

index INT comment "index");

然后读取13万参考文档所有词语与IDF值进行计算:

from pyspark.ml.feature import CountVectorizerModel

cv_model = CountVectorizerModel.load("hdfs://hadoop-master:9000/headlines/models/countVectorizerOfArticleWords.model")

from pyspark.ml.feature import IDFModel

idf_model = IDFModel.load("hdfs://hadoop-master:9000/headlines/models/IDFOfArticleWords.model")

进行计算保存

#keywords_list_with_idf = list(zip(cv_model.vocabulary, idf_model.idf.toArray()))

#def func(data):

# for index in range(len(data)):

# data[index] = list(data[index])

# data[index].append(index)

# data[index][1] = float(data[index][1])

#func(keywords_list_with_idf)

#sc = spark.sparkContext

#rdd = sc.parallelize(keywords_list_with_idf)

#df = rdd.toDF(["keywords", "idf", "index"])

# df.write.insertInto('idf_keywords_values')

存储结果:

hive> select * from idf_keywords_values limit 10;

OK

&# 1.417829594344155 0

pa 0.6651385256756351 1

ul 0.8070591229443697 2

代码 0.7368239176481552 3

方法 0.7506253985501485 4

数据 0.9375297590538404 5

return 1.1584986818528347 6

对象 1.2765716628665975 7

name 1.3833429138490618 8

this 1.6247297855214076 9

hive> desc idf_keywords_values;

OK

keyword string article_id

idf double idf

index int index

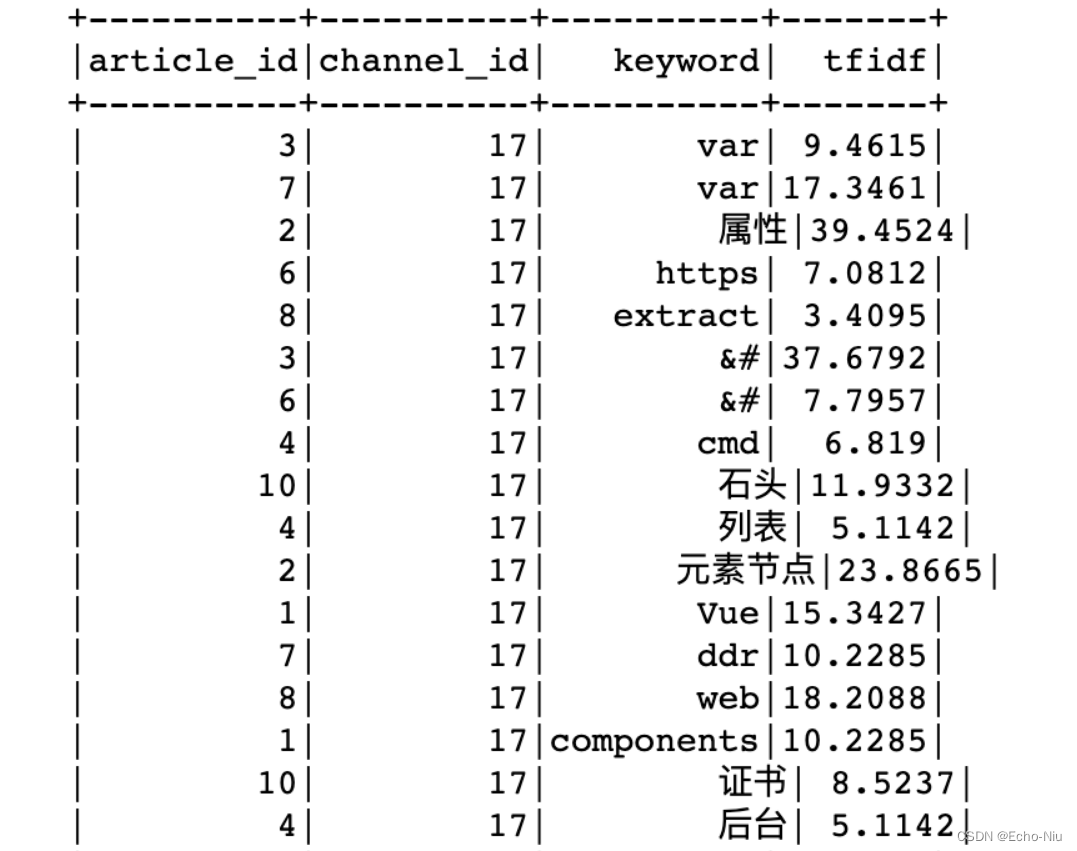

模型计算得出N篇文章的TFIDF值,IDF索引结果合并得到词

对于词频处理之后的结果进行计算

保存TFIDF的结果,在article数据库中创建表

CREATE TABLE tfidf_keywords_values(

article_id INT comment "article_id",

channel_id INT comment "channel_id",

keyword STRING comment "keyword",

tfidf DOUBLE comment "tfidf");

计算tfidf值进行存储

from pyspark.ml.feature import CountVectorizerModel

cv_model = CountVectorizerModel.load("hdfs://hadoop-master:9000/headlines/models/countVectorizerOfArticleWords.model")

from pyspark.ml.feature import IDFModel

idf_model = IDFModel.load("hdfs://hadoop-master:9000/headlines/models/IDFOfArticleWords.model")

cv_result = cv_model.transform(words_df)

tfidf_result = idf_model.transform(cv_result)

def func(partition):

TOPK = 20

for row in partition:

# 找到索引与IDF值并进行排序

_ = list(zip(row.idfFeatures.indices, row.idfFeatures.values))

_ = sorted(_, key=lambda x: x[1], reverse=True)

result = _[:TOPK]

for word_index, tfidf in result:

yield row.article_id, row.channel_id, int(word_index), round(float(tfidf), 4)

_keywordsByTFIDF = tfidf_result.rdd.mapPartitions(func).toDF(["article_id", "channel_id", "index", "tfidf"])

结果为:

如果要保存对应words读取idf_keywords_values表结果合并

# 利用结果索引与”idf_keywords_values“合并知道词

keywordsIndex = ktt.spark.sql("select keyword, index idx from idf_keywords_values")

# 利用结果索引与”idf_keywords_values“合并知道词

keywordsByTFIDF = _keywordsByTFIDF.join(keywordsIndex, keywordsIndex.idx == _keywordsByTFIDF.index).select(["article_id", "channel_id", "keyword", "tfidf"])

keywordsByTFIDF.write.insertInto("tfidf_keywords_values")

合并之后存储到TFIDF结果表中,便于后续读取处理

hive> desc tfidf_keywords_values;

OK

article_id int article_id

channel_id int channel_id

keyword string keyword

tfidf double tfidf

Time taken: 0.085 seconds, Fetched: 4 row(s)

hive> select * from tfidf_keywords_values limit 10;

OK

98319 17 var 20.6079

98323 17 var 7.4938

98326 17 var 104.9128

98344 17 var 5.6203

98359 17 var 69.3174

98360 17 var 9.3672

98392 17 var 14.9875

98393 17 var 155.4958

98406 17 var 11.2407

98419 17 var 59.9502

Time taken: 0.499 seconds, Fetched: 10 row(s)

2.4.3 TextRank计算

步骤:

- 1、TextRank存储结构

- 2、TextRank过滤计算

创建textrank_keywords_values表

CREATE TABLE textrank_keywords_values(

article_id INT comment "article_id",

channel_id INT comment "channel_id",

keyword STRING comment "keyword",

textrank DOUBLE comment "textrank");

进行词的处理与存储

# 计算textrank

textrank_keywords_df = article_dataframe.rdd.mapPartitions(textrank).toDF(

["article_id", "channel_id", "keyword", "textrank"])

# textrank_keywords_df.write.insertInto("textrank_keywords_values")

分词结果:

# 分词

def textrank(partition):

import os

import jieba

import jieba.analyse

import jieba.posseg as pseg

import codecs

abspath = "/root/words"

# 结巴加载用户词典

userDict_path = os.path.join(abspath, "ITKeywords.txt")

jieba.load_userdict(userDict_path)

# 停用词文本

stopwords_path = os.path.join(abspath, "stopwords.txt")

def get_stopwords_list():

"""返回stopwords列表"""

stopwords_list = [i.strip()

for i in codecs.open(stopwords_path).readlines()]

return stopwords_list

# 所有的停用词列表

stopwords_list = get_stopwords_list()

class TextRank(jieba.analyse.TextRank):

def __init__(self, window=20, word_min_len=2):

super(TextRank, self).__init__()

self.span = window # 窗口大小

self.word_min_len = word_min_len # 单词的最小长度

# 要保留的词性,根据jieba github ,具体参见https://github.com/baidu/lac

self.pos_filt = frozenset(

('n', 'x', 'eng', 'f', 's', 't', 'nr', 'ns', 'nt', "nw", "nz", "PER", "LOC", "ORG"))

def pairfilter(self, wp):

"""过滤条件,返回True或者False"""

if wp.flag == "eng":

if len(wp.word) <= 2:

return False

if wp.flag in self.pos_filt and len(wp.word.strip()) >= self.word_min_len \

and wp.word.lower() not in stopwords_list:

return True

# TextRank过滤窗口大小为5,单词最小为2

textrank_model = TextRank(window=5, word_min_len=2)

allowPOS = ('n', "x", 'eng', 'nr', 'ns', 'nt', "nw", "nz", "c")

for row in partition:

tags = textrank_model.textrank(row.sentence, topK=20, withWeight=True, allowPOS=allowPOS, withFlag=False)

for tag in tags:

yield row.article_id, row.channel_id, tag[0], tag[1]

最终存储为:

hive> select * from textrank_keywords_values limit 20;

OK

98319 17 var 20.6079

98323 17 var 7.4938

98326 17 var 104.9128

98344 17 var 5.6203

98359 17 var 69.3174

98360 17 var 9.3672

98392 17 var 14.9875

98393 17 var 155.4958

98406 17 var 11.2407

98419 17 var 59.9502

98442 17 var 18.7344

98445 17 var 37.4689

98512 17 var 29.9751

98544 17 var 5.6203

98545 17 var 22.4813

98548 17 var 71.1909

98599 17 var 11.2407

98609 17 var 18.7344

98642 17 var 67.444

98648 15 var 20.6079

Time taken: 0.344 seconds, Fetched: 20 row(s)

hive> desc textrank_keywords_values;

OK

article_id int article_id

channel_id int channel_id

keyword string keyword

textrank double textrank

2.5.3 文章画像结果

对文章进行计算画像

- 步骤:

- 1、加载IDF,保留关键词以及权重计算(TextRank * IDF)

- 2、合并关键词权重到字典结果

- 3、将tfidf和textrank共现的词作为主题词

- 4、将主题词表和关键词表进行合并,插入表

加载IDF,保留关键词以及权重计算(TextRank * IDF)

idf = ktt.spark.sql("select * from idf_keywords_values")

idf = idf.withColumnRenamed("keyword", "keyword1")

result = textrank_keywords_df.join(idf,textrank_keywords_df.keyword==idf.keyword1)

keywords_res = result.withColumn("weights", result.textrank * result.idf).select(["article_id", "channel_id", "keyword", "weights"])

合并关键词权重到字典结果

keywords_res.registerTempTable("temptable")

merge_keywords = ktt.spark.sql("select article_id, min(channel_id) channel_id, collect_list(keyword) keywords, collect_list(weights) weights from temptable group by article_id")

# 合并关键词权重合并成字典

def _func(row):

return row.article_id, row.channel_id, dict(zip(row.keywords, row.weights))

keywords_info = merge_keywords.rdd.map(_func).toDF(["article_id", "channel_id", "keywords"])

将tfidf和textrank共现的词作为主题词

topic_sql = """

select t.article_id article_id2, collect_set(t.keyword) topics from tfidf_keywords_values t

inner join

textrank_keywords_values r

where t.keyword=r.keyword

group by article_id2

"""

article_topics = ktt.spark.sql(topic_sql)

将主题词表和关键词表进行合并

article_profile = keywords_info.join(article_topics, keywords_info.article_id==article_topics.article_id2).select(["article_id", "channel_id", "keywords", "topics"])

# articleProfile.write.insertInto("article_profile")

结果显示

hive> select * from article_profile limit 1;

OK

26 17 {"策略":0.3973770571351729,"jpg":0.9806348975390871,"用户":1.2794959063944176,"strong":1.6488457985625076,"文件":0.28144603583387057,"逻辑":0.45256526469610714,"形式":0.4123994242601279,"全自":0.9594604850547191,"h2":0.6244481634710125,"版本":0.44280276959510817,"Adobe":0.8553618185108718,"安装":0.8305037437573172,"检查更新":1.8088946300014435,"产品":0.774842382276899,"下载页":1.4256311032544344,"过程":0.19827163395829256,"json":0.6423301791599972,"方式":0.582762869780791,"退出应用":1.2338671268242603,"Setup":1.004399549339134} ["Electron","全自动","产品","版本号","安装包","检查更新","方案","版本","退出应用","逻辑","安装过程","方式","定性","新版本","Setup","静默","用户"]

Time taken: 0.322 seconds, Fetched: 1 row(s)