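From GIMPLE to RTL

- From GIMPLE to RTL

- GIMPLE sequence

- Test code:

- The CFG is shown below:

- Some typical data structures

- Basic process of RTL generation

- Variable expansion

- Computing the initial state of the current function's stack frame

- Initializing variable expansion

- Expanding the variables that can be expanded and generating their RTX

- Expanding block-scoped variables whose TREE_USED is 1

- Other stack processing (optimized allocation)

- Summary

- Parameter and return value handling

- Handling the init block (TODO)

- RTL generation for basic blocks (TODO)

- Handling the exit block

- Other processing

- Converting GIMPLE statements to RTL

- General process of GIMPLE statement conversion

- Converting GIMPLE statements to tree form

- Generating RTL from the tree form

- RTL generation for GIMPLE_GOTO statements

- RTL generation for GIMPLE_ASSIGN statements

- GIMPLE->TREE:

- TREE->RTL:

- Summary

From GIMPLE to RTL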

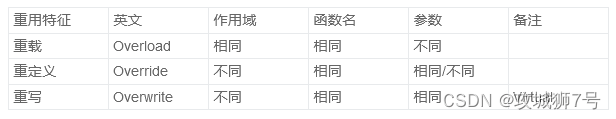

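GIMPLE is an intermediate representation that is independent of both the front-end programming language and the back-end target machine. To support multiple target machines, GCC introduces RTL. Strictly speaking, "from GIMPLE to RTL" here means the conversion from GIMPLE to IR-RTL, that is, the process of converting GIMPLE into a sequence of insns.

The RTX that represents an insn is one of the following six kinds of RTX expressions: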

DEF_RTL_EXPR(INSN, "insn", "iuuBieie", RTX_INSN)

DEF_RTL_EXPR(JUMP_INSN, "jump_insn", "iuuBieie0", RTX_INSN)

DEF_RTL_EXPR(CALL_INSN, "call_insn", "iuuBieiee", RTX_INSN)

DEF_RTL_EXPR(BARRIER, "barrier", "iuu00000", RTX_EXTRA)

DEF_RTL_EXPR(CODE_LABEL, "code_label", "iuuB00is", RTX_EXTRA)

DEF_RTL_EXPR(NOTE, "note", "iuuB0ni", RTX_EXTRA)

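GIMPLE sequence

After the GIMPLE sequence has been generated, GCC performs a variety of target-independent processing and optimization on the GIMPLE intermediate form. This work is organized into a series of passes. The last key pass that operates on GIMPLE is pass_expand, which converts GIMPLE to RTL, that is, it generates insns in RTL form from the GIMPLE intermediate result. The conversion from GIMPLE to RTL is a conversion from machine-independent information to machine-dependent information.

Test code: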

int main(int argc, char *argv[]) {

int i = 0;

int sum = 0;

for (int i = 0; i < 10; i++) {

sum = sum + i;

}

return sum;

}

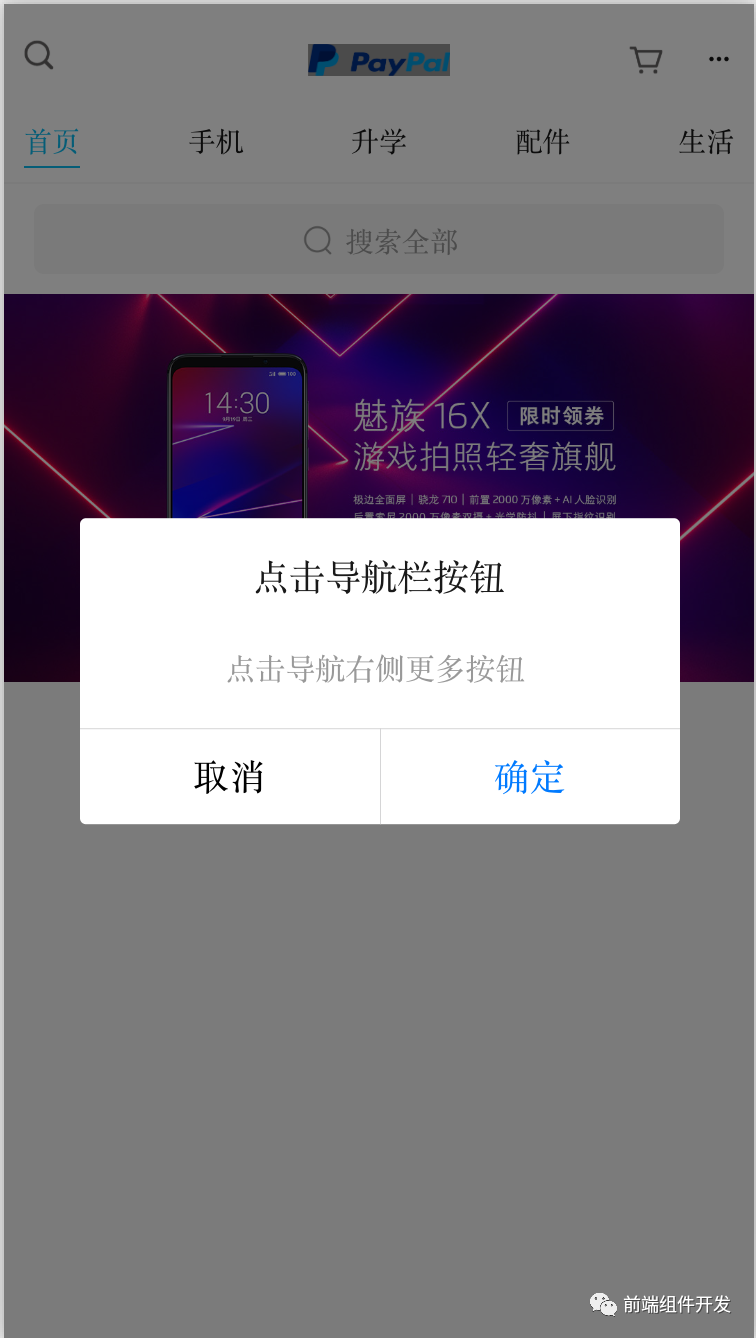

The CFG is shown below:

<bb 2> :

gimple_assign <integer_cst, i, 0, NULL, NULL>

gimple_assign <integer_cst, sum, 0, NULL, NULL>

gimple_assign <integer_cst, i, 0, NULL, NULL>

goto <bb 4>; [INV]

<bb 3> :

gimple_assign <plus_expr, sum, sum, i, NULL>

gimple_assign <plus_expr, i, i, 1, NULL>

<bb 4> :

gimple_cond <le_expr, i, 9, NULL, NULL>

goto <bb 3>; [INV]

else

goto <bb 5>; [INV]

<bb 5> :

gimple_assign <var_decl, D.1954, sum, NULL, NULL>

<bb 6> :

gimple_label <<L3>>

gimple_return <D.1954>
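For readers less used to raw GIMPLE dumps, the CFG above can be read as goto-style C. The following is a hand-written sketch, not compiler output: the function name `main_as_cfg`, the labels, and the variable `result` (standing in for D.1954) are made up for illustration, with one label per basic block.

```c
/* Hand-written goto form of the CFG above; labels mirror <bb N>.
   main_as_cfg is a hypothetical name, not something GCC emits. */
int main_as_cfg(void)
{
    int i, sum, result;   /* result plays the role of D.1954 */

    /* <bb 2> */
    i = 0;
    sum = 0;
    i = 0;
    goto bb4;

bb3:
    sum = sum + i;
    i = i + 1;
    /* falls through to <bb 4> */

bb4:
    if (i <= 9)
        goto bb3;
    else
        goto bb5;

bb5:
    result = sum;

    /* <bb 6> */
    return result;
}
```

Calling `main_as_cfg()` yields the same value as the original loop, which makes it easy to check the reading of each block by hand.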

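Some typical data structures

When RTL is generated function by function, the RTL information of the current function has to be maintained. It is described mainly by the rtl_data structure, which is defined in function.h: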

/* Datastructures maintained for currently processed function in RTL form. */

struct rtl_data GTY(())

{

struct expr_status expr;

struct emit_status emit;

struct varasm_status varasm;

struct incoming_args args;

struct function_subsections subsections;

struct rtl_eh eh;

/* For function.c */

/* # of bytes of outgoing arguments. If ACCUMULATE_OUTGOING_ARGS is

defined, the needed space is pushed by the prologue. */

int outgoing_args_size;

/* If nonzero, an RTL expression for the location at which the current

function returns its result. If the current function returns its

result in a register, current_function_return_rtx will always be

the hard register containing the result. */

rtx return_rtx;

/* Opaque pointer used by get_hard_reg_initial_val and

has_hard_reg_initial_val (see integrate.[hc]). */

struct initial_value_struct *hard_reg_initial_vals;

/* A variable living at the top of the frame that holds a known value.

Used for detecting stack clobbers. */

tree stack_protect_guard;

/* List (chain of EXPR_LIST) of labels heading the current handlers for

nonlocal gotos. */

rtx x_nonlocal_goto_handler_labels;

/* Label that will go on function epilogue.

Jumping to this label serves as a "return" instruction

on machines which require execution of the epilogue on all returns. */

rtx x_return_label;

/* Label that will go on the end of function epilogue.

Jumping to this label serves as a "naked return" instruction

on machines which require execution of the epilogue on all returns. */

rtx x_naked_return_label;

/* List (chain of EXPR_LISTs) of all stack slots in this function.

Made for the sake of unshare_all_rtl. */

rtx x_stack_slot_list;

/* Place after which to insert the tail_recursion_label if we need one. */

rtx x_stack_check_probe_note;

/* Location at which to save the argument pointer if it will need to be

referenced. There are two cases where this is done: if nonlocal gotos

exist, or if vars stored at an offset from the argument pointer will be

needed by inner routines. */

rtx x_arg_pointer_save_area;

/* Dynamic Realign Argument Pointer used for realigning stack. */

rtx drap_reg;

/* Offset to end of allocated area of stack frame.

If stack grows down, this is the address of the last stack slot allocated.

If stack grows up, this is the address for the next slot. */

HOST_WIDE_INT x_frame_offset;

/* Insn after which register parms and SAVE_EXPRs are born, if nonopt. */

rtx x_parm_birth_insn;

/* List of all used temporaries allocated, by level. */

VEC(temp_slot_p,gc) *x_used_temp_slots;

/* List of available temp slots. */

struct temp_slot *x_avail_temp_slots;

/* Current nesting level for temporaries. */

int x_temp_slot_level;

/* The largest alignment needed on the stack, including requirement

for outgoing stack alignment. */

unsigned int stack_alignment_needed;

/* Preferred alignment of the end of stack frame, which is preferred

to call other functions. */

unsigned int preferred_stack_boundary;

/* The minimum alignment of parameter stack. */

unsigned int parm_stack_boundary;

/* The largest alignment of slot allocated on the stack. */

unsigned int max_used_stack_slot_alignment;

/* The stack alignment estimated before reload, with consideration of

following factors:

1. Alignment of local stack variables (max_used_stack_slot_alignment)

2. Alignment requirement to call other functions

(preferred_stack_boundary)

3. Alignment of non-local stack variables but might be spilled in

local stack. */

unsigned int stack_alignment_estimated;

/* For reorg. */

/* If some insns can be deferred to the delay slots of the epilogue, the

delay list for them is recorded here. */

rtx epilogue_delay_list;

/* Nonzero if function being compiled called builtin_return_addr or

builtin_frame_address with nonzero count. */

bool accesses_prior_frames;

/* Nonzero if the function calls __builtin_eh_return. */

bool calls_eh_return;

/* Nonzero if function saves all registers, e.g. if it has a nonlocal

label that can reach the exit block via non-exceptional paths. */

bool saves_all_registers;

/* Nonzero if function being compiled has nonlocal gotos to parent

function. */

bool has_nonlocal_goto;

/* Nonzero if function being compiled has an asm statement. */

bool has_asm_statement;

/* This bit is used by the exception handling logic. It is set if all

calls (if any) are sibling calls. Such functions do not have to

have EH tables generated, as they cannot throw. A call to such a

function, however, should be treated as throwing if any of its callees

can throw. */

bool all_throwers_are_sibcalls;

/* Nonzero if stack limit checking should be enabled in the current

function. */

bool limit_stack;

/* Nonzero if profiling code should be generated. */

bool profile;

/* Nonzero if the current function uses the constant pool. */

bool uses_const_pool;

/* Nonzero if the current function uses pic_offset_table_rtx. */

bool uses_pic_offset_table;

/* Nonzero if the current function needs an lsda for exception handling. */

bool uses_eh_lsda;

/* Set when the tail call has been produced. */

bool tail_call_emit;

/* Nonzero if code to initialize arg_pointer_save_area has been emitted. */

bool arg_pointer_save_area_init;

/* Nonzero if current function must be given a frame pointer.

Set in global.c if anything is allocated on the stack there. */

bool frame_pointer_needed;

/* When set, expand should optimize for speed. */

bool maybe_hot_insn_p;

/* Nonzero if function stack realignment is needed. This flag may be

set twice: before and after reload. It is set before reload wrt

stack alignment estimation before reload. It will be changed after

reload if by then criteria of stack realignment is different.

The value set after reload is the accurate one and is finalized. */

bool stack_realign_needed;

/* Nonzero if function stack realignment is tried. This flag is set

only once before reload. It affects register elimination. This

is used to generate DWARF debug info for stack variables. */

bool stack_realign_tried;

/* Nonzero if function being compiled needs dynamic realigned

argument pointer (drap) if stack needs realigning. */

bool need_drap;

/* Nonzero if function stack realignment estimation is done, namely

stack_realign_needed flag has been set before reload wrt estimated

stack alignment info. */

bool stack_realign_processed;

/* Nonzero if function stack realignment has been finalized, namely

stack_realign_needed flag has been set and finalized after reload. */

bool stack_realign_finalized;

/* True if dbr_schedule has already been called for this function. */

bool dbr_scheduled_p;

};

Some fields of the rtl_data structure are accessed through the following macros:

#define return_label (crtl->x_return_label)

#define naked_return_label (crtl->x_naked_return_label)

#define stack_slot_list (crtl->x_stack_slot_list)

#define parm_birth_insn (crtl->x_parm_birth_insn)

#define frame_offset (crtl->x_frame_offset)

#define stack_check_probe_note (crtl->x_stack_check_probe_note)

#define arg_pointer_save_area (crtl->x_arg_pointer_save_area)

#define used_temp_slots (crtl->x_used_temp_slots)

#define avail_temp_slots (crtl->x_avail_temp_slots)

#define temp_slot_level (crtl->x_temp_slot_level)

#define nonlocal_goto_handler_labels (crtl->x_nonlocal_goto_handler_labels)

#define frame_pointer_needed (crtl->frame_pointer_needed)

#define stack_realign_fp (crtl->stack_realign_needed && !crtl->need_drap)

#define stack_realign_drap (crtl->stack_realign_needed && crtl->need_drap)

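emit is one of the more important members of the rtl_data structure. As shown below, it holds the insn sequence currently being processed for the current function (rtx x_first_insn, rtx x_last_insn, int x_cur_insn_uid):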

struct emit_status GTY(())

{

/* This is reset to LAST_VIRTUAL_REGISTER + 1 at the start of each function.

After rtl generation, it is 1 plus the largest register number used. */

int x_reg_rtx_no;

/* Lowest label number in current function. */

int x_first_label_num;

/* The ends of the doubly-linked chain of rtl for the current function.

Both are reset to null at the start of rtl generation for the function.

start_sequence saves both of these on `sequence_stack' and then starts

a new, nested sequence of insns. */

rtx x_first_insn;

rtx x_last_insn;

/* Stack of pending (incomplete) sequences saved by `start_sequence'.

Each element describes one pending sequence.

The main insn-chain is saved in the last element of the chain,

unless the chain is empty. */

struct sequence_stack *sequence_stack;

/* INSN_UID for next insn emitted.

Reset to 1 for each function compiled. */

int x_cur_insn_uid;

/* Location the last line-number NOTE emitted.

This is used to avoid generating duplicates. */

location_t x_last_location;

/* The length of the regno_pointer_align, regno_decl, and x_regno_reg_rtx

vectors. Since these vectors are needed during the expansion phase when

the total number of registers in the function is not yet known, the

vectors are copied and made bigger when necessary. */

int regno_pointer_align_length;

/* Indexed by pseudo register number, if nonzero gives the known alignment

for that pseudo (if REG_POINTER is set in x_regno_reg_rtx).

Allocated in parallel with x_regno_reg_rtx. */

unsigned char * GTY((skip)) regno_pointer_align;

};

emit-rtl.c also defines the following macros for accessing the insn sequence currently being processed for the current function:

#define first_insn (crtl->emit.x_first_insn)

#define last_insn (crtl->emit.x_last_insn)

#define cur_insn_uid (crtl->emit.x_cur_insn_uid)

#define last_location (crtl->emit.x_last_location)

#define first_label_num (crtl->emit.x_first_label_num)
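The accessor-macro pattern used here can be tried in isolation. The sketch below is a toy model under stated assumptions: every `toy_` name is hypothetical, the structures keep only one field each, and nothing here is GCC's actual definition of `crtl`.

```c
/* Toy model of the "macro hides a nested per-function field" pattern.
   All toy_ names are invented for this sketch. */
struct toy_emit_status {
    int x_cur_insn_uid;   /* uid for the next insn emitted */
};

struct toy_rtl_data {
    struct toy_emit_status emit;
};

static struct toy_rtl_data toy_x_rtl;
static struct toy_rtl_data *toy_crtl = &toy_x_rtl;

/* Reads like a plain variable, but expands to a field access. */
#define toy_cur_insn_uid (toy_crtl->emit.x_cur_insn_uid)

/* Reset the counter at the start of a function, as the comment on
   x_cur_insn_uid describes. */
void toy_start_function(void)
{
    toy_cur_insn_uid = 1;
}

/* Hand out the next uid, the way emitting an insn would. */
int toy_next_uid(void)
{
    return toy_cur_insn_uid++;
}
```

The point of the design is that code written against the short name keeps working even when the storage moves into a per-function structure.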

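Basic process of RTL generation

The internal RTL representation is converted from the GIMPLE form. It is another canonical intermediate representation of the program code, denoted IR-RTL, and the assembly code for the target machine is generated from IR-RTL.

In essence, the RTL intermediate representation of program code is a doubly linked list of insns, made up of six RTX forms: insn, jump_insn, call_insn, barrier, code_label, and note.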
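To make the "doubly linked list of insns" concrete, here is a minimal sketch of such a chain. It is a simplified model, not GCC's rtx layout: the `toy_` types and `toy_emit` are assumptions made for illustration, and each node carries only a uid, a code, and the two links.

```c
#include <stddef.h>
#include <stdlib.h>

/* Toy stand-ins for the six insn-level RTX codes. */
enum toy_code { TOY_INSN, TOY_JUMP_INSN, TOY_CALL_INSN,
                TOY_BARRIER, TOY_CODE_LABEL, TOY_NOTE };

struct toy_insn {
    int uid;
    enum toy_code code;
    struct toy_insn *prev, *next;
};

struct toy_chain {
    struct toy_insn *first, *last;
    int cur_uid;              /* uid for the next insn; start at 1 */
};

/* Append a new insn at the end of the chain.
   Returns the new node, or NULL if allocation fails. */
struct toy_insn *toy_emit(struct toy_chain *c, enum toy_code code)
{
    struct toy_insn *in = malloc(sizeof *in);
    if (!in)
        return NULL;
    in->uid = c->cur_uid++;
    in->code = code;
    in->next = NULL;
    in->prev = c->last;
    if (c->last)
        c->last->next = in;
    else
        c->first = in;
    c->last = in;
    return in;
}

/* Count the insns by walking the chain forward. */
int toy_chain_length(const struct toy_chain *c)
{
    int n = 0;
    for (const struct toy_insn *in = c->first; in; in = in->next)
        n++;
    return n;
}
```

Starting from `struct toy_chain c = { NULL, NULL, 1 };`, each call to `toy_emit` extends the chain at the tail, which is the role `x_first_insn`, `x_last_insn`, and `x_cur_insn_uid` play in emit_status.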

Now consider the key pass that processes GIMPLE. The rtl_opt_pass pass_expand performs the conversion from GIMPLE to RTL. Its declaration is shown below; its entry function is gimple_expand_cfg in gcc/cfgexpand.c:

struct rtl_opt_pass pass_expand =

{

{

RTL_PASS,

"expand", /* name */

NULL, /* gate */

gimple_expand_cfg, /* execute */

NULL, /* sub */

NULL, /* next */

0, /* static_pass_number */

TV_EXPAND, /* tv_id */

/* ??? If TER is enabled, we actually receive GENERIC. */

PROP_gimple_leh | PROP_cfg, /* properties_required */

PROP_rtl, /* properties_provided */

PROP_trees, /* properties_destroyed */

0, /* todo_flags_start */

TODO_dump_func, /* todo_flags_finish */

}

};


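Note: in GCC, the conversion from GIMPLE to RTL is performed function by function. Once GCC has parsed a function, the AST of that function is built, then the AST is normalized and converted into GIMPLE statements. GCC applies its optimization passes to the function's GIMPLE intermediate representation and finally runs pass_expand to convert each function's GIMPLE sequence into an RTL sequence.

The gimple_expand_cfg function is as follows: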

/* Translate the intermediate representation contained in the CFG

from GIMPLE trees to RTL.

We do conversion per basic block and preserve/update the tree CFG.

This implies we have to do some magic as the CFG can simultaneously

consist of basic blocks containing RTL and GIMPLE trees. This can

confuse the CFG hooks, so be careful to not manipulate CFG during

the expansion. */

static unsigned int

gimple_expand_cfg (void)

{

basic_block bb, init_block;

sbitmap blocks;

edge_iterator ei;

edge e;

/* Some backends want to know that we are expanding to RTL. */

currently_expanding_to_rtl = 1;

rtl_profile_for_bb (ENTRY_BLOCK_PTR);

insn_locators_alloc ();

if (!DECL_BUILT_IN (current_function_decl))

{

/* Eventually, all FEs should explicitly set function_start_locus. */

if (cfun->function_start_locus == UNKNOWN_LOCATION)

set_curr_insn_source_location

(DECL_SOURCE_LOCATION (current_function_decl));

else

set_curr_insn_source_location (cfun->function_start_locus);

}

set_curr_insn_block (DECL_INITIAL (current_function_decl));

prologue_locator = curr_insn_locator ();

/* Make sure first insn is a note even if we don't want linenums.

This makes sure the first insn will never be deleted.

Also, final expects a note to appear there. */

emit_note (NOTE_INSN_DELETED);

/* Mark arrays indexed with non-constant indices with TREE_ADDRESSABLE. */

discover_nonconstant_array_refs ();

targetm.expand_to_rtl_hook ();

crtl->stack_alignment_needed = STACK_BOUNDARY;

crtl->max_used_stack_slot_alignment = STACK_BOUNDARY;

crtl->stack_alignment_estimated = STACK_BOUNDARY;

crtl->preferred_stack_boundary = STACK_BOUNDARY;

cfun->cfg->max_jumptable_ents = 0;

/* Expand the variables recorded during gimple lowering. */

expand_used_vars ();

/* Honor stack protection warnings. */

if (warn_stack_protect)

{

if (cfun->calls_alloca)

warning (OPT_Wstack_protector,

"not protecting local variables: variable length buffer");

if (has_short_buffer && !crtl->stack_protect_guard)

warning (OPT_Wstack_protector,

"not protecting function: no buffer at least %d bytes long",

(int) PARAM_VALUE (PARAM_SSP_BUFFER_SIZE));

}

/* Set up parameters and prepare for return, for the function. */

expand_function_start (current_function_decl);

/* If this function is `main', emit a call to `__main'

to run global initializers, etc. */

if (DECL_NAME (current_function_decl)

&& MAIN_NAME_P (DECL_NAME (current_function_decl))

&& DECL_FILE_SCOPE_P (current_function_decl))

expand_main_function ();

/* Initialize the stack_protect_guard field. This must happen after the

call to __main (if any) so that the external decl is initialized. */

if (crtl->stack_protect_guard)

stack_protect_prologue ();

/* Update stack boundary if needed. */

if (SUPPORTS_STACK_ALIGNMENT)

{

/* Call update_stack_boundary here to update incoming stack

boundary before TARGET_FUNCTION_OK_FOR_SIBCALL is called.

TARGET_FUNCTION_OK_FOR_SIBCALL needs to know the accurate

incoming stack alignment to check if it is OK to perform

sibcall optimization since sibcall optimization will only

align the outgoing stack to incoming stack boundary. */

if (targetm.calls.update_stack_boundary)

targetm.calls.update_stack_boundary ();

/* The incoming stack frame has to be aligned at least at

parm_stack_boundary. */

gcc_assert (crtl->parm_stack_boundary <= INCOMING_STACK_BOUNDARY);

}

/* Register rtl specific functions for cfg. */

rtl_register_cfg_hooks ();

init_block = construct_init_block ();

/* Clear EDGE_EXECUTABLE on the entry edge(s). It is cleaned from the

remaining edges in expand_gimple_basic_block. */

FOR_EACH_EDGE (e, ei, ENTRY_BLOCK_PTR->succs)

e->flags &= ~EDGE_EXECUTABLE;

lab_rtx_for_bb = pointer_map_create ();

FOR_BB_BETWEEN (bb, init_block->next_bb, EXIT_BLOCK_PTR, next_bb)

bb = expand_gimple_basic_block (bb);

/* Expansion is used by optimization passes too, set maybe_hot_insn_p

conservatively to true until they are all profile aware. */

pointer_map_destroy (lab_rtx_for_bb);

free_histograms ();

construct_exit_block ();

set_curr_insn_block (DECL_INITIAL (current_function_decl));

insn_locators_finalize ();

/* We're done expanding trees to RTL. */

currently_expanding_to_rtl = 0;

/* Convert tree EH labels to RTL EH labels and zap the tree EH table. */

convert_from_eh_region_ranges ();

set_eh_throw_stmt_table (cfun, NULL);

rebuild_jump_labels (get_insns ());

find_exception_handler_labels ();

blocks = sbitmap_alloc (last_basic_block);

sbitmap_ones (blocks);

find_many_sub_basic_blocks (blocks);

purge_all_dead_edges ();

sbitmap_free (blocks);

compact_blocks ();

expand_stack_alignment ();

#ifdef ENABLE_CHECKING

verify_flow_info ();

#endif

/* There's no need to defer outputting this function any more; we

know we want to output it. */

DECL_DEFER_OUTPUT (current_function_decl) = 0;

/* Now that we're done expanding trees to RTL, we shouldn't have any

more CONCATs anywhere. */

generating_concat_p = 0;

if (dump_file)

{

fprintf (dump_file,

"\n\n;;\n;; Full RTL generated for this function:\n;;\n");

/* And the pass manager will dump RTL for us. */

}

/* If we're emitting a nested function, make sure its parent gets

emitted as well. Doing otherwise confuses debug info. */

{

tree parent;

for (parent = DECL_CONTEXT (current_function_decl);

parent != NULL_TREE;

parent = get_containing_scope (parent))

if (TREE_CODE (parent) == FUNCTION_DECL)

TREE_SYMBOL_REFERENCED (DECL_ASSEMBLER_NAME (parent)) = 1;

}

/* We are now committed to emitting code for this function. Do any

preparation, such as emitting abstract debug info for the inline

before it gets mangled by optimization. */

if (cgraph_function_possibly_inlined_p (current_function_decl))

(*debug_hooks->outlining_inline_function) (current_function_decl);

TREE_ASM_WRITTEN (current_function_decl) = 1;

/* After expanding, the return labels are no longer needed. */

return_label = NULL;

naked_return_label = NULL;

/* Tag the blocks with a depth number so that change_scope can find

the common parent easily. */

set_block_levels (DECL_INITIAL (cfun->decl), 0);

default_rtl_profile ();

return 0;

}

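As the function shows, converting each function from GIMPLE form to RTL mainly involves the following steps:

(1) Variable expansion: expand_used_vars(void) is called to analyze all variables in the current function, allocate space for them in virtual registers or on the stack, and generate the corresponding RTX.

(2) Parameter and return value handling: expand_function_start(current_function_decl) is called to process the function's parameters and return value and generate the corresponding RTX.

(3) Handling the init block: construct_init_block(void) is called to create the init block and fix up the function's control flow graph (CFG).

(4) Basic block expansion: the GIMPLE statement sequence in each basic block of the function body is expanded one by one. This is the main part of RTL generation. It is done by the FOR_BB_BETWEEN loop in gimple_expand_cfg, which calls expand_gimple_basic_block on every basic block after the init block.

(5) Handling the exit block: construct_exit_block(void) is called to create the exit block, generate the RTL for function exit, and fix up the function's control flow graph (CFG).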

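Variable expansion

In essence, variable expansion analyzes the variables used in a function, allocates space for them on the stack or in registers (virtual or physical) according to their declared type and storage characteristics, and creates the corresponding RTX. The RTX generated by variable expansion later appear as operands in insns.

Local variables defined in a function are allocated memory on the stack. Their storage address has to be determined from the current stack layout, and an RTX object for that stack address is generated (its RTX_CODE is MEM). GIMPLE temporaries used in GIMPLE statements are generally assigned virtual registers. In that case the virtual register number has to be determined and an RTX object representing that virtual register is generated (its RTX_CODE is REG). Static and global variables used by the function are generally neither given stack addresses nor assigned virtual registers. They are stored in sections such as .data or .bss of the object file, and insns access them through their symbol information.

The expand_used_vars function is analyzed below: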

/* Expand all variables used in the function. */

static void

expand_used_vars (void)

{

tree t, next, outer_block = DECL_INITIAL (current_function_decl);

/* Compute the phase of the stack frame for this function. */

{

int align = PREFERRED_STACK_BOUNDARY / BITS_PER_UNIT;

int off = STARTING_FRAME_OFFSET % align;

frame_phase = off ? align - off : 0;

}

init_vars_expansion ();

/* At this point all variables on the local_decls with TREE_USED

set are not associated with any block scope. Lay them out. */

t = cfun->local_decls;

cfun->local_decls = NULL_TREE;

for (; t; t = next)

{

tree var = TREE_VALUE (t);

bool expand_now = false;

next = TREE_CHAIN (t);

/* We didn't set a block for static or extern because it's hard

to tell the difference between a global variable (re)declared

in a local scope, and one that's really declared there to

begin with. And it doesn't really matter much, since we're

not giving them stack space. Expand them now. */

if (TREE_STATIC (var) || DECL_EXTERNAL (var))

expand_now = true;

/* Any variable that could have been hoisted into an SSA_NAME

will have been propagated anywhere the optimizers chose,

i.e. not confined to their original block. Allocate them

as if they were defined in the outermost scope. */

else if (is_gimple_reg (var))

expand_now = true;

/* If the variable is not associated with any block, then it

was created by the optimizers, and could be live anywhere

in the function. */

else if (TREE_USED (var))

expand_now = true;

/* Finally, mark all variables on the list as used. We'll use

this in a moment when we expand those associated with scopes. */

TREE_USED (var) = 1;

if (expand_now)

{

expand_one_var (var, true, true);

if (DECL_ARTIFICIAL (var) && !DECL_IGNORED_P (var))

{

rtx rtl = DECL_RTL_IF_SET (var);

/* Keep artificial non-ignored vars in cfun->local_decls

chain until instantiate_decls. */

if (rtl && (MEM_P (rtl) || GET_CODE (rtl) == CONCAT))

{

TREE_CHAIN (t) = cfun->local_decls;

cfun->local_decls = t;

continue;

}

}

}

ggc_free (t);

}

/* At this point, all variables within the block tree with TREE_USED

set are actually used by the optimized function. Lay them out. */

expand_used_vars_for_block (outer_block, true);

if (stack_vars_num > 0)

{

/* Due to the way alias sets work, no variables with non-conflicting

alias sets may be assigned the same address. Add conflicts to

reflect this. */

add_alias_set_conflicts ();

/* If stack protection is enabled, we don't share space between

vulnerable data and non-vulnerable data. */

if (flag_stack_protect)

add_stack_protection_conflicts ();

/* Now that we have collected all stack variables, and have computed a

minimal interference graph, attempt to save some stack space. */

partition_stack_vars ();

if (dump_file)

dump_stack_var_partition ();

}

/* There are several conditions under which we should create a

stack guard: protect-all, alloca used, protected decls present. */

if (flag_stack_protect == 2

|| (flag_stack_protect

&& (cfun->calls_alloca || has_protected_decls)))

create_stack_guard ();

/* Assign rtl to each variable based on these partitions. */

if (stack_vars_num > 0)

{

/* Reorder decls to be protected by iterating over the variables

array multiple times, and allocating out of each phase in turn. */

/* ??? We could probably integrate this into the qsort we did

earlier, such that we naturally see these variables first,

and thus naturally allocate things in the right order. */

if (has_protected_decls)

{

/* Phase 1 contains only character arrays. */

expand_stack_vars (stack_protect_decl_phase_1);

/* Phase 2 contains other kinds of arrays. */

if (flag_stack_protect == 2)

expand_stack_vars (stack_protect_decl_phase_2);

}

expand_stack_vars (NULL);

fini_vars_expansion ();

}

/* If the target requires that FRAME_OFFSET be aligned, do it. */

if (STACK_ALIGNMENT_NEEDED)

{

HOST_WIDE_INT align = PREFERRED_STACK_BOUNDARY / BITS_PER_UNIT;

if (!FRAME_GROWS_DOWNWARD)

frame_offset += align - 1;

frame_offset &= -align;

}

}

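It mainly consists of the following steps:

(1) Computing the initial state of the current function's stack frame

(2) Initializing variable expansion

(3) Expanding the variables that can be expanded and generating their RTX

(4) Expanding block-scoped variables whose TREE_USED is 1

(5) Other stack processing (optimized allocation)

Computing the initial state of the current function's stack frame

/* Compute the phase of the stack frame for this function. */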

{

int align = PREFERRED_STACK_BOUNDARY / BITS_PER_UNIT;

int off = STARTING_FRAME_OFFSET % align;

frame_phase = off ? align - off : 0;

}

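STARTING_FRAME_OFFSET gives the offset of the start of the actual local variable storage area relative to FRAME_POINTER, where FRAME_POINTER describes the starting address of the function's local variable storage on the stack.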
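The frame-phase arithmetic is easy to check with sample numbers. The function below repeats the three lines of the snippet with the target macros turned into parameters; `toy_frame_phase` and the sample values are assumptions for illustration, since the real values come from the target description. It assumes a non-negative starting offset.

```c
/* Same arithmetic as the frame_phase computation in expand_used_vars,
   with the target macros passed in as hypothetical sample values.
   Assumes starting_frame_offset >= 0. */
int toy_frame_phase(int preferred_stack_boundary_bits,
                    int bits_per_unit,
                    int starting_frame_offset)
{
    int align = preferred_stack_boundary_bits / bits_per_unit;
    int off = starting_frame_offset % align;
    return off ? align - off : 0;
}
```

With a 128-bit preferred boundary and 8-bit units, `align` is 16 bytes. A starting offset of 0 gives phase 0, while a starting offset of 20 leaves a remainder of 4 and therefore a phase of 12, the distance to the next 16-byte boundary.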

Initializing variable expansion

/* Prepare for expanding variables. */

static void

init_vars_expansion (void)

{

tree t;

/* Set TREE_USED on all variables in the local_decls. */

for (t = cfun->local_decls; t; t = TREE_CHAIN (t))

TREE_USED (TREE_VALUE (t)) = 1;

/* Clear TREE_USED on all variables associated with a block scope. */

clear_tree_used (DECL_INITIAL (current_function_decl));

/* Initialize local stack smashing state. */

has_protected_decls = false;

has_short_buffer = false;

}

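The declaration nodes of a function's local variables are organized into a linked list whose first node is stored in cfun->local_decls. The list can therefore be traversed to set the TREE_USED flag of each of the function's local variables.

A block (BLOCK) describes a lexical scope and appears in the AST as a BIND_EXPR node. Each block can have its own local variable declarations and can contain several sub-blocks (subblocks). Blocks at the same level are linked into a list, and each block can be contained in an enclosing block (supercontext).

The following macros are related to BLOCK operations: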

/* In a BLOCK node. */

#define BLOCK_VARS(NODE) (BLOCK_CHECK (NODE)->block.vars)

#define BLOCK_NONLOCALIZED_VARS(NODE) (BLOCK_CHECK (NODE)->block.nonlocalized_vars)

#define BLOCK_NUM_NONLOCALIZED_VARS(NODE) VEC_length (tree, BLOCK_NONLOCALIZED_VARS (NODE))

#define BLOCK_NONLOCALIZED_VAR(NODE,N) VEC_index (tree, BLOCK_NONLOCALIZED_VARS (NODE), N)

#define BLOCK_SUBBLOCKS(NODE) (BLOCK_CHECK (NODE)->block.subblocks)

#define BLOCK_SUPERCONTEXT(NODE) (BLOCK_CHECK (NODE)->block.supercontext)
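The effect of init_vars_expansion on a block tree can be modeled with a small recursive walk. This is a sketch under assumptions: `toy_var`, `toy_block`, and `toy_clear_used` are hypothetical stand-ins for tree nodes and for the clearing pass, and only the fields named by the macros above are kept.

```c
#include <stddef.h>
#include <stdbool.h>

/* Toy variable node: a name, a "used" flag, and a chain link. */
struct toy_var {
    const char *name;
    bool used;                 /* plays the role of TREE_USED */
    struct toy_var *chain;     /* next variable in the same block */
};

/* Toy block node mirroring vars, subblocks and supercontext. */
struct toy_block {
    struct toy_var *vars;            /* like BLOCK_VARS */
    struct toy_block *subblocks;     /* first nested block */
    struct toy_block *chain;         /* next sibling block */
    struct toy_block *supercontext;  /* enclosing block */
};

/* Clear the used flag on every variable that belongs to this block
   or to any block nested inside it. */
void toy_clear_used(struct toy_block *block)
{
    for (struct toy_var *v = block->vars; v; v = v->chain)
        v->used = false;
    for (struct toy_block *b = block->subblocks; b; b = b->chain)
        toy_clear_used(b);
}
```

If every variable starts with `used` set, then after `toy_clear_used` on the outermost block only variables that hang off no block keep the flag, which is the state the later expansion loop relies on.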

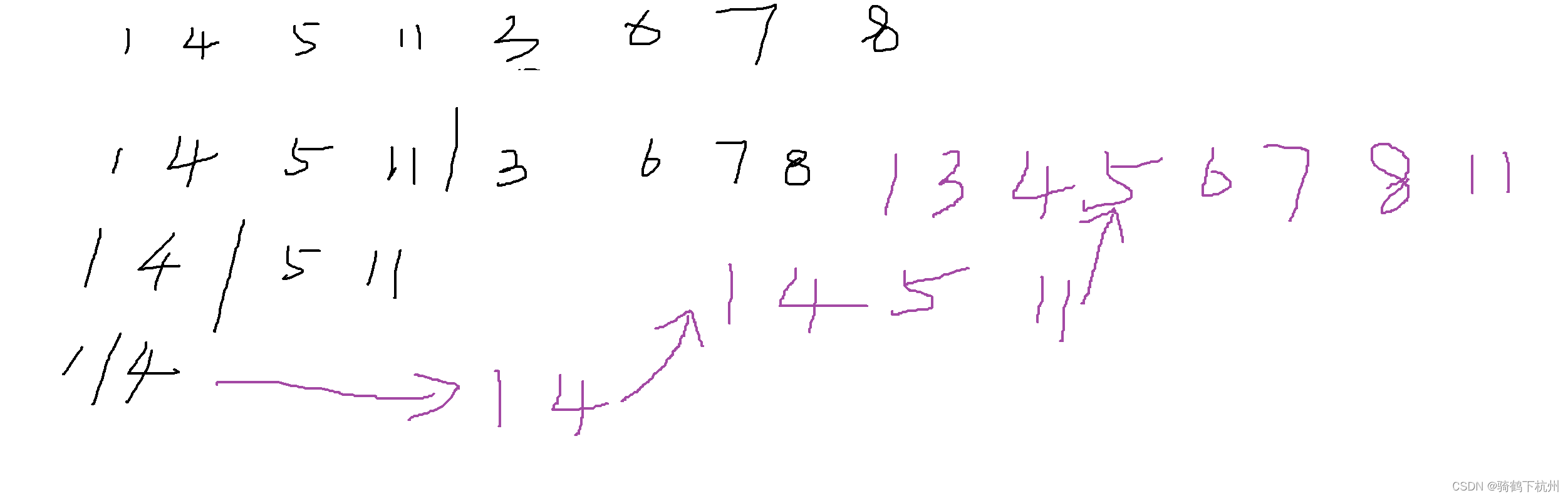

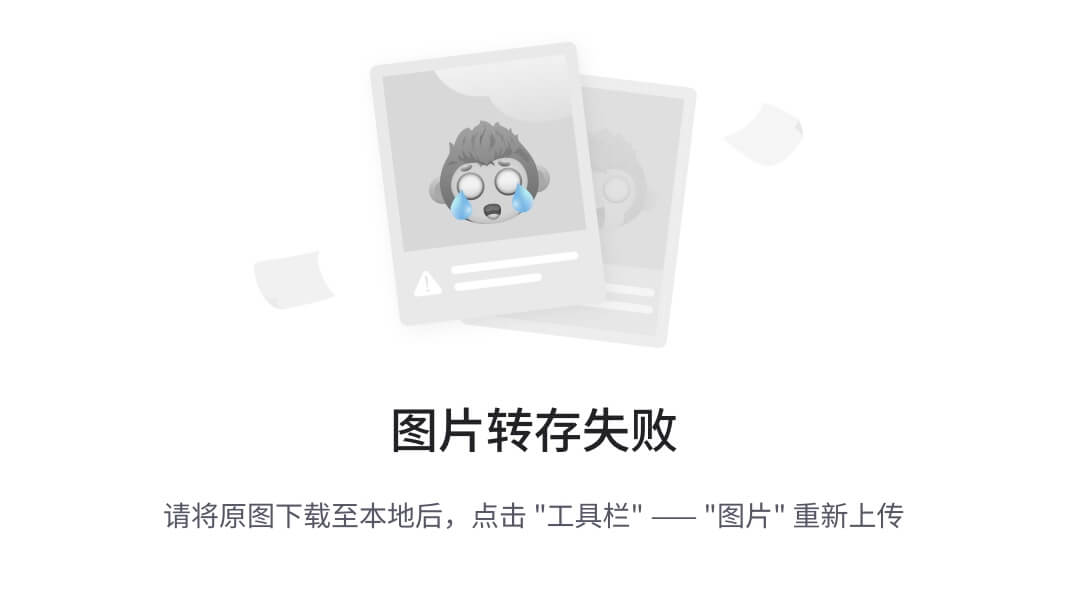

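In the figure above, static_sum and array belong to block <block 0xb7561fc0>, while variable j belongs to another block. When these three variables are initialized, their TREE_USED flag is first set to 1 and then cleared to 0. After initialization, the TREE_USED flags of the variables in the code of Example 10-1 are as shown in the following figure:

Expanding the variables that can be expanded and generating their RTX

Each local variable is checked in turn to decide whether it can be expanded. If it can, its RTX is generated. Every variable that has been checked has its TREE_USED flag set to 1.

A variable var can be expanded if any of the following holds:

(1) var is a static or external variable, which needs no stack space;

(2) is_gimple_reg(var) returns true, meaning the variable can be expanded into a GIMPLE register;

(3) the TREE_USED flag of var is 1, meaning it is a variable that is not associated with any block.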
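The three conditions reduce to a single predicate. The sketch below models a variable as four booleans; `toy_decl` and `toy_expand_now` are hypothetical names, and the flags stand in for the tree predicates used in the loop of expand_used_vars.

```c
#include <stdbool.h>

/* Hypothetical flags standing in for the tree predicates. */
struct toy_decl {
    bool is_static;      /* TREE_STATIC */
    bool is_external;    /* DECL_EXTERNAL */
    bool is_gimple_reg;  /* result of is_gimple_reg */
    bool used;           /* TREE_USED */
};

/* Decide whether the first pass expands the variable now.
   As in the loop, the used flag is set afterwards either way. */
bool toy_expand_now(struct toy_decl *var)
{
    bool now = var->is_static || var->is_external
               || var->is_gimple_reg || var->used;
    var->used = true;
    return now;
}
```

A block-scoped array whose flag was cleared during initialization returns false here and is left for the block-based pass, but leaves the call with its flag set so that pass will pick it up.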

The implementation of is_gimple_reg is as follows:

/* Return true if T is a non-aggregate register variable. */

bool

is_gimple_reg (tree t)

{

if (TREE_CODE (t) == SSA_NAME)

t = SSA_NAME_VAR (t);

if (MTAG_P (t))

return false;

if (!is_gimple_variable (t))

return false;

if (!is_gimple_reg_type (TREE_TYPE (t)))

return false;

/* A volatile decl is not acceptable because we can't reuse it as

needed. We need to copy it into a temp first. */

if (TREE_THIS_VOLATILE (t))

return false;

/* We define "registers" as things that can be renamed as needed,

which with our infrastructure does not apply to memory. */

if (needs_to_live_in_memory (t))

return false;

/* Hard register variables are an interesting case. For those that

are call-clobbered, we don't know where all the calls are, since

we don't (want to) take into account which operations will turn

into libcalls at the rtl level. For those that are call-saved,

we don't currently model the fact that calls may in fact change

global hard registers, nor do we examine ASM_CLOBBERS at the tree

level, and so miss variable changes that might imply. All around,

it seems safest to not do too much optimization with these at the

tree level at all. We'll have to rely on the rtl optimizers to

clean this up, as there we've got all the appropriate bits exposed. */

if (TREE_CODE (t) == VAR_DECL && DECL_HARD_REGISTER (t))

return false;

/* Complex and vector values must have been put into SSA-like form.

That is, no assignments to the individual components. */

if (TREE_CODE (TREE_TYPE (t)) == COMPLEX_TYPE

|| TREE_CODE (TREE_TYPE (t)) == VECTOR_TYPE)

return DECL_GIMPLE_REG_P (t);

return true;

}

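When a variable can be expanded, that is, when expand_now is true, expand_one_var(var, true, true) is called to expand it. That function takes the following actions depending on the kind of variable: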

if (expand_now)

{

expand_one_var (var, true, true);

if (DECL_ARTIFICIAL (var) && !DECL_IGNORED_P (var))

{

rtx rtl = DECL_RTL_IF_SET (var);

/* Keep artificial non-ignored vars in cfun->local_decls

chain until instantiate_decls. */

if (rtl && (MEM_P (rtl) || GET_CODE (rtl) == CONCAT))

{

TREE_CHAIN (t) = cfun->local_decls;

cfun->local_decls = t;

continue;

}

}

}

/* A subroutine of expand_used_vars. Expand one variable according to

its flavor. Variables to be placed on the stack are not actually

expanded yet, merely recorded.

When REALLY_EXPAND is false, only add stack values to be allocated.

Return stack usage this variable is supposed to take.

*/

static HOST_WIDE_INT

expand_one_var (tree var, bool toplevel, bool really_expand)

{

if (SUPPORTS_STACK_ALIGNMENT

&& TREE_TYPE (var) != error_mark_node

&& TREE_CODE (var) == VAR_DECL)

{

unsigned int align;

/* Because we don't know if VAR will be in register or on stack,

we conservatively assume it will be on stack even if VAR is

eventually put into register after RA pass. For non-automatic

variables, which won't be on stack, we collect alignment of

type and ignore user specified alignment. */

if (TREE_STATIC (var) || DECL_EXTERNAL (var))

align = TYPE_ALIGN (TREE_TYPE (var));

else

align = DECL_ALIGN (var);

if (crtl->stack_alignment_estimated < align)

{

/* stack_alignment_estimated shouldn't change after stack

realign decision made */

gcc_assert(!crtl->stack_realign_processed);

crtl->stack_alignment_estimated = align;

}

}

if (TREE_CODE (var) != VAR_DECL)

;

else if (DECL_EXTERNAL (var))

;

else if (DECL_HAS_VALUE_EXPR_P (var))

;

else if (TREE_STATIC (var))

;

else if (DECL_RTL_SET_P (var))

;

else if (TREE_TYPE (var) == error_mark_node)

{

if (really_expand)

expand_one_error_var (var);

}

else if (DECL_HARD_REGISTER (var))

{

if (really_expand)

expand_one_hard_reg_var (var);

}

else if (use_register_for_decl (var))

{

if (really_expand)

expand_one_register_var (var);

}

else if (defer_stack_allocation (var, toplevel))

add_stack_var (var);

else

{

if (really_expand)

expand_one_stack_var (var);

return tree_low_cst (DECL_SIZE_UNIT (var), 1);

}

return 0;

}
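The if/else chain in expand_one_var is effectively a classifier. The sketch below restates it as a function that returns which branch would fire. The `toy_` names are invented, and each boolean is an assumption standing in for the predicate named in its comment.

```c
#include <stdbool.h>

/* Hypothetical flags standing in for the predicates tested by
   expand_one_var; none of these names exist in GCC. */
struct toy_var_info {
    bool is_var_decl;       /* TREE_CODE (var) == VAR_DECL */
    bool is_external;       /* DECL_EXTERNAL */
    bool has_value_expr;    /* DECL_HAS_VALUE_EXPR_P */
    bool is_static;         /* TREE_STATIC */
    bool rtl_already_set;   /* DECL_RTL_SET_P */
    bool is_error;          /* TREE_TYPE (var) == error_mark_node */
    bool is_hard_register;  /* DECL_HARD_REGISTER */
    bool fits_register;     /* use_register_for_decl */
    bool defer_stack;       /* defer_stack_allocation */
};

enum toy_action {
    TOY_SKIP,         /* one of the empty branches */
    TOY_ERROR_VAR,    /* expand_one_error_var */
    TOY_HARD_REG,     /* expand_one_hard_reg_var */
    TOY_PSEUDO_REG,   /* expand_one_register_var */
    TOY_DEFER_STACK,  /* add_stack_var */
    TOY_STACK_NOW     /* expand_one_stack_var */
};

/* Return the branch taken, testing in the same order as the source. */
enum toy_action toy_classify(const struct toy_var_info *v)
{
    if (!v->is_var_decl || v->is_external || v->has_value_expr
        || v->is_static || v->rtl_already_set)
        return TOY_SKIP;
    if (v->is_error)
        return TOY_ERROR_VAR;
    if (v->is_hard_register)
        return TOY_HARD_REG;
    if (v->fits_register)
        return TOY_PSEUDO_REG;
    if (v->defer_stack)
        return TOY_DEFER_STACK;
    return TOY_STACK_NOW;
}
```

The order matters: a static variable is skipped even if it would otherwise fit a register, because the static test comes first.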

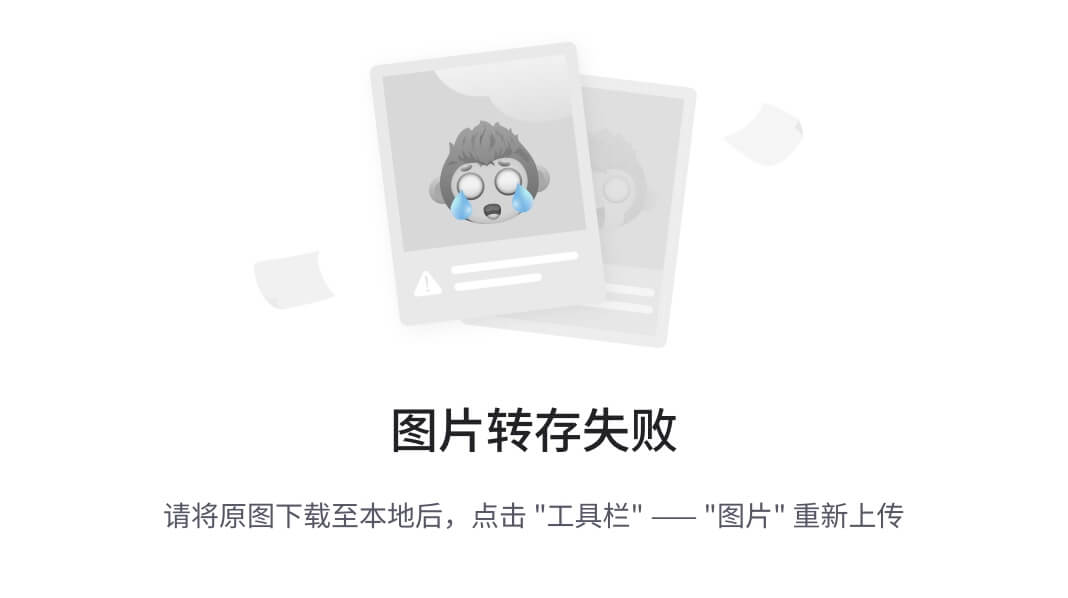

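After the variables in the example above are expanded, the result is as shown in the following figure:

Expanding block-scoped variables whose TREE_USED is 1

expand_used_vars_for_block (outer_block, true) expands the variables that are associated with a block and whose TREE_USED flag is 1.

In the test source, variables j and i have already been processed (their DECL_RTL_SET_P is already true), and static_sum is a static variable and is not processed for now. Therefore only the variable array in the block is expanded and allocated space on the stack.

The memory layout on the stack is as follows:

Note: at this point every variable in the function that can be expanded has been allocated storage on the stack or in a register. Static variables, external variables, and the like are not expanded for now. As a general rule, all GIMPLE temporaries in the GIMPLE sequence are assigned virtual registers (of which there is an unlimited supply), while the other user-defined automatic variables in the function are usually allocated stack space.

Other stack processing (optimized allocation)

To optimize the allocation of stack space, the expansion of some variables onto the stack can be deferred. The benefit is that some variables can then be merged, so stack space is used more efficiently.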
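Why deferring helps can be shown with a toy slot-sharing routine. The sketch below is not GCC's partition_stack_vars: it is a simple greedy grouping, written for illustration, in which variables that never conflict may share one slot sized for the largest member. The name `toy_partition_size`, the conflict matrix, and the sizes are all assumptions.

```c
#include <stdbool.h>

#define TOY_MAX_VARS 8

/* sizes[i] is the byte size of variable i. conflict[i][j] is true when
   i and j may be live at the same time and so must not share a slot.
   Returns the total bytes needed after greedy sharing.
   n must not exceed TOY_MAX_VARS. */
int toy_partition_size(int n, const int sizes[],
                       bool conflict[][TOY_MAX_VARS])
{
    int part_of[TOY_MAX_VARS];    /* partition index of each variable */
    int part_size[TOY_MAX_VARS];  /* largest member of each partition */
    int n_parts = 0, total = 0;

    for (int i = 0; i < n; i++) {
        int chosen = -1;
        /* Take the first partition with no conflicting member. */
        for (int p = 0; p < n_parts && chosen < 0; p++) {
            bool ok = true;
            for (int j = 0; j < i; j++)
                if (part_of[j] == p && conflict[i][j])
                    ok = false;
            if (ok)
                chosen = p;
        }
        if (chosen < 0) {         /* nothing fits: open a new partition */
            chosen = n_parts++;
            part_size[chosen] = 0;
        }
        part_of[i] = chosen;
        if (sizes[i] > part_size[chosen])
            part_size[chosen] = sizes[i];
    }
    for (int p = 0; p < n_parts; p++)
        total += part_size[p];
    return total;
}
```

Take a 40-byte array in an outer scope and two 16-byte buffers in sibling inner scopes. The array conflicts with both buffers, but the buffers do not conflict with each other, so they share one slot and the frame needs 56 bytes instead of 72.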

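Summary

The core job of variable expansion is to allocate space for variables of all kinds and generate the corresponding RTX. There are two typical cases:

(1) For a GIMPLE temporary in a GIMPLE statement, a virtual register is generally allocated and an RTX of type REG is created; the variable is accessed through that register RTX.

(2) For an automatic variable in the function, space is allocated on the stack, so an RTX of memory type must be created (its RTX_CODE is MEM). The memory address of the variable is generally given as a base register (virtual_stack_vars_rtx) plus an offset.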
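The two cases can be pictured as two shapes of expression. The sketch below prints a simplified, made-up textual form for each; it is not the format of GCC's RTL dumps, which carry machine modes and flags that are omitted here. `toy_rtx` and `toy_print_rtx` are hypothetical names.

```c
#include <stdio.h>

enum toy_rtx_code { TOY_REG, TOY_MEM };

/* Toy operand: either a pseudo register or a stack slot. */
struct toy_rtx {
    enum toy_rtx_code code;
    int regno;     /* TOY_REG: pseudo register number */
    long offset;   /* TOY_MEM: byte offset from the frame base */
};

/* Write a simplified textual form into buf.
   Returns what snprintf returns. */
int toy_print_rtx(char *buf, size_t len, const struct toy_rtx *x)
{
    if (x->code == TOY_REG)
        return snprintf(buf, len, "(reg %d)", x->regno);
    return snprintf(buf, len,
                    "(mem (plus (reg virtual-stack-vars) (const_int %ld)))",
                    x->offset);
}
```

A temporary held in pseudo register 58 prints as `(reg 58)`, while an automatic variable 4 bytes below the frame base prints as a `mem` wrapping a base-plus-offset address.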

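Parameter and return value handling

RTL generation for function parameters and the return value is mainly done by expand_function_start. Its main tasks are:

(1) Generating return_label, the label RTX for the function's return statements.

(2) Initializing the RTX that holds the function's return value. Depending on its type, the return value can be kept in a register or in memory. In general, a return value of aggregate type is kept in memory, while one of a simple data type is usually kept in a register.

(3) Analyzing how the parameters are passed in and creating the RTX for the parameters.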
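The placement decision in item (2) follows a three-way branch. The sketch below reduces it to two booleans; `toy_return_place` is a hypothetical name, and the parameters stand in for the aggregate_value_p test and the VOIDmode test respectively.

```c
#include <stdbool.h>

enum toy_ret_place {
    TOY_RET_UNUSED,      /* void function: no return RTX is used */
    TOY_RET_MEMORY,      /* value returned through memory */
    TOY_RET_PSEUDO_REG   /* value computed into a pseudo register */
};

/* returned_in_memory stands in for aggregate_value_p;
   is_void stands in for the VOIDmode check. */
enum toy_ret_place toy_return_place(bool returned_in_memory, bool is_void)
{
    if (returned_in_memory)
        return TOY_RET_MEMORY;
    if (is_void)
        return TOY_RET_UNUSED;
    return TOY_RET_PSEUDO_REG;
}
```

For the test function, which returns int, neither flag is set, so the value is first computed into a pseudo register.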

The expand_function_start function is as follows:

/* Start the RTL for a new function, and set variables used for

emitting RTL.

SUBR is the FUNCTION_DECL node.

PARMS_HAVE_CLEANUPS is nonzero if there are cleanups associated with

the function's parameters, which must be run at any return statement. */

void

expand_function_start (tree subr)

{

/* Make sure volatile mem refs aren't considered

valid operands of arithmetic insns. */

init_recog_no_volatile ();

crtl->profile

= (profile_flag

&& ! DECL_NO_INSTRUMENT_FUNCTION_ENTRY_EXIT (subr));

crtl->limit_stack

= (stack_limit_rtx != NULL_RTX && ! DECL_NO_LIMIT_STACK (subr));

/* Make the label for return statements to jump to. Do not special

case machines with special return instructions -- they will be

handled later during jump, ifcvt, or epilogue creation. */

return_label = gen_label_rtx ();

/* Initialize rtx used to return the value. */

/* Do this before assign_parms so that we copy the struct value address

before any library calls that assign parms might generate. */

/* Decide whether to return the value in memory or in a register. */

if (aggregate_value_p (DECL_RESULT (subr), subr))

{

/* Returning something that won't go in a register. */

rtx value_address = 0;

#ifdef PCC_STATIC_STRUCT_RETURN

if (cfun->returns_pcc_struct)

{

int size = int_size_in_bytes (TREE_TYPE (DECL_RESULT (subr)));

value_address = assemble_static_space (size);

}

else

#endif

{

rtx sv = targetm.calls.struct_value_rtx (TREE_TYPE (subr), 2);

/* Expect to be passed the address of a place to store the value.

If it is passed as an argument, assign_parms will take care of

it. */

if (sv)

{

value_address = gen_reg_rtx (Pmode);

emit_move_insn (value_address, sv);

}

}

if (value_address)

{

rtx x = value_address;

if (!DECL_BY_REFERENCE (DECL_RESULT (subr)))

{

x = gen_rtx_MEM (DECL_MODE (DECL_RESULT (subr)), x);

set_mem_attributes (x, DECL_RESULT (subr), 1);

}

SET_DECL_RTL (DECL_RESULT (subr), x);

}

}

else if (DECL_MODE (DECL_RESULT (subr)) == VOIDmode)

/* If return mode is void, this decl rtl should not be used. */

SET_DECL_RTL (DECL_RESULT (subr), NULL_RTX);

else

{

/* Compute the return values into a pseudo reg, which we will copy

into the true return register after the cleanups are done. */

tree return_type = TREE_TYPE (DECL_RESULT (subr));

if (TYPE_MODE (return_type) != BLKmode

&& targetm.calls.return_in_msb (return_type))

/* expand_function_end will insert the appropriate padding in

this case. Use the return value's natural (unpadded) mode

within the function proper. */

SET_DECL_RTL (DECL_RESULT (subr),

gen_reg_rtx (TYPE_MODE (return_type)));

else

{

/* In order to figure out what mode to use for the pseudo, we

figure out what the mode of the eventual return register will

actually be, and use that. */

rtx hard_reg = hard_function_value (return_type, subr, 0, 1);

/* Structures that are returned in registers are not

aggregate_value_p, so we may see a PARALLEL or a REG. */

if (REG_P (hard_reg))

SET_DECL_RTL (DECL_RESULT (subr),

gen_reg_rtx (GET_MODE (hard_reg)));

else

{

gcc_assert (GET_CODE (hard_reg) == PARALLEL);

SET_DECL_RTL (DECL_RESULT (subr), gen_group_rtx (hard_reg));

}

}

/* Set DECL_REGISTER flag so that expand_function_end will copy the

result to the real return register(s). */

DECL_REGISTER (DECL_RESULT (subr)) = 1;

}

/* Initialize rtx for parameters and local variables.

In some cases this requires emitting insns. */

assign_parms (subr);

/* If function gets a static chain arg, store it. */

if (cfun->static_chain_decl)

{

tree parm = cfun->static_chain_decl;

rtx local = gen_reg_rtx (Pmode);

set_decl_incoming_rtl (parm, static_chain_incoming_rtx, false);

SET_DECL_RTL (parm, local);

mark_reg_pointer (local, TYPE_ALIGN (TREE_TYPE (TREE_TYPE (parm))));

emit_move_insn (local, static_chain_incoming_rtx);

}

/* If the function receives a non-local goto, then store the

bits we need to restore the frame pointer. */

if (cfun->nonlocal_goto_save_area)

{

tree t_save;

rtx r_save;

/* ??? We need to do this save early. Unfortunately here is

before the frame variable gets declared. Help out... */

tree var = TREE_OPERAND (cfun->nonlocal_goto_save_area, 0);

if (!DECL_RTL_SET_P (var))

expand_decl (var);

t_save = build4 (ARRAY_REF, ptr_type_node,

cfun->nonlocal_goto_save_area,

integer_zero_node, NULL_TREE, NULL_TREE);

r_save = expand_expr (t_save, NULL_RTX, VOIDmode, EXPAND_WRITE);

r_save = convert_memory_address (Pmode, r_save);

emit_move_insn (r_save, targetm.builtin_setjmp_frame_value ());

update_nonlocal_goto_save_area ();

}

/* The following was moved from init_function_start.

The move is supposed to make sdb output more accurate. */

/* Indicate the beginning of the function body,

as opposed to parm setup. */

emit_note (NOTE_INSN_FUNCTION_BEG);

gcc_assert (NOTE_P (get_last_insn ()));

parm_birth_insn = get_last_insn ();

if (crtl->profile)

{

#ifdef PROFILE_HOOK

PROFILE_HOOK (current_function_funcdef_no);

#endif

}

/* After the display initializations is where the stack checking

probe should go. */

if(flag_stack_check)

stack_check_probe_note = emit_note (NOTE_INSN_DELETED);

/* Make sure there is a line number after the function entry setup code. */

force_next_line_note ();

}

初始块的处理(TODO)

基本块的RTL的RTL生成(TODO)

退出块的处理

每个函数的CFGG都包含了两个特殊的基本块,即ENTRY_BLOCK(入口块)与EXIT_BLOCK(出口块),分别使用ENTRY_BLOCK_PTR和EXIT_BLOCK_PTR对其进行访问。退出块,其名称也为exit_block,但含义有所不同,为了区分,称ENTRY_BLOCK及EXIT_BLOCK分别为“入口块”和“出口块”,而称exit_block为“退出块。

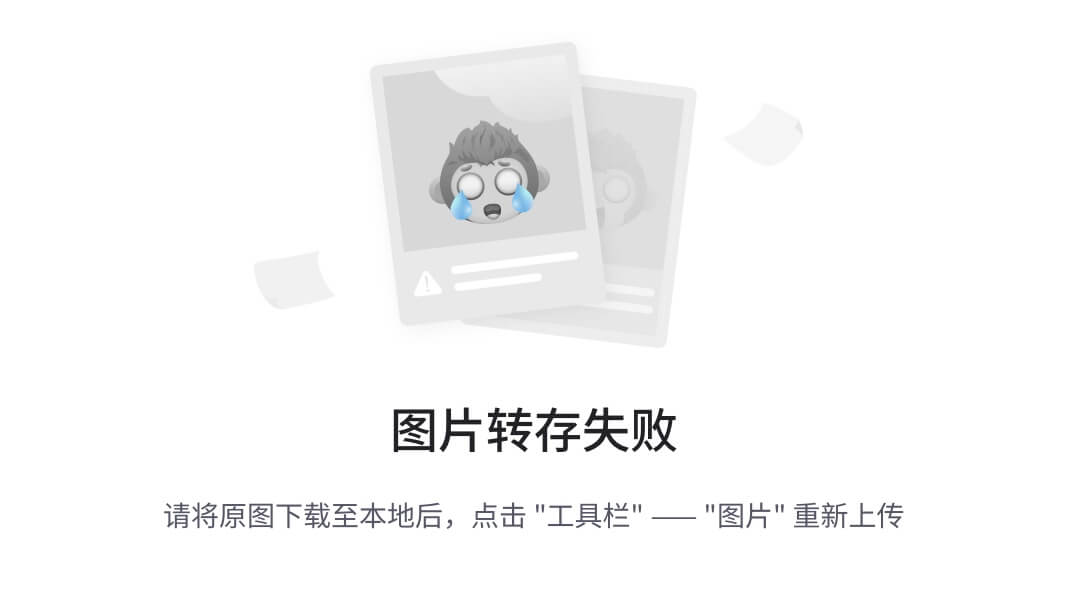

退出块exit_block的创建是通过调用函数construct_exit_block实现的,如下图所示BB-9就是退出块:

construct_exit_block函数代码如下:

/* Create a block containing landing pads and similar stuff. */

static void

construct_exit_block (void)

{

rtx head = get_last_insn ();

rtx end;

basic_block exit_block;

edge e, e2;

unsigned ix;

edge_iterator ei;

rtx orig_end = BB_END (EXIT_BLOCK_PTR->prev_bb);

rtl_profile_for_bb (EXIT_BLOCK_PTR);

/* Make sure the locus is set to the end of the function, so that

epilogue line numbers and warnings are set properly. */

if (cfun->function_end_locus != UNKNOWN_LOCATION)

input_location = cfun->function_end_locus;

/* The following insns belong to the top scope. */

set_curr_insn_block (DECL_INITIAL (current_function_decl));

/* Generate rtl for function exit. */

expand_function_end ();

end = get_last_insn ();

if (head == end)

return;

/* While emitting the function end we could move end of the last basic block.

*/

BB_END (EXIT_BLOCK_PTR->prev_bb) = orig_end;

while (NEXT_INSN (head) && NOTE_P (NEXT_INSN (head)))

head = NEXT_INSN (head);

exit_block = create_basic_block (NEXT_INSN (head), end,

EXIT_BLOCK_PTR->prev_bb);

exit_block->frequency = EXIT_BLOCK_PTR->frequency;

exit_block->count = EXIT_BLOCK_PTR->count;

ix = 0;

while (ix < EDGE_COUNT (EXIT_BLOCK_PTR->preds))

{

e = EDGE_PRED (EXIT_BLOCK_PTR, ix);

if (!(e->flags & EDGE_ABNORMAL))

redirect_edge_succ (e, exit_block);

else

ix++;

}

e = make_edge (exit_block, EXIT_BLOCK_PTR, EDGE_FALLTHRU);

e->probability = REG_BR_PROB_BASE;

e->count = EXIT_BLOCK_PTR->count;

FOR_EACH_EDGE (e2, ei, EXIT_BLOCK_PTR->preds)

if (e2 != e)

{

e->count -= e2->count;

exit_block->count -= e2->count;

exit_block->frequency -= EDGE_FREQUENCY (e2);

}

if (e->count < 0)

e->count = 0;

if (exit_block->count < 0)

exit_block->count = 0;

if (exit_block->frequency < 0)

exit_block->frequency = 0;

update_bb_for_insn (exit_block);

}

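expand_function_end generally emits insns for the following cases:

- a CODE_LABEL insn for the return label return_label (that is, crtl->x_return_label);

- if the function's return value is held in a virtual register or in a stack slot, insns that copy it into the hard return register;

- other cases.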

/* Generate RTL for the end of the current function. */

void

expand_function_end (void)

{

rtx clobber_after;

/* If arg_pointer_save_area was referenced only from a nested

function, we will not have initialized it yet. Do that now. */

if (arg_pointer_save_area && ! crtl->arg_pointer_save_area_init)

get_arg_pointer_save_area ();

/* If we are doing generic stack checking and this function makes calls,

do a stack probe at the start of the function to ensure we have enough

space for another stack frame. */

if (flag_stack_check == GENERIC_STACK_CHECK)

{

rtx insn, seq;

for (insn = get_insns (); insn; insn = NEXT_INSN (insn))

if (CALL_P (insn))

{

start_sequence ();

probe_stack_range (STACK_OLD_CHECK_PROTECT,

GEN_INT (STACK_CHECK_MAX_FRAME_SIZE));

seq = get_insns ();

end_sequence ();

emit_insn_before (seq, stack_check_probe_note);

break;

}

}

/* End any sequences that failed to be closed due to syntax errors. */

while (in_sequence_p ())

end_sequence ();

clear_pending_stack_adjust ();

do_pending_stack_adjust ();

/* Output a linenumber for the end of the function.

SDB depends on this. */

force_next_line_note ();

set_curr_insn_source_location (input_location);

/* Before the return label (if any), clobber the return

registers so that they are not propagated live to the rest of

the function. This can only happen with functions that drop

through; if there had been a return statement, there would

have either been a return rtx, or a jump to the return label.

We delay actual code generation after the current_function_value_rtx

is computed. */

clobber_after = get_last_insn ();

/* Output the label for the actual return from the function. */

emit_label (return_label);

if (USING_SJLJ_EXCEPTIONS)

{

/* Let except.c know where it should emit the call to unregister

the function context for sjlj exceptions. */

if (flag_exceptions)

sjlj_emit_function_exit_after (get_last_insn ());

}

else

{

/* We want to ensure that instructions that may trap are not

moved into the epilogue by scheduling, because we don't

always emit unwind information for the epilogue. */

if (flag_non_call_exceptions)

emit_insn (gen_blockage ());

}

/* If this is an implementation of throw, do what's necessary to

communicate between __builtin_eh_return and the epilogue. */

expand_eh_return ();

/* If scalar return value was computed in a pseudo-reg, or was a named

return value that got dumped to the stack, copy that to the hard

return register. */

if (DECL_RTL_SET_P (DECL_RESULT (current_function_decl)))

{

tree decl_result = DECL_RESULT (current_function_decl);

rtx decl_rtl = DECL_RTL (decl_result);

if (REG_P (decl_rtl)

? REGNO (decl_rtl) >= FIRST_PSEUDO_REGISTER

: DECL_REGISTER (decl_result))

{

rtx real_decl_rtl = crtl->return_rtx;

/* This should be set in assign_parms. */

gcc_assert (REG_FUNCTION_VALUE_P (real_decl_rtl));

/* If this is a BLKmode structure being returned in registers,

then use the mode computed in expand_return. Note that if

decl_rtl is memory, then its mode may have been changed,

but that crtl->return_rtx has not. */

if (GET_MODE (real_decl_rtl) == BLKmode)

PUT_MODE (real_decl_rtl, GET_MODE (decl_rtl));

/* If a non-BLKmode return value should be padded at the least

significant end of the register, shift it left by the appropriate

amount. BLKmode results are handled using the group load/store

machinery. */

if (TYPE_MODE (TREE_TYPE (decl_result)) != BLKmode

&& targetm.calls.return_in_msb (TREE_TYPE (decl_result)))

{

emit_move_insn (gen_rtx_REG (GET_MODE (decl_rtl),

REGNO (real_decl_rtl)),

decl_rtl);

shift_return_value (GET_MODE (decl_rtl), true, real_decl_rtl);

}

/* If a named return value dumped decl_return to memory, then

we may need to re-do the PROMOTE_MODE signed/unsigned

extension. */

else if (GET_MODE (real_decl_rtl) != GET_MODE (decl_rtl))

{

int unsignedp = TYPE_UNSIGNED (TREE_TYPE (decl_result));

if (targetm.calls.promote_function_return (TREE_TYPE (current_function_decl)))

promote_mode (TREE_TYPE (decl_result), GET_MODE (decl_rtl),

&unsignedp, 1);

convert_move (real_decl_rtl, decl_rtl, unsignedp);

}

else if (GET_CODE (real_decl_rtl) == PARALLEL)

{

/* If expand_function_start has created a PARALLEL for decl_rtl,

move the result to the real return registers. Otherwise, do

a group load from decl_rtl for a named return. */

if (GET_CODE (decl_rtl) == PARALLEL)

emit_group_move (real_decl_rtl, decl_rtl);

else

emit_group_load (real_decl_rtl, decl_rtl,

TREE_TYPE (decl_result),

int_size_in_bytes (TREE_TYPE (decl_result)));

}

/* In the case of complex integer modes smaller than a word, we'll

need to generate some non-trivial bitfield insertions. Do that

on a pseudo and not the hard register. */

else if (GET_CODE (decl_rtl) == CONCAT

&& GET_MODE_CLASS (GET_MODE (decl_rtl)) == MODE_COMPLEX_INT

&& GET_MODE_BITSIZE (GET_MODE (decl_rtl)) <= BITS_PER_WORD)

{

int old_generating_concat_p;

rtx tmp;

old_generating_concat_p = generating_concat_p;

generating_concat_p = 0;

tmp = gen_reg_rtx (GET_MODE (decl_rtl));

generating_concat_p = old_generating_concat_p;

emit_move_insn (tmp, decl_rtl);

emit_move_insn (real_decl_rtl, tmp);

}

else

emit_move_insn (real_decl_rtl, decl_rtl);

}

}

/* If returning a structure, arrange to return the address of the value

in a place where debuggers expect to find it.

If returning a structure PCC style,

the caller also depends on this value.

And cfun->returns_pcc_struct is not necessarily set. */

if (cfun->returns_struct

|| cfun->returns_pcc_struct)

{

rtx value_address = DECL_RTL (DECL_RESULT (current_function_decl));

tree type = TREE_TYPE (DECL_RESULT (current_function_decl));

rtx outgoing;

if (DECL_BY_REFERENCE (DECL_RESULT (current_function_decl)))

type = TREE_TYPE (type);

else

value_address = XEXP (value_address, 0);

outgoing = targetm.calls.function_value (build_pointer_type (type),

current_function_decl, true);

/* Mark this as a function return value so integrate will delete the

assignment and USE below when inlining this function. */

REG_FUNCTION_VALUE_P (outgoing) = 1;

/* The address may be ptr_mode and OUTGOING may be Pmode. */

value_address = convert_memory_address (GET_MODE (outgoing),

value_address);

emit_move_insn (outgoing, value_address);

/* Show return register used to hold result (in this case the address

of the result. */

crtl->return_rtx = outgoing;

}

/* Emit the actual code to clobber return register. */

{

rtx seq;

start_sequence ();

clobber_return_register ();

expand_naked_return ();

seq = get_insns ();

end_sequence ();

emit_insn_after (seq, clobber_after);

}

/* Output the label for the naked return from the function. */

emit_label (naked_return_label);

/* @@@ This is a kludge. We want to ensure that instructions that

may trap are not moved into the epilogue by scheduling, because

we don't always emit unwind information for the epilogue. */

if (! USING_SJLJ_EXCEPTIONS && flag_non_call_exceptions)

emit_insn (gen_blockage ());

/* If stack protection is enabled for this function, check the guard. */

if (crtl->stack_protect_guard)

stack_protect_epilogue ();

/* If we had calls to alloca, and this machine needs

an accurate stack pointer to exit the function,

insert some code to save and restore the stack pointer. */

if (! EXIT_IGNORE_STACK

&& cfun->calls_alloca)

{

rtx tem = 0;

emit_stack_save (SAVE_FUNCTION, &tem, parm_birth_insn);

emit_stack_restore (SAVE_FUNCTION, tem, NULL_RTX);

}

/* ??? This should no longer be necessary since stupid is no longer with

us, but there are some parts of the compiler (eg reload_combine, and

sh mach_dep_reorg) that still try and compute their own lifetime info

instead of using the general framework. */

use_return_register ();

}

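Other processing

After expand_gimple_cfg has generated RTL for the variables, parameters, return value and basic blocks, some further processing is still needed to tidy up the generated RTL, including rebuilding the basic blocks and handling exceptions.

Converting GIMPLE statements to RTL

When RTL is generated for a GIMPLE statement, the statement is usually first converted to tree form. Then, based on the TREE_CODE of the expression node in the tree, the matching function is called to produce the corresponding insns.

The general conversion process for a GIMPLE statement

Converting a GIMPLE statement to RTL generally goes through two stages:

Converting the GIMPLE statement to a tree

A GIMPLE statement originates from a subtree of the AST (abstract syntax tree). When it has to be converted to RTL, it is in most cases first turned back into a tree by gimple_to_tree (gimple stmt) and only then converted to RTL.

The reason for this detour is that expanding RTL directly from the linear GIMPLE tuples is very cumbersome.

Generating RTL from the tree

Generating insns from the tree has to solve two key problems: first, selecting the instruction template used to construct the insn; second, extracting the operands from the tree according to the operand information in the template, and using the constructor function supplied by the template to build the body of the insn.

The standard pattern name (SPN) is introduced here as the intermediary that maps a TREE_CODE to the corresponding instruction template. The mapping is established mainly when the machine description is processed, by constructing insn_code and optab_table[].

The process in detail:

(1) Determine the index of the instruction template, insn_code

For some TREE_CODEs, the optab entry for the operation can also be obtained with optab_for_tree_code.

Because the initial contents of optab_table are extracted from the machine description file, different target machines initialize optab_table differently. The mapping from an operation to the index of an instruction template is therefore machine dependent, and the template index stored in the same optab_table entry may differ from one target to another.

(2) Construct the insn from the RTX template of the instruction template

Once the template index icode is known, the RTX constructor function of that template is fetched, the operands required by the RTX template are extracted from the tree and validated, and finally the constructor is called with those operands to build the body of the insn.

This is done through the GEN_FCN macro: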

    /* Given an enum insn_code, access the function to construct
       the body of that kind of insn. */
    #define GEN_FCN(CODE) (insn_data[CODE].genfun)

    struct insn_data
    {
      const char *const name;
    #if HAVE_DESIGNATED_INITIALIZERS
      union {
        const char *single;
        const char *const *multi;
        insn_output_fn function;
      } output;
    #else
      struct {
        const char *single;
        const char *const *multi;
        insn_output_fn function;
      } output;
    #endif
      const insn_gen_fn genfun;
      const struct insn_operand_data *const operand;
      const char n_operands;
      const char n_dups;
      const char n_alternatives;
      const char output_format;
    };

    typedef rtx (*insn_gen_fn) (rtx, ...);

Take the statement gimple_assign <plus_expr, D.1250, static_sum.0D.1249, jD.1243> as an example:

RTL generation for the GIMPLE_GOTO statement

GIMPLE_GOTO has the following form:

GIMPLE_GOTO <TARGET>

The conversion to RTL proceeds as follows:

(1) GIMPLE->TREE:

gimple_to_tree()

    case GIMPLE_GOTO:
      t = build1 (GOTO_EXPR, void_type_node, gimple_goto_dest (stmt));
      break;

(2) TREE->RTL:

static rtx

expand_expr_real_1 (tree exp, rtx target, enum machine_mode tmode,

enum expand_modifier modifier, rtx *alt_rtl)

{

rtx op0, op1, op2, temp, decl_rtl;

tree type;

int unsignedp;

enum machine_mode mode;

enum tree_code code = TREE_CODE (exp);

optab this_optab;

rtx subtarget, original_target;

int ignore;

tree context, subexp0, subexp1;

bool reduce_bit_field;

#define REDUCE_BIT_FIELD(expr) (reduce_bit_field \

? reduce_to_bit_field_precision ((expr), \

target, \

type) \

: (expr))

type = TREE_TYPE (exp);

mode = TYPE_MODE (type);

unsignedp = TYPE_UNSIGNED (type);

ignore = (target == const0_rtx

|| ((CONVERT_EXPR_CODE_P (code)

|| code == COND_EXPR || code == VIEW_CONVERT_EXPR)

&& TREE_CODE (type) == VOID_TYPE));

/* An operation in what may be a bit-field type needs the

result to be reduced to the precision of the bit-field type,

which is narrower than that of the type's mode. */

reduce_bit_field = (!ignore

&& TREE_CODE (type) == INTEGER_TYPE

&& GET_MODE_PRECISION (mode) > TYPE_PRECISION (type));

/* If we are going to ignore this result, we need only do something

if there is a side-effect somewhere in the expression. If there

is, short-circuit the most common cases here. Note that we must

not call expand_expr with anything but const0_rtx in case this

is an initial expansion of a size that contains a PLACEHOLDER_EXPR. */

if (ignore)

{

if (! TREE_SIDE_EFFECTS (exp))

return const0_rtx;

/* Ensure we reference a volatile object even if value is ignored, but

don't do this if all we are doing is taking its address. */

if (TREE_THIS_VOLATILE (exp)

&& TREE_CODE (exp) != FUNCTION_DECL

&& mode != VOIDmode && mode != BLKmode

&& modifier != EXPAND_CONST_ADDRESS)

{

temp = expand_expr (exp, NULL_RTX, VOIDmode, modifier);

if (MEM_P (temp))

temp = copy_to_reg (temp);

return const0_rtx;

}

if (TREE_CODE_CLASS (code) == tcc_unary

|| code == COMPONENT_REF || code == INDIRECT_REF)

return expand_expr (TREE_OPERAND (exp, 0), const0_rtx, VOIDmode,

modifier);

else if (TREE_CODE_CLASS (code) == tcc_binary

|| TREE_CODE_CLASS (code) == tcc_comparison

|| code == ARRAY_REF || code == ARRAY_RANGE_REF)

{

expand_expr (TREE_OPERAND (exp, 0), const0_rtx, VOIDmode, modifier);

expand_expr (TREE_OPERAND (exp, 1), const0_rtx, VOIDmode, modifier);

return const0_rtx;

}

else if (code == BIT_FIELD_REF)

{

expand_expr (TREE_OPERAND (exp, 0), const0_rtx, VOIDmode, modifier);

expand_expr (TREE_OPERAND (exp, 1), const0_rtx, VOIDmode, modifier);

expand_expr (TREE_OPERAND (exp, 2), const0_rtx, VOIDmode, modifier);

return const0_rtx;

}

target = 0;

}

if (reduce_bit_field && modifier == EXPAND_STACK_PARM)

target = 0;

/* Use subtarget as the target for operand 0 of a binary operation. */

subtarget = get_subtarget (target);

original_target = target;

switch (code)

{

.......

case GOTO_EXPR:

if (TREE_CODE (TREE_OPERAND (exp, 0)) == LABEL_DECL)

expand_goto (TREE_OPERAND (exp, 0));

else

expand_computed_goto (TREE_OPERAND (exp, 0));

return const0_rtx;

case CONSTRUCTOR:

/* If we don't need the result, just ensure we evaluate any

subexpressions. */

if (ignore)

{

unsigned HOST_WIDE_INT idx;

tree value;

FOR_EACH_CONSTRUCTOR_VALUE (CONSTRUCTOR_ELTS (exp), idx, value)

expand_expr (value, const0_rtx, VOIDmode, EXPAND_NORMAL);

return const0_rtx;

}

......

default:

return lang_hooks.expand_expr (exp, original_target, tmode,

modifier, alt_rtl);

}

/* Here to do an ordinary binary operator. */

binop:

expand_operands (TREE_OPERAND (exp, 0), TREE_OPERAND (exp, 1),

subtarget, &op0, &op1, 0);

binop2:

this_optab = optab_for_tree_code (code, type, optab_default);

binop3:

if (modifier == EXPAND_STACK_PARM)

target = 0;

temp = expand_binop (mode, this_optab, op0, op1, target,

unsignedp, OPTAB_LIB_WIDEN);

gcc_assert (temp);

return REDUCE_BIT_FIELD (temp);

}

expand_goto is the key function. It generates the insn for a GOTO_EXPR expression, that is, a jump instruction (a jump insn) to the label:

    /* Generate RTL code for a `goto' statement with target label LABEL.
       LABEL should be a LABEL_DECL tree node that was or will later be
       defined with `expand_label'. */

    void
    expand_goto (tree label)
    {
    #ifdef ENABLE_CHECKING
      /* Check for a nonlocal goto to a containing function. Should have
         gotten translated to __builtin_nonlocal_goto. */
      tree context = decl_function_context (label);
      gcc_assert (!context || context == current_function_decl);
    #endif

      emit_jump (label_rtx (label));
    }

The body of expand_goto is essentially the call to emit_jump, which generates a jump instruction. This step is, in essence, machine independent:

First look at label_rtx. It returns the CODE_LABEL rtx that corresponds to the LABEL_DECL tree node, creating a new rtx if none exists yet.

    /* Return the rtx-label that corresponds to a LABEL_DECL,
       creating it if necessary. */

    rtx
    label_rtx (tree label)
    {
      gcc_assert (TREE_CODE (label) == LABEL_DECL);

      if (!DECL_RTL_SET_P (label))
        {
          rtx r = gen_label_rtx ();
          SET_DECL_RTL (label, r);
          if (FORCED_LABEL (label) || DECL_NONLOCAL (label))
            LABEL_PRESERVE_P (r) = 1;
        }

      return DECL_RTL (label);
    }

Note that the "jump" instruction is implemented differently on different target processors. Its concrete form is defined by the instruction template named "jump" in the target's machine description file {target}.md.

The following example is the jump instruction template of the ARC architecture:

    ;; Unconditional and other jump instructions.

    (define_insn "jump"
      [(set (pc) (label_ref (match_operand 0 "" "")))]
      ""
      "b%* %l0"
      [(set_attr "type" "uncond_branch")])

Now continue with emit_jump, which uses gen_jump to construct an rtx that jumps to the label:

    /* Add an unconditional jump to LABEL as the next sequential instruction. */

    void
    emit_jump (rtx label)
    {
      do_pending_stack_adjust ();
      emit_jump_insn (gen_jump (label));
      emit_barrier ();
    }

The gen_jump produced during the build looks like this:

    /* In host-i686-pc-linux-gnu/gcc/insn-emit.c.
       ../.././gcc/config/dummy/dummy.md:45 is the location in the machine
       description that this generator comes from, i.e. the position of
       define_insn "jump". */
    rtx
    gen_jump (rtx operand0 ATTRIBUTE_UNUSED)
    {
      return gen_rtx_SET (VOIDmode,
                          pc_rtx,
                          gen_rtx_LABEL_REF (VOIDmode,
                                             operand0));
    }

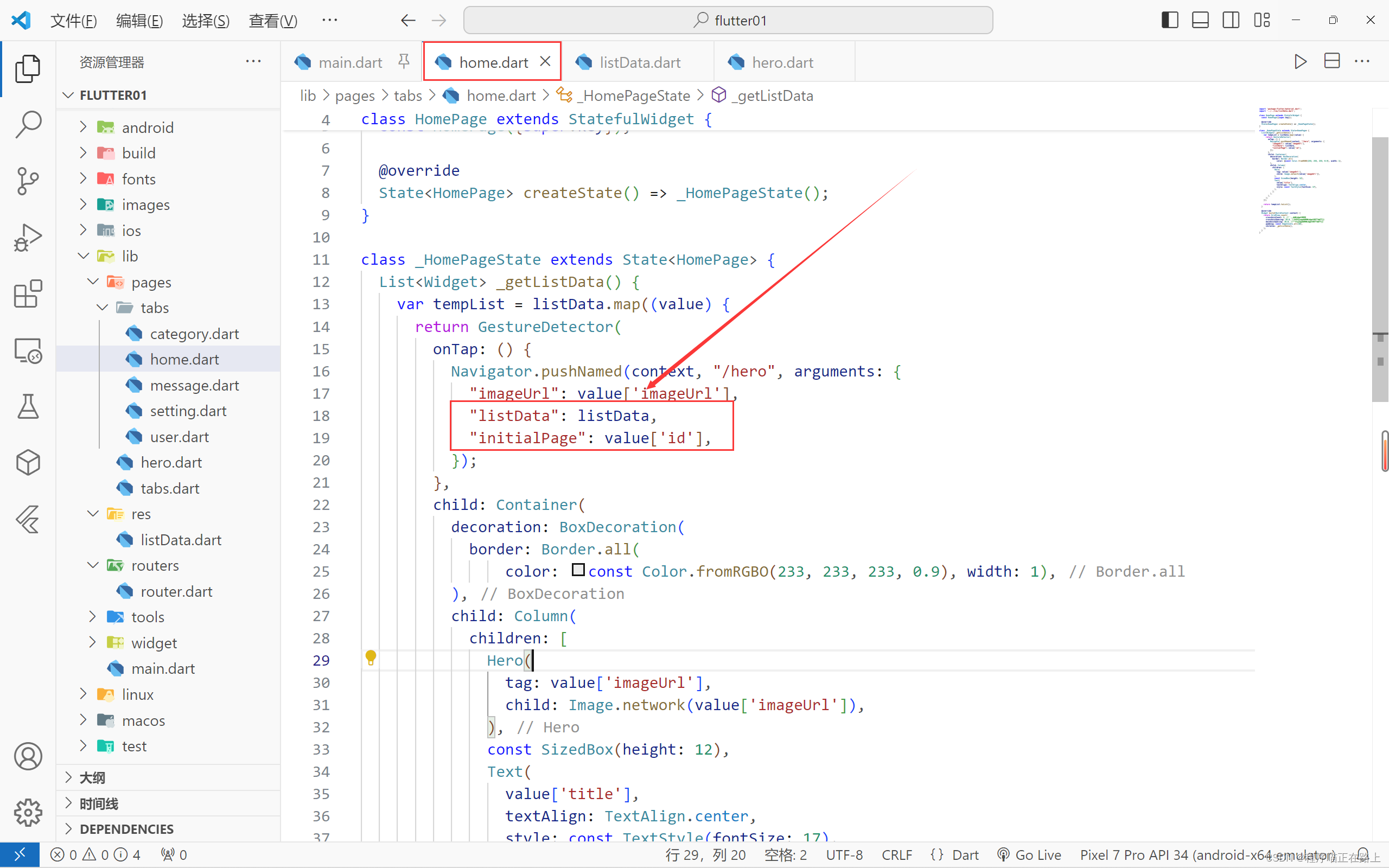

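The correspondence between the instruction template and its RTX constructor function is shown in the figure:

Following the RTX template of the "jump" instruction template, the constructor gen_jump extracts the operand from its rtx label argument and builds the rtx described by the template. That rtx is then passed to emit_jump_insn, which generates the JUMP_INSN insn.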

RTL generation for the GIMPLE_ASSIGN statement

The GIMPLE_ASSIGN statement has the following form:

GIMPLE_ASSIGN <SUBCODE, LHS, RHS1[, RHS2]>

The conversion to RTL proceeds as follows:

(1) GIMPLE->TREE:

(2) TREE->RTL:

GIMPLE->TREE:

gimple_to_tree()

Converting a GIMPLE_ASSIGN statement to a tree takes two main steps: first the left and right operands of the GIMPLE statement are extracted and converted into a left-operand tree node and a right-operand tree node; then a MODIFY_EXPR tree node is created from these two nodes.

    switch (gimple_code (stmt))
      {
      case GIMPLE_ASSIGN:
        {
          tree lhs = gimple_assign_lhs (stmt);

          t = gimple_assign_rhs_to_tree (stmt);
          t = build2 (MODIFY_EXPR, TREE_TYPE (lhs), lhs, t);
          if (gimple_assign_nontemporal_move_p (stmt))
            MOVE_NONTEMPORAL (t) = true;
        }
        break;

Next, gimple_assign_lhs returns op0 of the GIMPLE_ASSIGN statement:

    /* Return the LHS of assignment statement GS. */

    static inline tree
    gimple_assign_lhs (const_gimple gs)
    {
      GIMPLE_CHECK (gs, GIMPLE_ASSIGN);
      return gimple_op (gs, 0);
    }

gimple_assign_rhs_to_tree is called next. Depending on the operation code of the GIMPLE_ASSIGN and the class of its right-hand side (binary operation, unary operation, or single operand), it builds the corresponding tree node:

    /* Return an expression tree corresponding to the RHS of GIMPLE
       statement STMT. */

    tree
    gimple_assign_rhs_to_tree (gimple stmt)
    {
      tree t;
      enum gimple_rhs_class grhs_class;

      grhs_class = get_gimple_rhs_class (gimple_expr_code (stmt));

      if (grhs_class == GIMPLE_BINARY_RHS)
        t = build2 (gimple_assign_rhs_code (stmt),
                    TREE_TYPE (gimple_assign_lhs (stmt)),
                    gimple_assign_rhs1 (stmt),
                    gimple_assign_rhs2 (stmt));
      else if (grhs_class == GIMPLE_UNARY_RHS)
        t = build1 (gimple_assign_rhs_code (stmt),
                    TREE_TYPE (gimple_assign_lhs (stmt)),
                    gimple_assign_rhs1 (stmt));
      else if (grhs_class == GIMPLE_SINGLE_RHS)
        t = gimple_assign_rhs1 (stmt);
      else
        gcc_unreachable ();

      return t;
    }

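As an example, consider the following GIMPLE_ASSIGN statement:

gimple_assign <mult_expr, j, i, 2>

In this statement op0 is the variable j, op1 is the variable i, op2 is the integer constant 2, and the subcode is MULT_EXPR. Its meaning is:

j = i * 2;

Before this statement is converted to RTL, gimple_to_tree() first turns it into a tree whose root node has TREE_CODE MODIFY_EXPR. The resulting tree nodes are shown in the figure: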

TREE->RTL:

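expand_expr_stmt(stmt_tree) generates the RTL sequence for this tree node.

The call chain is as follows:

    expand_expr_stmt
      expand_expr
        expand_expr_real
          expand_expr_real_1

expand_expr_real_1 was already looked at briefly in the GIMPLE_GOTO discussion. It calls a different expansion routine depending on the TREE_CODE. As noted above, the TREE_CODE in the current example is MODIFY_EXPR, so that case is examined in detail: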

case MODIFY_EXPR:

{

tree lhs = TREE_OPERAND (exp, 0);

tree rhs = TREE_OPERAND (exp, 1);

gcc_assert (ignore);

/* Check for |= or &= of a bitfield of size one into another bitfield

of size 1. In this case, (unless we need the result of the

assignment) we can do this more efficiently with a

test followed by an assignment, if necessary.

??? At this point, we can't get a BIT_FIELD_REF here. But if

things change so we do, this code should be enhanced to

support it. */

if (TREE_CODE (lhs) == COMPONENT_REF

&& (TREE_CODE (rhs) == BIT_IOR_EXPR

|| TREE_CODE (rhs) == BIT_AND_EXPR)

&& TREE_OPERAND (rhs, 0) == lhs

&& TREE_CODE (TREE_OPERAND (rhs, 1)) == COMPONENT_REF

&& integer_onep (DECL_SIZE (TREE_OPERAND (lhs, 1)))

&& integer_onep (DECL_SIZE (TREE_OPERAND (TREE_OPERAND (rhs, 1), 1))))

{

rtx label = gen_label_rtx ();

int value = TREE_CODE (rhs) == BIT_IOR_EXPR;

do_jump (TREE_OPERAND (rhs, 1),

value ? label : 0,

value ? 0 : label);

expand_assignment (lhs, build_int_cst (TREE_TYPE (rhs), value),

MOVE_NONTEMPORAL (exp));

do_pending_stack_adjust ();

emit_label (label);

return const0_rtx;

}

expand_assignment (lhs, rhs, MOVE_NONTEMPORAL (exp));

return const0_rtx;

}

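In expand_expr_real_1(), the MODIFY_EXPR case first extracts the left operand node lhs and the right operand node rhs, and finally calls expand_assignment (lhs, rhs, MOVE_NONTEMPORAL (exp)) to generate the RTL.

As the prototype expand_assignment (tree to, tree from, bool nontemporal) suggests, this function generates the RTL that assigns the from tree node to the to tree node.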

/* Expand an assignment that stores the value of FROM into TO. If NONTEMPORAL

is true, try generating a nontemporal store. */

void

expand_assignment (tree to, tree from, bool nontemporal)

{

rtx to_rtx = 0;

rtx result;

/* Don't crash if the lhs of the assignment was erroneous. */

if (TREE_CODE (to) == ERROR_MARK)

{

result = expand_normal (from);

return;

}

/* Optimize away no-op moves without side-effects. */

if (operand_equal_p (to, from, 0))

return;

/* Assignment of a structure component needs special treatment

if the structure component's rtx is not simply a MEM.

Assignment of an array element at a constant index, and assignment of

an array element in an unaligned packed structure field, has the same

problem. */

if (handled_component_p (to)

|| TREE_CODE (TREE_TYPE (to)) == ARRAY_TYPE)

{

enum machine_mode mode1;

HOST_WIDE_INT bitsize, bitpos;

tree offset;

int unsignedp;

int volatilep = 0;

tree tem;

push_temp_slots ();

tem = get_inner_reference (to, &bitsize, &bitpos, &offset, &mode1,

&unsignedp, &volatilep, true);

/* If we are going to use store_bit_field and extract_bit_field,

make sure to_rtx will be safe for multiple use. */

to_rtx = expand_normal (tem);

if (offset != 0)

{

rtx offset_rtx;

if (!MEM_P (to_rtx))

{

/* We can get constant negative offsets into arrays with broken

user code. Translate this to a trap instead of ICEing. */

gcc_assert (TREE_CODE (offset) == INTEGER_CST);

expand_builtin_trap ();

to_rtx = gen_rtx_MEM (BLKmode, const0_rtx);

}

offset_rtx = expand_expr (offset, NULL_RTX, VOIDmode, EXPAND_SUM);

#ifdef POINTERS_EXTEND_UNSIGNED

if (GET_MODE (offset_rtx) != Pmode)

offset_rtx = convert_to_mode (Pmode, offset_rtx, 0);

#else

if (GET_MODE (offset_rtx) != ptr_mode)

offset_rtx = convert_to_mode (ptr_mode, offset_rtx, 0);

#endif

/* A constant address in TO_RTX can have VOIDmode, we must not try

to call force_reg for that case. Avoid that case. */

if (MEM_P (to_rtx)

&& GET_MODE (to_rtx) == BLKmode

&& GET_MODE (XEXP (to_rtx, 0)) != VOIDmode

&& bitsize > 0

&& (bitpos % bitsize) == 0

&& (bitsize % GET_MODE_ALIGNMENT (mode1)) == 0

&& MEM_ALIGN (to_rtx) == GET_MODE_ALIGNMENT (mode1))

{

to_rtx = adjust_address (to_rtx, mode1, bitpos / BITS_PER_UNIT);

bitpos = 0;

}

to_rtx = offset_address (to_rtx, offset_rtx,

highest_pow2_factor_for_target (to,

offset));

}

/* Handle expand_expr of a complex value returning a CONCAT. */

if (GET_CODE (to_rtx) == CONCAT)

{

if (TREE_CODE (TREE_TYPE (from)) == COMPLEX_TYPE)

{

gcc_assert (bitpos == 0);

result = store_expr (from, to_rtx, false, nontemporal);

}

else

{

gcc_assert (bitpos == 0 || bitpos == GET_MODE_BITSIZE (mode1));

result = store_expr (from, XEXP (to_rtx, bitpos != 0), false,

nontemporal);

}

}

else

{

if (MEM_P (to_rtx))

{

/* If the field is at offset zero, we could have been given the

DECL_RTX of the parent struct. Don't munge it. */

to_rtx = shallow_copy_rtx (to_rtx);

set_mem_attributes_minus_bitpos (to_rtx, to, 0, bitpos);

/* Deal with volatile and readonly fields. The former is only

done for MEM. Also set MEM_KEEP_ALIAS_SET_P if needed. */

if (volatilep)

MEM_VOLATILE_P (to_rtx) = 1;

if (component_uses_parent_alias_set (to))

MEM_KEEP_ALIAS_SET_P (to_rtx) = 1;

}

if (optimize_bitfield_assignment_op (bitsize, bitpos, mode1,

to_rtx, to, from))

result = NULL;

else

result = store_field (to_rtx, bitsize, bitpos, mode1, from,

TREE_TYPE (tem), get_alias_set (to),

nontemporal);

}

if (result)

preserve_temp_slots (result);

free_temp_slots ();

pop_temp_slots ();

return;

}

/* If the rhs is a function call and its value is not an aggregate,

call the function before we start to compute the lhs.

This is needed for correct code for cases such as

val = setjmp (buf) on machines where reference to val

requires loading up part of an address in a separate insn.

Don't do this if TO is a VAR_DECL or PARM_DECL whose DECL_RTL is REG

since it might be a promoted variable where the zero- or sign- extension

needs to be done. Handling this in the normal way is safe because no

computation is done before the call. */

if (TREE_CODE (from) == CALL_EXPR && ! aggregate_value_p (from, from)

&& COMPLETE_TYPE_P (TREE_TYPE (from))

&& TREE_CODE (TYPE_SIZE (TREE_TYPE (from))) == INTEGER_CST

&& ! ((TREE_CODE (to) == VAR_DECL || TREE_CODE (to) == PARM_DECL)

&& REG_P (DECL_RTL (to))))

{

rtx value;

push_temp_slots ();

value = expand_normal (from);

if (to_rtx == 0)

to_rtx = expand_expr (to, NULL_RTX, VOIDmode, EXPAND_WRITE);

/* Handle calls that return values in multiple non-contiguous locations.

The Irix 6 ABI has examples of this. */

if (GET_CODE (to_rtx) == PARALLEL)

emit_group_load (to_rtx, value, TREE_TYPE (from),

int_size_in_bytes (TREE_TYPE (from)));

else if (GET_MODE (to_rtx) == BLKmode)

emit_block_move (to_rtx, value, expr_size (from), BLOCK_OP_NORMAL);

else

{

if (POINTER_TYPE_P (TREE_TYPE (to)))

value = convert_memory_address (GET_MODE (to_rtx), value);

emit_move_insn (to_rtx, value);

}

preserve_temp_slots (to_rtx);

free_temp_slots ();

pop_temp_slots ();

return;

}

/* Ordinary treatment. Expand TO to get a REG or MEM rtx.

Don't re-expand if it was expanded already (in COMPONENT_REF case). */

if (to_rtx == 0)

to_rtx = expand_expr (to, NULL_RTX, VOIDmode, EXPAND_WRITE);

/* Don't move directly into a return register. */

if (TREE_CODE (to) == RESULT_DECL

&& (REG_P (to_rtx) || GET_CODE (to_rtx) == PARALLEL))

{

rtx temp;

push_temp_slots ();

temp = expand_expr (from, NULL_RTX, GET_MODE (to_rtx), EXPAND_NORMAL);

if (GET_CODE (to_rtx) == PARALLEL)

emit_group_load (to_rtx, temp, TREE_TYPE (from),

int_size_in_bytes (TREE_TYPE (from)));

else

emit_move_insn (to_rtx, temp);

preserve_temp_slots (to_rtx);

free_temp_slots ();

pop_temp_slots ();

return;

}

/* In case we are returning the contents of an object which overlaps

the place the value is being stored, use a safe function when copying

a value through a pointer into a structure value return block. */

if (TREE_CODE (to) == RESULT_DECL && TREE_CODE (from) == INDIRECT_REF

&& cfun->returns_struct

&& !cfun->returns_pcc_struct)

{

rtx from_rtx, size;

push_temp_slots ();

size = expr_size (from);

from_rtx = expand_normal (from);

emit_library_call (memmove_libfunc, LCT_NORMAL,

VOIDmode, 3, XEXP (to_rtx, 0), Pmode,

XEXP (from_rtx, 0), Pmode,

convert_to_mode (TYPE_MODE (sizetype),

size, TYPE_UNSIGNED (sizetype)),

TYPE_MODE (sizetype));

preserve_temp_slots (to_rtx);

free_temp_slots ();

pop_temp_slots ();

return;

}

/* Compute FROM and store the value in the rtx we got. */

push_temp_slots ();

result = store_expr (from, to_rtx, 0, nontemporal);

preserve_temp_slots (result);

free_temp_slots ();

pop_temp_slots ();

return;

}

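Summary

Going from GIMPLE to RTL is a very complex process, and there is a large number of expansion functions for the different kinds of tree nodes.

RTL is generated one function at a time, through the key steps of generating rtx for variables, handling parameters, processing basic blocks, and so on. Each GIMPLE statement in a basic block is converted mainly by choosing an expansion function according to the TREE_CODE of its tree form. Finally, key information such as the TREE_CODE and the machine mode is used to look up the matching optab entry and obtain the index of the instruction template, whose constructor function then builds the insn.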