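1. Hyperspectral Dataset Overview

1.1. About the Dataset

Dataset link: "Hyperspectral Dataset (.mat/.csv) - Scientific Research" (高光谱数据集(.mat.csv)-科研学术)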

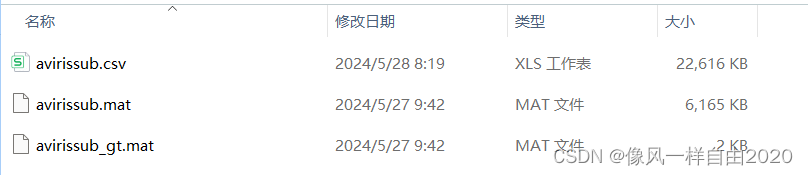

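The dataset consists of the following three files:

The files are provided in .mat and .csv formats. The data cube is 145 x 145 x 220, that is, 145 x 145 pixels with 220 spectral bands per pixel.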

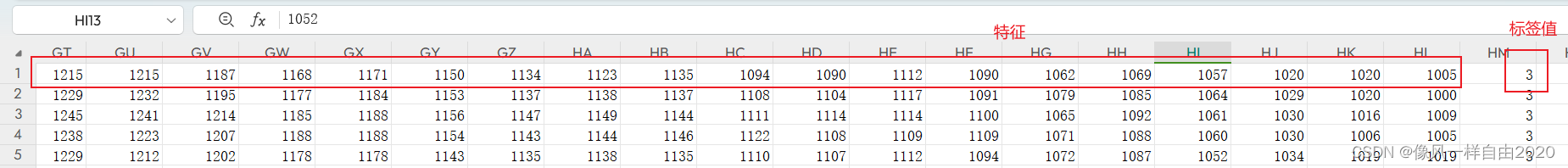

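In practice, avirissub.csv is the file that matters; the code simply converts the .mat files into this CSV. The 145 x 145 x 220 cube is flattened so that each row is one pixel sample (145 x 145 = 21,025 rows). The first 220 columns of a row are its spectral features and the last column is its label, which takes 17 distinct values (0 to 16, as implied by the 17-unit output layer used later).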
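To make the row layout concrete, here is a minimal NumPy sketch of the same flattening on a tiny synthetic cube. The sizes (3 x 4 x 5) and the label pattern are made up for illustration and stand in for the real 145 x 145 x 220 data.

```python
import numpy as np

# Hypothetical stand-in for the 145 x 145 x 220 cube
rows, cols, bands = 3, 4, 5
cube = np.arange(rows * cols * bands, dtype=float).reshape(rows, cols, bands)
labels = np.arange(rows * cols).reshape(rows, cols) % 3  # made-up label map

# One row per pixel: `bands` feature columns followed by one label column
table = np.column_stack([cube.reshape(-1, bands), labels.reshape(-1)])
print(table.shape)

# Pixel (r, c) of the image ends up in row r * cols + c of the table
r, c = 1, 2
row_index = r * cols + c
print(table[row_index, :-1], table[row_index, -1])
```

Because the reshape is row-major, the pixel at image position (r, c) can always be recovered from its row number in the CSV.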

1.2. Software Environment and Setup

Install TensorFlow 2.12.0 with the following command:

pip install tensorflow==2.12.0

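This version is the critical one. The remaining libraries used below (pandas, NumPy, SciPy, scikit-learn, Matplotlib) can simply be installed one by one.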

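2. Baseline Model Implementation

The code builds and trains a convolutional neural network (CNN) for a classification task. The code and the network architecture are explained step by step below.

2.1. Environment Setup and Imports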

```python
import os
import time  # used by CNN() below to time each run

import numpy as np
import pandas as pd
import scipy.io as sio
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split
from tensorflow import keras
from tensorflow.keras import optimizers
from tensorflow.keras.callbacks import ModelCheckpoint, ReduceLROnPlateau
from tensorflow.keras.layers import Dense, Dropout, Conv1D, MaxPooling1D, Flatten
from tensorflow.keras.models import Sequential
from keras.utils import np_utils  # provides to_categorical

# Select the GPU to use
os.environ["CUDA_VISIBLE_DEVICES"] = "0"

# Fix NumPy's random seed for reproducibility
np.random.seed(42)

# Lists that collect results across calls to CNN()
num_epoch = []
result_mean = []
result_std_y = []
result_std_w = []
```

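- Import the required libraries, including pandas, TensorFlow, Keras, and SciPy.

- Set an environment variable to select the GPU device.

- Set the random seed so that results are reproducible.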

2.2. 数据加载和预处理

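```python
# Load the hyperspectral cube and its ground-truth labels
data = sio.loadmat('D:/python_test/data/avirissub.mat')
data_L = sio.loadmat('D:/python_test/data/avirissub_gt.mat')

# List the variables stored in each .mat file and their shapes
print(sio.whosmat('D:/python_test/data/avirissub.mat'))
print(sio.whosmat('D:/python_test/data/avirissub_gt.mat'))

data_D = data['x92AV3C']
data_L = data_L['x92AV3C_gt']

# Flatten (rows, cols, bands) into (pixels, bands)
data_D_flat = data_D.reshape(-1, data_D.shape[-1])
print(data_D_flat.shape)

# Append the labels as the last column and save as CSV
data_combined = pd.DataFrame(data_D_flat)
data_combined['label'] = data_L.flatten()
data_combined.to_csv('D:/python_test/data/avirissub.csv', index=False, header=False)

# Read the CSV back and split it into features and labels
data = pd.read_csv('D:/python_test/data/avirissub.csv', header=None)
data = data.values
data_D = data[:, :-1]
data_L = data[:, -1]
print(data_D.shape)

# Scale features by the global maximum
# (the original divided twice; the second division was a no-op)
data_D_F = data_D / np.max(data_D)

# Stratified split: test_size=0.8 leaves 20% of the pixels for training
data_train, data_test, label_train, label_test = train_test_split(
    data_D_F, data_L, test_size=0.8, random_state=42, stratify=data_L)

# Conv1D expects input of shape (samples, steps, channels)
data_train = data_train.reshape(data_train.shape[0], data_train.shape[1], 1)
data_test = data_test.reshape(data_test.shape[0], data_test.shape[1], 1)

# Check that the label values are in the expected range
print(np.unique(label_train))

# One-hot encode; the number of classes is inferred from the labels
label_train = np_utils.to_categorical(label_train, None)
label_test = np_utils.to_categorical(label_test, None)

# Input shape for the first Conv1D layer, used by CNN() below
inputShape = data_train[0].shape
```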

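- Load the data and labels, and inspect the variable names and shapes stored in the .mat files.

- Preprocess the data: flatten the cube into a 2-D array, append the labels, save the result as a CSV file, and read the data back from that CSV.

- Normalize the features by dividing by the global maximum.

- Split the data into training and test sets (with test_size=0.8, 20% of the pixels are used for training), then reshape the data to be compatible with Conv1D.

- One-hot encode the labels.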
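The normalization, reshaping, and one-hot steps above can be sketched in plain NumPy as follows. The `one_hot` helper is a hypothetical stand-in written for this example (it is not the Keras `to_categorical` function), and the feature values are made up.

```python
import numpy as np

def one_hot(labels, num_classes=None):
    """Hypothetical helper: encode integer labels as one-hot rows."""
    labels = np.asarray(labels, dtype=int)
    if num_classes is None:
        num_classes = labels.max() + 1  # inferred from the data
    encoded = np.zeros((labels.size, num_classes), dtype=np.float32)
    encoded[np.arange(labels.size), labels] = 1.0
    return encoded

# Made-up data: 3 samples with 2 bands each
features = np.array([[2.0, 4.0], [8.0, 6.0], [1.0, 3.0]])
labels = np.array([0, 2, 1])

scaled = features / features.max()  # global-max scaling into [0, 1]
conv_input = scaled.reshape(scaled.shape[0], scaled.shape[1], 1)  # (samples, steps, channels)
targets = one_hot(labels)

print(conv_input.shape, targets.shape)
```

When the number of classes is inferred separately for the training and test labels, the two encodings only match if both splits contain the highest class. The stratified split makes that likely here, but passing the class count explicitly is the safer choice.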

2.3. 定义卷积神经网络模型

```python
def CNN(num):
    result = []
    num_epoch.append(num)
    # Train three independent runs and collect their test accuracy
    for i in range(3):
        time_S = time.time()
        model = Sequential()
        model.add(Conv1D(filters=6, kernel_size=8, input_shape=inputShape, activation='relu', name='spec_conv1'))
        model.add(MaxPooling1D(pool_size=2, name='spec_pool1'))
        model.add(Conv1D(filters=12, kernel_size=7, activation='relu', name='spec_conv2'))
        model.add(MaxPooling1D(pool_size=2, name='spec_pool2'))
        model.add(Conv1D(filters=24, kernel_size=8, activation='relu', name='spec_conv3'))
        model.add(MaxPooling1D(pool_size=2, name='spec_pool3'))
        model.add(Flatten(name='spe_fla'))
        model.add(Dense(256, activation='relu', name='spe_De'))
        model.add(Dense(17, activation='softmax'))

        adam = optimizers.Adam(learning_rate=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-8)
        model.compile(loss='categorical_crossentropy', optimizer=adam, metrics=['accuracy'])

        filepath = "../model/model_spe(5%).h5"
        # TensorFlow 2.x logs validation accuracy as 'val_accuracy' (not 'val_acc'),
        # and ReduceLROnPlateau takes min_delta (the old name was epsilon)
        checkpointer = ModelCheckpoint(filepath, monitor='val_accuracy', save_weights_only=False, mode='max',
                                       save_best_only=True, verbose=0)
        callback = [checkpointer]
        reduce_lr = ReduceLROnPlateau(monitor='val_accuracy', factor=0.9, patience=10, verbose=0, mode='auto',
                                      min_delta=0.000001, cooldown=0, min_lr=0)

        # Note: the callbacks above are defined but not passed to fit() in this baseline
        history = model.fit(data_train, label_train, epochs=num, batch_size=5, shuffle=True,
                            validation_split=0.1, verbose=0)
        scores = model.evaluate(data_test, label_test, verbose=0)
        print("\n%s: %.2f%%" % (model.metrics_names[1], scores[1] * 100))
        result.append(scores[1] * 100)
        time_E = time.time()
        print("costTime:", time_E - time_S, 's')

    # Summarize the three runs
    print(result)
    result_mean.append(np.mean(result))
    print("Mean: %.4f" % np.mean(result))
    result_std_y.append(np.std(result))
    print("Std (biased): %.4f" % np.std(result))
    result_std_w.append(np.std(result, ddof=1))
    print("Std (unbiased): %.4f" % np.std(result, ddof=1))
```

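- Define the CNN function, which builds and trains the convolutional neural network.

- The network consists of:

  - Conv1D layers: one-dimensional convolution layers that extract features. There are three, with 6, 12, and 24 filters and kernel sizes 8, 7, and 8.

  - MaxPooling1D layers: max-pooling layers for downsampling. Each convolution layer is followed by one pooling layer.

  - Flatten layer: flattens the multi-dimensional feature maps into a vector.

  - Dense layer: a fully connected layer with 256 units and ReLU activation.

  - Final Dense layer: the output layer with 17 units, one per class, and softmax activation.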
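The layer list above can be checked with a little arithmetic. The sketch below traces the sequence length and parameter count through the network for a 220-band input, assuming the Keras defaults used in the code (stride 1 and 'valid' padding for Conv1D, stride equal to pool size for MaxPooling1D). The helper names are made up for this example.

```python
def conv1d_out(length, kernel_size):
    # 'valid' padding, stride 1
    return length - kernel_size + 1

def pool1d_out(length, pool_size=2):
    # 'valid' padding, stride equal to pool_size
    return length // pool_size

length, channels = 220, 1
params = 0
for filters, kernel_size in [(6, 8), (12, 7), (24, 8)]:
    params += kernel_size * channels * filters + filters  # weights + biases
    length = pool1d_out(conv1d_out(length, kernel_size))
    channels = filters
    print(f"after conv(k={kernel_size}, f={filters}) + pool: ({length}, {channels})")

flat = length * channels
params += flat * 256 + 256   # Dense(256)
params += 256 * 17 + 17      # Dense(17)
print("flattened size:", flat)
print("trainable parameters:", params)
```

Most of the parameters sit in the first Dense layer, which is worth keeping in mind given how few training pixels there are.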

2.4. 模型训练和评估

```python
if __name__ == '__main__':
    CNN(5)
```

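- Call the CNN function with the number of training epochs set to 5. The function trains three independent runs and reports the mean and standard deviation of their test accuracy.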
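The function prints both a biased and an unbiased standard deviation. The only difference is the `ddof` argument of `np.std`, as this small sketch with made-up accuracy values shows.

```python
import numpy as np

# Hypothetical test accuracies (in %) from three runs
accuracies = [80.0, 82.0, 84.0]

mean = np.mean(accuracies)
std_biased = np.std(accuracies)            # divides by n
std_unbiased = np.std(accuracies, ddof=1)  # divides by n - 1

print("mean:", mean)
print("std (biased):", std_biased)
print("std (unbiased):", std_unbiased)
```

With only three runs the two estimates differ noticeably, so it helps to state which one is being reported.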

The complete baseline code is as follows:

```python
import os
import time

import numpy as np
import pandas as pd
import scipy.io as sio
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split
from tensorflow import keras
from tensorflow.keras import optimizers
from tensorflow.keras.callbacks import ModelCheckpoint, ReduceLROnPlateau
from tensorflow.keras.layers import Dense, Dropout, Conv1D, MaxPooling1D, Flatten
from tensorflow.keras.models import Sequential
from keras.utils import np_utils

# Set the environment variable that selects the GPU device
os.environ["CUDA_VISIBLE_DEVICES"] = "0"

# Set the random seed so that experiments are reproducible
np.random.seed(42)

# Lists that store the results
num_epoch = []
result_mean = []
result_std_y = []
result_std_w = []

# Load the data
data = sio.loadmat('D:/python_test/data/avirissub.mat')       # data cube
data_L = sio.loadmat('D:/python_test/data/avirissub_gt.mat')  # labels

# Show the variables stored in the .mat files and their shapes
print(sio.whosmat('D:/python_test/data/avirissub.mat'))
print(sio.whosmat('D:/python_test/data/avirissub_gt.mat'))

# Extract data and labels
data_D = data['x92AV3C']
data_L = data_L['x92AV3C_gt']

# Flatten the cube into a 2-D array
data_D_flat = data_D.reshape(-1, data_D.shape[-1])
print(data_D_flat.shape)

# Combine data and labels
data_combined = pd.DataFrame(data_D_flat)
data_combined['label'] = data_L.flatten()

# Save as a .csv file
data_combined.to_csv('D:/python_test/data/avirissub.csv', index=False, header=False)

# Read the data back from the CSV file
data = pd.read_csv('D:/python_test/data/avirissub.csv', header=None)  # author's note: 14 classes can be used for classification
data = data.values
data_D = data[:, :-1]  # features: all rows, every column except the last
data_L = data[:, -1]   # labels: all rows, last column
print(data_D.shape)    # shape of the feature matrix

# Normalize the features by the global maximum
# (the original divided twice; the second division was a no-op)
data_D_F = data_D / np.max(data_D)

# Split into training and test sets
data_train, data_test, label_train, label_test = train_test_split(
    data_D_F, data_L, test_size=0.8, random_state=42, stratify=data_L)

# Reshape the data to be compatible with Conv1D
data_train = data_train.reshape(data_train.shape[0], data_train.shape[1], 1)
data_test = data_test.reshape(data_test.shape[0], data_test.shape[1], 1)

# Print the unique label values to check that their range is correct
print(np.unique(label_train))

# One-hot encode; the number of classes is inferred automatically
label_train = np_utils.to_categorical(label_train, None)
label_test = np_utils.to_categorical(label_test, None)

inputShape = data_train[0].shape  # input shape


def CNN(num):
    result = []
    num_epoch.append(num)
    # for i in range(50):
    for i in range(3):
        time_S = time.time()
        model = Sequential()
        # Define the model architecture
        model.add(Conv1D(filters=6, kernel_size=8, input_shape=inputShape, activation='relu', name='spec_conv1'))
        model.add(MaxPooling1D(pool_size=2, name='spec_pool1'))
        model.add(Conv1D(filters=12, kernel_size=7, activation='relu', name='spec_conv2'))
        model.add(MaxPooling1D(pool_size=2, name='spec_pool2'))
        model.add(Conv1D(filters=24, kernel_size=8, activation='relu', name='spec_conv3'))
        model.add(MaxPooling1D(pool_size=2, name='spec_pool3'))
        # model.add(Conv1D(filters=48, kernel_size=10, activation='relu', name='spec_conv4'))
        # model.add(MaxPooling1D(pool_size=2, name='spec_pool4'))
        model.add(Flatten(name='spe_fla'))
        model.add(Dense(256, activation='relu', name='spe_De'))
        # model.add(Dropout(0.5,name = 'drop'))
        model.add(Dense(17, activation='softmax'))

        # Set the optimizer and loss function, then compile the model
        adam = optimizers.Adam(learning_rate=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-8)
        model.compile(loss='categorical_crossentropy', optimizer=adam, metrics=['accuracy'])

        filepath = "../model/model_spe(5%).h5"
        # TensorFlow 2.x logs validation accuracy as 'val_accuracy' (not 'val_acc'),
        # and ReduceLROnPlateau takes min_delta (the old name was epsilon)
        checkpointer = ModelCheckpoint(filepath, monitor='val_accuracy', save_weights_only=False, mode='max',
                                       save_best_only=True, verbose=0)
        callback = [checkpointer]
        reduce_lr = ReduceLROnPlateau(monitor='val_accuracy', factor=0.9, patience=10, verbose=0, mode='auto',
                                      min_delta=0.000001,
                                      cooldown=0, min_lr=0)

        # Train the model and compute the score
        # Note: the callbacks above are defined but not passed to fit() in this baseline
        history = model.fit(data_train, label_train, epochs=num, batch_size=5, shuffle=True, validation_split=0.1,
                            verbose=0)
        scores = model.evaluate(data_test, label_test, verbose=0)
        print("\n%s: %.2f%%" % (model.metrics_names[1], scores[1] * 100))

        # Record the test accuracy of this run
        result.append(scores[1] * 100)
        time_E = time.time()
        print("costTime:", time_E - time_S, 's')

    # Summarize the runs
    print(result)
    result_mean.append(np.mean(result))
    print("Mean: %.4f" % np.mean(result))
    result_std_y.append(np.std(result))
    print("Std (biased): %.4f" % np.std(result))
    result_std_w.append(np.std(result, ddof=1))
    print("Std (unbiased): %.4f" % np.std(result, ddof=1))


if __name__ == '__main__':
    # Call CNN with the number of epochs set to 50
    # CNN(50)
    CNN(5)
```

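3. Implementing the Innovations

This version extends the baseline with two additions: a custom convolution layer and a custom callback. Each is explained in detail below.

3.1. Gaussian Kernel Function and Custom Convolution Layer

Gaussian kernel function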

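```python
import tensorflow as tf

def gaussian_kernel(x, y, sigma=1.0):
    # exp(-||x - y||^2 / (2 * sigma^2)), with the squared distance summed over the last axis
    return tf.exp(-tf.reduce_sum(tf.square(x - y), axis=-1) / (2 * sigma ** 2))
```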

- Define the Gaussian kernel function, which measures the similarity between an input window and the convolution kernel.
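The Gaussian kernel is k(x, y) = exp(-||x - y||^2 / (2 sigma^2)): it equals 1 when the two vectors coincide and decays toward 0 as they move apart, with sigma controlling how fast. A small NumPy sketch with made-up vectors illustrates this; `gaussian_kernel_np` is a hypothetical NumPy counterpart written for this example.

```python
import numpy as np

def gaussian_kernel_np(x, y, sigma=1.0):
    """NumPy counterpart of the TensorFlow function, for illustration only."""
    sq_dist = np.sum((x - y) ** 2, axis=-1)
    return np.exp(-sq_dist / (2 * sigma ** 2))

a = np.array([0.0, 0.0])
b = np.array([1.0, 1.0])

same = gaussian_kernel_np(a, a)             # identical vectors
near = gaussian_kernel_np(a, b)             # squared distance 2, sigma 1
wide = gaussian_kernel_np(a, b, sigma=2.0)  # same distance, larger sigma

print(same, near, wide)
```

A larger sigma makes the kernel more tolerant: the same pair of vectors is rated as more similar.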

Custom convolution layer

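```python
import tensorflow as tf
from tensorflow.keras.layers import Layer

class GaussianKernelConv1D(Layer):
    def __init__(self, filters, kernel_size, sigma=1.0, **kwargs):
        super(GaussianKernelConv1D, self).__init__(**kwargs)
        self.filters = filters
        self.kernel_size = kernel_size
        self.sigma = sigma

    def build(self, input_shape):
        # Trainable kernel of shape (kernel_size, in_channels, filters)
        self.kernel = self.add_weight(name='kernel',
                                      shape=(self.kernel_size, int(input_shape[-1]), self.filters),
                                      initializer='uniform',
                                      trainable=True)
        super(GaussianKernelConv1D, self).build(input_shape)

    def call(self, inputs):
        output = []
        # Slide a window of length kernel_size along the input
        for i in range(inputs.shape[1] - self.kernel_size + 1):
            window = inputs[:, i:i+self.kernel_size, :]  # (batch, kernel_size, in_channels)
            window = tf.expand_dims(window, -1)          # (batch, kernel_size, in_channels, 1)
            kernel = tf.expand_dims(self.kernel, 0)      # (1, kernel_size, in_channels, filters)
            # gaussian_kernel reduces over the last (filter) axis -> (batch, kernel_size, in_channels)
            similarity = gaussian_kernel(window, kernel, self.sigma)
            # Sum over in_channels -> (batch, kernel_size)
            output.append(tf.reduce_sum(similarity, axis=2))
        return tf.stack(output, axis=1)  # (batch, steps, kernel_size)

    def get_config(self):
        # Added so Keras can serialize the layer when the full model is saved
        config = super(GaussianKernelConv1D, self).get_config()
        config.update({'filters': self.filters, 'kernel_size': self.kernel_size, 'sigma': self.sigma})
        return config
```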

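GaussianKernelConv1D is a custom one-dimensional convolution layer that scores similarity with the Gaussian kernel function instead of a dot product. The build method creates the kernel as a trainable weight of shape (kernel_size, input channels, filters). The call method slides a window of length kernel_size along the input, evaluates the Gaussian similarity between each window and the kernel, and sums the resulting values. Note that, as written, gaussian_kernel reduces over the last axis, which inside call is the filter axis, so each output position holds kernel_size values rather than filters values (for example, 8 channels instead of 6 after the first layer).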
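If the intent is one Gaussian similarity per filter, the squared distance has to be summed over the window and channel axes instead of the filter axis. The NumPy sketch below shows that variant. It is an assumption about the intended design, not what the layer above computes, and `gaussian_conv1d` is a hypothetical name used only here.

```python
import numpy as np

def gaussian_conv1d(x, kernel, sigma=1.0):
    """Per-filter Gaussian similarity over sliding windows.

    x: (batch, length, channels); kernel: (kernel_size, channels, filters).
    Returns an array of shape (batch, length - kernel_size + 1, filters).
    """
    batch, length, channels = x.shape
    k, _, filters = kernel.shape
    steps = length - k + 1
    # One flattened template per filter: (filters, k * channels)
    flat_kernel = kernel.reshape(k * channels, filters).T
    out = np.empty((batch, steps, filters))
    for i in range(steps):
        window = x[:, i:i + k, :].reshape(batch, 1, k * channels)
        # Squared distance between each window and each filter: (batch, filters)
        sq_dist = np.sum((window - flat_kernel[None]) ** 2, axis=-1)
        out[:, i, :] = np.exp(-sq_dist / (2 * sigma ** 2))
    return out

rng = np.random.default_rng(0)
x = rng.random((2, 220, 1))     # two made-up spectra with 220 bands
kernel = rng.random((8, 1, 6))  # kernel_size=8, 6 filters
y = gaussian_conv1d(x, kernel)
print(y.shape)
```

In this variant the number of output channels equals the number of filters, and a window that matches a filter exactly produces a response of 1.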

3.2. Custom Callback

The custom callback prints training information at the end of every epoch.

```python
from tensorflow.keras.callbacks import Callback

class TrainingProgressCallback(Callback):
    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        # epoch is zero-based, so add 1 for display
        print(f"Epoch {epoch + 1}/{self.params['epochs']}, Loss: {logs.get('loss')}, Accuracy: {logs.get('accuracy')}, "
              f"Val Loss: {logs.get('val_loss')}, Val Accuracy: {logs.get('val_accuracy')}")
```

TrainingProgressCallback is a custom callback that prints the training progress at the end of every epoch, including the training and validation loss and accuracy.

3.3. Model Construction, Training, and Evaluation

The CNN function

```python
def CNN(num):
    result = []
    num_epoch.append(num)
    for i in range(3):
        time_S = time.time()
        model = Sequential()
        # Define the model architecture
        model.add(GaussianKernelConv1D(filters=6, kernel_size=8, input_shape=inputShape, name='spec_conv1'))
        model.add(MaxPooling1D(pool_size=2, name='spec_pool1'))
        model.add(GaussianKernelConv1D(filters=12, kernel_size=7, name='spec_conv2'))
        model.add(MaxPooling1D(pool_size=2, name='spec_pool2'))
        model.add(GaussianKernelConv1D(filters=24, kernel_size=8, name='spec_conv3'))
        model.add(MaxPooling1D(pool_size=2, name='spec_pool3'))
        model.add(Flatten(name='spe_fla'))
        model.add(Dense(256, activation='relu', name='spe_De'))
        model.add(Dense(17, activation='softmax'))

        # Set the optimizer and loss function, then compile the model
        adam = optimizers.Adam(learning_rate=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-8)
        model.compile(loss='categorical_crossentropy', optimizer=adam, metrics=['accuracy'])

        filepath = "../model/model_spe(5%).h5"
        checkpointer = ModelCheckpoint(filepath, monitor='val_accuracy', save_weights_only=False, mode='max',
                                       save_best_only=True, verbose=0)
        callback = [checkpointer, TrainingProgressCallback()]
        reduce_lr = ReduceLROnPlateau(monitor='val_accuracy', factor=0.9, patience=10, verbose=0, mode='auto',
                                      min_delta=0.000001,
                                      cooldown=0, min_lr=0)
        callback.append(reduce_lr)

        # Train the model and compute the score
        history = model.fit(data_train, label_train, epochs=num, batch_size=5, shuffle=True, validation_split=0.1,
                            verbose=1, callbacks=callback)
        scores = model.evaluate(data_test, label_test, verbose=0)
        print("\n%s: %.2f%%" % (model.metrics_names[1], scores[1] * 100))
        result.append(scores[1] * 100)
        time_E = time.time()
        print("costTime:", time_E - time_S, 's')

    # Summarize the runs
    print(result)
    result_mean.append(np.mean(result))
    print("Mean: %.4f" % np.mean(result))
    result_std_y.append(np.std(result))
    print("Std (biased): %.4f" % np.std(result))
    result_std_w.append(np.std(result, ddof=1))
    print("Std (unbiased): %.4f" % np.std(result, ddof=1))
```

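- In the CNN function, the model architecture is similar to the baseline, but the convolution layers are replaced with the custom GaussianKernelConv1D layer.

- TrainingProgressCallback prints the training progress at the end of every epoch.

- The model is trained and its performance is evaluated.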
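ReduceLROnPlateau multiplies the learning rate by `factor` each time the monitored metric has failed to improve for `patience` epochs, and never goes below `min_lr`. The sketch below only reproduces that arithmetic for the settings used above. `lr_after_reductions` is a hypothetical helper, not part of Keras.

```python
def lr_after_reductions(initial_lr, factor, n_reductions, min_lr=0.0):
    """Learning rate after a given number of plateau-triggered reductions."""
    lr = initial_lr
    for _ in range(n_reductions):
        lr = max(lr * factor, min_lr)
    return lr

# Settings from the code above: Adam at 0.001, factor 0.9, min_lr 0
for n in range(4):
    print(n, lr_after_reductions(0.001, 0.9, n))
```

With patience=10 and only 5 training epochs (CNN(5)), the plateau counter cannot reach 10, so the learning rate stays at 0.001 for the whole run. The scheduler only starts to matter in longer runs.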

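4. Summary

Compared with the baseline code, the new code introduces two main changes:

- Gaussian kernel function and custom convolution layer: the similarity between each input window and the convolution kernel is computed with a Gaussian kernel, which is intended to give the model more flexibility and a stronger nonlinear feature-extraction capability than a plain convolution.

- Custom callback: it prints the training progress at the end of every epoch, giving more detailed training information and making it easier to monitor and adjust the model during training.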