Installing the GPU Build of tensorflow 2.15.0+nv24.05 on Jetson Orin Nano v6.0

- 1. Background

- 2. Steps

- 2.1 Step 1: System installation

- 2.2 Step 2: Installing nvidia-jetpack

- 2.3 Step 3: Installing jtop

- 2.4 Step 4: Installing h5py

- 2.5 Step 5: Installing tensorflow

- 2.6 Step 6: Installing jupyterlab

- 3. Testing

- 4. References

- 5. Supplement

- 5.1 Installing tensorflow==2.15.0+nv24.05 directly - "Failed to build h5py"

- 5.2 Installing h5py directly - "Failed to build h5py"

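1. Background

- On Jetson Orin Nano, Linux 36.2 (6.0 DP) has a bug in its tensorflow support that causes abnormal behavior in some scenarios. 6.0 DP is a Developer Preview release and is not recommended. For details, see: Jammy@Jetson Orin Nano - Installing the Tensorflow GPU Build

- NVIDIA's support for third-party libraries such as tensorflow is not very strong, possibly because of internal business priorities and limited R&D investment. The released builds still have many installation problems.

Despite these resourcing problems, NVIDIA does allocate some resources and validation effort to open source, which shows how much this popular area matters.

For this reason, we have put together this guide to provide a working procedure for installing the GPU build of tensorflow 2.15.0+nv24.05 on Jetson Orin Nano v6.0.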

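2. Steps

2.1 Step 1: System installation

For details, see:

- Linux 36.3@Jetson Orin Nano: System Installation

- Linux 36.2@Jetson Orin Nano: Setting Up the Base Environment

2.2 Step 2: Installing nvidia-jetpack

Note: nvidia-jetpack is not installed by default.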

$ sudo apt update

$ sudo apt install nvidia-jetpack
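
To confirm that the package is actually present after the apt command finishes, the package database can be queried. The following is a minimal sketch, assuming a Debian/Ubuntu system where dpkg-query is available; the helper name installed_deb_version is hypothetical and only used here for illustration.

```python
import shutil
import subprocess


def installed_deb_version(package: str):
    """Return the installed version of a Debian package, or None if unknown."""
    if shutil.which("dpkg-query") is None:
        return None  # not a Debian/Ubuntu system, nothing to query
    result = subprocess.run(
        ["dpkg-query", "--show", "--showformat=${Version}", package],
        capture_output=True,
        text=True,
        check=False,
    )
    version = result.stdout.strip()
    # A non-zero exit code or an empty version means the package is not installed.
    return version if result.returncode == 0 and version else None


if __name__ == "__main__":
    print("nvidia-jetpack:", installed_deb_version("nvidia-jetpack"))
```

On a board where Step 2 succeeded this should print a version string; None suggests the meta-package is missing.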

2.3 Step 3: Installing jtop

jtop is used to check the status of the nvidia-jetpack installation.

$ sudo apt update

$ sudo apt install python3-pip

$ sudo pip3 install -U jetson-stats

$ sudo systemctl restart jtop.service
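
Besides the interactive jtop screen, the jetson-stats package also exposes a Python interface. The following is a minimal sketch, assuming the jtop service is running; the helper name read_jetson_stats is hypothetical, and the keys of the returned dictionary depend on the jetson-stats version.

```python
def read_jetson_stats():
    """Return one snapshot of jtop statistics as a dict, or None if unavailable."""
    try:
        from jtop import jtop  # provided by the jetson-stats package
    except ImportError:
        return None  # jetson-stats is not installed
    try:
        with jtop() as jetson:
            # ok() waits for the next update from the jtop service.
            if jetson.ok():
                return dict(jetson.stats)
    except Exception:
        # Typically the jtop service is not running or not reachable.
        return None
    return None


if __name__ == "__main__":
    print(read_jetson_stats())
```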

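2.4 Step 4: Installing h5py

Note: This step is very important. If it is skipped, the h5py build failure described in the Supplement section occurs. The fix was already known on the Jetson Nano, but the problem still exists on the Jetson Orin Nano: Failed to build wheel for h5py , in JETSON NANO.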

$ sudo apt-get install python3-pip

$ sudo apt-get install libhdf5-serial-dev hdf5-tools libhdf5-dev

$ sudo pip3 install cython

$ sudo pip3 install h5py

Collecting h5py

Using cached h5py-3.11.0.tar.gz (406 kB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Installing backend dependencies ... done

Preparing metadata (pyproject.toml) ... done

Requirement already satisfied: numpy>=1.17.3 in /usr/lib/python3/dist-packages (from h5py) (1.21.5)

Building wheels for collected packages: h5py

Building wheel for h5py (pyproject.toml) ... - done

Created wheel for h5py: filename=h5py-3.11.0-cp310-cp310-linux_aarch64.whl size=6906150 sha256=e91885c8ae20d8207e79bd0aee4f794338ba8df1bd4634a8d41926c2f230697e

Stored in directory: /root/.cache/pip/wheels/54/6c/66/4f9de317fb7a5505a348881fc3666b289fde493612707458a3

Successfully built h5py

Installing collected packages: h5py

Successfully installed h5py-3.11.0

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv
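
The build failure this step avoids comes down to the HDF5 shared library not being found (libhdf5.so: cannot open shared object file). A rough pre-check can be done before running pip. The following is a minimal sketch, not the logic h5py itself uses: it only asks the system loader whether some HDF5 library is visible. The helper name find_hdf5 is hypothetical, and the hdf5_serial name is an assumption based on how Debian/Ubuntu name the serial build.

```python
import ctypes.util


def find_hdf5():
    """Return the file name of an HDF5 shared library the loader can see, or None."""
    # Debian/Ubuntu package the serial build as libhdf5_serial, so try both names.
    for name in ("hdf5", "hdf5_serial"):
        found = ctypes.util.find_library(name)
        if found:
            return found
    return None


if __name__ == "__main__":
    library = find_hdf5()
    if library:
        print("HDF5 library found:", library)
    else:
        print("HDF5 library not found; install libhdf5-dev before building h5py")
```

This is only a heuristic: a positive result does not guarantee the h5py build succeeds, but a negative one is a strong hint that the apt-get line above has not been run yet.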

2.5 Step 5: Installing tensorflow

The installation reports quite a few issues, but the key point is the first one:

- tensorflow-2.15.0+nv24.5 was installed successfully

Successfully installed MarkupSafe-2.1.5 absl-py-2.1.0 astunparse-1.6.3 cachetools-5.3.3 flatbuffers-24.3.25 gast-0.5.4 google-auth-2.29.0 google-auth-oauthlib-1.2.0 google-pasta-0.2.0 grpcio-1.64.0 keras-2.15.0 libclang-18.1.1 ml-dtypes-0.2.0 numpy-1.26.4 opt-einsum-3.3.0 protobuf-4.25.3 pyasn1-0.6.0 pyasn1-modules-0.4.0 requests-oauthlib-2.0.0 rsa-4.9 tensorboard-2.15.2 tensorboard-data-server-0.7.2 tensorflow-2.15.0+nv24.5 tensorflow-estimator-2.15.0 tensorflow-io-gcs-filesystem-0.37.0 termcolor-2.4.0 werkzeug-3.0.3 wrapt-1.14.1

- pip's dependency information may be inconsistent

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

onnx-graphsurgeon 0.3.12 requires onnx, which is not installed.

- A friendly reminder about installing with sudo

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

$ sudo pip3 install --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v60 tensorflow==2.15.0+nv24.05

Looking in indexes: https://pypi.org/simple, https://developer.download.nvidia.com/compute/redist/jp/v60

Collecting tensorflow==2.15.0+nv24.05

Using cached https://developer.download.nvidia.cn/compute/redist/jp/v60/tensorflow/tensorflow-2.15.0%2Bnv24.05-cp310-cp310-linux_aarch64.whl (465.5 MB)

Collecting absl-py>=1.0.0 (from tensorflow==2.15.0+nv24.05)

Using cached absl_py-2.1.0-py3-none-any.whl.metadata (2.3 kB)

Collecting astunparse>=1.6.0 (from tensorflow==2.15.0+nv24.05)

Using cached astunparse-1.6.3-py2.py3-none-any.whl.metadata (4.4 kB)

Collecting flatbuffers>=23.5.26 (from tensorflow==2.15.0+nv24.05)

Using cached flatbuffers-24.3.25-py2.py3-none-any.whl.metadata (850 bytes)

Collecting gast!=0.5.0,!=0.5.1,!=0.5.2,>=0.2.1 (from tensorflow==2.15.0+nv24.05)

Using cached gast-0.5.4-py3-none-any.whl.metadata (1.3 kB)

Collecting google-pasta>=0.1.1 (from tensorflow==2.15.0+nv24.05)

Using cached google_pasta-0.2.0-py3-none-any.whl.metadata (814 bytes)

Requirement already satisfied: h5py>=2.9.0 in /usr/local/lib/python3.10/dist-packages (from tensorflow==2.15.0+nv24.05) (3.11.0)

Collecting libclang>=13.0.0 (from tensorflow==2.15.0+nv24.05)

Using cached libclang-18.1.1-py2.py3-none-manylinux2014_aarch64.whl.metadata (5.2 kB)

Collecting ml-dtypes~=0.2.0 (from tensorflow==2.15.0+nv24.05)

Using cached ml_dtypes-0.2.0-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl.metadata (20 kB)

Collecting numpy<2.0.0,>=1.23.5 (from tensorflow==2.15.0+nv24.05)

Using cached numpy-1.26.4-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl.metadata (62 kB)

Collecting opt-einsum>=2.3.2 (from tensorflow==2.15.0+nv24.05)

Using cached opt_einsum-3.3.0-py3-none-any.whl.metadata (6.5 kB)

Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from tensorflow==2.15.0+nv24.05) (24.0)

Collecting protobuf!=4.21.0,!=4.21.1,!=4.21.2,!=4.21.3,!=4.21.4,!=4.21.5,<5.0.0dev,>=3.20.3 (from tensorflow==2.15.0+nv24.05)

Using cached protobuf-4.25.3-cp37-abi3-manylinux2014_aarch64.whl.metadata (541 bytes)

Requirement already satisfied: setuptools in /usr/local/lib/python3.10/dist-packages (from tensorflow==2.15.0+nv24.05) (70.0.0)

Requirement already satisfied: six>=1.12.0 in /usr/lib/python3/dist-packages (from tensorflow==2.15.0+nv24.05) (1.16.0)

Collecting termcolor>=1.1.0 (from tensorflow==2.15.0+nv24.05)

Using cached termcolor-2.4.0-py3-none-any.whl.metadata (6.1 kB)

Requirement already satisfied: typing-extensions>=3.6.6 in /usr/local/lib/python3.10/dist-packages (from tensorflow==2.15.0+nv24.05) (4.12.0)

Collecting wrapt<1.15,>=1.11.0 (from tensorflow==2.15.0+nv24.05)

Using cached wrapt-1.14.1-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl.metadata (6.7 kB)

Collecting tensorflow-io-gcs-filesystem>=0.23.1 (from tensorflow==2.15.0+nv24.05)

Using cached tensorflow_io_gcs_filesystem-0.37.0-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl.metadata (14 kB)

Collecting grpcio<2.0,>=1.24.3 (from tensorflow==2.15.0+nv24.05)

Using cached grpcio-1.64.0-cp310-cp310-manylinux_2_17_aarch64.whl.metadata (3.3 kB)

Collecting tensorboard<2.16,>=2.15 (from tensorflow==2.15.0+nv24.05)

Using cached tensorboard-2.15.2-py3-none-any.whl.metadata (1.7 kB)

Collecting tensorflow-estimator<2.16,>=2.15.0 (from tensorflow==2.15.0+nv24.05)

Using cached tensorflow_estimator-2.15.0-py2.py3-none-any.whl.metadata (1.3 kB)

Collecting keras<2.16,>=2.15.0 (from tensorflow==2.15.0+nv24.05)

Using cached keras-2.15.0-py3-none-any.whl.metadata (2.4 kB)

Requirement already satisfied: wheel<1.0,>=0.23.0 in /usr/local/lib/python3.10/dist-packages (from astunparse>=1.6.0->tensorflow==2.15.0+nv24.05) (0.43.0)

Collecting google-auth<3,>=1.6.3 (from tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05)

Using cached google_auth-2.29.0-py2.py3-none-any.whl.metadata (4.7 kB)

Collecting google-auth-oauthlib<2,>=0.5 (from tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05)

Using cached google_auth_oauthlib-1.2.0-py2.py3-none-any.whl.metadata (2.7 kB)

Requirement already satisfied: markdown>=2.6.8 in /usr/lib/python3/dist-packages (from tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (3.3.6)

Requirement already satisfied: requests<3,>=2.21.0 in /usr/local/lib/python3.10/dist-packages (from tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (2.32.2)

Collecting tensorboard-data-server<0.8.0,>=0.7.0 (from tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05)

Using cached tensorboard_data_server-0.7.2-py3-none-any.whl.metadata (1.1 kB)

Collecting werkzeug>=1.0.1 (from tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05)

Using cached werkzeug-3.0.3-py3-none-any.whl.metadata (3.7 kB)

Collecting cachetools<6.0,>=2.0.0 (from google-auth<3,>=1.6.3->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05)

Using cached cachetools-5.3.3-py3-none-any.whl.metadata (5.3 kB)

Collecting pyasn1-modules>=0.2.1 (from google-auth<3,>=1.6.3->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05)

Using cached pyasn1_modules-0.4.0-py3-none-any.whl.metadata (3.4 kB)

Collecting rsa<5,>=3.1.4 (from google-auth<3,>=1.6.3->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05)

Using cached rsa-4.9-py3-none-any.whl.metadata (4.2 kB)

Collecting requests-oauthlib>=0.7.0 (from google-auth-oauthlib<2,>=0.5->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05)

Using cached requests_oauthlib-2.0.0-py2.py3-none-any.whl.metadata (11 kB)

Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests<3,>=2.21.0->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (3.3.2)

Requirement already satisfied: idna<4,>=2.5 in /usr/lib/python3/dist-packages (from requests<3,>=2.21.0->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (3.3)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/lib/python3/dist-packages (from requests<3,>=2.21.0->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (1.26.5)

Requirement already satisfied: certifi>=2017.4.17 in /usr/lib/python3/dist-packages (from requests<3,>=2.21.0->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (2020.6.20)

Collecting MarkupSafe>=2.1.1 (from werkzeug>=1.0.1->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05)

Using cached MarkupSafe-2.1.5-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl.metadata (3.0 kB)

Collecting pyasn1<0.7.0,>=0.4.6 (from pyasn1-modules>=0.2.1->google-auth<3,>=1.6.3->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05)

Using cached pyasn1-0.6.0-py2.py3-none-any.whl.metadata (8.3 kB)

Requirement already satisfied: oauthlib>=3.0.0 in /usr/lib/python3/dist-packages (from requests-oauthlib>=0.7.0->google-auth-oauthlib<2,>=0.5->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (3.2.0)

Using cached absl_py-2.1.0-py3-none-any.whl (133 kB)

Using cached astunparse-1.6.3-py2.py3-none-any.whl (12 kB)

Using cached flatbuffers-24.3.25-py2.py3-none-any.whl (26 kB)

Using cached gast-0.5.4-py3-none-any.whl (19 kB)

Using cached google_pasta-0.2.0-py3-none-any.whl (57 kB)

Using cached grpcio-1.64.0-cp310-cp310-manylinux_2_17_aarch64.whl (5.4 MB)

Using cached keras-2.15.0-py3-none-any.whl (1.7 MB)

Using cached libclang-18.1.1-py2.py3-none-manylinux2014_aarch64.whl (23.8 MB)

Using cached ml_dtypes-0.2.0-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (1.0 MB)

Using cached numpy-1.26.4-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (14.2 MB)

Using cached opt_einsum-3.3.0-py3-none-any.whl (65 kB)

Using cached protobuf-4.25.3-cp37-abi3-manylinux2014_aarch64.whl (293 kB)

Using cached tensorboard-2.15.2-py3-none-any.whl (5.5 MB)

Using cached tensorflow_estimator-2.15.0-py2.py3-none-any.whl (441 kB)

Using cached tensorflow_io_gcs_filesystem-0.37.0-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (4.8 MB)

Using cached termcolor-2.4.0-py3-none-any.whl (7.7 kB)

Using cached wrapt-1.14.1-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (78 kB)

Using cached google_auth-2.29.0-py2.py3-none-any.whl (189 kB)

Using cached google_auth_oauthlib-1.2.0-py2.py3-none-any.whl (24 kB)

Using cached tensorboard_data_server-0.7.2-py3-none-any.whl (2.4 kB)

Using cached werkzeug-3.0.3-py3-none-any.whl (227 kB)

Using cached cachetools-5.3.3-py3-none-any.whl (9.3 kB)

Using cached MarkupSafe-2.1.5-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (26 kB)

Using cached pyasn1_modules-0.4.0-py3-none-any.whl (181 kB)

Using cached requests_oauthlib-2.0.0-py2.py3-none-any.whl (24 kB)

Using cached rsa-4.9-py3-none-any.whl (34 kB)

Using cached pyasn1-0.6.0-py2.py3-none-any.whl (85 kB)

Installing collected packages: libclang, flatbuffers, wrapt, termcolor, tensorflow-io-gcs-filesystem, tensorflow-estimator, tensorboard-data-server, pyasn1, protobuf, numpy, MarkupSafe, keras, grpcio, google-pasta, gast, cachetools, astunparse, absl-py, werkzeug, rsa, requests-oauthlib, pyasn1-modules, opt-einsum, ml-dtypes, google-auth, google-auth-oauthlib, tensorboard, tensorflow

Attempting uninstall: protobuf

Found existing installation: protobuf 3.12.4

Uninstalling protobuf-3.12.4:

Successfully uninstalled protobuf-3.12.4

Attempting uninstall: numpy

Found existing installation: numpy 1.21.5

Uninstalling numpy-1.21.5:

Successfully uninstalled numpy-1.21.5

Attempting uninstall: MarkupSafe

Found existing installation: MarkupSafe 2.0.1

Uninstalling MarkupSafe-2.0.1:

Successfully uninstalled MarkupSafe-2.0.1

Attempting uninstall: gast

Found existing installation: gast 0.5.2

Uninstalling gast-0.5.2:

Successfully uninstalled gast-0.5.2

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

onnx-graphsurgeon 0.3.12 requires onnx, which is not installed.

Successfully installed MarkupSafe-2.1.5 absl-py-2.1.0 astunparse-1.6.3 cachetools-5.3.3 flatbuffers-24.3.25 gast-0.5.4 google-auth-2.29.0 google-auth-oauthlib-1.2.0 google-pasta-0.2.0 grpcio-1.64.0 keras-2.15.0 libclang-18.1.1 ml-dtypes-0.2.0 numpy-1.26.4 opt-einsum-3.3.0 protobuf-4.25.3 pyasn1-0.6.0 pyasn1-modules-0.4.0 requests-oauthlib-2.0.0 rsa-4.9 tensorboard-2.15.2 tensorboard-data-server-0.7.2 tensorflow-2.15.0+nv24.5 tensorflow-estimator-2.15.0 tensorflow-io-gcs-filesystem-0.37.0 termcolor-2.4.0 werkzeug-3.0.3 wrapt-1.14.1

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv
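
Note that the command requests 2.15.0+nv24.05 while pip reports tensorflow-2.15.0+nv24.5. This is most likely just version normalization: under PEP 440, purely numeric parts of the local version label (the text after the +) are treated as integers, so 05 is displayed as 5. The sketch below mimics that rule with the standard library only. normalize_local_version and installed_version are hypothetical helper names, not part of pip.

```python
import re
from importlib import metadata


def normalize_local_version(version: str) -> str:
    """Normalize the local label (after '+') roughly as PEP 440 tools display it."""
    public, sep, local = version.partition("+")
    if not sep:
        return version  # no local label, nothing to normalize
    parts = re.split(r"[._-]", local.lower())
    # Numeric parts lose leading zeros; alphanumeric parts are kept as text.
    normalized = [str(int(part)) if part.isdigit() else part for part in parts]
    return public + "+" + ".".join(normalized)


def installed_version(package: str):
    """Return the installed version of a Python package, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    requested = "2.15.0+nv24.05"
    print("requested :", requested)
    print("normalized:", normalize_local_version(requested))
    print("installed :", installed_version("tensorflow"))
```

If the installed value matches the normalized one, the requested wheel is the one that was installed.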

2.6 Step 6: Installing jupyterlab

$ sudo pip3 install jupyterlab

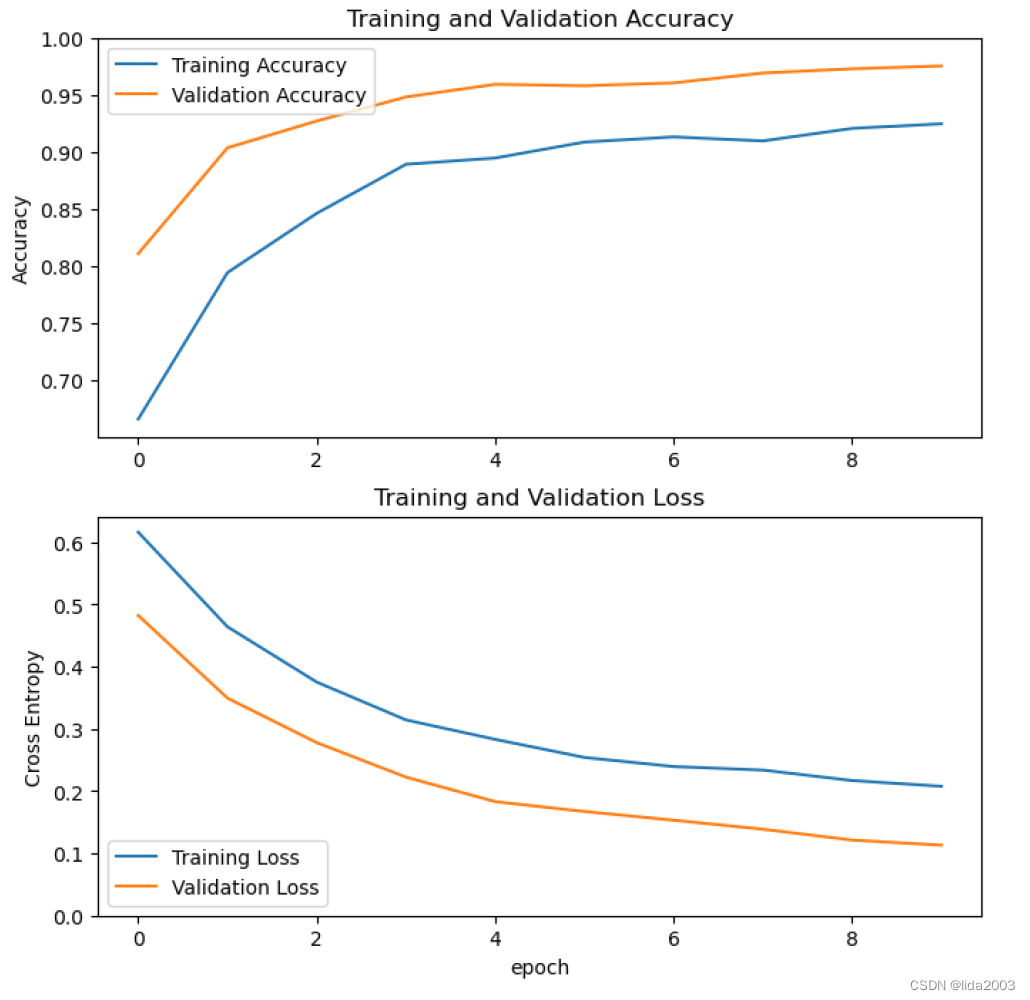

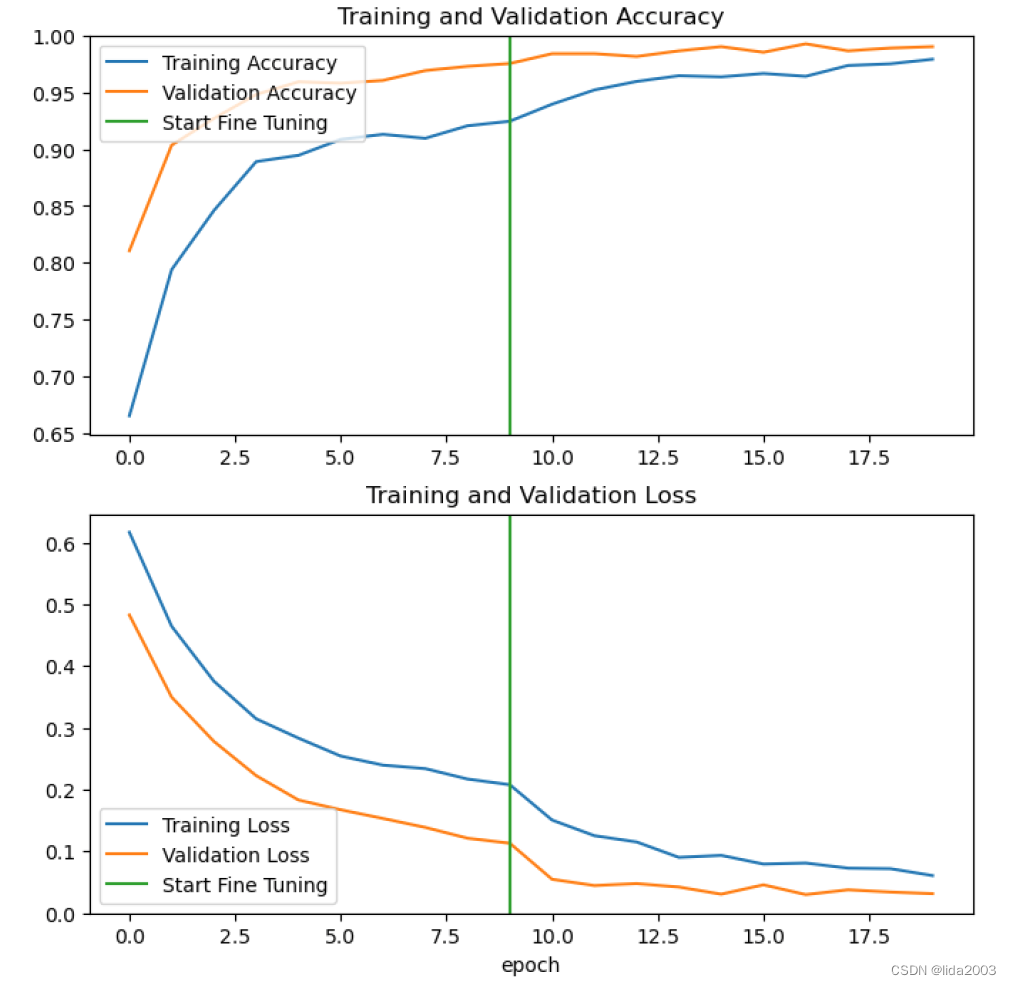

3. Testing

The v6.0 DP release has the issue "Inconsistency of NVIDIA 2.15.0+nv24.03 v.s. Colab v.s. Tensorflow Documentation".

Verification shows that Jetson Orin Nano v6.0 with the GPU build of tensorflow 2.15.0+nv24.05 does not have this issue.
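
Independently of that specific issue, a generic sanity check is to confirm that tensorflow imports and can see the GPU. The following is a minimal sketch, not the verification used for this article; tensorflow_gpu_report is a hypothetical helper name, and it returns None on machines where tensorflow is not installed.

```python
def tensorflow_gpu_report():
    """Return basic facts about the tensorflow install, or None if it is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    gpus = tf.config.list_physical_devices("GPU")
    return {
        "version": tf.__version__,
        "built_with_cuda": tf.test.is_built_with_cuda(),
        "gpus": [gpu.name for gpu in gpus],
    }


report = tensorflow_gpu_report()
print(report if report else "tensorflow is not installed in this environment")
```

On a correctly installed board the gpus list should contain at least one entry; an empty list means tensorflow is running on the CPU only.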

4. References

[1] Linux 36.2@Jetson Orin Nano: Hello AI World!

[2] ubuntu22.04@Jetson Orin Nano: Installing OpenCV

[3] ubuntu22.04@Jetson Orin Nano: Installing CSI IMX219

[4] ubuntu22.04@Jetson Orin Nano: Installing and Configuring a VNC Server

5. Supplement

5.1 Installing tensorflow==2.15.0+nv24.05 directly - "Failed to build h5py"

$ sudo pip3 install --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v60 tensorflow==2.15.0+nv24.05

[sudo] password for daniel:

Looking in indexes: https://pypi.org/simple, https://developer.download.nvidia.com/compute/redist/jp/v60

Collecting tensorflow==2.15.0+nv24.05

Downloading https://developer.download.nvidia.cn/compute/redist/jp/v60/tensorflow/tensorflow-2.15.0%2Bnv24.05-cp310-cp310-linux_aarch64.whl (465.5 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 465.5/465.5 MB 2.0 MB/s eta 0:00:00

Collecting wrapt<1.15,>=1.11.0

Using cached wrapt-1.14.1-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (78 kB)

Collecting absl-py>=1.0.0

Using cached absl_py-2.1.0-py3-none-any.whl (133 kB)

Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from tensorflow==2.15.0+nv24.05) (24.0)

Requirement already satisfied: setuptools in /usr/lib/python3/dist-packages (from tensorflow==2.15.0+nv24.05) (59.6.0)

Collecting protobuf!=4.21.0,!=4.21.1,!=4.21.2,!=4.21.3,!=4.21.4,!=4.21.5,<5.0.0dev,>=3.20.3

Using cached protobuf-4.25.3-cp37-abi3-manylinux2014_aarch64.whl (293 kB)

Collecting gast!=0.5.0,!=0.5.1,!=0.5.2,>=0.2.1

Using cached gast-0.5.4-py3-none-any.whl (19 kB)

Collecting grpcio<2.0,>=1.24.3

Using cached grpcio-1.64.0-cp310-cp310-manylinux_2_17_aarch64.whl (5.4 MB)

Collecting google-pasta>=0.1.1

Using cached google_pasta-0.2.0-py3-none-any.whl (57 kB)

Collecting astunparse>=1.6.0

Using cached astunparse-1.6.3-py2.py3-none-any.whl (12 kB)

Collecting opt-einsum>=2.3.2

Using cached opt_einsum-3.3.0-py3-none-any.whl (65 kB)

Collecting keras<2.16,>=2.15.0

Using cached keras-2.15.0-py3-none-any.whl (1.7 MB)

Collecting tensorflow-estimator<2.16,>=2.15.0

Using cached tensorflow_estimator-2.15.0-py2.py3-none-any.whl (441 kB)

Collecting numpy<2.0.0,>=1.23.5

Using cached numpy-1.26.4-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (14.2 MB)

Collecting flatbuffers>=23.5.26

Using cached flatbuffers-24.3.25-py2.py3-none-any.whl (26 kB)

Requirement already satisfied: six>=1.12.0 in /usr/lib/python3/dist-packages (from tensorflow==2.15.0+nv24.05) (1.16.0)

Collecting libclang>=13.0.0

Using cached libclang-18.1.1-py2.py3-none-manylinux2014_aarch64.whl (23.8 MB)

Collecting ml-dtypes~=0.2.0

Using cached ml_dtypes-0.2.0-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (1.0 MB)

Collecting termcolor>=1.1.0

Using cached termcolor-2.4.0-py3-none-any.whl (7.7 kB)

Collecting tensorboard<2.16,>=2.15

Using cached tensorboard-2.15.2-py3-none-any.whl (5.5 MB)

Collecting h5py>=2.9.0

Using cached h5py-3.11.0.tar.gz (406 kB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Installing backend dependencies ... done

Preparing metadata (pyproject.toml) ... done

Collecting tensorflow-io-gcs-filesystem>=0.23.1

Using cached tensorflow_io_gcs_filesystem-0.37.0-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (4.8 MB)

Requirement already satisfied: typing-extensions>=3.6.6 in /usr/local/lib/python3.10/dist-packages (from tensorflow==2.15.0+nv24.05) (4.12.0)

Requirement already satisfied: wheel<1.0,>=0.23.0 in /usr/lib/python3/dist-packages (from astunparse>=1.6.0->tensorflow==2.15.0+nv24.05) (0.37.1)

Requirement already satisfied: markdown>=2.6.8 in /usr/lib/python3/dist-packages (from tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (3.3.6)

Requirement already satisfied: requests<3,>=2.21.0 in /usr/local/lib/python3.10/dist-packages (from tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (2.32.2)

Collecting google-auth-oauthlib<2,>=0.5

Using cached google_auth_oauthlib-1.2.0-py2.py3-none-any.whl (24 kB)

Collecting werkzeug>=1.0.1

Using cached werkzeug-3.0.3-py3-none-any.whl (227 kB)

Collecting tensorboard-data-server<0.8.0,>=0.7.0

Using cached tensorboard_data_server-0.7.2-py3-none-any.whl (2.4 kB)

Collecting google-auth<3,>=1.6.3

Using cached google_auth-2.29.0-py2.py3-none-any.whl (189 kB)

Collecting rsa<5,>=3.1.4

Using cached rsa-4.9-py3-none-any.whl (34 kB)

Collecting pyasn1-modules>=0.2.1

Using cached pyasn1_modules-0.4.0-py3-none-any.whl (181 kB)

Collecting cachetools<6.0,>=2.0.0

Using cached cachetools-5.3.3-py3-none-any.whl (9.3 kB)

Collecting requests-oauthlib>=0.7.0

Using cached requests_oauthlib-2.0.0-py2.py3-none-any.whl (24 kB)

Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests<3,>=2.21.0->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (3.3.2)

Requirement already satisfied: idna<4,>=2.5 in /usr/lib/python3/dist-packages (from requests<3,>=2.21.0->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (3.3)

Requirement already satisfied: certifi>=2017.4.17 in /usr/lib/python3/dist-packages (from requests<3,>=2.21.0->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (2020.6.20)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/lib/python3/dist-packages (from requests<3,>=2.21.0->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (1.26.5)

Collecting MarkupSafe>=2.1.1

Using cached MarkupSafe-2.1.5-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (26 kB)

Collecting pyasn1<0.7.0,>=0.4.6

Using cached pyasn1-0.6.0-py2.py3-none-any.whl (85 kB)

Requirement already satisfied: oauthlib>=3.0.0 in /usr/lib/python3/dist-packages (from requests-oauthlib>=0.7.0->google-auth-oauthlib<2,>=0.5->tensorboard<2.16,>=2.15->tensorflow==2.15.0+nv24.05) (3.2.0)

Building wheels for collected packages: h5py

Building wheel for h5py (pyproject.toml) ... error

error: subprocess-exited-with-error

× Building wheel for h5py (pyproject.toml) did not run successfully.

│ exit code: 1

╰─> [7 lines of output]

running bdist_wheel

running build

running build_ext

Loading library to get build settings and version: libhdf5.so

error: Unable to load dependency HDF5, make sure HDF5 is installed properly

Library dirs checked: []

error: libhdf5.so: cannot open shared object file: No such file or directory

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

ERROR: Failed building wheel for h5py

Failed to build h5py

ERROR: Could not build wheels for h5py, which is required to install pyproject.toml-based projects

5.2 Installing h5py directly - "Failed to build h5py"

$ sudo pip3 install h5py

Collecting h5py

Using cached h5py-3.11.0.tar.gz (406 kB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Installing backend dependencies ... done

Preparing metadata (pyproject.toml) ... done

Requirement already satisfied: numpy>=1.17.3 in /usr/lib/python3/dist-packages (from h5py) (1.21.5)

Building wheels for collected packages: h5py

Building wheel for h5py (pyproject.toml) ... error

error: subprocess-exited-with-error

× Building wheel for h5py (pyproject.toml) did not run successfully.

│ exit code: 1

╰─> [75 lines of output]

running bdist_wheel

running build

running build_py

creating build

creating build/lib.linux-aarch64-cpython-310

creating build/lib.linux-aarch64-cpython-310/h5py

copying h5py/ipy_completer.py -> build/lib.linux-aarch64-cpython-310/h5py

copying h5py/__init__.py -> build/lib.linux-aarch64-cpython-310/h5py

copying h5py/h5py_warnings.py -> build/lib.linux-aarch64-cpython-310/h5py

copying h5py/version.py -> build/lib.linux-aarch64-cpython-310/h5py

creating build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/files.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/group.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/selections.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/compat.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/datatype.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/__init__.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/filters.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/attrs.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/dataset.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/vds.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/dims.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/base.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

copying h5py/_hl/selections2.py -> build/lib.linux-aarch64-cpython-310/h5py/_hl

creating build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_h5d_direct_chunk.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_objects.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_group.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_file2.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_h5o.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/conftest.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_dimension_scales.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_dataset_swmr.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_attrs_data.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_selections.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_file.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_h5.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_h5z.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_completions.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_dtype.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_dataset_getitem.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_base.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/__init__.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_filters.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_attrs.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_h5pl.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/common.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_h5t.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_dataset.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_h5f.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_file_image.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_ros3.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_errors.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_datatype.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_h5p.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_attribute_create.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_slicing.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_big_endian_file.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_file_alignment.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

copying h5py/tests/test_dims_dimensionproxy.py -> build/lib.linux-aarch64-cpython-310/h5py/tests

creating build/lib.linux-aarch64-cpython-310/h5py/tests/data_files

copying h5py/tests/data_files/__init__.py -> build/lib.linux-aarch64-cpython-310/h5py/tests/data_files

creating build/lib.linux-aarch64-cpython-310/h5py/tests/test_vds

copying h5py/tests/test_vds/test_virtual_source.py -> build/lib.linux-aarch64-cpython-310/h5py/tests/test_vds

copying h5py/tests/test_vds/test_lowlevel_vds.py -> build/lib.linux-aarch64-cpython-310/h5py/tests/test_vds

copying h5py/tests/test_vds/__init__.py -> build/lib.linux-aarch64-cpython-310/h5py/tests/test_vds

copying h5py/tests/test_vds/test_highlevel_vds.py -> build/lib.linux-aarch64-cpython-310/h5py/tests/test_vds

copying h5py/tests/data_files/vlen_string_dset_utc.h5 -> build/lib.linux-aarch64-cpython-310/h5py/tests/data_files

copying h5py/tests/data_files/vlen_string_s390x.h5 -> build/lib.linux-aarch64-cpython-310/h5py/tests/data_files

copying h5py/tests/data_files/vlen_string_dset.h5 -> build/lib.linux-aarch64-cpython-310/h5py/tests/data_files

running build_ext

Loading library to get build settings and version: libhdf5.so

error: Unable to load dependency HDF5, make sure HDF5 is installed properly

Library dirs checked: []

error: libhdf5.so: cannot open shared object file: No such file or directory

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

ERROR: Failed building wheel for h5py

Failed to build h5py

ERROR: Could not build wheels for h5py, which is required to install pyproject.toml-based projects