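The Kubernetes clusters we use in development or test environments are usually provided by a cloud vendor or built quickly on machines with Internet access. In a customer's production environment, however, servers are often not allowed to connect to external networks for security reasons, so the deployment has to be completed offline.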

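1. Preface

1.1 Server configuration

| Hostname | IP | Specs | Description |
| --- | --- | --- | --- |
| k8s-master | 192.168.8.190 | 4 cores, 8 GB RAM, 50 GB system disk | Master node |
| k8s-node1 | 192.168.8.191 | 4 cores, 8 GB RAM, 50 GB system disk | Worker node 1 |
| k8s-node2 | 192.168.8.192 | 4 cores, 8 GB RAM, 50 GB system disk | Worker node 2 |
| hunkchou | 192.168.126.100 | 2 cores, 2 GB RAM | Local VM used to download the offline packages |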

1.2 Environment and software versions

- Operating system: CentOS 7.8 x86_64

- docker: 24.0.7

- kubeadm, kubectl, kubelet: v1.26.9

- kubernetes: v1.26.9

- flannel: v0.24.0

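1.3 Downloading the offline packages and images

Prepare a machine with Internet access in advance; during the installation it is used to download the dependency packages, configuration files and images. To save you the trouble, all files downloaded on the networked machine for this deployment have been uploaded to a network drive; see "K8s offline installation files".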

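2. Environment preparation (all nodes)

2.1 Configure the hostname

# Each node needs a unique name. The master node is used as the example below; set the other two nodes to k8s-node1 and k8s-node2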

hostnamectl set-hostname k8s-master

# Check the new hostname

hostname

# Add all nodes to /etc/hosts on every node; adjust the IP addresses to your own servers

cat >> /etc/hosts <<EOF

192.168.8.190 k8s-master

192.168.8.191 k8s-node1

192.168.8.192 k8s-node2

EOF

2.2 Disable the firewall

# Stop the firewall and disable it at boot

systemctl stop firewalld

systemctl disable firewalld

2.3 Disable the swap partition

# Disable temporarily

swapoff -a

# Disable permanently by deleting or commenting out the swap entries in /etc/fstab

sed -ri 's/.*swap.*/#&/' /etc/fstab
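# Note: the sed expression above prefixes every line that contains "swap" with #,
# including lines that are already comments, so running it twice adds a second #.
# The following is a minimal, more defensive sketch, assuming the usual fstab layout
# in which the third column is the filesystem type. The function name is illustrative.

```shell
# Comment out active swap entries in an fstab-style file.
# $1: path to the file (for example /etc/fstab)
comment_out_swap() {
    fstab="$1"
    # Keep a backup so the change can be reverted.
    cp -p "$fstab" "$fstab.bak"
    # Only touch lines that are not already comments and whose
    # filesystem-type field (3rd column) is "swap".
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$fstab.bak" > "$fstab"
}

# Typical use, as root:
#   comment_out_swap /etc/fstab
```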

# Reboot for the change to take effect

reboot

# Verify: run free -m and check that total, used and free in the Swap row are all 0

free -m

2.4 Disable SELinux

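# Disable temporarily, effective immediately (switches to permissive mode)

setenforce 0

# Disable permanently, effective after a reboot

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

# Check the status: Permissive right after setenforce 0, Disabled after the reboot

getenforce

2.5 Configure iptables rules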

iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat && iptables -P FORWARD ACCEPT

# Pass bridged IPv4 and IPv6 traffic to the iptables chains

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF
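# Note: the net.bridge.* keys written above only exist while the br_netfilter kernel
# module is loaded; without it, sysctl typically reports that the keys cannot be found.
# The sketch below persists the module across reboots. It assumes a systemd-based
# system that reads /etc/modules-load.d at boot; the function name and the file name
# k8s.conf are illustrative choices.

```shell
# Persist the br_netfilter module so the net.bridge.* sysctl keys exist
# after every boot.
# $1: target directory (defaults to /etc/modules-load.d)
persist_br_netfilter() {
    dir="${1:-/etc/modules-load.d}"
    mkdir -p "$dir"
    printf '%s\n' br_netfilter > "$dir/k8s.conf"
}

# Typical use on each node, as root:
#   persist_br_netfilter
#   modprobe br_netfilter        # load it now, without rebooting
#   lsmod | grep br_netfilter    # confirm the module is present
```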

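# Apply the sysctl settings

sysctl --system

2.6 Configure time synchronization

# ntpdate has to be installed from a pre-downloaded package (see 5.1); in an isolated network, replace time.windows.com with a reachable internal NTP server

yum install ntpdate -y

ntpdate time.windows.com

2.7 Upgrade the CentOS 7 kernel (optional)

The kernel shipped with CentOS 7.8 is 3.10.0-1127.el7.x86_64, which is relatively old. Other articles claim that some features of newer Kubernetes versions and components depend on a newer kernel; I have not verified this and cannot give specifics. This section is optional, but if you plan to install a recent Kubernetes version, upgrading is still recommended to avoid surprises later.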

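# Check the current kernel version

[root@k8s-master ~]# uname -a

Linux k8s-master 3.10.0-1127.el7.x86_64 #1 SMP Tue Mar 31 23:36:51 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

# List the kernel versions available for upgrade; usually only 3.10.x versions are shown, none of which is a real upgrade

[root@k8s-master ~]# yum list kernel --showduplicates

# Import the ELRepo public key. Download https://www.elrepo.org/RPM-GPG-KEY-elrepo.org on the networked machine in advance and upload it to /k8s/centos on every node

[root@k8s-master ~]# cd /k8s/centos

[root@k8s-master ~]# rpm --import RPM-GPG-KEY-elrepo.org

# Install ELRepo. Download https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpm on the networked machine in advance and upload it to /k8s/centos on every node

[root@k8s-master ~]# yum install elrepo-release-7.el7.elrepo.noarch.rpm

# List the kernels provided by ELRepo. kernel-lt is the long-term support kernel; kernel-ml is the current mainline kernel

[root@k8s-master ~]# yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

# Install the latest mainline kernel (this step needs access to the elrepo-kernel repository; on a fully offline node, download the kernel-ml RPM in advance and install it from the local file instead)

[root@k8s-master ~]# yum --enablerepo=elrepo-kernel install kernel-ml.x86_64

# List the kernels available to the boot loader and choose the boot entry

[root@k8s-master ~]# grub2-mkconfig -o /boot/grub2/grub.cfg

[root@k8s-master ~]# sudo awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

# Make the new kernel the default (entry 0 in the list printed above)

[root@k8s-master ~]# cp /etc/default/grub /etc/default/grub-bak

[root@k8s-master ~]# grub2-set-default 0

# Regenerate the GRUB configuration

[root@k8s-master ~]# grub2-mkconfig -o /boot/grub2/grub.cfg

# Refresh the yum cache and reboot into the new kernel

[root@k8s-master ~]# yum makecache

[root@k8s-master ~]# reboot

3. Install Docker (all nodes)

This guide installs docker-24.0.7. Offline installation of Docker is not covered here; search online or refer to the deployment manual in the shared download.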

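4. Install cri-dockerd (all nodes)

Kubernetes removed the built-in dockershim in v1.24, so the kubelet can no longer talk to Docker Engine directly. Since we are installing v1.26 with Docker as the container runtime, the cri-dockerd CRI adapter has to be installed manually.

4.1 Download the package

On the networked machine, download the required RPM package in advance:

# Download the cri-dockerd package online

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.1/cri-dockerd-0.3.1-3.el7.x86_64.rpm

4.2 Offline installation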

Upload the package to /k8s/docker on every node and install it with the following commands.

# Offline installation

cd /k8s/docker

rpm -ivh cri-dockerd-0.3.1-3.el7.x86_64.rpm

# Edit the ExecStart line in /usr/lib/systemd/system/cri-docker.service

vim /usr/lib/systemd/system/cri-docker.service

ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9
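# When the same edit has to be repeated on several nodes, it can be scripted instead of
# done in vim. The following is a sketch only: the function name is illustrative, and it
# assumes the unit file contains a single line starting with ExecStart=. The flags are
# the ones shown in the ExecStart line above.

```shell
# Rewrite the ExecStart line of a cri-docker unit file in place.
# $1: path to the unit file
# $2: pause image reference to pass via --pod-infra-container-image
set_cri_dockerd_execstart() {
    unit="$1"
    pause_image="$2"
    # Keep a backup of the original unit file.
    cp -p "$unit" "$unit.bak"
    sed -i "s|^ExecStart=.*|ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=${pause_image}|" "$unit"
}

# Typical use, as root:
#   set_cri_dockerd_execstart /usr/lib/systemd/system/cri-docker.service \
#       registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9
```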

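# Reload the systemd configuration and enable the service at boot

systemctl daemon-reload

systemctl enable --now cri-docker

5. Install the Kubernetes components

5.1 Download the packages

On the networked machine, download kubeadm, kubelet, kubectl and all of their dependencies in advance: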

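# Download the helper tools and their dependencies (netstat is provided by the net-tools package)

yum install --downloadonly --downloaddir=/k8s/kubernetes ntpdate wget httpd createrepo vim telnet net-tools lrzsz

# Add the Aliyun Kubernetes YUM repository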

cat > /etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=Kubernetes Repo

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

enabled=1

EOF

# List the kubeadm versions available for installation

yum list kubeadm --showduplicates

.....

kubeadm.x86_64 1.25.14-0 kubernetes

kubeadm.x86_64 1.26.0-0 kubernetes

kubeadm.x86_64 1.26.1-0 kubernetes

kubeadm.x86_64 1.26.2-0 kubernetes

kubeadm.x86_64 1.26.3-0 kubernetes

kubeadm.x86_64 1.26.4-0 kubernetes

kubeadm.x86_64 1.26.5-0 kubernetes

kubeadm.x86_64 1.26.6-0 kubernetes

kubeadm.x86_64 1.26.7-0 kubernetes

kubeadm.x86_64 1.26.8-0 kubernetes

kubeadm.x86_64 1.26.9-0 kubernetes

kubeadm.x86_64 1.27.0-0 kubernetes

.....

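# We choose version 1.26.9 and download it to /k8s/kubernetes

yum install --downloadonly --downloaddir=/k8s/kubernetes kubeadm-1.26.9 kubelet-1.26.9 kubectl-1.26.9

5.2 Install the components (all nodes)

Create the /k8s/kubernetes directory on every node and upload all packages downloaded in the previous step to it. Then install them with the following commands.

# Enter the package directory and install the Kubernetes components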

cd /k8s/kubernetes

yum install /k8s/kubernetes/*.rpm

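# Enable the kubelet at boot

sudo systemctl enable --now kubelet

# Point crictl at the cri-dockerd socket, the CRI endpoint used in this guide

crictl config runtime-endpoint unix:///var/run/cri-dockerd.sock

# Check the version

kubeadm version

6. Initialize the master control plane

6.1 Download the images

kubeadm init needs several images, which have to be pulled on the networked machine in advance.

# List the required images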

kubeadm config images list

W0106 14:58:49.628728 15989 version.go:105] falling back to the local client version: v1.26.9

registry.k8s.io/kube-apiserver:v1.26.9

registry.k8s.io/kube-controller-manager:v1.26.9

registry.k8s.io/kube-scheduler:v1.26.9

registry.k8s.io/kube-proxy:v1.26.9

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.6-0

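registry.k8s.io/coredns/coredns:v1.9.3

Normally even the networked machine cannot reach registry.k8s.io. As a workaround, we pull the images from a mirror that is reachable and retag them. On the networked machine, search with docker search; kube-apiserver is used as the example below, and the other images are handled the same way.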

[root@hunkchou ~]# docker search kube-apiserver

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

aiotceo/kube-apiserver end of support, please pull kubestation/kube… 20

mirrorgooglecontainers/kube-apiserver 18

kubesphere/kube-apiserver 7

kubeimage/kube-apiserver-amd64 k8s.gcr.io/kube-apiserver-amd64 5

empiregeneral/kube-apiserver-amd64 kube-apiserver-amd64 4 [OK]

docker/desktop-kubernetes-apiserver Mirror of selected tags from k8s.gcr.io/kube… 2

dyrnq/kube-apiserver registry.k8s.io/kube-apiserver 1

mirrorgcrio/kube-apiserver mirror of k8s.gcr.io/kube-apiserver:v1.23.6 1

v5cn/kube-apiserver 1

opsdockerimage/kube-apiserver 1

rancher/kube-apiserver 0

cjk2atmb/kube-apiserver 0

boy530/kube-apiserver 0

forging2012/kube-apiserver 0

giantswarm/kube-apiserver 0

kubestation/kube-apiserver registry.k8s.io/kube-apiserver 0

mesosphere/kube-apiserver-amd64 0

mesosphere/kube-apiserver 0

ggangelo/kube-apiserver 0

lbbi/kube-apiserver k8s.gcr.io 0

woshitiancai/kube-apiserver 0

kope/kube-apiserver-healthcheck 0

projectaccuknox/kube-apiserver 0

k8smx/kube-apiserver 0

lchdzh/kube-apiserver kubernetes原版基础镜像,Registry为k8s.gcr.io 0

The search returns the candidate repositories. As a rule we prefer the one with the most stars, so we first pick aiotceo/kube-apiserver and run docker pull aiotceo/kube-apiserver:v1.26.9. This fails with Error response from daemon: manifest for aiotceo/kube-apiserver:v1.26.9 not found, meaning that repository does not carry this tag, so we have to try another repository. In the end we pull kubesphere/kube-apiserver:v1.26.9.

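# Pull the image

docker pull kubesphere/kube-apiserver:v1.26.9

# Retag the image so that kubeadm can find it under registry.k8s.io during initialization

docker tag kubesphere/kube-apiserver:v1.26.9 registry.k8s.io/kube-apiserver:v1.26.9

# Export the image to a tar archive

docker save -o kube-apiserver.tar registry.k8s.io/kube-apiserver:v1.26.9

# Remove the old tag

docker rmi kubesphere/kube-apiserver:v1.26.9

Repeat these steps to download and package the other images. This takes some time, so as a reminder, the download link can also be obtained by following this official account and replying "k8s".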
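The pull, retag, save and clean-up steps above can be wrapped in a small loop. The following is a sketch under stated assumptions, not a tested procedure: the function names are illustrative, and it assumes every required image exists under one mirror namespace with the same name and tag, which is not guaranteed (as the failed aiotceo pull shows), so each pull should be checked.

```shell
# Derive the archive name used in this article from an image reference,
# e.g. registry.k8s.io/kube-apiserver:v1.26.9 -> kube-apiserver.tar
image_tar_name() {
    ref="$1"
    name="${ref##*/}"      # drop registry and namespace
    name="${name%%:*}"     # drop the tag
    printf '%s.tar\n' "$name"
}

# Build the mirror reference for a target image.
# $1: target reference, $2: mirror namespace (an assumption, e.g. kubesphere)
mirror_ref() {
    printf '%s/%s\n' "$2" "${1##*/}"
}

# Pull from the mirror, retag to the name kubeadm expects, export, clean up.
# Stops at the first failing step.
fetch_image() {
    target="$1"
    mirror="$(mirror_ref "$target" "$2")"
    docker pull "$mirror" &&
    docker tag "$mirror" "$target" &&
    docker save -o "$(image_tar_name "$target")" "$target" &&
    docker rmi "$mirror"
}

# Example (not run automatically):
#   for img in $(kubeadm config images list --kubernetes-version v1.26.9); do
#       fetch_image "$img" kubesphere || echo "failed: $img"
#   done
```

Only the two name helpers are pure functions; fetch_image needs a working Docker daemon and network access to the mirror.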

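6.2 Load the kubeadm init images (all nodes)

Upload all image archives from the previous step to /k8s/kubeadm-init-images on every cluster node, then load them with the following command.

# Load the images required by kubeadm init

find /k8s/kubeadm-init-images/ -type f -name "*.tar" -exec docker load -i {} \;

6.3 Initialize the master node (master node)

# Before initializing, run kubeadm init --help to review the flags. The ones used here:

# apiserver-advertise-address: the IP address the API server advertises, i.e. the master node IP (192.168.8.190 in this article)

# image-repository: the registry prefix kubeadm uses for the control-plane images; it must match the names of the images loaded in 6.2 (omit it if they were retagged as registry.k8s.io/... as in 6.1)

# kubernetes-version: the Kubernetes version; it must match the kubeadm version

# service-cidr: the virtual IP range for Services; the default can be kept

# pod-network-cidr: the IP range for Pods; it must match the network plugin configuration (see 7.1)

# cri-socket: the CRI socket to use; here cri-dockerd

# Run the init command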

kubeadm init \

--apiserver-advertise-address=192.168.8.190 \

--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \

--kubernetes-version v1.26.9 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16 \

--cri-socket unix:///var/run/cri-dockerd.sock \

--ignore-preflight-errors=all

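# Alternative: initialize with a stable control-plane endpoint name. Run only one of the two kubeadm init commands; the output shown below comes from this variant

echo "192.168.8.190 cluster-endpoint" >> /etc/hosts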

kubeadm init \

--apiserver-advertise-address=192.168.8.190 \

--control-plane-endpoint=cluster-endpoint \

--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \

--kubernetes-version v1.26.9 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16 \

--cri-socket unix:///var/run/cri-dockerd.sock --ignore-preflight-errors=all
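The same settings can also be kept in a kubeadm configuration file, which is easier to review and reuse than a long flag list. The following is a sketch, assuming the kubeadm.k8s.io/v1beta3 configuration API supported by kubeadm v1.26; the function name and the output file name are illustrative, and the values are the ones used in the second command above.

```shell
# Write a kubeadm configuration file equivalent to the flags used above.
# $1: output path
write_kubeadm_config() {
    cat > "$1" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.8.190
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.26.9
controlPlaneEndpoint: cluster-endpoint:6443
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}

# Typical use on the master node, as root:
#   write_kubeadm_config kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml
```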

If kubeadm init returns the following output, the control plane has been initialized successfully.

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join cluster-endpoint:6443 --token cpu5gu.3qe8y1onbl4lza0g \

--discovery-token-ca-cert-hash sha256:4092831bff2381f16460017044246d541e4dacb7053c43bbae44aa2749fba441 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join cluster-endpoint:6443 --token cpu5gu.3qe8y1onbl4lza0g \

--discovery-token-ca-cert-hash sha256:4092831bff2381f16460017044246d541e4dacb7053c43bbae44aa2749fba441

Following the prompt, run these commands on the master node to finish the setup.

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

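export KUBECONFIG=/etc/kubernetes/admin.conf

6.4 Join the worker nodes to the cluster (worker nodes)

# Run kubeadm join on each node to be added, using the endpoint, token and hash printed by your own kubeadm init. The name cluster-endpoint must resolve on the node, for example through the same /etc/hosts entry that was added on the master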

kubeadm join cluster-endpoint:6443 --token cpu5gu.3qe8y1onbl4lza0g \

--discovery-token-ca-cert-hash sha256:4092831bff2381f16460017044246d541e4dacb7053c43bbae44aa2749fba441 \

--cri-socket unix:///var/run/cri-dockerd.sock

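# If the token has been lost, or a new node is to be added later, generate a new join command on the master node

kubeadm token create --print-join-command

The following output indicates that the node joined successfully.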

[root@k8s-node1 kubeadm-init-images]# kubeadm join cluster-endpoint:6443 --token cpu5gu.3qe8y1onbl4lza0g \

> --discovery-token-ca-cert-hash sha256:4092831bff2381f16460017044246d541e4dacb7053c43bbae44aa2749fba441 \

> --cri-socket unix:///var/run/cri-dockerd.sock

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

6.5 Check the cluster node status

# Check the current node status

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane 16m v1.26.9

k8s-node1 NotReady <none> 6m2s v1.26.9

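k8s-node2 NotReady <none> 3m28s v1.26.9

All nodes are currently NotReady because no network plugin has been installed yet, so the Pod network is not available. We will check again after installing one. In addition, running kubectl get nodes on a worker node may fail with the following error.

# Error message

The connection to the server localhost:8080 was refused - did you specify the right host or port?

This happens because kubectl on that node has no kubeconfig. Copy /etc/kubernetes/admin.conf from the master node to the same path on the worker node, adjust the file permissions, and set the KUBECONFIG environment variable.

# Grant read/write permission (admin.conf carries cluster-admin credentials, so a tighter mode such as 600 is preferable where possible)

chmod 666 /etc/kubernetes/admin.conf

# Set the environment variable and apply it

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

7. Install the Flannel or Calico network plugin (all nodes)

In the previous section all nodes were NotReady because no network plugin was installed. Kubernetes has several mainstream network plugins, such as Flannel, Calico, Weave and Cilium, each with different features and performance characteristics; see their documentation for details. We chose Flannel this time and use it as the main example below.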

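7.1 Download the images and the manifest

On the networked machine, download kube-flannel.yml with the following command.

# Download kube-flannel.yml (latest resolves to the newest release; this article uses v0.24.0)

wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

# Set Network to the Pod CIDR passed to kubeadm init (--pod-network-cidr)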

vim kube-flannel.yml

...

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

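...

On the networked machine, list the images that need to be downloaded. The offline download procedure is the same as in section 6.1 and is not repeated here.

# List the required images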

cat kube-flannel.yml | grep image

image: docker.io/flannel/flannel:v0.24.0

image: docker.io/flannel/flannel-cni-plugin:v1.2.0

image: docker.io/flannel/flannel:v0.24.0

7.2 Install flannel

Upload kube-flannel.yml and the image archives to /k8s/flannel on every node, then install with the following commands.

# Load the images

find /k8s/flannel -type f -name "*.tar" -exec docker load -i {} \;

# Install flannel

cd /k8s/flannel

kubectl apply -f kube-flannel.yml

After the installation, check the nodes and Pods again; everything is running normally.

[root@k8s-master flannel]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 88m v1.26.9

k8s-node1 Ready <none> 77m v1.26.9

k8s-node2 Ready <none> 75m v1.26.9

[root@k8s-master flannel]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-tx425 1/1 Running 0 15m

kube-flannel kube-flannel-ds-vpw29 1/1 Running 0 15m

kube-flannel kube-flannel-ds-w26wr 1/1 Running 0 15m

kube-system coredns-567c556887-24cf7 1/1 Running 0 89m

kube-system coredns-567c556887-f6k94 1/1 Running 0 89m

kube-system etcd-k8s-master 1/1 Running 0 89m

kube-system kube-apiserver-k8s-master 1/1 Running 0 89m

kube-system kube-controller-manager-k8s-master 1/1 Running 0 89m

kube-system kube-proxy-7ltmx 1/1 Running 0 79m

kube-system kube-proxy-l6kkq 1/1 Running 0 77m

kube-system kube-proxy-x922q 1/1 Running 0 89m

kube-system kube-scheduler-k8s-master 1/1 Running 0 89m7.3 安装calico

下载地址Release v3.28.0 · projectcalico/calico · GitHub

将下载的包上传到服务器,解压tar -zxvf release-v3.10.3.tar

[root@k8s-master opt]# tar -xf release-v3.28.0.tgz

[root@k8s-master opt]# ls

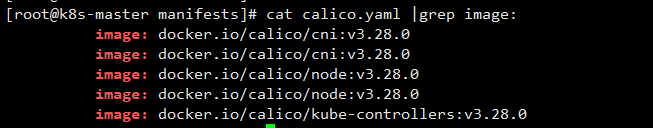

cni containerd cri-dockerd-0.3.1-3.el7.x86_64.rpm docker25.0 flannel kubeadm-init-images kubernetes release-v3.28.0 release-v3.28.0.tgz执行cat calico.yaml |grep image:查看calico所需要的镜像包

可查看到需要以上四个镜像

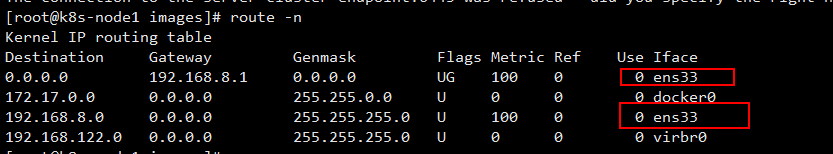

find ./ -name "*.tar" -exec docker load -i {} \;使用 ctr namespace ls 命令查看containerd的命名空间。k8s的命名空间为moby

[root@k8s-master manifests]# ctr namespace ls

NAME LABELS

moby 使用containerd的导入镜像命令将calico的离线镜像包导入到k8s的命名空间中

[root@k8s-master images]# ctr -n moby images import calico-cni.tar

unpacking docker.io/calico/cni:v3.28.0 (sha256:2da41a4fcb31618b20817de9ec9fd13167344f5e2e034cee8baf73d89e212b4e)...done

[root@k8s-master images]# ctr -n moby images import calico-pod2daemon.tar

unpacking docker.io/calico/pod2daemon-flexvol:v3.28.0 (sha256:3d5dadd7384372a60c8cc52ab85a635a50cd5ec9454255cee51c4497f67f9d9d)...done

[root@k8s-master images]# ctr -n moby images import calico-node.tar

unpacking docker.io/calico/node:v3.28.0 (sha256:5a4942472d32549581ed34d785c3724ecffd0d4a7c805e5f64ef1d89d5aaa947)...done

[root@k8s-master images]# ctr -n moby images import calico-kube-controllers.tar

unpacking docker.io/calico/kube-controllers:v3.28.0 (sha256:83e080cba8dbb2bf2168af368006921fcb940085ba6326030a4867963d2be2b3)...done

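Edit the calico.yaml configuration.

vi calico.yaml

# Around line 4551, edit the following settings

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16" # The Pod network CIDR chosen when the cluster was initialized

- name: IP_AUTODETECTION_METHOD

value: "interface=ens192" # The host network interface; this entry is not in the stock manifest and has to be added by hand

# Around line 4521, edit the following settings

# Enable IPIP

- name: CALICO_IPV4POOL_IPIP

value: "Never" # The default is Always, which uses IPIP encapsulation; Never disables IPIP and relies on plain BGP routing, which performs better

Start the installation

Go to the manifests directory and run kubectl apply -f calico.yaml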

[root@k8s-master manifests]# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

serviceaccount/calico-cni-plugin created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrole.rbac.authorization.k8s.io/calico-cni-plugin created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin created

daemonset.apps/calico-node created

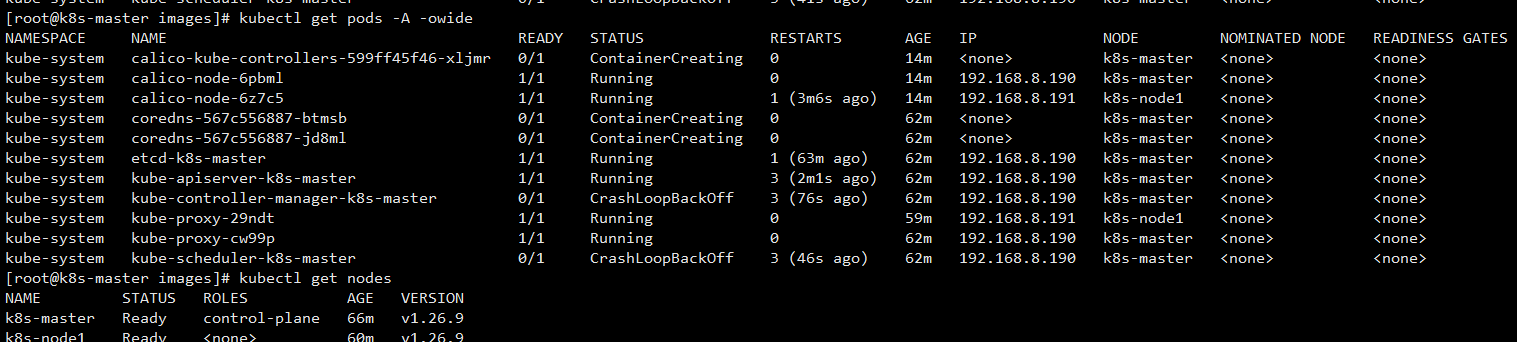

deployment.apps/calico-kube-controllers created执行命令kubectl get pod -A可以看到calico正在运行

(ens33说明的BGP模式)

查询集群状态已ready
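A quick way to confirm the Ready state from a script is to count the nodes whose STATUS column is anything other than Ready. This is a minimal sketch; the function name is illustrative, and it assumes the default column layout of kubectl get nodes, where STATUS is the second column.

```shell
# Count nodes whose STATUS column is not exactly "Ready".
# Reads the output of `kubectl get nodes --no-headers` on stdin.
# A cordoned node ("Ready,SchedulingDisabled") is counted as not ready.
count_not_ready() {
    awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Typical use on the master node:
#   kubectl get nodes --no-headers | count_not_ready
# kubectl can also block until every node is ready:
#   kubectl wait --for=condition=Ready node --all --timeout=300s
```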

8、常用命令

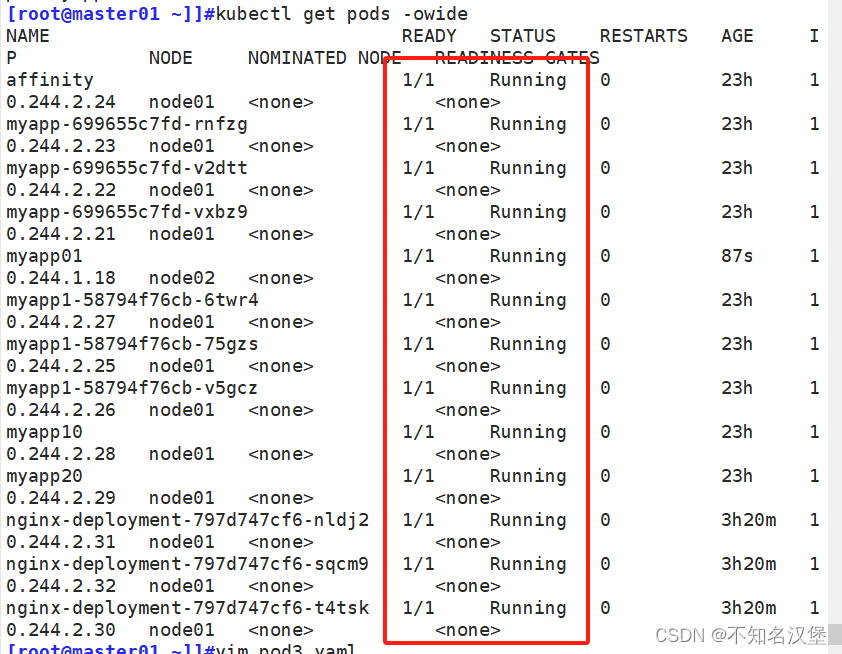

8.1 查看Pod列表

查看所有资源列表:kubectl get pod -A

[root@k8s-master ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-tx425 1/1 Running 1 (3d21h ago) 4d21h

kube-flannel kube-flannel-ds-vpw29 1/1 Running 2 (2d ago) 4d21h

kube-flannel kube-flannel-ds-w26wr 1/1 Running 2 (2d ago) 4d21h

kube-system coredns-567c556887-24cf7 1/1 Running 2 (2d ago) 4d22h

kube-system coredns-567c556887-f6k94 1/1 Running 2 (2d ago) 4d22h

kube-system etcd-k8s-master 1/1 Running 2 (2d ago) 4d22h

kube-system kube-apiserver-k8s-master 1/1 Running 2 (2d ago) 4d22h

......

List Pods in one namespace: kubectl get pods -n <namespace>

[root@k8s-master ~]# kubectl get pods -n kube-flannel

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-tx425 1/1 Running 1 (3d21h ago) 4d21h

kube-flannel-ds-vpw29 1/1 Running 2 (2d ago) 4d21h

kube-flannel-ds-w26wr 1/1 Running 2 (2d ago) 4d21h

8.2 Show Pod details

Show detailed information for a Pod: kubectl describe pod <pod-name> -n <namespace>

[root@k8s-master ~]# kubectl describe pod kube-flannel-ds-tx425 -n kube-flannel

Name: kube-flannel-ds-tx425

Namespace: kube-flannel

Priority: 2000001000

Priority Class Name: system-node-critical

Service Account: flannel

Node: k8s-node2/10.193.31.26

Start Time: Tue, 02 Jan 2024 18:54:53 +0800

Labels: app=flannel

controller-revision-hash=d88848b67

k8s-app=flannel

pod-template-generation=1

tier=node

Annotations: <none>

Status: Running

IP: 10.193.31.26

IPs:

IP: 10.193.31.26

Controlled By: DaemonSet/kube-flannel-ds

Init Containers:

install-cni-plugin:

Container ID: docker://ab6154f74ebfd54da8fea53d7f3c17cc967c8429a842320a544475776ea6e529

Image: docker.io/flannel/flannel-cni-plugin:v1.2.0

Image ID: docker://sha256:a55d1bad692b776e7c632739dfbeffab2984ef399e1fa633e0751b1662ea8bb4

Port: <none>

Host Port: <none>

Command:

cp

Args:

-f

/flannel

/opt/cni/bin/flannel

State: Terminated

Reason: Completed

Exit Code: 0

Started: Wed, 03 Jan 2024 18:17:35 +0800

Finished: Wed, 03 Jan 2024 18:17:35 +0800

Ready: True

Restart Count: 1

Environment: <none>

Mounts:

/opt/cni/bin from cni-plugin (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-448cd (ro)

install-cni:

Container ID: docker://1229816d9becc8760f979692e31a509e8ae96f51adf97b5f3dd89ad638833544

Image: docker.io/flannel/flannel:v0.24.0

Image ID: docker://sha256:0dc86fe0f22e650c80700c8e26f75e4930c332700ef6e0cd7cc4080ccccdd58b

Port: <none>

Host Port: <none>

Command:

cp

Args:

-f

/etc/kube-flannel/cni-conf.json

/etc/cni/net.d/10-flannel.conflist

State: Terminated

Reason: Completed

Exit Code: 0

Started: Wed, 03 Jan 2024 18:17:36 +0800

Finished: Wed, 03 Jan 2024 18:17:36 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/etc/cni/net.d from cni (rw)

/etc/kube-flannel/ from flannel-cfg (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-448cd (ro)

Containers:

kube-flannel:

Container ID: docker://26ba17d8dd8d69103b04808318bdbd44e382d26a1288453bcbc869c27fbf4aa1

Image: docker.io/flannel/flannel:v0.24.0

Image ID: docker://sha256:0dc86fe0f22e650c80700c8e26f75e4930c332700ef6e0cd7cc4080ccccdd58b

Port: <none>

Host Port: <none>

Command:

/opt/bin/flanneld

Args:

--ip-masq

--kube-subnet-mgr

State: Running

Started: Wed, 03 Jan 2024 18:17:37 +0800

Last State: Terminated

Reason: Completed

Exit Code: 0

Started: Tue, 02 Jan 2024 18:54:56 +0800

Finished: Wed, 03 Jan 2024 18:17:34 +0800

Ready: True

Restart Count: 1

Requests:

cpu: 100m

memory: 50Mi

Environment:

POD_NAME: kube-flannel-ds-tx425 (v1:metadata.name)

POD_NAMESPACE: kube-flannel (v1:metadata.namespace)

EVENT_QUEUE_DEPTH: 5000

Mounts:

/etc/kube-flannel/ from flannel-cfg (rw)

/run/flannel from run (rw)

/run/xtables.lock from xtables-lock (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-448cd (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

run:

Type: HostPath (bare host directory volume)

Path: /run/flannel

HostPathType:

cni-plugin:

Type: HostPath (bare host directory volume)

Path: /opt/cni/bin

HostPathType:

cni:

Type: HostPath (bare host directory volume)

Path: /etc/cni/net.d

HostPathType:

flannel-cfg:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: kube-flannel-cfg

Optional: false

xtables-lock:

Type: HostPath (bare host directory volume)

Path: /run/xtables.lock

HostPathType: FileOrCreate

kube-api-access-448cd:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: :NoSchedule op=Exists

node.kubernetes.io/disk-pressure:NoSchedule op=Exists

node.kubernetes.io/memory-pressure:NoSchedule op=Exists

node.kubernetes.io/network-unavailable:NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists

node.kubernetes.io/pid-pressure:NoSchedule op=Exists

node.kubernetes.io/unreachable:NoExecute op=Exists

node.kubernetes.io/unschedulable:NoSchedule op=Exists

Events: <none>

When a Pod is in an abnormal state, the Events section of this output is usually the first place to look.
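To find which Pods deserve a closer look, the Pod list can be filtered by status first. This is a minimal sketch; the function name is illustrative, and it assumes the default columns of kubectl get pods -A, where STATUS is the fourth column.

```shell
# Print "namespace/name status" for Pods that are neither Running nor Completed.
# Reads the output of `kubectl get pods -A --no-headers` on stdin.
list_unhealthy_pods() {
    awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Typical use on the master node:
#   kubectl get pods -A --no-headers | list_unhealthy_pods
# then inspect each reported Pod:
#   kubectl describe pod <pod-name> -n <namespace>
```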

8.3 List nodes and Services

List nodes and Services: kubectl get node,svc -owide

[root@k8s-master ~]# kubectl get node,svc -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

node/k8s-master Ready control-plane 4d22h v1.26.9 10.193.31.24 <none> CentOS Linux 7 (Core) 3.10.0-1127.el7.x86_64 docker://24.0.2

node/k8s-node1 Ready <none> 4d22h v1.26.9 10.193.31.25 <none> CentOS Linux 7 (Core) 3.10.0-1127.el7.x86_64 docker://24.0.2

node/k8s-node2 Ready <none> 4d22h v1.26.9 10.193.31.26 <none> CentOS Linux 7 (Core) 3.10.0-1127.el7.x86_64 docker://24.0.2

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4d22h <none>

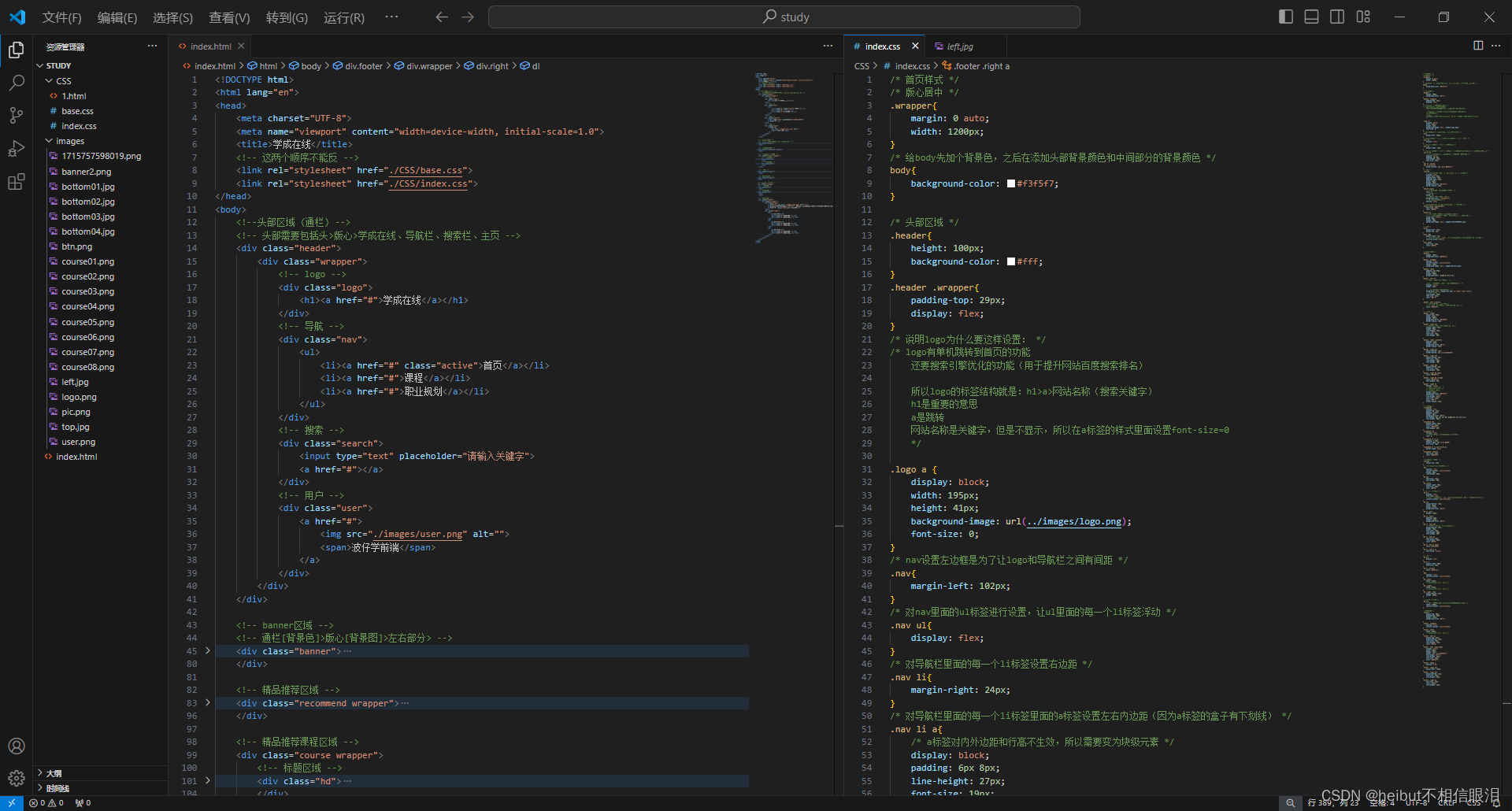

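9. Deploy the dashboard

9.1 Install Kubernetes Dashboard

On the networked machine, download the manifest (and, as for the other components, the images it references), then apply it on the master node:

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

kubectl apply -f recommended.yaml

To reach the dashboard from outside the cluster, change the type of the kubernetes-dashboard Service in recommended.yaml to NodePort before applying it,

or edit the Service afterwards:

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

9.2 Create a Dashboard user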

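Dashboard uses token authentication by default, so a user account is needed to log in. First create a YAML file (for example dashboard-adminuser.yaml):

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

Then create the user with the following command:

kubectl apply -f dashboard-adminuser.yaml

Get the token

To get the token needed to log in to the Dashboard, run the following command:

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

This prints a long token string; copy it for later use. On Kubernetes v1.24 and later, no token Secret is created automatically for a ServiceAccount, so this command may return nothing; see 9.3 in that case.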

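Check the port

kubectl get pod,svc -n kubernetes-dashboard

The dashboard can now be reached at the host IP plus the NodePort. Use the IP of a host (node), not the CLUSTER-IP shown in the output above, which is only reachable from inside the cluster.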
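The address can also be assembled from a script once the Service has been switched to NodePort. This is a small sketch: the function name is illustrative, and it assumes the recommended.yaml deployment, which serves the dashboard over HTTPS.

```shell
# Build the dashboard URL from a node IP and the NodePort of the Service.
# $1: node IP, $2: NodePort
dashboard_url() {
    printf 'https://%s:%s\n' "$1" "$2"
}

# Typical use on the master node:
#   port=$(kubectl -n kubernetes-dashboard get svc kubernetes-dashboard \
#       -o jsonpath='{.spec.ports[0].nodePort}')
#   dashboard_url 192.168.8.190 "$port"
# A non-interactive alternative to kubectl edit for switching the Service type:
#   kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard \
#       -p '{"spec":{"type":"NodePort"}}'
```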

9.3 If no token was generated

Create a ServiceAccount

kubectl create serviceaccount admin -n kubernetes-dashboard

Inspect the account

kubectl get serviceaccount admin -o yaml -n kubernetes-dashboard

Bind the role

kubectl create clusterrolebinding admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:admin -n kubernetes-dashboard

Generate a token

kubectl create token admin -n kubernetes-dashboard