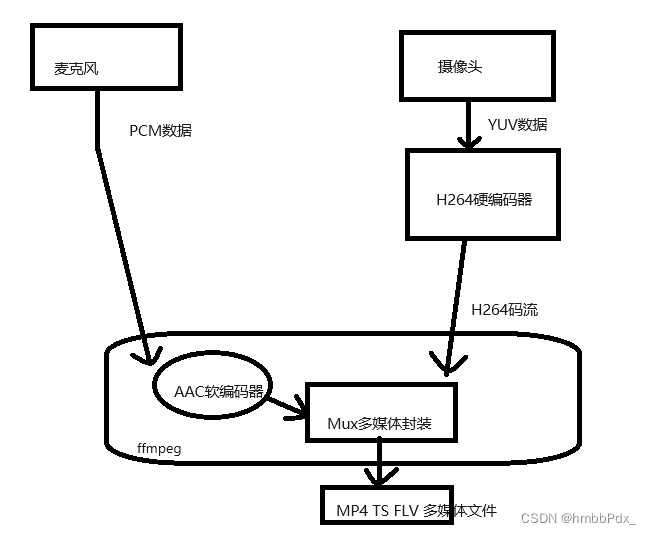

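On some embedded platforms, the H264 stream usually comes from the chip's built-in hardware encoder, while the AAC audio is produced by capturing PCM and encoding it in software. The question is how to mux the two into a media file in real time. Using the FFmpeg examples as a reference, I spent some time on it and finally got it working.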

The flowchart is as follows:

This article shows only the demo.

I. Creating the video input stream

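```c
#include <errno.h>
#include <stdio.h>
#include <libavformat/avformat.h>

// In-memory data callback: libavformat calls it whenever it needs more input.
// In this demo the data comes from a file; on a board it would come from the
// hardware encoder's output.
static int read_packet(void *opaque, uint8_t *buf, int buf_size)
{
    const char *input_filename = (const char *)opaque;
    static FILE *fl = NULL;
    static unsigned long long read_len = 0;
    static unsigned long long fps_count = 0;
    size_t len;
    int i;

    if (fl == NULL) {
        fl = fopen(input_filename, "rb");   // binary mode for raw H.264
        if (fl == NULL)
            return AVERROR(errno);
    }

    len = fread(buf, 1, buf_size, fl);
    if (len == 0)
        return AVERROR_EOF;                 // no more data

    read_len += len;
    printf("%s len:%zu read_len:%llu\n", __func__, len, read_len);

    // Debug only: print every 4-byte start code (00 00 00 01) in this chunk and the
    // NAL header byte after it (NAL type = byte & 0x1f). fps_count counts every such
    // start code, SPS/PPS included, and misses start codes split across two chunks.
    for (i = 0; i + 4 < (int)len; i++) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 0 && buf[i + 3] == 1) {
            printf("0 0 0 1 %x %llu\n", buf[i + 4], fps_count);
            fps_count++;
        }
    }
    return (int)len;
}
```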

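```c
static AVFormatContext *getInputVideoCtx(const char *fileName)
{
    const int avio_ctx_buffer_size = 4096;   // I/O buffer size
    uint8_t *avio_ctx_buffer = NULL;
    AVIOContext *avio_ctx = NULL;
    AVFormatContext *video_fmt_ctx = NULL;
    int ret;

    video_fmt_ctx = avformat_alloc_context();
    // Create the data buffer used by the custom I/O context
    avio_ctx_buffer = av_malloc(avio_ctx_buffer_size);
    if (!video_fmt_ctx || !avio_ctx_buffer)
        goto fail;

    // Read-only I/O context (write_flag = 0); fileName is passed to read_packet as opaque
    avio_ctx = avio_alloc_context(avio_ctx_buffer, avio_ctx_buffer_size,
                                  0, (void *)fileName, &read_packet, NULL, NULL);
    if (!avio_ctx)
        goto fail;
    video_fmt_ctx->pb = avio_ctx;

    // Open the data: the demuxer is probed from what read_packet returns
    ret = avformat_open_input(&video_fmt_ctx, NULL, NULL, NULL);
    if (ret < 0) {
        fprintf(stderr, "Could not open input\n");
        goto fail;          // avformat_open_input already freed video_fmt_ctx
    }

    // Read ahead to get the stream parameters
    ret = avformat_find_stream_info(video_fmt_ctx, NULL);
    if (ret < 0) {
        fprintf(stderr, "Could not find stream information\n");
        goto fail;
    }

    // Print the stream parameters
    av_dump_format(video_fmt_ctx, 0, fileName, 0);
    return video_fmt_ctx;

fail:
    avformat_close_input(&video_fmt_ctx);   // no-op if NULL
    if (avio_ctx) {
        av_freep(&avio_ctx->buffer);         // libavformat may have replaced the buffer
        avio_context_free(&avio_ctx);
    } else {
        av_free(avio_ctx_buffer);
    }
    return NULL;
}
```

1. read_packet is registered as the in-memory callback. avformat_find_stream_info reads roughly 2 s of H.264 data through this callback and parses it. It reads the SPS and PPS first, then frame data, and stops once about 2 s has been read. If the data is wrong and cannot be parsed, it keeps reading, so make sure the data supplied at the start is valid. av_dump_format then prints the detected stream format.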
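Because avformat_find_stream_info keeps reading when the opening data is bad, one option is to check the first bytes yourself before feeding them to FFmpeg. The sketch below is a hypothetical helper, not an FFmpeg API. `h264_first_nal_type` finds the first Annex B start code, either 3-byte `00 00 01` or 4-byte `00 00 00 01`. It then returns the NAL unit type from the header byte that follows: 7 = SPS, 8 = PPS, 5 = IDR slice.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: return nal_unit_type (low 5 bits of the NAL header)
 * of the first NAL unit in an Annex B buffer, or -1 if no start code
 * followed by a header byte is found. Matching 00 00 01 also covers the
 * 4-byte form 00 00 00 01, whose last three bytes are 00 00 01. */
static int h264_first_nal_type(const uint8_t *buf, size_t len)
{
    for (size_t i = 0; i + 3 < len; i++) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1)
            return buf[i + 3] & 0x1f;   /* byte after 00 00 01 is the NAL header */
    }
    return -1;
}

/* Hypothetical helper: non-zero if the buffer starts with an SPS (type 7). */
static int h264_starts_with_sps(const uint8_t *buf, size_t len)
{
    return h264_first_nal_type(buf, len) == 7;
}
```

A stream from a hardware encoder would normally pass `h264_starts_with_sps` on its first buffer. Some encoders emit an access unit delimiter (type 9) first, in which case the check would need to skip it.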

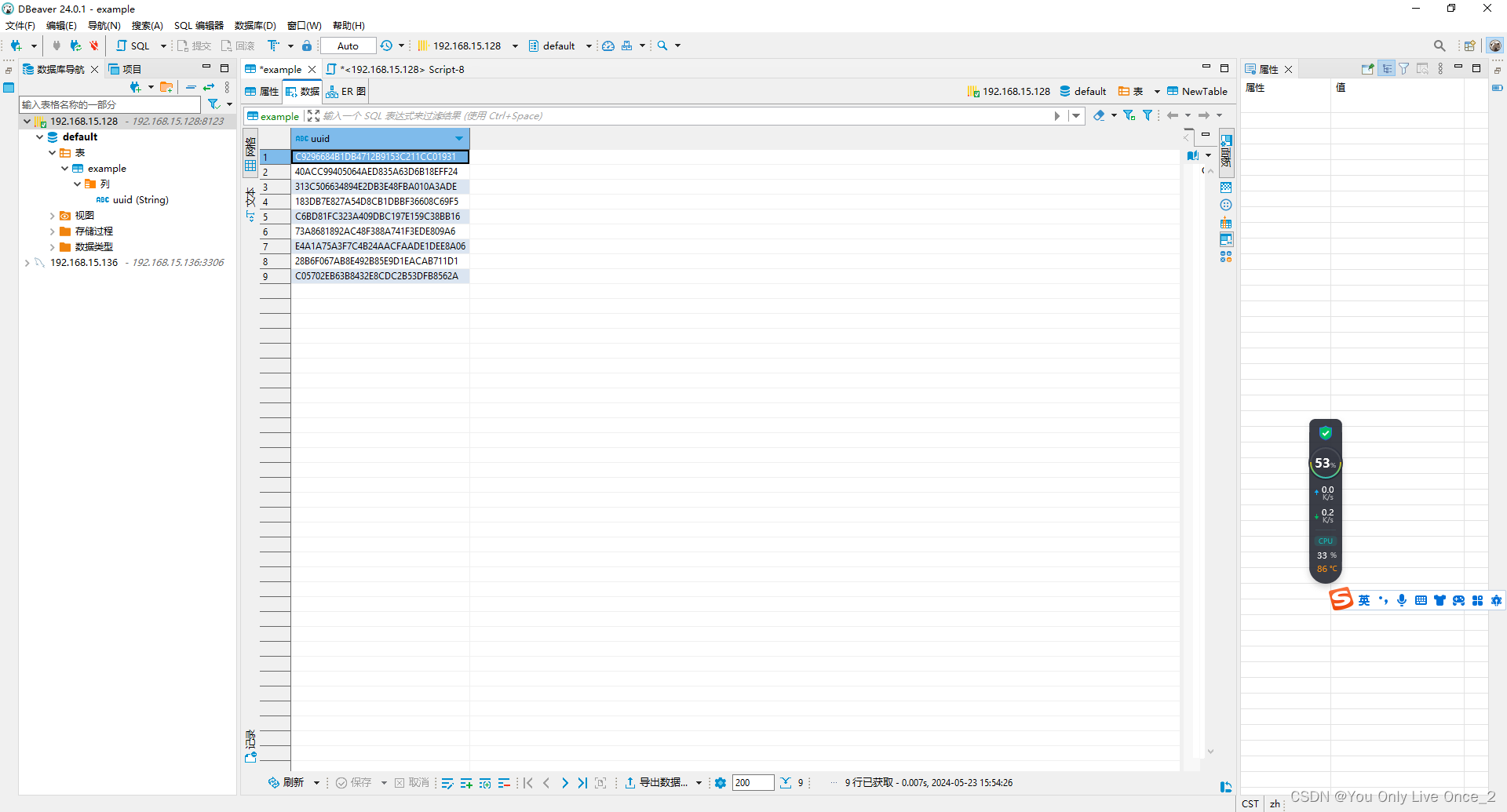

Running it gives:

II. Creating the media output and adding the video and audio output streams

```c
// Allocate the output context; the container format is guessed from the file name
avformat_alloc_output_context2(&oc, NULL, NULL, filename);
...
fmt = oc->oformat;

/* Add the video and audio streams if the container supports them
 * and initialize the codecs. */
if (fmt->video_codec != AV_CODEC_ID_NONE) {
    add_video_stream(&video_st, oc, video_fmt_ctx, fmt->video_codec);
    ...
}
if (fmt->audio_codec != AV_CODEC_ID_NONE) {
    add_audio_stream(&audio_st, oc, &audio_codec, fmt->audio_codec);
    ...
}
```

1. Adding and initializing the video stream

```c
/* media file output */
static void add_video_stream(OutputStream *ost, AVFormatContext *oc,
                             const AVFormatContext *video_fmt_ctx,
                             enum AVCodecID codec_id)
{
    ...
    // Create an output stream
    ost->st = avformat_new_stream(oc, NULL);
    ...
    ost->st->id = oc->nb_streams - 1;
    c = avcodec_alloc_context3(NULL);
    ...
    // Copy the input video parameters and set up the input stream's time_base
    for (i = 0; i < video_fmt_ctx->nb_streams; i++) {
        if (video_fmt_ctx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
            avcodec_parameters_to_context(c, video_fmt_ctx->streams[i]->codecpar);
            // For raw H.264 time_base.num is 1, so this makes the time_base 1/fps
            // for an integer frame rate (e.g. 25/1 gives 1/25): one tick per frame
            video_fmt_ctx->streams[i]->time_base.den = video_fmt_ctx->streams[i]->avg_frame_rate.num;
        }
    }
    // Allocate the AVPacket used for video
    ost->tmp_pkt = av_packet_alloc();
    ...
    ost->enc = c;
}
```

2. Adding the audio stream and initializing the encoder

```c
/* Add an output stream. */
static void add_audio_stream(OutputStream *ost, AVFormatContext *oc,
                             const AVCodec **codec,
                             enum AVCodecID codec_id)
{
    *codec = avcodec_find_encoder(codec_id);
    ...
    // Allocate the AVPacket used for audio
    ost->tmp_pkt = av_packet_alloc();
    ...
    // Create the output stream
    ost->st = avformat_new_stream(oc, NULL);
    ...
    switch ((*codec)->type) {
    case AVMEDIA_TYPE_AUDIO:
        c->sample_fmt  = (*codec)->sample_fmts ?
                         (*codec)->sample_fmts[0] : AV_SAMPLE_FMT_FLTP;
        c->bit_rate    = 64000;
        c->sample_rate = 44100;   // sample rate
        // Prefer 44100 Hz if the encoder lists supported rates, else take its first one
        if ((*codec)->supported_samplerates) {
            c->sample_rate = (*codec)->supported_samplerates[0];
            for (i = 0; (*codec)->supported_samplerates[i]; i++) {
                if ((*codec)->supported_samplerates[i] == 44100)
                    c->sample_rate = 44100;
            }
        }
        av_channel_layout_copy(&c->ch_layout, &(AVChannelLayout)AV_CHANNEL_LAYOUT_STEREO);
        // Output audio stream time_base: one tick per sample
        ost->st->time_base = (AVRational){ 1, c->sample_rate };
        break;
    default:
        break;
    }
}
```

3. Initializing codecpar for the audio and video output streams

```c
static int open_video(AVFormatContext *oc, const AVCodec *codec,
                      AVFormatContext *video_fmt_ctx, OutputStream *ost)
{
    ...
    // Stream copy: take the codec parameters (resolution, extradata, ...) from the H.264 input
    ret = avcodec_parameters_copy(ost->st->codecpar, video_fmt_ctx->streams[index]->codecpar);
    // Let the muxer pick its own codec tag, as FFmpeg's remux example does
    ost->st->codecpar->codec_tag = 0;
    ...
}

static void open_audio(AVFormatContext *oc, const AVCodec *codec,
                       OutputStream *ost, AVDictionary *opt_arg)
{
    ...
    /* copy the stream parameters to the muxer */
    ret = avcodec_parameters_from_context(ost->st->codecpar, c);
    if (ret < 0) {
        fprintf(stderr, "Could not copy the stream parameters\n");
        exit(1);
    }
    ...
}
```

III. Writing the media file

1. Compare the audio and video timestamps written so far to decide whether to write audio or video next

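```c
// The audio in this demo is generated, so the loop ends when the H.264 input runs out
while (encode_video) {
    /* select the stream to encode: write video while it is not ahead of audio */
    if (encode_video &&
        (!encode_audio || av_compare_ts(video_st.next_pts, video_fmt_ctx->streams[v_ctx_index]->time_base,
                                        audio_st.next_pts, audio_st.enc->time_base) <= 0)) {
        encode_video = !write_video_frame(oc, video_fmt_ctx, &video_st, video_st.tmp_pkt);
    } else {
        encode_audio = !write_audio_frame(oc, &audio_st);
    }
}
```

av_compare_ts compares how much audio and video has been written, in seconds, to decide which one to write next. For example, suppose 10 video frames have been written (10 × 1/25 s = 0.4 s) and 10240 audio samples (10240 × 1/44100 s ≈ 0.232 s). Video is ahead, so audio is written next. This only works if write_video_frame advances video_st.next_pts for every frame it writes.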
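To make the comparison concrete, here is a simplified stand-in for av_compare_ts; the name `compare_ts` is hypothetical. It cross-multiplies instead of converting to floating point. Unlike the real function, it does not guard against 64-bit overflow, so it is only meant for small illustrative values such as the example above.

```c
#include <stdint.h>

/* Simplified stand-in for av_compare_ts(): compares ts_a * a_num/a_den with
 * ts_b * b_num/b_den and returns -1, 0 or 1. Both sides are multiplied by
 * a_den * b_den (positive), so the comparison stays in integers.
 * No overflow protection: illustration only. */
static int compare_ts(int64_t ts_a, int64_t a_num, int64_t a_den,
                      int64_t ts_b, int64_t b_num, int64_t b_den)
{
    int64_t lhs = ts_a * a_num * b_den;
    int64_t rhs = ts_b * b_num * a_den;
    return (lhs > rhs) - (lhs < rhs);
}
```

With the numbers from the example, `compare_ts(10, 1, 25, 10240, 1, 44100)` returns 1 (video is ahead). The loop's `<= 0` test therefore fails and audio is written next.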

2. Writing one video frame

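```c
static int write_video_frame(AVFormatContext *oc, AVFormatContext *vic,
                             OutputStream *ost, AVPacket *pkt)
{
    static int64_t frame_index = 0;
    AVStream *in_stream, *out_stream = ost->st;
    int ret;
    int stream_index = av_find_best_stream(vic, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0);

    if (stream_index < 0)
        return 1;                        // no video stream in the input
    in_stream = vic->streams[stream_index];

    // Read one H.264 frame (av_read_frame pulls the bytes through read_packet)
    ret = av_read_frame(vic, pkt);
    if (ret < 0)
        return 1;                        // EOF or read error: stop writing video

    if (pkt->pts == AV_NOPTS_VALUE) {
        // Raw H.264 has no timestamps: derive them from the frame count and fps
        AVRational time_base1 = in_stream->time_base;
        // Duration of one frame in AV_TIME_BASE (microsecond) units
        int64_t calc_duration = (double)AV_TIME_BASE / av_q2d(in_stream->avg_frame_rate);
        // Convert frame_index * calc_duration into input time_base units
        pkt->pts = (double)(frame_index * calc_duration) / (double)(av_q2d(time_base1) * AV_TIME_BASE);
        pkt->dts = pkt->pts;             // assumes no B-frames (decode order == display order)
        pkt->duration = (double)calc_duration / (double)(av_q2d(time_base1) * AV_TIME_BASE);
        frame_index++;                   // count frames
    }
    // Video position in input time_base units, compared with audio in the main loop
    ost->next_pts = pkt->pts + pkt->duration;

    // pkt pts/dts are in the input stream's time_base; convert to the output stream's
    av_packet_rescale_ts(pkt, in_stream->time_base, out_stream->time_base);
    pkt->pos = -1;
    pkt->stream_index = out_stream->index;

    // Write to the media file; the muxer interleaves audio and video
    ret = av_interleaved_write_frame(oc, pkt);
    if (ret < 0) {
        fprintf(stderr, "Error while writing output packet: %s\n", av_err2str(ret));
        exit(1);
    }
    return 0;
}
```

av_read_frame calls read_packet to obtain one H.264 frame. A raw H.264 stream carries no timestamps, so pts and dts are computed from the frame count and frame rate. They are then converted to the output stream's time base before the packet is written to the media file.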
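The floating-point formula in write_video_frame boils down to pts = n × (1/fps) ÷ time_base. Below is a minimal integer sketch of the same calculation, assuming a constant frame rate; the function name `frame_pts` is hypothetical. It also avoids truncating `calc_duration` for rates such as 30000/1001.

```c
#include <stdint.h>

/* Hypothetical helper: pts of frame n in time base tb_num/tb_den for a
 * constant frame rate fps_num/fps_den. Frame n starts at
 * n * fps_den / fps_num seconds; dividing by tb_num / tb_den gives ticks.
 * Integer division truncates; no overflow protection. */
static int64_t frame_pts(int64_t n, int64_t fps_num, int64_t fps_den,
                         int64_t tb_num, int64_t tb_den)
{
    return n * fps_den * tb_den / (fps_num * tb_num);
}
```

Take a 25 fps stream as an example. Frame 1 lands at 48000 in a 1/1200000 time base, which is what FFmpeg's raw H.264 demuxer normally reports. In the 1/25 time base that add_video_stream sets up, it lands at 1, so frame n simply gets pts n.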

3. Writing one audio frame

```c
/* Get one frame of raw PCM audio.
 * In this demo it is a 16-bit dummy tone of 'frame_size' samples and
 * 'nb_channels' channels, as in FFmpeg's muxing example; on a real board,
 * fill the frame with captured PCM instead. */
static AVFrame *get_audio_frame(OutputStream *ost)
{
    ...
    c = ost->enc;
    for (j = 0; j < frame->nb_samples; j++) {
        v = (int)(sin(ost->t) * 10000);
        for (i = 0; i < ost->enc->ch_layout.nb_channels; i++)
            *q++ = v;
        ost->t     += ost->tincr;
        ost->tincr += ost->tincr2;
    }
    ...
    // Audio pts is counted in samples (time base 1/sample_rate)
    frame->pts = ost->next_pts;
    ost->next_pts += frame->nb_samples;
    count++;
    return frame;
}

static int write_audio_frame(AVFormatContext *oc, OutputStream *ost)
{
    ...
    // Get one frame of raw PCM audio
    frame = get_audio_frame(ost);
    if (frame) {
        // Input and output sample rates are equal, so this is the resampler
        // delay plus the new samples
        dst_nb_samples = av_rescale_rnd(swr_get_delay(ost->swr_ctx, c->sample_rate) + frame->nb_samples,
                                        c->sample_rate, c->sample_rate, AV_ROUND_UP);
        ret = av_frame_make_writable(ost->frame);
        if (ret < 0)
            exit(1);
        /* convert to destination format (e.g. S16 to the encoder's FLTP) */
        ret = swr_convert(ost->swr_ctx,
                          ost->frame->data, dst_nb_samples,
                          (const uint8_t **)frame->data, frame->nb_samples);
        if (ret < 0) {
            fprintf(stderr, "Error while converting\n");
            exit(1);
        }
        frame = ost->frame;
        frame->pts = av_rescale_q(ost->samples_count, (AVRational){1, c->sample_rate}, c->time_base);
        ost->samples_count += dst_nb_samples;
    }
    // Encode first, then write the packets to the media file
    return write_frame(oc, c, ost, frame, ost->tmp_pkt);
}
```
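In write_audio_frame, the pts is a running sample count converted with av_rescale_q from 1/sample_rate to the encoder time base. The sketch below uses a hypothetical name, `rescale_q_near`. It shows what that conversion computes for non-negative values: a × bq / cq rounded to nearest, without the overflow protection of the real function. An AAC frame holds 1024 samples, so when both time bases are 1/44100 each encoded audio frame advances the pts by 1024.

```c
#include <stdint.h>

/* Hypothetical helper: what av_rescale_q(a, bq, cq) computes for a >= 0,
 * i.e. a * (bq_num/bq_den) / (cq_num/cq_den), rounded to nearest with
 * halves rounded up. No overflow protection: illustration only. */
static int64_t rescale_q_near(int64_t a, int64_t bq_num, int64_t bq_den,
                              int64_t cq_num, int64_t cq_den)
{
    int64_t b = bq_num * cq_den;
    int64_t c = bq_den * cq_num;
    return (a * b + c / 2) / c;
}
```

For instance, one 1024-sample frame lasts about 23 ms: `rescale_q_near(1024, 1, 44100, 1, 1000)` gives 23.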

```c
static int write_frame(AVFormatContext *fmt_ctx, AVCodecContext *c,
                       OutputStream *ost, AVFrame *frame, AVPacket *pkt)
{
    ...
    // Send the PCM frame to the AAC encoder (frame == NULL flushes it)
    ret = avcodec_send_frame(c, frame);
    ...
    while (ret >= 0) {
        // Fetch every packet the encoder has ready
        ret = avcodec_receive_packet(c, pkt);
        ...
        /* rescale output packet timestamp values from codec to stream timebase */
        av_packet_rescale_ts(pkt, c->time_base, st->time_base);
        printf("%d %d\n", c->time_base.den, st->time_base.den);   // debug output
        pkt->stream_index = st->index;
        ret = av_interleaved_write_frame(fmt_ctx, pkt);
        ...
        count++;
    }
    return ret == AVERROR_EOF ? 1 : 0;
}
```

IV. Writing the trailer and finishing:

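```c
// Optional: drain the AAC encoder so its buffered samples are written too
write_frame(oc, audio_st.enc, &audio_st, NULL, audio_st.tmp_pkt);
// Write the container trailer (for a regular MP4 this writes the moov index)
av_write_trailer(oc);
```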

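Known issues:

To limit the recording length, add a break to the write loop. If too much data is written, audio and video drift out of sync, so I recommend keeping each recording under 30 minutes. A likely cause is how the timestamps are derived. Video timestamps come from the nominal frame rate, while audio timestamps come from the sample count. Any difference between the hardware encoder's actual frame rate and the nominal one therefore accumulates over time.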
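One way to follow the "add a break" advice is a small check on elapsed time. The helper below is hypothetical. It tests whether a timestamp `ts` in time base `tb_num/tb_den` has reached `max_seconds`, cross-multiplying to stay in integers.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper: true once ts * tb_num / tb_den >= max_seconds.
 * Multiplying both sides by tb_den (> 0) avoids division and rounding. */
static bool reached_limit(int64_t ts, int64_t tb_num, int64_t tb_den,
                          int64_t max_seconds)
{
    return ts * tb_num >= max_seconds * tb_den;
}
```

In the write loop of section III, for example, `if (reached_limit(audio_st.next_pts, 1, audio_st.enc->sample_rate, 30 * 60)) break;` would stop after 30 minutes of audio. This works because get_audio_frame counts `next_pts` in samples.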


If you need the source code, feel free to send me a private message.