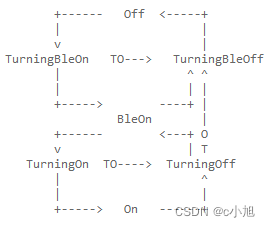

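Model categories:

GPT type: auto-regressive (decoder models). Past outputs are fed back in as current inputs: to produce y8 the model needs y1 through y7 but cannot use y9. Suited to tasks such as text generation.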

GPT

GPT-2

CTRL

Transformer XL
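A minimal sketch of the auto-regressive loop described above. `toy_next_token` is a hypothetical stand-in for a real decoder (it is not how GPT predicts tokens); the point is only that each new token is computed from the tokens already produced.

```python
from typing import Callable, List


def generate(next_token: Callable[[List[int]], int],
             prompt: List[int], steps: int) -> List[int]:
    """Autoregressive decoding: each new token depends on all earlier ones."""
    sequence = list(prompt)
    for _ in range(steps):
        # The model only sees the prefix generated so far, never future tokens.
        sequence.append(next_token(sequence))
    return sequence


def toy_next_token(prefix: List[int]) -> int:
    # Hypothetical "model": sum of the prefix modulo 10.
    return sum(prefix) % 10


tokens = generate(toy_next_token, prompt=[1, 2], steps=4)
print(tokens)
```

Changing any early token changes everything generated after it, which is why generation has to proceed one position at a time.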

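BERT type: auto-encoding (encoder models). The model sees the full context on both sides of each word. Suited to tasks such as sequence classification.

BERT

ALBERT

DistilBERT

ELECTRA

RoBERTa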

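BART type: sequence-to-sequence (encoder-decoder models). A new sequence is generated from the input sequence. The decoder uses the encoder's feature vectors together with the outputs produced so far to predict the next output, e.g. y3 is predicted from y1 and y2. Suited to tasks such as translation.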

BART

mBART

Marian

T5

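The encoder and decoder do not share weights, and different pretrained models can be combined as the encoder and the decoder.

Language models:

These models have been trained on large amounts of raw text in a self-supervised fashion.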

Self-supervised learning is a type of training in which the objective is automatically computed from the inputs of the model. That means that humans are not needed to label the data.
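A small sketch of how training targets can be derived from raw text with no human labels. The helper names and the `[MASK]` string are illustrative assumptions: the first helper mimics a next-token (GPT-style) objective, the second a masked-token (BERT-style) objective.

```python
from typing import List, Tuple


def next_token_pairs(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Build (context, target) pairs: the target is simply the next token."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_example(tokens: List[str], position: int,
                   mask: str = "[MASK]") -> Tuple[List[str], str]:
    """Hide one token; the hidden token itself becomes the label."""
    corrupted = list(tokens)
    target = corrupted[position]
    corrupted[position] = mask
    return corrupted, target


raw = "my name is Sylvain".split()
pairs = next_token_pairs(raw)
masked = masked_example(raw, 2)
print(pairs)
print(masked)
```

In both cases the label comes from the text itself, which is what makes training on large unlabeled corpora possible.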

Transfer learning: the pretrained model is then fine-tuned in a supervised way, that is, using human-annotated labels, on a given task. Pretraining transfers knowledge, but it also transfers the biases of the original model.

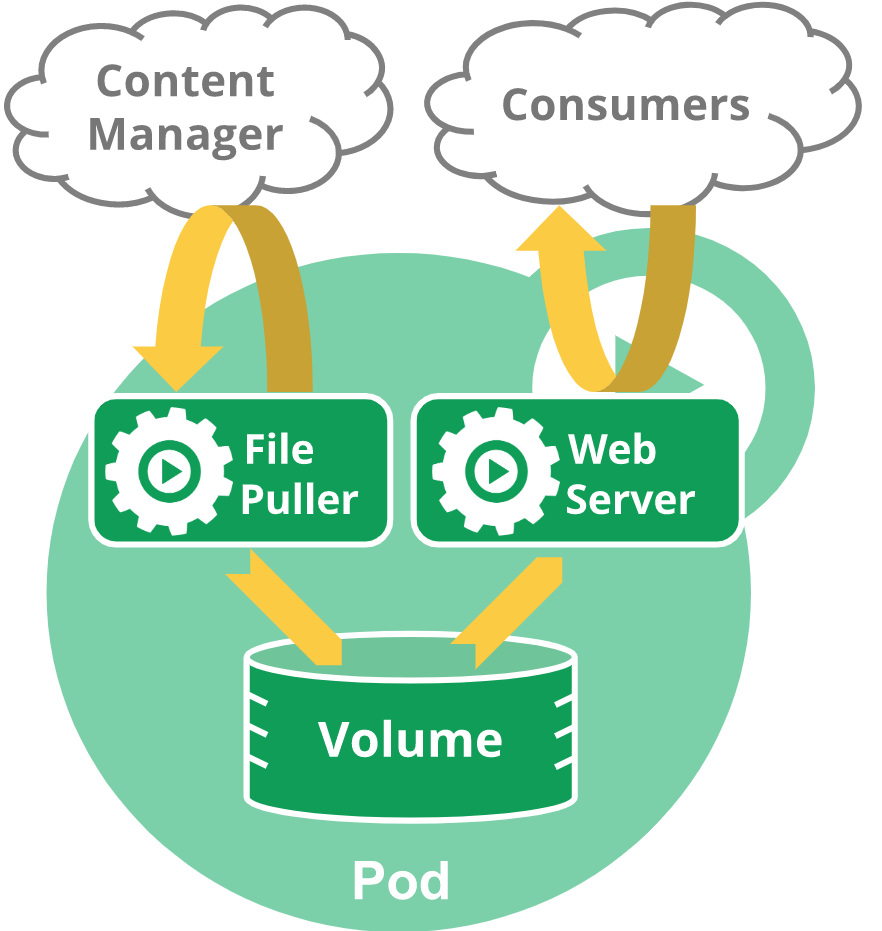

Transformer architecture

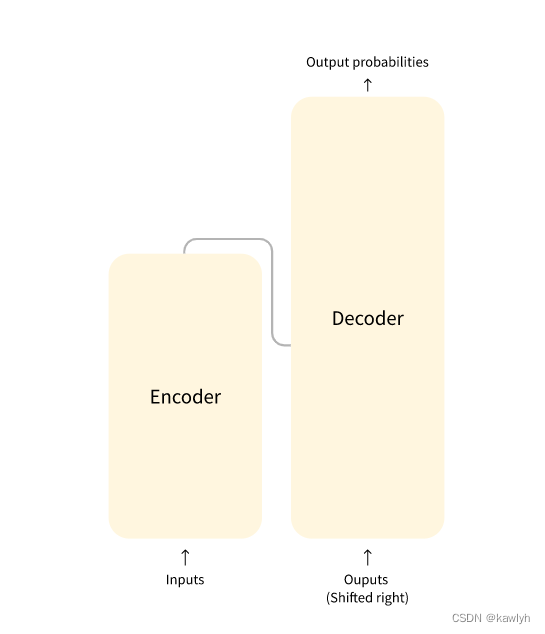

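The model is an encoder-decoder architecture: the encoder receives the input and builds a representation of it (its features); the decoder uses the encoder's features along with other inputs to generate the target sequence.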

Encoder-only models: Good for tasks that require understanding of the input, such as sentence classification and named entity recognition.

Decoder-only models: Good for generative tasks such as text generation.

Encoder-decoder models or sequence-to-sequence models: Good for generative tasks that require an input, such as translation or summarization.

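Attention mechanism (from the paper "Attention Is All You Need")

A word by itself has a meaning, but that meaning is deeply affected by the context, which can be any other word (or words) before or after the word being studied.

Encoder: can attend to all the words in the input.

Decoder: can only attend to the words it has already generated, plus the full output of the encoder. For example, in a translation task, producing y4 requires y1 through y3.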
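The decoder restriction can be sketched as a causal mask applied to attention scores before the softmax. This is a pure-Python illustration under simplifying assumptions (a single head, raw scores given directly, no learned projections), not the implementation of any particular library.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention_weights(scores: List[List[float]]) -> List[List[float]]:
    """Row i holds the attention weights of position i over all positions."""
    n = len(scores)
    weights = []
    for i in range(n):
        # Positions j > i are future tokens: set them to -inf so softmax gives 0.
        masked = [scores[i][j] if j <= i else float("-inf") for j in range(n)]
        weights.append(softmax(masked))
    return weights


scores = [[0.0] * 4 for _ in range(4)]  # uniform scores for 4 positions
weights = causal_attention_weights(scores)
for row in weights:
    print(row)
```

With uniform scores, position 0 attends only to itself, position 1 splits its attention over positions 0 and 1, and so on. An encoder would skip the masking step and let every row cover all positions.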

Attention mask: prevents the model from paying attention to certain special words, for example the padding token used to make all inputs in a batch the same length.
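A minimal sketch of building such a mask when batching sequences of different lengths. `PAD_ID` and `pad_batch` are hypothetical names for illustration; the 1/0 convention (1 = attend, 0 = ignore) and the `input_ids` / `attention_mask` naming are assumed here to mirror what common tokenizer libraries return.

```python
from typing import List, Tuple

PAD_ID = 0  # hypothetical id reserved for the padding token


def pad_batch(batch: List[List[int]],
              pad_id: int = PAD_ID) -> Tuple[List[List[int]], List[List[int]]]:
    """Pad sequences to equal length and return (input_ids, attention_mask)."""
    max_len = max(len(seq) for seq in batch)
    input_ids, attention_mask = [], []
    for seq in batch:
        n_pad = max_len - len(seq)
        input_ids.append(seq + [pad_id] * n_pad)
        # 1 marks a real token to attend to, 0 marks padding to ignore.
        attention_mask.append([1] * len(seq) + [0] * n_pad)
    return input_ids, attention_mask


input_ids, attention_mask = pad_batch([[7, 8, 9], [4, 5]])
print(input_ids)
print(attention_mask)
```

Inside the model, positions with mask value 0 would have their attention scores suppressed before the softmax, in the same way future positions are suppressed in a decoder.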
