目录

- 报错截图

- 关键问题

- nvcc -V 查看 cuda 版本

- 查看 usr/local/cuda-* 安装的cuda版本

- 设置 cuda-12.0 (添加入环境变量)

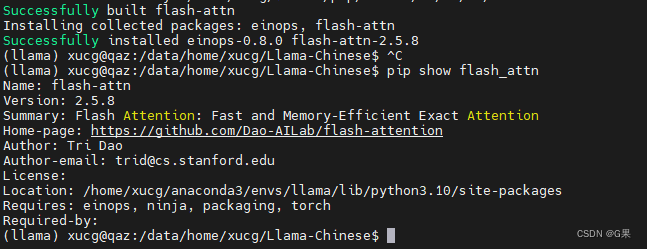

- FlashAttention 安装成功

Error screenshot

ImportError: This modeling file requires the following packages that were not found in your environment: flash_attn. Run pip install flash_attn

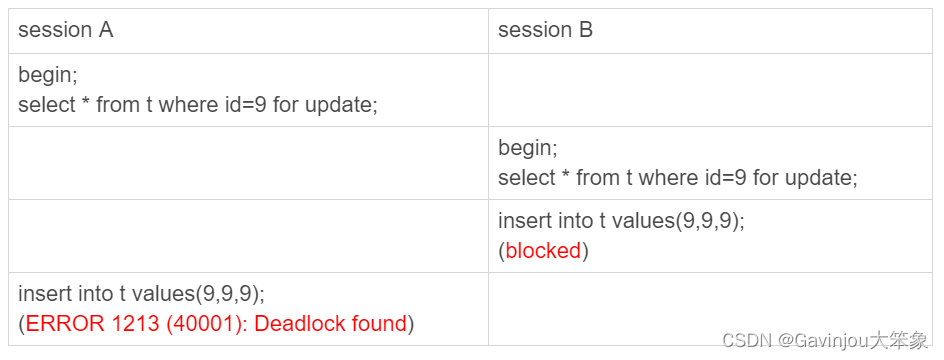

Key problem

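RuntimeError: FlashAttention is only supported on CUDA 11.6 and above. Note: make sure nvcc has a supported version by running nvcc -V.

In other words, the FlashAttention library only supports CUDA >= 11.6, and the version that counts is the one reported by the nvcc compiler used for the build.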

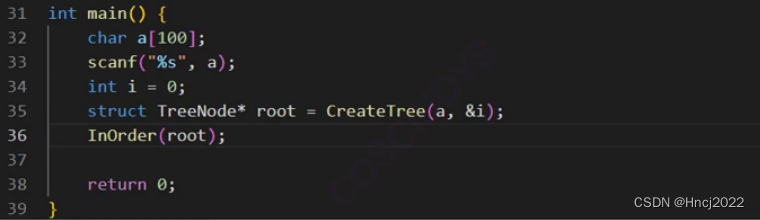

Check the CUDA version with nvcc -V
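The version check from the error message can be reproduced by hand. This is a minimal sketch assuming bash and GNU `sort -V` (standard on Linux): `nvcc_release` pulls the `X.Y` number out of the `Cuda compilation tools, release X.Y, ...` line that `nvcc -V` prints, and `version_ge` compares it with the 11.6 minimum. Both function names are hypothetical helpers, not part of FlashAttention or the CUDA toolkit.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of FlashAttention or CUDA).

# Read `nvcc -V` output on stdin and print the "X.Y" from "release X.Y, ...".
nvcc_release() {
  sed -n 's/.*release \([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p' | head -n 1
}

# Succeed if version $1 >= version $2; relies on GNU `sort -V` ordering.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}
```

Typical use: `ver=$(nvcc -V | nvcc_release); version_ge "$ver" 11.6 && echo "nvcc $ver is new enough"`. An empty result (no nvcc on PATH) compares as older than 11.6, so it is reported as unsupported rather than silently accepted.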

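Check the CUDA versions installed under /usr/local/cuda-*

The machine is a remote server and my personal account has no permission to install a new CUDA toolkit, so I looked for one that was already installed.

Two toolkits were already present: cuda-11.1 and cuda-12.0. Since 11.1 is below the 11.6 minimum, cuda-12.0 is the one to use.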
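To see which side-by-side toolkits exist and what each one's nvcc reports, a loop over `/usr/local/cuda-*` is enough. This is a sketch assuming the usual layout `<root>/bin/nvcc`; `list_cuda_toolkits` is a hypothetical name, and the base directory is a parameter only so the function can be pointed somewhere other than `/usr/local`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list CUDA toolkits installed side by side under a base
# directory (default /usr/local) with the release reported by each nvcc.
list_cuda_toolkits() {
  local base="${1:-/usr/local}" dir rel
  for dir in "$base"/cuda-*; do
    [ -d "$dir" ] || continue          # skip if the glob matched nothing
    if [ -x "$dir/bin/nvcc" ]; then
      rel=$("$dir/bin/nvcc" -V | sed -n 's/.*release \([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p')
      echo "$dir ${rel:-unknown}"
    else
      echo "$dir (no bin/nvcc)"
    fi
  done
}
```

On the server described here, running `list_cuda_toolkits` would be expected to show the cuda-11.1 and cuda-12.0 directories. The plain `/usr/local/cuda` symlink, if present, is not matched by the `cuda-*` pattern.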

Switch to cuda-12.0 (add it to PATH)

```bash
export PATH=/usr/local/cuda-12.0/bin:$PATH
```
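The `export PATH=...` line only lasts for the current shell. A slightly more defensive variant, sketched here under the assumption of bash, also exports `CUDA_HOME`: PyTorch's `torch.utils.cpp_extension` (which flash-attn's `setup.py` builds with) reads `CUDA_HOME` (or `CUDA_PATH`) first and only falls back to locating `nvcc` on `PATH` when neither is set, so a stale `CUDA_HOME` pointing at cuda-11.1 would defeat the PATH change. The `use_cuda` function and the `LD_LIBRARY_PATH` line are additions of this sketch, not part of the original fix.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make one CUDA toolkit the active one for this shell.
use_cuda() {
  local root="$1"
  if [ ! -x "$root/bin/nvcc" ]; then
    echo "use_cuda: no nvcc under $root" >&2
    return 1
  fi
  export PATH="$root/bin:$PATH"      # equivalent to the original export PATH line
  export CUDA_HOME="$root"           # checked by torch.utils.cpp_extension before PATH
  # Assumption: runtime libraries live in $root/lib64 (typical on Linux x86_64).
  export LD_LIBRARY_PATH="$root/lib64${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"
}
```

For example, `use_cuda /usr/local/cuda-12.0`. To make the change survive new logins, the same export lines can be appended to `~/.bashrc`.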

Running nvcc -V again confirms that the reported version has been updated to 12.0.
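Meeting the 11.6 floor is not the only constraint. The pip log that follows shows torch 2.1.2 depending on `nvidia-*-cu12` 12.1 wheels, which indicates a CUDA 12.1 build of PyTorch, and `torch.utils.cpp_extension` refuses to build an extension when nvcc's major version differs from `torch.version.cuda` (a minor difference such as 12.0 vs 12.1 only triggers a warning). That is one more reason cuda-12.0, not cuda-11.1, is the right pick here. Below is a sketch of the same comparison, assuming `python` is the conda environment's interpreter; `same_cuda_major` and `check_cuda_pair` are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: compare nvcc's CUDA major version with PyTorch's.

# Succeed if both versions are non-empty and share a major number (12.0 vs 12.1).
same_cuda_major() {
  [ -n "$1" ] && [ -n "$2" ] && [ "${1%%.*}" = "${2%%.*}" ]
}

# Check the nvcc on PATH against torch.version.cuda of the active `python`.
check_cuda_pair() {
  local nvcc_ver torch_ver
  nvcc_ver=$(nvcc -V | sed -n 's/.*release \([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p')
  torch_ver=$(python -c 'import torch; print(torch.version.cuda)')
  if same_cuda_major "$nvcc_ver" "$torch_ver"; then
    echo "ok: nvcc $nvcc_ver, torch built with CUDA $torch_ver"
  else
    echo "major mismatch: nvcc ${nvcc_ver:-?}, torch CUDA ${torch_ver:-?}" >&2
    return 1
  fi
}
```

In this environment, `check_cuda_pair` after switching to cuda-12.0 should report nvcc 12.0 against torch CUDA 12.1. A CPU-only PyTorch prints `None` for `torch.version.cuda` and is reported as a mismatch.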

FlashAttention installed successfully

```text
(llama) xucg@qaz:/data/home/xucg/Llama-Chinese$ pip install flash-attn
Collecting flash-attn
Using cached flash_attn-2.5.8.tar.gz (2.5 MB)
Preparing metadata (setup.py) ... done
Requirement already satisfied: torch in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from flash-attn) (2.1.2)
Collecting einops (from flash-attn)
Using cached einops-0.8.0-py3-none-any.whl.metadata (12 kB)
Requirement already satisfied: packaging in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from flash-attn) (24.0)
Requirement already satisfied: ninja in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from flash-attn) (1.11.1.1)
Requirement already satisfied: filelock in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (3.14.0)
Requirement already satisfied: typing-extensions in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (4.11.0)
Requirement already satisfied: sympy in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (1.12)
Requirement already satisfied: networkx in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (3.3)
Requirement already satisfied: jinja2 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (3.1.3)
Requirement already satisfied: fsspec in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (2024.3.1)
Requirement already satisfied: nvidia-cuda-nvrtc-cu12==12.1.105 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (12.1.105)
Requirement already satisfied: nvidia-cuda-runtime-cu12==12.1.105 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (12.1.105)
Requirement already satisfied: nvidia-cuda-cupti-cu12==12.1.105 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (12.1.105)
Requirement already satisfied: nvidia-cudnn-cu12==8.9.2.26 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (8.9.2.26)
Requirement already satisfied: nvidia-cublas-cu12==12.1.3.1 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (12.1.3.1)
Requirement already satisfied: nvidia-cufft-cu12==11.0.2.54 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (11.0.2.54)
Requirement already satisfied: nvidia-curand-cu12==10.3.2.106 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (10.3.2.106)
Requirement already satisfied: nvidia-cusolver-cu12==11.4.5.107 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (11.4.5.107)
Requirement already satisfied: nvidia-cusparse-cu12==12.1.0.106 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (12.1.0.106)
Requirement already satisfied: nvidia-nccl-cu12==2.18.1 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (2.18.1)
Requirement already satisfied: nvidia-nvtx-cu12==12.1.105 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (12.1.105)
Requirement already satisfied: triton==2.1.0 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from torch->flash-attn) (2.1.0)
Requirement already satisfied: nvidia-nvjitlink-cu12 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from nvidia-cusolver-cu12==11.4.5.107->torch->flash-attn) (12.4.127)
Requirement already satisfied: MarkupSafe>=2.0 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from jinja2->torch->flash-attn) (2.1.5)
Requirement already satisfied: mpmath>=0.19 in /home/xucg/anaconda3/envs/llama/lib/python3.10/site-packages (from sympy->torch->flash-attn) (1.3.0)
Using cached einops-0.8.0-py3-none-any.whl (43 kB)
Building wheels for collected packages: flash-attn
Building wheel for flash-attn (setup.py) ... done
Created wheel for flash-attn: filename=flash_attn-2.5.8-cp310-cp310-linux_x86_64.whl size=120616671 sha256=1e782463ba32d2193924771c940805d74c365435d4142df18a261fc5a2fdff82
Stored in directory: /home/xucg/.cache/pip/wheels/9b/5b/2b/dea8af4e954161c49ef1941938afcd91bb93689371ed12a226
Successfully built flash-attn
Installing collected packages: einops, flash-attn
Successfully installed einops-0.8.0 flash-attn-2.5.8
```
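A successful `pip install` does not by itself prove that the original `ImportError` is gone, since the compiled extension still has to load. A quick check, sketched as a hypothetical bash helper `py_pkg_version`, imports the package with the active interpreter and prints its `__version__`; the `PYTHON` override variable is an assumption of this sketch.

```bash
#!/usr/bin/env bash
# Hypothetical helper: import a Python package with the active interpreter
# ($PYTHON, default `python`) and print its __version__ ("unknown" if absent).
# Returns non-zero, with Python's traceback on stderr, if the import fails.
py_pkg_version() {
  "${PYTHON:-python}" -c '
import importlib, sys
mod = importlib.import_module(sys.argv[1])
print(getattr(mod, "__version__", "unknown"))
' "$1"
}
```

Inside the `llama` environment, `py_pkg_version flash_attn` should print the installed version (2.5.8 according to the log); a traceback instead means the package still cannot be imported.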