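Large models are all the rage lately, so I decided to try setting one up myself. This one is Whisper, open-sourced by OpenAI, which transcribes speech to text.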

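Installation

Install by following this document. My personal habit is to run the first pip command, then run the second one to pull in the remaining dependencies (mainly tiktoken).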

https://github.com/openai/whisper?tab=readme-ov-file

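```bash
pip install -U openai-whisper  # install the PyPI package (for me this was missing tiktoken)
pip install git+https://github.com/openai/whisper.git  # install the latest version plus its dependencies
pip install --upgrade --no-deps --force-reinstall git+https://github.com/openai/whisper.git  # use this one to update
```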

Install ffmpeg, which is used for transcoding:

```bash
# on Ubuntu or Debian
sudo apt update && sudo apt install ffmpeg

# on Arch Linux
sudo pacman -S ffmpeg

# on MacOS using Homebrew (https://brew.sh/)
brew install ffmpeg

# on Windows using Chocolatey (https://chocolatey.org/)
choco install ffmpeg

# on Windows using Scoop (https://scoop.sh/)
scoop install ffmpeg
```
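After installing, a quick sanity check can confirm the pieces are in place. This is a minimal sketch of my own (the helper name `check_whisper_env` is hypothetical): it only checks that the `whisper` and `tiktoken` modules can be found and that an `ffmpeg` executable is on `PATH`, since Whisper calls ffmpeg to decode audio.

```python
import importlib.util
import shutil


def check_whisper_env():
    """Hypothetical helper: report whether the pieces Whisper relies on are available."""
    return {
        # find_spec returns None when a module cannot be imported from this environment
        "whisper": importlib.util.find_spec("whisper") is not None,
        "tiktoken": importlib.util.find_spec("tiktoken") is not None,
        # Whisper runs the ffmpeg executable, so it must be found on PATH
        "ffmpeg": shutil.which("ffmpeg") is not None,
    }


for name, ok in check_whisper_env().items():
    print(f"{name:9s} {'OK' if ok else 'MISSING'}")
```

If `tiktoken` shows as MISSING, the second pip command above is the one to run.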

Testing the models

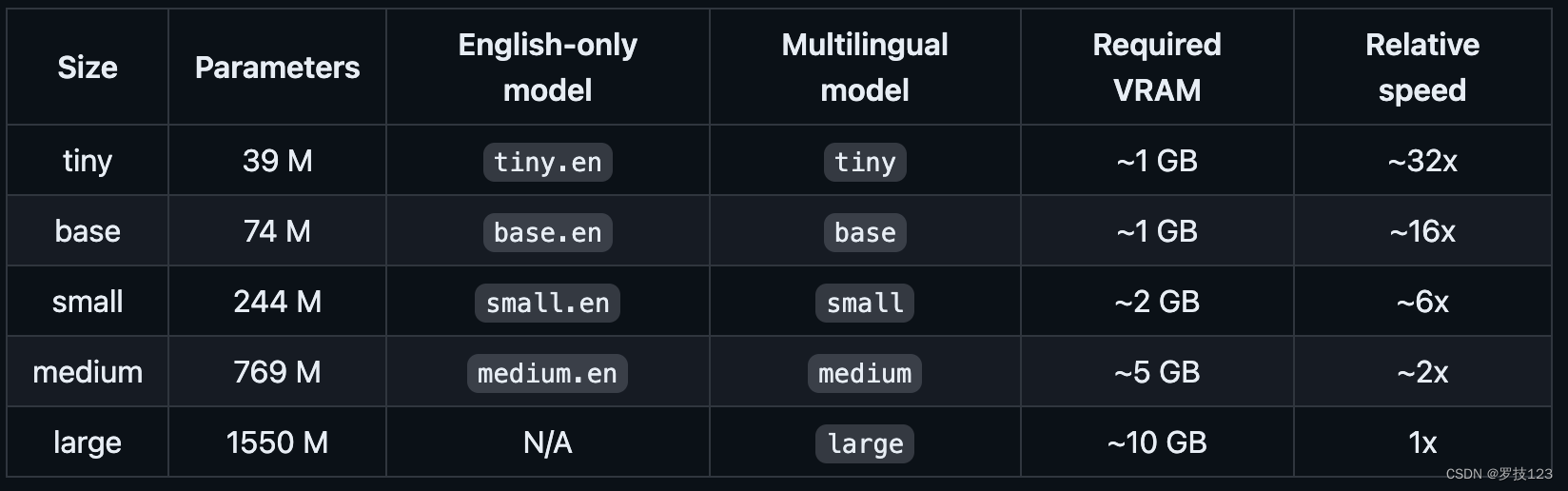

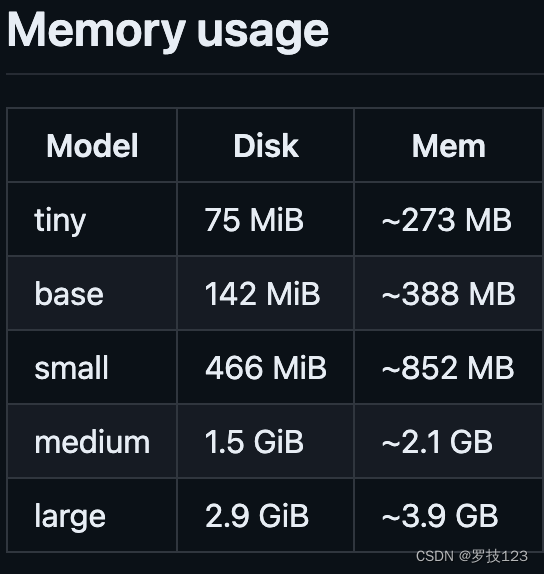

The default model is base. The available models and the VRAM each one needs:

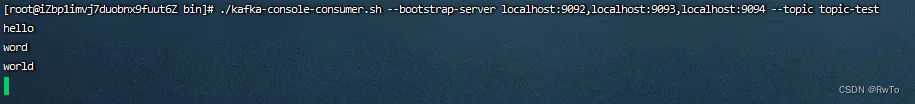

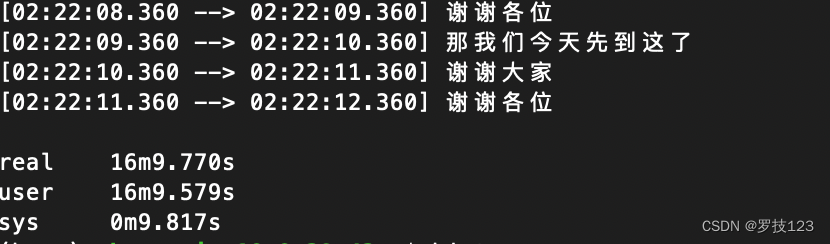

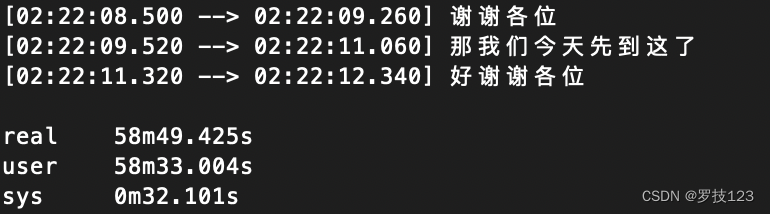
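To pick a model for a given GPU, a small lookup is enough. The figures below are the approximate VRAM requirements listed in the openai/whisper README (assumed to match the table above), so treat them as rough guides; `largest_model_that_fits` is a hypothetical helper.

```python
from typing import Optional

# Approximate VRAM needs in GB, as listed in the openai/whisper README.
# Ordered from the smallest model to the largest.
APPROX_VRAM_GB = {
    "tiny": 1,
    "base": 1,
    "small": 2,
    "medium": 5,
    "large": 10,
}


def largest_model_that_fits(available_vram_gb: float) -> Optional[str]:
    """Return the largest model whose approximate VRAM need fits, else None."""
    fitting = [name for name, need in APPROX_VRAM_GB.items() if need <= available_vram_gb]
    # Dicts keep insertion order, so the last fitting entry is the largest model.
    return fitting[-1] if fitting else None


print(largest_model_that_fits(16))  # a Tesla T4 has 16 GB -> large
print(largest_model_that_fits(4))   # -> small
```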

```
(base) ubuntu@ip-10-0-29-42:~$ time whisper 1.mp4
Detecting language using up to the first 30 seconds. Use --language to specify the language
Detected language: Chinese
```

Use `--model` to choose the model; here I use the largest one:

```
(base) ubuntu@ip-10-0-29-42:~$ time whisper 1.mp4 --model large
Detecting language using up to the first 30 seconds. Use --language to specify the language
Detected language: Chinese
```

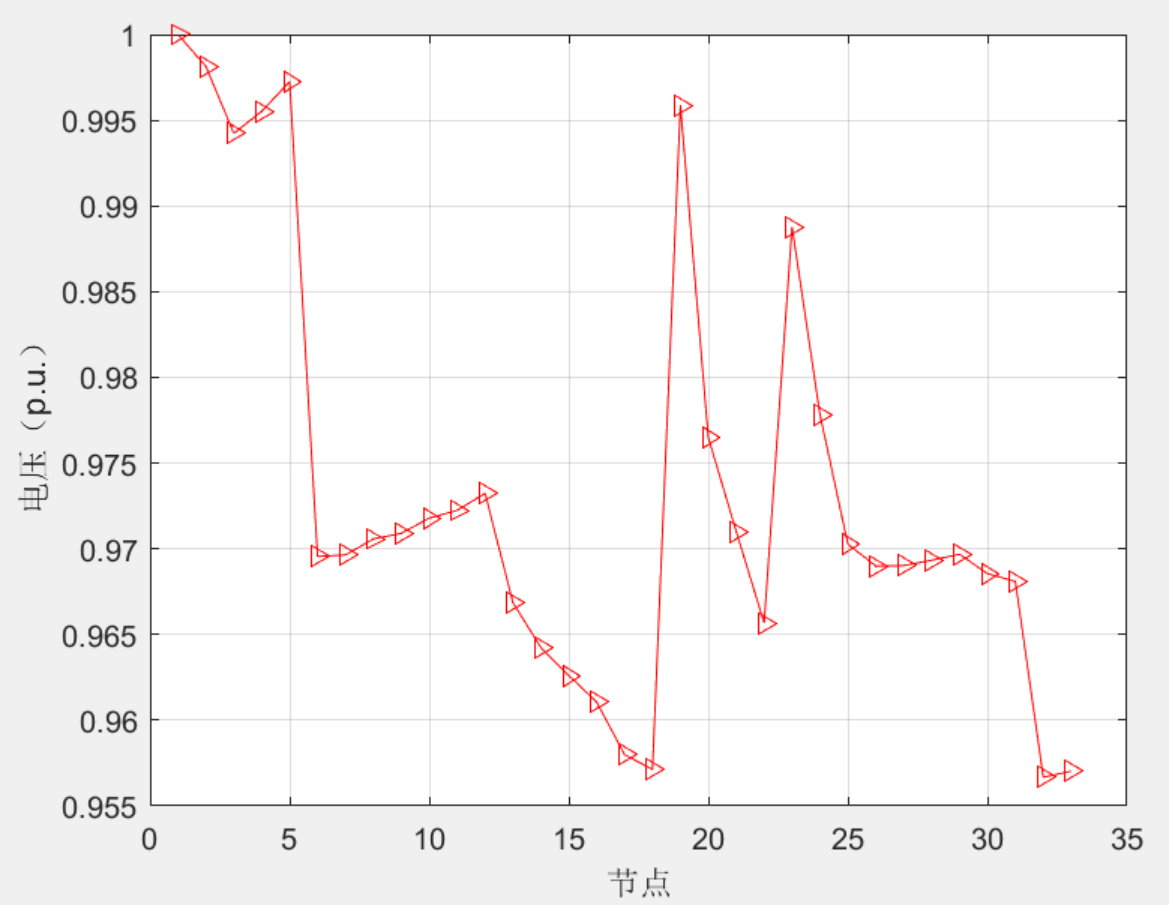

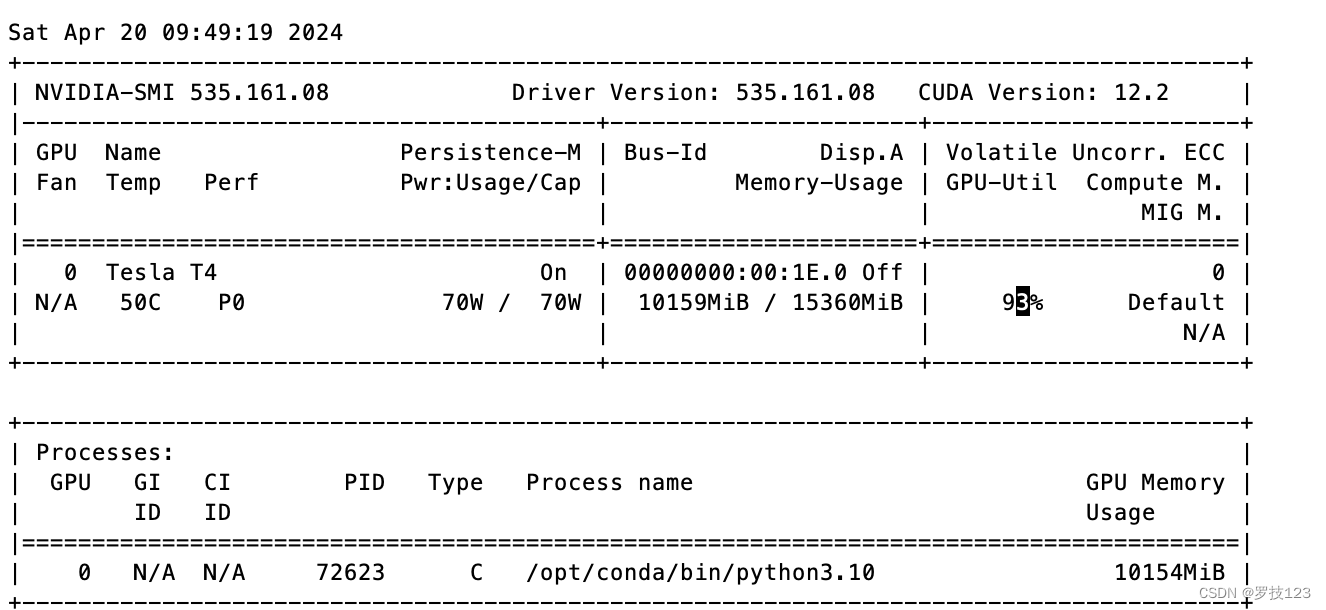

VRAM usage of the large model:

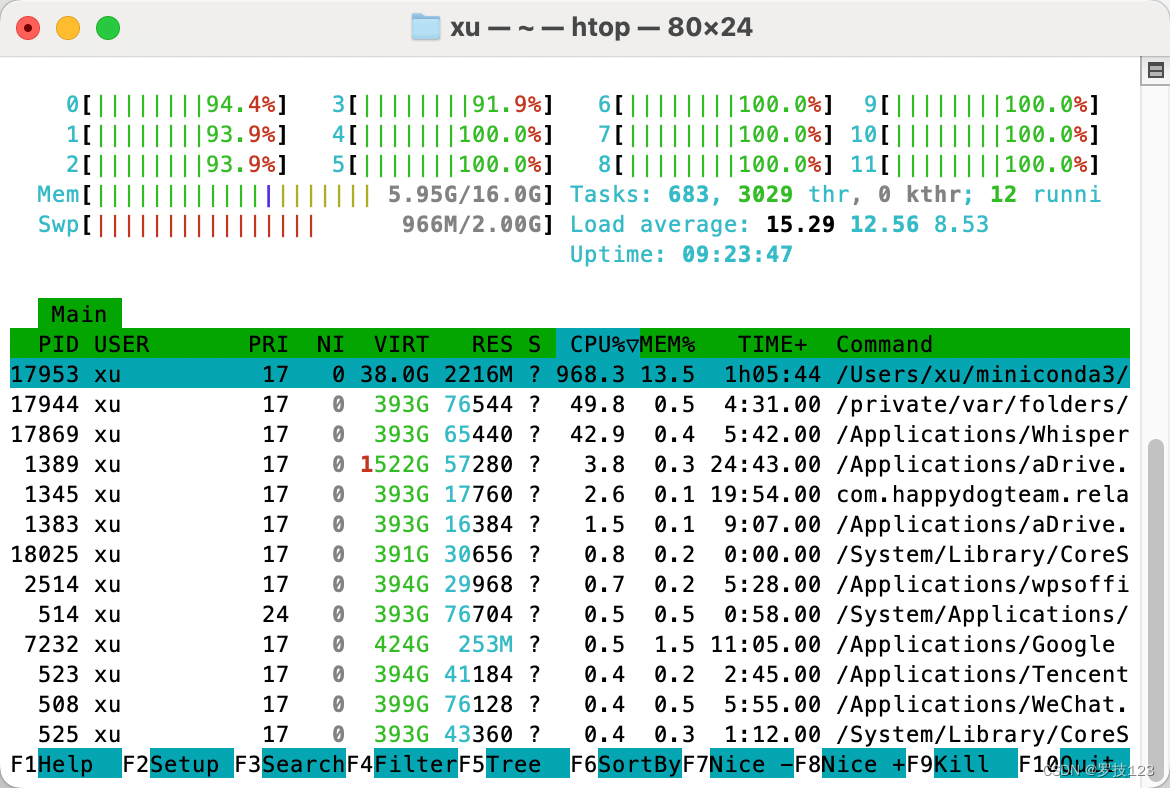

By default this runs on CUDA, but it does not support MPS on Apple Silicon.

htop shows that inference still runs on the CPU, and passing `--device mps` does not work either.
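The runs above can also be driven from a script. This is a sketch with a hypothetical helper, `build_whisper_cmd`, that only assembles the argument list for the `whisper` CLI using the `--model`, `--language` and `--device` options; giving `--language` up front skips the "Detecting language" step seen in the logs.

```python
import shlex
from typing import List, Optional


def build_whisper_cmd(media: str, model: str = "large", language: Optional[str] = None,
                      device: Optional[str] = None) -> List[str]:
    """Hypothetical helper: build an argv list for the openai-whisper CLI."""
    cmd = ["whisper", media, "--model", model]
    if language:
        # With a language given, Whisper skips detecting it from the first 30 seconds
        cmd += ["--language", language]
    if device:
        # e.g. "cuda" or "cpu"; per the notes above, "mps" did not work
        cmd += ["--device", device]
    return cmd


cmd = build_whisper_cmd("1.mp4", model="large", language="zh")
print(shlex.join(cmd))  # whisper 1.mp4 --model large --language zh
# To actually run it: subprocess.run(cmd, check=True)
```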

Cleaning up with a script

Next, I use a script to strip out the timestamps and the extra whitespace and line breaks.

```python
def remove_timestamps_and_empty_lines(input_file_path, output_file_path):
    # Read the file
    with open(input_file_path, "r", encoding="utf-8") as file:
        lines = file.readlines()

    # Remove the "[start --> end] " timestamp from each line and drop lines with no text left
    cleaned_lines = []
    for line in lines:
        if "] " not in line:
            continue  # no timestamp prefix on this line
        text = line.split("] ", 1)[1].strip()  # keep only what follows the timestamp
        if text:
            cleaned_lines.append(text)

    # Write the cleaned, non-empty lines to a new file, joined with commas
    with open(output_file_path, "w", encoding="utf-8") as file:
        file.write(",".join(cleaned_lines))


# Example usage
input_file_path = "1.txt"  # Specify the path to your input file
output_file_path = "2.txt"  # Specify the path to your output file
remove_timestamps_and_empty_lines(input_file_path, output_file_path)
```

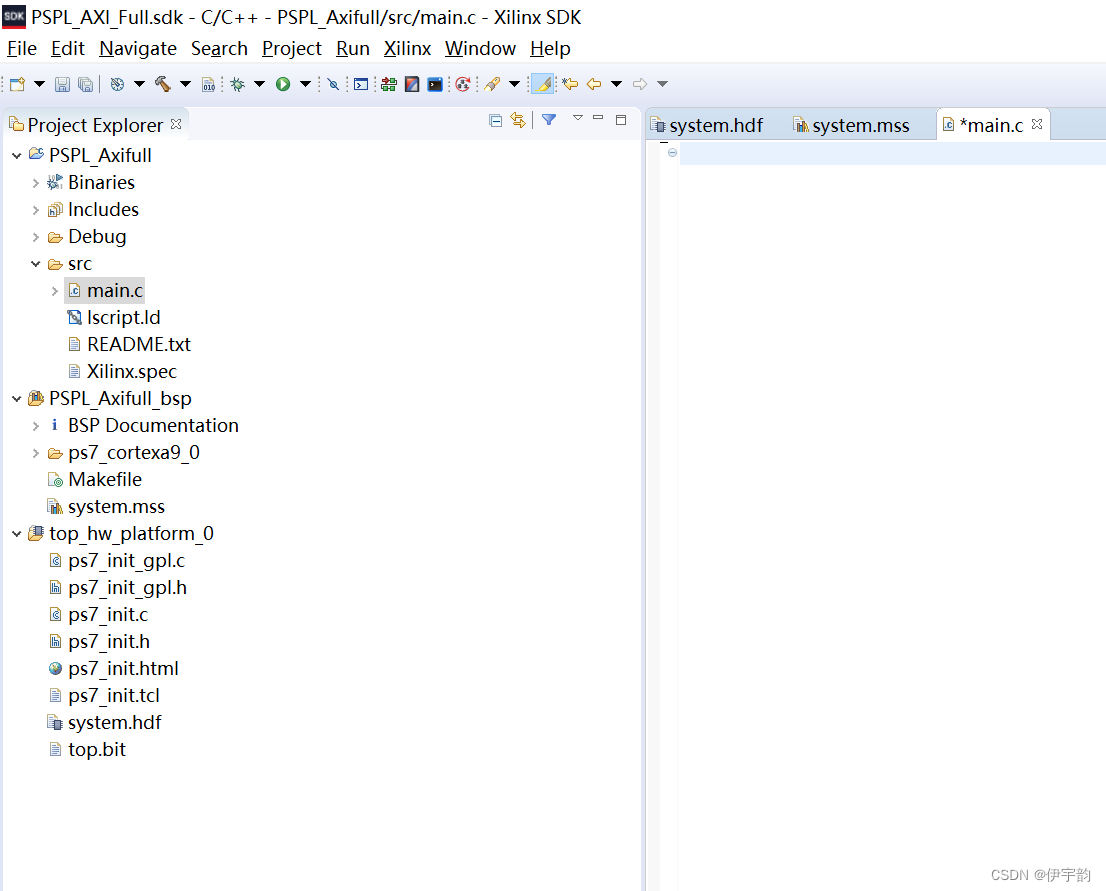
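An alternative that avoids scraping timestamps from text: ask the CLI for `--output_format json` and join the `text` field of each entry in `segments`. A minimal sketch, assuming that JSON layout; the helper names are hypothetical and the sample data is made up for illustration.

```python
import json


def join_segments(result, sep=","):
    """Join the text of each Whisper segment, skipping empty ones."""
    texts = (seg["text"].strip() for seg in result.get("segments", []))
    return sep.join(t for t in texts if t)


def join_segments_from_file(json_path, sep=","):
    """Load a Whisper JSON result from disk and join its segment texts."""
    with open(json_path, "r", encoding="utf-8") as f:
        return join_segments(json.load(f), sep)


# In-memory sample shaped like Whisper's JSON output (values invented for illustration)
sample = {"segments": [
    {"start": 0.0, "end": 2.0, "text": " 你好"},
    {"start": 2.0, "end": 3.0, "text": "  "},
    {"start": 3.0, "end": 5.0, "text": " 世界"},
]}
print(join_segments(sample))  # 你好,世界
```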

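Taking advantage of Apple Silicon

So as not to leave Apple Silicon's performance unused, I searched YouTube and found a project that can use Apple's GPU for acceleration: https://www.youtube.com/watch?v=lPg9NbFrFPI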

GitHub repo:

https://github.com/ggerganov/whisper.cpp

Installation:

```bash
# Install Python dependencies needed for the creation of the Core ML model:
pip install ane_transformers
pip install openai-whisper
pip install coremltools

# Build with Core ML support (using Makefile)
make clean
WHISPER_COREML=1 make -j

# Download the model
make base

# Generate a Core ML model
./models/generate-coreml-model.sh base
# This will generate the folder models/ggml-base-encoder.mlmodelc
```

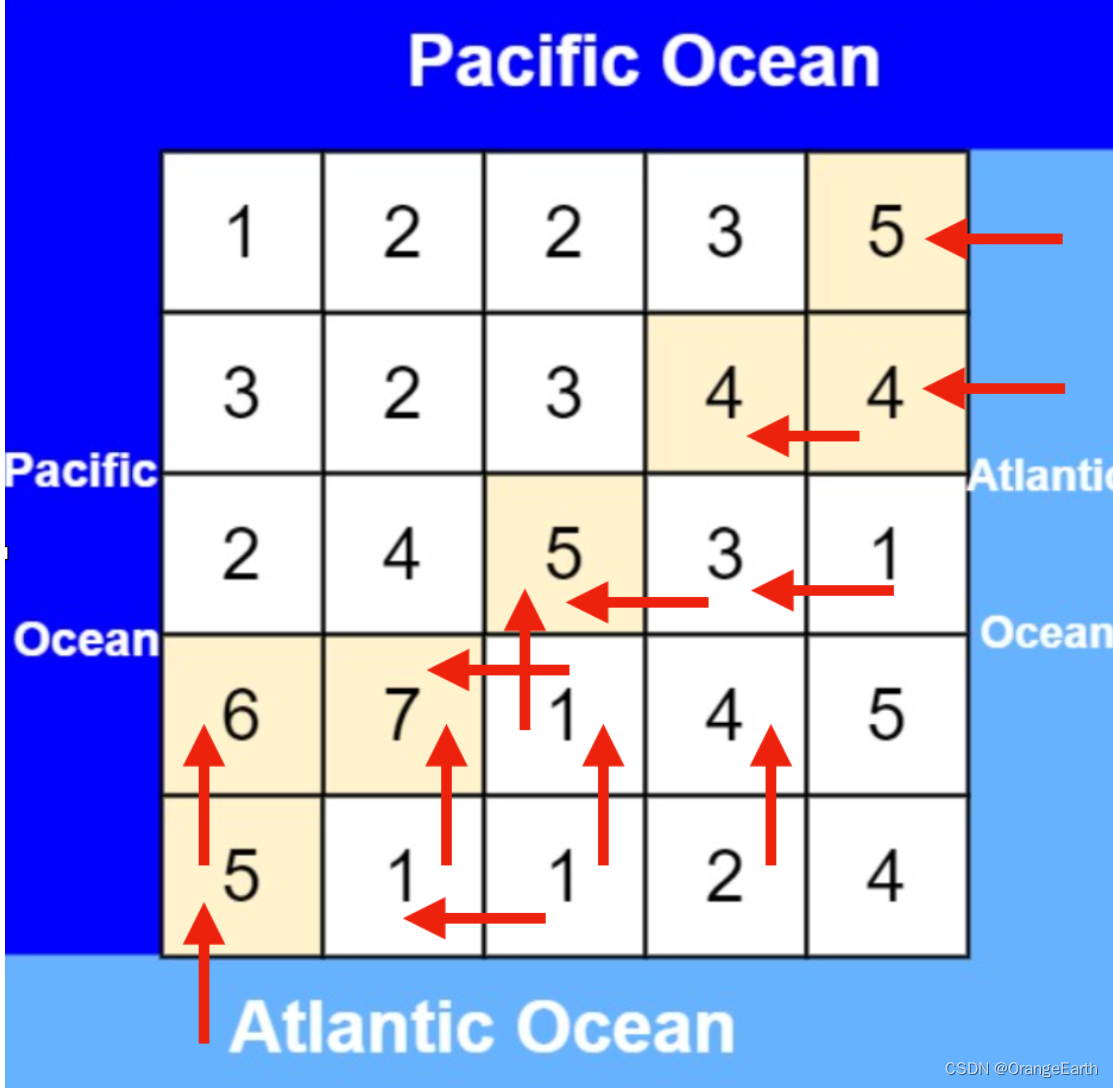

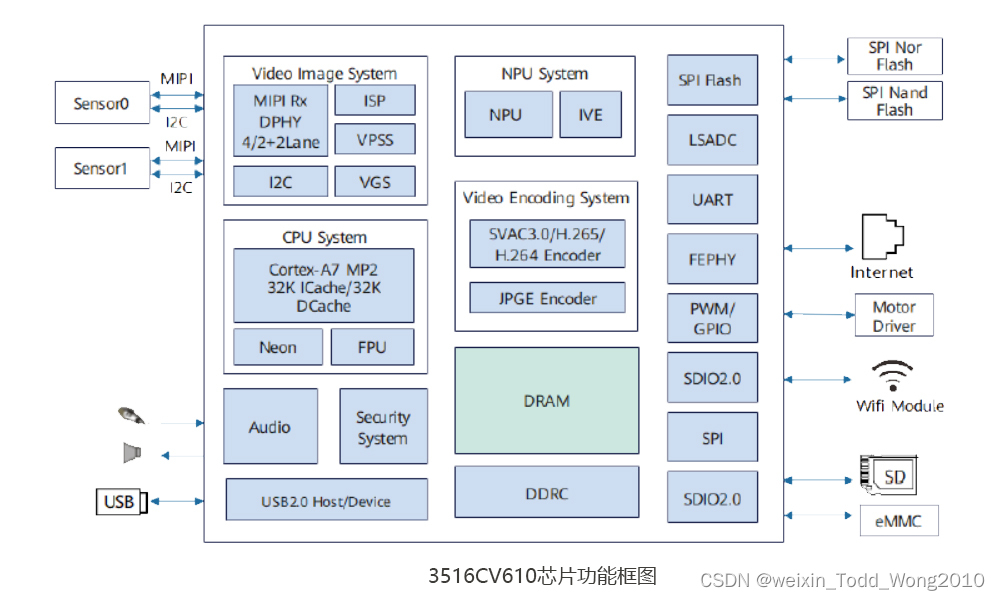
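As I understand it, a Core ML build of whisper.cpp looks for the encoder folder next to the ggml model file, with `.bin` replaced by `-encoder.mlmodelc`. The sketch below assumes that naming (quantized model files are not handled) and just checks whether the folder exists; `coreml_encoder_for` is a hypothetical helper.

```python
from pathlib import Path


def coreml_encoder_for(model_bin):
    """Guess the Core ML encoder folder for a ggml model file.

    Assumed naming: models/ggml-<model>.bin -> models/ggml-<model>-encoder.mlmodelc
    """
    p = Path(model_bin)
    return p.with_name(p.stem + "-encoder.mlmodelc")


enc = coreml_encoder_for("models/ggml-base.bin")
print(enc.as_posix())  # models/ggml-base-encoder.mlmodelc
print("Core ML encoder present:", enc.is_dir())
```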

Memory usage and model size for each model:

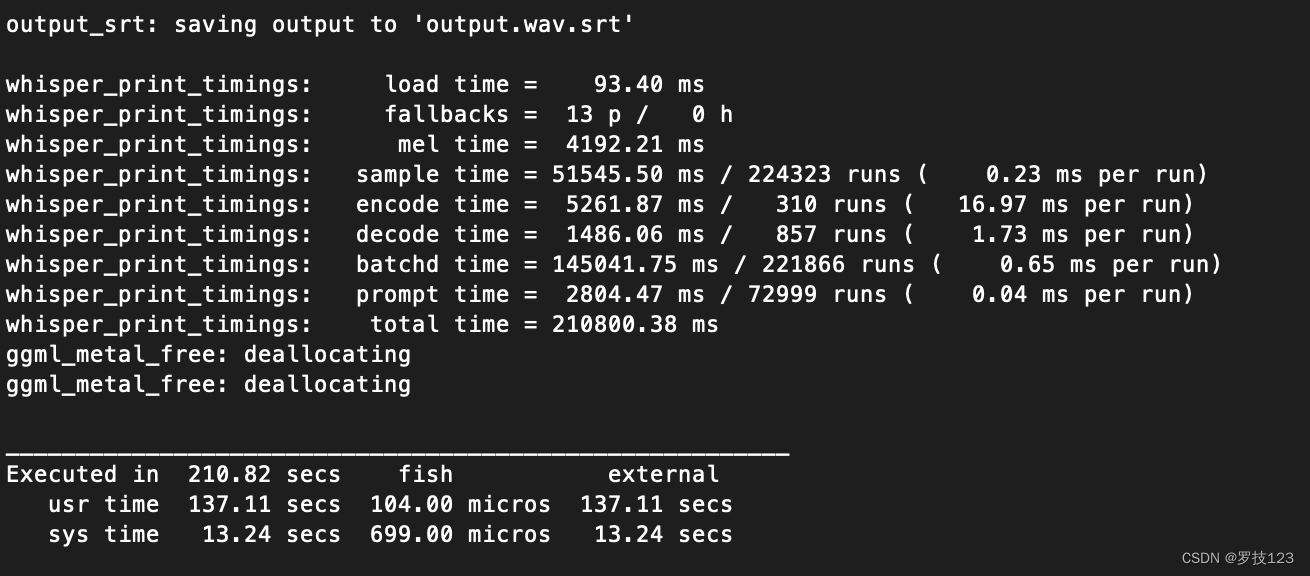

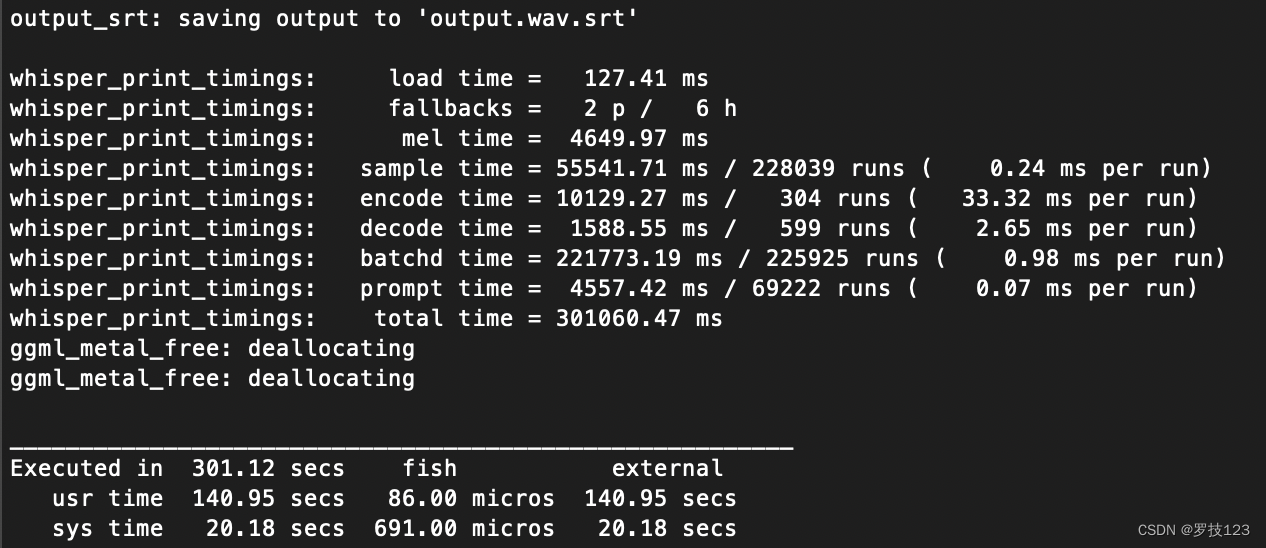

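Running inference on the same two-and-a-half-hour video gives the following times:

```bash
# First transcode with ffmpeg to 16 kHz mono WAV
ffmpeg -i 1.mp4 -ar 16000 -ac 1 output.wav

# Run with ggml-{model}.bin
time ./main -m models/ggml-base.bin -l zh -osrt -f 'output.wav'
```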
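The two commands above can be scripted to benchmark several models in a row. This is a sketch with hypothetical helper names; it reuses exactly the flags shown above (`-ar 16000 -ac 1` for ffmpeg, `-m`, `-l`, `-osrt`, `-f` for `./main`) and assumes it is run from the whisper.cpp directory.

```python
import shlex
import subprocess
import time


def transcode_cmd(src, dst="output.wav"):
    # Same ffmpeg call as above: resample to 16 kHz, downmix to one channel
    return ["ffmpeg", "-i", src, "-ar", "16000", "-ac", "1", dst]


def whisper_cpp_cmd(model, wav="output.wav", lang="zh"):
    # -osrt: also write the result as an .srt subtitle file
    return ["./main", "-m", f"models/ggml-{model}.bin", "-l", lang, "-osrt", "-f", wav]


def run_timed(cmd):
    """Run a command and return its wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True)
    return time.perf_counter() - start


print(shlex.join(transcode_cmd("1.mp4")))
for model in ["tiny", "base"]:
    print(shlex.join(whisper_cpp_cmd(model)))
    # elapsed = run_timed(whisper_cpp_cmd(model))  # uncomment to actually run
```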

On a Tesla T4, this same video took 17 minutes with the base model and 1 hour with large (original Whisper).

tiny: 210 s

base: 301 s

large-v3:

![image 14](https://img-blog.csdnimg.cn/direct/46d8e263aa0c44efbf5af1102ae09647.png)
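These timings are easier to compare as real-time factors (seconds of audio processed per second of wall-clock time). A small sketch, assuming the video is exactly 2.5 hours long; large-v3 is left out because no number is given for it in the text.

```python
def realtime_factor(media_seconds: float, elapsed_seconds: float) -> float:
    """Seconds of audio transcribed per second of wall-clock time."""
    return media_seconds / elapsed_seconds


VIDEO_SECONDS = 2.5 * 60 * 60  # the roughly 2.5-hour test video, assumed exact

timings = {
    "whisper.cpp tiny": 210,
    "whisper.cpp base": 301,
    "openai-whisper base on Tesla T4": 17 * 60,
    "openai-whisper large on Tesla T4": 60 * 60,
}

for name, elapsed in timings.items():
    print(f"{name}: {realtime_factor(VIDEO_SECONDS, elapsed):.1f}x real time")
```

By this rough measure, whisper.cpp base finished about 3.4 times sooner than openai-whisper base on the T4 (1020 s vs 301 s), though the two runs used different hardware.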