源码地址

1. 源码概述

源码里一共包含了5个py文件

- 单机模型(Normal_ResNet_HAM10000.py)

- 联邦模型(FL_ResNet_HAM10000.py)

- 本地模拟的SFLV1(SFLV1_ResNet_HAM10000.py)

- 网络socket下的SFLV2(SFLV2_ResNet_HAM10000.py)

- 使用了DP+PixelDP隐私技术(SL_ResNet_HAM10000.py)

使用的数据集是:HAM10000 数据集是常见色素性皮肤病变的多源皮肤图像大集合。

做的是图像分类的工作。

2. Normal_ResNet_HAM10000.py

这是一个基础模型,可以在单机上进行训练和验证(有点基础的同学应该都可以看懂)。让我们来分析一下这个文件中一些主要类和方法:

2.1 SkinData(Dataset)

自定义的数据集,继承自Dataset,主要实现

class SkinData(Dataset):

def __init__(self, df, transform = None):

self.df = df

self.transform = transform

def __len__(self):

return len(self.df)

def __getitem__(self, index):

X = Image.open(self.df['path'][index]).resize((64, 64))

y = torch.tensor(int(self.df['target'][index]))

// 进行数据增强

if self.transform:

X = self.transform(X)

return X, y

2.2 ResNet18模型

def conv3x3(in_planes, out_planes, stride=1):

"3x3 convolution with padding"

return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,

padding=1, bias=False)

class BasicBlock(nn.Module):

expansion = 1

def __init__(self, inplanes, planes, stride=1, downsample=None):

super(BasicBlock, self).__init__()

self.conv1 = conv3x3(inplanes, planes, stride)

self.bn1 = nn.BatchNorm2d(planes)

self.relu = nn.ReLU(inplace=True)

self.conv2 = conv3x3(planes, planes)

self.bn2 = nn.BatchNorm2d(planes)

self.downsample = downsample

self.stride = stride

def forward(self, x):

residual = x

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

if self.downsample is not None:

residual = self.downsample(x)

out += residual

out = self.relu(out)

return out

class ResNet18(nn.Module):

def __init__(self, block, layers, num_classes=1000):

self.inplanes = 64

super(ResNet18, self).__init__()

self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3,

bias=False)

self.bn1 = nn.BatchNorm2d(64)

self.relu = nn.ReLU(inplace=True)

self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

self.layer1 = self._make_layer(block, 64, layers[0])

self.layer2 = self._make_layer(block, 128, layers[1], stride=2)

self.layer3 = self._make_layer(block, 256, layers[2], stride=2)

self.layer4 = self._make_layer(block, 512, layers[3], stride=2)

self.avgpool = nn.AvgPool2d(7)

self.fc = nn.Linear(512 * block.expansion, num_classes)

for m in self.modules():

if isinstance(m, nn.Conv2d):

n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels

m.weight.data.normal_(0, math.sqrt(2. / n))

elif isinstance(m, nn.BatchNorm2d):

m.weight.data.fill_(1)

m.bias.data.zero_()

def _make_layer(self, block, planes, blocks, stride=1):

downsample = None

if stride != 1 or self.inplanes != planes * block.expansion:

downsample = nn.Sequential(

nn.Conv2d(self.inplanes, planes * block.expansion,

kernel_size=1, stride=stride, bias=False),

nn.BatchNorm2d(planes * block.expansion),

)

layers = []

layers.append(block(self.inplanes, planes, stride, downsample))

self.inplanes = planes * block.expansion

for i in range(1, blocks):

layers.append(block(self.inplanes, planes))

return nn.Sequential(*layers)

def forward(self, x):

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

x = self.avgpool(x)

x = x.view(x.size(0), -1)

x = self.fc(x)

return x

2.3 训练+验证

def calculate_accuracy(fx, y):

preds = fx.max(1, keepdim=True)[1]

correct = preds.eq(y.view_as(preds)).sum()

acc = correct.float()/preds.shape[0]

return acc

#==========================================================================================================================

def train(model, device, iterator, optimizer, criterion):

epoch_loss = 0

epoch_acc = 0

model.train()

ell = len(iterator)

for (x, y) in iterator:

x = x.to(device)

y = y.to(device)

optimizer.zero_grad() # initialize gradients to zero

# ------------- Forward propagation ----------

fx = model(x)

loss = criterion(fx, y)

acc = calculate_accuracy (fx , y)

# -------- Backward propagation -----------

loss.backward()

optimizer.step()

epoch_loss += loss.item()

epoch_acc += acc.item()

return epoch_loss / ell, epoch_acc / ell

def evaluate(model, device, iterator, criterion):

epoch_loss = 0

epoch_acc = 0

model.eval()

ell = len(iterator)

with torch.no_grad():

for (x,y) in iterator:

x = x.to(device)

y = y.to(device)

optimizer.zero_grad()

fx = model(x)

loss = criterion(fx, y)

acc = calculate_accuracy (fx , y)

epoch_loss += loss.item()

epoch_acc += acc.item()

return epoch_loss/ell, epoch_acc/ell

2.4 组合代码进行训练

epochs = 200 #迭代次数

LEARNING_RATE = 0.0001 #学习率

criterion = nn.CrossEntropyLoss() #损失函数

optimizer = torch.optim.Adam(net_glob.parameters(), lr = LEARNING_RATE) #优化器

loss_train_collect = []

loss_test_collect = []

acc_train_collect = []

acc_test_collect = []

start_time = time.time()

for epoch in range(epochs):

train_loss, train_acc = train(net_glob, device, train_iterator, optimizer, criterion) #训练

test_loss, test_acc = evaluate(net_glob, device, test_iterator, criterion) #验证

loss_train_collect.append(train_loss)

loss_test_collect.append(test_loss)

acc_train_collect.append(train_acc)

acc_test_collect.append(test_acc)

prRed(f'Train => Epoch: {epoch} \t Acc: {train_acc*100:05.2f}% \t Loss: {train_loss:.3f}')

prGreen(f'Test => \t Acc: {test_acc*100:05.2f}% \t Loss: {test_loss:.3f}')

elapsed = (time.time() - start_time)/60

print(f'Total Training Time: {elapsed:.2f} min')

3. FL_ResNet_HAM10000.py

接下来来解读这个文件:这个文件是一个本地模拟联邦的文件。模型大体上的代码是差不多的,让我们来看一下差异之处。

3.1 DatasetSplit

这是一个数据集,使用idxs来切分不同的数据。

class DatasetSplit(Dataset):

def __init__(self, dataset, idxs):

self.dataset = dataset

self.idxs = list(idxs)

def __len__(self):

# 数据的长度是idx列表的长度

return len(self.idxs)

def __getitem__(self, item):

image, label = self.dataset[self.idxs[item]]

return image, label

3.2 LocalUpdate

与训练和测试有关的客户端功能

class LocalUpdate(object):

def __init__(self, idx, lr, device, dataset_train = None, dataset_test = None, idxs = None, idxs_test = None):

self.idx = idx #本地客户端编号

self.device = device

self.lr = lr

self.local_ep = 1

self.loss_func = nn.CrossEntropyLoss()

self.selected_clients = []

self.ldr_train = DataLoader(DatasetSplit(dataset_train, idxs), batch_size = 256, shuffle = True)

self.ldr_test = DataLoader(DatasetSplit(dataset_test, idxs_test), batch_size = 256, shuffle = True)

def train(self, net):

net.train()

......

return net.state_dict(), sum(epoch_loss) / len(epoch_loss), sum(epoch_acc) / len(epoch_acc)

def evaluate(self, net):

net.eval()

.....

return sum(epoch_loss) / len(epoch_loss), sum(epoch_acc) / len(epoch_acc)

如何生成对应的数据集的idx,即如何模拟各个客户端拥有一部分数据,通过dataset_iid(dataset_train, num_users)这个函数完成。

def dataset_iid(dataset, num_users):

num_items = int(len(dataset) / num_users)

dict_users, all_idxs = {}, [i for i in range(len(dataset))]

for i in range(num_users):

# 随机从集合中获取num_items个idx

dict_users[i] = set(np.random.choice(all_idxs, num_items, replace=False))

# 从集合中删除已经分配掉的idx

all_idxs = list(set(all_idxs) - dict_users[i])

return dict_users

3.3 代码整合

net_glob.train() #将模型切换为训练模式

w_glob = net_glob.state_dict() #拷贝模型的权重

loss_train_collect = []

acc_train_collect = []

loss_test_collect = []

acc_test_collect = []

for iter in range(epochs):

#

w_locals, loss_locals_train, acc_locals_train, loss_locals_test, acc_locals_test = [], [], [], [], []

m = max(int(frac * num_users), 1)

idxs_users = np.random.choice(range(num_users), m, replace = False) #生成用户idxs的序列

# 对于每一个客户端进行模型训练

for idx in idxs_users: # each client

local = LocalUpdate(idx, lr, device, dataset_train = dataset_train, dataset_test = dataset_test, idxs = dict_users[idx], idxs_test = dict_users_test[idx])

# Training ------------------收集每一个客户端的w, loss_train, acc_train

w, loss_train, acc_train = local.train(net = copy.deepcopy(net_glob).to(device))# 使用服务端的参数进行模型的训练,经过该客户端本地的数据训练后产生一个新的模型参数

w_locals.append(copy.deepcopy(w))

loss_locals_train.append(copy.deepcopy(loss_train))

acc_locals_train.append(copy.deepcopy(acc_train))

# Testing -------------------收集每一个客户端的loss_test, acc_test

loss_test, acc_test = local.evaluate(net = copy.deepcopy(net_glob).to(device))

loss_locals_test.append(copy.deepcopy(loss_test))

acc_locals_test.append(copy.deepcopy(acc_test))

# Federation process 聚合各个客户端的w

w_glob = FedAvg(w_locals)

print("------------------------------------------------")

print("------ Federation process at Server-Side -------")

print("------------------------------------------------")

# update global model --- copy weight to net_glob -- distributed the model to all users //更新全局模型

net_glob.load_state_dict(w_glob)

# Train/Test accuracy 添加训练和测试的准确率

acc_avg_train = sum(acc_locals_train) / len(acc_locals_train)

acc_train_collect.append(acc_avg_train)

acc_avg_test = sum(acc_locals_test) / len(acc_locals_test)

acc_test_collect.append(acc_avg_test)

# Train/Test loss 添加训练和测试的loss

loss_avg_train = sum(loss_locals_train) / len(loss_locals_train)

loss_train_collect.append(loss_avg_train)

loss_avg_test = sum(loss_locals_test) / len(loss_locals_test)

loss_test_collect.append(loss_avg_test)

print('------------------- SERVER ----------------------------------------------')

print('Train: Round {:3d}, Avg Accuracy {:.3f} | Avg Loss {:.3f}'.format(iter, acc_avg_train, loss_avg_train))

print('Test: Round {:3d}, Avg Accuracy {:.3f} | Avg Loss {:.3f}'.format(iter, acc_avg_test, loss_avg_test))

print('-------------------------------------------------------------------------')

#===================================================================================

print("Training and Evaluation completed!")

4. SFLV1_ResNet_HAM10000.py

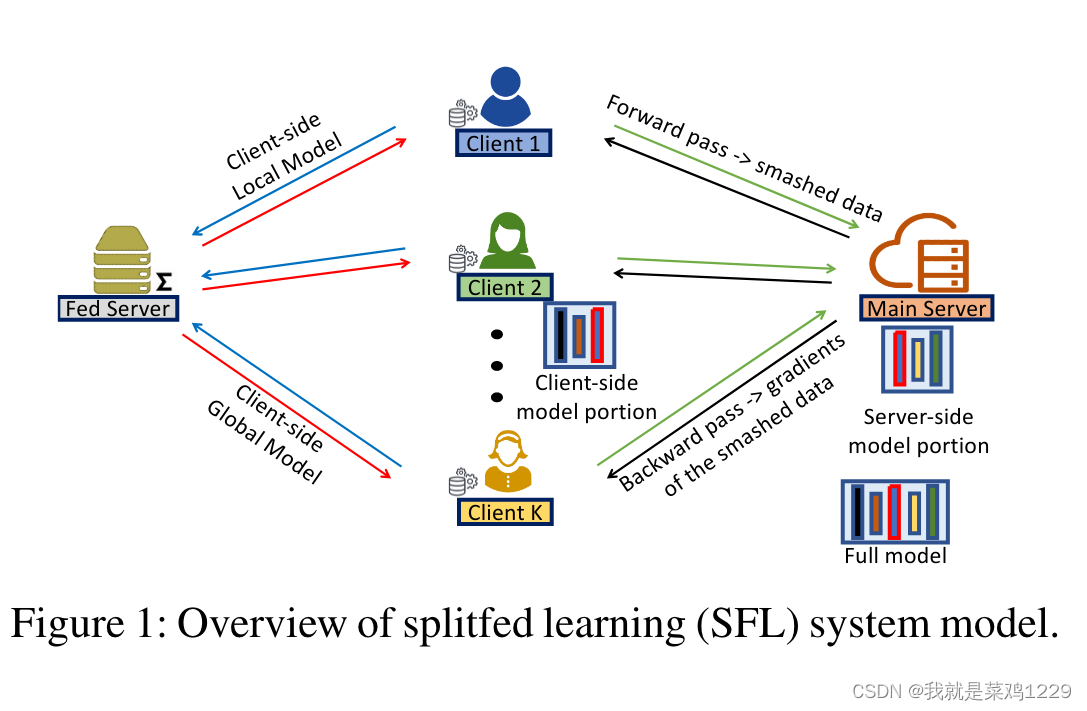

这个文件是论文中主要提到的模型,实现了拆分学习和联邦学习的结合。

4.1 ResNet18_client_side

这段代码定义了客户端部分的数据提取部分:

class ResNet18_client_side(nn.Module):

def __init__(self):

super(ResNet18_client_side, self).__init__()

self.layer1 = nn.Sequential (

nn.Conv2d(3, 64, kernel_size = 7, stride = 2, padding = 3, bias = False),

nn.BatchNorm2d(64),

nn.ReLU (inplace = True),

nn.MaxPool2d(kernel_size = 3, stride = 2, padding =1),

)

self.layer2 = nn.Sequential (

nn.Conv2d(64, 64, kernel_size = 3, stride = 1, padding = 1, bias = False),

nn.BatchNorm2d(64),

nn.ReLU (inplace = True),

nn.Conv2d(64, 64, kernel_size = 3, stride = 1, padding = 1),

nn.BatchNorm2d(64),

)

for m in self.modules():

if isinstance(m, nn.Conv2d):

n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels

m.weight.data.normal_(0, math.sqrt(2. / n))

elif isinstance(m, nn.BatchNorm2d):

m.weight.data.fill_(1)

m.bias.data.zero_()

def forward(self, x):

resudial1 = F.relu(self.layer1(x))

out1 = self.layer2(resudial1)

out1 = out1 + resudial1 # adding the resudial inputs -- downsampling not required in this layer

resudial2 = F.relu(out1)

return resudial2

4.2 ResNet18_server_side

我们可以看出客户端的模型+服务器的模型才是一个完整的模型

class ResNet18_server_side(nn.Module):

def __init__(self, block, num_layers, classes):

super(ResNet18_server_side, self).__init__()

self.input_planes = 64

self.layer3 = nn.Sequential (

nn.Conv2d(64, 64, kernel_size = 3, stride = 1, padding = 1),

nn.BatchNorm2d(64),

nn.ReLU (inplace = True),

nn.Conv2d(64, 64, kernel_size = 3, stride = 1, padding = 1),

nn.BatchNorm2d(64),

)

self.layer4 = self._layer(block, 128, num_layers[0], stride = 2)

self.layer5 = self._layer(block, 256, num_layers[1], stride = 2)

self.layer6 = self._layer(block, 512, num_layers[2], stride = 2)

self. averagePool = nn.AvgPool2d(kernel_size = 7, stride = 1)

self.fc = nn.Linear(512 * block.expansion, classes)

for m in self.modules():

if isinstance(m, nn.Conv2d):

n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels

m.weight.data.normal_(0, math.sqrt(2. / n))

elif isinstance(m, nn.BatchNorm2d):

m.weight.data.fill_(1)

m.bias.data.zero_()

def _layer(self, block, planes, num_layers, stride = 2):

dim_change = None

if stride != 1 or planes != self.input_planes * block.expansion:

dim_change = nn.Sequential(nn.Conv2d(self.input_planes, planes*block.expansion, kernel_size = 1, stride = stride),

nn.BatchNorm2d(planes*block.expansion))

netLayers = []

netLayers.append(block(self.input_planes, planes, stride = stride, dim_change = dim_change))

self.input_planes = planes * block.expansion

for i in range(1, num_layers):

netLayers.append(block(self.input_planes, planes))

self.input_planes = planes * block.expansion

return nn.Sequential(*netLayers)

def forward(self, x):

out2 = self.layer3(x)

out2 = out2 + x # adding the resudial inputs -- downsampling not required in this layer

x3 = F.relu(out2)

x4 = self. layer4(x3)

x5 = self.layer5(x4)

x6 = self.layer6(x5)

x7 = F.avg_pool2d(x6, 7)

x8 = x7.view(x7.size(0), -1)

y_hat =self.fc(x8)

return y_hat

4.3 服务器端的训练函数

# fx_client 客户端提取后的输出

# y 对应的标签

# l_epoch_count epoch的总数

# l_epoch 当前是第i轮epoch

# idx 客户端标识

# len_batch batch的大小

def train_server(fx_client, y, l_epoch_count, l_epoch, idx, len_batch):

#声明全局变量,方便直接进行修改外部同名变量

global net_model_server, criterion, optimizer_server, device, batch_acc_train, batch_loss_train, l_epoch_check, fed_check

global loss_train_collect, acc_train_collect, count1, acc_avg_all_user_train, loss_avg_all_user_train, idx_collect, w_locals_server, w_glob_server, net_server

global loss_train_collect_user, acc_train_collect_user, lr

net_server = copy.deepcopy(net_model_server[idx]).to(device)#根据idx获取对应的服务端的模型

net_server.train()

optimizer_server = torch.optim.Adam(net_server.parameters(), lr = lr)

# train and update

optimizer_server.zero_grad()

fx_client = fx_client.to(device)# 将客户端返回的中间数据放入device

y = y.to(device)

#---------forward prop模型推理-------------

fx_server = net_server(fx_client)

# calculate loss

loss = criterion(fx_server, y)

# calculate accuracy

acc = calculate_accuracy(fx_server, y)

#--------backward prop--------------

loss.backward()

dfx_client = fx_client.grad.clone().detach()#获得模型的梯度并返回

optimizer_server.step()

batch_loss_train.append(loss.item())

batch_acc_train.append(acc.item())

# 更新当前轮次对应的server-side模型

net_model_server[idx] = copy.deepcopy(net_server)

# count1: to track the completion of the local batch associated with one client

count1 += 1

if count1 == len_batch:# 判断是否完成一个本地轮次:当count1等于len_batch时,计算本批次平均精度和损失,清空训练损失和精度集合,重置计数器,并打印训练信息。

acc_avg_train = sum(batch_acc_train)/len(batch_acc_train)

loss_avg_train = sum(batch_loss_train)/len(batch_loss_train)

batch_acc_train = []

batch_loss_train = []

count1 = 0

prRed('Client{} Train => Local Epoch: {} \tAcc: {:.3f} \tLoss: {:.4f}'.format(idx, l_epoch_count, acc_avg_train, loss_avg_train))

# 保存当前模型权重:保存当前服务器端模型权重到w_server

w_server = net_server.state_dict()

if l_epoch_count == l_epoch-1:# 判断是否完成一个一定数量的epoch

l_epoch_check = True

#将当前模型权重添加到本地权重列表w_locals_server

w_locals_server.append(copy.deepcopy(w_server))

#计算并保存当前客户端最后一个批次的精度和损失(非平均值)

acc_avg_train_all = acc_avg_train

loss_avg_train_all = loss_avg_train

loss_train_collect_user.append(loss_avg_train_all)

acc_train_collect_user.append(acc_avg_train_all)

# 将当前客户端索引添加到用户索引集合idx_collect

if idx not in idx_collect:

idx_collect.append(idx)

# 如果已收集到所有用户的索引,设置fed_check为True,表示触发联邦过程

if len(idx_collect) == num_users:

fed_check = True # to

# 聚合通过各个客户端训练得到的服务器模型

w_glob_server = FedAvg(w_locals_server)

# 服务器端的全局模型更新

net_glob_server.load_state_dict(w_glob_server)

net_model_server = [net_glob_server for i in range(num_users)]

w_locals_server = []

idx_collect = []

acc_avg_all_user_train = sum(acc_train_collect_user)/len(acc_train_collect_user)

loss_avg_all_user_train = sum(loss_train_collect_user)/len(loss_train_collect_user)

loss_train_collect.append(loss_avg_all_user_train)

acc_train_collect.append(acc_avg_all_user_train)

acc_train_collect_user = []

loss_train_collect_user = []

# send gradients to the client

return dfx_client

4.4 Client

class Client(object):

# net_client_mode 客户端模型

# idx 客户端id

# lr 学习率

# device 设备

# dataset_train 训练的数据集

# dataset_test 测试的数据集

# idxs 训练数据的子集

# idxs_test 测试数据的子集

def __init__(self, net_client_model, idx, lr, device, dataset_train = None, dataset_test = None, idxs = None, idxs_test = None):

self.idx = idx

self.device = device

self.lr = lr

self.local_ep = 1 #定义了本地的epoch

self.ldr_train = DataLoader(DatasetSplit(dataset_train, idxs), batch_size = 256, shuffle = True)

self.ldr_test = DataLoader(DatasetSplit(dataset_test, idxs_test), batch_size = 256, shuffle = True)

def train(self, net):

net.train()

optimizer_client = torch.optim.Adam(net.parameters(), lr = self.lr) #客户端的优化器

for iter in range(self.local_ep):

len_batch = len(self.ldr_train) #获取batch的长度

for batch_idx, (images, labels) in enumerate(self.ldr_train):

images, labels = images.to(self.device), labels.to(self.device)

optimizer_client.zero_grad()

fx = net(images) # 正向传播

client_fx = fx.clone().detach().requires_grad_(True) # 客户端提取的数据信息

# 获得反向传播的梯度

dfx = train_server(client_fx, labels, iter, self.local_ep, self.idx, len_batch)

#--------backward prop -------------

fx.backward(dfx) # 在客户端继续反向传播

optimizer_client.step()

# 返回更新后的网络参数

return net.state_dict()

def evaluate(self, net, ell):

net.eval()

with torch.no_grad():

len_batch = len(self.ldr_test)

for batch_idx, (images, labels) in enumerate(self.ldr_test):

images, labels = images.to(self.device), labels.to(self.device)

#---------forward prop-------------

fx = net(images) # 正向传播

evaluate_server(fx, labels, self.idx, len_batch, ell)

return

4.5 整合技术

#------------ Training And Testing -----------------

net_glob_client.train()

# 拷贝权重

w_glob_client = net_glob_client.state_dict()

# 联邦学习n轮

for iter in range(epochs):

m = max(int(frac * num_users), 1)

# 生成每个用户所拥有的数据的idx

idxs_users = np.random.choice(range(num_users), m, replace = False)

w_locals_client = []

for idx in idxs_users:

local = Client(net_glob_client, idx, lr, device, dataset_train = dataset_train, dataset_test = dataset_test, idxs = dict_users[idx], idxs_test = dict_users_test[idx])

# Training ------------------

# 训练,传给服务端,反向传播,更新后获得新的客户端模型参数

w_client = local.train(net = copy.deepcopy(net_glob_client).to(device))

w_locals_client.append(copy.deepcopy(w_client))

# Testing -------------------

local.evaluate(net = copy.deepcopy(net_glob_client).to(device), ell= iter)

# 对客户端的模型参数求平均

w_glob_client = FedAvg(w_locals_client)

# 更新客户端的全局模型

net_glob_client.load_state_dict(w_glob_client)

print("Training and Evaluation completed!")

5.总结

优点:

- 代码实现了分割学习和联邦学习的结合模拟实验

- 代码注释多,结构清晰

缺点:

- 仅仅只是一个单机实验,没有在真实的多机环境中进行实验

![春秋云境:CVE-2022-32991[漏洞复现]](https://img-blog.csdnimg.cn/direct/c4773e1bdfc849a79441e95bad7b7a61.png)