什么是Aurora

Amazon Aurora是一种基于云且完全托管关系型数据库服务,与MySQL 和 PostgreSQL 数据库兼容,完全托管意味着自动对数据库进行管理,包括管理数据备份、硬件配置和软件更新等操作

Amazon Aurora提供了企业级性能

Amazon Aurora提供了多种实例类型,不同的实例类型有不同的CPU,内存,带宽等配置,用户可以根据需求选择合适的实例类型来支持自己的业务

Amazon Aurora 提供了可扩展性,可以根据需要自动扩大或缩小存储规模。Amazon Aurora 将自动从最小 10 GB 存储扩展到 64 TB 存储,每次增量为 10 GB,且扩展不会影响数据库的性能

什么是Redshift

Amazon Redshift 是一个基于云的完全托管的数据仓库服务。开发人员可以使用 redshift 来构建、查询和维护多达 PB 或更多的数据。

每个 Redshift 数据仓库都是完全托管的,这意味着备份创建、安全和配置等管理任务都是自动化的。

Redshift 中的每个数据仓库都由一组节点组成。这些节点被组织成集群,使得对大量数据运行分析查询变得容易。

许多现有的基于 SQL 的客户端,以及范围广泛的数据源和数据分析工具,都可以与 Redshift 一起使用。它具有稳定的架构,可以轻松地与各种商业智能工具进行交互。

由于 Redshift 旨在处理大量数据,因此其模块化设计使其能够轻松扩展。它的多层结构使一次处理多个查询变得简单。

可以从 Redshift 集群创建切片,允许对数据集进行更精细的检查

Redshift 提供的性能指标可用于跟踪数据库的健康状况

为什么会有从Aurora到Redshift数据同步的需求

Aurora 是一个基于行的数据库,这使得它最适合事务查询(OLTP)业务,比如Web 应用程序。想根据用户 ID 查找用户名?使用 Aurora 很容易做到。

Redshift 是一个基于列的数据库,旨在高效处理对大量数据的复杂查询,这使得它最适合数据分析(OLAP)业务,比如电商网站用户行为分析。

比如我们可以用Aurora来实现电商网站的基本业务功能,用户管理,订单管理,商品管理等基本功能,然后用Redshift来实现用户行为分析,商品销售情况分析等等需要处理海量数据的查询功能。

AWS Data Migration Service

AWS Database Migration Service (AWS DMS) 用于管理和迁移云上的数据库。

借助 AWS DMS,客户可以跨许多流行的商业数据库同步数据,包括 Microsoft SQL Server、Amazon Aurora 和 MySQL。

使用 AWS DMS,用户可以运行一次性迁移或设置持续同步。

在同步数据库之前,需要在云服务器上创建一个复制实例。此实例读取源数据并将其加载到目标。

如何使用AWS DMS从Aurora同步数据到Redshift

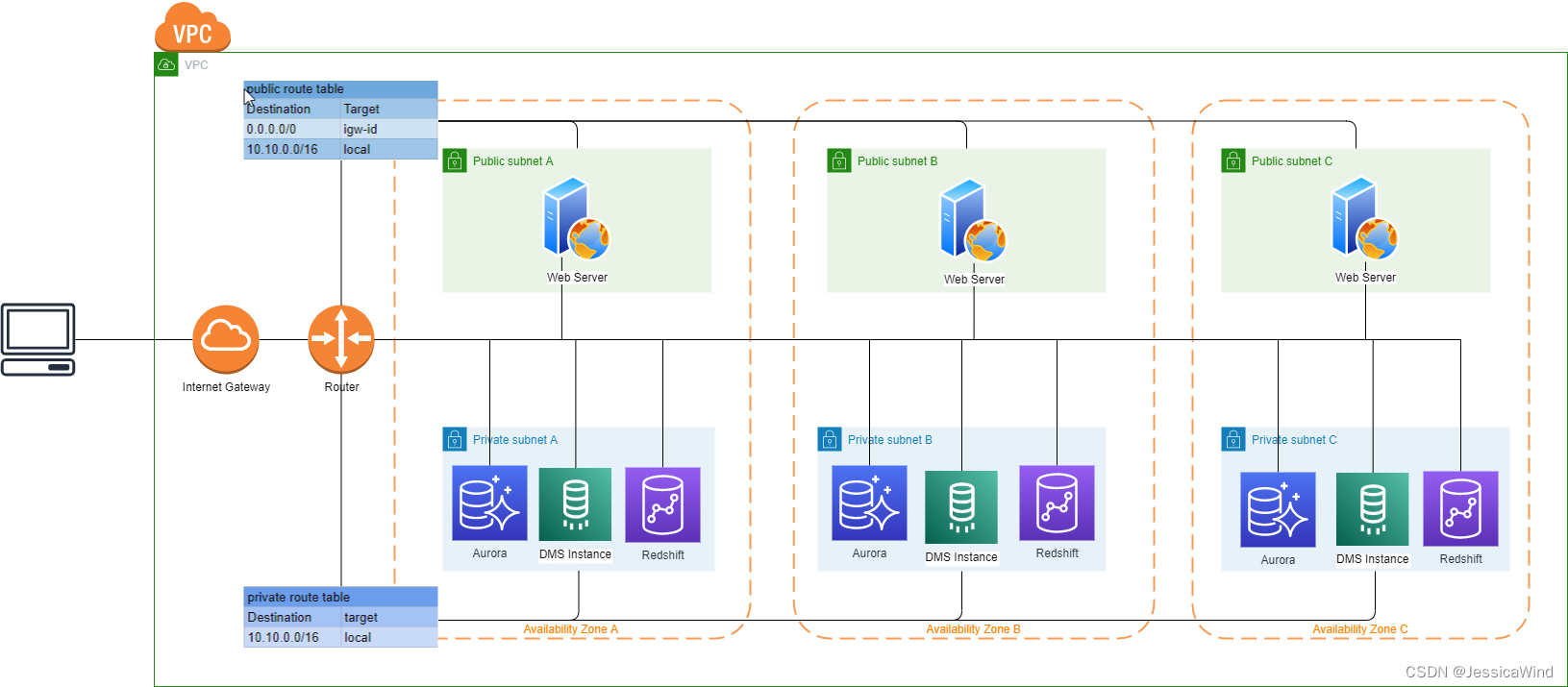

在实际开发过程中,我们的web服务器位于VPC public subnet中,允许任何客户端进行访问

用户数据库一般位于VPC的private subnet中,且只允许同一个VPC的web服务器进行访问,以保证数据库的安全

DMS进行数据同步使用的instance也只需要位于private subnet,无需对外开放

因此我们首先需要创建一个VPC,vpc的架构如下:

接下来我们详细介绍每一部分需要创建的资源。

IAM

如果在资源创建过程中出现类似如下没有权限的错误,则需要在AWS Web Console IAM页面为用户添加相应的权限。

User: arn:aws:iam::****:user/*** is not authorized to perform: dms:CreateReplicationSubnetGroup on resource: arn:aws:dms:ap-southeast-1:****:subgrp:aurora-to-redshift-instance because no identity-based policy allows the dms:CreateReplicationSubnetGroup action (Service: AWSDatabaseMigrationService; Status Code: 400; Error Code: AccessDeniedException; ; Proxy: null)本文中用到的权限如下:

AmazonS3FullAccess: AWS Managed Policy

AmazonVPCFullAccess: AWS Managed Policy

AmazonRDSFullAccess: AWS Managed Policy

AmazonRedshiftFullAccess: AWS Managed Policy

DMS没有找到AWS Managed Policy,需要自己手动创建一下,内容如下:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": "dms:*",

"Resource": "*"

}

]

}VPC

vpc

cird block: 10.10.0.0/16

internet gateway

public subnet

三个Availability Zone

private subnet

三个Availability Zone

public route table

destination: 0.0.0.0/0 target: internet-gateway-id(允许与外界进行通信)

destination:10.10.0.0/16 local(内部通信)

public route table

destination:10.10.0.0/16 local(内部通信)

web server security group

允许任意ip对443端口进行访问

允许自己的ipdui22端口进行访问,以便ssh到服务器上向数据库插入数据

dms security group

只允许vpc内部访问

public aurora security group

允许web server security group对3306端口进行访问

允许dms security group对3306端口进行访问

为了连接数据库插入数据,这里我们额外加一条允许自己电脑的ip对3306端口进行访问

private aurora security group

允许web server security group对3306端口进行访问

允许dms security group对3306端口进行访问

redshift aurora security group

允许web server security group对5439端口进行访问

允许dms security group对5439端口进行访问

VPC全部资源的serverless文件:

custom:bucketNamePrefix 替换为自己的创建的bucket

resources:Parameters:MyIP 替换为自己的public ip

service: aurora-to-redshift-vpc

custom:

bucketNamePrefix: "bucketName"

provider:

name: aws

region: ${opt:region, "ap-southeast-1"}

stackName: ${self:service}

deploymentBucket:

name: com.${self:custom.bucketNamePrefix}.deploy-bucket

serverSideEncryption: AES256

resources:

Parameters:

VpcName:

Type: String

Default: "test-vpc"

MyIP:

Type: String

Default: "your public ip"

Resources:

VPC:

Type: "AWS::EC2::VPC"

Properties:

CidrBlock: "10.10.0.0/16"

EnableDnsSupport: true

EnableDnsHostnames: true

InstanceTenancy: default

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}"

# Internet Gateway

InternetGateway:

Type: "AWS::EC2::InternetGateway"

Properties:

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_InternetGateway"

VPCGatewayAttachment:

Type: "AWS::EC2::VPCGatewayAttachment"

Properties:

VpcId: !Ref VPC

InternetGatewayId: !Ref InternetGateway

# web server security group

WebServerSecurityGroup:

Type: AWS::EC2::SecurityGroup

Properties:

GroupDescription: Allow access from public

VpcId: !Ref VPC

SecurityGroupIngress:

- IpProtocol: tcp

FromPort: 443

ToPort: 443

CidrIp: "0.0.0.0/0"

- IpProtocol: tcp

FromPort: 22

ToPort: 22

CidrIp: !Sub "${MyIP}/32"

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_WebServerSecurityGroup"

# public route table

RouteTablePublic:

Type: "AWS::EC2::RouteTable"

Properties:

VpcId: !Ref VPC

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_RouteTablePublic"

RouteTablePublicInternetRoute:

Type: "AWS::EC2::Route"

DependsOn: VPCGatewayAttachment

Properties:

RouteTableId: !Ref RouteTablePublic

DestinationCidrBlock: "0.0.0.0/0"

GatewayId: !Ref InternetGateway

# public subnet

SubnetAPublic:

Type: "AWS::EC2::Subnet"

Properties:

AvailabilityZone: !Select [0, !GetAZs ""]

CidrBlock: "10.10.0.0/24"

MapPublicIpOnLaunch: true

VpcId: !Ref VPC

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_SubnetAPublic"

RouteTableAssociationAPublic:

Type: "AWS::EC2::SubnetRouteTableAssociation"

Properties:

SubnetId: !Ref SubnetAPublic

RouteTableId: !Ref RouteTablePublic

SubnetBPublic:

Type: "AWS::EC2::Subnet"

Properties:

AvailabilityZone: !Select [1, !GetAZs ""]

CidrBlock: "10.10.32.0/24"

MapPublicIpOnLaunch: true

VpcId: !Ref VPC

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_SubnetBPublic"

RouteTableAssociationBPublic:

Type: "AWS::EC2::SubnetRouteTableAssociation"

Properties:

SubnetId: !Ref SubnetBPublic

RouteTableId: !Ref RouteTablePublic

SubnetCPublic:

Type: "AWS::EC2::Subnet"

Properties:

AvailabilityZone: !Select [2, !GetAZs ""]

CidrBlock: "10.10.64.0/24"

MapPublicIpOnLaunch: true

VpcId: !Ref VPC

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_SubnetCPublic"

RouteTableAssociationCPublic:

Type: "AWS::EC2::SubnetRouteTableAssociation"

Properties:

SubnetId: !Ref SubnetCPublic

RouteTableId: !Ref RouteTablePublic

# instance security group

PrivateDMSInstanceSecurityGroup:

Type: AWS::EC2::SecurityGroup

Properties:

GroupDescription: Allow http to client host

VpcId: !Ref VPC

SecurityGroupIngress:

- IpProtocol: tcp

FromPort: 443

ToPort: 443

CidrIp: "10.10.0.0/16"

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_PrivateDMSInstanceSecurityGroup"

# aurora security group

PrivateAuroraSecurityGroup:

Type: AWS::EC2::SecurityGroup

Properties:

GroupDescription: Allow access from dms instance securty group

VpcId: !Ref VPC

SecurityGroupIngress:

- IpProtocol: tcp

FromPort: 3306

ToPort: 3306

SourceSecurityGroupId: !Ref PrivateDMSInstanceSecurityGroup

- IpProtocol: tcp

FromPort: 3306

ToPort: 3306

SourceSecurityGroupId: !Ref WebServerSecurityGroup

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_PrivateAuroraSecurityGroup"

# redshift security group

PrivateRedshiftSecurityGroup:

Type: AWS::EC2::SecurityGroup

Properties:

GroupDescription: Allow access from dms instance securty group

VpcId: !Ref VPC

SecurityGroupIngress:

- IpProtocol: tcp

FromPort: 5439

ToPort: 5439

SourceSecurityGroupId: !Ref PrivateDMSInstanceSecurityGroup

- IpProtocol: tcp

FromPort: 5439

ToPort: 5439

SourceSecurityGroupId: !Ref WebServerSecurityGroup

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_PrivateRedshiftSecurityGroup"

# private route table

RouteTablePrivate:

Type: "AWS::EC2::RouteTable"

Properties:

VpcId: !Ref VPC

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_RouteTablePrivate"

# private subnet

SubnetAPrivate:

Type: "AWS::EC2::Subnet"

Properties:

AvailabilityZone: !Select [0, !GetAZs ""]

CidrBlock: "10.10.16.0/24"

VpcId: !Ref VPC

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_SubnetAPrivate"

RouteTableAssociationAPrivate:

Type: "AWS::EC2::SubnetRouteTableAssociation"

Properties:

SubnetId: !Ref SubnetAPrivate

RouteTableId: !Ref RouteTablePrivate

SubnetBPrivate:

Type: "AWS::EC2::Subnet"

Properties:

AvailabilityZone: !Select [1, !GetAZs ""]

CidrBlock: "10.10.48.0/24"

VpcId: !Ref VPC

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_SubnetBPrivate"

RouteTableAssociationBPrivate:

Type: "AWS::EC2::SubnetRouteTableAssociation"

Properties:

SubnetId: !Ref SubnetBPrivate

RouteTableId: !Ref RouteTablePrivate

SubnetCPrivate:

Type: "AWS::EC2::Subnet"

Properties:

AvailabilityZone: !Select [2, !GetAZs ""]

CidrBlock: "10.10.80.0/24"

VpcId: !Ref VPC

Tags:

- Key: Name

Value: !Sub "VPC_${VpcName}_SubnetCPrivate"

RouteTableAssociationCPrivate:

Type: "AWS::EC2::SubnetRouteTableAssociation"

Properties:

SubnetId: !Ref SubnetCPrivate

RouteTableId: !Ref RouteTablePrivate

Outputs:

VPC:

Description: "VPC."

Value: !Ref VPC

Export:

Name: !Sub "${self:provider.stackName}"

SubnetsPublic:

Description: "Subnets public."

Value:

!Join [

",",

[!Ref SubnetAPublic, !Ref SubnetBPublic, !Ref SubnetCPublic],

]

Export:

Name: !Sub "${self:provider.stackName}-PublicSubnets"

SubnetsPrivate:

Description: "Subnets private."

Value:

!Join [

",",

[!Ref SubnetAPrivate, !Ref SubnetBPrivate, !Ref SubnetCPrivate],

]

Export:

Name: !Sub "${self:provider.stackName}-PrivateSubnets"

DefaultSecurityGroup:

Description: "VPC Default Security Group"

Value: !GetAtt VPC.DefaultSecurityGroup

Export:

Name: !Sub "${self:provider.stackName}-DefaultSecurityGroup"

WebServerSecurityGroup:

Description: "VPC Web Server Security Group"

Value: !Ref WebServerSecurityGroup

Export:

Name: !Sub "${self:provider.stackName}-WebServerSecurityGroup"

PrivateDMSInstanceSecurityGroup:

Description: "The id of the InstanceSecurityGroup"

Value: !Ref PrivateDMSInstanceSecurityGroup

Export:

Name: !Sub "${self:provider.stackName}-PrivateDMSInstanceSecurityGroup"

PrivateAuroraSecurityGroup:

Description: "The id of the AuroraSecurityGroup"

Value: !Ref PrivateAuroraSecurityGroup

Export:

Name: !Sub "${self:provider.stackName}-PrivateAuroraSecurityGroup"

PrivateRedshiftSecurityGroup:

Description: "The id of the RedshiftSecurityGroup"

Value: !Ref PrivateRedshiftSecurityGroup

Export:

Name: !Sub "${self:provider.stackName}-PrivateRedshiftSecurityGroup"

Aurora

DB Cluster Parameter Group

要使用DMS对aurora进行数据同步,需要打开binlog相关的配置,默认的Cluster Parameter Group中binlog的配置是关闭的,并且默认Cluster Parameter Group是不可修改的

因此必须创建一个自己的Cluster Parameter Group ,并设置binlog相关的配置

Family: aurora-mysql8.0

Parameters:

binlog_format: "ROW"

binlog_row_image: "Full"

binlog_checksum: "NONE"

VpcSecurityGroupIds

private aurora security group

DB Parameter Group

Family: aurora-mysql8.0

Subnet group

创建一个包含private subnet的private subnet group就可以

DB Cluster

subnet group

private subnet group

Engine: aurora-mysql

EngineVersion: 8.0.mysql_aurora.3.02.0

可以使用如下命令查看支持的EngineVersion:

aws rds describe-db-engine-versions --engine aurora --query "DBEngineVersions[].EngineVersion"DB Cluster Instance

Engine: aurora-mysql

DBInstanceClass: db.t2.small

可以使用如下命令查看支持的instance类型:

aws rds describe-orderable-db-instance-options --engine aurora-mysql --engine-version "8.0.mysql_aurora.3.02.2" --query "OrderableDBInstanceOptions

[].DBInstanceClass"aurora全部资源的serverless文件:

custom:bucketNamePrefix 替换为自己的创建的bucket

service: aurora-to-redshift-aurora

custom:

bucketNamePrefix: "bucketName"

provider:

name: aws

region: ${opt:region, "ap-southeast-1"}

stackName: ${self:service}

deploymentBucket:

name: com.${self:custom.bucketNamePrefix}.deploy-bucket

serverSideEncryption: AES256

resources:

Parameters:

ServiceName:

Type: String

Default: aurora-to-redshift

Resources:

DBClusterParameterGroup:

Type: "AWS::RDS::DBClusterParameterGroup"

Properties:

DBClusterParameterGroupName: cluster-aurora-mysql8-with-binlog

Description: DB Cluster Parameter Group with aurora-mysql8.0 engine, and binlog enabled

Family: aurora-mysql8.0

Parameters:

binlog_format: "ROW"

binlog_row_image: "Full"

binlog_checksum: "NONE"

DBParameterGroup:

Type: "AWS::RDS::DBParameterGroup"

Properties:

DBParameterGroupName: instance-mysql8

Description: DB Instance Parameter Group

Family: aurora-mysql8.0

PrivateDBSubnetGroup:

Type: "AWS::RDS::DBSubnetGroup"

Properties:

DBSubnetGroupName: private-subnet

DBSubnetGroupDescription: "private subnet"

SubnetIds:

Fn::Split:

- ","

- Fn::ImportValue: !Sub "${ServiceName}-vpc-PrivateSubnets"

RDSCluster:

Type: "AWS::RDS::DBCluster"

Properties:

DBClusterIdentifier: test-data-sync-aurora

DBClusterParameterGroupName:

Ref: DBClusterParameterGroup

DBSubnetGroupName:

Ref: PrivateDBSubnetGroup

VpcSecurityGroupIds:

- Fn::ImportValue: !Sub "${ServiceName}-vpc-PrivateAuroraSecurityGroup"

Engine: aurora-mysql

EngineVersion: 8.0.mysql_aurora.3.02.2

DatabaseName: dev

MasterUserPassword: aurora_admin_2022

MasterUsername: admin

RDSDBInstance:

Type: "AWS::RDS::DBInstance"

Properties:

DBClusterIdentifier:

Ref: RDSCluster

DBInstanceIdentifier: test-data-sync-aurora-instance

DBInstanceClass: db.t3.medium

DBParameterGroupName:

Ref: DBParameterGroup

DBSubnetGroupName:

Ref: PrivateDBSubnetGroup

Engine: aurora-mysql

Outputs:

AuroraEndpoint:

Description: "Aurora endpoint"

Value: !GetAtt RDSCluster.Endpoint.Address

Export:

Name: !Sub "${self:provider.stackName}-AuroraEndpoint"

AuroraPort:

Description: "Aurora port"

Value: !GetAtt RDSCluster.Endpoint.Port

Export:

Name: !Sub "${self:provider.stackName}-AuroraPort"

在创建完资源后,连接数据库,执行一下sql创建数据

连接数据库需要创建一个位于vpc public subnet的服务器,创建过程参考这里:

https://docs.aws.amazon.com/AmazonRDS/latest/AuroraUserGuide/CHAP_Tutorials.WebServerDB.LaunchEC2.html

vpc: 选择上面步骤中我们自己创建的vpc

subnet:选择任意一个public subnet

security group:web server security group

key pair: 创建一个名为tutorial-ec2-instance-web-server的key pari,选择pem(ssh)格式

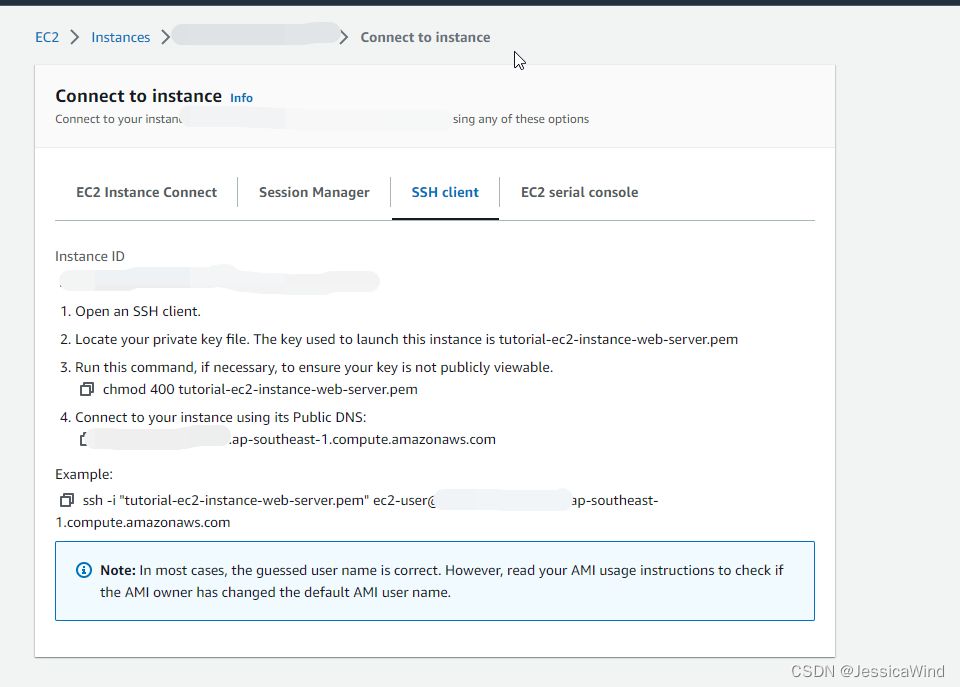

连接web服务器:参考https://docs.aws.amazon.com/AmazonRDS/latest/AuroraUserGuide/Aurora.Connecting.html#Aurora.Connecting.AuroraMySQL

打开终端或者SSH Client

找到创建key pair时下载的文件: tutorial-ec2-instance-web-server.pem

执行一下命令修改文件的执行权限

chmod 400 tutorial-ec2-instance-web-server.pem使用Public DNS连接到ec2实例,可以在aws web console打开ec2实例的页面,点击右上角的Connect来找到如何连接

ssh -i "tutorial-ec2-instance-web-server.pem" ec2-user@${ec2_public_dns}.ap-southeast-1.compute.amazonaws.com

连接完成后,安装mysql

sudo yum install mysql连接aurora,输入以下命令,然后输入密码继续

mysql -h {aurora-cluster}.ap-southeast-1.rds.amazonaws.com -P 3306 -u admin -p执行以下sql插入数据

USE dev;

CREATE TABLE person (

person_id int,

last_name varchar(255),

first_name varchar(255),

city varchar(255)

);

INSERT INTO person VALUES (1, 'win', 'jessica', 'sh');Redshift

Cluster subnet group

创建一个包含private subnet的private subnet group

Cluster

ClusterSubnetGroupName

使用private subnet group

VpcSecurityGroupIds

使用private redshift security group

NodeType: dc2.large

ClusterType: single-node

redshift全部资源的serverless文件:

custom:bucketNamePrefix 替换为自己的创建的bucket

service: aurora-to-redshift-redshift

custom:

bucketNamePrefix: "bucketName"

provider:

name: aws

region: ${opt:region, "ap-southeast-1"}

stackName: ${self:service}

deploymentBucket:

name: com.${self:custom.bucketNamePrefix}.deploy-bucket

serverSideEncryption: AES256

resources:

Parameters:

ServiceName:

Type: String

Default: aurora-to-redshift

Resources:

ClusterSubnetGroup:

Type: "AWS::Redshift::ClusterSubnetGroup"

Properties:

Description: Private Cluster Subnet Group

SubnetIds:

Fn::Split:

- ","

- Fn::ImportValue: !Sub ${ServiceName}-vpc-PrivateSubnets

Tags:

- Key: Name

Value: private-subnet

Cluster:

Type: "AWS::Redshift::Cluster"

Properties:

ClusterIdentifier: test-data-sync-redshift

ClusterSubnetGroupName: !Ref ClusterSubnetGroup

VpcSecurityGroupIds:

- Fn::ImportValue: !Sub ${ServiceName}-vpc-PrivateRedshiftSecurityGroup

DBName: dev

MasterUsername: admin

MasterUserPassword: Redshift_admin_2022

NodeType: dc2.large

ClusterType: single-node

Outputs:

RedshiftEndpoint:

Description: "Redshift endpoint"

Value: !GetAtt Cluster.Endpoint.Address

Export:

Name: !Sub "${self:provider.stackName}-RedshiftEndpoint"

RedshiftPort:

Description: "Redshift port"

Value: !GetAtt Cluster.Endpoint.Port

Export:

Name: !Sub "${self:provider.stackName}-RedshiftPort"

DMS

Subnet group

创建一个包含private subnet的private subnet group

Replication instance

ReplicationInstanceClass: dms.t2.small

ReplicationSubnetGroupIdentifier:

使用private subnet group

VpcSecurityGroupIds

使用dms security group

Source endpoint

EndpointType: source

EngineName: aurora

ServerName: aurora cluster endpoint

Port: 3306

Target endpoint

EndpointType: target

EngineName: ednpoint

ServerName: redshift cluster endpoint

Port: 5439

Database migration task

MigrationType: full-load-and-cdc

ReplicationInstanceArn

TableMappings:"{ \"rules\": [ { \"rule-type\": \"selection\", \"rule-id\": \"1\", \"rule-name\": \"1\", \"object-locator\": { \"schema-name\": \"dev\", \"table-name\": \"test-aurora-to-redshift\" }, \"rule-action\": \"include\" } ] }"

ReplicationTaskSettings: "{ \"FullLoadSettings\": {\"TargetTablePrepMode\": \"DO_NOTHING\"}}"

dms全部资源的serverless文件

custom:bucketNamePrefix 替换为自己的创建的bucket

service: aurora-to-redshift-dms

custom:

bucketNamePrefix: "bucketName"

provider:

name: aws

region: ${opt:region, "ap-southeast-1"}

stackName: ${self:service}

deploymentBucket:

name: com.${self:custom.bucketNamePrefix}.deploy-bucket

serverSideEncryption: AES256

resources:

Parameters:

ServiceName:

Type: String

Default: aurora-to-redshift

Resources:

ReplicationSubnetGroup:

Type: "AWS::DMS::ReplicationSubnetGroup"

Properties:

ReplicationSubnetGroupDescription: instance to sync data from aurora to redshift

ReplicationSubnetGroupIdentifier: aurora-to-redshift-instance

SubnetIds:

Fn::Split:

- ","

- Fn::ImportValue: !Sub ${ServiceName}-vpc-PrivateSubnets

ReplicationInstance:

Type: "AWS::DMS::ReplicationInstance"

Properties:

ReplicationInstanceIdentifier: aurora-to-redshift-instance

ReplicationInstanceClass: dms.t2.small

ReplicationSubnetGroupIdentifier: !Ref ReplicationSubnetGroup

PubliclyAccessible: false

VpcSecurityGroupIds:

- Fn::ImportValue: !Sub ${ServiceName}-vpc-PrivateDMSInstanceSecurityGroup

AuroraSourceEndpoint:

Type: "AWS::DMS::Endpoint"

Properties:

EndpointIdentifier: aurora

EndpointType: source

ServerName:

Fn::ImportValue: !Sub ${ServiceName}-aurora-AuroraEndpoint

Port:

Fn::ImportValue: !Sub ${ServiceName}-aurora-AuroraPort

Username: admin

Password: aurora_admin_2022

EngineName: aurora

RedshiftTargetEndpoint:

Type: "AWS::DMS::Endpoint"

Properties:

EndpointIdentifier: redshift

EndpointType: target

ServerName:

Fn::ImportValue: !Sub ${ServiceName}-redshift-RedshiftEndpoint

Port:

Fn::ImportValue: !Sub ${ServiceName}-redshift-RedshiftPort

DatabaseName: dev

Username: admin

Password: Redshift_admin_2022

EngineName: redshift

ReplicationTask:

Type: "AWS::DMS::ReplicationTask"

Properties:

ReplicationTaskIdentifier: aurora-to-redshift-task

MigrationType: full-load-and-cdc

ReplicationInstanceArn: !Ref ReplicationInstance

SourceEndpointArn: !Ref AuroraSourceEndpoint

TableMappings: '{ "rules": [ { "rule-type": "selection", "rule-id": "1", "rule-name": "1", "object-locator": { "schema-name": "dev", "table-name": "%" }, "rule-action": "include" } ] }'

TargetEndpointArn: !Ref RedshiftTargetEndpoint

ReplicationTaskSettings: '{ "FullLoadSettings": {"TargetTablePrepMode": "DO_NOTHING"}}'

数据同步

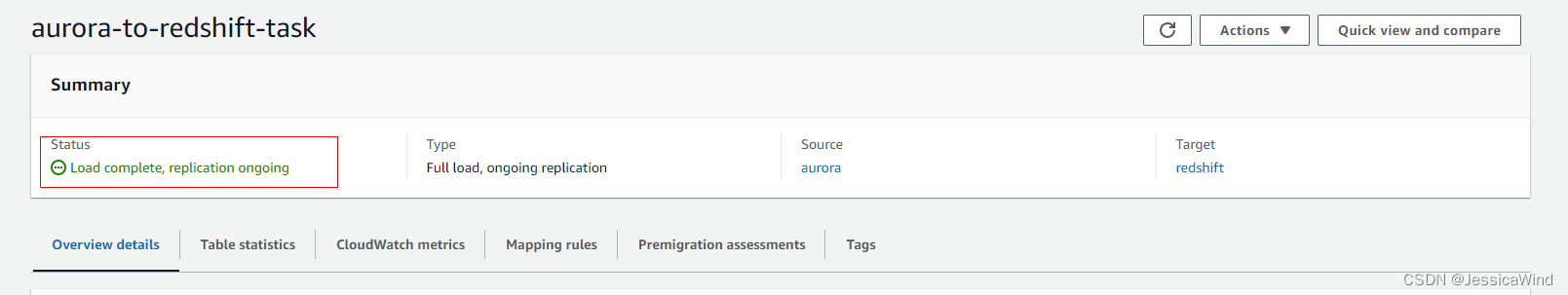

上述资源创建完毕后,可以在aws web console运行DMS的Database migration task,执行成功后status会是Load complete, replication ongoing

同步完成后,可以通过Redshift query editor来确认数据是否同步成功。

继续修改,添加,删除mysql数据,然后通过Redshift query editor来确认数据是否同步成功。

其他同步方法

Amazon Aurora zero-ETL integration with Amazon Redshift

Amazon即将推出一个Aurora的新功能,实现与 Amazon Redshift 的零 ETL 集成,目前这个功能还没有正式推出,处于Preview阶段,且在美国东部(弗吉尼亚北部)区域可用。以下来自Amazon公告,原文链接在此:

Amazon Aurora 现在支持与 Amazon Redshift 的零 ETL 集成,以使用 Amazon Redshift 对来自 Aurora 的数 PB 交易数据进行近乎实时的分析和机器学习 (ML)。在事务数据写入 Aurora 的几秒钟内,数据就可以在 Amazon Redshift 中使用,因此您不必构建和维护复杂的数据管道来执行提取、转换和加载 (ETL) 操作。

这种零 ETL 集成还使您能够在同一个新的或现有的 Amazon Redshift 实例中分析来自多个 Aurora 数据库集群的数据,以获得跨多个应用程序或分区的整体见解。通过近乎实时地访问交易数据,您可以利用 Amazon Redshift 的分析和功能,例如内置 ML、物化视图、数据共享以及对多个数据存储和数据湖的联合访问,从交易数据和其他数据中获得洞察力。

Amazon Aurora 与 Amazon Redshift 的零 ETL 集成现已在美国东部(弗吉尼亚北部)区域推出兼容 MySQL 8.0 的 Amazon Aurora MySQL 3 的有限预览版。

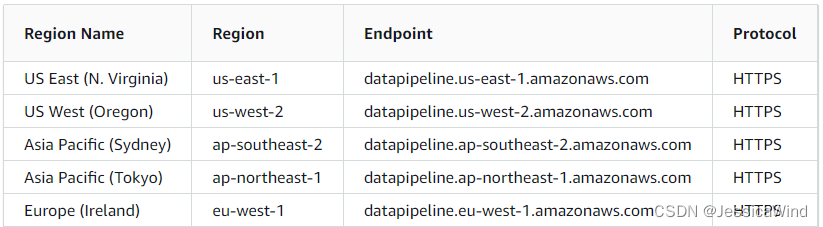

AWS Data Pipeline

AWS Data Pipeline也支持aurora到redshift的持续同步

相比于DMS的缺点

AWS Data Pipeline目前只支持以下region

一个AWS Data Pipeline只能同步一张aurora表,aurora表名必须完全匹配

相比于DMS的优点

同步过程中支持自定义查询语句,可以对源表数据进行筛选之后同步到redshift

AWS Glue

AWS Glue也支持aurora到redshift同步

相比于DMS的缺点

一个AWS Data Pipeline只能同步一张aurora表

不支持持续同步,只支持全表数据同步

需要在glue管理源表和目标表的scheme

相比于DMS的优点

可以在数据同步过程中对数据列应用mapping规则,可以按一定规则修改列值,可以删除某些列(目标表比源表少某些列),目标表与源表列名也可以不同

如何进行选择

如果需要持续同步且要使用的region不支持AWS Data Pipeline,则只能使用AWS DMS

如果需要持续同步且要使用的region支持AWS Data Pipeline,则可以根据其他需求决定使用哪个service,比如如果需要对数据进行筛选则可以选择AWS Data Pipeline

如果只需要整表一次性同步,且没有数据修改的需求,则可以选择任意一个

如果只需要整表一次性同步,且需要在同步过程中对数据进行一定规则的修改,则选择AWS Glue

github

https://github.com/JessicaWin/aws-in-action

![[前端笔记] 1.WEB基本概念](https://img-blog.csdnimg.cn/82d7df4ff0b2451b9cb26c015f7ce2cb.png)