Forget the past, surpass yourself

- ❤️ Blog home: 单片机菜鸟哥, a self-taught, non-professional hardware and IoT enthusiast ❤️

- ❤️ Created: 2023-01-12 ❤️

- ❤️ Last updated: 2023-01-12 ❤️

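- 🙏 This blog is written entirely by the author, with no commercial team behind it. If you spot a mistake, please leave a comment and it will be fixed promptly. Thanks for your support!

- 🔥 The Arduino ESP8266 tutorial series has helped more than 10,000 students get started with hardware network programming, has been chosen as an elective course, and has been featured in the magazine 无线电 (Radio) 🔥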

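Contents

- 1. Introduction

- 1.1 What are the use cases for Redis?

- 1.2 Redis data types

- 2. Installing Redis on Linux

- 2.1 Create the installation directory /usr/local/redis

- 2.2 Go to /usr/local/src and download the source package

- 2.3 Extract the archive

- 2.4 Install to the target directory /usr/local/redis

- 2.5 Copy the redis.conf configuration file to the installation directory /usr/local/redis/bin/

- 2.6 Edit the configuration file redis.conf

- 2.7 Go to the installation directory /usr/local/redis/bin and run the start command

- 3. Working with Redis

- 3.1 Redis keys (key)

- 3.2 Redis strings (String)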

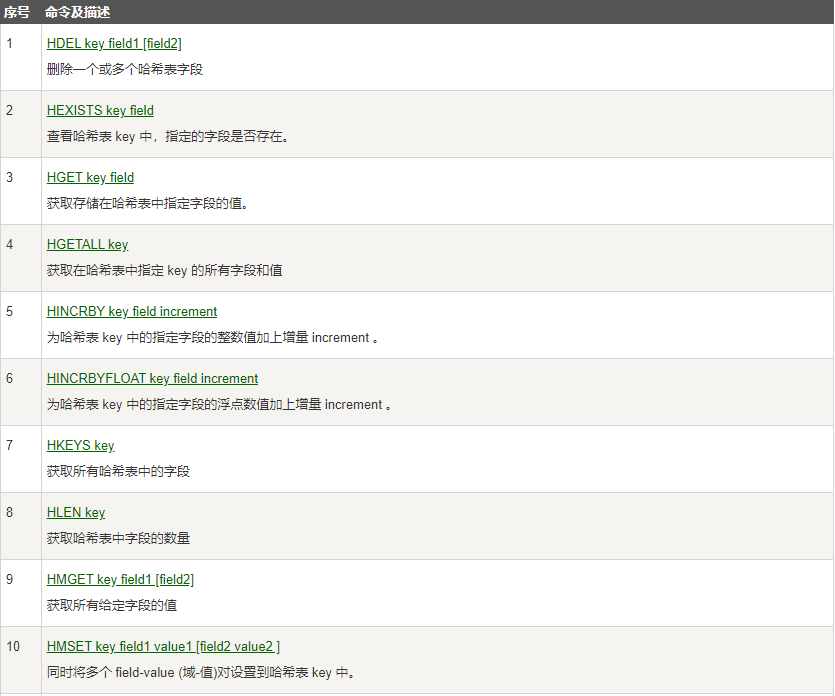

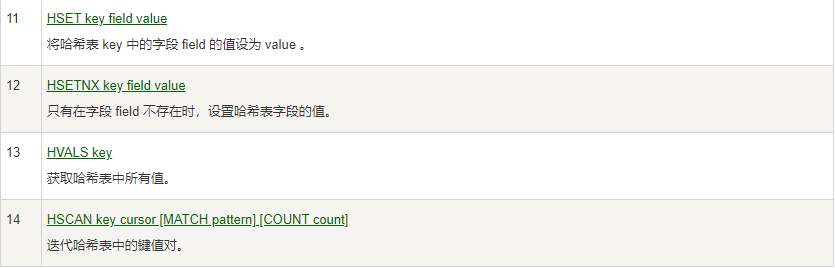

- 3.3 Redis hashes (Hash)

- 3.4 Redis lists (List)

- 3.5 Redis sets (Set)

- 3.6 Redis sorted sets (sorted set)

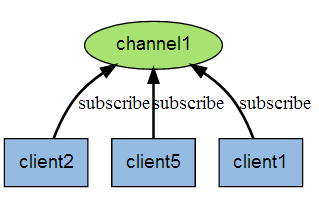

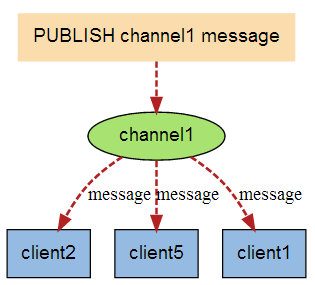

- 4. Redis publish/subscribe (comparable to MQTT)

- 5. Redis connection commands

- 6. Redis server commands

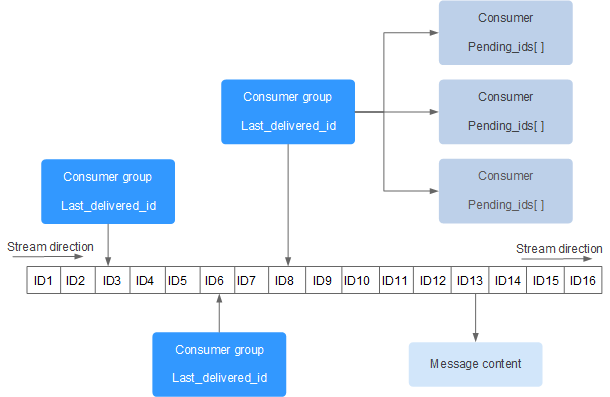

- 7. Redis Stream

1. Introduction

Learning resources:

Redis tutorial on Runoob (菜鸟教程)

Official Redis website

"What Is Redis? This One Article Is All You Need"

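Redis is currently one of the most popular NoSQL databases. It is an open-source, networked, in-memory key-value store written in ANSI C, with optional persistence and support for multiple data structures. Its main characteristics are:

- It runs in memory, so it is fast

- It supports distributed deployment and can, in principle, keep scaling out

- Besides simple key-value data, it also stores data structures such as list, set, zset, and hash

In short, Redis is an open-source, BSD-licensed, networked, log-type key-value database written in ANSI C. It runs in memory but can also persist data, and it provides APIs for many programming languages.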

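1.1 What are the use cases for Redis?

Typical Redis use cases include caching ("hot" data that is read often and written rarely), counters, message queues, leaderboards, social networks, and real-time systems.

Redis is also commonly used for scenarios such as ticket grabbing and limited-time flash sales.

1.2 Redis data types

Redis supports five basic data types: string, hash, list, set, and zset (sorted set). Newer versions add further types, such as the Stream type covered in section 7.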

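2. Installing Redis on Linux

Following common Linux conventions:

- Installation directory (where Redis will be installed): /usr/local/redis

- Download directory (where the source package will be saved): /usr/local/src

2.1 Create the installation directory /usr/local/redis

Run the following command:

mkdir /usr/local/redis

2.2 Go to /usr/local/src and download the source package

First, find the Redis version you want to download:

https://download.redis.io/releases/

Here we choose the latest stable version (7.0.7 at the time of writing).

Run the following command:

cd /usr/local/src

Download the source package with wget: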

[root@VM-8-12-centos local]# cd /usr/local/src

[root@VM-8-12-centos src]# cd /usr/local/src

[root@VM-8-12-centos src]# wget http://download.redis.io/releases/redis-7.0.7.tar.gz

--2023-01-12 16:48:58-- http://download.redis.io/releases/redis-7.0.7.tar.gz

Resolving download.redis.io (download.redis.io)... 45.60.125.1

Connecting to download.redis.io (download.redis.io)|45.60.125.1|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2979019 (2.8M) [application/octet-stream]

Saving to: ‘redis-7.0.7.tar.gz’

100%[===============================================================>] 2,979,019 3.32MB/s in 0.9s

2023-01-12 16:48:59 (3.32 MB/s) - ‘redis-7.0.7.tar.gz’ saved [2979019/2979019]

[root@VM-8-12-centos src]#

2.3 Extract the archive

Run the following command:

tar -xzvf redis-7.0.7.tar.gz

[root@VM-8-12-centos src]# tar -xzvf redis-7.0.7.tar.gz

redis-7.0.7/

redis-7.0.7/.codespell/

redis-7.0.7/.codespell/.codespellrc

redis-7.0.7/.codespell/requirements.txt

redis-7.0.7/.codespell/wordlist.txt

redis-7.0.7/.gitattributes

redis-7.0.7/.github/

(some lines omitted)

[root@VM-8-12-centos src]# ls -al

total 2924

drwxr-xr-x. 3 root root 4096 Jan 12 16:52 .

drwxr-xr-x. 20 root root 4096 Jan 12 09:46 ..

drwxrwxr-x 8 root root 4096 Dec 16 18:52 redis-7.0.7

-rw-r--r-- 1 root root 2979019 Dec 16 19:01 redis-7.0.7.tar.gz

[root@VM-8-12-centos src]#

2.4 Install to the target directory /usr/local/redis

Run the following commands:

- cd redis-7.0.7

- make PREFIX=/usr/local/redis install

[root@VM-8-12-centos src]# cd redis-7.0.7/

[root@VM-8-12-centos redis-7.0.7]# ls -al

total 288

drwxrwxr-x 8 root root 4096 Dec 16 18:52 .

drwxr-xr-x. 3 root root 4096 Jan 12 16:52 ..

-rw-rw-r-- 1 root root 40017 Dec 16 18:52 00-RELEASENOTES

-rw-rw-r-- 1 root root 51 Dec 16 18:52 BUGS

-rw-rw-r-- 1 root root 5027 Dec 16 18:52 CODE_OF_CONDUCT.md

drwxrwxr-x 2 root root 4096 Dec 16 18:52 .codespell

-rw-rw-r-- 1 root root 2634 Dec 16 18:52 CONTRIBUTING.md

-rw-rw-r-- 1 root root 1487 Dec 16 18:52 COPYING

drwxrwxr-x 7 root root 4096 Dec 16 18:52 deps

-rw-rw-r-- 1 root root 405 Dec 16 18:52 .gitattributes

drwxrwxr-x 4 root root 4096 Dec 16 18:52 .github

-rw-rw-r-- 1 root root 535 Dec 16 18:52 .gitignore

-rw-rw-r-- 1 root root 11 Dec 16 18:52 INSTALL

-rw-rw-r-- 1 root root 151 Dec 16 18:52 Makefile

-rw-rw-r-- 1 root root 6888 Dec 16 18:52 MANIFESTO

-rw-rw-r-- 1 root root 22441 Dec 16 18:52 README.md

-rw-rw-r-- 1 root root 106545 Dec 16 18:52 redis.conf

-rwxrwxr-x 1 root root 279 Dec 16 18:52 runtest

-rwxrwxr-x 1 root root 283 Dec 16 18:52 runtest-cluster

-rwxrwxr-x 1 root root 1613 Dec 16 18:52 runtest-moduleapi

-rwxrwxr-x 1 root root 285 Dec 16 18:52 runtest-sentinel

-rw-rw-r-- 1 root root 1695 Dec 16 18:52 SECURITY.md

-rw-rw-r-- 1 root root 14005 Dec 16 18:52 sentinel.conf

drwxrwxr-x 4 root root 4096 Dec 16 18:52 src

drwxrwxr-x 11 root root 4096 Dec 16 18:52 tests

-rw-rw-r-- 1 root root 3055 Dec 16 18:52 TLS.md

drwxrwxr-x 8 root root 4096 Dec 16 18:52 utils

[root@VM-8-12-centos redis-7.0.7]# make PREFIX=/usr/local/redis install

(a large amount of build output omitted)

Hint: It's a good idea to run 'make test' ;)

INSTALL redis-server

INSTALL redis-benchmark

INSTALL redis-cli

make[1]: Leaving directory '/usr/local/src/redis-7.0.7/src'

[root@VM-8-12-centos redis-7.0.7]#

At this point, Redis has been compiled and installed into /usr/local/redis/bin.

2.5 Copy the redis.conf configuration file to the installation directory /usr/local/redis/bin/

Run the following command:

cp redis.conf /usr/local/redis/bin/

[root@VM-8-12-centos redis-7.0.7]# pwd

/usr/local/src/redis-7.0.7

[root@VM-8-12-centos redis-7.0.7]# cp redis.conf /usr/local/redis/bin/

[root@VM-8-12-centos redis-7.0.7]# cd /usr/local/redis/bin/

[root@VM-8-12-centos bin]# ls -al

total 21636

drwxr-xr-x 2 root root 4096 Jan 12 17:44 .

drwxr-xr-x 3 root root 4096 Jan 12 17:23 ..

-rwxr-xr-x 1 root root 5197752 Jan 12 17:23 redis-benchmark

lrwxrwxrwx 1 root root 12 Jan 12 17:23 redis-check-aof -> redis-server

lrwxrwxrwx 1 root root 12 Jan 12 17:23 redis-check-rdb -> redis-server

-rwxr-xr-x 1 root root 5411072 Jan 12 17:23 redis-cli

-rw-r--r-- 1 root root 106545 Jan 12 17:44 redis.conf

lrwxrwxrwx 1 root root 12 Jan 12 17:23 redis-sentinel -> redis-server

-rwxr-xr-x 1 root root 11421920 Jan 12 17:23 redis-server

[root@VM-8-12-centos bin]# cd ..

[root@VM-8-12-centos redis]# ls -al

total 12

drwxr-xr-x 3 root root 4096 Jan 12 17:23 .

drwxr-xr-x. 20 root root 4096 Jan 12 09:46 ..

drwxr-xr-x 2 root root 4096 Jan 12 17:44 bin

[root@VM-8-12-centos redis]# pwd

/usr/local/redis

[root@VM-8-12-centos redis]#
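Sections 2.1 to 2.5 can be collected into one script. The following is a minimal sketch, not the author's script: it assumes a POSIX shell, the download URL pattern used by the wget command above, and the same directories. `run`, `install_redis` and the `DRY_RUN` switch are names made up for this example; with `DRY_RUN=1` the commands are only printed.

```shell
#!/bin/sh
# Sketch: sections 2.1-2.5 as one function (hypothetical helper, not part of Redis).
# DRY_RUN=1 prints each command instead of running it.

run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@" || exit 1    # stop at the first failing step
    fi
}

install_redis() {
    version="$1"                      # e.g. 7.0.7
    prefix="${2:-/usr/local/redis}"   # installation directory (section 2.1)
    run mkdir -p "$prefix"
    run cd /usr/local/src
    run wget "http://download.redis.io/releases/redis-$version.tar.gz"
    run tar -xzvf "redis-$version.tar.gz"
    run cd "redis-$version"
    run make PREFIX="$prefix" install
    run cp redis.conf "$prefix/bin/"
}

# Review the steps first; drop DRY_RUN=1 (and run as root) to execute them.
DRY_RUN=1 install_redis 7.0.7
```

Printing the steps first makes it easy to check the version and prefix before anything is downloaded or installed.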

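Since a configuration file is involved, let's see what it contains. The command below filters out comment lines and blank lines: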

[root@VM-8-12-centos bin]# cat redis.conf | grep -v ^# | grep -v ^$

bind 127.0.0.1 -::1

protected-mode yes

port 6379

tcp-backlog 511

timeout 0

tcp-keepalive 300

daemonize no

pidfile /var/run/redis_6379.pid

loglevel notice

logfile ""

databases 16

always-show-logo no

set-proc-title yes

proc-title-template "{title} {listen-addr} {server-mode}"

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb

rdb-del-sync-files no

dir ./

replica-serve-stale-data yes

replica-read-only yes

repl-diskless-sync yes

repl-diskless-sync-delay 5

repl-diskless-sync-max-replicas 0

repl-diskless-load disabled

repl-disable-tcp-nodelay no

replica-priority 100

acllog-max-len 128

lazyfree-lazy-eviction no

lazyfree-lazy-expire no

lazyfree-lazy-server-del no

replica-lazy-flush no

lazyfree-lazy-user-del no

lazyfree-lazy-user-flush no

oom-score-adj no

oom-score-adj-values 0 200 800

disable-thp yes

appendonly no

appendfilename "appendonly.aof"

appenddirname "appendonlydir"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

aof-use-rdb-preamble yes

aof-timestamp-enabled no

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-listpack-entries 512

hash-max-listpack-value 64

list-max-listpack-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-listpack-entries 128

zset-max-listpack-value 64

hll-sparse-max-bytes 3000

stream-node-max-bytes 4096

stream-node-max-entries 100

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit replica 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

dynamic-hz yes

aof-rewrite-incremental-fsync yes

rdb-save-incremental-fsync yes

jemalloc-bg-thread yes

[root@VM-8-12-centos bin]#
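The `grep -v ^# | grep -v ^$` pipeline above misses comment lines that start with whitespace and lines that contain only spaces. A slightly more robust single `grep -E` is sketched below; `active_directives` is a hypothetical helper name, and the demo uses a temporary file rather than the real redis.conf.

```shell
#!/bin/sh
# Print only active directives: skip comment lines (even if indented)
# and lines that are empty or contain only whitespace.
active_directives() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Demo on a throwaway file; on the server use: active_directives redis.conf
tmp=$(mktemp)
printf '%s\n' '# a comment' '' '   # indented comment' 'port 6379' '   ' 'daemonize no' > "$tmp"
active_directives "$tmp"    # prints "port 6379" and "daemonize no"
rm -f "$tmp"
```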

Here are some commonly used configuration directives worth knowing:

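# Run as a background (daemon) process?

daemonize yes

# Location of the pid file

pidfile /var/run/redis/redis-server.pid

# Listening port

port 6379

# Bind address; to accept connections from other hosts, set 0.0.0.0

bind 127.0.0.1

# Close a client connection after it has been idle for this many seconds (0 disables the timeout)

timeout 300

## Log level, one of:

# debug: for development and testing

# verbose: more detailed information

# notice: suitable for production

# warning: only warnings and errors are logged

loglevel notice

# Log file location

logfile /var/log/redis/redis-server.log

# Whether to send logs to the system logger (syslog)

syslog-enabled no

# Number of databases; the default database is 0

databases 16

############### Snapshotting ###############

# After 900 s (15 min), take a snapshot if at least 1 key has changed

save 900 1

# After 300 s (5 min), take a snapshot if at least 10 keys have changed

save 300 10

# After 60 s (1 min), take a snapshot if at least 10000 keys have changed

save 60 10000

# Whether to compress data when dumping

rdbcompression yes

# Directory where the database file (dump.rdb) is stored

dir /var/lib/redis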

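############### Master-replica replication ###############

# Makes this instance a replica (slave) of the given master. Since Redis 5 the preferred name is replicaof; slaveof is still accepted as an alias.

slaveof <masterip> <masterport>

# If the master requires a password, the replica uses this option to authenticate to it

masterauth <master-password>

# When the replica has lost its connection to the master, or is still syncing with it: if set to yes, the replica keeps answering client requests, possibly with stale data; if set to no, it replies with the error "SYNC with master in progress" to every command except INFO and SLAVEOF.

slave-serve-stale-data yes

############### Security ###############

# Password clients must supply (with AUTH) before running commands

requirepass foobared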

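############### Limits ###############

# Maximum number of simultaneously connected clients (in old releases 0 meant unlimited; current releases default to 10000)

maxclients 128

## Memory eviction policy, i.e. what Redis does when maxmemory is reached:

# volatile-lru: evict keys that have an expire set, using an LRU algorithm (the default in old releases; since Redis 3.0 the default is noeviction)

# allkeys-lru: evict the least recently used keys among all keys

# volatile-random: evict random keys among those that have an expire set

# allkeys-random: evict random keys among all keys

# volatile-ttl: evict the keys with the shortest remaining time to live

# noeviction: evict nothing; write commands return an error

# (Redis 4.0 and later also offer volatile-lfu and allkeys-lfu)

maxmemory <bytes>

# Policy applied when maxmemory is reached

maxmemory-policy volatile-lru

# Sample 3 random keys and evict the least recently used of them (current releases default to 5 samples)

maxmemory-samples 3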

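############### Append-only mode ###############

# AOF persistence: whether to log every write operation. By default Redis writes data to disk asynchronously (snapshots)

appendonly no

# Name of the append-only log file

appendfilename appendonly.aof

## AOF offers three fsync policies:

# appendfsync always #fsync after every write to appendonly.aof (safest, slowest)

# appendfsync everysec #the default: fsync appendonly.aof once per second

# appendfsync no #never call fsync explicitly; the operating system flushes the data when it decides to (fastest, least safe)

# Whether to skip fsync while a BGSAVE or BGREWRITEAOF is running. (Automatic AOF rewriting itself is triggered by auto-aof-rewrite-percentage and auto-aof-rewrite-min-size.)

no-appendfsync-on-rewrite no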

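############### Virtual memory ###############

# Whether to enable the virtual memory mechanism, which pages data and moves rarely accessed pages to swap. It uses a lot of memory, so it is best left disabled. (Virtual memory was deprecated in Redis 2.4 and later removed, so the vm-* directives below do not apply to Redis 7.0.)

vm-enabled no

# Location of the swap file

vm-swap-file /var/lib/redis/redis.swap

# Upper limit on the memory Redis may use, so that excessive use of physical memory does not hurt performance

vm-max-memory 0

# Each page is 32 bytes

vm-page-size 32

# Number of pages in the swap file

vm-pages 134217728

# Number of threads that access the swap file

vm-max-threads 4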

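############### Advanced configuration ###############

# The zipmap/ziplist names below come from older releases; Redis 7.0 uses hash-max-listpack-entries, hash-max-listpack-value and list-max-listpack-size, as in the filtered output above.

# While a hash has no more than this many entries, store it in a compact linear encoding to save space

hash-max-zipmap-entries 512

# While every value in a hash is no longer than this many bytes, store it in a compact linear encoding to save space

hash-max-zipmap-value 64

# Lists with fewer nodes than this use a compact, pointer-free encoding

list-max-ziplist-entries 512

# Lists whose node values are smaller than this many bytes use the compact encoding

list-max-ziplist-value 64

# Sets whose members are all integers and number fewer than this use the compact intset encoding

set-max-intset-entries 512

# Whether to enable active rehashing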

activerehashing yes
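To change a few of these directives without opening an editor, a small helper can replace an existing active line or append a new one. This is a sketch under assumptions: `set_directive` is a hypothetical name, `sed -i` is the GNU sed form available on CentOS, and values must not contain `|`, `&` or newlines because they are spliced into the sed expression. Since Redis uses the last occurrence of a directive, appending also works when the key is not set yet.

```shell
#!/bin/sh
# set_directive FILE KEY VALUE
# Replace every active "KEY ..." line in FILE with "KEY VALUE";
# append "KEY VALUE" if the key is not set yet. Commented lines are left alone.
set_directive() {
    file="$1"; key="$2"; value="$3"
    if grep -Eq "^[[:space:]]*$key[[:space:]]" "$file"; then
        sed -i "s|^[[:space:]]*$key[[:space:]].*|$key $value|" "$file"   # GNU sed
    else
        printf '%s %s\n' "$key" "$value" >> "$file"
    fi
}

# Demo on a copy; on the server you would pass /usr/local/redis/bin/redis.conf.
tmp=$(mktemp)
printf '%s\n' 'daemonize no' '# bind 127.0.0.1' 'port 6379' > "$tmp"
set_directive "$tmp" daemonize yes
set_directive "$tmp" maxmemory 100mb
cat "$tmp"
rm -f "$tmp"
```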

Full configuration file with comments (corrections are welcome)

# Redis configuration file example.

#

# Note that in order to read the configuration file, Redis must be

# started with the file path as first argument:

#

# ./redis-server /path/to/redis.conf

# Note on units: when memory size is needed, it is possible to specify

# it in the usual form of 1k 5GB 4M and so forth:

#

# 1k => 1000 bytes

# 1kb => 1024 bytes

# 1m => 1000000 bytes

# 1mb => 1024*1024 bytes

# 1g => 1000000000 bytes

# 1gb => 1024*1024*1024 bytes

#

# units are case insensitive so 1GB 1Gb 1gB are all the same.
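The unit rules above can be checked with a small shell function. This is an illustrative sketch, not Redis's own parser: `to_bytes` is a hypothetical name, it assumes an integer followed by an optional unit, and it additionally treats a plain `b` suffix as bytes.

```shell
#!/bin/sh
# to_bytes SIZE: convert a redis.conf memory size such as 1k, 1kb, 5GB or 4M to bytes.
# k/m/g are powers of 1000, kb/mb/gb are powers of 1024; units are case-insensitive.
to_bytes() {
    v=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    case "$v" in
        *kb) echo $(( ${v%kb} * 1024 )) ;;
        *mb) echo $(( ${v%mb} * 1024 * 1024 )) ;;
        *gb) echo $(( ${v%gb} * 1024 * 1024 * 1024 )) ;;
        *b)  echo $(( ${v%b} )) ;;
        *k)  echo $(( ${v%k} * 1000 )) ;;
        *m)  echo $(( ${v%m} * 1000000 )) ;;
        *g)  echo $(( ${v%g} * 1000000000 )) ;;
        *)   echo "$v" ;;          # no unit: already bytes
    esac
}

for size in 1k 1kb 1m 1mb 1g 1gb 5GB 4M; do
    printf '%-4s => %s bytes\n' "$size" "$(to_bytes "$size")"
done
```

The two-letter suffixes are matched before the one-letter ones, so `1kb` is never mistaken for `1k`.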

################################## INCLUDES ###################################

# Include one or more other config files here. This is useful if you

# have a standard template that goes to all Redis servers but also need

# to customize a few per-server settings. Include files can include

# other files, so use this wisely.

#

#

# Notice option "include" won't be rewritten by command "CONFIG REWRITE"

# from admin or Redis Sentinel. Since Redis always uses the last processed

# line as value of a configuration directive, you'd better put includes

# at the beginning of this file to avoid overwriting config change at runtime.

#

#

# If instead you are interested in using includes to override configuration

# options, it is better to use include as the last line.

#

#

# include /path/to/local.conf

# include /path/to/other.conf

################################## MODULES #####################################

# Load modules at startup. If the server is not able to load modules

# it will abort. It is possible to use multiple loadmodule directives.

#

#

# loadmodule /path/to/my_module.so

# loadmodule /path/to/other_module.so

################################## NETWORK #####################################

# By default, if no "bind" configuration directive is specified, Redis listens

# for connections from all the network interfaces available on the server.

# It is possible to listen to just one or multiple selected interfaces using

# the "bind" configuration directive, followed by one or more IP addresses.

#

#

# Examples:

#

# bind 192.168.1.100 10.0.0.1

# bind 127.0.0.1 ::1

#

# ~~~ WARNING ~~~ If the computer running Redis is directly exposed to the

# internet, binding to all the interfaces is dangerous and will expose the

# instance to everybody on the internet. So by default we uncomment the

# following bind directive, that will force Redis to listen only into

# the IPv4 loopback interface address (this means Redis will be able to

# accept connections only from clients running into the same computer it

# is running).

#

#

# IF YOU ARE SURE YOU WANT YOUR INSTANCE TO LISTEN TO ALL THE INTERFACES

# JUST COMMENT THE FOLLOWING LINE.

#

# ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

#bind 127.0.0.1

# Protected mode is a layer of security protection, in order to avoid that

# Redis instances left open on the internet are accessed and exploited.

#

#

# When protected mode is on and if:

#

# 1) The server is not binding explicitly to a set of addresses using the

# "bind" directive.

#

# 2) No password is configured.

#

# The server only accepts connections from clients connecting from the

# IPv4 and IPv6 loopback addresses 127.0.0.1 and ::1, and from Unix domain

# sockets.

#

#

# By default protected mode is enabled. You should disable it only if

# you are sure you want clients from other hosts to connect to Redis

# even if no authentication is configured, nor a specific set of interfaces

# are explicitly listed using the "bind" directive.

protected-mode no
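The protected-mode rule described in the comments above can be restated as a small decision function. This models only the conditions as written in this comment text (newer Redis releases may word or apply the rule differently), and bind and password checks still happen separately. `protected_mode_allows` and its yes/no arguments are hypothetical. Note that this file comments out `bind 127.0.0.1` and sets `protected-mode no`, so unless a password is configured, protected mode does nothing to stop remote clients.

```shell
#!/bin/sh
# protected_mode_allows PROTECTED_MODE BIND_SET PASSWORD_SET CLIENT
#   PROTECTED_MODE, BIND_SET, PASSWORD_SET: yes|no
#   CLIENT: an IP address, or "unix" for a Unix domain socket
# Prints "yes" if protected mode lets the client in, "no" otherwise.
protected_mode_allows() {
    if [ "$1" = yes ] && [ "$2" = no ] && [ "$3" = no ]; then
        # Protected mode is active: only loopback and Unix-socket clients are accepted.
        case "$4" in
            127.0.0.1|::1|unix) echo yes ;;
            *) echo no ;;
        esac
    else
        echo yes   # protected mode does not restrict this setup
    fi
}

protected_mode_allows yes no no 192.168.1.20   # prints no: remote client refused
protected_mode_allows no  no no 192.168.1.20   # prints yes: the setting used in this file
```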

# Accept connections on the specified port, default is 6379 (IANA #815344).

# If port 0 is specified Redis will not listen on a TCP socket.

port 6379

# TCP listen() backlog.

#

# In high requests-per-second environments you need an high backlog in order

# to avoid slow clients connections issues. Note that the Linux kernel

# will silently truncate it to the value of /proc/sys/net/core/somaxconn so

# make sure to raise both the value of somaxconn and tcp_max_syn_backlog

# in order to get the desired effect.

tcp-backlog 511
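Because the kernel silently caps the backlog at `net.core.somaxconn`, the value Redis actually gets is the smaller of the two. A hypothetical helper makes that explicit; it takes both numbers as arguments so it can be tried on any machine.

```shell
#!/bin/sh
# effective_backlog TCP_BACKLOG SOMAXCONN
# The kernel truncates the listen() backlog to somaxconn, so the smaller value wins.
effective_backlog() {
    if [ "$1" -gt "$2" ]; then
        echo "$2"
    else
        echo "$1"
    fi
}

effective_backlog 511 128    # prints 128: the kernel limit wins
effective_backlog 511 4096   # prints 511
```

On a real Linux host, pass the live value, e.g. `effective_backlog 511 "$(cat /proc/sys/net/core/somaxconn)"`. If it comes out lower than tcp-backlog, raise the kernel limits with `sysctl -w net.core.somaxconn=...` and `sysctl -w net.ipv4.tcp_max_syn_backlog=...` (choose values to suit your load).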

# Unix socket.

#

# Specify the path for the Unix socket that will be used to listen for

# incoming connections. There is no default, so Redis will not listen

# on a unix socket when not specified.

#

# unixsocket /tmp/redis.sock

# unixsocketperm 700

# Close the connection after a client is idle for N seconds (0 to disable)

timeout 0

# TCP keepalive.

#

# If non-zero, use SO_KEEPALIVE to send TCP ACKs to clients in absence

# of communication. This is useful for two reasons:

#

# 1) Detect dead peers.

#

# 2) Take the connection alive from the point of view of network

# equipment in the middle.

#

# On Linux, the specified value (in seconds) is the period used to send ACKs.

# Note that to close the connection the double of the time is needed.

# On other kernels the period depends on the kernel configuration.

#

# A reasonable value for this option is 300 seconds, which is the new

# Redis default starting with Redis 3.2.1.

tcp-keepalive 300

################################# GENERAL #####################################

# By default Redis does not run as a daemon. Use 'yes' if you need it.

# Note that Redis will write a pid file in /var/run/redis.pid when daemonized.

daemonize yes

# If you run Redis from upstart or systemd, Redis can interact with your

# supervision tree. Options:

# supervised no - no supervision interaction

# supervised upstart - signal upstart by putting Redis into SIGSTOP mode

# supervised systemd - signal systemd by writing READY=1 to $NOTIFY_SOCKET

# supervised auto - detect upstart or systemd method based on

# UPSTART_JOB or NOTIFY_SOCKET environment variables

# Note: these supervision methods only signal "process is ready."

# They do not enable continuous liveness pings back to your supervisor.

supervised no

# If a pid file is specified, Redis writes it where specified at startup

# and removes it at exit.

#

#

# When the server runs non daemonized, no pid file is created if none is

# specified in the configuration. When the server is daemonized, the pid file

# is used even if not specified, defaulting to "/var/run/redis.pid".

#

#

# Creating a pid file is best effort: if Redis is not able to create it

# nothing bad happens, the server will start and run normally.

pidfile /var/run/redis_6379.pid
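The pid-file rules in the comments above reduce to three cases. The sketch below encodes them; `pidfile_path` is a hypothetical helper, and an empty result means no pid file is written.

```shell
#!/bin/sh
# pidfile_path DAEMONIZE CONFIGURED_PIDFILE
# An explicit pidfile is always used; otherwise a daemonized server falls back
# to /var/run/redis.pid, and a foreground server writes no pid file (empty output).
pidfile_path() {
    if [ -n "$2" ]; then
        echo "$2"
    elif [ "$1" = yes ]; then
        echo /var/run/redis.pid
    else
        echo ""
    fi
}

pidfile_path yes /var/run/redis_6379.pid   # this file's settings
pidfile_path yes ""                        # daemonized, no pidfile line
pidfile_path no ""                         # foreground, no pidfile line
```

With the pid file known, the daemon can be stopped gracefully with `kill -TERM "$(cat /var/run/redis_6379.pid)"`, or simply with `redis-cli shutdown`.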

# Specify the server verbosity level.

# This can be one of:

# debug (a lot of information, useful for development/testing)

# verbose (many rarely useful info, but not a mess like the debug level)

# notice (moderately verbose, what you want in production probably)

# warning (only very important / critical messages are logged)

loglevel notice

# Specify the log file name. Also the empty string can be used to force

# Redis to log on the standard output. Note that if you use standard

# output for logging but daemonize, logs will be sent to /dev/null

logfile /var/log/redis/redis.log

# To enable logging to the system logger, just set 'syslog-enabled' to yes,

# and optionally update the other syslog parameters to suit your needs.

# syslog-enabled no

# Specify the syslog identity.

# syslog-ident redis

# Specify the syslog facility. Must be USER or between LOCAL0-LOCAL7.

# syslog-facility local0

# Set the number of databases. The default database is DB 0, you can select

# a different one on a per-connection basis using SELECT <dbid> where

# dbid is a number between 0 and 'databases'-1

databases 16

# By default Redis shows an ASCII art logo only when started to log to the

# standard output and if the standard output is a TTY. Basically this means

# that normally a logo is displayed only in interactive sessions.

#

# However it is possible to force the pre-4.0 behavior and always show a

# ASCII art logo in startup logs by setting the following option to yes.

always-show-logo yes

################################ SNAPSHOTTING ################################

#

# Save the DB on disk:

#

# save <seconds> <changes>

#

# Will save the DB if both the given number of seconds and the given

# number of write operations against the DB occurred.

#

# In the example below the behaviour will be to save:

# after 900 sec (15 min) if at least 1 key changed

# after 300 sec (5 min) if at least 10 keys changed

# after 60 sec if at least 10000 keys changed

#

# Note: you can disable saving completely by commenting out all "save" lines.

#

# It is also possible to remove all the previously configured save

# points by adding a save directive with a single empty string argument

# like in the following example:

#

# save ""

save 900 1

save 300 10

save 60 10000
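The save rule "snapshot when both thresholds of one line are met" can be modelled as follows. This is a simplified sketch: it assumes elapsed time and change count are both measured since the last successful save, ignores exact boundary handling and retry delays, and `should_snapshot` with its `seconds:changes` rule format is hypothetical. With no rules at all (the `save ""` case) it never snapshots.

```shell
#!/bin/sh
# should_snapshot ELAPSED_SECONDS CHANGES RULE...
# Each RULE is "seconds:changes", mirroring one "save <seconds> <changes>" line.
# A snapshot is due as soon as any single rule has both thresholds reached.
should_snapshot() {
    elapsed="$1"; changes="$2"; shift 2
    for rule in "$@"; do
        secs=${rule%%:*}
        min_changes=${rule##*:}
        if [ "$elapsed" -ge "$secs" ] && [ "$changes" -ge "$min_changes" ]; then
            echo yes
            return 0
        fi
    done
    echo no
}

# The three save lines in this file (left unquoted on purpose so they split into rules).
RULES="900:1 300:10 60:10000"
should_snapshot 120 50 $RULES     # prints no: no rule has both thresholds met
should_snapshot 301 10 $RULES     # prints yes: the 300:10 rule matches
```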

# By default Redis will stop accepting writes if RDB snapshots are enabled

# (at least one save point) and the latest background save failed.

# This will make the user aware (in a hard way) that data is not persisting

# on disk properly, otherwise chances are that no one will notice and some

# disaster will happen.

#

# If the background saving process will start working again Redis will

# automatically allow writes again.

#

# However if you have setup your proper monitoring of the Redis server

# and persistence, you may want to disable this feature so that Redis will

# continue to work as usual even if there are problems with disk,

# permissions, and so forth.

stop-writes-on-bgsave-error yes

# Compress string objects using LZF when dump .rdb databases?

# For default that's set to 'yes' as it's almost always a win.

# If you want to save some CPU in the saving child set it to 'no' but

# the dataset will likely be bigger if you have compressible values or keys.

rdbcompression yes

# Since version 5 of RDB a CRC64 checksum is placed at the end of the file.

# This makes the format more resistant to corruption but there is a performance

# hit to pay (around 10%) when saving and loading RDB files, so you can disable it

# for maximum performances.

#

# RDB files created with checksum disabled have a checksum of zero that will

# tell the loading code to skip the check.

rdbchecksum yes

# The filename where to dump the DB

dbfilename dump.rdb

# The working directory.

#

# The DB will be written inside this directory, with the filename specified

# above using the 'dbfilename' configuration directive.

#

# The Append Only File will also be created inside this directory.

#

# Note that you must specify a directory here, not a file name.

dir /var/lib/redis
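This file sets `logfile /var/log/redis/redis.log` and `dir /var/lib/redis`. Neither directory is created by `make install`, and Redis generally refuses to start if it cannot open its log file or change into its working directory, so it is worth checking them first. A minimal sketch: `last_value` and `preflight` are hypothetical helpers that assume one directive per line with no trailing comment.

```shell
#!/bin/sh
# last_value FILE KEY: value of the last active KEY line (Redis keeps the last one).
last_value() {
    grep -E "^[[:space:]]*$2[[:space:]]" "$1" | tail -n 1 |
        sed -E "s/^[[:space:]]*$2[[:space:]]+//"
}

# preflight FILE: report missing directories for "dir" and "logfile".
# Returns non-zero if anything is missing.
preflight() {
    status=0
    data_dir=$(last_value "$1" dir)
    log_file=$(last_value "$1" logfile | tr -d '"')   # logfile "" means stdout
    if [ -n "$data_dir" ] && [ ! -d "$data_dir" ]; then
        echo "missing data directory: $data_dir"
        status=1
    fi
    if [ -n "$log_file" ] && [ ! -d "$(dirname "$log_file")" ]; then
        echo "missing log directory: $(dirname "$log_file")"
        status=1
    fi
    return "$status"
}

# Demo on a throwaway file; on the server run: preflight /usr/local/redis/bin/redis.conf
tmp=$(mktemp)
printf '%s\n' 'dir /nonexistent/redis-data' 'logfile ""' > "$tmp"
preflight "$tmp" || echo "create the missing directories (mkdir -p) before starting redis-server"
rm -f "$tmp"
```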

################################# REPLICATION #################################

# Master-Replica replication. Use replicaof to make a Redis instance a copy of

# another Redis server. A few things to understand ASAP about Redis replication.

#

# +------------------+ +---------------+

# | Master | ---> | Replica |

# | (receive writes) | | (exact copy) |

# +------------------+ +---------------+

#

# 1) Redis replication is asynchronous, but you can configure a master to

# stop accepting writes if it appears to be not connected with at least

# a given number of replicas.

# 2) Redis replicas are able to perform a partial resynchronization with the

# master if the replication link is lost for a relatively small amount of

# time. You may want to configure the replication backlog size (see the next

# sections of this file) with a sensible value depending on your needs.

# 3) Replication is automatic and does not need user intervention. After a

# network partition replicas automatically try to reconnect to masters

# and resynchronize with them.

#

# replicaof <masterip> <masterport>

# If the master is password protected (using the "requirepass" configuration

# directive below) it is possible to tell the replica to authenticate before

# starting the replication synchronization process, otherwise the master will

# refuse the replica request.

#

# masterauth <master-password>

# When a replica loses its connection with the master, or when the replication

# is still in progress, the replica can act in two different ways:

#

# 1) if replica-serve-stale-data is set to 'yes' (the default) the replica will

# still reply to client requests, possibly with out of date data, or the

# data set may just be empty if this is the first synchronization.

#

# 2) if replica-serve-stale-data is set to 'no' the replica will reply with

# an error "SYNC with master in progress" to all the kind of commands

# but to INFO, REPLICAOF, AUTH, PING, SHUTDOWN, REPLCONF, ROLE, CONFIG,

# SUBSCRIBE, UNSUBSCRIBE, PSUBSCRIBE, PUNSUBSCRIBE, PUBLISH, PUBSUB,

# COMMAND, POST, HOST: and LATENCY.

#

replica-serve-stale-data yes

# You can configure a replica instance to accept writes or not. Writing against

# a replica instance may be useful to store some ephemeral data (because data

# written on a replica will be easily deleted after resync with the master) but

# may also cause problems if clients are writing to it because of a

# misconfiguration.

#

# Since Redis 2.6 by default replicas are read-only.

#

# Note: read only replicas are not designed to be exposed to untrusted clients

# on the internet. It's just a protection layer against misuse of the instance.

# Still a read only replica exports by default all the administrative commands

# such as CONFIG, DEBUG, and so forth. To a limited extent you can improve

# security of read only replicas using 'rename-command' to shadow all the

# administrative / dangerous commands.

replica-read-only yes

# Replication SYNC strategy: disk or socket.

#

# -------------------------------------------------------

# WARNING: DISKLESS REPLICATION IS EXPERIMENTAL CURRENTLY

# -------------------------------------------------------

#

# New replicas and reconnecting replicas that are not able to continue the replication

# process just receiving differences, need to do what is called a "full

# synchronization". An RDB file is transmitted from the master to the replicas.

# The transmission can happen in two different ways:

#

# 1) Disk-backed: The Redis master creates a new process that writes the RDB

# file on disk. Later the file is transferred by the parent

# process to the replicas incrementally.

# 2) Diskless: The Redis master creates a new process that directly writes the

# RDB file to replica sockets, without touching the disk at all.

#

# With disk-backed replication, while the RDB file is generated, more replicas

# can be queued and served with the RDB file as soon as the current child producing

# the RDB file finishes its work. With diskless replication instead once

# the transfer starts, new replicas arriving will be queued and a new transfer

# will start when the current one terminates.

#

# When diskless replication is used, the master waits a configurable amount of

# time (in seconds) before starting the transfer in the hope that multiple replicas

# will arrive and the transfer can be parallelized.

#

# With slow disks and fast (large bandwidth) networks, diskless replication

# works better.

repl-diskless-sync no

# When diskless replication is enabled, it is possible to configure the delay

# the server waits in order to spawn the child that transfers the RDB via socket

# to the replicas.

#

# This is important since once the transfer starts, it is not possible to serve

# new replicas arriving, that will be queued for the next RDB transfer, so the server

# waits a delay in order to let more replicas arrive.

#

# The delay is specified in seconds, and by default is 5 seconds. To disable

# it entirely just set it to 0 seconds and the transfer will start ASAP.

repl-diskless-sync-delay 5

# Replicas send PINGs to the server at a predefined interval. It's possible to change

# this interval with the repl-ping-replica-period option. The default value is 10

# seconds.

#

# repl-ping-replica-period 10

# The following option sets the replication timeout for:

#

# 1) Bulk transfer I/O during SYNC, from the point of view of replica.

# 2) Master timeout from the point of view of replicas (data, pings).

# 3) Replica timeout from the point of view of masters (REPLCONF ACK pings).

#

# It is important to make sure that this value is greater than the value

# specified for repl-ping-replica-period otherwise a timeout will be detected

# every time there is low traffic between the master and the replica.

#

# repl-timeout 60

# Disable TCP_NODELAY on the replica socket after SYNC?

#

# If you select "yes" Redis will use a smaller number of TCP packets and

# less bandwidth to send data to replicas. But this can add a delay for

# the data to appear on the replica side, up to 40 milliseconds with

# Linux kernels using a default configuration.

#

# If you select "no" the delay for data to appear on the replica side will

# be reduced but more bandwidth will be used for replication.

#

# By default we optimize for low latency, but in very high traffic conditions

# or when the master and replicas are many hops away, turning this to "yes" may

# be a good idea.

repl-disable-tcp-nodelay no

# Set the replication backlog size. The backlog is a buffer that accumulates

# replica data when replicas are disconnected for some time, so that when a replica

# wants to reconnect again, often a full resync is not needed, but a partial

# resync is enough, just passing the portion of data the replica missed while

# disconnected.

#

# The bigger the replication backlog, the longer the time the replica can be

# disconnected and later be able to perform a partial resynchronization.

#

# The backlog is only allocated once there is at least one replica connected.

#

# repl-backlog-size 1mb
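
# Rough sizing sketch (assumed numbers, not part of the stock file): the backlog

# has to hold every write produced while a replica is disconnected, so a

# reasonable size is about write rate x longest expected disconnection.

# For example, with about 1 MB/s of write traffic and outages of up to 60

# seconds you would want at least 60 MB, e.g.

# repl-backlog-size 64mb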

# After a master has no longer connected replicas for some time, the backlog

# will be freed. The following option configures the number of seconds that

# need to elapse, starting from the time the last replica disconnected, for

# the backlog buffer to be freed.

#

# Note that replicas never free the backlog for timeout, since they may be

# promoted to masters later, and should be able to correctly "partially

# resynchronize" with the replicas: hence they should always accumulate backlog.

#

# A value of 0 means to never release the backlog.

#

# repl-backlog-ttl 3600

# The replica priority is an integer number published by Redis in the INFO output.

# It is used by Redis Sentinel in order to select a replica to promote into a

# master if the master is no longer working correctly.

#

# A replica with a low priority number is considered better for promotion, so

# for instance if there are three replicas with priority 10, 100, 25 Sentinel will

# pick the one with priority 10, that is the lowest.

#

# However a special priority of 0 marks the replica as not able to perform the

# role of master, so a replica with priority of 0 will never be selected by

# Redis Sentinel for promotion.

#

# By default the priority is 100.

replica-priority 100

# It is possible for a master to stop accepting writes if there are fewer than

# N replicas connected, having a lag less than or equal to M seconds.

#

# The N replicas need to be in "online" state.

#

# The lag in seconds, that must be <= the specified value, is calculated from

# the last ping received from the replica, that is usually sent every second.

#

# This option does not GUARANTEE that N replicas will accept the write, but

# will limit the window of exposure for lost writes in case not enough replicas

# are available, to the specified number of seconds.

#

# For example to require at least 3 replicas with a lag <= 10 seconds use:

#

# min-replicas-to-write 3

# min-replicas-max-lag 10

#

# Setting one or the other to 0 disables the feature.

#

# By default min-replicas-to-write is set to 0 (feature disabled) and

# min-replicas-max-lag is set to 10.

# A Redis master is able to list the address and port of the attached

# replicas in different ways. For example the "INFO replication" section

# offers this information, which is used, among other tools, by

# Redis Sentinel in order to discover replica instances.

# Another place where this info is available is in the output of the

# "ROLE" command of a master.

#

# The listed IP address and port normally reported by a replica are obtained

# in the following way:

#

# IP: The address is auto detected by checking the peer address

# of the socket used by the replica to connect with the master.

#

# Port: The port is communicated by the replica during the replication

# handshake, and is normally the port that the replica is using to

# listen for connections.

#

# However when port forwarding or Network Address Translation (NAT) is

# used, the replica may be actually reachable via different IP and port

# pairs. The following two options can be used by a replica in order to

# report to its master a specific set of IP and port, so that both INFO

# and ROLE will report those values.

#

# There is no need to use both the options if you need to override just

# the port or the IP address.

#

# replica-announce-ip 5.5.5.5

# replica-announce-port 1234

################################## SECURITY ###################################

# Require clients to issue AUTH <PASSWORD> before processing any other

# commands. This might be useful in environments in which you do not trust

# others with access to the host running redis-server.

#

# This should stay commented out for backward compatibility and because most

# people do not need auth (e.g. they run their own servers).

#

# Warning: since Redis is pretty fast an outside user can try up to

# 150k passwords per second against a good box. This means that you should

# use a very strong password otherwise it will be very easy to break.

#

# requirepass foobared
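
# Illustrative usage (the password below is a placeholder): once requirepass is

# set, commands from unauthenticated clients are rejected with a NOAUTH error,

# so a client authenticates first, for example

#   redis-cli -a 'your-strong-password' ping

# or, inside an already open redis-cli session:

#   AUTH your-strong-password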

# Command renaming.

#

# It is possible to change the name of dangerous commands in a shared

# environment. For instance the CONFIG command may be renamed into something

# hard to guess so that it will still be available for internal-use tools

# but not available for general clients.

#

# Example:

#

# rename-command CONFIG b840fc02d524045429941cc15f59e41cb7be6c52

#

# It is also possible to completely kill a command by renaming it into

# an empty string:

#

# rename-command CONFIG ""

#

# Please note that changing the name of commands that are logged into the

# AOF file or transmitted to replicas may cause problems.

################################### CLIENTS ####################################

# Set the max number of connected clients at the same time. By default

# this limit is set to 10000 clients, however if the Redis server is not

# able to configure the process file limit to allow for the specified limit

# the max number of allowed clients is set to the current file limit

# minus 32 (as Redis reserves a few file descriptors for internal uses).

#

# Once the limit is reached Redis will close all the new connections sending

# an error 'max number of clients reached'.

#

# maxclients 10000
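
# Worked example (assumed limit): if the process may open at most 4096 file

# descriptors (ulimit -n 4096) and Redis cannot raise that limit, maxclients

# is reduced to 4096 - 32 = 4064 and a warning is written to the log.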

############################## MEMORY MANAGEMENT ################################

# Set a memory usage limit to the specified amount of bytes.

# When the memory limit is reached Redis will try to remove keys

# according to the eviction policy selected (see maxmemory-policy).

#

# If Redis can't remove keys according to the policy, or if the policy is

# set to 'noeviction', Redis will start to reply with errors to commands

# that would use more memory, like SET, LPUSH, and so on, and will continue

# to reply to read-only commands like GET.

#

# This option is usually useful when using Redis as an LRU or LFU cache, or to

# set a hard memory limit for an instance (using the 'noeviction' policy).

#

# WARNING: If you have replicas attached to an instance with maxmemory on,

# the size of the output buffers needed to feed the replicas are subtracted

# from the used memory count, so that network problems / resyncs will

# not trigger a loop where keys are evicted, and in turn the output

# buffer of replicas is full with DELs of keys evicted triggering the deletion

# of more keys, and so forth until the database is completely emptied.

#

# In short... if you have replicas attached it is suggested that you set a lower

# limit for maxmemory so that there is some free RAM on the system for replica

# output buffers (but this is not needed if the policy is 'noeviction').

#

# maxmemory <bytes>

# MAXMEMORY POLICY: how Redis will select what to remove when maxmemory

# is reached. You can select among eight behaviors:

#

# volatile-lru -> Evict using approximated LRU among the keys with an expire set.

# allkeys-lru -> Evict any key using approximated LRU.

# volatile-lfu -> Evict using approximated LFU among the keys with an expire set.

# allkeys-lfu -> Evict any key using approximated LFU.

# volatile-random -> Remove a random key among the ones with an expire set.

# allkeys-random -> Remove a random key, any key.

# volatile-ttl -> Remove the key with the nearest expire time (smallest TTL)

# noeviction -> Don't evict anything, just return an error on write operations.

#

# LRU means Least Recently Used

# LFU means Least Frequently Used

#

# LRU, LFU and volatile-ttl are all implemented using approximated

# randomized algorithms.

#

# Note: with any of the above policies, Redis will return an error on write

# operations, when there are no suitable keys for eviction.

#

# At the date of writing these commands are: set setnx setex append

# incr decr rpush lpush rpushx lpushx linsert lset rpoplpush sadd

# sinter sinterstore sunion sunionstore sdiff sdiffstore zadd zincrby

# zunionstore zinterstore hset hsetnx hmset hincrby incrby decrby

# getset mset msetnx exec sort

#

# The default is:

#

# maxmemory-policy noeviction

# LRU, LFU and minimal TTL algorithms are not precise algorithms but approximated

# algorithms (in order to save memory), so you can tune it for speed or

# accuracy. By default Redis will check five keys and pick the one that was

# used least recently, you can change the sample size using the following

# configuration directive.

#

# The default of 5 produces good enough results. 10 approximates very closely

# true LRU but costs more CPU. 3 is faster but not very accurate.

# maxmemory-samples 5
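
# Illustrative sketch (values are assumptions, not recommendations): to run

# this instance as a cache capped at 100 MB that evicts any key by

# approximated LRU, one could set

#   maxmemory 100mb

#   maxmemory-policy allkeys-lru

# The policy can also be changed on a running server with

#   redis-cli CONFIG SET maxmemory-policy allkeys-lru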

# Starting from Redis 5, by default a replica will ignore its maxmemory setting

# (unless it is promoted to master after a failover or manually). It means

# that the eviction of keys will be just handled by the master, sending the

# DEL commands to the replica as keys evict in the master side.

#

# This behavior ensures that masters and replicas stay consistent, and is usually

# what you want, however if your replica is writable, or you want the replica to have

# a different memory setting, and you are sure all the writes performed to the

# replica are idempotent, then you may change this default (but be sure to understand

# what you are doing).

#

# Note that since the replica by default does not evict, it may end using more

# memory than the one set via maxmemory (there are certain buffers that may

# be larger on the replica, or data structures may sometimes take more memory and so

# forth). So make sure you monitor your replicas and make sure they have enough

# memory to never hit a real out-of-memory condition before the master hits

# the configured maxmemory setting.

#

# replica-ignore-maxmemory yes

############################# LAZY FREEING ####################################

# Redis has two primitives to delete keys. One is called DEL and is a blocking

# deletion of the object. It means that the server stops processing new commands

# in order to reclaim all the memory associated with an object in a synchronous

# way. If the key deleted is associated with a small object, the time needed

# in order to execute the DEL command is very small and comparable to most other

# O(1) or O(log_N) commands in Redis. However if the key is associated with an

# aggregated value containing millions of elements, the server can block for

# a long time (even seconds) in order to complete the operation.

#

# For the above reasons Redis also offers non blocking deletion primitives

# such as UNLINK (non blocking DEL) and the ASYNC option of FLUSHALL and

# FLUSHDB commands, in order to reclaim memory in background. Those commands

# are executed in constant time. Another thread will incrementally free the

# object in the background as fast as possible.

#

# DEL, UNLINK and ASYNC option of FLUSHALL and FLUSHDB are user-controlled.

# It's up to the design of the application to understand when it is a good

# idea to use one or the other. However the Redis server sometimes has to

# delete keys or flush the whole database as a side effect of other operations.

# Specifically Redis deletes objects independently of a user call in the

# following scenarios:

#

# 1) On eviction, because of the maxmemory and maxmemory policy configurations,

# in order to make room for new data, without going over the specified

# memory limit.

# 2) Because of expire: when a key with an associated time to live (see the

# EXPIRE command) must be deleted from memory.

# 3) Because of a side effect of a command that stores data on a key that may

# already exist. For example the RENAME command may delete the old key

# content when it is replaced with another one. Similarly SUNIONSTORE

# or SORT with STORE option may delete existing keys. The SET command

# itself removes any old content of the specified key in order to replace

# it with the specified string.

# 4) During replication, when a replica performs a full resynchronization with

# its master, the content of the whole database is removed in order to

# load the RDB file just transferred.

#

# In all the above cases the default is to delete objects in a blocking way,

# like if DEL was called. However you can configure each case specifically

# in order to instead release memory in a non-blocking way like if UNLINK

# was called, using the following configuration directives:

lazyfree-lazy-eviction no

lazyfree-lazy-expire no

lazyfree-lazy-server-del no

replica-lazy-flush no
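
# Illustrative usage (the key name is hypothetical): to drop a very large key

# without blocking the server, a client can call UNLINK instead of DEL, e.g.

#   UNLINK big:hash

# and use FLUSHALL ASYNC or FLUSHDB ASYNC instead of their blocking forms.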

############################## APPEND ONLY MODE ###############################

# By default Redis asynchronously dumps the dataset on disk. This mode is

# good enough in many applications, but an issue with the Redis process or

# a power outage may result in a few minutes of writes lost (depending on

# the configured save points).

#

# The Append Only File is an alternative persistence mode that provides

# much better durability. For instance using the default data fsync policy

# (see later in the config file) Redis can lose just one second of writes in a

# dramatic event like a server power outage, or a single write if something

# wrong with the Redis process itself happens, but the operating system is

# still running correctly.

#

# AOF and RDB persistence can be enabled at the same time without problems.

# If the AOF is enabled on startup Redis will load the AOF, that is the file

# with the better durability guarantees.

#

# Please check http://redis.io/topics/persistence for more information.

appendonly no
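
# Illustrative sketch: to enable AOF persistence, change the line above to

#   appendonly yes

# or switch a running server over without a restart (this starts a background

# AOF rewrite; run CONFIG REWRITE afterwards to save the change to this file):

#   redis-cli CONFIG SET appendonly yes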

# The name of the append only file (default: "appendonly.aof")

appendfilename "appendonly.aof"

# The fsync() call tells the Operating System to actually write data on disk

# instead of waiting for more data in the output buffer. Some OS will really flush

# data on disk, some other OS will just try to do it ASAP.

#

# Redis supports three different modes:

#

# no: don't fsync, just let the OS flush the data when it wants. Faster.

# always: fsync after every write to the append only log. Slow, Safest.

# everysec: fsync only one time every second. Compromise.

#

# The default is "everysec", as that's usually the right compromise between

# speed and data safety. It's up to you to understand if you can relax this to

# "no" that will let the operating system flush the output buffer when

# it wants, for better performance (but if you can live with the idea of

# some data loss consider the default persistence mode that's snapshotting),

# or on the contrary, use "always" that's very slow but a bit safer than

# everysec.

#

# More details please check the following article:

# http://antirez.com/post/redis-persistence-demystified.html

#

# If unsure, use "everysec".

# appendfsync always

appendfsync everysec

# appendfsync no

# When the AOF fsync policy is set to always or everysec, and a background

# saving process (a background save or AOF log background rewriting) is

# performing a lot of I/O against the disk, in some Linux configurations

# Redis may block too long on the fsync() call. Note that there is no fix for

# this currently, as even performing fsync in a different thread will block

# our synchronous write(2) call.

#

# In order to mitigate this problem it's possible to use the following option

# that will prevent fsync() from being called in the main process while a

# BGSAVE or BGREWRITEAOF is in progress.

#

# This means that while another child is saving, the durability of Redis is

# the same as "appendfsync no". In practical terms, this means that it is

# possible to lose up to 30 seconds of log in the worst scenario (with the

# default Linux settings).

#

# If you have latency problems turn this to "yes". Otherwise leave it as

# "no" that is the safest pick from the point of view of durability.

no-appendfsync-on-rewrite no

# Automatic rewrite of the append only file.

# Redis is able to automatically rewrite the log file implicitly calling

# BGREWRITEAOF when the AOF log size grows by the specified percentage.

#

# This is how it works: Redis remembers the size of the AOF file after the

# latest rewrite (if no rewrite has happened since the restart, the size of

# the AOF at startup is used).

#

# This base size is compared to the current size. If the current size is

# bigger than the specified percentage, the rewrite is triggered. Also

# you need to specify a minimal size for the AOF file to be rewritten, this

# is useful to avoid rewriting the AOF file even if the percentage increase

# is reached but it is still pretty small.

#

# Specify a percentage of zero in order to disable the automatic AOF

# rewrite feature.

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb
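
# Worked example with the two values above: if the AOF was 100mb after the

# last rewrite, the next automatic rewrite fires once it grows to 200mb

# (a 100% increase). A fresh 10mb AOF is not rewritten when it reaches 20mb,

# because the file must also be larger than auto-aof-rewrite-min-size (64mb).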

# An AOF file may be found to be truncated at the end during the Redis

# startup process, when the AOF data gets loaded back into memory.

# This may happen when the system where Redis is running

# crashes, especially when an ext4 filesystem is mounted without the

# data=ordered option (however this can't happen when Redis itself

# crashes or aborts but the operating system still works correctly).

#

# Redis can either exit with an error when this happens, or load as much

# data as possible (the default now) and start if the AOF file is found

# to be truncated at the end. The following option controls this behavior.

#

# If aof-load-truncated is set to yes, a truncated AOF file is loaded and

# the Redis server starts emitting a log to inform the user of the event.

# Otherwise if the option is set to no, the server aborts with an error

# and refuses to start. When the option is set to no, the user is required

# to fix the AOF file using the "redis-check-aof" utility before restarting

# the server.

#

# Note that if the AOF file will be found to be corrupted in the middle

# the server will still exit with an error. This option only applies when

# Redis will try to read more data from the AOF file but not enough bytes

# will be found.

aof-load-truncated yes

# When rewriting the AOF file, Redis is able to use an RDB preamble in the

# AOF file for faster rewrites and recoveries. When this option is turned

# on the rewritten AOF file is composed of two different stanzas:

#

# [RDB file][AOF tail]

#

# When loading Redis recognizes that the AOF file starts with the "REDIS"

# string and loads the prefixed RDB file, and continues loading the AOF

# tail.

aof-use-rdb-preamble yes

################################ LUA SCRIPTING ###############################

# Max execution time of a Lua script in milliseconds.

#

# If the maximum execution time is reached Redis will log that a script is

# still in execution after the maximum allowed time and will start to

# reply to queries with an error.

#

# When a long running script exceeds the maximum execution time only the

# SCRIPT KILL and SHUTDOWN NOSAVE commands are available. The first can be

# used to stop a script that has not yet called any write commands. The second

# is the only way to shut down the server in the case a write command was

# already issued by the script but the user doesn't want to wait for the natural

# termination of the script.

#

# Set it to 0 or a negative value for unlimited execution without warnings.

lua-time-limit 5000

################################ REDIS CLUSTER ###############################

#

# ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# WARNING EXPERIMENTAL: Redis Cluster is considered to be stable code, however

# in order to mark it as "mature" we need to wait for a non trivial percentage

# of users to deploy it in production.

# ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

#

# Normal Redis instances can't be part of a Redis Cluster; only nodes that are

# started as cluster nodes can. In order to start a Redis instance as a

# cluster node enable the cluster support uncommenting the following:

#

# cluster-enabled yes

# Every cluster node has a cluster configuration file. This file is not

# intended to be edited by hand. It is created and updated by Redis nodes.

# Every Redis Cluster node requires a different cluster configuration file.

# Make sure that instances running in the same system do not have

# overlapping cluster configuration file names.

#

# cluster-config-file nodes-6379.conf

# Cluster node timeout is the amount of milliseconds a node must be unreachable

# for it to be considered in failure state.

# Most other internal time limits are multiple of the node timeout.

#

# cluster-node-timeout 15000

# A replica of a failing master will avoid starting a failover if its data

# looks too old.

#

# There is no simple way for a replica to actually have an exact measure of

# its "data age", so the following two checks are performed:

#

# 1) If there are multiple replicas able to failover, they exchange messages

# in order to try to give an advantage to the replica with the best

# replication offset (more data from the master processed).

# Replicas will try to get their rank by offset, and apply to the start

# of the failover a delay proportional to their rank.

#

# 2) Every single replica computes the time of the last interaction with

# its master. This can be the last ping or command received (if the master

# is still in the "connected" state), or the time that elapsed since the

# disconnection with the master (if the replication link is currently down).

# If the last interaction is too old, the replica will not try to failover

# at all.
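The first check above can be illustrated with a small model. This sketch assumes the behavior found in recent Redis sources (cluster.c). A replica's rank is the number of sibling replicas with a strictly larger replication offset. Its failover is delayed by a fixed 500 ms, plus a random 0-499 ms, plus 1000 ms per rank. The function names are hypothetical, and the constants should be checked against the Redis version you actually run.

```shell
# Hypothetical helper: rank = number of OTHER replicas of the same master
# whose replication offset is strictly greater than ours (rank 0 = best).
#   $1 = this replica's offset, remaining args = sibling offsets
replica_rank() {
    my_offset="$1"; shift
    rank=0
    for other in "$@"; do
        if [ "$other" -gt "$my_offset" ]; then
            rank=$((rank + 1))
        fi
    done
    echo "$rank"
}

# Minimum failover delay in ms, assuming 500 ms fixed + 1000 ms per rank.
# Redis also adds a random 0-499 ms, which this sketch leaves out.
min_failover_delay_ms() {
    rank="$1"
    echo $((500 + rank * 1000))
}
```

For example, a replica at offset 100 with siblings at 200, 150 and 50 has rank 2. It therefore waits at least 2500 ms before asking for votes.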

#

# The point "2" can be tuned by user. Specifically a replica will not perform

# the failover if, since the last interaction with the master, the time

# elapsed is greater than:

#

# (node-timeout * replica-validity-factor) + repl-ping-replica-period

#

# So for example if node-timeout is 30 seconds, and the replica-validity-factor

# is 10, and assuming a default repl-ping-replica-period of 10 seconds, the

# replica will not try to failover if it was not able to talk with the master

# for longer than 310 seconds.

#

# A large replica-validity-factor may allow replicas with too old data to failover

# a master, while a too small value may prevent the cluster from being able to

# elect a replica at all.

#

# For maximum availability, it is possible to set the replica-validity-factor

# to a value of 0, which means, that replicas will always try to failover the

# master regardless of the last time they interacted with the master.

# (However they'll always try to apply a delay proportional to their

# offset rank).

#

# Zero is the only value able to guarantee that when all the partitions heal

# the cluster will always be able to continue.

#

# cluster-replica-validity-factor 10
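The data-age limit described above is plain arithmetic. The hypothetical helper below takes `cluster-node-timeout` in milliseconds (as in this file) and `repl-ping-replica-period` in seconds. It reproduces the 310-second example. With the defaults of 15000 ms, a factor of 10 and a 10 s ping period, it gives 160 seconds.

```shell
# Hypothetical helper: longest time (seconds) a replica may have been out
# of touch with its master and still attempt a failover:
#   (node-timeout * replica-validity-factor) + repl-ping-replica-period
#   $1 = cluster-node-timeout (ms), $2 = validity factor, $3 = ping period (s)
max_replica_data_age_s() {
    node_timeout_ms="$1"
    validity_factor="$2"
    ping_period_s="$3"
    # A factor of 0 disables the data-age check entirely.
    if [ "$validity_factor" -eq 0 ]; then
        echo "unlimited"
        return
    fi
    # Convert the product from ms to s, then add the ping period.
    echo $(( node_timeout_ms * validity_factor / 1000 + ping_period_s ))
}
```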

# Cluster replicas are able to migrate to orphaned masters, that are masters

# that are left without working replicas. This improves the cluster ability

# to resist failures, as otherwise an orphaned master can't be failed over

# in case of failure if it has no working replicas.

#

# Replicas migrate to orphaned masters only if there are still at least a

# given number of other working replicas for their old master. This number

# is the "migration barrier". A migration barrier of 1 means that a replica

# will migrate only if there is at least 1 other working replica for its master

# and so forth. It usually reflects the number of replicas you want for every

# master in your cluster.

#

# Default is 1 (replicas migrate only if their masters remain with at least

# one replica). To disable migration just set it to a very large value.

# A value of 0 can be set but is useful only for debugging and dangerous

# in production.

#

# cluster-migration-barrier 1
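The barrier rule above reduces to one comparison. This is a simplified sketch: it models only the condition stated in the comments. Redis additionally requires the target master to be orphaned and applies further checks. The name `can_migrate` is hypothetical.

```shell
# Hypothetical helper (simplified model, not Redis source): may a replica
# leave its master to adopt an orphaned one?
#   $1 = working replicas the old master would keep, excluding this one
#   $2 = cluster-migration-barrier
can_migrate() {
    other_working="$1"
    barrier="$2"
    # Migrate only if at least <barrier> other working replicas remain.
    if [ "$other_working" -ge "$barrier" ]; then
        echo "migrate"
    else
        echo "stay"
    fi
}
```

With the default barrier of 1, a replica whose master has one other healthy replica may migrate. A sole replica stays put. A barrier of 0 lets even the last replica leave, which is why the comments call it dangerous in production.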

# By default Redis Cluster nodes stop accepting queries if they detect there

# is at least a hash slot uncovered (no available node is serving it).

# This way if the cluster is partially down (for example a range of hash slots

# are no longer covered) all the cluster becomes, eventually, unavailable.

# It automatically returns available as soon as all the slots are covered again.

#

# However sometimes you want the subset of the cluster which is working,

# to continue to accept queries for the part of the key space that is still

# covered. In order to do so, just set the cluster-require-full-coverage

# option to no.

#

# cluster-require-full-coverage yes
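The effect of `cluster-require-full-coverage` on a node's view of the cluster state can be modeled as follows. This simplified sketch uses the 16384 hash slots of Redis Cluster. Real nodes also weigh other conditions, such as whether they can reach a majority of masters, and the hypothetical `cluster_state` function ignores these.

```shell
# Hypothetical helper (simplified model): cluster_state as seen by a node.
#   $1 = number of hash slots currently served by a reachable node
#   $2 = value of cluster-require-full-coverage (yes|no)
TOTAL_SLOTS=16384

cluster_state() {
    covered="$1"
    require_full="$2"
    # With full coverage required, any uncovered slot fails the whole cluster.
    if [ "$require_full" = "yes" ] && [ "$covered" -lt "$TOTAL_SLOTS" ]; then
        echo "fail"
    else
        echo "ok"
    fi
}
```

With `no`, the cluster stays available for the covered part of the key space. However, commands whose keys hash to an uncovered slot still return an error.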

# This option, when set to yes, prevents replicas from trying to fail over their

# master during master failures. However the master can still perform a

# manual failover, if forced to do so.

#

# This is useful in different scenarios, especially in the case of multiple

# data center operations, where we want one side never to be promoted unless

# there is a total DC failure.

#

# cluster-replica-no-failover no
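For the multi-datacenter case above, one option is to generate this setting per site. The sketch assumes a hypothetical naming scheme in which the disaster-recovery site is called `dr`. Replicas there get `cluster-replica-no-failover yes`. An operator can still promote one of them manually by running `CLUSTER FAILOVER` on that replica.

```shell
# Hypothetical helper: emit the cluster-replica-no-failover line for a
# replica depending on the site it runs in ("dr" is an assumed site name).
replica_failover_conf() {
    site="$1"
    case "$site" in
        # Disaster-recovery replicas never fail over automatically.
        dr) echo "cluster-replica-no-failover yes" ;;
        # Replicas in any other site keep normal automatic failover.
        *)  echo "cluster-replica-no-failover no" ;;
    esac
}
```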

# In order to setup your cluster make sure to read the documentation

# available at the http://redis.io web site.

########################## CLUSTER DOCKER/NAT support ########################

# In certain deployments, Redis Cluster nodes address discovery fails, because

# addresses are NAT-ted or because ports are forwarded (the typical case is

# Docker and other containers).

#

# In order to make Redis Cluster work in such environments, a static

# configuration where each node knows its public address is needed. The

# following two options are used for this scope, and are:

#

# * cluster-announce-ip

# * cluster-announce-port

# * cluster-announce-bus-port

#

# Each one instructs the node about its address, client port, and cluster message

# bus port. The information is then published in the header of the bus packets

# so that other nodes will be able to correctly map the address of the node

# publishing the information.

#

# If the above options are not used, the normal Redis Cluster auto-detection

# will be used instead.
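Below is a minimal sketch of the three announce settings for one NAT-ed or containerized node. The helper name and the 203.0.113.10 address (a documentation range) are illustrative. When the bus port is not passed, the sketch assumes the default layout of client port + 10000. That assumption does not hold if the bus port was remapped separately, so pass it explicitly in that case.

```shell
# Hypothetical helper: print announce settings for a node behind NAT/Docker.
#   $1 = public IP that other nodes and clients should use
#   $2 = public (mapped) client port
#   $3 = public (mapped) cluster bus port; defaults to client port + 10000
announce_conf() {
    ip="$1"
    port="$2"
    bus_port="${3:-$((port + 10000))}"
    echo "cluster-announce-ip ${ip}"
    echo "cluster-announce-port ${port}"
    echo "cluster-announce-bus-port ${bus_port}"
}
```

For example, `announce_conf 203.0.113.10 7000 27000 >> redis-7000.conf` would append settings for a container whose bus port was mapped independently.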

#

# Note that when remapped, the bus port may not be at the fixed offset of