目录

一.本文基于上一篇文章keepalived环境来做的,主机信息如下

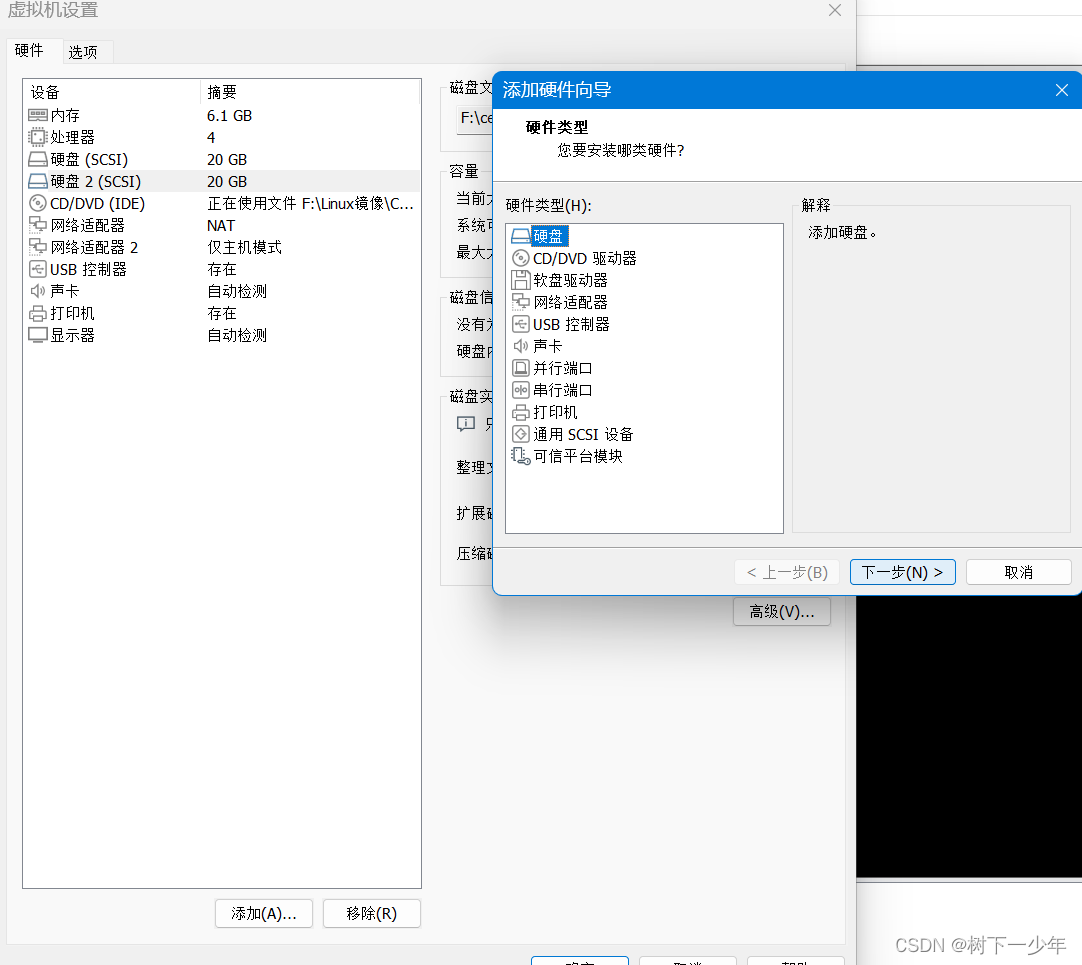

二.为两台虚拟机准备添加一块新硬盘设备

三.安装drbd9

1.使用扩展源的rpm包来下载

2.创建资源并挂载到新增的硬盘

3.主设备升级身份

4.主备两个设备手动切换身份演示

四.安装配置nfs

五.安装keeepalived

六.编辑keepalived.conf配置文件

1.主设备配置文件如下

2.备设备配置文件如下

3.脚本文件

七.测试

1.主设备nfs掉点,查看keepalived调用探测脚本是否能够将nfs重新启动

2.主设备keepalived掉点,查看VIP和挂载目录是否能够飘逸到备设备

I. This article builds on the keepalived environment from the previous article; host information is below

Primary keepalived + DRBD: 192.168.2.130 (main)

Backup keepalived + DRBD: 192.168.2.133 (serverc)

VIP:192.168.2.100

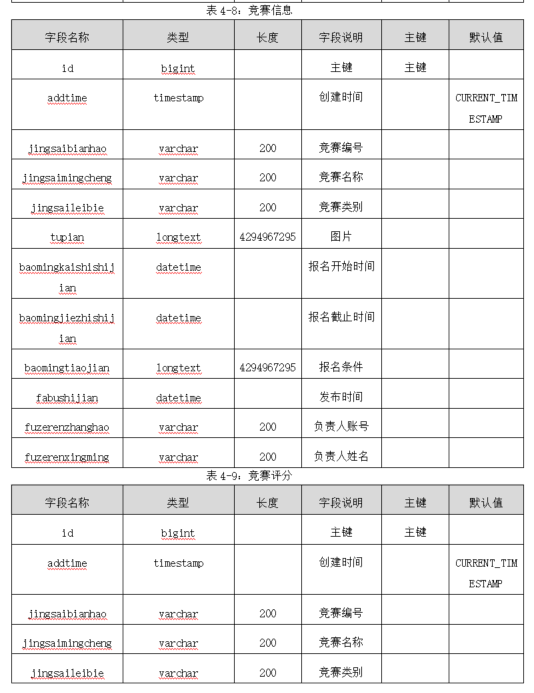

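II. Add a new hard disk to both virtual machines

Do this while the VMs are powered off (if you add the disk while a VM is running, reboot that VM afterwards). Unless you need particular names, just click Next through the wizard. Do this on both machines.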
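Once added, the new disk should appear as an empty device (sdb in the lsblk output below). DRBD will take over the whole device, so it can be worth checking first that the disk carries no partitions, LVM volumes or filesystem signature. Below is a minimal bash sketch. It assumes the disk is /dev/sdb, as in this article, and the function name check_blank_disk is hypothetical:

```bash
#!/bin/bash
# Hypothetical pre-flight check: make sure a newly added disk has no
# partitions, LVM volumes or filesystem signature before DRBD uses it.

# check_blank_disk DEVICE
# Prints a message and returns 0 if DEVICE looks unused, 1 otherwise.
check_blank_disk() {
    local dev="$1" out type fstype
    local -a rows

    # -r: raw output, -n: no header line, -o: columns to print.
    # Child devices (partitions, LVs) show up as additional rows.
    if ! out=$(lsblk -rno TYPE,FSTYPE "$dev" 2>/dev/null); then
        echo "$dev: not found" >&2
        return 1
    fi

    mapfile -t rows <<< "$out"
    if [ "${#rows[@]}" -gt 1 ]; then
        echo "$dev: has partitions or other child devices" >&2
        return 1
    fi

    # A blank disk has TYPE "disk" and an empty FSTYPE column.
    read -r type fstype <<< "${rows[0]}"
    if [ "$type" != "disk" ] || [ -n "$fstype" ]; then
        echo "$dev: type=${type:-unknown} fstype=${fstype:-none}, not blank" >&2
        return 1
    fi

    echo "$dev looks unused"
}
```

Run check_blank_disk /dev/sdb on each node. A non-zero return means something is already on the disk and should be examined before drbdadm create-md.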

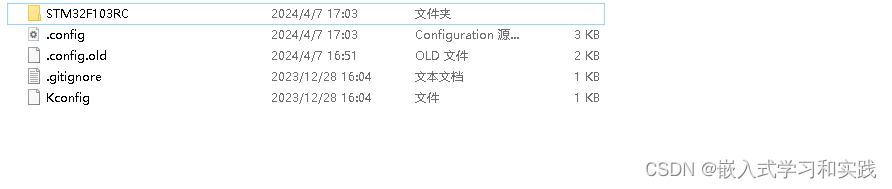

[root@main ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 19G 0 part

├─centos-root 253:0 0 17G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm

sdb 8:16 0 20G 0 disk # the disk just added

sr0 11:0 1 4.4G 0 rom /cdrom

III. Install DRBD 9

1. Install from the ELRepo RPM packages

Run on both the primary and the backup.

[root@main ~]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

[root@main ~]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

[root@main ~]# yum install gcc gcc-c++ make glibc flex kernel* -y

[root@main ~]# yum install -y drbd90-utils kmod-drbd90

[root@main ~]# modprobe drbd

[root@main ~]# echo drbd > /etc/modules-load.d/drbd.conf

[root@main ~]# lsmod | grep drbd

drbd 654854 0

libcrc32c 12644 5 xfs,drbd,ip_vs,nf_nat,nf_conntrack

[root@main drbd.d]# systemctl start drbd # start DRBD

[root@main ~]# tree /etc/drbd.d # location of the configuration and resource files

/etc/drbd.d

├── global_common.conf

└── r0.res

0 directories, 2 files

[root@main ~]# cat /etc/drbd.conf

# You can find an example in /usr/share/doc/drbd.../drbd.conf.example

include "drbd.d/global_common.conf";

include "drbd.d/*.res";

2. Create the resource on the new disk

[root@main drbd.d]# cat r0.res # define resource r0 on both nodes; the file can simply be copied to the other node

resource r0 {

disk /dev/sdb;

device /dev/drbd0;

meta-disk internal;

on main {

address 192.168.2.130:7789;

}

on serverc {

address 192.168.2.133:7789;

}

}

[root@main drbd.d]# drbdadm create-md r0

initializing activity log

initializing bitmap (640 KB) to all zero

Writing meta data...

New drbd meta data block successfully created.

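[root@main drbd.d]# drbdadm up r0 # create-md and up are run on both nodes

3. Promote the primary

Right after installation both nodes are Secondary, so the primary has to be promoted manually.

With DRBD 9, follow the initial sync with watch drbdadm status <resource name>.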

[root@main drbd.d]# drbdadm primary r0 --force

[root@main drbd.d]# mkfs.xfs /dev/drbd0 # on the primary only, not on the backup

[root@main drbd.d]# mkdir /data

[root@main drbd.d]# mount /dev/drbd0 /data

[root@main drbd.d]# drbdadm status r0 # show the status; for the role alone, use drbdadm role r0

r0 role:Primary

disk:UpToDate

serverc role:Secondary

peer-disk:UpToDate

[root@serverc ~]# drbdadm status r0 # status on the backup

r0 role:Secondary

disk:UpToDate

main role:Primary

peer-disk:UpToDate
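A script does not have to watch drbdadm status interactively. It can wait for the initial sync by polling until the peer disk reports peer-disk:UpToDate, as in the status output above. Below is a minimal sketch. The function name wait_for_sync, the 5-second poll interval and the 600-second default timeout are assumptions:

```bash
#!/bin/bash
# Hypothetical helper: block until DRBD reports the peer disk as UpToDate,
# i.e. the initial sync of the resource has finished.

# wait_for_sync RESOURCE [TIMEOUT_SECONDS]
wait_for_sync() {
    local res="$1" timeout="${2:-600}" waited=0

    # While syncing, the peer disk is reported with another state
    # (e.g. Inconsistent); afterwards the status shows peer-disk:UpToDate.
    until drbdadm status "$res" 2>/dev/null | grep -q 'peer-disk:UpToDate'; do
        if [ "$waited" -ge "$timeout" ]; then
            echo "$res: still not in sync after ${timeout}s" >&2
            return 1
        fi
        sleep 5
        waited=$((waited + 5))
    done
    echo "$res: peer disk is UpToDate"
}
```

For example, wait_for_sync r0 3600 blocks for up to an hour and returns non-zero if the sync has not finished by then.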

[root@main ~]# mkfs.xfs /dev/drbd0

meta-data=/dev/drbd0 isize=512 agcount=4, agsize=1310678 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=5242711, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@main ~]# mount /dev/drbd0 /data # on the primary: check the mount and write a test file

[root@main ~]# df -h | grep data

/dev/drbd0 20G 33M 20G 1% /data

[root@main drbd.d]# ll /data

total 0

[root@main drbd.d]# echo hello > /data/a.txt

[root@main drbd.d]# ll /data/

total 4

-rw-r--r-- 1 root root 6 Mar 29 21:53 a.txt

[root@main drbd.d]# cat /data/a.txt

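hello

4. Demo: manually switching roles between primary and backup

First unmount /data and demote the primary, then promote the backup and mount /data there.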

[root@main ~]# drbdadm role r0

Primary

[root@main ~]# umount /data

[root@main ~]# drbdadm secondary r0

[root@main ~]# drbdadm role r0

Secondary

[root@serverc ~]# drbdadm role r0

Secondary

[root@serverc ~]# drbdadm primary r0

[root@serverc ~]# mount /dev/drbd0 /data

[root@serverc ~]# drbdadm role r0

Primary
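The manual switch always follows the same order: on the old primary, unmount before demoting; on the new one, promote before mounting. Below is a minimal sketch of two helpers that enforce this order and stop at the first failing step. The function names demote_node and promote_node are hypothetical; r0, /dev/drbd0 and /data are taken from this article:

```bash
#!/bin/bash
# Hypothetical helpers for a manual DRBD role switch.
RES=r0
DEV=/dev/drbd0
MNT=/data

# Run on the current Primary: release the filesystem, then give up the role.
demote_node() {
    if mountpoint -q "$MNT"; then
        umount "$MNT" || return 1    # fails if a process still uses /data
    fi
    drbdadm secondary "$RES"
}

# Run on the node taking over: take the role, then mount the filesystem.
promote_node() {
    # normally refused while the peer is still Primary
    drbdadm primary "$RES" || return 1
    mountpoint -q "$MNT" || mount "$DEV" "$MNT"
}
```

Run demote_node on main first, then promote_node on serverc.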

# the role transition is clearly visible in the log

#tail -f /var/log/messages

Mar 30 13:38:53 main kernel: XFS (drbd0): Unmounting Filesystem

Mar 30 13:39:05 main kernel: drbd r0: Preparing cluster-wide state change 1582556808 (1->-1 3/2)

Mar 30 13:39:05 main kernel: drbd r0: State change 1582556808: primary_nodes=0, weak_nodes=0

Mar 30 13:39:05 main kernel: drbd r0: Committing cluster-wide state change 1582556808 (1ms)

Mar 30 13:39:05 main kernel: drbd r0: role( Primary -> Secondary ) [secondary]

Mar 30 13:39:35 main kernel: drbd r0 serverc: Preparing remote state change 1834794712

Mar 30 13:39:35 main kernel: drbd r0 serverc: Committing remote state change 1834794712 (primary_nodes=1)

Mar 30 13:39:35 main kernel: drbd r0 serverc: peer( Secondary -> Primary ) [remote]

IV. Install and configure NFS

Run on both the primary and the backup.

[root@main drbd.d]# yum install -y rpcbind nfs-utils

[root@main drbd.d]# vim /etc/exports

[root@main ~]# cat /etc/exports

/data 192.168.2.0/24(rw,sync,no_root_squash)

[root@serverc ~]# cat /etc/exports

/data 192.168.2.0/24(rw,sync,no_root_squash)

systemctl start rpcbind nfs

V. Install keepalived

Deploy it as described in the previous article.

VI. Edit keepalived.conf

1. Configuration file on the primary

[root@main keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.2.130

smtp_connect_timeout 30

router_id LVS_DEVEL1

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

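vrrp_script chk_nfs { # must be defined before the vrrp_instance that references it, otherwise the script is not picked up (this cost me quite some time); the order of track_script and the three notify lines after it also seemed to matter, so experiment for yourself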

script "/etc/keepalived/chk_nfs.sh"

interval 2 # run the check every 2 seconds

weight -40

}

vrrp_instance VI_1 {

state MASTER # primary

interface ens33

virtual_router_id 51

mcast_src_ip 192.168.2.130

priority 100

advert_int 1

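nopreempt # non-preemptive: a higher-priority node that comes back does not take the VIP back; keepalived only honors this when the initial state is BACKUP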

authentication {

auth_type PASS

auth_pass 123456

}

track_script { # reference the check script

chk_nfs

}

notify_master /etc/keepalived/notify_master.sh # state-change scripts (they switch DRBD/NFS and write logs), written below

notify_backup /etc/keepalived/notify_backup.sh

notify_fault /etc/keepalived/notify_fault.sh

virtual_ipaddress { #VIP

192.168.2.100

}

}

2. Configuration file on the backup

[root@serverc keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL2

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_script chk_nfs {

script "/etc/keepalived/chk_nfs.sh"

interval 2

weight -40 # same as on the primary

}

vrrp_instance VI_1 {

state BACKUP # backup

interface ens33

virtual_router_id 51

priority 80 # lower than the primary

advert_int 1

nopreempt

authentication {

auth_type PASS

auth_pass 123456

}

track_script {

chk_nfs

}

notify_master /etc/keepalived/notify_master.sh

notify_backup /etc/keepalived/notify_backup.sh

notify_fault /etc/keepalived/notify_fault.sh

virtual_ipaddress {

192.168.2.100

}

}

3. Script files

Create these on both nodes.

[root@main keepalived]# cat chk_nfs.sh # NFS check script

#!/bin/bash

/usr/bin/systemctl is-active --quiet nfs

if [ $? -ne 0 ] # NFS is not running: try to start it

then

systemctl start nfs

if [ $? -ne 0 ]

then # still failing: unmount, demote and stop keepalived

umount -v /dev/drbd0

drbdadm secondary r0

systemctl stop keepalived

fi

fi
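chk_nfs.sh runs on both nodes. On the backup, notify_backup.sh has stopped NFS and /data is not mounted, so the check keeps starting NFS there again. The sketch below is one possible variant. It only checks NFS on the node that is currently DRBD Primary, as reported by drbdadm role r0 (output shown in the role-switch demo above), and otherwise keeps the original behavior. This is a hypothetical rewrite, not the author's script:

```bash
#!/bin/bash
# Hypothetical variant of /etc/keepalived/chk_nfs.sh: only the node that is
# currently DRBD Primary (and therefore has /data mounted) should run NFS.
RES=r0
DEV=/dev/drbd0

chk_nfs() {
    # On the Secondary there is nothing to serve, so leave NFS alone.
    if [ "$(drbdadm role "$RES" 2>/dev/null)" != "Primary" ]; then
        return 0
    fi

    systemctl is-active --quiet nfs && return 0

    # NFS is down: try to bring it back once.
    systemctl start nfs && return 0

    # Still down: release the disk and stop keepalived so the peer
    # takes over the VIP, as the original script does.
    umount -v "$DEV"
    drbdadm secondary "$RES"
    systemctl stop keepalived
    return 1
}
```

To use this as the check script, end the file with a line that calls chk_nfs, so that the function's return value becomes the script's exit status.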

[root@main keepalived]# cat notify_master.sh

#!/bin/bash

time=`date "+%F %H:%M:%S"` # timestamp for the log

log=/etc/keepalived/logs

echo -e "$time ------notify_master------\n" >> $log/notify_master.log

drbdadm primary r0 &>> $log/notify_master.log # on becoming master: promote, mount, then restart NFS

mount -v /dev/drbd0 /data &>> $log/notify_master.log

systemctl restart nfs &>> $log/notify_master.log

echo -e "\n" >> $log/notify_master.log
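notify_master.sh runs the promotion, the mount and the NFS restart unconditionally. If the old primary has not finished demoting yet, drbdadm primary will normally be refused, because two primaries are not allowed unless configured. The mount then fails too. Below is a hypothetical variant that retries the promotion for a while and only mounts once it succeeds. The retry count and delay are assumptions to tune:

```bash
#!/bin/bash
# Hypothetical variant of /etc/keepalived/notify_master.sh.
RES=r0
DEV=/dev/drbd0
MNT=/data
LOG=/etc/keepalived/logs/notify_master.log   # used by the final call (see note)

# promote_with_retry RESOURCE [ATTEMPTS] [DELAY_SECONDS]
# Returns 0 as soon as "drbdadm primary" succeeds, 1 if every attempt failed.
promote_with_retry() {
    local res="$1" attempts="${2:-10}" delay="${3:-2}" i
    for ((i = 1; i <= attempts; i++)); do
        drbdadm primary "$res" && return 0
        echo "drbdadm primary $res failed (attempt $i/$attempts)"
        # the old primary may still be unmounting/demoting; give it time
        if [ "$i" -lt "$attempts" ]; then
            sleep "$delay"
        fi
    done
    return 1
}

become_master() {
    echo "$(date '+%F %H:%M:%S') ------notify_master------"
    promote_with_retry "$RES" || { echo "giving up: $RES is not Primary"; return 1; }
    # mount only if /data is not mounted yet, then (re)start NFS
    if ! mountpoint -q "$MNT"; then
        mount -v "$DEV" "$MNT" || return 1
    fi
    systemctl restart nfs
}
```

As a notify script, it would end with a line such as become_master >> $LOG 2>&1.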

[root@main keepalived]# cat notify_backup.sh

#!/bin/bash

time=`date "+%F %H:%M:%S"`

log=/etc/keepalived/logs

echo -e "$time ------notify_backup------\n" >> $log/notify_backup.log

systemctl stop nfs &>> $log/notify_backup.log # on becoming backup: stop NFS, unmount, then demote

umount -v /dev/drbd0 &>> $log/notify_backup.log

drbdadm secondary r0 &>> $log/notify_backup.log

echo -e "\n" >> $log/notify_backup.log

[root@main keepalived]# cat notify_fault.sh

#!/bin/bash

time=`date "+%F %H:%M:%S"`

echo -e "$time ------notify_fault------\n" >> /etc/keepalived/logs/notify_fault.log

systemctl stop nfs &>> /etc/keepalived/logs/notify_fault.log

umount -v /dev/drbd0 &>> /etc/keepalived/logs/notify_fault.log

drbdadm secondary r0 &>> /etc/keepalived/logs/notify_fault.log

echo -e "\n" >> /etc/keepalived/logs/notify_fault.log
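All three notify scripts repeat the same pattern: write a timestamp, append each command's output to a log, and never check whether a step failed. A small shared helper could log each command together with its exit status. The file it would live in and the function name run_logged are hypothetical:

```bash
#!/bin/bash
# Hypothetical shared helper, e.g. sourced by the notify_*.sh scripts.
LOG_DIR=${LOG_DIR:-/etc/keepalived/logs}

# run_logged LOGNAME COMMAND [ARGS...]
# Appends "timestamp $ COMMAND", the command's output and its exit status
# to $LOG_DIR/LOGNAME.log, and returns the command's exit status.
run_logged() {
    local log="$LOG_DIR/$1.log" rc
    shift
    mkdir -p "$LOG_DIR" || return 1     # create the log directory if missing
    echo "$(date '+%F %H:%M:%S') \$ $*" >> "$log"
    "$@" >> "$log" 2>&1
    rc=$?
    echo "exit status: $rc" >> "$log"
    return "$rc"
}
```

For example, notify_backup.sh could run run_logged notify_backup umount -v /dev/drbd0 && run_logged notify_backup drbdadm secondary r0. The demotion is then only attempted after a successful unmount.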

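mkdir -p /etc/keepalived/logs # the notify scripts log here; if the directory is missing, bash does not run their redirected commands

chmod +x *.sh

VII. Testing

1. Stop NFS on the primary and check that keepalived's check script restarts it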

[root@main keepalived]# systemctl stop nfs

[root@main keepalived]# systemctl status nfs

● nfs-server.service - NFS server and services

Loaded: loaded (/usr/lib/systemd/system/nfs-server.service; disabled; vendor preset: disabled)

Drop-In: /run/systemd/generator/nfs-server.service.d

└─order-with-mounts.conf

Active: active (exited) since Sat 2024-03-30 14:15:02 CST; 5s ago

Process: 82157 ExecStopPost=/usr/sbin/exportfs -f (code=exited, status=0/SUCCESS)

Process: 82153 ExecStopPost=/usr/sbin/exportfs -au (code=exited, status=0/SUCCESS)

Process: 82151 ExecStop=/usr/sbin/rpc.nfsd 0 (code=exited, status=0/SUCCESS)

Process: 82283 ExecStartPost=/bin/sh -c if systemctl -q is-active gssproxy; then systemctl reload gssproxy ; fi (code=exited, status=0/SUCCESS)

Process: 82266 ExecStart=/usr/sbin/rpc.nfsd $RPCNFSDARGS (code=exited, status=0/SUCCESS)

Process: 82264 ExecStartPre=/usr/sbin/exportfs -r (code=exited, status=0/SUCCESS)

Main PID: 82266 (code=exited, status=0/SUCCESS)

Tasks: 0

Memory: 0B

CGroup: /system.slice/nfs-server.service

Mar 30 14:15:02 main systemd[1]: Starting NFS server and services...

Mar 30 14:15:02 main systemd[1]: Started NFS server and services.

[root@main keepalived]# df -h |grep data

/dev/drbd0 20G 33M 20G 1% /data
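The test above can be repeated as a small script: stop NFS, then poll until it is active again. The 6-second limit is an assumption based on interval 2 in vrrp_script chk_nfs, which leaves room for a few check cycles. The function name test_nfs_recovery is hypothetical:

```bash
#!/bin/bash
# Hypothetical test helper, run on the current primary: stop NFS and check
# that keepalived's chk_nfs script has started it again within WAIT seconds.
WAIT=${WAIT:-6}

test_nfs_recovery() {
    systemctl stop nfs || return 1
    local waited=0
    until systemctl is-active --quiet nfs; do
        if [ "$waited" -ge "$WAIT" ]; then
            echo "FAIL: nfs still inactive after ${WAIT}s" >&2
            return 1
        fi
        sleep 1
        waited=$((waited + 1))
    done
    echo "OK: nfs active again after ${waited}s"
}
```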

# the corresponding log entries

Mar 30 14:15:00 main systemd: Stopping NFS server and services...

Mar 30 14:15:00 main systemd: Stopped NFS server and services.

Mar 30 14:15:00 main systemd: Stopping NFSv4 ID-name mapping service...

Mar 30 14:15:00 main systemd: Stopping NFS Mount Daemon...

Mar 30 14:15:00 main rpc.mountd[65956]: Caught signal 15, un-registering and exiting.

Mar 30 14:15:00 main systemd: Stopped NFSv4 ID-name mapping service.

Mar 30 14:15:00 main kernel: nfsd: last server has exited, flushing export cache

Mar 30 14:15:00 main systemd: Stopped NFS Mount Daemon.

Mar 30 14:15:02 main systemd: Starting Preprocess NFS configuration...

Mar 30 14:15:02 main systemd: Started Preprocess NFS configuration.

Mar 30 14:15:02 main systemd: Starting NFSv4 ID-name mapping service...

Mar 30 14:15:02 main systemd: Starting NFS Mount Daemon...

Mar 30 14:15:02 main systemd: Started NFSv4 ID-name mapping service.

Mar 30 14:15:02 main rpc.mountd[82263]: Version 1.3.0 starting

Mar 30 14:15:02 main systemd: Started NFS Mount Daemon.

Mar 30 14:15:02 main systemd: Starting NFS server and services...

Mar 30 14:15:02 main kernel: NFSD: starting 90-second grace period (net ffffffffb0b16200)

Mar 30 14:15:02 main systemd: Reloading GSSAPI Proxy Daemon.

Mar 30 14:15:02 main systemd: Reloaded GSSAPI Proxy Daemon.

Mar 30 14:15:02 main systemd: Started NFS server and services.

Mar 30 14:15:02 main systemd: Starting Notify NFS peers of a restart...

Mar 30 14:15:02 main sm-notify[82289]: Version 1.3.0 starting

Mar 30 14:15:02 main sm-notify[82289]: Already notifying clients; Exiting!

Mar 30 14:15:02 main systemd: Started Notify NFS peers of a restart.

2. Stop keepalived on the primary and check that the VIP and the mounted directory fail over to the backup

[root@main keepalived]# systemctl stop keepalived.service

[root@serverc keepalived]# ip a |grep ens33 -A1 # the VIP has moved here

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:2b:95:b3 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.133/24 brd 192.168.2.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.2.100/32 scope global ens33

valid_lft forever preferred_lft forever

[root@serverc keepalived]# df -h # the mount has moved here as well

Filesystem Size Used Avail Use% Mounted on

devtmpfs 2.9G 0 2.9G 0% /dev

tmpfs 2.9G 0 2.9G 0% /dev/shm

tmpfs 2.9G 20M 2.9G 1% /run

tmpfs 2.9G 0 2.9G 0% /sys/fs/cgroup

/dev/mapper/centos-root 17G 4.1G 13G 25% /

/dev/sda1 1014M 272M 743M 27% /boot

/dev/sr0 4.4G 4.4G 0 100% /cdrom

tmpfs 585M 0 585M 0% /run/user/0

/dev/drbd0 20G 33M 20G 1% /data

# log entries

Mar 30 15:08:13 main Keepalived_vrrp[116817]: Reset promote_secondaries counter 0

Mar 30 15:08:13 main Keepalived_vrrp[116817]: (VI_1) Entering BACKUP STATE (init)

Mar 30 15:08:13 main Keepalived_vrrp[116817]: VRRP sockpool: [ifindex( 2), family(IPv4), proto(112), fd(12,13) multicast, address(224.0.0.18)]

Mar 30 15:08:13 main Keepalived_vrrp[116817]: VRRP_Script(chk_nfs) succeeded

Mar 30 15:08:13 main rpc.mountd[114695]: Caught signal 15, un-registering and exiting.

Mar 30 15:08:13 main kernel: drbd r0 serverc: Preparing remote state change 1490261391

Mar 30 15:08:13 main kernel: drbd r0 serverc: Committing remote state change 1490261391 (primary_nodes=1)

Mar 30 15:08:13 main kernel: drbd r0 serverc: peer( Secondary -> Primary ) [remote]

Mar 30 15:08:13 main kernel: drbd r0/0 drbd0: Disabling local AL-updates (

[root@serverc keepalived]# drbdadm status r0 # serverc is now Primary and main is Secondary

r0 role:Primary

disk:UpToDate

main role:Secondary

peer-disk:UpToDate
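These tests look at the servers. From a client's point of view, the export should be mounted through the VIP 192.168.2.100, not through a node's own address, so that the same mount keeps working after the VIP moves. Below is a minimal client-side sketch. The mount point /mnt/data and the function name mount_ha_export are assumptions. The hard option is already the default for Linux NFS mounts; it makes I/O wait during a switchover instead of failing:

```bash
#!/bin/bash
# Hypothetical client-side helper: mount the HA export through the VIP.
VIP=192.168.2.100
EXPORT=/data
CLIENT_MNT=${CLIENT_MNT:-/mnt/data}   # assumption: any empty directory works

mount_ha_export() {
    if mountpoint -q "$CLIENT_MNT"; then
        echo "$CLIENT_MNT already mounted"
        return 0
    fi
    mkdir -p "$CLIENT_MNT" || return 1
    # "hard": keep retrying NFS requests until whichever node now holds
    # the VIP answers, instead of returning I/O errors during a failover
    mount -t nfs -o hard "$VIP:$EXPORT" "$CLIENT_MNT"
}
```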