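Last month, we announced the availability of two high-performing Mistral AI models, Mistral 7B and Mixtral 8x7B, on Amazon Bedrock. Mistral 7B, the first foundation model from Mistral, supports English text generation tasks and has natural coding capabilities. Mixtral 8x7B is a popular, high-quality sparse Mixture-of-Experts (MoE) model that is well suited to text summarization, question answering, text classification, text completion, and code completion.

Today, Mistral Large is available on Amazon Bedrock. Mistral Large is well suited to complex tasks that require substantial reasoning capabilities, or to highly specialized tasks such as synthetic text generation or code generation.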

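Here is what you need to know about Mistral Large:

It is fluent in English, French, Spanish, German, and Italian, with a nuanced understanding of grammar and cultural context.

It has a 32K-token context window, which allows precise information recall from large documents.

It follows instructions precisely, which enables you to design your own moderation policies. The team at Mistral AI used it to set up the system-level moderation of their beta assistant demonstrator, le Chat.

Your first interaction with large language models (LLMs) revolves around prompts, so writing effective prompts is key to getting the response you want from an LLM. For more details on making inference requests to Mistral AI models, see this Amazon Bedrock guide: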

https://docs.aws.amazon.com/bedrock/latest/userguide/model-parameters-mistral.html#model-parameters-mistral-request-response
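As a minimal sketch of what such a request can look like, the helper below wraps an instruction in the `<s>[INST] ... [/INST]` template used by the sample code later in this post and serializes it together with the `max_tokens`, `top_p`, and `temperature` parameters. The function name `build_mistral_request` and its defaults are illustrative assumptions, not part of any SDK; the guide linked above remains the authoritative reference for the supported parameters.

```python
import json


def build_mistral_request(instruction: str, max_tokens: int = 512,
                          top_p: float = 0.8, temperature: float = 0.5) -> str:
    """Return a JSON request body for a Mistral model on Amazon Bedrock.

    Hypothetical helper for illustration; the defaults mirror the sample
    code later in this post.
    """
    # Place the instruction between the [INST] tags of the prompt template.
    prompt = f"<s>[INST] {instruction.strip()} [/INST]"
    return json.dumps({
        "prompt": prompt,
        "max_tokens": max_tokens,
        "top_p": top_p,
        "temperature": temperature,
    })


body = build_mistral_request("Summarize the following text in one sentence: ...")
print(body)
```

The returned string can be passed as the `body` argument of `invoke_model`, as the full example later in this post does.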

Getting started with Mistral Large

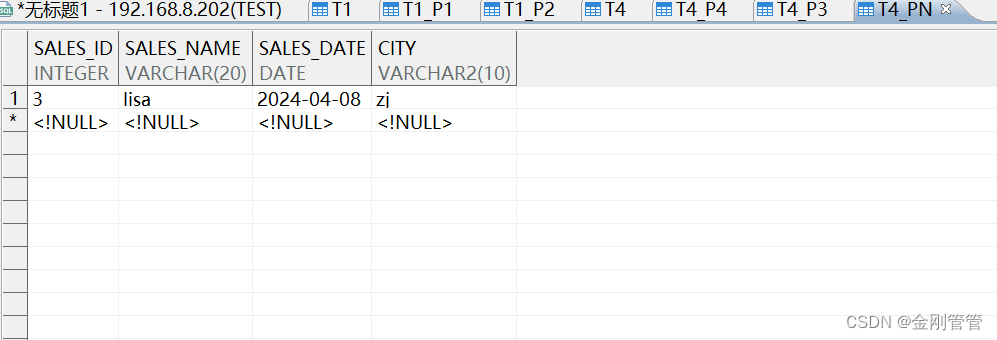

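To get started with Mistral Large on Amazon Bedrock, you first need to get access to the model. On the Amazon Bedrock console, select Model access, and then select Manage model access. Next, select Mistral Large, and then select Save changes.

Once you have access to Mistral Large, you can use the model on Amazon Bedrock. Refresh the base models table to view the updated status.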
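If you prefer to check from code which Mistral models a Region offers, a sketch along the following lines may help. It assumes the `list_foundation_models` call of the boto3 `bedrock` (control plane) client and filters the result client-side; the helper names and the second model ID in the sample list are hypothetical. Note that this lists the models offered in a Region; it does not by itself confirm that access has been granted to your account.

```python
def find_model(model_summaries, model_id):
    """Return the summary dict whose 'modelId' matches, or None."""
    for summary in model_summaries:
        if summary.get("modelId") == model_id:
            return summary
    return None


def list_mistral_models(region_name="us-east-1"):
    """Query Amazon Bedrock for model summaries (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so find_model works without boto3 installed

    bedrock = boto3.client("bedrock", region_name=region_name)
    summaries = bedrock.list_foundation_models()["modelSummaries"]
    # Keep only the models whose ID starts with the Mistral prefix.
    return [s for s in summaries if s["modelId"].startswith("mistral.")]


# Offline example with a hand-written list (not real API output).
sample = [{"modelId": "example.other-model-v1:0"},  # hypothetical ID
          {"modelId": "mistral.mistral-large-2402-v1:0"}]
print(find_model(sample, "mistral.mistral-large-2402-v1:0") is not None)
```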

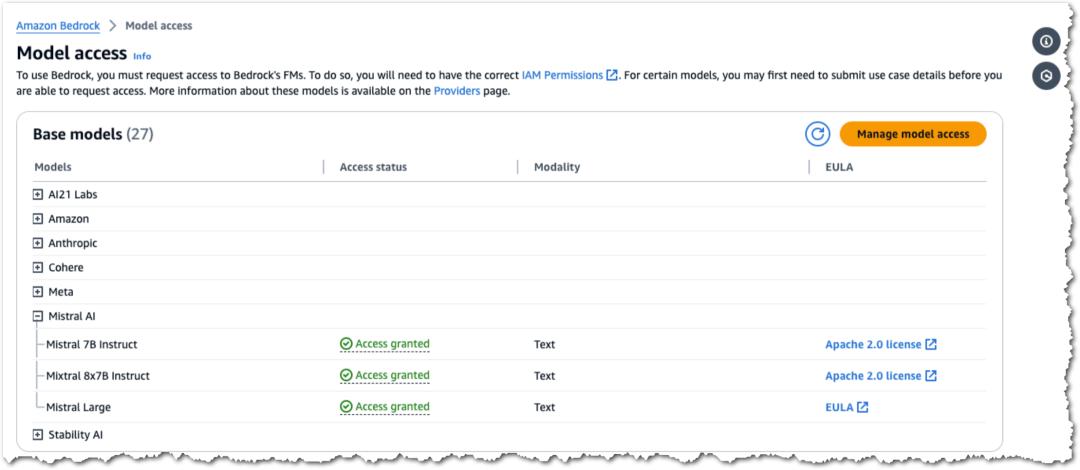

To see how Mistral Large handles complex reasoning in practice, I tried the following prompt in the Playgrounds section:

Prompt

Calculate the difference in payment dates between the two customers whose payment amounts are closest to each other in the given dataset:

'{

"transaction_id":{"0":"T1001","1":"T1002","2":"T1003","3":"T1004","4":"T1005"},

"customer_id":{"0":"C001","1":"C002","2":"C003","3":"C002","4":"C001"},

"payment_amount":{"0":125.5,"1":89.99,"2":120.0,"3":54.3,"4":210.2},

"payment_date":{"0":"2021-10-05","1":"2021-10-06","2":"2021-10-07","3":"2021-10-05","4":"2021-10-08"},

"payment_status":{"0":"Paid","1":"Unpaid","2":"Paid","3":"Paid","4":"Pending"}

}'

Output

To find the difference in payment dates between the two customers whose payment amounts are closest to each other, we first need to identify the two closest payment amounts and then find their respective payment dates.

The payment amounts are: 125.5, 89.99, 120.0, 54.3, 210.2

The two closest payment amounts are 125.5 and 120.0. The payment date for the payment amount 125.5 is '2021-10-05' and for the payment amount 120.0 is '2021-10-07'.

The difference in payment dates can be calculated as follows:

Date difference = |'2021-10-07' - '2021-10-05'| = 2 days

So, the difference in payment dates between the two customers whose payment amounts are closest to each other is 2 days.
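The model's answer can be checked independently. The following sketch, which uses only the Python standard library and the values copied from the prompt, finds the pair of payments with the smallest difference in amount and computes the gap between their dates. It is a verification aid added here, not part of the playground session.

```python
from datetime import date
from itertools import combinations

# Payment amounts and dates copied from the prompt above.
amounts = [125.5, 89.99, 120.0, 54.3, 210.2]
dates = ["2021-10-05", "2021-10-06", "2021-10-07", "2021-10-05", "2021-10-08"]

# Find the pair of row indices whose amounts differ the least.
i, j = min(combinations(range(len(amounts)), 2),
           key=lambda pair: abs(amounts[pair[0]] - amounts[pair[1]]))

# Difference between the two payment dates, in days.
day_diff = abs((date.fromisoformat(dates[i]) - date.fromisoformat(dates[j])).days)

print(amounts[i], amounts[j], day_diff)  # 125.5 120.0 2
```

The result matches the model's reasoning: the closest amounts are 125.5 and 120.0, and their payment dates are 2 days apart.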

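Earlier in this post, I mentioned that Mistral Large is fluent in several languages, with a nuanced understanding of grammar and cultural context. I tested the model's understanding of French:

Prompt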

Lequel est le plus lourd une livre de fer ou un kilogramme de plume

Output

Un kilogramme de plumes est plus lourd qu'une livre de fer.

Pour comprendre cela, il est important de se rappeler que :

1. Une livre (lb) est une unité de mesure de masse principalement utilisée aux États-Unis et dans d'autres systèmes de mesure impériaux, tandis qu'un kilogramme (kg) est l'unité de base de masse dans le système international d'unités (SI).

2. 1 kilogramme est approximativement égal à 2,2 livres.

Donc, un kilogramme de plumes est plus lourd qu'une livre de fer, car il correspond à environ 2,2 livres de plumes.
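The conversion the model relies on is easy to confirm. This small sketch assumes the standard definition of the avoirdupois pound (exactly 0.45359237 kg), which is the unit the model's answer refers to as "lb".

```python
# 1 pound (avoirdupois) is defined as exactly 0.45359237 kg.
KG_PER_POUND = 0.45359237

# Number of pounds in one kilogram.
pounds_per_kg = 1 / KG_PER_POUND
print(round(pounds_per_kg, 2))  # 2.2

# One kilogram of feathers versus one pound of iron, both in kilograms.
print(1.0 > 1 * KG_PER_POUND)  # True
```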

Interacting with Mistral Large programmatically

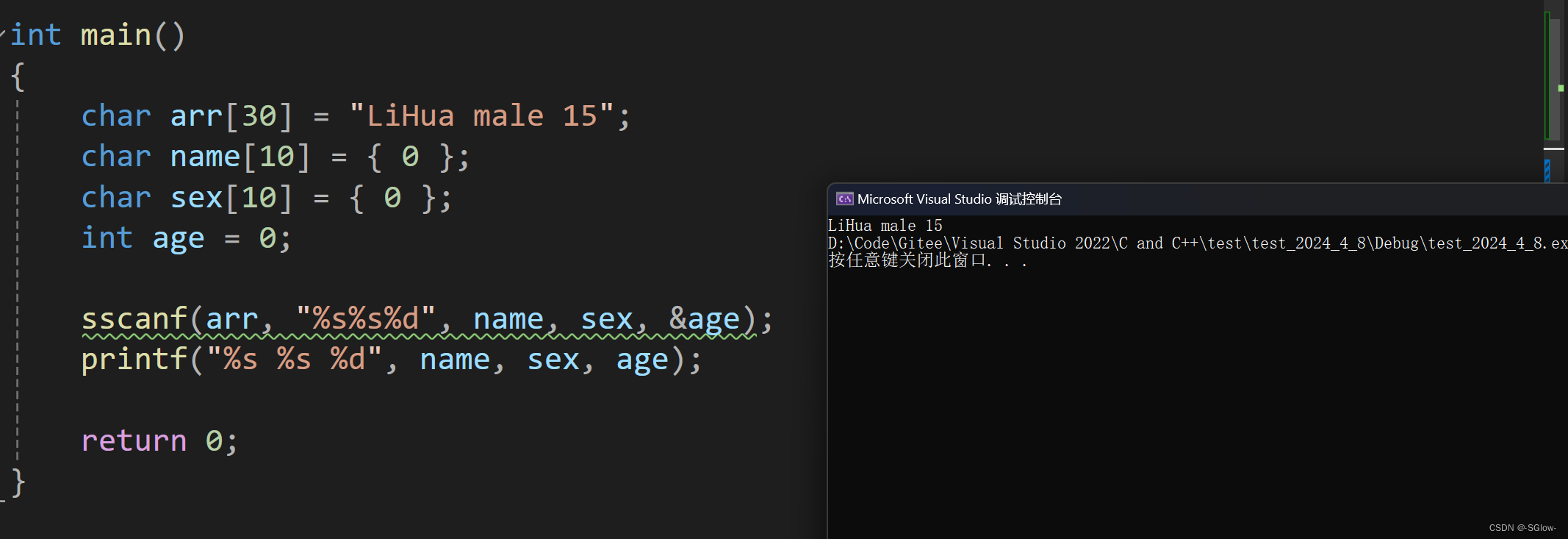

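You can also use the AWS Command Line Interface (AWS CLI) and the AWS SDKs to make various calls through the Amazon Bedrock APIs. The following is sample Python code that uses the AWS SDK to interact with the Amazon Bedrock Runtime API.

If you specify in the prompt that “You will only respond with a JSON object with the key X, Y, and Z.”, you can use JSON-formatted output in simple downstream tasks: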

Python

import boto3

import json

bedrock = boto3.client(service_name="bedrock-runtime", region_name='us-east-1')

prompt = """

<s>[INST]You are a summarization system that can provide summaries with associated confidence

scores. In clear and concise language, provide three short summaries of the following essay,

along with their confidence scores. You will only respond with a JSON object with the key Summary

and Confidence. Do not provide explanations.[/INST]

# Essay:

The generative artificial intelligence (AI) revolution is in full swing, and customers of all sizes and across industries are taking advantage of this transformative technology to reshape their businesses. From reimagining workflows to make them more intuitive and easier to enhancing decision-making processes through rapid information synthesis, generative AI promises to redefine how we interact with machines. It’s been amazing to see the number of companies launching innovative generative AI applications on AWS using Amazon Bedrock. Siemens is integrating Amazon Bedrock into its low-code development platform Mendix to allow thousands of companies across multiple industries to create and upgrade applications with the power of generative AI. Accenture and Anthropic are collaborating with AWS to help organizations, especially those in highly regulated industries like healthcare, public sector, banking, and insurance, responsibly adopt and scale generative AI technology with Amazon Bedrock. This collaboration will help organizations like the District of Columbia Department of Health speed innovation, improve customer service, and improve productivity, while keeping data private and secure. Amazon Pharmacy is using generative AI to fill prescriptions with speed and accuracy, making customer service faster and more helpful, and making sure that the right quantities of medications are stocked for customers.

To power so many diverse applications, we recognized the need for model diversity and choice for generative AI early on. We know that different models excel in different areas, each with unique strengths tailored to specific use cases, leading us to provide customers with access to multiple state-of-the-art large language models (LLMs) and foundation models (FMs) through a unified service: Amazon Bedrock. By facilitating access to top models from Amazon, Anthropic, AI21 Labs, Cohere, Meta, Mistral AI, and Stability AI, we empower customers to experiment, evaluate, and ultimately select the model that delivers optimal performance for their needs.

Announcing Mistral Large on Amazon Bedrock

Today, we are excited to announce the next step on this journey with an expanded collaboration with Mistral AI. A French startup, Mistral AI has quickly established itself as a pioneering force in the generative AI landscape, known for its focus on portability, transparency, and its cost-effective design requiring fewer computational resources to run. We recently announced the availability of Mistral 7B and Mixtral 8x7B models on Amazon Bedrock, with weights that customers can inspect and modify. Today, Mistral AI is bringing its latest and most capable model, Mistral Large, to Amazon Bedrock, and is committed to making future models accessible to AWS customers. Mistral AI will also use AWS AI-optimized AWS Trainium and AWS Inferentia to build and deploy its future foundation models on Amazon Bedrock, benefitting from the price, performance, scale, and security of AWS. Along with this announcement, starting today, customers can use Amazon Bedrock in the AWS Europe (Paris) Region. At launch, customers will have access to some of the latest models from Amazon, Anthropic, Cohere, and Mistral AI, expanding their options to support various use cases from text understanding to complex reasoning.

Mistral Large boasts exceptional language understanding and generation capabilities, which is ideal for complex tasks that require reasoning capabilities or ones that are highly specialized, such as synthetic text generation, code generation, Retrieval Augmented Generation (RAG), or agents. For example, customers can build AI agents capable of engaging in articulate conversations, generating nuanced content, and tackling complex reasoning tasks. The model’s strengths also extend to coding, with proficiency in code generation, review, and comments across mainstream coding languages. And Mistral Large’s exceptional multilingual performance, spanning French, German, Spanish, and Italian, in addition to English, presents a compelling opportunity for customers. By offering a model with robust multilingual support, AWS can better serve customers with diverse language needs, fostering global accessibility and inclusivity for generative AI solutions.

By integrating Mistral Large into Amazon Bedrock, we can offer customers an even broader range of top-performing LLMs to choose from. No single model is optimized for every use case, and to unlock the value of generative AI, customers need access to a variety of models to discover what works best for their business needs. We are committed to continuously introducing the best models, providing customers with access to the latest and most innovative generative AI capabilities.

“We are excited to announce our collaboration with AWS to accelerate the adoption of our frontier AI technology with organizations around the world. Our mission is to make frontier AI ubiquitous, and to achieve this mission, we want to collaborate with the world’s leading cloud provider to distribute our top-tier models. We have a long and deep relationship with AWS and through strengthening this relationship today, we will be able to provide tailor-made AI to builders around the world.”

– Arthur Mensch, CEO at Mistral AI.

Customers appreciate choice

Since we first announced Amazon Bedrock, we have been innovating at a rapid clip, adding more powerful features like agents and guardrails. And we’ve said all along that more exciting innovations, including new models, will keep coming. With more model choice, customers tell us they can achieve remarkable results:

“The ease of accessing different models from one API is one of the strengths of Bedrock. The model choices available have been exciting. As new models become available, our AI team is able to quickly and easily evaluate models to know if they fit our needs. The security and privacy that Bedrock provides makes it a great choice to use for our AI needs.”

– Jamie Caramanica, SVP, Engineering at CS Disco.

“Our top priority today is to help organizations use generative AI to support employees and enhance bots through a range of applications, such as stronger topic, sentiment, and tone detection from customer conversations, language translation, content creation and variation, knowledge optimization, answer highlighting, and auto summarization. To make it easier for them to tap into the potential of generative AI, we’re enabling our users with access to a variety of large language models, such as Genesys-developed models and multiple third-party foundational models through Amazon Bedrock, including Anthropic’s Claude, AI21 Labs’s Jurassic-2, and Amazon Titan. Together with AWS, we’re offering customers exponential power to create differentiated experiences built around the needs of their business, while helping them prepare for the future.”

– Glenn Nethercutt, CTO at Genesys.

As the generative AI revolution continues to unfold, AWS is poised to shape its future, empowering customers across industries to drive innovation, streamline processes, and redefine how we interact with machines. Together with outstanding partners like Mistral AI, and with Amazon Bedrock as the foundation, our customers can build more innovative generative AI applications.

Democratizing access to LLMs and FMs

Amazon Bedrock is democratizing access to cutting-edge LLMs and FMs and AWS is the only cloud provider to offer the most popular and advanced FMs to customers. The collaboration with Mistral AI represents a significant milestone in this journey, further expanding Amazon Bedrock’s diverse model offerings and reinforcing our commitment to empowering customers with unparalleled choice through Amazon Bedrock. By recognizing that no single model can optimally serve every use case, AWS has paved the way for customers to unlock the full potential of generative AI. Through Amazon Bedrock, organizations can experiment with and take advantage of the unique strengths of multiple top-performing models, tailoring their solutions to specific needs, industry domains, and workloads. This unprecedented choice, combined with the robust security, privacy, and scalability of AWS, enables customers to harness the power of generative AI responsibly and with confidence, no matter their industry or regulatory constraints.

"""

body = json.dumps({

"prompt": prompt,

"max_tokens": 512,

"top_p": 0.8,

"temperature": 0.5,

})

modelId = "mistral.mistral-large-2402-v1:0"

accept = "application/json"

contentType = "application/json"

response = bedrock.invoke_model(

body=body,

modelId=modelId,

accept=accept,

contentType=contentType

)

print(json.loads(response.get('body').read()))

You get JSON-formatted output like the following:

JSON

{

"Summaries": [

{

"Summary": "The author discusses their early experiences with programming and writing,

starting with writing short stories and programming on an IBM 1401 in 9th grade.

They then moved on to working with microcomputers, building their own from a Heathkit,

and eventually convincing their father to buy a TRS-80 in 1980. They wrote simple games,

a program to predict rocket flight trajectories, and a word processor.",

"Confidence": 0.9

},

{

"Summary": "The author began college as a philosophy major, but found it to be unfulfilling

and switched to AI. They were inspired by a novel and a PBS documentary, as well as the

potential for AI to create intelligent machines like those in the novel. Despite this

excitement, they eventually realized that the traditional approach to AI was flawed and

shifted their focus to Lisp.",

"Confidence": 0.85

},

{

"Summary": "The author briefly worked at Interleaf, where they found that their Lisp skills

were highly valued. They eventually left Interleaf to return to RISD, but continued to work

as a freelance Lisp hacker. While at RISD, they started painting still lives in their bedroom

at night, which led to them applying to art schools and eventually attending the Accademia

di Belli Arti in Florence.",

"Confidence": 0.9

}

]

}

To learn more about prompting capabilities in Mistral AI models, visit the Mistral AI documentation:

https://docs.mistral.ai/guides/prompting-capabilities/
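To use JSON output like this in a downstream task, the generated text first has to be pulled out of the response body and parsed. The sketch below assumes the response body for Mistral models has the shape `{"outputs": [{"text": ..., "stop_reason": ...}]}`; the helper name `extract_json` and the stand-in response are hypothetical, and the fallback to `None` reflects that a model is not guaranteed to return valid JSON.

```python
import json


def extract_json(response_body: dict):
    """Parse the first completion in a Mistral response body as JSON.

    Assumes the body looks like {"outputs": [{"text": "..."}]}.
    Returns None if the model's text is not valid JSON.
    """
    text = response_body["outputs"][0]["text"].strip()
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return None


# Hand-written stand-in for json.loads(response.get('body').read()).
fake_body = {"outputs": [{"text": ' {"Summary": "Example.", "Confidence": 0.9} ',
                          "stop_reason": "stop"}]}
result = extract_json(fake_body)
print(result["Confidence"])
```

Returning `None` instead of raising keeps the calling code simple: it can retry the request or fall back to treating the output as plain text.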

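Now available

Mistral Large, along with the other Mistral AI models (Mistral 7B and Mixtral 8x7B), is now available on Amazon Bedrock in the US East (N. Virginia), US West (Oregon), and Europe (Paris) Regions; check the full Region list for future updates: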

https://docs.aws.amazon.com/bedrock/latest/userguide/models-regions.html

Share and learn with our generative AI community:

https://community.aws/generative-ai

You can also try Mistral Large in the Amazon Bedrock console today, and send feedback to AWS re:Post for Amazon Bedrock or through your usual AWS Support contacts.

Read about our collaboration with Mistral AI:

https://aws.amazon.com/blogs/machine-learning/aws-and-mistral-ai-commit-to-democratizing-generative-ai-with-a-strengthened-collaboration/


About the author

Veliswa Boya

Senior Developer Advocate at AWS. She has held many roles in tech, from developer to analyst, and from architect to cloud engineer.
