FaceForensics++ Dataset Download (A Detailed Tutorial)

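Many people working on deepfake research need to download a series of datasets and preprocess them, whether to test their models or to reproduce earlier work. FaceForensics++ is one such dataset: it consists of thousands of videos manipulated with different face-forgery methods. The core benchmark covers four manipulation methods, Deepfakes (DF), Face2Face (F2F), FaceSwap (FS) and NeuralTextures (NT); the download script below can additionally fetch FaceShifter and the DeepFake Detection (DFD) dataset.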

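The dataset is hosted on servers abroad, so all of the following steps need a proxy. (If you can't use a proxy, leave a comment and I'll send it to you.)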

Get the download script and save it locally

The official FF++ repository is ondyari/FaceForensics (https://github.com/ondyari/FaceForensics). Following the instructions there, fill in the Google Form and the download script will be sent to you by email. I won't go into detail here: the script is attached at the end of this article, so you can skip the form.

Downloading the dataset from a CMD window

Open a CMD window:

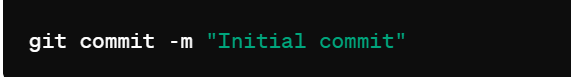

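Paste the script from the end of this article into a file with the `.py` extension. The commands below assume the file is named `FaceForensics.py`. The script also imports the third-party `tqdm` package, so install it first if you don't have it (for example `python -m pip install tqdm`, using the same interpreter name you will run the script with).

Then change to the folder that contains this `.py` file (`your_dir` stands for that folder's path; if it is on a different drive, use `cd /d your_dir`):

```
cd your_dir
```

The CMD window now shows that you are in the target folder. Next, enter the download command, which has this general form:

```
python FaceForensics.py <output path> -d <dataset type, e.g., Face2Face, original or all> -c <compression quality, e.g., c23 or raw> -t <file type, e.g., videos, masks or models>
```

The leading `python` is the Python interpreter on your computer, and it is not necessarily called `python`: check what the interpreter executable in your Python installation is named. For example, mine is `python3.11.exe`, so I type `python3.11` instead.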
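Since the script needs Python 3 (it uses `urllib.request`) plus the third-party `tqdm` package, a quick check can save a failed first run. The sketch below is not part of the official script; save it under any name (for example a hypothetical `check_env.py`) and run it with the same interpreter name you plan to use, e.g. `python3.11 check_env.py`.

```python
# Pre-flight check (a sketch, not part of the FaceForensics script):
# reports which interpreter is running and whether tqdm, the only
# third-party module the download script imports, is installed.
import importlib.util
import sys


def check_prerequisites():
    """Return a dict describing the current interpreter and tqdm availability."""
    return {
        "executable": sys.executable,
        "version": "{}.{}.{}".format(*sys.version_info[:3]),
        "python3": sys.version_info[0] == 3,
        "tqdm_installed": importlib.util.find_spec("tqdm") is not None,
    }


if __name__ == "__main__":
    info = check_prerequisites()
    for key, value in info.items():
        print(f"{key}: {value}")
    if not info["tqdm_installed"]:
        print("tqdm is missing; install it with: <this interpreter> -m pip install tqdm")
```

The printed `executable` path tells you which `python.exe` the name you typed actually resolves to.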

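The arguments mean:

- `<output path>`: where the data will be downloaded, i.e. where you want to keep the dataset (make sure there is enough free space).
- `-d`: which part to download. Use `-d all` to download the whole FaceForensics++ release, or name a single subset such as `Face2Face` or `original`.
- `-c`: compression level, one of `raw`, `c23` or `c40`. Use `-c raw` for the raw (losslessly compressed) data; I downloaded the `c23` version. If you omit `-c`, the script defaults to `raw`.
- `-t`: file type; `-t videos` downloads the videos.
- `-n` (optional): download only the first N videos of each subset, which is handy for a quick test run.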
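With `-t videos`, the script saves each subset under `<output path>/<subset path>/<compression>/videos` (see how `dataset_output_path` is built in `main()`). The sketch below reproduces that rule so you can see in advance where the files will land. The `DATASETS` paths are copied from the script; `expected_video_dirs` is a hypothetical helper, not part of it.

```python
from os.path import join

# Subset name -> server/local path, copied from DATASETS in the download
# script (the two YouTube zip entries are omitted; "-d all" skips them too).
DATASETS = {
    'original': 'original_sequences/youtube',
    'DeepFakeDetection_original': 'original_sequences/actors',
    'Deepfakes': 'manipulated_sequences/Deepfakes',
    'DeepFakeDetection': 'manipulated_sequences/DeepFakeDetection',
    'Face2Face': 'manipulated_sequences/Face2Face',
    'FaceShifter': 'manipulated_sequences/FaceShifter',
    'FaceSwap': 'manipulated_sequences/FaceSwap',
    'NeuralTextures': 'manipulated_sequences/NeuralTextures',
}


def expected_video_dirs(output_path, dataset='all', compression='c23'):
    """Hypothetical helper: folders the script fills when run with -t videos."""
    names = list(DATASETS) if dataset == 'all' else [dataset]
    # Mirrors: join(output_path, dataset_path, c_compression, c_type)
    return {name: join(output_path, DATASETS[name], compression, 'videos')
            for name in names}


if __name__ == '__main__':
    for name, folder in expected_video_dirs('E:/FaceForensics++').items():
        print(f'{name:28s} -> {folder}')
```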

For example, to download all FaceForensics++ videos, compressed at c23, into a FaceForensics++ folder on drive E, the command could be:

```
python3.11 FaceForensics.py E:/FaceForensics++ -d all -c c23 -t videos
```

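Note: if you get a "502 Bad Gateway" error while the script is running, the default server is probably not reachable for you and you need to switch to another one. The script offers three servers, EU, EU2 and CA (Europe 1, Europe 2 and Canada), and uses EU by default. The relevant part of the script is: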

```python
    # Excerpt from parse_args() in the download script
    parser.add_argument('--server', type=str, default='EU',
                        help='Server to download the data from. If you '
                             'encounter a slow download speed, consider '
                             'changing the server.',
                        choices=SERVERS
                        )
    args = parser.parse_args()

    # URLs
    server = args.server
    if server == 'EU':
        server_url = 'http://canis.vc.in.tum.de:8100/'
    elif server == 'EU2':
        server_url = 'http://kaldir.vc.in.tum.de/faceforensics/'
    elif server == 'CA':
        server_url = 'http://falas.cmpt.sfu.ca:8100/'
    else:
        raise Exception('Wrong server name. Choices: {}'.format(str(SERVERS)))
    args.tos_url = server_url + 'webpage/FaceForensics_TOS.pdf'
    args.base_url = server_url + 'v3/'
    args.deepfakes_model_url = server_url + 'v3/manipulated_sequences/' + \
                               'Deepfakes/models/'

    return args
```

So you can specify the server on the command line. After the error, I switched to EU2 and the download went through. The full command is:

```
python3.11 FaceForensics.py E:/FaceForensics++ --server EU2 -d all -c c23 -t videos
```

PS: `--server EU2` (two ASCII hyphens) selects the EU2 server.
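If you are not sure which server works from your network, a sketch like the one below can check before you start a long download. It is not part of the official script, the helper names are hypothetical, and it assumes that a server which serves the terms-of-use PDF (the script's `args.tos_url`) can also serve the data, which is a convenient but unverified shortcut. `urllib` raises `HTTPError`, a subclass of `OSError`, for responses such as 502 Bad Gateway, so those count as failures.

```python
import urllib.request

# Base URLs copied from parse_args() in the download script.
SERVER_URLS = {
    "EU": "http://canis.vc.in.tum.de:8100/",
    "EU2": "http://kaldir.vc.in.tum.de/faceforensics/",
    "CA": "http://falas.cmpt.sfu.ca:8100/",
}


def server_responds(base_url, timeout=10):
    """Return True if the terms-of-use PDF on this server answers with HTTP 2xx."""
    url = base_url + "webpage/FaceForensics_TOS.pdf"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:  # URLError/HTTPError (e.g. 502), timeouts, refused connections
        return False


def first_working_server(probe=server_responds, servers=SERVER_URLS):
    """Return the first --server name whose base URL passes `probe`, or None."""
    for name, url in servers.items():
        if probe(url):
            return name
    return None


if __name__ == "__main__":
    print("Usable --server value:", first_working_server())
```

The `probe` parameter only exists so the selection logic can be tried without touching the network.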

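The script first prints a link to the FaceForensics terms of use and waits for you to press Enter, which confirms that you agree to them. The download then starts, with a progress bar showing its status.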

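Because of the proxy, the download may stop unexpectedly. If the program is interrupted, don't worry: make sure your network and proxy are connected correctly and run the same command again. The script skips files that have already been downloaded and continues with the rest.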
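Since rerunning the command is safe, the restarts can be automated. This is a minimal sketch, not part of the official script: it reruns the command until it exits with code 0, and the retry count, pause length and command list are illustrative assumptions. Because the script waits for Enter to confirm that you accept the FaceForensics terms of use, the sketch pipes a newline to it on every run, so only use it after you have read and accepted those terms.

```python
import subprocess
import sys
import time


def run_until_done(cmd, max_attempts=20, pause_seconds=30):
    """Run `cmd` repeatedly until it exits with 0; return the successful attempt or None."""
    for attempt in range(1, max_attempts + 1):
        # The newline answers the script's input('') terms-of-use prompt.
        result = subprocess.run(cmd, input="\n", text=True)
        if result.returncode == 0:
            return attempt
        print(f"Attempt {attempt} failed with exit code {result.returncode}.")
        if attempt < max_attempts:
            time.sleep(pause_seconds)  # give the network/proxy time to recover
    return None


if __name__ == "__main__":
    # Same arguments as the command above; sys.executable is the interpreter
    # running this sketch, and FaceForensics.py must be in the current folder.
    command = [sys.executable, "FaceForensics.py", "E:/FaceForensics++",
               "--server", "EU2", "-d", "all", "-c", "c23", "-t", "videos"]
    run_until_done(command)
```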

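I recommend downloading over a fast connection with a stable proxy; otherwise you will have to re-enter the command after every interruption, which gets tedious.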

Script

Appendix: the FaceForensics++ download script provided by email (copy it into a .py file yourself):

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
""" Downloads FaceForensics++ and Deep Fake Detection public data release
Example usage:
    see -h or https://github.com/ondyari/FaceForensics
"""
import argparse
import os
import urllib
import urllib.request
import tempfile
import time
import sys
import json
import random
from tqdm import tqdm
from os.path import join


# URLs and filenames
FILELIST_URL = 'misc/filelist.json'
DEEPFEAKES_DETECTION_URL = 'misc/deepfake_detection_filenames.json'
DEEPFAKES_MODEL_NAMES = ['decoder_A.h5', 'decoder_B.h5', 'encoder.h5',]

# Parameters
DATASETS = {
    'original_youtube_videos': 'misc/downloaded_youtube_videos.zip',
    'original_youtube_videos_info': 'misc/downloaded_youtube_videos_info.zip',
    'original': 'original_sequences/youtube',
    'DeepFakeDetection_original': 'original_sequences/actors',
    'Deepfakes': 'manipulated_sequences/Deepfakes',
    'DeepFakeDetection': 'manipulated_sequences/DeepFakeDetection',
    'Face2Face': 'manipulated_sequences/Face2Face',
    'FaceShifter': 'manipulated_sequences/FaceShifter',
    'FaceSwap': 'manipulated_sequences/FaceSwap',
    'NeuralTextures': 'manipulated_sequences/NeuralTextures'
    }
ALL_DATASETS = ['original', 'DeepFakeDetection_original', 'Deepfakes',
                'DeepFakeDetection', 'Face2Face', 'FaceShifter', 'FaceSwap',
                'NeuralTextures']
COMPRESSION = ['raw', 'c23', 'c40']
TYPE = ['videos', 'masks', 'models']
SERVERS = ['EU', 'EU2', 'CA']


def parse_args():
    parser = argparse.ArgumentParser(
        description='Downloads FaceForensics v2 public data release.',
        formatter_class=argparse.ArgumentDefaultsHelpFormatter
    )
    parser.add_argument('output_path', type=str, help='Output directory.')
    parser.add_argument('-d', '--dataset', type=str, default='all',
                        help='Which dataset to download, either pristine or '
                             'manipulated data or the downloaded youtube '
                             'videos.',
                        choices=list(DATASETS.keys()) + ['all']
                        )
    parser.add_argument('-c', '--compression', type=str, default='raw',
                        help='Which compression degree. All videos '
                             'have been generated with h264 with a varying '
                             'codec. Raw (c0) videos are lossless compressed.',
                        choices=COMPRESSION
                        )
    parser.add_argument('-t', '--type', type=str, default='videos',
                        help='Which file type, i.e. videos, masks, for our '
                             'manipulation methods, models, for Deepfakes.',
                        choices=TYPE
                        )
    parser.add_argument('-n', '--num_videos', type=int, default=None,
                        help='Select a number of videos number to '
                             "download if you don't want to download the full"
                             ' dataset.')
    parser.add_argument('--server', type=str, default='EU',
                        help='Server to download the data from. If you '
                             'encounter a slow download speed, consider '
                             'changing the server.',
                        choices=SERVERS
                        )
    args = parser.parse_args()

    # URLs
    server = args.server
    if server == 'EU':
        server_url = 'http://canis.vc.in.tum.de:8100/'
    elif server == 'EU2':
        server_url = 'http://kaldir.vc.in.tum.de/faceforensics/'
    elif server == 'CA':
        server_url = 'http://falas.cmpt.sfu.ca:8100/'
    else:
        raise Exception('Wrong server name. Choices: {}'.format(str(SERVERS)))
    args.tos_url = server_url + 'webpage/FaceForensics_TOS.pdf'
    args.base_url = server_url + 'v3/'
    args.deepfakes_model_url = server_url + 'v3/manipulated_sequences/' + \
                               'Deepfakes/models/'

    return args


def download_files(filenames, base_url, output_path, report_progress=True):
    os.makedirs(output_path, exist_ok=True)
    if report_progress:
        filenames = tqdm(filenames)
    for filename in filenames:
        download_file(base_url + filename, join(output_path, filename))


def reporthook(count, block_size, total_size):
    global start_time
    if count == 0:
        start_time = time.time()
        return
    duration = time.time() - start_time
    progress_size = int(count * block_size)
    speed = int(progress_size / (1024 * duration))
    percent = int(count * block_size * 100 / total_size)
    sys.stdout.write("\rProgress: %d%%, %d MB, %d KB/s, %d seconds passed" %
                     (percent, progress_size / (1024 * 1024), speed, duration))
    sys.stdout.flush()


def download_file(url, out_file, report_progress=False):
    out_dir = os.path.dirname(out_file)
    if not os.path.isfile(out_file):
        # Download into a temporary file first and rename it only when the
        # download has finished, so interrupted files are never mistaken
        # for complete ones.
        fh, out_file_tmp = tempfile.mkstemp(dir=out_dir)
        f = os.fdopen(fh, 'w')
        f.close()
        if report_progress:
            urllib.request.urlretrieve(url, out_file_tmp,
                                       reporthook=reporthook)
        else:
            urllib.request.urlretrieve(url, out_file_tmp)
        os.rename(out_file_tmp, out_file)
    else:
        tqdm.write('WARNING: skipping download of existing file ' + out_file)


def main(args):
    # TOS
    print('By pressing any key to continue you confirm that you have agreed '\
          'to the FaceForensics terms of use as described at:')
    print(args.tos_url)
    print('***')
    print('Press any key to continue, or CTRL-C to exit.')
    _ = input('')

    # Extract arguments
    c_datasets = [args.dataset] if args.dataset != 'all' else ALL_DATASETS
    c_type = args.type
    c_compression = args.compression
    num_videos = args.num_videos
    output_path = args.output_path
    os.makedirs(output_path, exist_ok=True)

    # Check for special dataset cases
    for dataset in c_datasets:
        dataset_path = DATASETS[dataset]
        # Special cases
        if 'original_youtube_videos' in dataset:
            # Here we download the original youtube videos zip file
            print('Downloading original youtube videos.')
            if not 'info' in dataset_path:
                print('Please be patient, this may take a while (~40gb)')
                suffix = ''
            else:
                suffix = 'info'
            download_file(args.base_url + '/' + dataset_path,
                          out_file=join(output_path,
                                        'downloaded_videos{}.zip'.format(
                                            suffix)),
                          report_progress=True)
            return

        # Else: regular datasets
        print('Downloading {} of dataset "{}"'.format(
            c_type, dataset_path
        ))

        # Get filelists and video lengths list from server
        if 'DeepFakeDetection' in dataset_path or 'actors' in dataset_path:
            filepaths = json.loads(urllib.request.urlopen(args.base_url + '/' +
                DEEPFEAKES_DETECTION_URL).read().decode("utf-8"))
            if 'actors' in dataset_path:
                filelist = filepaths['actors']
            else:
                filelist = filepaths['DeepFakesDetection']
        elif 'original' in dataset_path:
            # Load filelist from server
            file_pairs = json.loads(urllib.request.urlopen(args.base_url + '/' +
                FILELIST_URL).read().decode("utf-8"))
            filelist = []
            for pair in file_pairs:
                filelist += pair
        else:
            # Load filelist from server
            file_pairs = json.loads(urllib.request.urlopen(args.base_url + '/' +
                FILELIST_URL).read().decode("utf-8"))
            # Get filelist
            filelist = []
            for pair in file_pairs:
                filelist.append('_'.join(pair))
                if c_type != 'models':
                    filelist.append('_'.join(pair[::-1]))
        # Maybe limit number of videos for download
        if num_videos is not None and num_videos > 0:
            print('Downloading the first {} videos'.format(num_videos))
            filelist = filelist[:num_videos]

        # Server and local paths
        dataset_videos_url = args.base_url + '{}/{}/{}/'.format(
            dataset_path, c_compression, c_type)
        dataset_mask_url = args.base_url + '{}/{}/videos/'.format(
            dataset_path, 'masks')

        if c_type == 'videos':
            dataset_output_path = join(output_path, dataset_path, c_compression,
                                       c_type)
            print('Output path: {}'.format(dataset_output_path))
            filelist = [filename + '.mp4' for filename in filelist]
            download_files(filelist, dataset_videos_url, dataset_output_path)
        elif c_type == 'masks':
            dataset_output_path = join(output_path, dataset_path, c_type,
                                       'videos')
            print('Output path: {}'.format(dataset_output_path))
            if 'original' in dataset:
                if args.dataset != 'all':
                    print('Only videos available for original data. Aborting.')
                    return
                else:
                    print('Only videos available for original data. '
                          'Skipping original.\n')
                    continue
            if 'FaceShifter' in dataset:
                # With -d all, skip FaceShifter (it has no masks) instead of
                # aborting, so FaceSwap and NeuralTextures masks still download.
                if args.dataset != 'all':
                    print('Masks not available for FaceShifter. Aborting.')
                    return
                else:
                    print('Masks not available for FaceShifter. '
                          'Skipping FaceShifter.\n')
                    continue
            filelist = [filename + '.mp4' for filename in filelist]
            download_files(filelist, dataset_mask_url, dataset_output_path)

        # Else: models for deepfakes
        else:
            if dataset != 'Deepfakes' and c_type == 'models':
                print('Models only available for Deepfakes. Aborting')
                return
            dataset_output_path = join(output_path, dataset_path, c_type)
            print('Output path: {}'.format(dataset_output_path))

            # Get Deepfakes models
            for folder in tqdm(filelist):
                folder_filelist = DEEPFAKES_MODEL_NAMES

                # Folder paths
                folder_base_url = args.deepfakes_model_url + folder + '/'
                folder_dataset_output_path = join(dataset_output_path,
                                                  folder)
                download_files(folder_filelist, folder_base_url,
                               folder_dataset_output_path,
                               report_progress=False)  # already done


if __name__ == "__main__":
    args = parse_args()
    main(args)
```
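In `download_file()`, each video is first written to a temporary file created by `tempfile.mkstemp()` in the target folder and only renamed to its final `.mp4` name after `urlretrieve` finishes; files that already exist are skipped. An interrupted run therefore leaves behind partial files whose names start with `tmp` (the `mkstemp` default prefix), which later runs neither resume nor delete. The sketch below, which is not part of the script, counts finished `.mp4` files per folder and lists such leftovers; it assumes any file starting with `tmp` in the output tree is one of them, so only delete them while no download is running.

```python
import os
import sys
from collections import Counter


def inventory(root):
    """Count finished .mp4 files per folder and collect leftover temp files."""
    mp4_counts = Counter()
    leftovers = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(".mp4"):
                mp4_counts[os.path.relpath(dirpath, root)] += 1
            elif name.startswith("tmp"):
                # Assumed to be an unfinished download from an interrupted run.
                leftovers.append(os.path.join(dirpath, name))
    return mp4_counts, leftovers


if __name__ == "__main__":
    counts, stale = inventory(sys.argv[1] if len(sys.argv) > 1 else ".")
    for folder, n in sorted(counts.items()):
        print(f"{n:6d}  {folder}")
    print(f"{len(stale)} leftover temp file(s)")
    for path in stale:
        print("  " + path)
```

For example, `python3.11 inventory.py E:/FaceForensics++` (the file name is arbitrary) prints one line per `videos` folder.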