Background:

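In real delivery scenarios, IP changes come from data-center relocation, deployments that must start before network planning is finished, customers who assign IPs via DHCP, and virtual machines whose IPs are replaced. The current standard recommendation is still to redeploy the whole business environment, which leaves residue from incomplete cleanup, is costly and slow, and makes customers question the product's capability. A unified, automated technical solution for switching IPs is therefore needed.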

Technical challenges:

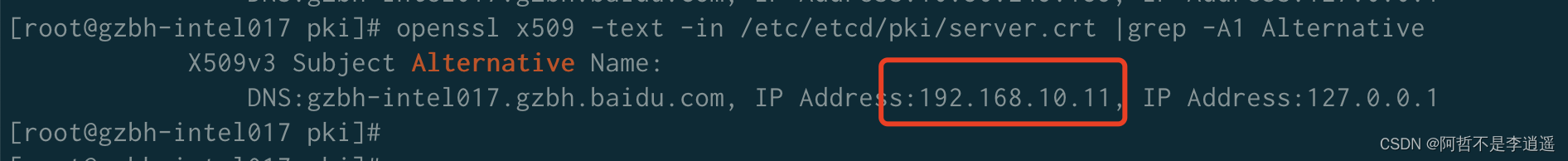

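1. The k8s cluster uses X.509 certificates generated with openssl for authentication among etcd, master, kubelet, and cni. The IP addresses a certificate is valid for are written into it when it is issued (in the Subject Alternative Name extension), so once an IP changes the certificate can no longer be used and the components cannot authenticate to each other.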

2. The business applications themselves depend on the IP and on the k8s certificates.
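To make point 1 concrete, the IP entries bound into a certificate can be listed from the text dump that `openssl x509 -text` produces. The sketch below is illustrative only: the helper name `san_ips` and the sample excerpt are made up, and the certificate path in the comment is just an example. The helper reads the dump on stdin, so the demo runs without a real certificate.

```shell
#!/bin/bash
# List the IP entries found in the SAN line of `openssl x509 -text` output (read from stdin).
san_ips() {
    grep -A1 'Subject Alternative Name' | tail -n1 | tr ',' '\n' \
        | sed -n 's/^[[:space:]]*IP Address:\(.*\)$/\1/p'
}

# Real use (the path is an example):
#   openssl x509 -noout -text -in /etc/kubernetes/ssl/apiserver.crt | san_ips

# Demo with a made-up excerpt of that output.
sample='            X509v3 Subject Alternative Name:
                DNS:kubernetes, DNS:kubernetes.default, IP Address:10.96.0.1, IP Address:10.36.249.139'
ips=$(printf '%s\n' "${sample}" | san_ips)
printf '%s\n' "${ips}"
```

Any address printed here that is about to change means the certificate has to be reissued.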

Approach:

For problem 1, replace the certificates used by each component: regenerate them with the new IP in place of the original one.

For problem 2, modify the configuration files, environment variables, and configmaps that bind the IP or the certificates.
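For problem 2 it helps to know which files still mention an old address, both before and after the switch. A minimal sketch, assuming bash and a grep that supports `-r` and `-E`; the function name `find_ip_refs` and the demo files are hypothetical and not part of the delivered script:

```shell
#!/bin/bash
# List files under a directory that contain the given IP as a whole address.
find_ip_refs() {
    local dir=$1 ip=$2
    # escape the dots so they match literally; the digit guards reject
    # longer addresses such as 10.0.0.10 or 110.0.0.1
    local re="(^|[^0-9])${ip//./\\.}([^0-9]|\$)"
    grep -rlE -- "${re}" "${dir}" || true
}

# Demo on a throwaway directory with made-up files.
demo_dir=$(mktemp -d)
printf 'server: https://10.0.0.1:6443\n' >"${demo_dir}/a.conf"
printf 'server: https://10.0.0.10:6443\n' >"${demo_dir}/b.conf"
refs=$(find_ip_refs "${demo_dir}" 10.0.0.1)
printf '%s\n' "${refs}"
rm -rf "${demo_dir}"
```

Only `a.conf` is reported, because `b.conf` holds a different, longer address.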

Verification steps:

I. Replace the configuration

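Replacing the configuration, updating the certificates, and restarting the services are all integrated into an automation script and need no manual intervention. The script does the following: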

1. Backs up the etcd data and the etcd and Kubernetes configuration files. Backup directory: /data/backup_xxxx;

2. Replaces the certificates and IP information in etcd, apiserver, kube-scheduler, kube-controller, kubelet, registry, the configmaps under kube-system, and other configuration files;

3. Restarts the corresponding services.

Usage:

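./update.sh "master|node" ip.txt

"master|node": the node type, either master or node. With master, the script replaces the certificates and IP information in etcd, apiserver, kube-scheduler, kube-controller, kubelet, registry, the configmaps under kube-system, and other configuration files. With node, it replaces the IP information in kubelet and other configuration files.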

ip.txt: the mapping from original IPs to new IPs. Each line holds one pair, with the old IP and the new IP separated by ",".
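Because every later step trusts ip.txt, checking its format before running the script is cheap insurance. The following is a sketch under stated assumptions: it requires bash, handles IPv4 only, and the function name `validate_ip_map` is hypothetical rather than part of update.sh.

```shell
#!/bin/bash
# Check that every non-empty line of the mapping file looks like "old_ip,new_ip".
validate_ip_map() {
    local file=$1 line n=0 bad=0
    local octet='(25[0-5]|2[0-4][0-9]|1[0-9][0-9]|[1-9]?[0-9])'
    local ipv4="${octet}(\.${octet}){3}"
    while IFS= read -r line || [[ -n ${line} ]]; do
        n=$((n + 1))
        line=${line//[[:space:]]/}    # the update script also ignores whitespace
        [[ -z ${line} ]] && continue
        if [[ ! ${line} =~ ^${ipv4},${ipv4}$ ]]; then
            printf 'line %d is not "old_ip,new_ip": %s\n' "${n}" "${line}" >&2
            bad=1
        fi
    done <"${file}"
    return "${bad}"
}

# Demo with a throwaway file and made-up addresses.
demo_file=$(mktemp)
printf '127.0.0.1,192.168.0.1\n127.0.0.2,192.168.0.2\n' >"${demo_file}"
validate_ip_map "${demo_file}" && echo "ip.txt format ok"
printf '127.0.0.3;192.168.0.3\n' >>"${demo_file}"
validate_ip_map "${demo_file}" 2>/dev/null || echo "ip.txt has a malformed line"
rm -f "${demo_file}"
```

The function returns non-zero on the first file that contains any malformed line, so it can gate the call to the update script.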

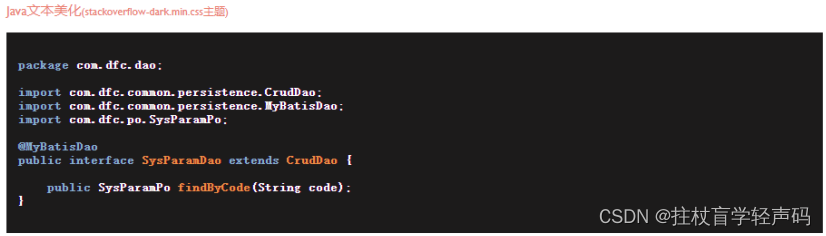

The content of the update script is as follows:

#!/bin/bash
set -xe

NC='\033[0m'
RED='\033[31m'
GREEN='\033[32m'
YELLOW='\033[33m'
BLUE='\033[34m'

log::err() {
    printf "[$(date +'%Y-%m-%dT%H:%M:%S.%2N%z')][${RED}ERROR${NC}] %b\n" "$@"
}

log::info() {
    printf "[$(date +'%Y-%m-%dT%H:%M:%S.%2N%z')][INFO] %b\n" "$@"
}

log::warning() {
    printf "[$(date +'%Y-%m-%dT%H:%M:%S.%2N%z')][${YELLOW}WARNING${NC}] %b\n" "$@"
}

help() {
    printf "
Usage: ./update.sh \"master|node\" ip.txt
There are two args.
--- The first is node_type: \033[32m master \033[0m or \033[32m node \033[0m .
--- The second, ip.txt, is the IP conf file. Provide at least one old IP and one new IP, separated by ',', one pair per line.
example:
127.0.0.1,192.168.0.1
127.0.0.2,192.168.0.2
"
}

check_file() {
    if [[ ! -r ${1} ]]; then
        log::err "can not find ${1}"
        exit 1
    fi
}

backup_file() {
    local file=${1}
    local target_dir=${backup_dir}/${file##*/}
    if [[ ! -e ${target_dir} ]]; then
        mkdir -p "${target_dir}"
        cp -rp "${file}" "${target_dir}/"
        log::info "backup ${file} to ${target_dir}"
    else
        log::warning "does not backup, ${target_dir} already exists"
    fi
}

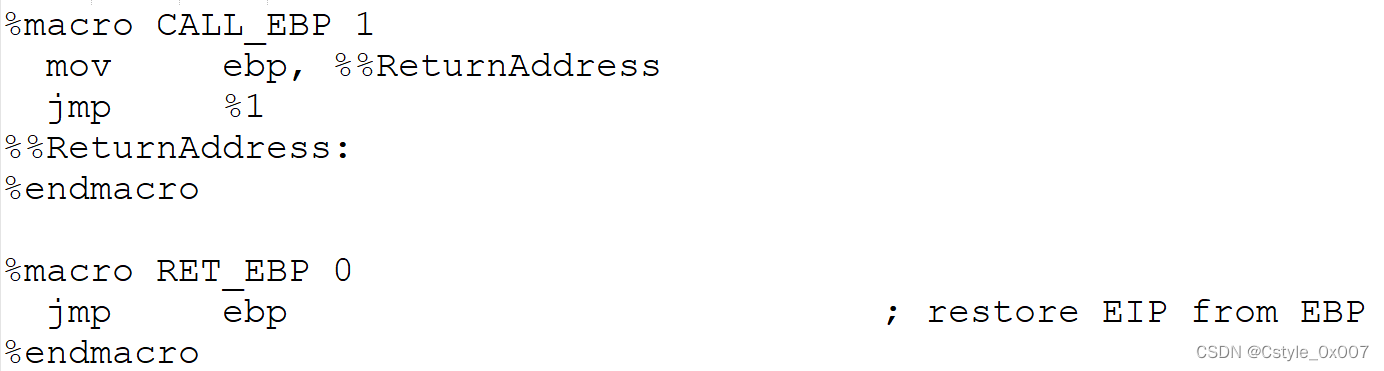

# get x509v3 subject alternative name from the old certificate,
# with the old IPs replaced by the new ones
get_subject_alt_name() {
    local cert=${1}.crt
    local alt_name
    local tmp_file
    tmp_file=/tmp/altname.txt
    check_file "${cert}"
    openssl x509 -text -noout -in "${cert}" | grep -A1 'Alternative' | tail -n1 | sed 's/[[:space:]]*Address//g' >"${tmp_file}"
    update_conf_ip "${ip_conf}" "${tmp_file}"
    alt_name=$(cat "${tmp_file}")
    rm -f "${tmp_file}"
    printf "%s\n" "${alt_name}"
}

# get subject from the old certificate
get_subj() {
    local cert=${1}.crt
    local subj
    check_file "${cert}"
    # turn "Subject: O = x, CN = y" into "/O=x/CN=y"; every comma becomes a slash
    subj=$(openssl x509 -text -noout -in "${cert}" | grep "Subject:" | sed 's/Subject:/\//g;s/,/\//g;s/[[:space:]]//g')
    printf "%s\n" "${subj}"
}

gen_cert() {
    local cert_name=${1}
    local cert_type=${2}
    local subj=${3}
    local cert_days=${4}
    local ca_name=${5}
    local alt_name=${6}
    local ca_cert=${ca_name}.crt
    local ca_key=${ca_name}.key
    local cert=${cert_name}.crt
    local key=${cert_name}.key
    local csr=${cert_name}.csr
    local csr_conf
    local common_csr_conf='distinguished_name = dn\n[dn]\n[v3_ext]\nkeyUsage = critical, digitalSignature, keyEncipherment\n'

    for file in "${ca_cert}" "${ca_key}" "${cert}" "${key}"; do
        check_file "${file}"
    done

    case "${cert_type}" in
    client)
        csr_conf=$(printf "%bextendedKeyUsage = clientAuth\n" "${common_csr_conf}")
        ;;
    server)
        csr_conf=$(printf "%bextendedKeyUsage = serverAuth\nsubjectAltName = %b\n" "${common_csr_conf}" "${alt_name}")
        ;;
    peer)
        csr_conf=$(printf "%bextendedKeyUsage = serverAuth, clientAuth\nsubjectAltName = %b\n" "${common_csr_conf}" "${alt_name}")
        ;;
    *)
        log::err "unknown, unsupported certs type: ${YELLOW}${cert_type}${NC}, supported type: client, server, peer"
        exit 1
        ;;
    esac

    # gen csr, reusing the existing private key
    openssl req -new -key "${key}" -subj "${subj}" -reqexts v3_ext -config <(printf "%b" "${csr_conf}") -out "${csr}" >/dev/null 2>&1
    # gen cert, signed by the existing CA
    openssl x509 -in "${csr}" -req -CA "${ca_cert}" -CAkey "${ca_key}" -CAcreateserial -extensions v3_ext -extfile <(printf "%b" "${csr_conf}") -days "${cert_days}" -out "${cert}" >/dev/null 2>&1
    rm -f "${csr}"
}

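update_conf_ip() {
    local ip_file=$1
    local conf_file=$2
    local old_ip new_ip old_ip_re
    check_file "${ip_file}"
    while IFS=, read -r old_ip new_ip || [[ -n ${old_ip} ]]; do
        # skip blank or incomplete lines
        if [[ -z ${old_ip} || -z ${new_ip} ]]; then
            continue
        fi
        # escape the dots and match whole addresses only,
        # so that 10.0.0.1 does not also rewrite 10.0.0.10
        old_ip_re=${old_ip//./\\.}
        sed -i "s/\b${old_ip_re}\b/${new_ip}/g" "${conf_file}"
    done < <(sed 's/[[:space:]]//g' "${ip_file}")
}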

update_cm_conf() {
    local cm_name=$1
    local tmp_cm_file=/tmp/${cm_name}.yaml
    kubectl get cm -n kube-system "${cm_name}" -o yaml >"${tmp_cm_file}"
    update_conf_ip "${ip_conf}" "${tmp_cm_file}"
    kubectl apply -f "${tmp_cm_file}"
    rm -f "${tmp_cm_file}"
    log::info "configmap ${cm_name} is updated"
}

update_kubelet_conf_ip() {
    backup_file "${KUBE_PATH}"
    kubelet_conf=${KUBE_PATH}/kubelet.conf
    check_file "${kubelet_conf}"
    update_conf_ip "${ip_conf}" "${kubelet_conf}"
    systemctl restart kubelet
    log::info "restart kubelet, kubelet update success"
}

update_hosts_conf() {
    hosts_file=/etc/hosts
    backup_file "${hosts_file}"
    update_conf_ip "${ip_conf}" "${hosts_file}"
    log::info "${hosts_file} update success"
}

update_registry_conf() {
    registry_conf=/etc/docker/registry/config.yml
    check_file "${registry_conf}"
    backup_file "${registry_conf}"
    update_conf_ip "${ip_conf}" "${registry_conf}"
    systemctl restart registry
    log::info "restart registry, registry update success"
}

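restart_other_server() {
    # restart other services; xargs -r skips the delete when no pod matches,
    # otherwise "kubectl delete pod" without a name fails and set -e aborts the script
    kubectl get pod -n kube-system | grep -E "flannel|calico" | awk '{print $1}' | xargs -r kubectl delete pod -n kube-system
    # the flannel|calico filter is kept as in the original; adjust it to the pods that run in local-path-storage
    kubectl get pod -n local-path-storage | grep -E "flannel|calico" | awk '{print $1}' | xargs -r kubectl delete pod -n local-path-storage
}

update_k8s_configmap() {
    for cm in ${cm_list}; do
        update_cm_conf "${cm}"
        # kubeadm-config has no pods of its own; restart pods only for the other configmaps
        if [ "${cm%%-*}" != "kubeadm" ]; then
            kubectl get pod -n kube-system | grep "${cm}" | awk '{print $1}' | xargs -r kubectl delete pod -n kube-system
        fi
        log::info "${cm} update success, pod restart"
    done
}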

update_etcd_cert() {
    local subj
    local subject_alt_name
    local cert

    # backup etcd config
    backup_file "${ETCD_PATH}"

    # backup etcd data
    ETCDCTL_API=3 etcdctl --cacert=/etc/etcd/pki/ca.crt --cert=/etc/etcd/pki/server.crt --key=/etc/etcd/pki/server.key --endpoints=https://127.0.0.1:2379 snapshot save "${backup_dir}/snapshot.db"

    # replace ip for etcd config
    for file in $(find "${ETCD_PATH}" -type f); do
        update_conf_ip "${ip_conf}" "${file}"
    done

    # generate etcd server, peer certificates
    # ${ETCD_PATH}/pki/server
    # ${ETCD_PATH}/pki/peer
    for cert in ${ETCD_CERT_SERVER} ${ETCD_CERT_PEER}; do
        subj=$(get_subj "${cert}")
        subject_alt_name=$(get_subject_alt_name "${cert}")
        gen_cert "${cert}" "peer" "${subj}" "${CERT_DAYS}" "${ETCD_CERT_CA}" "${subject_alt_name}"
    done

    # generate etcd client certificates
    # ${ETCD_PATH}/pki/apiserver-etcd-client
    # note: ETCD_CERT_HEALTHCHECK_CLIENT is not set in main(), so it expands to nothing and is skipped
    for cert in ${ETCD_CERT_HEALTHCHECK_CLIENT} ${ETCD_CERT_APISERVER_ETCD_CLIENT}; do
        subj=$(get_subj "${cert}")
        gen_cert "${cert}" "client" "${subj}" "${CERT_DAYS}" "${ETCD_CERT_CA}"
    done

    # restart etcd
    systemctl restart etcd
    log::info "restart etcd, etcd update success"
}

update_kubeconf() {
    local cert_name=${1}
    local kubeconf_file=${cert_name}.conf
    local cert=${cert_name}.crt
    local key=${cert_name}.key
    local subj
    local cert_base64

    check_file "${kubeconf_file}"
    # get the key from the old kubeconf
    grep "client-key-data" "${kubeconf_file}" | awk '{print$2}' | base64 -d >"${key}"
    # get the old certificate from the old kubeconf
    grep "client-certificate-data" "${kubeconf_file}" | awk '{print$2}' | base64 -d >"${cert}"
    # get subject from the old certificate
    subj=$(get_subj "${cert_name}")
    gen_cert "${cert_name}" "client" "${subj}" "${CERT_DAYS}" "${CERT_CA}"
    # get certificate base64 code
    cert_base64=$(base64 -w 0 "${cert}")
    # set certificate base64 code to kubeconf
    sed -i 's/client-certificate-data:.*/client-certificate-data: '"${cert_base64}"'/g' "${kubeconf_file}"
    rm -f "${cert}"
    rm -f "${key}"
}

update_master_cert() {
    local subj
    local subject_alt_name
    local conf

    # backup kubernetes config
    backup_file "${KUBE_PATH}"

    # replace ip for kubernetes config
    for file in $(find "${KUBE_PATH}" -type f); do
        update_conf_ip "${ip_conf}" "${file}"
    done

    # generate apiserver server certificate
    # ${PKI_PATH}/apiserver
    subj=$(get_subj "${CERT_APISERVER}")
    subject_alt_name=$(get_subject_alt_name "${CERT_APISERVER}")
    gen_cert "${CERT_APISERVER}" "server" "${subj}" "${CERT_DAYS}" "${CERT_CA}" "${subject_alt_name}"
    log::info "${GREEN}updated ${BLUE}${CERT_APISERVER}.crt${NC}"

    # generate apiserver-kubelet-client certificate
    # ${PKI_PATH}/apiserver-kubelet-client
    subj=$(get_subj "${CERT_APISERVER_KUBELET_CLIENT}")
    gen_cert "${CERT_APISERVER_KUBELET_CLIENT}" "client" "${subj}" "${CERT_DAYS}" "${CERT_CA}"
    log::info "${GREEN}updated ${BLUE}${CERT_APISERVER_KUBELET_CLIENT}.crt${NC}"

    # generate kubeconf for controller-manager, scheduler and admin
    # ${KUBE_PATH}/controller-manager.conf, scheduler.conf, admin.conf
    for conf in ${CONF_CONTROLLER_MANAGER} ${CONF_SCHEDULER} ${CONF_ADMIN}; do
        update_kubeconf "${conf}"
        log::info "${GREEN}updated ${BLUE}${conf}.conf${NC}"
        # copy admin.conf to ${HOME}/.kube/config
        if [[ ${conf##*/} == "admin" ]]; then
            mkdir -p "${HOME}/.kube"
            local config=${HOME}/.kube/config
            local config_backup
            config_backup=${HOME}/.kube/config.old-$(date +%Y%m%d)
            if [[ -f ${config} ]] && [[ ! -f ${config_backup} ]]; then
                cp -fp "${config}" "${config_backup}"
                log::info "backup ${config} to ${config_backup}"
            fi
            cp -fp "${conf}.conf" "${HOME}/.kube/config"
            log::info "copy the admin.conf to ${HOME}/.kube/config"
        fi
    done

    # generate front-proxy-client certificate
    # ${PKI_PATH}/front-proxy-client
    subj=$(get_subj "${FRONT_PROXY_CLIENT}")
    gen_cert "${FRONT_PROXY_CLIENT}" "client" "${subj}" "${CERT_DAYS}" "${FRONT_PROXY_CA}"
    log::info "${GREEN}updated ${BLUE}${FRONT_PROXY_CLIENT}.crt${NC}"

    # restart apiserver, controller-manager, scheduler and kubelet
    for item in "apiserver" "controller-manager" "scheduler"; do
        docker ps | awk '/k8s_kube-'${item}'/{print$1}' | xargs -r -I '{}' docker restart {} >/dev/null 2>&1 || true
        log::info "restarted ${item}"
    done
    systemctl daemon-reload && systemctl restart kubelet && systemctl restart docker
    log::info "restarted kubelet, docker"
}

main() {
    if [ $# -ne 2 ]; then
        help
        exit 1
    fi

    local node_type=$1
    ip_conf=$2
    backup_dir=/data/backup_$(date +%Y%m%d%H%M)
    CERT_DAYS=3650
    KUBE_PATH=/etc/kubernetes
    PKI_PATH=${KUBE_PATH}/ssl
    ETCD_PATH=/etc/etcd

    # configmap list
    cm_list="coredns kube-proxy kubeadm-config"

    # master certificates path
    # apiserver
    CERT_CA=${PKI_PATH}/ca
    CERT_APISERVER=${PKI_PATH}/apiserver
    CERT_APISERVER_KUBELET_CLIENT=${PKI_PATH}/apiserver-kubelet-client
    CONF_CONTROLLER_MANAGER=${KUBE_PATH}/controller-manager
    CONF_SCHEDULER=${KUBE_PATH}/scheduler
    CONF_ADMIN=${KUBE_PATH}/admin
    CONF_KUBELET=${KUBE_PATH}/kubelet
    # front-proxy
    FRONT_PROXY_CA=${PKI_PATH}/front-proxy-ca
    FRONT_PROXY_CLIENT=${PKI_PATH}/front-proxy-client

    # etcd certificates path
    ETCD_CERT_CA=${ETCD_PATH}/pki/ca
    ETCD_CERT_SERVER=${ETCD_PATH}/pki/server
    ETCD_CERT_PEER=${ETCD_PATH}/pki/peer
    ETCD_CERT_APISERVER_ETCD_CLIENT=${ETCD_PATH}/pki/apiserver-etcd-client

    case ${node_type} in
    master)
        update_etcd_cert
        update_master_cert
        update_hosts_conf
        # wait 30s for the apiserver to start
        sleep 30
        update_k8s_configmap
        restart_other_server
        update_registry_conf
        log::info "master upgrade finish"
        ;;
    node)
        update_kubelet_conf_ip
        update_hosts_conf
        log::info "node upgrade finish"
        ;;
    *)
        help
        exit 1
        ;;
    esac
}

main "$@"
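One pitfall when replacing addresses with `sed`: an unescaped `.` matches any character, and a pattern without boundaries also matches the beginning of a longer address. The sketch below shows the difference on a throwaway file. It assumes bash and GNU sed (for `\b` and `-i`); the helper name `replace_ip` and all addresses are made up for illustration.

```shell
#!/bin/bash
# Replace old_ip with new_ip in a file, matching whole addresses only.
replace_ip() {
    local old_ip=$1 new_ip=$2 file=$3
    local old_re=${old_ip//./\\.}    # 10.0.0.1 -> 10\.0\.0\.1
    sed -i "s/\b${old_re}\b/${new_ip}/g" "${file}"
}

demo_file=$(mktemp)
printf 'a=10.0.0.1\nb=10.0.0.10\nc=10x0.0.1\n' >"${demo_file}"

# naive form: also rewrites the start of 10.0.0.10 and the non-address 10x0.0.1
naive=$(sed 's/10.0.0.1/172.16.0.1/g' "${demo_file}")

# strict form: only line a changes
replace_ip 10.0.0.1 172.16.0.1 "${demo_file}"
strict=$(cat "${demo_file}")
rm -f "${demo_file}"

printf 'naive:\n%s\nstrict:\n%s\n' "${naive}" "${strict}"
```

With the naive pattern line b becomes 172.16.0.10, which silently points a different host at the wrong address; the strict form leaves lines b and c untouched.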

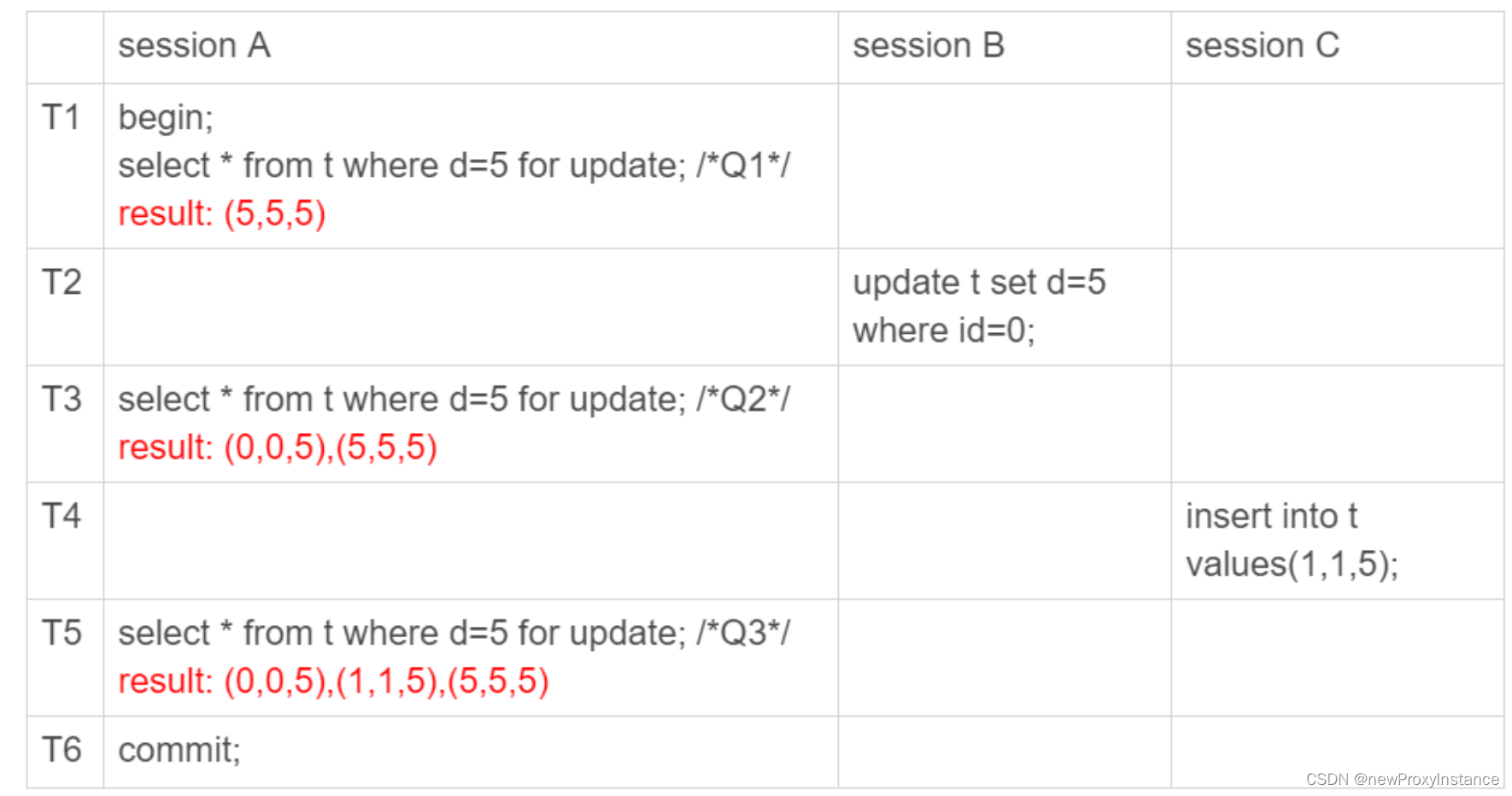

II. Modify the business application

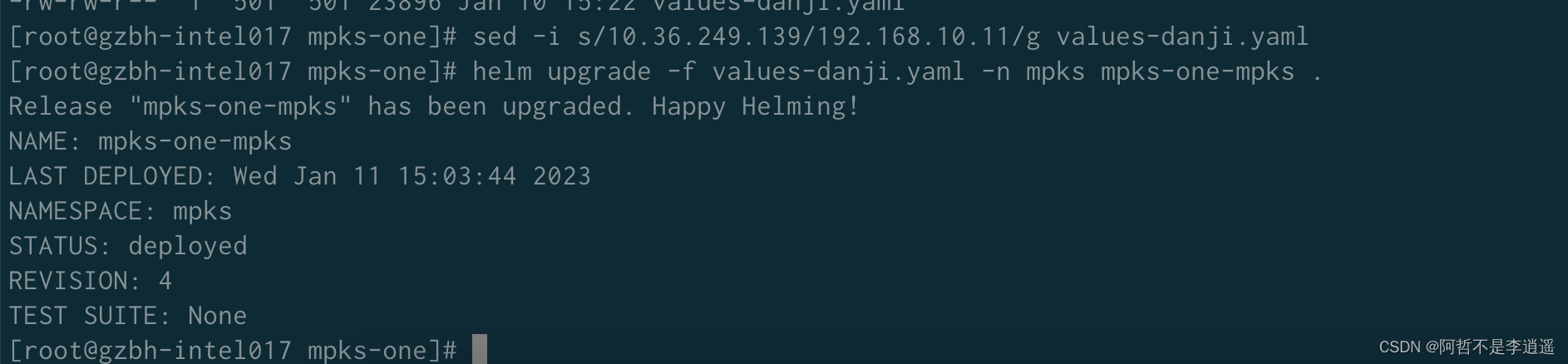

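This article uses the Knowledge Middle Platform (知识中台) for verification. Modify the IPs in the values file.

1. For deployments installed with helm, update as follows: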

sed -i "s/${old_ip}/${new_ip}/g" values.yaml

helm upgrade -f values-danji.yaml -n mpks mpks-one-mpks .

2. For deployments installed with 天牛 (Tianniu), modify the corresponding global variables and sync them.
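Before running `helm upgrade`, the effect of the replacement on a values file can be previewed without touching it. A minimal sketch, assuming bash, GNU sed and `diff`, and the same "old_ip,new_ip" mapping format as ip.txt; the function name `preview_ip_change` and the demo values are hypothetical:

```shell
#!/bin/bash
# Print a diff of what the IP replacement would change, leaving the target file as it is.
preview_ip_change() {
    local ip_file=$1 target=$2 old_ip new_ip tmp
    tmp=$(mktemp)
    cp "${target}" "${tmp}"
    while IFS=, read -r old_ip new_ip || [[ -n ${old_ip} ]]; do
        [[ -z ${old_ip} || -z ${new_ip} ]] && continue
        sed -i "s/\b${old_ip//./\\.}\b/${new_ip}/g" "${tmp}"
    done < <(sed 's/[[:space:]]//g' "${ip_file}")
    diff "${target}" "${tmp}" || true    # diff exits 1 when the files differ
    rm -f "${tmp}"
}

# Demo with a throwaway directory and made-up values.
demo_dir=$(mktemp -d)
printf '192.0.2.10,192.0.2.20\n' >"${demo_dir}/ip.txt"
printf 'global:\n  hostIP: 192.0.2.10\n' >"${demo_dir}/values.yaml"
preview=$(preview_ip_change "${demo_dir}/ip.txt" "${demo_dir}/values.yaml")
printf '%s\n' "${preview}"
rm -rf "${demo_dir}"
```

An empty preview means the values file does not contain any of the old addresses, which is worth knowing before an upgrade that is expected to change something.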

III. Hands-on run in a real environment

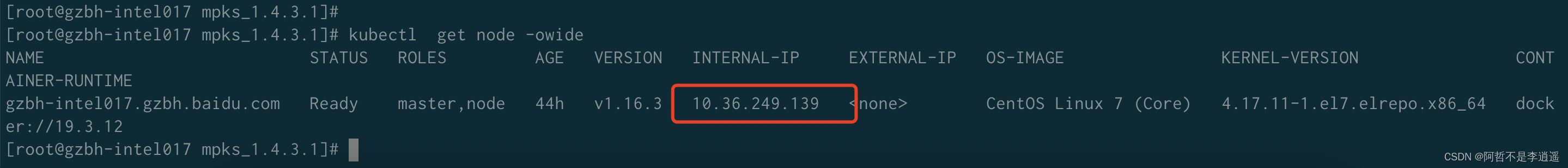

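Test machine: 10.36.249.139

Deployed version: Knowledge Middle Platform (知识中台) 1.4.3, standalone edition

1. Confirm the state after deployment

k8s

IP information carried in the certificates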

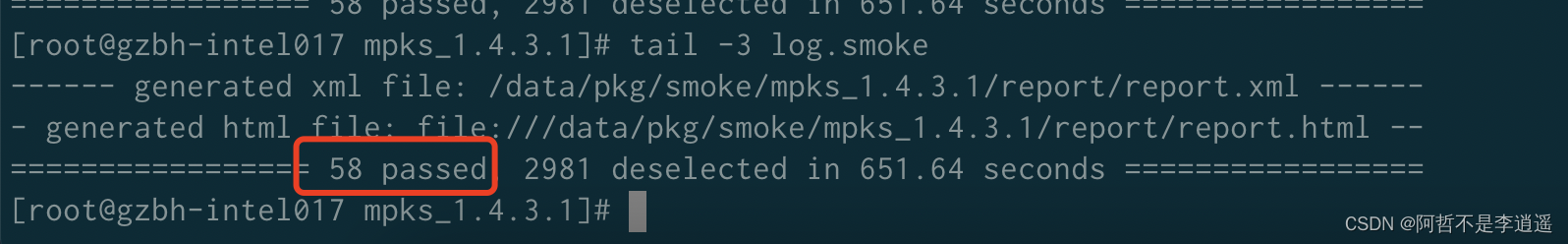

All P0 tests pass

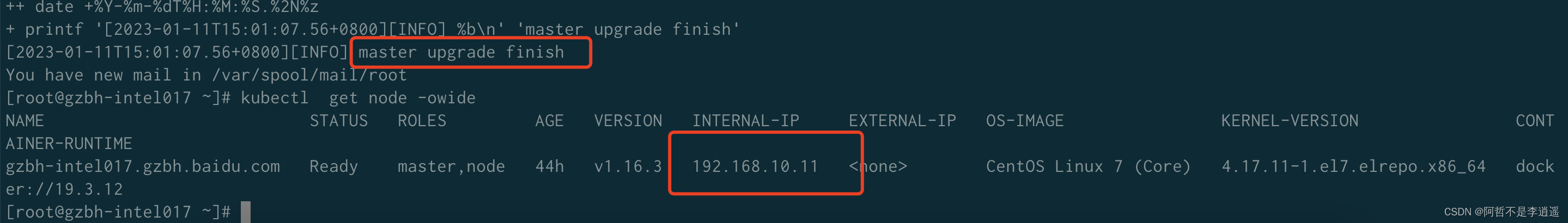

2. Switch the IP

./update.sh master ip.txt

Certificates updated

Business application updated

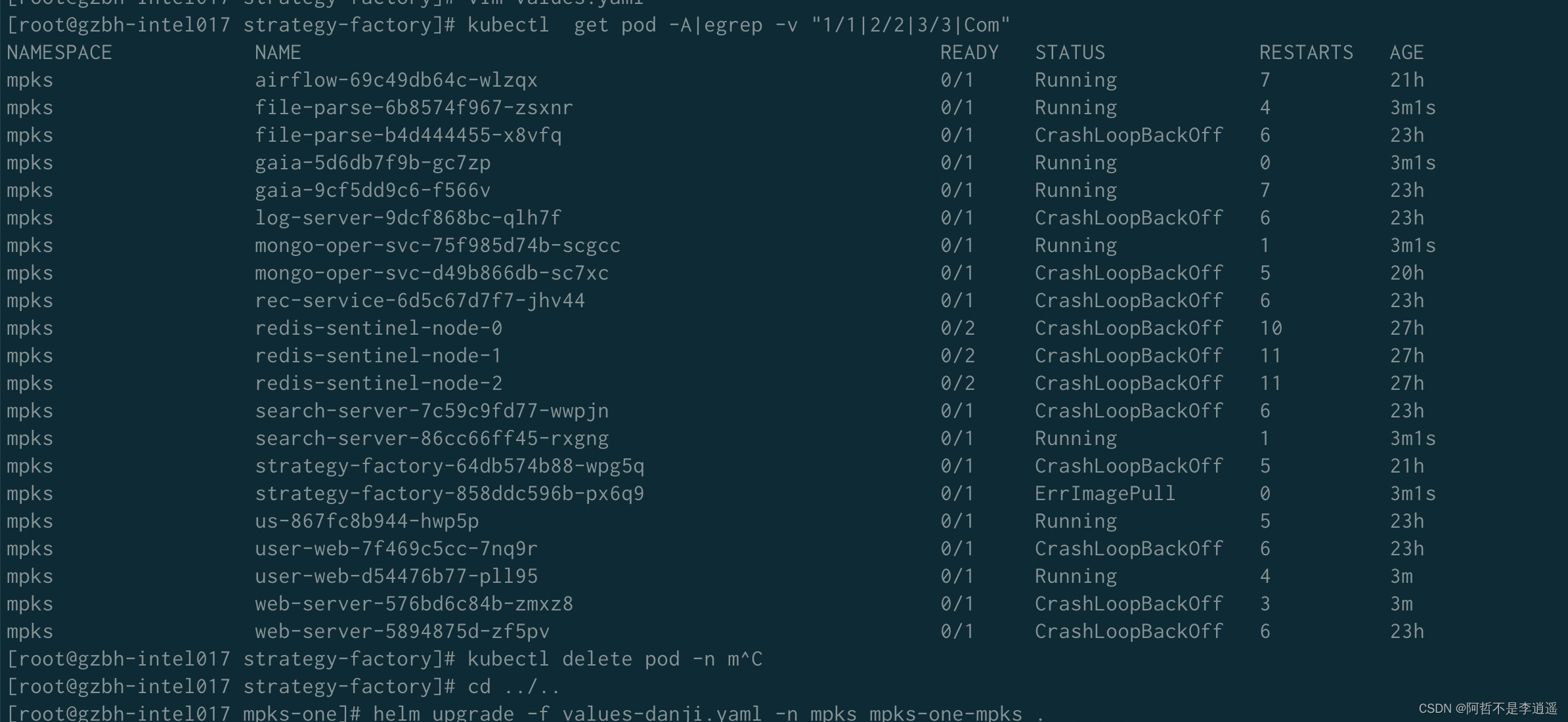

Handle abnormal pods that do not recover on their own (restart them)

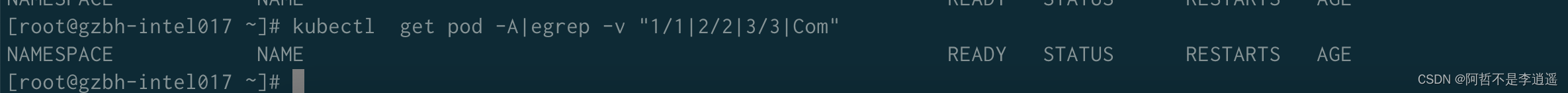

Pods return to normal

P0 verification passes
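Finding the pods that still need a restart after the switch can be scripted. The sketch below filters the output of `kubectl get pods -A --no-headers`, whose fourth column is the pod status. The helper name `unhealthy_pods` and the sample rows are made up. Note that it only looks at the status column, so a pod that is Running but not ready is not reported.

```shell
#!/bin/bash
# Read `kubectl get pods -A --no-headers` output on stdin and print
# "namespace/name status" for every pod that is neither Running nor Completed.
unhealthy_pods() {
    awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Real use:
#   kubectl get pods -A --no-headers | unhealthy_pods

# Demo with made-up output.
sample='kube-system   coredns-6d8c4cb4d-abcde   1/1   Running            0   5m
mpks          web-0                     0/1   CrashLoopBackOff   7   5m
mpks          init-job-xyz              0/1   Completed          0   5m'
bad=$(printf '%s\n' "${sample}" | unhealthy_pods)
printf '%s\n' "${bad}"
```

For the sample rows this prints `mpks/web-0 CrashLoopBackOff`.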

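Additional notes

Because of the limits of the test environment, some points were not fully verified:

1. Certificate replacement was not tested on a multi-machine K8S cluster.

2. The standalone KG edition has no ceph or ppoc, so these two modules were not verified after the IP switch.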