Environment:

VirtualBox, Ubuntu 16.04

IntelliJ IDEA Community Edition 2023 (Linux)

JDK 1.8

Hadoop dependencies

All operations are performed from Java.

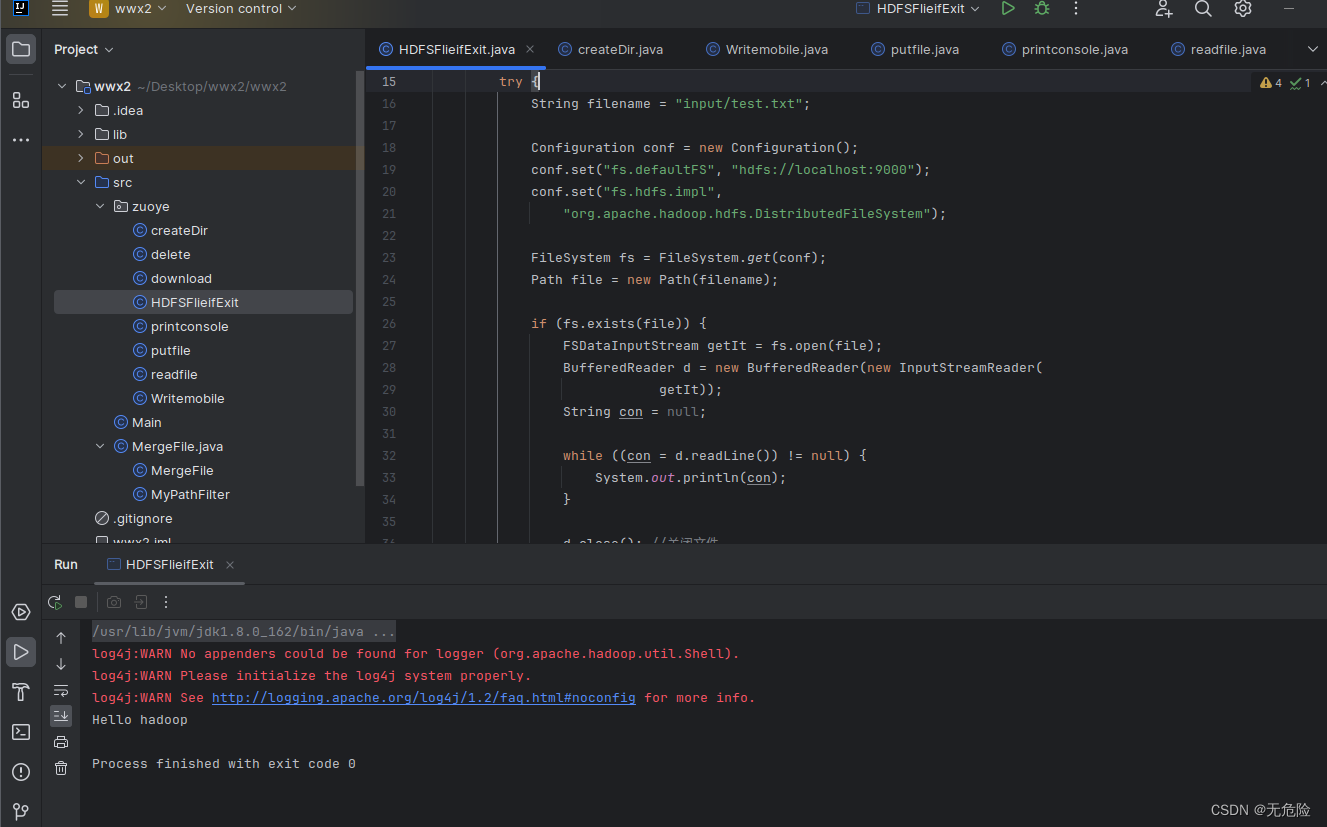

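1. Check whether the file /user/stu/input/test.txt exists. If it does, read its contents and print them to the console; otherwise print a "file does not exist" message.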

    package abc;

    import java.io.BufferedReader;
    import java.io.InputStreamReader;
    import java.nio.charset.StandardCharsets;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class HDFSFlieifExit {
        public static void main(String[] args) {
            // A relative path resolves against the HDFS home directory, /user/<current user>,
            // so this is /user/stu/input/test.txt only when the program runs as user "stu".
            String filename = "input/test.txt";
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
            // try-with-resources closes the HDFS connection on every path, including the else branch
            try (FileSystem fs = FileSystem.get(conf)) {
                Path file = new Path(filename);
                if (fs.exists(file)) {
                    // Closing the reader also closes the underlying HDFS input stream
                    try (BufferedReader reader = new BufferedReader(
                            new InputStreamReader(fs.open(file), StandardCharsets.UTF_8))) {
                        String line;
                        while ((line = reader.readLine()) != null) {
                            System.out.println(line);
                        }
                    }
                } else {
                    System.out.println("File does not exist");
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

2. Implement the following in Java:

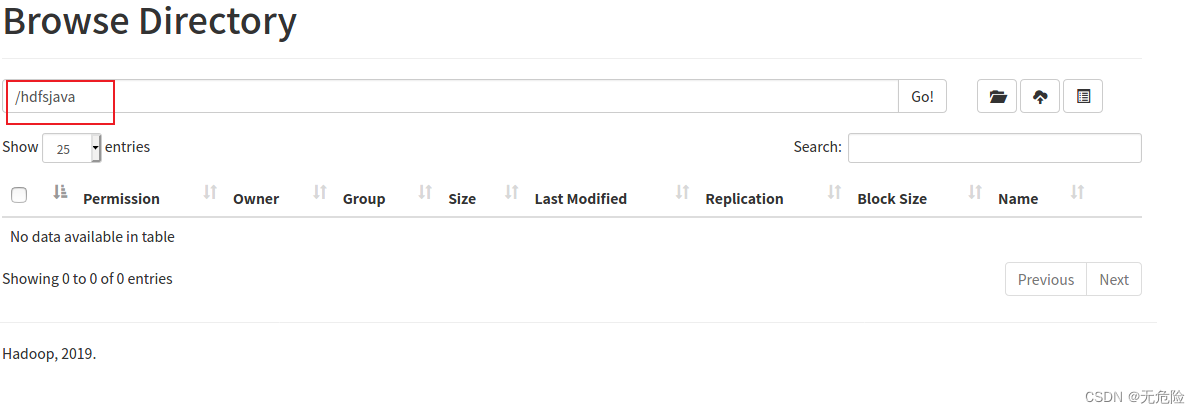

1) Create the hdfsjava directory under the HDFS root directory.

    package abc;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class createDir {
        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
            try (FileSystem fs = FileSystem.get(conf)) {
                // An absolute path is resolved against fs.defaultFS, so no "hdfs:" prefix is needed
                boolean isok = fs.mkdirs(new Path("/hdfsjava"));
                if (isok) {
                    System.out.println("Directory created successfully!");
                } else {
                    System.out.println("Failed to create directory");
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

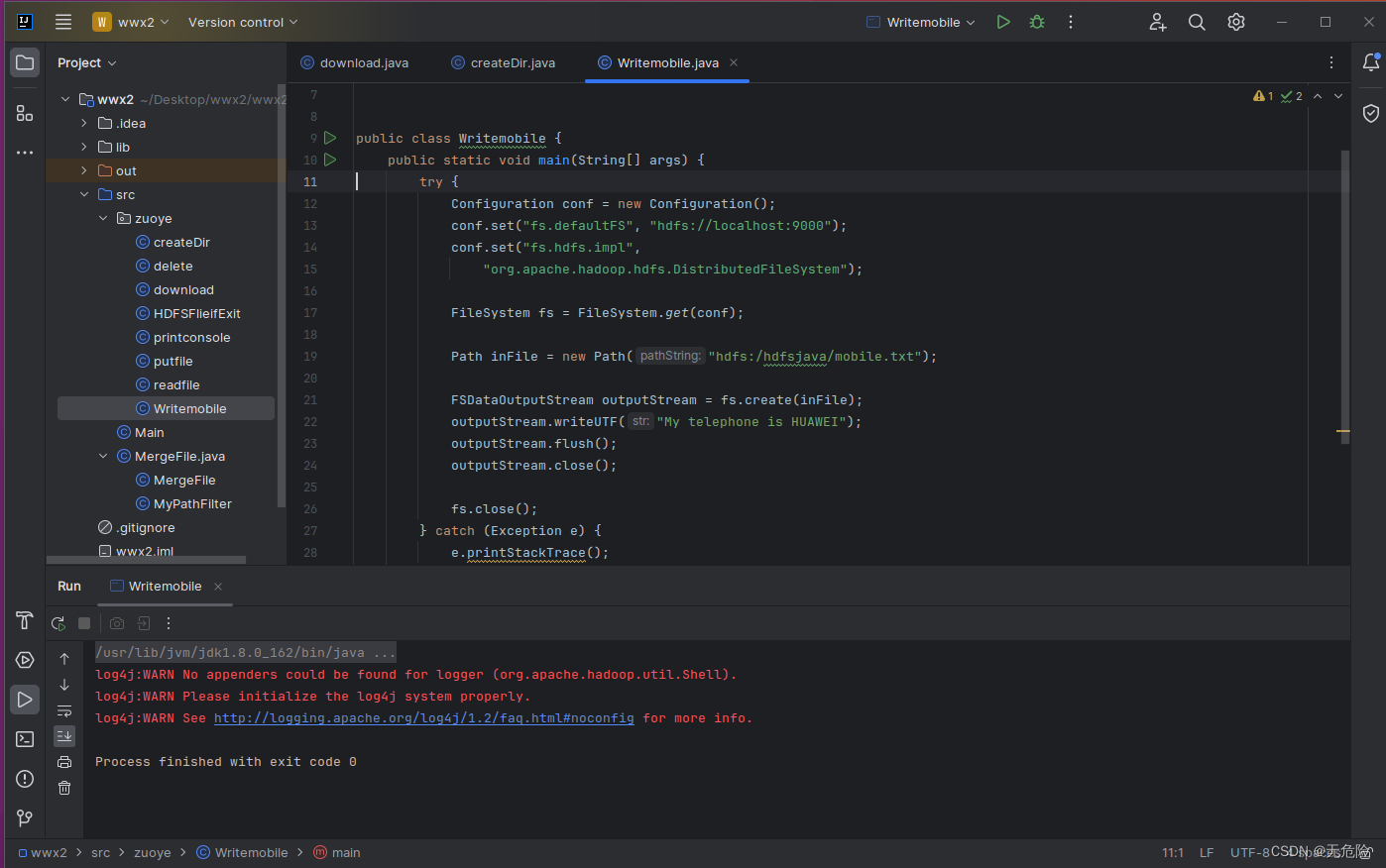

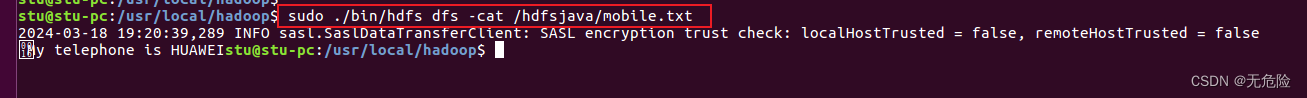

2) Create the file mobiles.txt in the hdfsjava directory with the content "My telephone is HUAWEI".

    package abc;

    import java.nio.charset.StandardCharsets;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class Writemobile {
        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
            try (FileSystem fs = FileSystem.get(conf)) {
                // File name as given in the task statement
                Path outFile = new Path("/hdfsjava/mobiles.txt");
                // Closing the stream flushes it, so no explicit flush() is required
                try (FSDataOutputStream outputStream = fs.create(outFile)) {
                    // write() stores exactly these bytes; writeUTF() would prepend a 2-byte length
                    outputStream.write("My telephone is HUAWEI".getBytes(StandardCharsets.UTF_8));
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

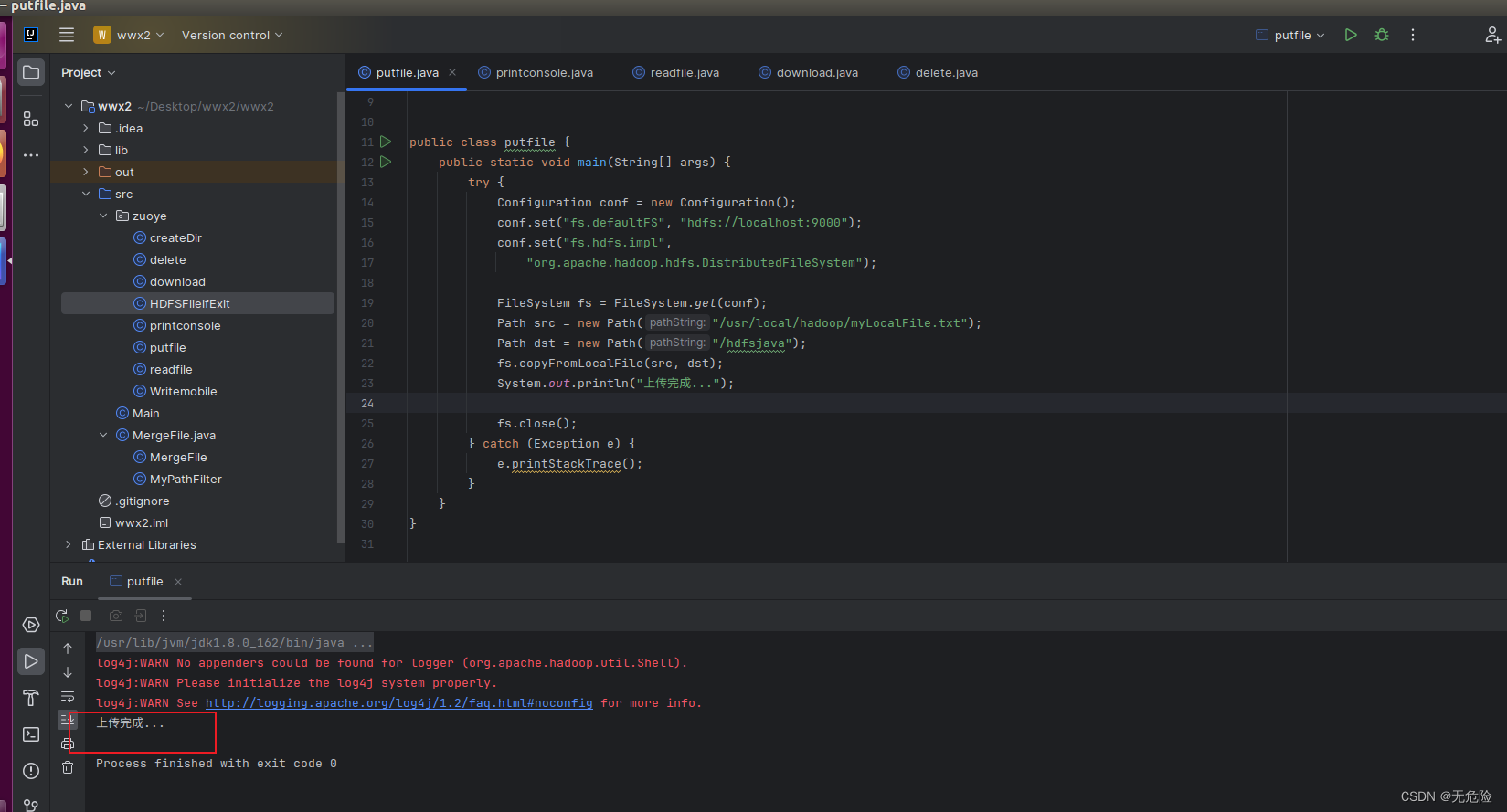
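One detail matters when the file must contain exactly the text "My telephone is HUAWEI". `FSDataOutputStream` extends `java.io.DataOutputStream`, whose `writeUTF` method puts a 2-byte length prefix in front of the encoded string, while `write(byte[])` stores only the bytes it is given. The sketch below shows the difference using an in-memory buffer instead of HDFS, so it runs without a cluster. The class name `WriteUtfDemo` and its two helper methods are hypothetical and used only for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

// Hypothetical demo class: compares writeUTF() with write(byte[]) on a DataOutputStream.
class WriteUtfDemo {

    // Returns the bytes produced by DataOutputStream.writeUTF(text).
    static byte[] viaWriteUTF(String text) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.writeUTF(text);
        }
        return buffer.toByteArray();
    }

    // Returns the bytes produced by writing the UTF-8 encoding of text directly.
    static byte[] viaWrite(String text) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.write(text.getBytes(StandardCharsets.UTF_8));
        }
        return buffer.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        String text = "My telephone is HUAWEI";
        System.out.println(viaWriteUTF(text).length); // 24: 2 length bytes + 22 text bytes
        System.out.println(viaWrite(text).length);    // 22: text bytes only
    }
}
```

With `writeUTF`, a command such as `hdfs dfs -cat` would show two extra non-text bytes in front of the sentence. That format is intended to be read back with `DataInputStream.readUTF`, not as plain text.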

3) Upload the local Linux file myLocalFile.txt to the hdfsjava directory.

    package abc;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class putfile {
        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
            try (FileSystem fs = FileSystem.get(conf)) {
                // Source on the local Linux file system
                Path src = new Path("/usr/local/hadoop/myLocalFile.txt");
                // Destination is an existing HDFS directory, so the file keeps its name
                Path dst = new Path("/hdfsjava");
                fs.copyFromLocalFile(src, dst);
                System.out.println("Upload complete.");
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

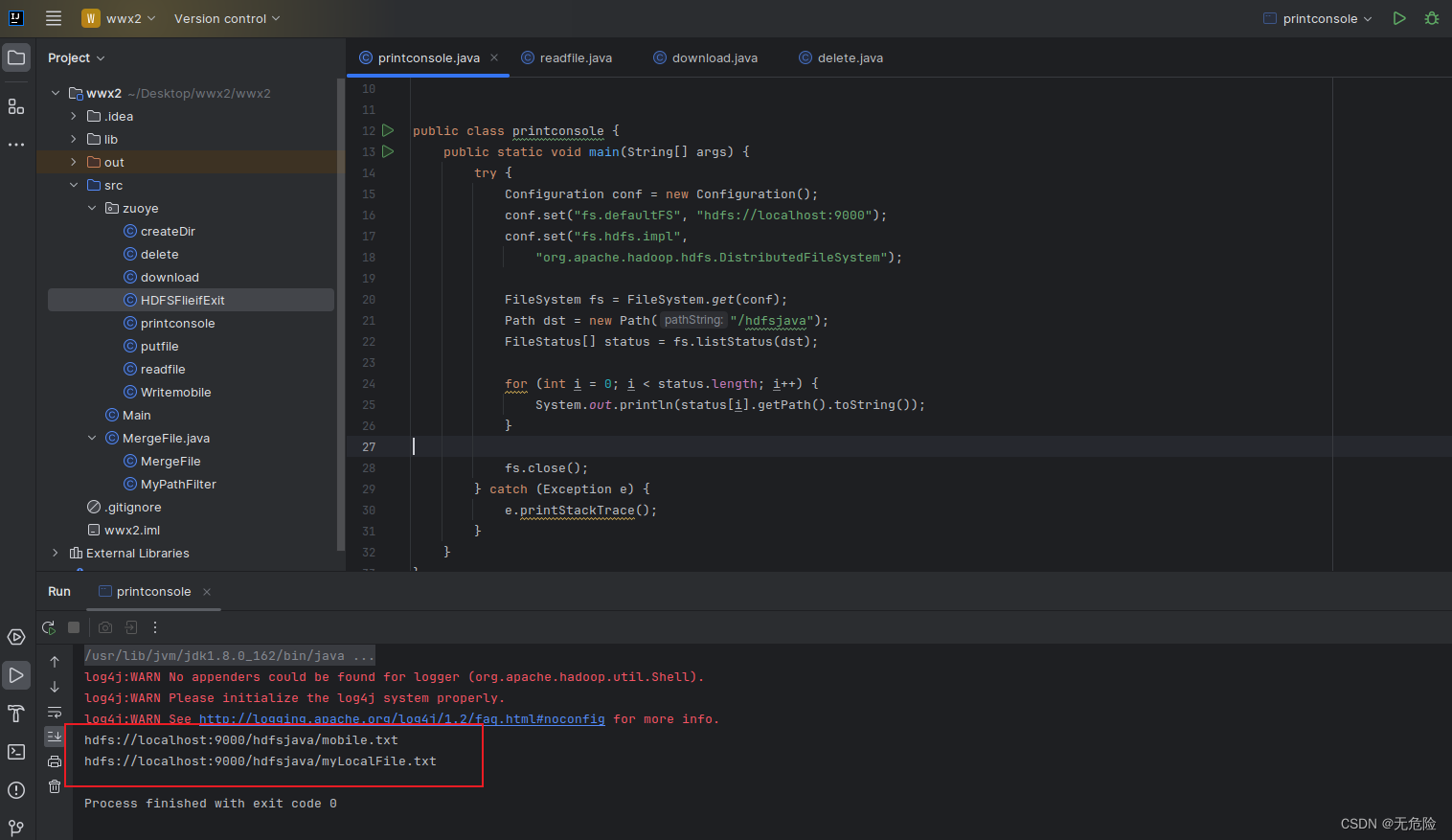

4) List all files under hdfsjava and print them to the console.

    package abc;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class printconsole {
        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
            try (FileSystem fs = FileSystem.get(conf)) {
                // listStatus returns the direct children of the directory (not recursive)
                FileStatus[] status = fs.listStatus(new Path("/hdfsjava"));
                for (FileStatus entry : status) {
                    System.out.println(entry.getPath().toString());
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
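The listing above covers only the direct children of /hdfsjava. If the directory later contains subdirectories, one option is `FileSystem.listFiles(path, true)`, which walks the tree and returns files only. The following is a minimal sketch under that assumption. The class name `ListRecursive` and the helper `listAll` are hypothetical, and the output format (size, tab, path) is just one possible choice.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;

// Hypothetical helper class: lists every file below a directory, including subdirectories.
class ListRecursive {

    // Returns one "size<TAB>path" line per file found under dir.
    static List<String> listAll(FileSystem fs, String dir) throws IOException {
        List<String> lines = new ArrayList<>();
        // The second argument (true) requests a recursive walk
        RemoteIterator<LocatedFileStatus> files = fs.listFiles(new Path(dir), true);
        while (files.hasNext()) {
            LocatedFileStatus status = files.next();
            lines.add(status.getLen() + "\t" + status.getPath());
        }
        return lines;
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        try (FileSystem fs = FileSystem.get(conf)) {
            for (String line : listAll(fs, "/hdfsjava")) {
                System.out.println(line);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

Because `listAll` takes the `FileSystem` as a parameter, it can be tried against the local file system (`FileSystem.getLocal(conf)`) before pointing it at the cluster.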

5) View the contents of myLocalFile.txt in the hdfsjava directory on HDFS.

    package abc;

    import java.io.BufferedReader;
    import java.io.InputStreamReader;
    import java.nio.charset.StandardCharsets;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class readfile {
        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
            // Resources are closed in reverse order: first the reader, then the file system
            try (FileSystem fs = FileSystem.get(conf);
                 BufferedReader reader = new BufferedReader(new InputStreamReader(
                         fs.open(new Path("/hdfsjava/myLocalFile.txt")), StandardCharsets.UTF_8))) {
                String line;
                // Print the file line by line, unchanged
                while ((line = reader.readLine()) != null) {
                    System.out.println(line);
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

6) Download mobiles.txt from the hdfsjava directory on HDFS to the local directory /home/hadoop.
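No listing accompanies this step, so here is a minimal sketch of one way to do it, assuming `FileSystem.copyToLocalFile` and the same `fs.defaultFS` address as the other steps. The class name `DownloadFile` and the helper `download` are hypothetical. Depending on the Hadoop version, the local copy may be accompanied by a hidden `.crc` checksum file.

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical class for step 6: copies a file from HDFS to the local file system.
class DownloadFile {

    // Copies src from the given file system to localDst on the local disk.
    // If localDst is an existing directory, the file keeps its original name.
    static void download(FileSystem fs, String src, String localDst) throws IOException {
        fs.copyToLocalFile(new Path(src), new Path(localDst));
    }

    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
        try (FileSystem fs = FileSystem.get(conf)) {
            download(fs, "/hdfsjava/mobiles.txt", "/home/hadoop");
            System.out.println("Download complete.");
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

The result can be checked with `cat /home/hadoop/mobiles.txt` on the local machine.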

7) Delete the hdfsjava directory.

    package abc;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class delete {
        public static void main(String[] args) {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://localhost:9000");
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
            try (FileSystem fs = FileSystem.get(conf)) {
                // true = recursive: delete the directory together with everything inside it
                boolean deleted = fs.delete(new Path("/hdfsjava"), true);
                System.out.println(deleted ? "Directory deleted" : "Directory was not deleted");
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }