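Installing k8s

Note that Kubernetes 1.24 and later no longer include dockershim (it was deprecated in 1.20 and removed in 1.24). To keep using Docker, you need the cri-dockerd plugin as a shim between Docker Engine and the Kubernetes CRI.

Hardware: 2 CPU cores, 2 GB RAM

Host OS: CentOS Linux release 7.9.2009 (Core)

IP address: 192.168.44.161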

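I. Host configuration

- Set the hostname

    hostnamectl set-hostname k8s-master

- Disable SELinux and the firewall

    systemctl disable firewalld --now
    setenforce 0
    sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

- Disable swap

    swapoff -a

  Then comment out the swap entry in /etc/fstab so that swap stays off after a reboot.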
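Commenting out the swap entry can also be scripted. The following is a minimal sketch, assuming the swap entries are the uncommented lines whose third field is `swap`. The function name `comment_out_swap` is made up for illustration, and it takes the file path as an argument so that it can be tried on a copy first.

```shell
#!/usr/bin/env bash
# comment_out_swap FILE
# Hypothetical helper: prefix every active fstab entry whose filesystem
# type (third field) is "swap" with '#'. Lines that are already comments
# are left alone.
comment_out_swap() {
    local fstab="$1"
    # Keep a backup next to the original, then rewrite the file from it.
    cp -p "$fstab" "$fstab.bak"
    sed -E '/^[[:space:]]*#/! s/^([^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
        "$fstab.bak" > "$fstab"
}

# Usage on the real host (run as root):
#   comment_out_swap /etc/fstab
```

The backup is left at FILE.bak, so the change can be reverted by copying it back.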

II. Install the container runtime

1. Install Docker Engine

1.1. Install and configure the prerequisites

Enable IPv4 forwarding and let iptables see bridged traffic by running the following commands:

    cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
    overlay
    br_netfilter
    EOF

    sudo modprobe overlay
    sudo modprobe br_netfilter

    # Set the required sysctl parameters; they persist across reboots
    cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.ipv4.ip_forward = 1
    EOF

    # Apply the sysctl parameters without rebooting
    sudo sysctl --system

    # Confirm that the br_netfilter and overlay modules are loaded
    lsmod | grep br_netfilter
    lsmod | grep overlay

    # Confirm that net.bridge.bridge-nf-call-iptables, net.bridge.bridge-nf-call-ip6tables
    # and net.ipv4.ip_forward are set to 1 in your sysctl configuration
    sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward

1.2. Install Docker Engine

    curl -fsSL https://get.docker.com -o get-docker.sh
    sh get-docker.sh

1.3. Start Docker and enable it at boot

    systemctl enable docker --now

1.4. Change the cgroup driver

The kubelet and the container runtime must use the same cgroup driver. The kubelet uses systemd, so Docker Engine's cgroup driver has to be changed to systemd as well:

    cat > /etc/docker/daemon.json << EOF
    {
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF

    systemctl daemon-reload
    systemctl restart docker
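Before moving on, it may be worth checking that the sysctl values and the cgroup driver actually took effect. The two helpers below are a rough sketch, not an official check: the function names are invented for this article, the first one assumes the usual `key = value` output format of `sysctl`, and the second one only compares a string that you obtain yourself from `docker info`.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for illustration; neither ships with Docker or Kubernetes.

# all_sysctls_enabled: reads `sysctl` output ("key = value" lines) on stdin.
# Succeeds only if at least one line was read and every value is 1.
all_sysctls_enabled() {
    awk -F' = ' '
        { seen = 1 }
        $2 != "1" { print "not enabled: " $0; bad = 1 }
        END { if (bad || !seen) exit 1 }
    '
}

# require_systemd_cgroup DRIVER: succeeds only if DRIVER is "systemd".
require_systemd_cgroup() {
    if [ "$1" = "systemd" ]; then
        echo "cgroup driver OK: systemd"
    else
        echo "cgroup driver is '$1', expected 'systemd'" >&2
        return 1
    fi
}

# Usage on the host (the second command needs a running Docker daemon):
#   sysctl net.bridge.bridge-nf-call-iptables \
#          net.bridge.bridge-nf-call-ip6tables \
#          net.ipv4.ip_forward | all_sysctls_enabled
#   require_systemd_cgroup "$(docker info --format '{{.CgroupDriver}}')"
```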

III. Install cri-docker, the shim that connects Docker Engine to the CRI

- Install cri-docker

    wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.11/cri-dockerd-0.3.11-3.el7.x86_64.rpm
    rpm -ivh cri-dockerd-0.3.11-3.el7.x86_64.rpm
    systemctl enable cri-docker --now

  The default socket file of cri-docker is /run/cri-dockerd.sock; it is needed later.

- Configure cri-docker

  Only the ExecStart line needs to change:

    ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9

  - --network-plugin: the type of network plugin specification to use; here it must be CNI.
  - --pod-infra-container-image: the image used for the pause container in each Pod. The default is the pause image on registry.k8s.io. Because this installation pulls k8s images from the Alibaba Cloud mirror, the pause image is pointed there in advance.

  Edit the unit file:

    vi /usr/lib/systemd/system/cri-docker.service

  so that it reads:

    [Unit]
    Description=CRI Interface for Docker Application Container Engine
    Documentation=https://docs.mirantis.com
    After=network-online.target firewalld.service docker.service
    Wants=network-online.target
    Requires=cri-docker.socket

    [Service]
    Type=notify
    ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9
    ExecReload=/bin/kill -s HUP $MAINPID
    TimeoutSec=0
    RestartSec=2
    Restart=always

    # Note that StartLimit* options were moved from "Service" to "Unit" in systemd 229.
    # Both the old, and new location are accepted by systemd 229 and up, so using the old location
    # to make them work for either version of systemd.
    StartLimitBurst=3

    # Note that StartLimitInterval was renamed to StartLimitIntervalSec in systemd 230.
    # Both the old, and new name are accepted by systemd 230 and up, so using the old name to make
    # this option work for either version of systemd.
    StartLimitInterval=60s

    # Having non-zero Limit*s causes performance problems due to accounting overhead
    # in the kernel. We recommend using cgroups to do container-local accounting.
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity

    # Comment TasksMax if your systemd version does not support it.
    # Only systemd 226 and above support this option.
    TasksMax=infinity
    Delegate=yes
    KillMode=process

- Reload cri-docker

    systemctl daemon-reload
    systemctl restart cri-docker
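As an alternative to editing the packaged unit file under /usr/lib, which a package upgrade can overwrite, the same ExecStart change can be placed in a systemd drop-in. This is only a sketch of that approach, not what the steps above do: the function name `write_cri_dockerd_dropin` and the file name `10-k8s.conf` are arbitrary choices for illustration, and the target directory is a parameter so the function can be tried in a scratch directory.

```shell
#!/usr/bin/env bash
# write_cri_dockerd_dropin [DIR]
# Hypothetical helper: writes a drop-in that overrides only ExecStart.
# DIR defaults to the standard drop-in directory for cri-docker.service.
write_cri_dockerd_dropin() {
    local dir="${1:-/etc/systemd/system/cri-docker.service.d}"
    mkdir -p "$dir"
    cat > "$dir/10-k8s.conf" <<'EOF'
[Service]
# The empty ExecStart= clears the command inherited from the packaged unit;
# the second line sets the replacement command.
ExecStart=
ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9
EOF
}

# Usage on the host (run as root):
#   write_cri_dockerd_dropin
#   systemctl daemon-reload
#   systemctl restart cri-docker
```

With a drop-in, the rest of the unit stays exactly as shipped by the RPM, and `systemctl cat cri-docker` shows both the packaged file and the override.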

IV. Deploy the k8s cluster

1. Configure the yum repository (using the Alibaba Cloud mirror)

    cat > /etc/yum.repos.d/kubernetes.repo << EOF
    [kubernetes]
    name=Kubernetes
    baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
           http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

    yum -y install kubeadm kubectl kubelet --disableexcludes=kubernetes

2. Start kubelet

    systemctl enable kubelet --now

3. Create the cluster with kubeadm

3.1. Generate the default init configuration file and edit it

    kubeadm config print init-defaults > init-defaults.yaml
    vim init-defaults.yaml

The edited file looks like this:

    apiVersion: kubeadm.k8s.io/v1beta3
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.44.161
      bindPort: 6443
    nodeRegistration:
      criSocket: unix:///run/cri-dockerd.sock
      imagePullPolicy: IfNotPresent
      name: node
      taints: null
    ---
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta3
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: 1.28.0
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
      podSubnet: 10.244.0.0/16
    scheduler: {}

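- advertiseAddress: the address the cluster advertises (the master's IP address)
- criSocket: the path of the cri-docker socket file
- imageRepository: the registry to pull images from (the Alibaba Cloud mirror here)
- podSubnet: the pod network range; the network plugin installed later must use the same range
- name: change this to whatever you want the node to be called (I forgot to change it here, so the node is named node in the commands that follow)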
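The field edits listed above can also be made without opening an editor. Below is a small sketch that rewrites the value of an existing `key: value` line while keeping its indentation. The function name `set_kubeadm_field` is hypothetical, and it assumes the key already exists in the file and that the new value contains no `|` or `&` characters. It does not add missing keys, so a line such as podSubnet still has to be added by hand if the file lacks it.

```shell
#!/usr/bin/env bash
# set_kubeadm_field FILE KEY VALUE
# Hypothetical helper: replace the value on every line of the form
# "<indent>KEY: <old value>", preserving the indentation.
set_kubeadm_field() {
    local file="$1" key="$2" value="$3"
    # Keep a backup, then rewrite the file from it.
    cp -p "$file" "$file.bak"
    sed -E "s|^([[:space:]]*${key}:)[[:space:]].*|\1 ${value}|" "$file.bak" > "$file"
}

# Usage with the values from this article:
#   set_kubeadm_field init-defaults.yaml advertiseAddress 192.168.44.161
#   set_kubeadm_field init-defaults.yaml criSocket unix:///run/cri-dockerd.sock
#   set_kubeadm_field init-defaults.yaml imageRepository registry.aliyuncs.com/google_containers
#   set_kubeadm_field init-defaults.yaml name k8s-master
```

Because the pattern is anchored on leading whitespace followed by the key, `name` matches the nodeRegistration entry but not `clusterName`.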

3.2. Pull the images using the init configuration file

    kubeadm config images list --config=init-defaults.yaml   # list the required images
    kubeadm config images pull --config=init-defaults.yaml   # pull the images

3.3. Initialize the cluster

    kubeadm init --config=init-defaults.yaml

3.4. To run kubectl as root, execute the following (as the kubeadm init output suggests)

    echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile
    source /etc/profile

3.5. Install a network plugin (flannel, a simple layer-3 network, is used here)

    kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

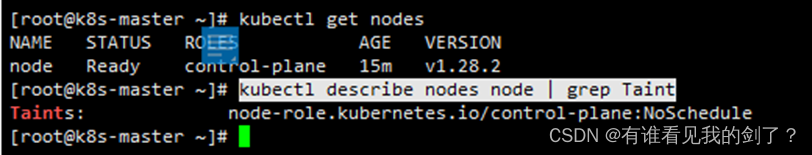
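Since the pod network range in the kubeadm configuration has to agree with the one flannel uses, a quick comparison can catch a mismatch before pods fail to get addresses. The sketch below assumes the flannel manifest has been downloaded to a local file and that it contains a `"Network": "..."` entry in its net-conf.json, as the published kube-flannel.yml does at the time of writing. All three function names are invented for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for comparing the kubeadm podSubnet with flannel's network.

# pod_subnet FILE: print the podSubnet value from a kubeadm config file.
pod_subnet() {
    awk '$1 == "podSubnet:" { print $2; exit }' "$1"
}

# flannel_network FILE: print the first "Network" value found in a flannel manifest.
flannel_network() {
    sed -nE 's/.*"Network":[[:space:]]*"([^"]+)".*/\1/p' "$1" | head -n 1
}

# subnets_match KUBEADM_CONFIG FLANNEL_MANIFEST: succeed only if both are set and equal.
subnets_match() {
    local want got
    want=$(pod_subnet "$1")
    got=$(flannel_network "$2")
    [ -n "$want" ] && [ "$want" = "$got" ]
}

# Usage:
#   curl -fsSLo kube-flannel.yml https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
#   subnets_match init-defaults.yaml kube-flannel.yml && echo "pod subnet matches flannel"
```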

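V. Allow the master node to schedule pods

The master node is configured with a taint, so ordinary pods cannot be scheduled onto it. Removing the taint fixes this (fine for a local or test environment, not recommended for production).

Official documentation on taints: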

https://kubernetes.io/zh-cn/docs/concepts/scheduling-eviction/taint-and-toleration/

- View the node's taints

    kubectl describe nodes node | grep Taint

  The output shows that the master node has a NoSchedule taint.

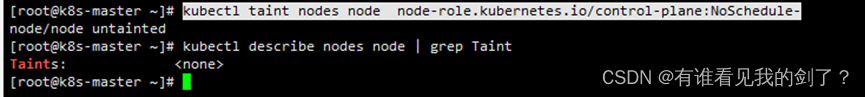

- Remove the taint

    kubectl taint nodes node node-role.kubernetes.io/control-plane:NoSchedule-

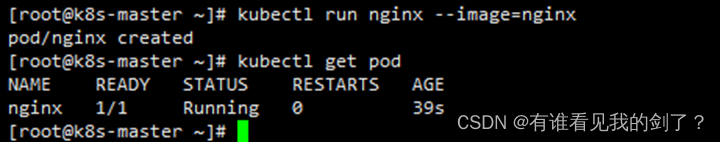
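The check and the removal can be combined so that the taint is only removed when it is actually present. This is a rough sketch: the helper name `has_control_plane_taint` is made up, and it simply searches the text of `kubectl describe` for the taint string used in this article.

```shell
#!/usr/bin/env bash
# has_control_plane_taint: reads `kubectl describe nodes <name>` output on stdin
# and succeeds if the control-plane NoSchedule taint appears in it.
# Hypothetical helper for illustration.
has_control_plane_taint() {
    grep -q 'node-role\.kubernetes\.io/control-plane:NoSchedule'
}

# Usage (the node is named "node" in this article):
#   if kubectl describe nodes node | has_control_plane_taint; then
#       kubectl taint nodes node node-role.kubernetes.io/control-plane:NoSchedule-
#   fi
```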

- Create a pod to test

    kubectl run nginx --image=nginx

  The master node can now schedule pods as well.