Chat Messages | 🦜️🔗 Langchain

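ChatMessageHistory: a lightweight in-memory message store that saves HumanMessages and AIMessages (the abstract base class is BaseChatMessageHistory).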
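Conceptually, a chat message history is just an ordered list of role-tagged messages. The sketch below is a hypothetical pure-Python stand-in: `SimpleChatHistory` and `Message` are illustrative names, not LangChain's API. It only mirrors the `add_user_message` / `add_ai_message` calls used in the LangChain example that follows.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    """A chat message tagged with its role ("human" or "ai")."""
    role: str
    content: str


@dataclass
class SimpleChatHistory:
    """Hypothetical stand-in for ChatMessageHistory: an ordered list of messages."""
    messages: list = field(default_factory=list)

    def add_user_message(self, content: str) -> None:
        # Append a human turn to the end of the history
        self.messages.append(Message("human", content))

    def add_ai_message(self, content: str) -> None:
        # Append an AI turn to the end of the history
        self.messages.append(Message("ai", content))

    def clear(self) -> None:
        # Drop all stored messages
        self.messages.clear()


history = SimpleChatHistory()
history.add_user_message("hi!")
history.add_ai_message("whats up?")
print(history.messages)
```

Order is preserved because every call appends to the end of the list, which is what lets a later prompt replay the conversation turn by turn.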

from langchain.memory import ChatMessageHistory

history = ChatMessageHistory()

history.add_user_message("hi!")

history.add_ai_message("whats up?")

print(history.messages)

# [HumanMessage(content='hi!'), AIMessage(content='whats up?')]

Conversation Buffer | 🦜️🔗 Langchain

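ConversationBufferMemory: by default return_messages=False, so load_memory_variables returns the history as a single formatted string; with return_messages=True it returns a list of message objects instead. For details, see the CSDN post "langchain学习笔记(十一)" (LangChain study notes, part 11), section (3) "Practical use: ConversationBufferMemory".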

from langchain.memory import ConversationBufferMemory

# Default: return_messages=False

memory=ConversationBufferMemory()

memory.save_context({"input":'hi'},{"output":"what's up"})

memory.save_context({"input":'hi'},{"output":"what's up"})

# History is returned as a single string

print(memory.load_memory_variables({}))

memory = ConversationBufferMemory(return_messages=True)

memory.save_context({"input": 'hi'}, {"output": "what's up"})

memory.save_context({"input": 'hi'}, {"output": "what's up"})

# History is returned as a list of message objects

print(memory.load_memory_variables({}))
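The two runs above differ only in how load_memory_variables formats the same stored conversation. The following pure-Python sketch is hypothetical (`SimpleBufferMemory` and `Message` are illustrative names, not LangChain classes). It assumes the string form is built from "Human: ..." / "AI: ..." lines joined by newlines, which matches LangChain's default prefixes as far as I know.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "human" or "ai"
    content: str


class SimpleBufferMemory:
    """Hypothetical sketch of a buffer memory with a return_messages switch."""

    def __init__(self, return_messages: bool = False,
                 human_prefix: str = "Human", ai_prefix: str = "AI"):
        self.return_messages = return_messages
        self.human_prefix = human_prefix
        self.ai_prefix = ai_prefix
        self.messages = []  # messages are always stored internally

    def save_context(self, inputs: dict, outputs: dict) -> None:
        # Record one interaction: the user's input, then the model's output
        self.messages.append(Message("human", inputs["input"]))
        self.messages.append(Message("ai", outputs["output"]))

    def load_memory_variables(self, _inputs: dict) -> dict:
        if self.return_messages:
            # Hand back (a copy of) the message objects themselves
            return {"history": list(self.messages)}
        # Otherwise flatten to "Prefix: content" lines joined by newlines
        lines = []
        for m in self.messages:
            prefix = self.human_prefix if m.role == "human" else self.ai_prefix
            lines.append(f"{prefix}: {m.content}")
        return {"history": "\n".join(lines)}


results = {}
for flag in (False, True):
    memory = SimpleBufferMemory(return_messages=flag)
    memory.save_context({"input": "hi"}, {"output": "what's up"})
    memory.save_context({"input": "hi"}, {"output": "what's up"})
    results[flag] = memory.load_memory_variables({})
    print(results[flag])
```

The string form suits completion-style LLMs whose prompt template has a `{history}` text slot; the message-list form suits chat models that take a sequence of role-tagged messages.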

#### chain ####

from langchain_openai import OpenAI

from langchain.chains import ConversationChain

llm=OpenAI(temperature=0)

conversation=ConversationChain(

llm=llm,verbose=True,memory=ConversationBufferMemory()

)

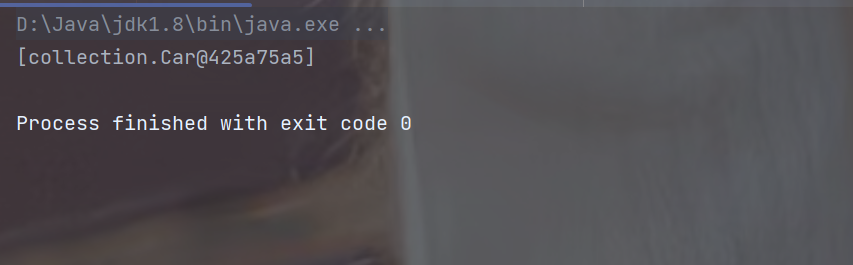

res1=conversation.predict(input='Which dynasty was Wang Yangming from?')

print(res1)

res2=conversation.predict(input="Which other famous people lived in the same dynasty? Briefly introduce three of them.")

print(res2)

Conversation Buffer Window | 🦜️🔗 Langchain

ConversationBufferWindowMemory keeps only the most recent k interactions, so the history it returns does not grow too large.

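Compared with ConversationBufferMemory, it takes just one extra parameter, k (default k=5), which sets how many interactions to keep; everything else works the same way.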
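A sliding window over interactions can be sketched with a bounded deque. This is a hypothetical illustration (`SimpleWindowMemory` is a made-up name, not LangChain's class); it keeps the last k (input, output) pairs and formats them the same way as the buffer memory's string mode.

```python
from collections import deque


class SimpleWindowMemory:
    """Hypothetical sketch: keep only the last k (input, output) interactions."""

    def __init__(self, k: int = 5):
        # A deque with maxlen=k silently discards the oldest interaction
        self.turns = deque(maxlen=k)

    def save_context(self, inputs: dict, outputs: dict) -> None:
        # One interaction = one human input plus one AI output
        self.turns.append((inputs["input"], outputs["output"]))

    def load_memory_variables(self, _inputs: dict) -> dict:
        # Format the surviving interactions as "Human:" / "AI:" lines
        lines = []
        for human, ai in self.turns:
            lines.append(f"Human: {human}")
            lines.append(f"AI: {ai}")
        return {"history": "\n".join(lines)}


memory = SimpleWindowMemory(k=2)
for i in range(1, 4):
    memory.save_context({"input": f"question {i}"}, {"output": f"answer {i}"})
print(memory.load_memory_variables({}))
```

After three interactions with k=2, only the second and third remain. As far as I can tell, LangChain's own class keeps the full message list in its chat history and only slices the last 2k messages when loading; discarding old turns outright, as the deque does here, is simply the shortest way to show the windowing idea.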

---------------------------------------------

To be continued