Prerequisites

1. Install the NVIDIA driver

https://www.nvidia.cn/Download/index.aspx?lang=cn

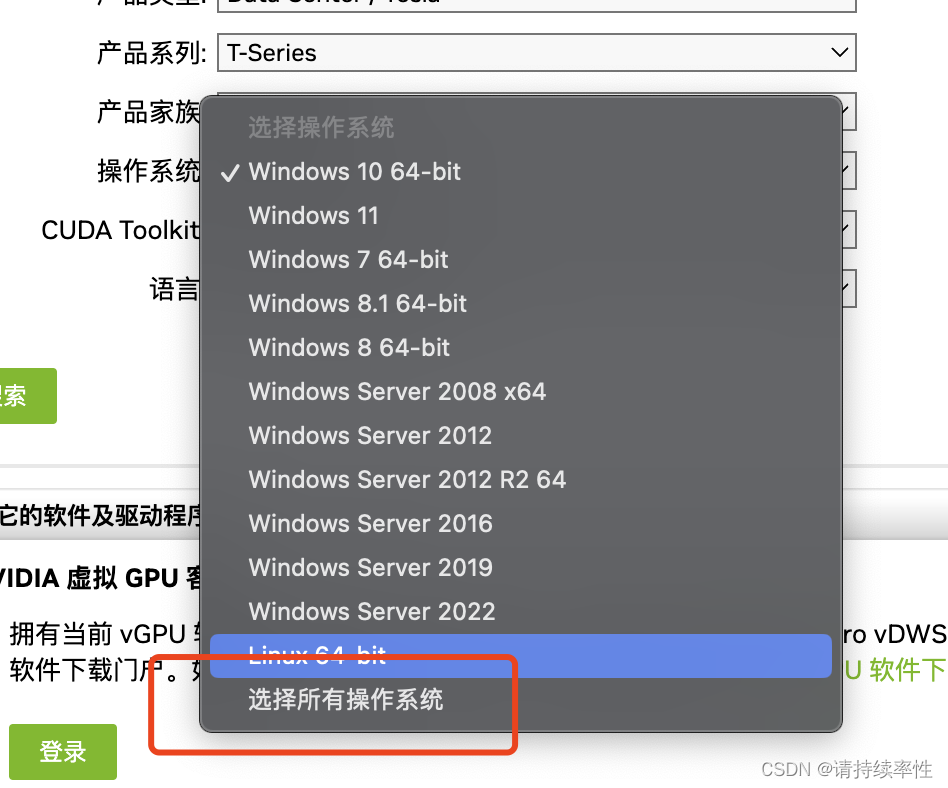

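For Alibaba Cloud Linux 3.2104 LTS 64-bit, select RHEL8 as the operating system. If RHEL8 is not listed, choose the last entry at the bottom of the list, which shows all operating systems.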


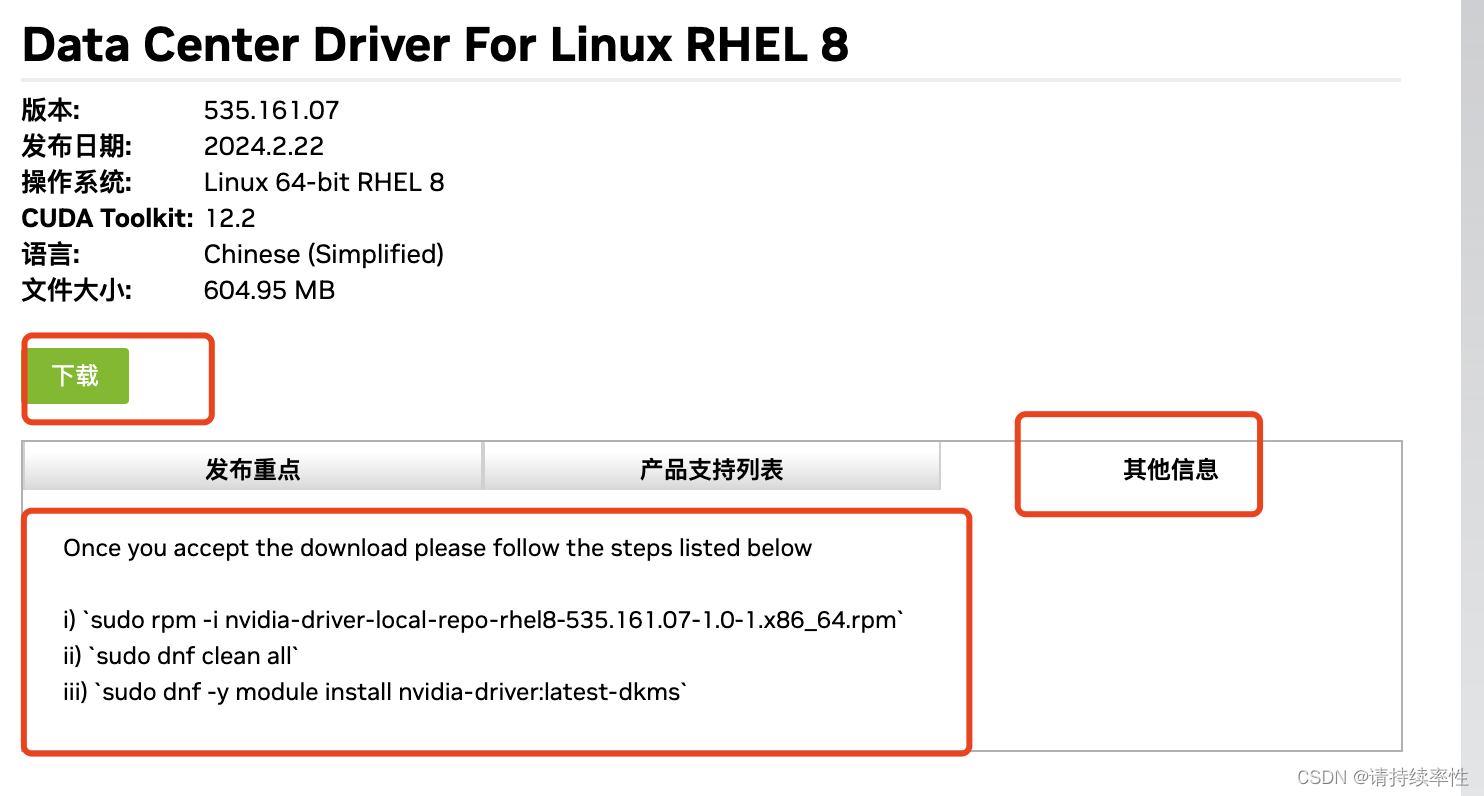

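Click Search. The download page that follows includes installation instructions. Read them, because the installation procedure may differ between driver versions.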


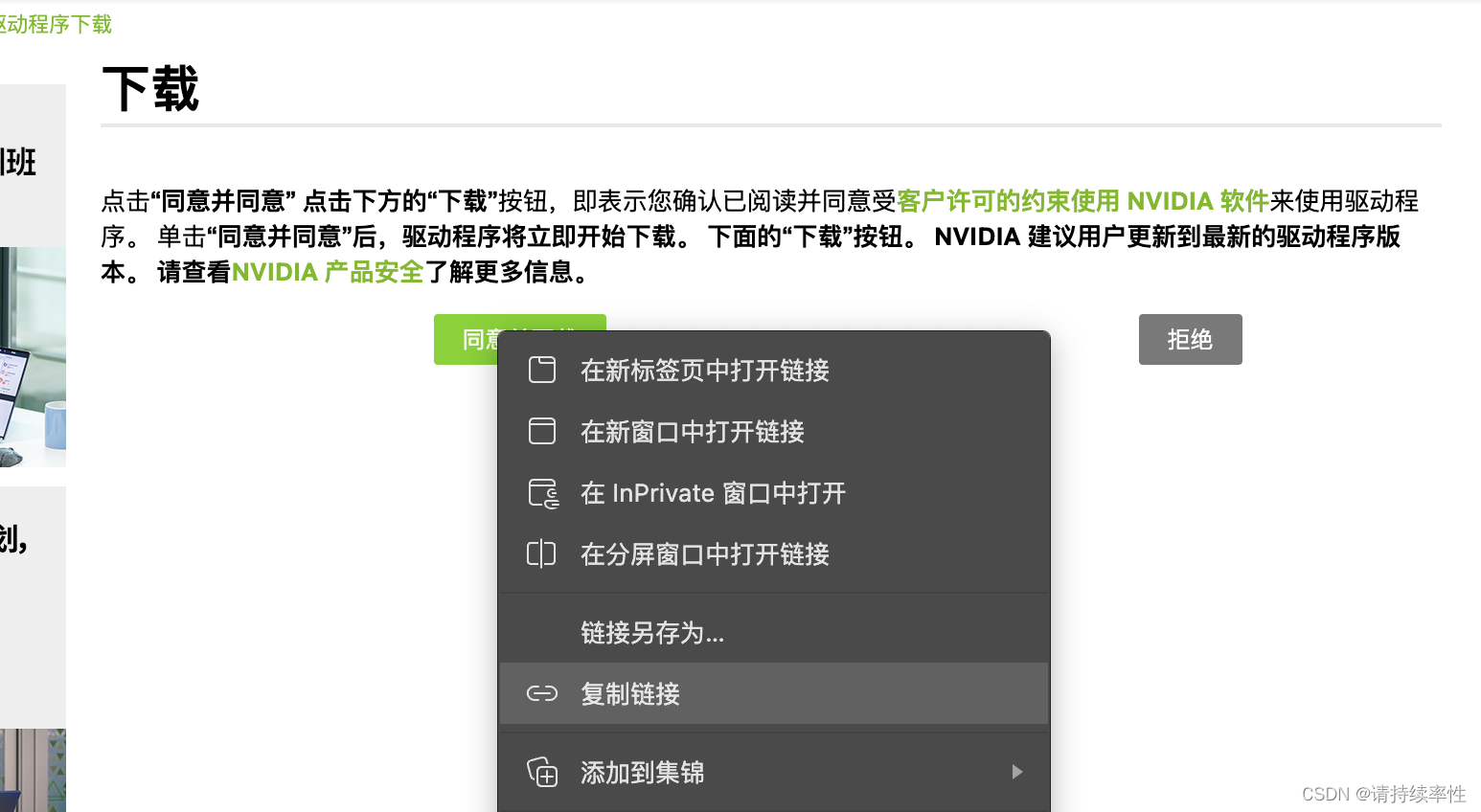

Click Download, then copy the download link from this page.


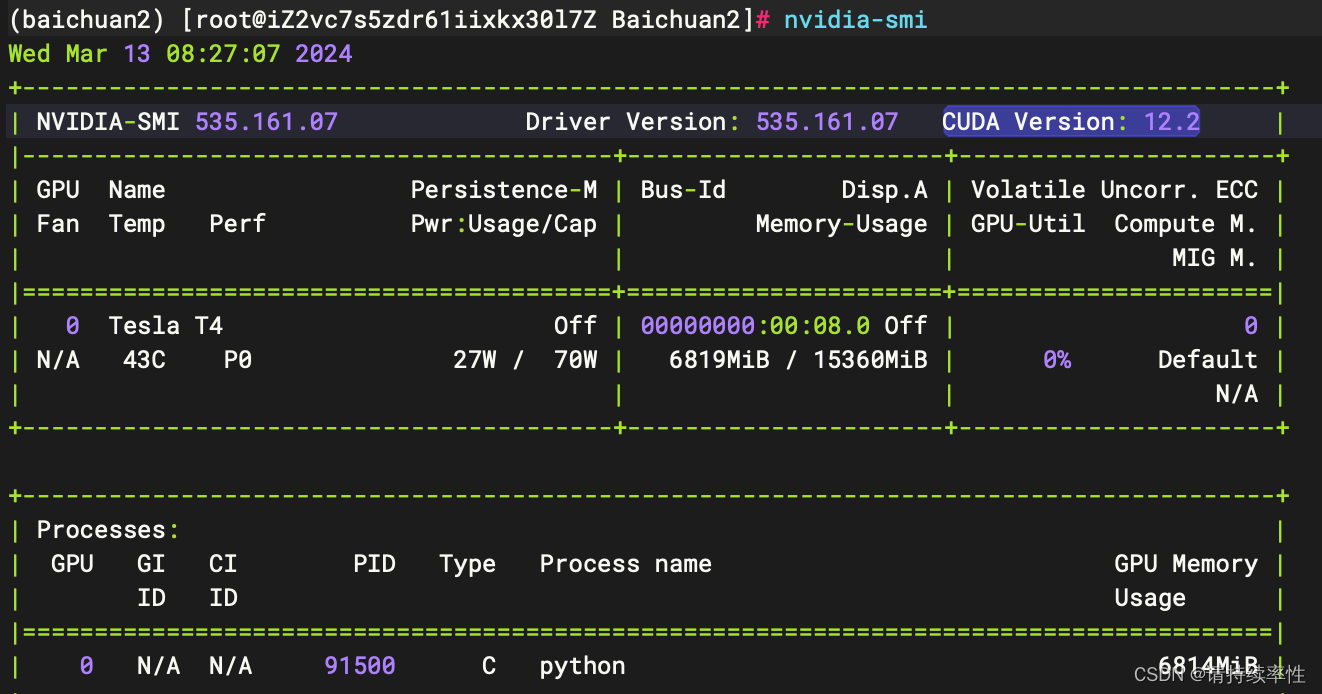

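Verify the installation:

nvidia-smi

If the command prints a table with the GPU model and driver version, the driver is installed. The CUDA Version field in that table (12.2 here) is the highest CUDA version the driver supports. It must be greater than or equal to the CUDA Toolkit version installed in the next step (11.7).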
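
The rule above can be checked from a setup script: the driver's maximum CUDA version must be at least the toolkit version. Below is a minimal Python sketch. It assumes the nvidia-smi header contains a "CUDA Version: X.Y" field, as in the output described here. The function names are hypothetical helpers, not part of any tool.

```python
import re
import subprocess
from typing import Tuple


def driver_cuda_version(smi_output: str) -> Tuple[int, int]:
    """Extract the 'CUDA Version: X.Y' field (assumed present) from nvidia-smi output."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_output)
    if match is None:
        raise ValueError("no 'CUDA Version' field found in nvidia-smi output")
    return int(match.group(1)), int(match.group(2))


def driver_supports(smi_output: str, toolkit: Tuple[int, int]) -> bool:
    """True if the driver's maximum CUDA version is >= the toolkit version."""
    # Tuples compare element by element, so (12, 2) >= (11, 7) is True.
    return driver_cuda_version(smi_output) >= toolkit


def read_nvidia_smi() -> str:
    """Run nvidia-smi and return its standard output (requires the driver)."""
    return subprocess.run(
        ["nvidia-smi"], capture_output=True, text=True, check=True
    ).stdout
```

On the server, driver_supports(read_nvidia_smi(), (11, 7)) should return True before you continue with the CUDA 11.7 installation.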

2. Install CUDA 11.7

Reference: https://developer.nvidia.com/cuda-11-7-0-download-archive?target_os=Linux&target_arch=x86_64&Distribution=RHEL&target_version=8&target_type=runfile_local

wget https://developer.download.nvidia.com/compute/cuda/11.7.0/local_installers/cuda_11.7.0_515.43.04_linux.run

chmod +x cuda_11.7.0_515.43.04_linux.run

sudo sh cuda_11.7.0_515.43.04_linux.run

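The runfile also bundles driver 515.43.04 (see the file name). If the driver from step 1 is already installed, deselect the Driver entry in the installer menu so that the older bundled driver is not installed over it. After installation, set the environment variables. These exports apply only to the current shell. To make them permanent, add the same lines to ~/.bashrc.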

export PATH=$PATH:/usr/local/cuda/bin

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/cuda/lib64
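
One subtlety of the export lines above: if LD_LIBRARY_PATH was previously unset, the result starts with ":". An empty entry in a colon-separated search path is treated as the current directory. The Python sketch below appends a directory to such a list without creating empty entries. The helper names append_path and cuda_env are hypothetical, and /usr/local/cuda is the default install location assumed by the exports.

```python
import os
from typing import Dict, Optional


def append_path(current: Optional[str], new_dir: str) -> str:
    """Append new_dir to a colon-separated list without leaving empty entries."""
    # Drop empty items, e.g. the "" that an unset variable would contribute.
    parts = [p for p in (current or "").split(":") if p]
    if new_dir not in parts:  # do not add the same directory twice
        parts.append(new_dir)
    return ":".join(parts)


def cuda_env(env: Dict[str, str], cuda_home: str = "/usr/local/cuda") -> Dict[str, str]:
    """Return a copy of env with CUDA's bin and lib64 directories appended."""
    updated = dict(env)
    updated["PATH"] = append_path(env.get("PATH"), os.path.join(cuda_home, "bin"))
    updated["LD_LIBRARY_PATH"] = append_path(
        env.get("LD_LIBRARY_PATH"), os.path.join(cuda_home, "lib64")
    )
    return updated
```

For example, cuda_env(dict(os.environ)) can be passed as env= to subprocess.run when launching a CUDA program from Python without changing the shell configuration.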

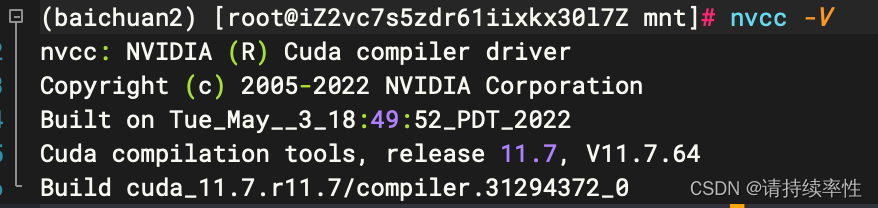

Test (the reported release should be 11.7):

nvcc -V
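
If nvcc is not found, the PATH export did not take effect. The version line printed by nvcc -V typically reads like "Cuda compilation tools, release 11.7, V11.7.64". That format is assumed from common nvcc releases. The Python sketch below extracts the release number so that a script can assert it is 11.7. The helper names are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Tuple


def nvcc_release(nvcc_output: str) -> Tuple[int, int]:
    """Parse the 'release X.Y' part of the nvcc -V version line."""
    match = re.search(r"release\s+(\d+)\.(\d+)", nvcc_output)
    if match is None:
        raise ValueError("no 'release X.Y' found in nvcc output")
    return int(match.group(1)), int(match.group(2))


def run_nvcc() -> str:
    """Return nvcc -V output, failing early if nvcc is not on PATH."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        raise FileNotFoundError("nvcc not found on PATH; check the PATH export")
    return subprocess.run(
        [nvcc, "-V"], capture_output=True, text=True, check=True
    ).stdout
```

Usage: assert nvcc_release(run_nvcc()) == (11, 7).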

3. Install Anaconda

Download the installer script and run it:

wget https://repo.anaconda.com/archive/Anaconda3-5.3.0-Linux-x86_64.sh

bash Anaconda3-5.3.0-Linux-x86_64.sh

Create and activate a virtual environment:

Create: conda create -n baichuan2 python=3.10

Activate: conda activate baichuan2

Deactivate: conda deactivate
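
All later pip install commands should run inside the activated baichuan2 environment. The Python sketch below confirms this. It relies on CONDA_DEFAULT_ENV, which conda activate sets to the name of the active environment. The function check_env is a hypothetical helper.

```python
import os
import sys
from typing import List, Mapping, Optional, Tuple


def check_env(
    expected_env: str = "baichuan2",
    expected_python: Tuple[int, int] = (3, 10),
    environ: Optional[Mapping[str, str]] = None,
    version: Optional[Tuple[int, int]] = None,
) -> List[str]:
    """Return a list of problems; an empty list means the environment looks right."""
    environ = os.environ if environ is None else environ
    version = tuple(sys.version_info[:2]) if version is None else version
    problems = []
    # conda activate sets CONDA_DEFAULT_ENV to the active environment's name.
    active = environ.get("CONDA_DEFAULT_ENV")
    if active != expected_env:
        problems.append(f"active conda env is {active!r}, expected {expected_env!r}")
    if version != expected_python:
        problems.append(
            f"Python {version[0]}.{version[1]}, "
            f"expected {expected_python[0]}.{expected_python[1]}"
        )
    return problems
```

Running print(check_env()) inside the activated environment should print an empty list.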

4. Download the model

https://aliendao.cn/models/baichuan-inc/Baichuan2-7B-Chat-4bits#/

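Download the files one at a time with wget. Build each URL by appending the file name to the model's directory path, for example: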

http://61.133.217.142:20800/download/models/baichuan-inc/Baichuan2-7B-Chat-4bits/tokenizer.model
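
Below is a sketch of the same one-file-at-a-time download in Python, using only the standard library. The base URL comes from the example link above. The file list is an assumption, except for tokenizer.model, so check it against the model page before downloading. Each file is written to a temporary ".part" name and renamed only when complete, so an interrupted run can be restarted without re-downloading finished files. The helper names are hypothetical.

```python
import os
import urllib.request
from typing import List

# Directory URL taken from the example link in this guide.
BASE_URL = (
    "http://61.133.217.142:20800/download/models/"
    "baichuan-inc/Baichuan2-7B-Chat-4bits"
)

# Assumed file list (only tokenizer.model appears in the guide); verify on the model page.
FILES = [
    "config.json",
    "configuration_baichuan.py",
    "generation_config.json",
    "generation_utils.py",
    "modeling_baichuan.py",
    "pytorch_model.bin",
    "quantizer.py",
    "special_tokens_map.json",
    "tokenization_baichuan.py",
    "tokenizer.model",
    "tokenizer_config.json",
]


def file_urls(base_url: str, files: List[str]) -> List[str]:
    """Join the directory URL and each file name, as done by hand with wget."""
    base = base_url.rstrip("/")
    return [f"{base}/{name}" for name in files]


def download_all(base_url: str, files: List[str], dest: str) -> None:
    """Download every file into dest, skipping files that already exist."""
    os.makedirs(dest, exist_ok=True)
    for name, url in zip(files, file_urls(base_url, files)):
        target = os.path.join(dest, name)
        if os.path.exists(target):
            continue  # completed earlier; partial files only exist as *.part
        partial = target + ".part"
        urllib.request.urlretrieve(url, partial)
        os.replace(partial, target)  # rename only after the download finished
```

Usage: download_all(BASE_URL, FILES, "Baichuan2-7B-Chat-4bits").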

Environment setup

1. Clone the source code

git clone https://github.com/baichuan-inc/Baichuan2.git

2. Install dependencies

cd Baichuan2

pip install -r requirements.txt

Test in the Python interpreter (the last line should return True):

python

import torch

print(torch.__version__)

torch.cuda.is_available()
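
If torch.cuda.is_available() returns False, common causes are a CPU-only PyTorch build or a build that does not match the installed driver. The same check is packaged below as a reusable function. It also reports torch.version.cuda, the CUDA version this PyTorch build was compiled against, and the GPU name. The function name torch_cuda_report is hypothetical. It returns empty fields instead of failing when torch is not installed.

```python
import importlib.util
from typing import Any, Dict


def torch_cuda_report() -> Dict[str, Any]:
    """Summarize the PyTorch/CUDA setup; fields are None if torch is missing."""
    if importlib.util.find_spec("torch") is None:
        return {"torch": None, "built_with_cuda": None,
                "cuda_available": False, "device": None}
    import torch

    available = torch.cuda.is_available()
    return {
        "torch": torch.__version__,
        # CUDA version PyTorch was compiled against (None for CPU-only builds).
        "built_with_cuda": torch.version.cuda,
        "cuda_available": available,
        "device": torch.cuda.get_device_name(0) if available else None,
    }
```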

3. Install the quantization packages

To use the 4-bit model, install the bitsandbytes quantization package and transformers 4.30.0:

pip install bitsandbytes==0.41.0

pip install transformers==4.30.0
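
The earlier pip install -r requirements.txt may have installed different versions, so it is worth confirming that the pins above took effect. Below is a sketch using the standard library's importlib.metadata. The check_pins name and its injectable lookup parameter are hypothetical conveniences for testing.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Callable, Dict

# Versions pinned by the pip commands in this step.
PINS = {"bitsandbytes": "0.41.0", "transformers": "4.30.0"}


def check_pins(
    pins: Dict[str, str], lookup: Callable[[str], str] = version
) -> Dict[str, str]:
    """Return {package: problem} for every pin that is missing or mismatched."""
    problems = {}
    for name, wanted in pins.items():
        try:
            installed = lookup(name)
        except PackageNotFoundError:
            problems[name] = "not installed"
            continue
        if installed != wanted:
            problems[name] = f"installed {installed}, expected {wanted}"
    return problems
```

Inside the baichuan2 environment, print(check_pins(PINS)) should print {}.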

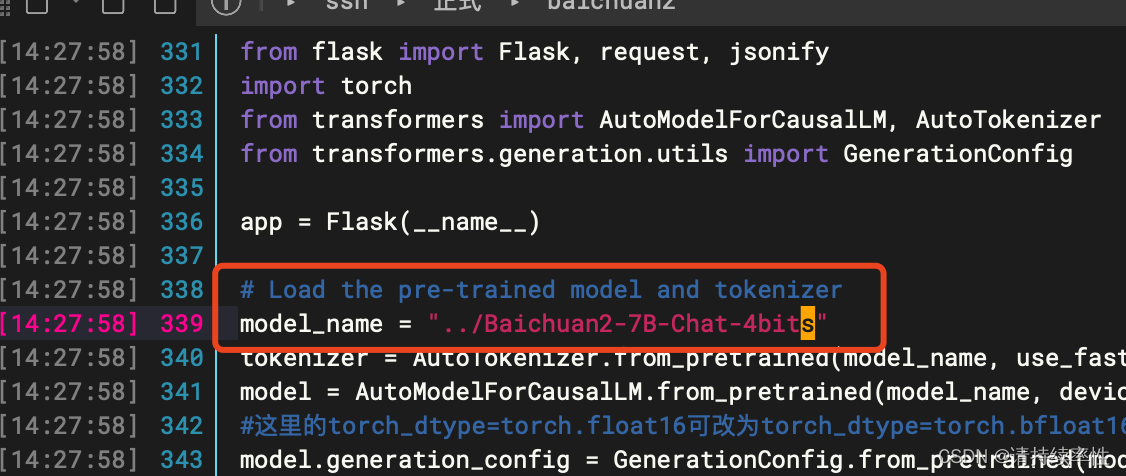

4. Change the model path before starting

vim OpenAI_api.py
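
Before editing the path in OpenAI_api.py, it can help to confirm that the directory actually contains the downloaded files. A relative path such as ../Baichuan2-7B-Chat-4bits is resolved against the directory the script is started from. The sketch below is hypothetical. The required file list is an assumption: tokenizer.model is from this guide, and config.json is the usual Hugging Face model config.

```python
import os
from typing import Iterable, List

# Assumed minimum contents of the model directory; extend to match your download.
REQUIRED = ("config.json", "tokenizer.model")


def missing_model_files(
    model_dir: str, required: Iterable[str] = REQUIRED
) -> List[str]:
    """Return the required files that are not present in model_dir.

    A relative model_dir is resolved against the current working directory,
    i.e. the directory the API script is launched from.
    """
    base = os.path.abspath(model_dir)
    return [name for name in required
            if not os.path.isfile(os.path.join(base, name))]
```

Run it from the Baichuan2 directory, for example missing_model_files("../Baichuan2-7B-Chat-4bits"). An empty list means the path is usable.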

5. Start the API service

If you want to run cli_demo.py or web_demo.py instead, change the model path in those files in the same way.

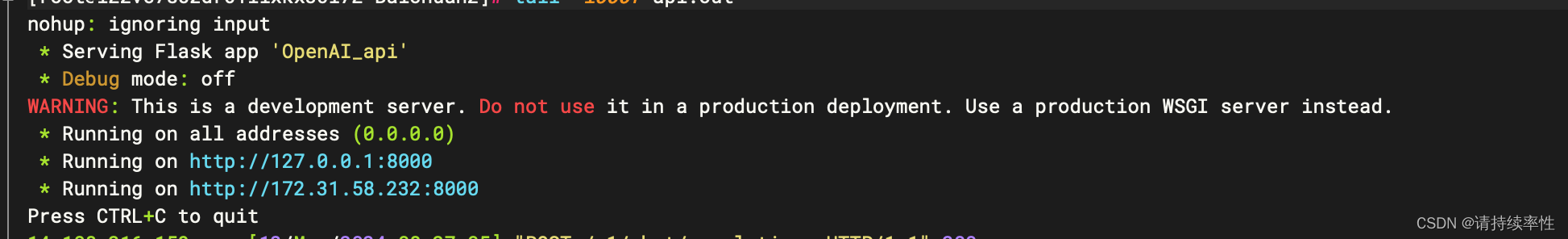

nohup python OpenAI_api.py >api.out 2>&1 &
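
Because nohup returns immediately while the model is still loading, a request sent right after launch may find the port closed. Below is a minimal sketch that polls until the server accepts TCP connections. It assumes the service listens on 127.0.0.1:8000, as in the request example in this guide. The timeout values are arbitrary choices, and wait_for_port is a hypothetical helper.

```python
import socket
import time


def wait_for_port(
    host: str = "127.0.0.1",
    port: int = 8000,
    timeout: float = 300.0,
    interval: float = 2.0,
) -> bool:
    """Poll until a TCP connection to host:port succeeds or timeout seconds pass."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True  # something is listening on the port
        except OSError:
            time.sleep(interval)  # not up yet (refused or timed out); retry
    return False
```

If wait_for_port() returns False, inspect api.out for the startup error.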


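Until the service starts successfully, you can run it in the foreground instead. The log then prints directly to the terminal, which makes errors easier to track down:

python OpenAI_api.py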

Log output on successful startup:


API request example:

POST http://127.0.0.1:8000/v1/chat/completions

{
  "model": "Baichuan2-Turbo",
  "messages": [
    {
      "role": "user",
      "content": "xxx"
    }
  ],
  "temperature": 0.3,
  "stream": false
}
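
The same request can be sent from Python with only the standard library. Below is a minimal sketch that builds the POST with the JSON body shown above. The helper names build_request and chat are hypothetical, and the 120-second timeout is an arbitrary allowance for slow generation.

```python
import json
import urllib.request

API_URL = "http://127.0.0.1:8000/v1/chat/completions"


def build_request(
    content: str, temperature: float = 0.3, url: str = API_URL
) -> urllib.request.Request:
    """Build the POST request with the same JSON body as the example."""
    payload = {
        "model": "Baichuan2-Turbo",
        "messages": [{"role": "user", "content": content}],
        "temperature": temperature,
        "stream": False,
    }
    return urllib.request.Request(
        url,
        # ensure_ascii=False keeps Chinese prompts readable; body is sent as UTF-8.
        data=json.dumps(payload, ensure_ascii=False).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(content: str, timeout: float = 120.0) -> dict:
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(build_request(content), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the service running, chat("Hello")["choices"][0]["message"]["content"] returns the model's reply.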

Example response:

{
  "choices": [
    {
      "finish_reason": "stop",
      "index": 0,
      "message": {
        "content": "xx",
        "role": "assistant"
      }
    }
  ],
  "model": "../Baichuan2-7B-Chat-4bits",
  "object": "chat.completion",
  "usage": {
    "completion_tokens": 34,
    "prompt_tokens": 216,
    "total_tokens": 250
  }
}
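
The response follows the OpenAI chat-completion layout. The reply text is at choices[0].message.content, and usage.total_tokens equals prompt_tokens plus completion_tokens (216 + 34 = 250 in the example). Below is a sketch that extracts these fields. The Reply type and parse_reply name are hypothetical. In OpenAI's convention, a finish_reason other than "stop" (such as "length") indicates a truncated reply. Whether this server ever returns other values is an assumption not covered here.

```python
from typing import Any, Dict, NamedTuple


class Reply(NamedTuple):
    content: str
    finish_reason: str
    total_tokens: int


def parse_reply(response: Dict[str, Any]) -> Reply:
    """Pull the assistant text, stop reason and total token count from a response."""
    choice = response["choices"][0]
    return Reply(
        content=choice["message"]["content"],
        finish_reason=choice["finish_reason"],
        total_tokens=response["usage"]["total_tokens"],
    )
```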